Nathan Young's Shortform

post by Nathan Young · 2022-09-23T17:47:06.903Z · LW · GW · 131 comments


Comments sorted by top scores.

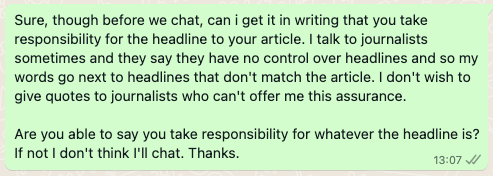

comment by Nathan Young · 2024-07-16T12:26:43.469Z · LW(p) · GW(p)

Trying out my new journalist strategy.

↑ comment by Raemon · 2024-07-16T19:35:29.062Z · LW(p) · GW(p)

Nice.

↑ comment by Raemon · 2024-07-17T00:55:05.636Z · LW(p) · GW(p)

though, curious to hear an instance of it actually playing out

↑ comment by Nathan Young · 2024-07-18T13:11:32.896Z · LW(p) · GW(p)

So far a journalist just said "sure". So with n = 1, it's fine.

↑ comment by Nathan Young · 2024-07-24T01:05:23.748Z · LW(p) · GW(p)

I have 2 so far. One journalist agreed with no bother. The other frustratedly said they couldn't guarantee that and tried to negotiate. I said I was happy to take a bond, they said no, which suggested they weren't that confident.

↑ comment by Raemon · 2024-07-24T01:07:46.530Z · LW(p) · GW(p)

I guess the actual resolution here will eventually come from seeing the final headlines and that, like, they're actually reasonable.

↑ comment by Nathan Young · 2024-07-24T17:35:00.204Z · LW(p) · GW(p)

I disagree. They don't need to be reasonable so much as I now have a big stick to beat the journalist with if they aren't.

"I can't change my headlines"

"But it is your responsibility right?"

"No"

"Oh, were you lying when you said it was?"

comment by Nathan Young · 2024-06-22T12:30:46.440Z · LW(p) · GW(p)

Joe Rogan (the largest podcaster in the world) repeatedly giving concerned but mediocre x-risk explanations suggests that people who have contacts with him should try to get someone on the show to talk about it.

E.g. listen from 2:40:00, though there were several bits like this during the show.

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-06-22T16:35:32.219Z · LW(p) · GW(p)

He talked to Gladstone AI founders a few weeks ago; AGI risks were mentioned but not in much depth.

↑ comment by Stephen Fowler (LosPolloFowler) · 2024-06-23T00:10:58.187Z · LW(p) · GW(p)

Obvious and "shallow" suggestion. Whoever goes on needs to be "classically charismatic" to appeal to a mainstream audience.

Potentially this means someone from policy rather than technical research.

↑ comment by Foyle (robert-lynn) · 2024-06-23T11:14:08.215Z · LW(p) · GW(p)

AI safety desperately needs to buy in or persuade some high-profile talent to raise public awareness. The business-as-usual approach of the last decade is clearly not working - we are sleepwalking towards the cliff. Given how timelines are collapsing, the problem to be solved has morphed from a technical one into a pressing social one: we have to get enough people clamouring for a halt that politicians will start to prioritise appeasing them ahead of their big tech donors.

It probably wouldn't be expensive to rent a few high-profile influencers with major reach amongst impressionable youth, a demographic that is easily convinced to buy into and campaign against end-of-the-world causes.

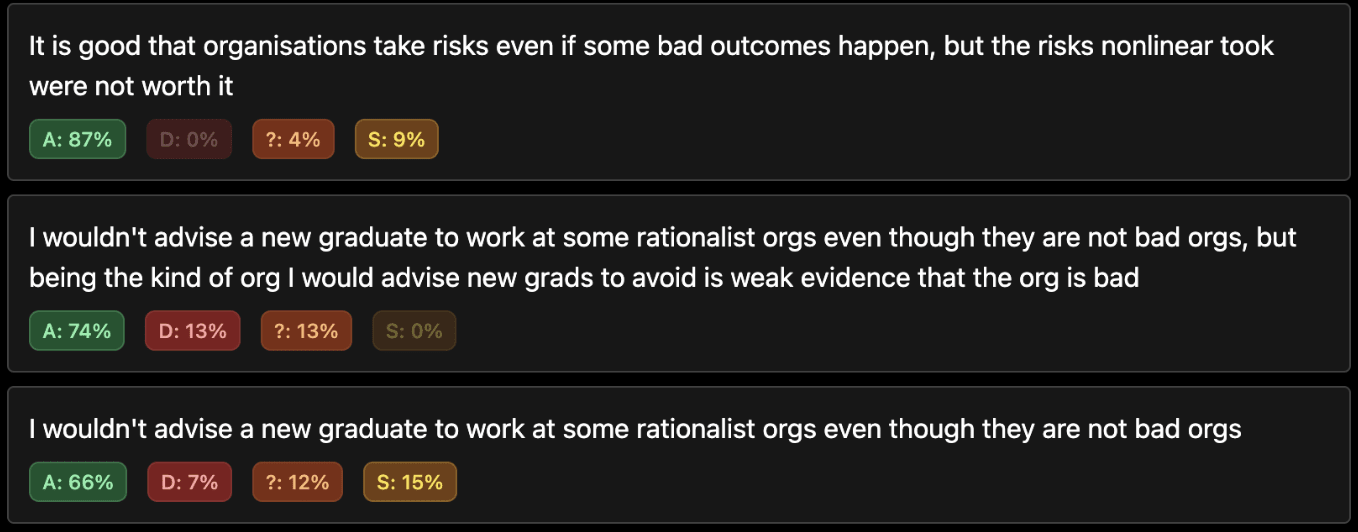

comment by Nathan Young · 2024-09-10T10:40:09.481Z · LW(p) · GW(p)

I read @TracingWoodgrains's [LW · GW] piece on Nonlinear and have further updated that the original post by @Ben Pace [LW · GW] was likely an error.

I have bet accordingly here.

comment by Nathan Young · 2024-10-09T12:39:08.273Z · LW(p) · GW(p)

It is disappointing/confusing to me that of the two articles I recently wrote, the one that was much closer to reality got a lot less karma.

- A new process for mapping discussions [LW · GW] is a summary of months of work that I and my team did on mapping discourse around AI. We built new tools and employed new methodologies. It got 19 karma.

- Advice for journalists [LW · GW] is a piece that I wrote in about 5 hours after perhaps 5 hours of experiences. It has 73 karma and counting.

I think this isn't much evidence, given it's just two pieces. But I do feel a pull towards coming up with theories rather than building and testing things in the real world. To the extent this pull is real, it seems bad.

If true, I would recommend both that more people build things in the real world and talk about them and that we find ways to reward these posts more, regardless of how alive they feel to us at the time.

(Aliveness being my hypothesis - many of us understand or have more live feelings about dealing with journalists than a sort of dry post about mapping discourse)

↑ comment by Seth Herd · 2024-10-09T15:11:44.777Z · LW(p) · GW(p)

Your post on journalists is, as I suspected, a lot better.

I bounced off the post about mapping discussions because it didn't make clear what potential it might have to be useful to me. The post on journalists drew me in and quickly made clear what its use would be: informing me of how journalists use conversations with them.

The implied claims that theory should be worth less than building or that time on task equals usefulness are both wrong. We are collectively very confused, so running around building stuff before getting less confused isn't always the best use of time.

↑ comment by Nathan Young · 2024-10-10T00:58:00.432Z · LW(p) · GW(p)

Yeah but the mapping post is about 100x more important/well informed also. Shouldn't that count for something? I'm not saying it's clearer, I'm saying that it's higher priority, probably.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-10-10T06:47:12.372Z · LW(p) · GW(p)

Why is it more important?

As a reaction to this shortform I looked at the mapping post and immediately bounced off. Echoing Seth, I didn't understand the point.

The journalist post, by contrast, resonated strongly with me. Obviously it's red meat for the commentariat here. It made me feel like (1) other people care about this problem in a real, substantive, and potentially constructive way; (2) there might be ways to make this genuinely better, and this is potentially a very high-impact lever.

↑ comment by MondSemmel · 2024-10-10T08:15:13.663Z · LW(p) · GW(p)

You may think the post is far more important and well informed, but if it isn't sufficiently clear, then maybe that didn't come across to your audience.

↑ comment by Steven Byrnes (steve2152) · 2024-10-09T19:20:49.891Z · LW(p) · GW(p)

I was relating pretty similar experiences here [LW(p) · GW(p)], albeit with more of a “lol post karma is stupid amiright?” vibe than a “this is a problem we should fix” vibe.

Your journalist thing is an easy-to-read blog post that strongly meshes with a popular rationalist tribal belief (i.e., “boo journalists”). Obviously those kinds of posts are going to get lots and lots of upvotes, and thus posts that lack those properties can get displaced from the frontpage. I have no idea what can be done about that problem, except that we can all individually try to be mindful about which posts we’re reading and upvoting (or strong-upvoting) and why.

(This is a pro tanto argument for having separate karma-voting and approval-voting for posts, I think.)

And simultaneously, we writers should try not to treat the LessWrong frontpage as our one and only plan for getting what we write in front of our target audience. (E.g. you yourself have other distribution channels.)

↑ comment by MondSemmel · 2024-10-09T13:12:22.554Z · LW(p) · GW(p)

I haven't read either post, but maybe this problem reduces partly to more technical posts getting fewer views, and thus less karma? One problem with even great technical posts is that very few readers can evaluate that such a post is indeed great. And if you can't tell whether a post is accurate, then it can feel irresponsible to upvote it. Even if your technical post is indeed great, it's not clear that a policy of "upvote all technical posts I can't judge myself" would make great technical posts win in terms of karma.

A second issue I'm just noticing is that the first post contains lots of text-heavy screenshots, and that has a bunch of downsides for engagement. Like, the blue font in the first screenshot is very small and thus hard to read. I read stuff in a read-it-later app (called Readwise Reader), incl. with text-to-speech, and neither the app nor the TTS work great with such images. Also, such images usually don't respect dark mode on either LW or other apps. You can't use LW's inline quote comments. And so on and so forth. Screenshots work better in a presentation, but not particularly well in a full essay.

Another potential issue is that the first post doesn't end on "Tell me what you think" (= invites discussion and engagement), but rather with a Thanks section (does anyone ever read those?) and then a huge skimmable section of Full screenshots.

I'm also noticing that the LW version of the first post is lacking the footnotes from the Substack version.

EDIT: And the title for the second post seems way better. Before clicking on either post, I have an idea what the second one is about, and none whatsoever what the first one is about. So why would I even click on the former? Would the readers you're trying to reach with that post even know what you mean by "mapping discussions"?

EDIT2: And when I hear "process", I think of mandated employee trainings, not of software solutions, so the title is misleading to me, too. Even "A new website for mapping discussions" or "We built a website for mapping discussions" would already sound more accurate and interesting to me, though I still wouldn't know what the "mapping discussions" part is about.

↑ comment by Viliam · 2024-10-10T11:56:58.637Z · LW(p) · GW(p)

Well, karma is not a perfect tool. It is good at keeping good stuff above zero and bad stuff below zero, by distributed effort. It is not good at quantifying how good or how bad the stuff is.

Solving alignment = positive karma. Cute kitten = positive karma. Ugly kitten = negative karma. Promoting homeopathy = negative karma.

It is a good tool for removing homeopathy and ugly kittens. Without it, we would probably have more of those. So despite all the disadvantages, I want the karma system to stay. Until perhaps we invent something better.

I think we currently don't have a formal tool for measuring "important" as a thing separate from "interesting" or "pleasant to read". The best you can get is someone quoting you approvingly.

↑ comment by Taleuntum · 2024-10-10T13:36:09.885Z · LW(p) · GW(p)

Tangential, but my immediate reaction to your example was "ugly kitten? All kittens are cute!", so I searched specifically for "ugly kitten" on Google and it turns out that you were right! There are a lot of ugly kittens even though I never saw them! This probably says something about society.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-10-09T12:55:20.127Z · LW(p) · GW(p)

Fwiw I loved your journalist post and I never even saw your other post (until now).

↑ comment by MichaelDickens · 2024-10-09T20:36:37.062Z · LW(p) · GW(p)

Often, you write something short that ends up being valuable. That doesn't mean you should despair about your longer and harder work being less valuable. Like if you could spend 40 hours a week writing quick 5-hour posts that are as well-received as the one you wrote, that would be amazing, but I don't think anyone can do that because the circumstances have to line up just right, and you can't count on that happening. So you have to spend most of your time doing harder and predictably-less-impactful work.

(I just left some feedback for the mapping discussion post on the post itself.)

↑ comment by cdt (nc) · 2024-10-09T21:46:49.280Z · LW(p) · GW(p)

Advice for journalists was a bit more polemical, which I think naturally leads to more engagement. But I'd like to say that I strongly upvoted the mapping discussions post and played around with the site quite a bit when it was first posted - it's really valuable to me.

Karma's a bit of a blunt tool - yes I think it's good to have posts with broad appeal but some posts are going to be comparatively more useful to a smaller group of people, and that's OK too.

↑ comment by Nathan Young · 2024-10-10T01:00:12.571Z · LW(p) · GW(p)

Sure but shouldn't the karma system be a prioritisation ranking, not just "what is fun to read?"

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-13T18:28:05.325Z · LW(p) · GW(p)

I think we should put less faith in the karma system on this site as a ranking system. I agree ranking systems are good to have, but I think short-term upvoting by readers, with winner-takes-most feedback mechanisms, is inherently ill-suited to this.

If we want a better ranking system, I think we'd need something like a set of voting options at the bottom of the post like:

- does this seem to have enduring value?
- is this a technical post which reports on a substantial amount of work done?
- would I recommend that a researcher in the field this post is in familiarize themself with this post?

and then also a score based on citations, as is done with academia.

Karma, as it stands, is something more like someone wandering by a poster and saying 'nice!'. LessWrong is a weird in-between zone with aspects of both social media and academic publishing.

↑ comment by Nathan Young · 2024-10-14T11:15:04.244Z · LW(p) · GW(p)

But isn't the point of karma to be a ranking system? Surely it's bad if it's a suboptimal one?

↑ comment by MondSemmel · 2024-10-09T13:26:52.128Z · LW(p) · GW(p)

Separate feedback / food for thought: You mention that your post on mapping discussions is a summary of months of work, and that the second post took 5h to write and received far more karma. But did you spend at least 5h on writing the first one, too?

↑ comment by Nathan Young · 2024-10-10T00:59:34.211Z · LW(p) · GW(p)

I would say I took at least 10 hours to write it. I rewrote it about 4 times.

↑ comment by MondSemmel · 2024-10-10T08:30:11.520Z · LW(p) · GW(p)

I see. Then I'll point to my feedback in the other comment [LW(p) · GW(p)] and say that the journalism post was likely better written despite your lower time investment. And that if you spend a lot of time on a post, I recommend spending more of that time on the title in particular, because of the outsized importance of a) getting people to click on your thing and b) having people understand what the thing you're asking them to click on even is. Here [LW(p) · GW(p)] are two long comments on this topic.

Separately, when it comes to the success of stuff like blog posts, I like the framing in Ben Kuhn's post Searching for outliers [LW · GW], about the implications for activities (like blogging) whose impacts are dominated by heavy-tailed outcomes.

↑ comment by StartAtTheEnd · 2024-10-11T00:23:14.348Z · LW(p) · GW(p)

Why does it confuse you? The attention something gets doesn't depend strongly on its quality, but on how accessible it is.

If I get a lot of karma/upvotes/thumbs/hearts/whatever online, then I feel bad, because it suggests I have written something poor.

My best comments are usually ignored, with the occasional reply from somebody who misunderstands me entirely, and the even rarer event that somebody understands me (this type is usually so aligned with what I wrote that they have nothing to add).

The nature of the normal distribution makes it so that popularity and quality never correlate very strongly. This is discouraging to people who do their best in some field with the hope that they will be recognized for it. I've seen many artists troubled by this as well: everything they consider a "masterpiece" is somewhat obscure, while most popular things go against their taste. An example that many can agree with is probably pop music, but I don't think any example exists which more than 50% of people agree with, because then said example wouldn't exist in the first place.

↑ comment by MondSemmel · 2024-10-14T13:50:15.244Z · LW(p) · GW(p)

This is far too cynical. Great writers (e.g. gwern, Scott Alexander, Matt Yglesias) can write excellent, technical posts and comments while still getting plenty of attention.

↑ comment by StartAtTheEnd · 2024-10-15T01:47:43.476Z · LW(p) · GW(p)

Gwern and Scott are great writers, which is different from writing great things. It's like high-purity silver rather than rough gold, if that makes sense.

I do think they write a lot of great things, but not excellent things. Posts like "Maybe Your Zoloft Stopped Working Because A Liver Fluke Tried To Turn Your Nth-Great-Grandmother Into A Zombie" are probably around the limit of how difficult an idea somebody can communicate while retaining some level of popularity. Somebody wanting to communicate ideas one or two standard deviations above this would find themselves in obscurity. I think there are more intelligent people out there sharing ideas which don't really reach anyone. Of course, it's hard for me to provide examples, as obscure things are hard to find, and I won't be able to prove that said ideas are good, for if they were easy to recognize as such, then they'd already be popular. And once you get abstract enough, the things you say will basically be indistinguishable from nonsense to anyone below a certain threshold of intelligence.

Of course, it may just be that high levels of abstraction aren't useful, leading intelligent people towards width and expertise with the mundane, rather than rabbit holes. Or it may be that people give up attempting to communicate certain concepts in language, and just make the attempt at showing them instead.

I saw a biologist on here comparing people to fire (as chemical processes) and immediately found the idea familiar as I had made the same connection myself before. To most people, it probably seems like a weird idea?

↑ comment by MondSemmel · 2024-10-15T02:51:35.750Z · LW(p) · GW(p)

The idea that popularity must be a sign of shallowness, and hence unpopularity or obscurity a sign of depth, sounds rather shallow to me. My attitude here is more like, if supposedly world-shattering insights can't be explained in relatively simple language, they either aren't that great, or we don't really understand them. Like in this Feynman quote:

Once I asked him to explain to me, so that I can understand it, why spin-1/2 particles obey Fermi-Dirac statistics. Gauging his audience perfectly, he said, "I'll prepare a freshman lecture on it." But a few days later he came to me and said: "You know, I couldn't do it. I couldn't reduce it to the freshman level. That means we really don't understand it."

↑ comment by StartAtTheEnd · 2024-10-15T11:01:36.696Z · LW(p) · GW(p)

I think it's necessarily true given the statistical distribution of things. If I say "There are necessarily fewer people with PhDs than with master's degrees, and necessarily fewer people with master's degrees than college graduates", you'd probably agree.

The theory that "If you understand something, you can explain it simply" is mostly true, but this does not make the explanation easy to understand, as simplicity is not ease (just try to explain enlightenment / the map-territory distinction to a stupid person). What you understand will seem trivial to you, and what you don't understand will seem difficult. This is just the mental representation of things getting more efficient, with us building mental shortcuts and getting used to patterns.

Proof: There are people who understand high-level mathematics, so they must be able to explain these concepts simply. In theory, they should be able to write a book of these simple concepts, which even 4th graders can read. Thus, we should already have plenty of 4th graders who understand high-level mathematics. But this is not the case; most 4th graders are still 10 years of education away from understanding things on a high level. Ergo, either the initial claim (that what you understand can be explained simply) is false, or else "explained simply" does not imply "understood easily".

The excessive humility is a kind of signaling or defense mechanism against criticism and excessive expectations from other people, and it's rewarded because of its moralistic nature. It's not true; it's mainly pleasant-sounding nonsense originating in herd morality.

comment by Nathan Young · 2024-05-21T16:08:41.532Z · LW(p) · GW(p)

A problem with overly kind PR is that many people know that you don't deserve the reputation. So if you start to fall, you can fall hard and fast.

Likewise, it incentivises investigations into a reputation that you can't back up.

If everyone thinks I am lovely, but I am two-faced, I create a juicy story any time I am cruel. Not so if I am known to be grumpy.

E.g. my sense is that EA did this a bit with the press tour around What We Owe The Future. It built up a sense of wisdom that wasn't necessarily deserved, so with FTX it all came crashing down.

Personally I don't want you to think I am kind and wonderful. I am often thoughtless and grumpy. I think you should expect a mediocre to good experience. But I'm not Santa Claus.

I am never sure whether rats are very wise or very naïve to push for reputation over PR, but I think it's much more sustainable.

@ESYudkowsky can't really take a fall for being goofy. He's always been goofy - it was priced in.

Many organisations think they are above maintaining the virtues they profess to possess, instead managing it with media relations.

In doing this they often fall harder eventually. Worse, they lose out on the feedback from their peers accurately seeing their current state.

Journalists often frustrate me as a group, but they aren't dumb. Whatever they think is worth writing, they probably have a deeper sense of what is going on.

Personally I'd prefer to get that in small sips, such that I can grow, than to have to drain my cup to the bottom.

comment by Nathan Young · 2024-12-16T09:06:25.937Z · LW(p) · GW(p)

Physical object.

I might (20%) make a run of buttons that say how long it has been since you pressed them. E.g. so I can push the button in the morning when I have put in my anti-baldness hair stuff and then not have to wonder whether I did.

Would you be interested in buying such a thing?

Perhaps they have a dry wipe section so you can write what the button is for.

If you would, can you upvote the attached comment?

↑ comment by Dagon · 2024-12-16T18:43:19.302Z · LW(p) · GW(p)

Probably not for me. I had a few projects using AWS IoT buttons (no display, but arbitrary code run for click, double-click, or long-click of a small battery-powered wifi button), but the value wasn't really there, and I presume adding a display wouldn't quite be enough to devote the counter space. Amusingly, it turns out the AWS version was EOL'd today - Learn about AWS IoT legacy services - AWS IoT Core

↑ comment by Nathan Young · 2024-12-16T09:06:40.444Z · LW(p) · GW(p)

Upvote to signal: I would buy a button like this, if they existed.

↑ comment by UnderTruth · 2024-12-16T15:00:02.125Z · LW(p) · GW(p)

A thought for a possible "version 2" would be to make them capable of reporting a push via Bluetooth or Wi-Fi, to track the action the button represents.

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2024-12-16T18:02:11.488Z · LW(p) · GW(p)

I think this would be missing the point. If it were "smart" like you describe, I definitely wouldn't buy it, and I wouldn't use it even if I got it for free: I'd just get an app on my phone. What I want from such an object is infallibility, and the dumber it is, the closer it's likely to get to that ideal.

↑ comment by Eli Tyre (elityre) · 2024-12-20T20:49:01.466Z · LW(p) · GW(p)

I use daily checklists, in spreadsheet form, for this.

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2024-12-16T17:44:23.146Z · LW(p) · GW(p)

Are you describing a stopwatch?

If you can get it to run off of ambient light with some built-in solar panels (like a calculator), yes, I would buy such a thing for ~$20.

↑ comment by Gurkenglas · 2024-12-16T17:18:16.188Z · LW(p) · GW(p)

Hang up a tear-off calendar?

comment by Nathan Young · 2024-07-23T16:51:47.724Z · LW(p) · GW(p)

Thanks to the people who use this forum.

I try and think about things better and it's great to have people to do so with, flawed as we are. In particular @KatjaGrace [LW · GW] and @Ben Pace [LW · GW].

I hope we can figure it all out.

comment by Nathan Young · 2024-05-30T16:36:29.160Z · LW(p) · GW(p)

Feels like FLI is a massively underrated org. Cos of the whole Vitalik donation thing, they have like $300mn.

↑ comment by habryka (habryka4) · 2024-05-30T22:46:27.126Z · LW(p) · GW(p)

Not sure what you mean by "underrated". The fact that they have $300MM from Vitalik but haven't really done much anyways was a downgrade in my books.

↑ comment by Nathan Young · 2024-06-23T13:03:50.791Z · LW(p) · GW(p)

Under-considered might be more accurate?

And yes, I agree that seems bad.

comment by Nathan Young · 2025-01-08T10:58:59.338Z · LW(p) · GW(p)

My bird flu risk dashboard is here:

If you find it valuable, you could upvote it on HackerNews:

https://news.ycombinator.com/item?id=42632552

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2025-01-08T18:22:04.663Z · LW(p) · GW(p)

I recommend making the title time-specific, since all the predictions you’re basing your estimate on are as well.

comment by Nathan Young · 2025-01-28T09:48:13.498Z · LW(p) · GW(p)

I wish we kept an upvotable list of journalists so we could track who is trusted in the community and who isn't.

Seems not hard. Just a page with all the names as comments. I don't particularly want to add people, so make the top level posts anonymous. Then anyone can add names and everyone else can vote if they are trustworthy and add comments of experiences with them.

comment by Nathan Young · 2024-05-24T19:43:25.195Z · LW(p) · GW(p)

Given my understanding of epistemic and necessary truths it seems plausible that I can construct epistemic truths using only necessary ones, which feels contradictory.

Eg 1 + 1 = 2 is a necessary truth

But 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 10 is epistemic. It could very easily be wrong if I have miscounted the number of 1s.

This seems to suggest that necessary truths are just "simple to check" and that sufficiently complex necessary truths become epistemic because of a failure to check an operation.

Similarly, "there are 180 degrees on the inside of a triangle" is only necessarily true in flat spaces such as ℝ². It might look necessarily true everywhere, but it's not true on a sphere. So what looks like a necessary truth is actually an epistemic one.
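For concreteness, the sphere case can be made quantitative with a standard result from spherical geometry (Girard's theorem, added here as an illustration rather than taken from the original): on a sphere of radius $R$, a triangle bounded by great-circle arcs with area $A$ has an interior angle sum that exceeds $\pi$ by $A/R^2$. The worked case takes one vertex at the north pole and two on the equator, 90° of longitude apart, so every angle is a right angle and the triangle covers one eighth of the sphere.

```latex
\alpha + \beta + \gamma = \pi + \frac{A}{R^2},
\qquad
A = \tfrac{1}{8}\cdot 4\pi R^2 = \tfrac{\pi R^2}{2}
\;\Longrightarrow\;
\alpha + \beta + \gamma = \pi + \tfrac{\pi}{2} = \tfrac{3\pi}{2} = 270^\circ .
```

As $A/R^2 \to 0$ (small triangles, or a very large sphere) the sum approaches $\pi$, which is one way to see why the planar 180° looks universal from everyday experience.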

What am I getting wrong?

↑ comment by JBlack · 2024-05-25T03:53:54.597Z · LW(p) · GW(p)

Is it a necessary non-epistemic truth? After all, it has a very lengthy partial proof in Principia Mathematica, and maybe they got something wrong. Perhaps you should check?

But then maybe you're not using a formal system to prove it, but just taking it as an axiom or maybe as a definition of what "2" means using other symbols with pre-existing meanings. But then if I define the term "blerg" to mean "a breakfast product with non-obvious composition", is that definition in itself a necessary truth?

Obviously if you mean "if you take one object and then take another object, you now have two objects" then that's a contingent proposition that requires evidence. It probably depends upon what sorts of things you mean by "objects" too, so we can rule that one out.

Or maybe "necessary non-epistemic truth" means a proposition that you can "grok in fullness" and just directly see that it is true as a single mental operation? Though, isn't that subjective and also epistemic? Don't you have to check to be sure that it is one? Was it a necessary non-epistemic truth for you when you were young enough to have trouble with the concept of counting?

So in the end I'm not really sure exactly what you mean by a necessary truth that doesn't need any checking. Maybe it's not even a coherent concept.

↑ comment by Drake Morrison (Leviad) · 2024-05-24T20:40:01.798Z · LW(p) · GW(p)

What do you mean by "necessary truth" and "epistemic truth"? I'm sorta confused about what you are asking.

I can be uncertain about the 1000th digit of pi. That doesn't make the digit being 9 any less valid. (Perhaps what you mean by necessary?) Put another way, the 1000th digit of pi is "necessarily" 9, but my knowledge of this fact is "epistemic". Does this help?

↑ comment by cubefox · 2024-05-25T18:32:39.222Z · LW(p) · GW(p)

Just a note, in conventional philosophical terminology you would say:

Eg 1 + 1 = 2 is an epistemic necessity

But 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 10 is an epistemic contingency.

One way to interpret this is to say that your degree of belief in the first equation is 1, while your degree of belief in the second equation is neither 1 nor 0.

Another way to interpret it is to say that the first is "subjectively entailed" by your evidence (your visual impression of the formula), but the latter is not, nor is its negation. Evidence $E$ subjectively entails a statement $H$ iff $P(H \mid E) = 1$, where $P$ is a probability function that describes your beliefs.

In general, philosophers distinguish several kinds of possibility ("modality").

- Epistemic modality is discussed above. The first equation seems epistemically necessary, the second epistemically contingent.

- With metaphysical modality (which roughly covers possibility in the widest natural sense of the term "possible"), both equations are necessary, if they are true. True mathematical statements are generally considered necessary, except perhaps for some more esoteric "made-up" math, e.g. more questionable large cardinal axioms. This type is usually implied when the type of modality isn't specified.

- With logical modality, both equations are logically contingent, because they are not logical tautologies. They instead depend on some non-logical assumptions like the Peano axioms. (But if logicism is true, both are actually disguised tautologies and therefore logically necessary.)

- Nomological (physical) modality: The laws of physics don't appear to allow them to be false, so both are nomologically necessary.

- Analytic/synthetic statements: Both equations are usually considered true in virtue of their meaning only, which would make them analytic (this is basically "semantic necessity"). For synthetic statements their meaning would not be sufficient to determine their truth value. (Though Kant, who came up with this distinction, argues that arithmetic statements are synthetic, although synthetic a priori, i.e. not requiring empirical evidence.)

Anyway, my opinion on this is that "1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 10" is interpreted as the statement "this bunch of ones [referring to screen] added together equal 10", which has the same truth value, but not the same meaning. The second meaning would be compatible with slightly more or fewer ones on screen than there actually are on screen, which would make the interpretation compatible with a similar false formula which is different from the actual one. The interpretation appears to be synthetic, while the original formula is analytic.

This is similar to how the expression "the Riemann hypothesis" is not synonymous with the Riemann hypothesis, since the former just refers to a statement instead of expressing it directly. You could believe "the Riemann hypothesis is true" without knowing the hypothesis itself. You could just mean "this bunch of mathematical notation expresses a true statement" or "the conjecture commonly referred to as 'Riemann hypothesis' is true". This belief expresses a synthetic statement, because it refers to external facts about what type of statement mathematicians happen to refer to exactly, which "could have been" (metaphysical possibility) a different one, and so could have had a different truth value.

Basically, for more complex statements we implicitly use indexicals ("this formula there") because we can't grasp it at once, resulting in a synthetic statement. When we make a math mistake and think something to be false that isn't, we don't actually believe some true analytic statement to be false, we only believe a true synthetic statement to be false.

comment by Nathan Young · 2024-01-02T10:56:49.872Z · LW(p) · GW(p)

I am trying to learn some information theory.

It feels like the bits of information between 50% and 25% and 50% and 75% should be the same.

But for probability p, the information is -log2(p).

But then the information of .5 -> .25 is 1 bit, but from .5 to .75 it is .41 bits. What am I getting wrong?

I would appreciate blogs and youtube videos.

Replies from: mattmacdermott↑ comment by mattmacdermott · 2024-01-02T12:16:05.257Z · LW(p) · GW(p)

I might have misunderstood you, but I wonder if you're mixing up calculating the self-information or surprisal of an outcome with the information gain on updating your beliefs from one distribution to another.

An outcome which has probability 50% contains $-\log_2 0.5 = 1$ bit of self-information, and an outcome which has probability 75% contains $-\log_2 0.75 \approx 0.415$ bits, which seems to be what you've calculated.

But since you're talking about the bits of information between two probabilities, I think the situation you have in mind is that I've started with 50% credence in some proposition A, and ended up with 25% (or 75%). To calculate the information gained here, we need to find the entropy of our initial belief distribution, and subtract the entropy of our final beliefs. The entropy of our beliefs about A is $H(p) = -p\log_2 p - (1-p)\log_2(1-p)$.

So for 50% -> 25% it's $H(0.5) - H(0.25) \approx 1 - 0.811 = 0.189$ bits.

And for 50% -> 75% it's $H(0.5) - H(0.75) \approx 1 - 0.811 = 0.189$ bits.

So your intuition is correct: these give the same answer.
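A minimal Python sketch of the two quantities in this exchange, assuming the entropy-difference framing of "information gained" used in the reply. The function names are illustrative, not from any library. It prints the surprisal values from the question and then the two entropy differences, which come out equal:

```python
import math

def surprisal(p: float) -> float:
    """Self-information, in bits, of observing an outcome that had probability p."""
    return -math.log2(p)

def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a belief assigning probability p to A and 1 - p to not-A."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def entropy_drop(p_before: float, p_after: float) -> float:
    """Entropy of the initial belief minus entropy of the final belief, in bits."""
    return binary_entropy(p_before) - binary_entropy(p_after)

print(round(surprisal(0.5), 3))           # 1.0
print(round(surprisal(0.75), 3))          # 0.415
print(round(entropy_drop(0.5, 0.25), 3))  # 0.189
print(round(entropy_drop(0.5, 0.75), 3))  # 0.189
```

Because binary entropy is symmetric around 0.5 (H(p) = H(1 - p)), moving from 50% to 25% and from 50% to 75% always gives the same entropy drop.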

comment by Nathan Young · 2024-12-12T11:38:03.409Z · LW(p) · GW(p)

Some thoughts on Rootclaim

Blunt, quick. Weakly held.

The platform has unrealized potential in facilitating Bayesian analysis and debate.

Either

- The platform could be a simple reference document

- The platform could be an interactive debate and truthseeking tool

- The platform could be a way to search the Rootclaim debates

Currently it does none of these and is frustrating to me.

Heading to the site I expect:

- to be able to search the video debates

- to be able to input my own probability estimates to the current Bayesian framework

- Failing this, I would prefer to just have a reference document which doesn't promise these

comment by Nathan Young · 2024-04-24T22:28:02.763Z · LW(p) · GW(p)

I think I'm gonna start posting top blogposts to the main feed (mainly from dead writers or people I predict won't care)

comment by Nathan Young · 2022-10-03T11:35:14.980Z · LW(p) · GW(p)

If you or a partner have ever been pregnant and done research on what is helpful and harmful, feel free to link it here and I will add it to the LessWrong pregnancy wiki page.

https://www.lesswrong.com/tag/pregnancy

comment by Nathan Young · 2024-05-15T19:59:10.313Z · LW(p) · GW(p)

I've made a big set of expert opinions on AI and my inferred percentages from them. I guess that some people will disagree with them.

I'd appreciate hearing your criticisms so I can improve them or fill in entries I'm missing.

https://docs.google.com/spreadsheets/d/1HH1cpD48BqNUA1TYB2KYamJwxluwiAEG24wGM2yoLJw/edit?usp=sharing

comment by Nathan Young · 2023-07-08T11:57:03.069Z · LW(p) · GW(p)

Epistemic status: written quickly, probably errors

Some thoughts on Manifund

- To me it seems like it will be the GiveDirectly of regranting (perhaps along with NonLinear) rather than the GiveWell

- It will be capable of rapidly scaling (especially if some regrantors are able to be paid for their time if they are dishing out a lot). It's not clear to me that's a bottleneck of granting orgs.

- There are benefits to centralised/closed systems. Just as GiveWell makes choices for people and so delivers 10x returns, I expect that Manifund will do worse, on average, than OpenPhil, which has centralised systems and centralised theories of impact.

- Not everyone wants their grant to be public. If you have a sensitive idea (easy to imagine in AI) you may not want to publicly announce you're trying to get funding.

- As with GiveDirectly, there is a real benefit of ~dignity/~agency. And I guess I think this is mostly vibes, but vibes matter. I can imagine crypto donors in particular finding a transparent system with individual portfolios much more attractive than OpenPhil. I can imagine that making a big difference on net.

- Notable that the donors aren't public. And I'm not being snide, I just mean it's interesting to me given the transparency of everything else.

- I love mechanism design. I love prizes, I love prediction markets. So I want this to work, but the base rate for clever mechanisms outcompeting bureaucratic ones seems low. But perhaps this finds a way to deliver and then outcompetes at scale (which is my theory of how GiveDirectly might end up outcompeting GiveWell)

Am I wrong?

comment by Nathan Young · 2025-01-28T09:36:18.505Z · LW(p) · GW(p)

This journalist wants to talk to me about the Zizian stuff.

https://www.businessinsider.com/author/rob-price

I know about as much as the median rat, but I generally think it's good to answer journalists on substantive questions.

Do you think this is a particularly good or bad idea? Do you have any comments about this particular journalist? Feel free to DM me.

Replies from: Viliam↑ comment by Viliam · 2025-02-01T21:48:45.539Z · LW(p) · GW(p)

Look at the "Selected stories" section of the page you linked. This is the kind of thing that person writes.

My experience with journalists (not this specific one) is negative. They usually come to you after the story is already written in their mind. What they are looking for are the words they could quote to support their story. So whatever you tell them, it probably won't change the article in general, but if they have already decided to say something, and you happen to say something that sounds similar, then that specific sentence (and nothing else) will be added to the story, along with your name, to make it seem that the story is the result of talking to multiple people.

Anything you say that would disagree with the article will simply be ignored, even if that means ignoring 99% of what you said. It doesn't matter. If they interview 10 people, they will get 10 sentences they can quote; that is quite enough for one article to make it seem like the story has a lot of outside support.

Writing negative stuff about Zizians sounds like... not bad, per se; they are indeed horrible people. But you don't know what else will be in the article, who else will be associated with them (and your sentence, taken out of original context, might support that association). Perhaps the conclusion will be that Zizians are representative of the rationalist community in general. Will you get the opportunity to see the new context for your words before they are published?

I think sending him a link to https://zizians.info/ should be safe, because most likely he can google it anyway. Answering a list of questions, using mostly one-sentence answers (to avoid the possibility of a tangential sentence being taken out of the whole paragraph), maaaaaybe okay. Anything else, I think there is 80% chance you will be unhappy about the outcome.

I generally think it's good to answer journalists on substantive questions.

Do you model journalists as truth-seeking people? I don't; based on my previous experience with some of them. (I could still make an exception for a specific person, if I considered their previous articles fair and well reasoned.)

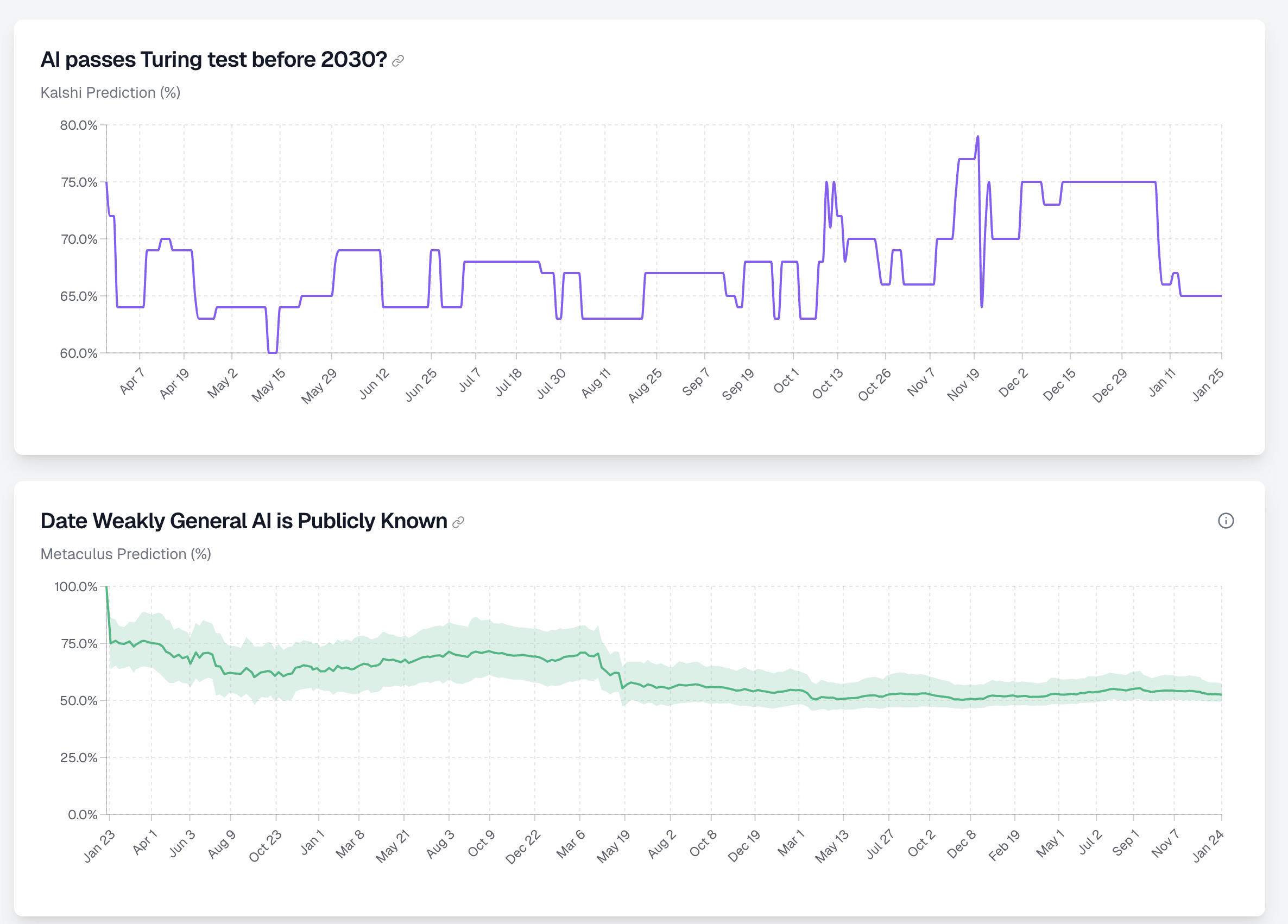

comment by Nathan Young · 2025-01-24T16:59:06.819Z · LW(p) · GW(p)

How might I combine these two datasets? One is a binary market, the other is a date market. So one is a single probability, P(Turing test before 2030), and the other is a CDF across a range of dates, P(weakly general AI publicly known before that date).

Here are the two datasets.

Suggestions:

- Fit a normal distribution to the Turing test market such that the 1st percentile is at the current day and P(X<2030) matches the probability for that data point

- Mirror the second data set but for each data point elevate the probabilities before 2030 such that P(X<2030) matches the probability for the first dataset
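Both suggestions can be sketched with Python's standard library (`statistics.NormalDist`). This is a minimal illustration under stated assumptions: dates are treated as fractional years, the date market is assumed to be available as sorted `(year, cumulative probability)` pairs with distinct years, and the example numbers are hypothetical rather than the actual market data. For suggestion 2, how probabilities after 2030 are adjusted (a linear remap that keeps the CDF increasing) is an assumption, since the suggestion only specifies the pre-2030 part.

```python
from statistics import NormalDist

def fit_normal(today: float, cutoff: float, p_before_cutoff: float) -> NormalDist:
    """Suggestion 1: normal over the arrival year with its 1st percentile at `today`
    and CDF(cutoff) equal to the binary market's probability."""
    z_today = NormalDist().inv_cdf(0.01)  # roughly -2.326
    z_cutoff = NormalDist().inv_cdf(p_before_cutoff)
    if z_cutoff <= z_today:
        raise ValueError("p_before_cutoff must exceed 0.01")
    # Two constraints, two unknowns: (today - mu) / sigma = z_today and
    # (cutoff - mu) / sigma = z_cutoff.
    sigma = (cutoff - today) / (z_cutoff - z_today)
    mu = today - z_today * sigma
    return NormalDist(mu, sigma)

def interpolate(cdf_points, x):
    """Linear interpolation in sorted (year, cumulative probability) pairs."""
    for (x0, y0), (x1, y1) in zip(cdf_points, cdf_points[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("x is outside the range of the data")

def rescale_cdf(cdf_points, cutoff, p_target):
    """Suggestion 2 (hypothetical helper): keep the date market's shape but make CDF(cutoff) == p_target.
    Points up to the cutoff are scaled by p_target / F(cutoff); later points are mapped
    linearly from [F(cutoff), 1] onto [p_target, 1] so the CDF stays increasing."""
    f_cut = interpolate(cdf_points, cutoff)
    rescaled = []
    for year, f in cdf_points:
        if year <= cutoff:
            new_f = f * p_target / f_cut
        else:
            new_f = p_target + (f - f_cut) * (1 - p_target) / (1 - f_cut)
        rescaled.append((year, new_f))
    return rescaled

# Hypothetical inputs, not the real market data.
turing_p = 0.4
date_market = [(2025, 0.05), (2030, 0.30), (2040, 0.70), (2060, 0.95)]
dist = fit_normal(today=2025.0, cutoff=2030.0, p_before_cutoff=turing_p)
print(dist.mean, dist.stdev)
print(rescale_cdf(date_market, 2030, turing_p))
```

Suggestion 1 bakes in a symmetric, thin-tailed shape; suggestion 2 borrows the shape from the date market instead, which is the trade-off the thoughts below point at.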

Thoughts:

Overall the problem is that one doesn't know what distribution to fit the single data point from the first dataset to. The second suggestion just uses the distribution of the second dataset for the first, but that seems quite complex.

"Why would you want to combine these datasets?"

Well, they are two different views on when something like AGI will appear. Seems good to combine them.

comment by Nathan Young · 2024-12-18T08:37:43.269Z · LW(p) · GW(p)

I don't really understand the difference between simulacra levels 2 and 3.

- Discussing reality

- Attempting to achieve results in reality by inaccuracy

- Attempting to achieve results in social reality by inaccuracy

I've never really got 4 either, but let's stick to 1 - 3.

Also they seem more like nested circles rather than levels - the jump between 2 and 3 (if I understand it correctly) seems pretty arbitrary.

Replies from: Viliam, AntonTimmer↑ comment by Viliam · 2024-12-19T09:12:07.785Z · LW(p) · GW(p)

I think 3 is more like: "Attempting to achieve results in social reality, by 'social accuracy', regardless of factual accuracy."

- 1 = telling the truth, plainly

- 2 = lying, for instrumental purposes (not social)

- 3 = tribal speech (political correctness, religious orthodoxy, uncritical contrarianism, etc.)

- 4 = buzzwords, used randomly

This is better understood as a 2×2 matrix, rather than a linear sequence of 4 steps.

- 1, 2 = about reality

- 3, 4 = about social reality

- 1, 3 = trying to have a coherent model of (real or social) reality

- 2, 4 = making a random move to achieve a short-term goal in (real or social) reality

↑ comment by AntonTimmer · 2024-12-19T09:37:48.904Z · LW(p) · GW(p)

Maybe a different framework to look at it:

- The map tries to represent the territory faithfully.

- The map consciously misrepresents the territory. But you can still infer some things about the territory through the malevolent map.

- The map does not represent the territory at all but pretends to be 1. The difference from 2 is that 2 still takes the territory as the base case and changes it, while 3 does not look at the territory at all.

- The map is the territory. Any reference on the map is just a reference to another part of the map. Claiming that the map might be connected to an external territory is taken as bullshit because people are living in the map. In the optimal case the map is at least self consistent.

↑ comment by Nathan Young · 2024-12-19T10:10:11.620Z · LW(p) · GW(p)

Do you find this an intuitive framework? I find the implication that conversation fits neatly into these boxes or that these are the relevant boxes a little doubtful.

Are you able to quickly give examples in any setting of what 1,2,3 and 4 would be?

Replies from: AntonTimmer↑ comment by AntonTimmer · 2025-01-09T09:08:14.398Z · LW(p) · GW(p)

Here is an example which I believe is directionally correct; it took me roughly 20 minutes to come up with it. The prompt is "How do living systems create meaning?":

- My life feels like it has meaning (sensory-motor behavior and conceptual intentional aspects). Looking at it through an evolutionary perspective, it is highly likely that meaning assignment is the way through which living systems survived. Thus, there has to be some base biological level at which meaning is created through cell-cell communication/bioelectricity/biochemistry/biosensing etc.

- Life is just made of atoms. Atoms are just automata. This implies there is no meaning at the atom level, and thus it cannot pop up at higher levels through emergence or some shit. You are delusional to believe there is some meaning assignment in life.

- Meaning is something that is defined through the language that we speak. It is well known that different cultures have different words and conceptual framing which implies that meaning is different in different cultures. Meaning thus only depends on language.

- Meaning is just a social construct and we can define anything to have meaning. Thus it doesn't matter what you find meaningful since it is just something you inherited through society and parenting.

I believe points 1-3 are fine, point 4 is kinda shaky.

comment by Nathan Young · 2024-10-14T11:42:47.200Z · LW(p) · GW(p)

Is there a summary of the rationalist concept of lawfulness anywhere? I am looking for one and can't find it.

Replies from: Gunnar_Zarncke, MondSemmel↑ comment by Gunnar_Zarncke · 2024-10-14T19:39:55.230Z · LW(p) · GW(p)

Can you say more about which concept you mean exactly?

↑ comment by MondSemmel · 2024-10-14T13:53:07.115Z · LW(p) · GW(p)

Does this tag on Law-Thinking help? Or do you mean "lawful" as in Dungeons & Dragons (incl. EY's Planecrash fic), i.e. lawful vs. neutral vs. chaotic?

comment by Nathan Young · 2024-10-10T07:57:20.501Z · LW(p) · GW(p)

I would have a dialogue with someone on whether Piper should have revealed SBF's messages. Happy to take either side.

Replies from: nikolas-kuhn↑ comment by Amalthea (nikolas-kuhn) · 2024-10-10T13:41:07.865Z · LW(p) · GW(p)

Is there a reason to be so specific, or could one equally well formulate this more generally?

comment by Nathan Young · 2024-09-24T11:00:22.144Z · LW(p) · GW(p)

What is the best way to take the average of three probabilities in the context below?

- There is information about a public figure

- Three people read this information and estimate the public figure's P(doom)

- (It's not actually P(doom), but it's their probability of something)

- How do I then turn those three probabilities into a single one?

Thoughts.

I currently think the answer is something like: for probabilities a, b, c, the group estimate is 2^((log2 a + log2 b + log2 c)/3). This feels like a way to average the bits that each person gets from the text.

I could just take the geometric or arithmetic mean, but somehow that seems off to me. I guess I might write my intuitions for those here for correction.

Arithmetic mean (a + b + c)/3. So this feels like uncertain probabilities will dominate certain ones. eg (.0000001 + .25)/2 = approx .125 which is the same as if the first person was either significantly more confident or significantly less. It seems bad to me for the final probability to be uncorrelated with very confident probabilities if the probabilities are far apart.

On the other hand in terms of EV calculations, perhaps you want to consider the world where some event is .25 much more than where it is .0000001. I don't know. Is the correct frame possible worlds or the information each person brings to the table?

Geometric mean (abc)^(1/3). I dunno, sort of seems like a midpoint.

Okay so I then did some thinking. Ha! Whoops.

While trying to think intuitively about what the geometric mean was, I noticed that 2^((log2 a + log2 b + log2 c)/3) = 2^(log2(abc)/3) = 2^(log2((abc)^(1/3))) = (abc)^(1/3). So the information mean I thought seemed right is the geometric mean. I feel a bit embarrassed, but also happy to have tried to work it out.

This still doesn't tell me whether the arithmetic worlds intuition or the geometric information interpretation is correct.

Any correction or models appreciated.

Replies from: niplav↑ comment by niplav · 2024-09-24T11:33:59.882Z · LW(p) · GW(p)

Relevant: When pooling forecasts, use the geometric mean of odds.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2024-09-24T13:39:27.807Z · LW(p) · GW(p)

+1. Concretely this means converting every probability p into odds p/(1-p), taking the geometric mean of those odds (multiply them, then take the n-th root), and then converting back to a probability via o/(1+o).

Intuition pump: Person A says 0.1 and Person B says 0.9. This is symmetric: if we instead study the negation, they swap places, so any reasonable aggregation should give 0.5.

Geometric mean does not; instead you get 0.3.

Arithmetic gets 0.5, but is bad for the other reasons you noted

Geometric mean of odds is sqrt(1/9 * 9) = 1, which maps to a probability of 0.5, while also e.g. treating low probabilities fairly
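The three pooling rules discussed in this thread can be compared directly. A minimal Python sketch (the function names are illustrative, not from a forecasting library); it reproduces the 0.1/0.9 intuition pump, and `geometric_mean` is written in the "information mean" form 2^(mean of log2 p) to make the equivalence from the original post concrete:

```python
import math

def arithmetic_mean(ps):
    """Plain average of the probabilities."""
    return sum(ps) / len(ps)

def geometric_mean(ps):
    """2 ** (mean of log2 p): the 'information mean', which equals the geometric mean."""
    return 2 ** (sum(math.log2(p) for p in ps) / len(ps))

def geometric_mean_of_odds(ps):
    """Convert each p to odds p / (1 - p), take their geometric mean, convert back."""
    mean_log_odds = sum(math.log(p / (1 - p)) for p in ps) / len(ps)
    pooled_odds = math.exp(mean_log_odds)
    return pooled_odds / (1 + pooled_odds)

ps = [0.1, 0.9]  # Person A and Person B from the intuition pump
print(round(arithmetic_mean(ps), 4), round(geometric_mean(ps), 4), round(geometric_mean_of_odds(ps), 4))
# → 0.5 0.3 0.5
```

Both the arithmetic mean and the geometric mean of odds respect the p vs. 1 - p symmetry; the plain geometric mean does not. Trying a forecast set like [0.0000001, 0.25] shows the other difference: the arithmetic mean stays near 0.125, while the geometric mean of odds is pulled strongly toward the very confident low forecast.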

comment by Nathan Young · 2024-08-22T10:55:08.409Z · LW(p) · GW(p)

Communication question.

How do I talk about low probability events in a sensical way?

eg "RFK Jr is very unlikely to win the presidency (0.001%)" This statement is ambiguous. Does it mean he's almost certain not to win or that the statement is almost certainly not true?

I know this sounds wonkish, but it's a question I come to quite often when writing. I like to use words but also include numbers in brackets or footnotes. But if there are several forecasts in one sentence with different directions it can be hard to understand.

"Kamala is a slight favourite in the election (54%), but some things are clearer. She'll probably win Virginia (83%) and probably loses North Carolina (43%)"

Something about the North Carolina subclause rubs me the wrong way. It requires several cycles to think "does the 43% mean the win or the loss". Options:

- As is

- "probably loses North Carolina (43% win chance)" - this takes up quite a lot of space while reading. I don't like things that break the flow

↑ comment by RHollerith (rhollerith_dot_com) · 2024-08-22T15:55:05.138Z · LW(p) · GW(p)

I only ever use words to express a probability when I don't want to take the time to figure out a number. I would write your example as, "Kamala will win the election with p = 54%, win Virginia with p = 83% and win North Carolina with p = 43%."

↑ comment by Dagon · 2024-08-22T15:25:34.806Z · LW(p) · GW(p)

Most communication questions will have different options depending on audience. Who are you communicating to, and how high-bandwidth is the discussion (lots of questions and back-and-forth with one or two people is VERY different from, say, posting on a public forum).

For your examples, it seems you're looking for one-shot outbound communication, to a relatively wide and mostly educated audience. I personally don't find the ambiguity in your examples particularly harmful, and any of them are probably acceptable.

If anyone complains or it bugs you, I'd EITHER go with

- an end-note that all percentages are chance-to-win

- a VERY short descriptor like (43% win) or even (43%W).

- reduce the text rather than the quantifier - "Kamala is 54% to win" without having to say that means "slight favorite".

↑ comment by Ben (ben-lang) · 2024-08-22T12:02:08.291Z · LW(p) · GW(p)

To me the more natural reading is "probably loses North Carolina (57%)".

57% being the chance that she "loses North Carolina". Whereas, as it is, you say "loses NC" but give the probability that she wins it. Which for me takes an extra scan to parse.

↑ comment by RamblinDash · 2024-08-22T11:35:23.177Z · LW(p) · GW(p)

Just move the percent? Instead of "RFK Jr is very unlikely to win the presidency (0.001%)", say "RFK Jr is very unlikely (0.001%) to win the presidency"

comment by Nathan Young · 2024-05-30T04:39:40.853Z · LW(p) · GW(p)

What are the LessWrong norms on promotion? Writing a post about my company seems off (but I think it could be useful to users). Should I write a quick take?

Replies from: kave↑ comment by kave · 2024-05-30T04:45:41.733Z · LW(p) · GW(p)

We have many org announcements on LessWrong! If your company is relevant to the interests of LessWrong, I would welcome an announcement post.

Org announcements are personal blog posts unless they are embedded inside of a good frontpage post.

comment by Nathan Young · 2024-04-26T16:35:45.870Z · LW(p) · GW(p)

Nathan and Carson's Manifold discussion.

As of the last edit my position is something like:

"Manifold could have handled this better, so as not to force everyone with large amounts of mana to have to do something urgently, when many were busy.

Beyond that they are attempting to satisfy two classes of people:

- People who played to donate can donate the full value of their investments

- People who played for fun now get the chance to turn their mana into money

To this end, and modulo the above hassle, this decision is good.

It is unclear to me whether there was an implicit promise that mana was worth 100 to the dollar. Manifold has made some small attempt to stick to this, but many untried avenues are available, as is acknowledging they will rectify the error if possible later. To the extent that there was a promise (uncertain) and no further attempt is made, I don't believe they really take that promise seriously.

It is unclear to me what I should take from this, though they have not acted as I would have expected them to. Who is wrong? Me, them, both of us? I am unsure."

Threaded discussion

Replies from: Nathan Young, Nathan Young, Nathan Young↑ comment by Nathan Young · 2024-04-26T16:38:52.092Z · LW(p) · GW(p)

Carson:

Ppl don't seem to understand that Manifold could literally not exist in a year or 2 if they don't find a product market fit

↑ comment by Nathan Young · 2024-04-26T16:44:29.782Z · LW(p) · GW(p)

Austin said they have $1.5 million in the bank, vs $1.2 million mana issued. The only outflows right now are to the charity programme, which even with a lot of outflows is only at $200k. They also recently raised at a $40 million valuation. I am confused by the talk of running out of money. They have a large user base that wants to bet and will do so at larger amounts if given the opportunity. I'm not so convinced that there is some tiny timeline here.

But if there is, then say so: "we know that we often talked about mana being eventually worth 100 mana per dollar, but we printed too much and we're sorry. Here are some reasons we won't devalue in the future..."

↑ comment by James Grugett (james-grugett) · 2024-04-26T18:10:23.703Z · LW(p) · GW(p)

If we could push a button to raise at a reasonable valuation, we would do that and back the mana supply at the old rate. But it's not that easy. Raising takes time and is uncertain.

Carson's prior is right that VC backed companies can quickly die if they have no growth -- it can be very difficult to raise in that environment.

Replies from: Nathan Young↑ comment by Nathan Young · 2024-04-26T21:22:38.026Z · LW(p) · GW(p)

If that were true, then there are many ways you could partially do that, e.g. give people a set of tokens representing their mana at the time of the devaluation, and if at some future point you raise, you could give them 10x those tokens back.

↑ comment by Nathan Young · 2024-04-26T16:36:48.073Z · LW(p) · GW(p)

seems like they are breaking an explicit contract (by pausing donations on ~a week's notice)

Replies from: Nathan Young↑ comment by Nathan Young · 2024-04-26T16:37:18.001Z · LW(p) · GW(p)

Carson's response:

weren't donations always flagged to be a temporary thing that may or may not continue to exist? I'm not inclined to search for links but that was my understanding.

↑ comment by Nathan Young · 2024-04-26T16:42:29.341Z · LW(p) · GW(p)

From https://manifoldmarkets.notion.site/Charitable-donation-program-668d55f4ded147cf8cf1282a007fb005

"That being said, we will do everything we can to communicate to our users what our plans are for the future and work with anyone who has participated in our platform with the expectation of being able to donate mana earnings."

"everything we can" is not a couple of weeks' notice and a lot of hassle. Am I supposed to trust this organisation in future with my real money?

↑ comment by James Grugett (james-grugett) · 2024-04-26T18:17:43.102Z · LW(p) · GW(p)

We are trying our best to honor mana donations!

If you are inactive you have until the end of the year to donate at the old rate. If you want to donate all your investments without having to sell each individually, we are offering you a loan to do that.

We removed the charity cap of $10k donations per month, which is going beyond what we previously communicated.

Replies from: Nathan Young, Nathan Young↑ comment by Nathan Young · 2024-04-26T21:28:26.133Z · LW(p) · GW(p)

Nevertheless lots of people were hassled. That has real costs, both to them and to you.

↑ comment by Nathan Young · 2024-04-26T20:18:34.198Z · LW(p) · GW(p)

I’m discussing with Carson. I might change my mind, but I don’t know that I’ll argue with both of you at once.

↑ comment by Nathan Young · 2024-04-26T16:41:14.268Z · LW(p) · GW(p)

Well, they have received much more in grants than has been spent, so there were ways to avoid this abrupt change:

"Manifold for Good has received grants totaling $500k from the Center for Effective Altruism (via the FTX Future Fund) to support our charitable endeavors."

Manifold has donated $200k so far. So there is $300k left. Why not at least, say "we will change the rate at which mana can be donated when we burn through this money"

(via https://manifoldmarkets.notion.site/Charitable-donation-program-668d55f4ded147cf8cf1282a007fb005 )

↑ comment by Nathan Young · 2024-04-26T16:36:26.185Z · LW(p) · GW(p)

seems like they are breaking an implicit contract (that 100 mana was worth a dollar)

Replies from: Nathan Young↑ comment by Nathan Young · 2024-04-26T16:37:56.719Z · LW(p) · GW(p)

Carson's response:

There was no implicit contract that 100 mana was worth $1 IMO. This was explicitly not the case given CFTC restrictions?

↑ comment by Nathan Young · 2024-04-26T16:43:36.942Z · LW(p) · GW(p)

Austin took his salary in mana, an often-cited incentive for him to want mana to become valuable, presumably at that rate.

I recall comments like 'we pay 250 mana per user in referrals because we reckon we'd pay about $2.50', and likewise in the in-person mana auction. I'm not saying it was an explicit contract, but there were norms.

comment by Nathan Young · 2024-04-20T11:24:08.702Z · LW(p) · GW(p)

I recall a comment on the EA forum about Bostrom donating a lot to global dev work in the early days. I've looked for it for 10 minutes. Does anyone recall it or know where donations like this might be recorded?

comment by Nathan Young · 2023-09-26T16:49:14.079Z · LW(p) · GW(p)

No Petrov Day? I am sad.

Replies from: Dagon, Richard_Kennaway↑ comment by Richard_Kennaway · 2023-09-27T09:33:31.417Z · LW(p) · GW(p)

There is an ongoing Petrov Day poll. I don't know if everyone on LW is being polled.

comment by Nathan Young · 2023-07-19T10:43:42.312Z · LW(p) · GW(p)

Why you should be writing on the LessWrong wiki.

There is way too much to read here, but if we all took pieces and summarised them in their respective tags, then we'd have a much denser resource that would be easier to understand.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-07-19T12:00:58.583Z · LW(p) · GW(p)

There are currently no active editors or a way of directing sufficient-for-this-purpose traffic to new edits, and on the UI side no way to undo an edit, an essential wiki feature. So when you write a large wiki article, it's left as you wrote it, and it's not going to be improved. For posts, review related to tags is in voting on the posts and their relevance, and even that is barely sufficient to get good relevant posts visible in relation to tags. But at least there is some sort of signal.

I think your article on Futarchy illustrates this point. So a reasonable policy right now is to keep all tags short. But without established norms that live in minds of active editors, it's not going to be enforced, especially against large edits that are written well.

Replies from: Nathan Young↑ comment by Nathan Young · 2023-07-21T09:29:10.948Z · LW(p) · GW(p)

Thanks for replying.

Would you revert my Futarchy edits if you could?

I think reversion is kind of overpowered. I'd prefer reverting chunks.

I don't see the logic that says we should keep tags short. That just seems less useful.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-07-21T13:23:13.193Z · LW(p) · GW(p)

I don't see the logic that says we should keep tags short.

The argument is that with the current level of editor engagement, only short tags have any chance of actually getting reviewed and meaningfully changed if that's called for. It's not about the result of a particular change to the wiki, but about the place where the trajectory of similar changes plausibly takes it in the long run.

I think reversion is kind of overpowered.

A good thing about the reversion feature is that reversion can itself be reverted, and so it's not as final as when it's inconvenient to revert the reversions. This makes edit wars more efficient, more likely to converge on a consensus framing rather than with one side giving up in exhaustion.

Would you revert my Futarchy edits if you could?

The point is that absence of the feature makes engagement with the wiki less promising, as it becomes inconvenient and hence infeasible in practice to protect it in detail, and so less appealing to invest effort in it. I mentioned that as a hypothesis for explaining currently near-absent editor engagement, not as something relevant to reverting your edits.

Reverting your edits would follow from a norm that says such edits are inappropriate. I think this norm would be good, but it's also clearly not present, since there are no active editors to channel it. My opinion here only matters as much as the arguments around it convince you or other potential wiki editors, the fact that I hold this opinion shouldn't in itself have any weight. (So to be clear, currently I wouldn't revert the edits if I could. I would revert them only if there were active editors and they overall endorsed the norm of reverting such edits.)

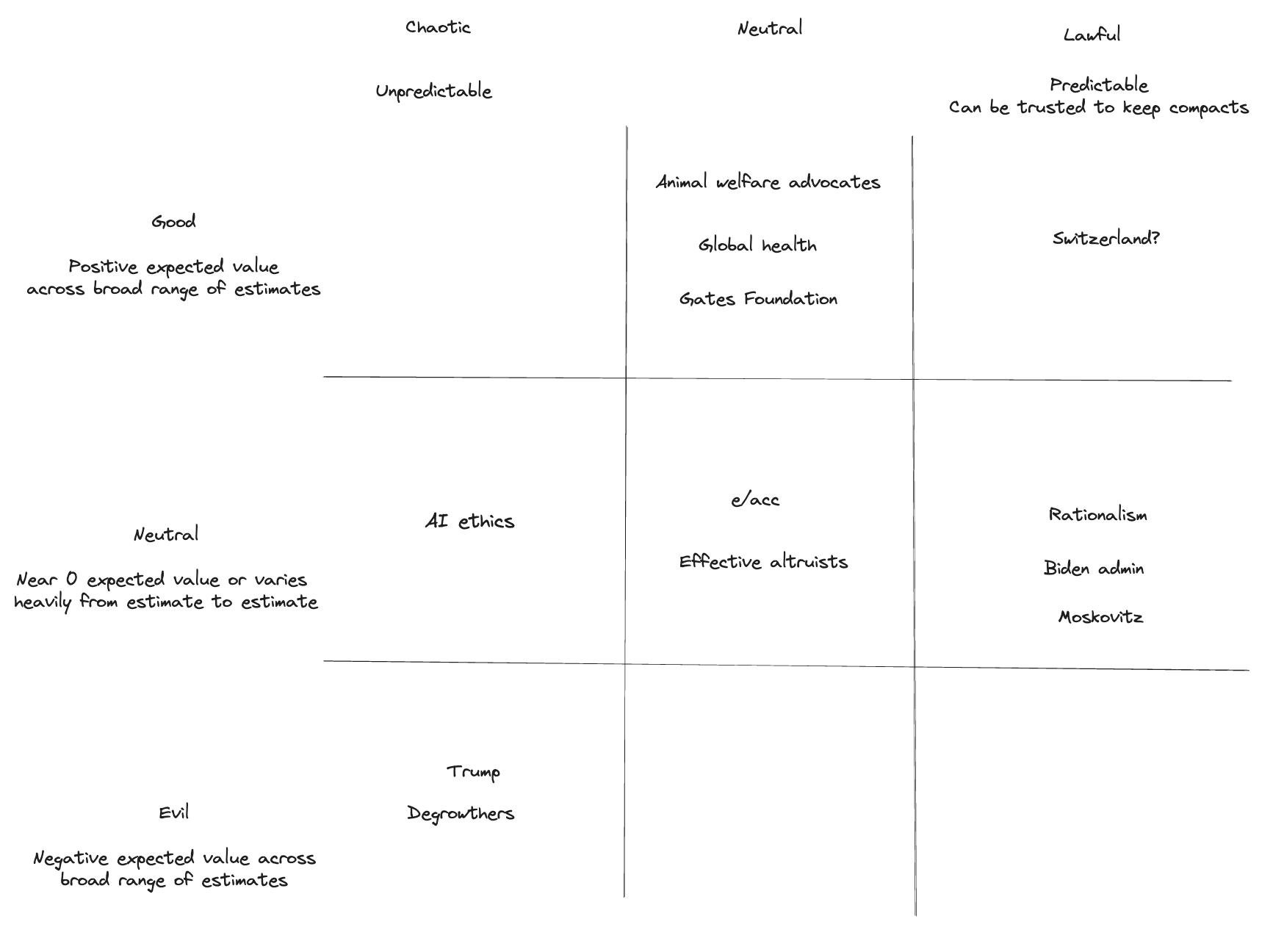

comment by Nathan Young · 2024-06-30T12:48:32.097Z · LW(p) · GW(p)

Here is a 5-minute, spicy take on an alignment chart.

What do you disagree with?

To try and preempt some questions:

Why is rationalism neutral?

It seems pretty plausible to me that if AI is bad, then rationalism did a lot to educate and spur on AI development. Sorry folks.

Why are e/accs and EAs in the same group?

In the quick moments I took to make this, I found both EA and E/acc pretty hard to predict and pretty uncertain in overall impact across some range of forecasts.

Replies from: Zack_M_Davis, Seth Herd, quetzal_rainbow↑ comment by Zack_M_Davis · 2024-06-30T17:40:09.576Z · LW(p) · GW(p)

It seems pretty plausible to me that if AI is bad, then rationalism did a lot to educate and spur on AI development. Sorry folks.

What? This apology makes no sense. Of course rationalism is Lawful Neutral. The laws of cognition aren't, can't be, on anyone's side.

Replies from: programcrafter, Nathan Young↑ comment by ProgramCrafter (programcrafter) · 2024-07-25T12:28:26.808Z · LW(p) · GW(p)

I disagree with "of course". The laws of cognition aren't on any side, but human rationalists presumably share (at least some) human values and intend to advance them; insofar as they are more successful than non-rationalists, this qualifies as Good.

↑ comment by Nathan Young · 2024-07-01T10:20:47.146Z · LW(p) · GW(p)

So by my metric, Yudkowsky and Lintamande's Dath Ilan isn't neutral, it's quite clearly lawful good, or attempting to be. And yet they care a lot about the laws of cognition.

So it seems to me that the laws of cognition can (should?) drive towards flourishing rather than pure knowledge increase. There might be things that we wish we didn't know for a bit. And ways to increase our strength to heal rather than our strength to harm.

To me it seems a better rationality would be lawful good.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2024-07-01T11:06:45.514Z · LW(p) · GW(p)

The laws of cognition are natural laws. Natural laws cannot possibly “drive towards flourishing” or toward anything else.

Attempting to make the laws of cognition “drive towards flourishing” inevitably breaks them.

↑ comment by cubefox · 2024-07-06T11:54:38.156Z · LW(p) · GW(p)

A lot of problems arise from inaccurate beliefs rather than bad goals. E.g., suppose both the capitalists and the communists are in favor of flourishing, but they have different beliefs about how best to achieve it. If we pick a bad policy in pursuit of a noble goal, bad things will likely still follow.

↑ comment by Seth Herd · 2024-06-30T22:53:46.690Z · LW(p) · GW(p)

Interesting. I always thought the D&D alignment chart was just a random first stab at quantizing a standard superficial Disney attitude toward ethics. This modification seems pretty sensible.

I think your good/evil axis is correct in terms of a deeper sense of the common terms. Evil people typically don't try to harm others; they just don't care, so their efforts to help themselves and their friends are prone to harming others. Being good means being good to everyone, not just your favorites. It's the size of your circle of compassion. Outright malignancy, cackling about others' suffering, is pretty eye-catching when it happens (and it does), but I'd say the vast majority of harm in the world has been done by people who are merely not much concerned with collateral damage. Thus, I think those people deserve the term evil, lest we focus on the wrong thing.

Predictable/unpredictable seems like a perfectly good alternate label for the chaotic/lawful axis. In some adversarial situations, it makes sense to be unpredictable.

One big question is whether you're referring to intentions or likely outcomes in your expected value (which I assume is expected value for all sentient beings or something). A purely selfish person without much ambition may actually be a net good in the world; they work for the benefit of themselves and those close enough to be critical for their wellbeing, and they don't risk causing a lot of harm, since that might cause blowback. The same personality put in a position of power might do great harm, ordering an invasion or employee downsizing to benefit themselves and their family while greatly harming many.

↑ comment by Nathan Young · 2024-07-01T10:17:51.720Z · LW(p) · GW(p)

Yeah, I find the intention vs. outcome thing difficult.

What do you think of "average expected value across small perturbations in your life"? For example, if you accidentally hit Churchill with a car and so cause the UK to lose WW2, that feels notably less bad than deliberately trying to kill a much smaller number of people. In most nearby universes, you didn't kill Churchill, but in most nearby universes, the deliberate killer did kill all those people.

↑ comment by quetzal_rainbow · 2024-06-30T13:46:24.819Z · LW(p) · GW(p)

Chaotic Good: pivotal act

Lawful Evil: "situational awareness"

comment by Nathan Young · 2024-03-11T18:07:51.242Z · LW(p) · GW(p)

I did a quick community poll - Community norms poll (2 mins)

I think it went pretty well. What do you think next steps could/should be?

Here are some points with a lot of agreement.

comment by Nathan Young · 2023-12-15T10:06:30.567Z · LW(p) · GW(p)

Things I would do dialogues about:

(Note: I may change my mind during these discussions, but if I do, I will say so.)

- Prediction is the right frame for most things

- Focus on world states not individual predictions

- Betting on wars is underrated

- The UK House of Lords is okay actually

- Immigration should be higher but in a way that doesn't annoy everyone and cause backlash

comment by Nathan Young · 2023-12-09T18:47:04.943Z · LW(p) · GW(p)

I appreciate reading women talk about what is good sex for them. But it's a pretty thin genre, especially with any kind of research behind it.

So I'd recommend this (though it is paywalled):

https://aella.substack.com/p/how-to-be-good-at-sex-starve-her?utm_source=profile&utm_medium=reader2

Also I subscribed to this for a while and it was useful:

https://start.omgyes.com/join

↑ comment by RHollerith (rhollerith_dot_com) · 2023-12-09T18:58:40.553Z · LW(p) · GW(p)

You don't want to warn us that it is behind a paywall?

↑ comment by Nathan Young · 2023-12-09T21:01:31.194Z · LW(p) · GW(p)

I didn't think it was relevant, but happy to add it.

comment by Nathan Young · 2023-10-30T10:00:43.395Z · LW(p) · GW(p)

I suggest that rats should use https://manifold.love/ as the Schelling dating app. It has long profiles, and you can bet on whether other people will get on.

What more could you want!

I am somewhat biased because I've bet that it will be a moderate success.

comment by Nathan Young · 2023-09-11T14:03:25.274Z · LW(p) · GW(p)

Relative Value Widget

It gives you sets of donations and you have to choose which you prefer. If you want you can add more at the bottom.

comment by Nathan Young · 2023-08-31T15:44:57.683Z · LW(p) · GW(p)

Other things I would like to be able to express anonymously on individual comments:

- This is poorly framed - Sometimes I neither want to agree nor disagree. I think the comment is orthogonal to reality, and agreement and disagreement both push away from truth.

- I don't know - If a comment is getting a lot of agreement/disagreement, it would also be interesting to see whether there is a lot of uncertainty.

comment by Nathan Young · 2022-09-23T17:47:07.108Z · LW(p) · GW(p)

It's a shame the wiki doesn't support the draft google-docs-like editor. I wish I could make in-line comments while writing.

comment by Nathan Young · 2024-12-13T07:10:32.612Z · LW(p) · GW(p)

Politics is the Mindfiller

There are many things to care about and I am not good at thinking about all of them.

Politics has many many such things.

Do I know about:

- Crime stats

- Energy generation

- Hiring law

- University entrance

- Politicians' political beliefs

- Politicians' personal lives

- Healthcare

- Immigration

And can I actually be confident that the things you say are the case? Or do I have a surface-level $100 understanding?

Politics may or may not be the mindkiller, whatever Yud meant by that, but for me it is the mindfiller: it's just a huge amount of work to stay on top of.

I think it would be healthier for me to focus on a few areas and then say I don't know about the rest.

↑ comment by Gunnar_Zarncke · 2024-12-13T08:49:45.839Z · LW(p) · GW(p)

I do not follow German/EU politics for that reason. I did follow the US elections out of interest and believed that I would be sufficiently detached and neutral, and it still took some share of my attention.

In terms of topics (generally, not EU or US), I think it makes sense to have an idea of crime, healthcare, etc., but not on a day-by-day basis, because there is too much short-term information warfare going on (see below). Following decent bloggers or reading papers about longer-term trends makes sense, though.

- Politicians' political beliefs

- Politicians' personal lives

I think that is almost hopeless without deep inside knowledge. There is too much Simulacrum Levels 3 and 4 communication going on. When a politician says, "I will make sure that America produces more oil," what does that mean? It surely doesn't mean that the politician will make sure that America produces more oil. It means (or could mean):

- The general population hears: "Oil prices will go down."

- Oil-producers hear: "Regulations may be relaxed about producing oil in America."

- Other countries hear: "America wants to send us a signal that they may compete on oil."

- ...

Who are the parties the message is directed to, and how will they hear it? It is hard to know without a lot of knowledge about the messaging involved. It is a bit like the stock/crypto market: when you buy (or sell), you have to know why the person selling (or buying) the share is doing so. If you don't know, then you are likely the one making a loss. Likewise, if you don't know who the message is directed to, you cannot interpret it properly.

And you can't go by the literal words. Or rather, the literal words are likely directed to somebody too (probably intellectuals, but what do I know) and likely intended to distract them.

↑ comment by WannabeChthonic · 2024-12-13T11:23:36.686Z · LW(p) · GW(p)

Early on, I specialized politically in only a few areas (digital sovereignty, privacy, computer security) and started being politically active in those areas. I actively decided that other areas, such as climate change, housing, diet, ..., are already the focus of many other activists, and that I can probably do more good by specializing in some areas instead of trying to be well-read in all of them.

Personally, I believe I only need to be informed when I intend to act. I do intend to act on the new German ePA law, so I inform myself and do activism; I do not intend to act on home-heating laws, so any effort spent researching them beyond the basics would be wasted.