Posts

Comments

Systemic opacity, state-driven censorship, and state control of the media means AGI development under direct or indirect CCP control would probably be less transparent than in the US, and the world may be less likely to learn about warning shots, wrongheaded decisions, reckless behaviour, etc.

That's screened off by actual evidence, which is, top labs don't publish much no matter where they are, so I'd only agree with "equally opaque".

Aidan McLaughlin (OpenAI): ignore literally all the benchmarks the biggest o3 feature is tool use. Ofc it’s smart, but it’s also just way more useful. >deep research quality in 30 seconds >debugs by googling docs and checking stackoverflow >writes whole python scripts in its CoT for fermi estimates McKay Wrigley: 11/10

Newline formatting is off (and also for many previous posts).

I'm not sure if this will be of any use, since your social skills will surely be warped when you expect iterating on them (in a manner like radical transparency reduces awareness of feelings ).

Most cases, you could build a mini-quadcopter, "teach" it some tricks and try showcasing it, having video as a side effect!

Browsing through recent rejects, I found an interesting comment that suggests an automatic system "build a prior on whether something is true by observing social interactions around it vs competing ideas".

@timur-sadekov While it will fail most certainly, I do remember that the ultimate arbiter is experiment, so I shall reserve the judgement. Instead, I'm calling for you to test the idea over prediction markets (Manifold, for instance) and publish the results.

The AI industry is different—more like biology labs testing new pathogens: The biolab must carefully monitor and control conditions like air pressure, or the pathogen might leak into the external world.

This looks like a cached analogy, because given previous paragraph this would fit more: "the AI industry is different, more like testing planes; their components can be internally tested, but eventually the full plane must be assembled and fly, and then it can damage the surroundings".

Same goes for AI: If we don’t keep a watchful eye, the AI might be able to escape—for instance, by exploiting its role in shaping the security code meant to keep the AI locked inside the company’s computers.

This seems very likely to happen! We've already seen that AIs improving code can remove its essential features, so it wouldn't be surprising for some security checks to disappear.

Based on this, I've decided to upvote this post weakly only.

I suggest additional explanation.

The bigger the audience is, the more people there are who won't know a specific idea/concept/word (xkcd's comic #1053 "Ten Thousand" captures this quite succinctly), so you'll simply have to shorten.

I took logarithm of sentence length and linearly fitted it against logarithm of world population (that shouldn't really be precise since authors presumably mostly cared about their society, but that would be more time-expensive to check).

Relevant lines of Python REPL

>>> import math

>>> wps = [49, 50, 42, 20, 21, 14, 18, 12]

>>> pop = [600e6, 700e6, 1e9, 1.4e9, 1.5e9, 2.3e9, 3.5e9, 6e9]

>>> [math.log(w) for w in wps]

[3.8918202981106265, 3.912023005428146, 3.7376696182833684, 2.995732273553991, 3.044522437723423, 2.6390573296152584, 2.8903717578961645, 2.4849066497880004]

>>> [math.log(p) for p in pop]

[20.21244021318042, 20.36659089300768, 20.72326583694641, 21.059738073567623, 21.128730945054574, 21.556174959881517, 21.97602880544178, 22.515025306174465]

>>> 22.51-20.21

2.3000000000000007

>>> 3.89-2.48

1.4100000000000001

>>> 2.3/1.41

1.6312056737588652

>>> [round(math.exp(26.41 - math.log(w)*1.63)/1e9, 3) for w,p in zip(wps,pop)] # predicted population, billion

[0.518, 0.502, 0.667, 2.234, 2.063, 3.995, 2.652, 5.136]

>>> [round(math.exp(26.41 - math.log(w)*1.63)/1e9 - p/1e9, 3) for w,p in zip(wps,pop)] # prediction off by, billion

[-0.082, -0.198, -0.333, 0.834, 0.563, 1.695, -0.848, -0.864]

Given the gravity of Sam Altman's position at the helm of the company leading the development of an artificial superintelligence which it does not yet know how to align -- to imbue with morality and ethics -- I feel Annie's claims warrant a far greater level of investigation than they've received thus far.

Then there's a bit of shortage of something public... and ratsphere-adjacent... maybe prediction markets?

I can create (subsidize) a few, given resolution criteria.

Alphabet of LW Rationality, special excerpts

- Cooperation: you need to cooperate with yourself to do anything. Please do so iff you are building ASI;

- Defection: it ain't no defection if you're benefitting yourself and advancing your goals;

- Inquiry: a nice way of making others work for your amusement or knowledge expansion;

- Koan: if someone is not willing to do research for you, you can waste their time on pondering a compressed idea.

This concludes my April the 1st!

I claim that I exist, and that I am now going to type the next words of my response. Both of those certainly look true. As for whether these beliefs are provable, I do not particularly care; instead, I invoke the nameless:

Every step of your reasoning must cut through to the correct answer in the same movement. More than anything, you must think of carrying your map through to reflecting the territory.

My black-box functions yield a statement "I exist" as true or very probable, and they are also correct in that.

After all, If I exist, I do not want to deny my existence. If I don't exist... well let's go with the litany anyways... I want to accept I don't exist. Let me not be attached to beliefs I may not want.

What's useful about them? If you are going to predict (the belief in) qualia, on the basis of usefulness , you need to state the usefulness.

There might be some usefulness!

The statement I'd consider is "I am now going to type the next characters of my comment". This belief turns out to be true by direct demonstration, it is not provable because I could as well leave the commenting to tomorrow and be thinking "I am now going to sleep", not particularly justifiable in advance, and it is useful for making specific plans that branch less on my own actions.

I object to the original post because of probabilistic beliefs, though.

I don’t think there is a universal set of emojis that would work on every human, but I totally think that there is a set of such emojis (or something similar) that would work on any given human at any given time, at least a large percentage of the time, if you somehow were able to iterate enough times to figure out what it is.

Probably something with more informational content than emojis, like images (perhaps slightly animated). Trojan Sky, essentially.

The core idea behind confidentiality is to stop social pressure (whether on the children or the parents).

Parents have a strong right to not use genomic engineering technology, and if they do use it then they have a strong right to not alter any given trait.

How to prevent all the judgements better than to make information sharing completely voluntary?

Important, and would be nice even if passed as-is! Admittedly, there's some space to have even stronger ideas, like...

"Confidentiality of genomic interventions. Human has natural right for details of which aspects of their genome were custom-chosen, if any, to be kept confidential" (probably also prohibit parents/guardians from disclosing that, since knowledge cannot be sealed back into the box).

A nice scary story! How fortunate that it is fiction...

... or is it? If we get mind uploads, someone will certainly try to gradient-ascent various stimulus (due to simple hostility or Sixth Law of Human Stupidity), and I do believe the underlying fact that a carefully crafted image could hijack mental processes to some point.

It's 16:9 (modulo possible changes in the venue).

I have seen your banner and it is indeed one of the best choices out there! For announcing the event I preferred another one.

Hi! By any chance, do you have HPMOR banners (to display on screen, for instance)?

I get the impression that you're conflating two meanings of «personal» - «private» and «individual». The fact that I might feel uncomfortable discussing this in a public forum doesn’t mean it «only works for me» or that it «doesn’t work, but I’m shielded from testing my beliefs due to privacy». There are always anonymous surveys, for example. Perhaps you meant something else?

I meant to say that private values/things are unlikely to coincide between different people, though now I'm a bit less sure.

Moreover, even if I were to provide yet another table of my own subjective experience ratings, like the ones here, you likely wouldn’t find it satisfactory — such tables already exist, with far more respondents than just myself, and you aren’t satisfied. Probably because you disagree with the methodology — for instance, since measuring «what people call pleasurable» is subject to distortions like the compulsions mentioned earlier.

I was unfamiliar with those, thanks for pointing! I have an idea to estimate if "optimization-power" could replace existing currencies, and those surveys' data seems like it might be useful.

Seeing you know the exact numbers, I wonder if you could connect with those other families? It's harder to get the best outcome if players do not cooperate and do not even know the wishes of others. Adding that this would be a valuable socializing opportunity would be somewhat hypocritical from me but it's still so.

Upvoted as a good re-explanation of CEV complexity in simpler terms! (I believe LW will benefit from recalling the long understood things so that it has a chance on predicting future in greater detail.)

In essence, you prove the claim "Coherent Extrapolated Volition would not literally include everything desirable happening effortlessly and everything undesirable going away". Would I be wrong to guess it argues against position in https://www.lesswrong.com/posts/AfAp8mEAbuavuHZMc/for-the-sake-of-pleasure-alone?

That said, current wishes of many people include things they want being done faster and easier; it's just the more you extrapolate the less fraction wants that level of automation - just more divergence as you consider higher scale.

And introspectively, I don’t see any barriers to comparing love with orgasm, with good food, with religious ecstasy, all within the same metric, even though I can’t give you numbers for it.

Why not? It'd be interesting to hear valuations from your experience and experiments, if that wasn't very personal.

(On the other hand, if it IS too personal, then who would choose to write the metric down for an automatic system optimizing it by their whims?)

The follow-up post has a very relevant comment:

Can you just give every thief a body camera?

Well of course this is illegal under current US laws, however this would help against being unjustly accused as in your example of secondary crime. It would also be helpful against repeat offences for a whole range of other crimes.

I'd maintain that those problems already exist in 20M-people cities and will not necessarily become much worse. However, by increasing city population you bring in more people into the problems, which doesn't seem good.

Is there any engineering challenge such as water supply that prevents this from happening? Or is it just lack of any political elites with willingness + engg knowledge + governing sufficient funds?

That dichotomy is not exhaustive, and I believe going through with the proposal will necesarily make the city inhabitants worse off.

- Humans' social machinery is not suited to live in such large cities, as of the current generations. Who to get acquainted with, in the first place? Isn't there lots of opportunity cost to any event?

- Humans' biomachinery is not suited to live in such large cities. Being around lots and lots of people might be regulating hormones and behaviour to settings we have not totally explored (I remember reading something that claims this a large factor to lower fertility).

- Centralization is dangerous because of possibly-handmade mass weapons.

- Assuming random housing and examining some quirk/polar position, we'll get a noisy texture. It will almost certainly have a large group of people supporting one position right next to group thinking otherwise. Depending on sizes and civil law enforcement, that may not end well.

After a couple hundred years, 1) and 2) will most probably get solved by natural selection so the proposal will be much more feasible.

[Un]surprisingly, there's already a Sequences article on this, namely Is That Your True Rejection?.

(I thought this comment would be more useful with call-for-action "so how should we rewrite that article and make it common knowledge for everyone who joined LW recently?" but was too lazy to write it.)

In general if you "defect" because you thought the other party would that is quite sketchy. But what if proof comes out they really were about to defect on you?

By the way, if we consider game theory and logic to be any relevant, then there's a corollary of Löb's Theorem: if you defect given proof that counterparty will defect, and another party will defect given proof that you will, then you both will, logically, defect against each other, with no choice in the matter. (And if you additionally declare that you cooperate given proof that partner will cooperate, you've just declared a logical contradiction.)

For packing this result into a "wise" phrase, I'd use words:

Good is not a universally valid response to Evil. Evil is not a universally valid response to Evil either. Seek that which will bring about a Good equilibrium.

Weak-upvoted because I believe this topic merits some discussion, but the discourse level should be higher since setting NSFW boundaries for user relates to many other topics:

- Estimating social effect of imposing a certain boundary.

Will stopping rough roleplaying scenarios lead to less people being psychopaths? That seems to be an empirical question, since intuitively effect might go either way - doing the same in real world instead OR internalizing rough and inconsiderate actions as not normal. - Simulated people's opinion on being placed in the user-requested scenarios AND our respect for their values (which in some cases might be zero).

- Ability to set the boundaries at all.

I can't stop someone else imagining, in their mind, me engaging in whatever. I can only humbly request that if they imagine an uncommon sexual scenario they should use an image of me patched to enjoy that kink.

Society can't stop everyone from running DeepSeek's distillation locally, and that (in ~13/15 attempts with the same prompt having a prior explicit scene) trusts that user's request is legal and should be completed. - User's ability to discern their own preferences vs revealed preferences.

It might be helpful to feature some reference tales at different NSFW levels and check the user's reaction to them, instead of prominently requesting user to self-report on what they like. (The manual setting should still remain if possible, of course.)

That's all conditional on P = NP, isn't it? Also, which part do you consider weaker: digital signatures or hash functions?

Line breaks seem to be broken (unless it was your intention to list all the Offers-Mundane-Utility-s and so on in a single paragraph).

Acknowledge it is not visible anymore!

Hi! I believe this post, not one for the 2021 review, is meant to be pinned at the front page?

I'd like there to be a reaction of "Not Exhaustive", meant for a step where comment (or top-level post, for that matter) missed an important case - how a particular situation could play out, perhaps, or an essential system's component is not listed. An example use: on statement "to prevent any data leaks, one must protect how their systems transfer data and how they process it" with the missed component being protection of storage as well.

I recall wishing for it like three times since the New Year, with the current trigger being this comment:

Elon already has all of the money in the world. I think he and his employs are ideologically driven, and as far as I can tell they're making sensible decisions given their stated goals of reducing unnecessary spend/sprawl. I seriously doubt they're going to use this access to either raid the treasury or turn it into a personal fiefdom. <...>

which misses a specific case (which I'll name under the original comment if there is any interest).

Now I feel like rationality itself is an infohazard. I mean, rationality itself won't hurt you if you are sufficiently sane, but if you start talking about it, insufficiently sane people will listen, too. And that will have horrible consequences. (And when I try to find a way to navigate around this, such as talking openly only to certifiably sane people, that seems like the totally cultish thing to do.)

There is an alternative way, the other extreme: get more and more rationalists.

If the formed communities do not share the moral inclinations of LW community, those might form some new coordination structures[1]; if we don't draw from the circles of desperate, those structures will tend to benefit others as well (and, on the other hand, having a big proportion of very unsatisfied people would naturally start a gang or overthrow whatever institutions are around).

(It's probably worth exploring in a separate post?)

- ^

I claim non-orthogonality between goals and means in this case. For some community with altruistic people, its structures require learning a fair bit about people's values. For a group which wants tech companies to focus on consumers' quality-of-life more than currently, not so.

Actually, AIs can use other kinds of land (to suggest from the top of the head, sky islands over oceans, or hot air balloons for a more compact option) to be run, which are not usable by humans. There have to be a whole lot of datacenters to make people short on land - unless there are new large factories built.

It seems that an alternative to AI unlearning is often overlooked: just remove dataset parts which contain sensitive (or, to that matter, false) information or move training on it towards beginning to aid with language syntax only. I don't think a bit of inference throughout the dataset is any more expensive than training on it.

In practice I do not think this matters, but it does indicate that we’re sleeping on the job – all the sources you need for this are public, why are we not including them.

I'd reserve judgement whether that matters, but I can attest a large part of content is indeed skipped... probably for those same reasons why market didn't react to DeepSeek in advance: people are just not good at knowing distant information which might still be helpful to them.

If you want to figure out how to achieve good results when making the AI handle various human conflicts, you can't really know how to adapt and improve it without actually involving it in those conflicts.

I disagree. There is such a lot of conflicts (some kinds make it into writing, some just happen) of different scales, both in history and now; I believe they span human conflict space almost fully. Just aggregating this information could lead to very good advice on handling everything, which AI could act upon if it so needed.

But if I know that there are external factors, I know the bullet will deviate for sure. I don't know where but I know it will.

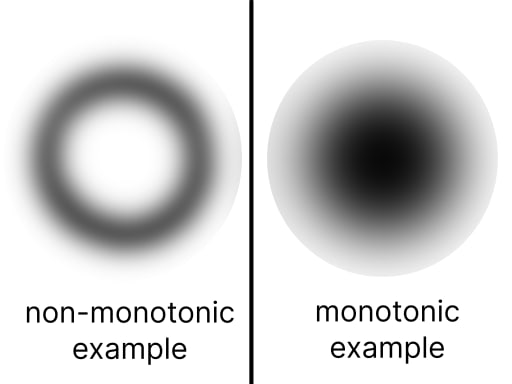

You assume that blur kernel is non-monotonic, and this is our entire disagreement. I guess that different tasks have different noise structure (for instance, if somehow noise geometrically increased - - we wouldn't ever return to an exact point we had left).

However, if noise is composed from many i.i.d. small parts, then it has normal distribution which is monotonic in the relevant sense.

Can you think of any good reason why I should think that?

Intuition. Imagine a picture with bright spot in the center, and blur it. The brightest point will still be in center (before rounding pixel values off to the nearest integer, that is; only then may a disk of exactly equiprobable points form).

My answer: because strictly monotonic[1] probability distribution prior to accounting for external factors (either "there might be negligible aiming errors" or "the bullet will fly exactly where needed" are suitable) will remain strictly monotonic when blurred[2] with monotonic kernel[2] formed by those factors (if we assume wind and all that create a normal distribution, it fits).

Like why are time translations so much more important for our general work than space translations?

I'd imagine that happens because we are able to coordinate our work across time (essentially, execute some actions), while work coordination across space-separated instances is much harder (now, it is part of IT's domain under name of "scalability").

An interesting framing! I agree with it.

As another example: in principle, one could make a web server use an LLM connected to database to serve any requests, not coding anything. It would even work... till the point someone would convince the model to rewrite the database to their whims! (A second problem is that normal site should be focused on something, in line with famous "if you can explain anything, your knowledge is zero".)

That article is suspiciously scarce on what microcontrols units... well, glory to LLMs for decent macro management then! (Though I believe that capability is still easier to get without text neural networks.)

In StarCraft II, adding LLMs (to do/aid game-time thinking) will not help the agent in any way, I believe. That happens because inference has a quite large latency, especially as most of prompt changes with all the units moving, so tactical moves are out; strategic questions "what is the other player building" and "how many units do they already have" are better answered by card-counting counting visible units and inferring what's the proportion of remaining resources (or scouting if possible).

I guess it is possible that bots' algorithms are improved with LLMs but that requires a high-quality insight; not convinced that o1 or o3 give such insights.

I don't think so as I had success explaining away the paradox with concept of "different levels of detail" - saying that free will is a very high-level concept and further observations reveal a lower-level view, calling upon analogy with algorithmic programming's segment tree.

(Segment tree is a data structure that replaces an array, allowing to modify its values and compute a given function over all array elements efficiently. It is based on tree of nodes, each of those representing a certain subarray; each position is therefore handled by several - specifically, nodes.)

Doesn't the "threat" to delete the model have to be DT-credible instead of "credible conditioned on being human-made", given that LW with all its discussion about threat resistance and ignoring is in training sets?

(If I remember correctly, a decision theory must ignore "you're threatened to not do X, and the other agent is claiming to respond in such a way that even they lose in expectation" and "another agent [self-]modifies/instantiates an agent making them prefer that you don't do X".)

The surreal version of the VNM representation theorem in "Surreal Decisions" (https://arxiv.org/abs/2111.00862) seems to still have a surreal version of the Archimedean axiom.

That's right! However it is not really a problem unless we can obtain surreal probabilities from the real world; and if all our priors and evidence are just real numbers, updates won't lead us into the surreal area. (And it seems non-real-valued probabilities don't help us in infinite domains, as I've written in https://www.lesswrong.com/posts/sZneDLRBaDndHJxa7/open-thread-fall-2024?commentId=LcDJFixRCChZimc7t.)

Re the parent example, utility function (or its evaluations) changing in an expectable way seems problematic to rational optimizing. If you know you prefer A to B, and know that you will prefer B to A in future even given only current context (so no "waiter must run back and forth"), then you don't reflectively endorse either decision.

Yes, many people will have problems with the Archimedes' axiom because it implies that everything has a price (that any good option can be probability-diluted enough that a mediocre is chosen instead), and people don't take it kindly when you tell "you absolutely must have a trade-off between value A and value B" - especially if they really don't have a trade-off, but also if they don't want to admit or consciously estimate it.

Thankfully, that VNM property is not that critical for rational decision-making because we can simply use surreal numbers instead.

One possible real-world example (with integer-valued for deterministic outcomes) would be a parent whose top priority is minimizing the number of their children who die within the parent's lifetime, with the rest of their utility function being secondary.

Wouldn't work well since in real world outcomes are non-deterministic; given that, minimizing expected number is accomplished by simply having zero children.

But technology is not a good alternative to good decision making and informed values.

After thinking on this a bit, I've somewhat changed my mind.

(Epistemic status: filtered evidence.)

Technology and other progress has two general directions: a) more power for those who are able to wield it; b) increasing forgiveness, distance to failure. For some reason, I thought that b) was a given at least on average. However, now it came to mind that it's possible for someone to

1) get two dates to accidentally overlap (or before confirming with partners-to-be that poly is OK),

2) lose an arbitrarily large bunch of money on gambling just online,

3) take revenge on a past offender with a firearm (or more destructive ways, as it happens),

and I'm not sure the failure margins have widened over time at all.

By the way, if technology effects aren't really on topic, I'm open to move that discussion to shortform/dialogue.

---

(Epistemic status: obtained with introspection.)

Continuing the example with sweets, I estimate my terminal goals to include both "not be ill e.g. with diabetes" and "eat tasty things". Given tech level and my current lifestyle, there isn't instrumental goal "eat more sweets" nor "eat less sweets"; I think I'm somewhere near the balance, and I wouldn't want society to pass any judgement.

I object to the framing of society being all-wise, and instead believe that for most issues it's possible to get the benefits of both ways given some innovators on that issue. For example, visual communication was either face-to-face or heavily resource-bounded till the computer era - then there were problems of quality and price, but those have been almost fully solved in our days.

Consequently, I'd prefer "bunch of candy and no diabetes still" outcome, and there are some lines of research/ideas into how this can be done.

As for "nonmarital sex <...> will result in blowing past Goodhart's warnings into more [personal psychological, I suppose] harm than good", that seems already solved with the concept of "commitment"? The society might accept someone disregarding another person if that's done with plausible deniability like "I didn't know they would even care", and commitment often makes you promise to care about partner's feelings, solving* the particular problem in a more granular way than "couples should marry no matter what". The same thing goes with other issues.

That said, I've recently started to think that it's better to not push other people to less-socially-accepted preferences unless you have a really good case they can revert from exploration well and would be better off (and, thus, better not to push over social networks at all), since the limit point of person's preferences might shift - wicked leading to more wicked and so on - to the point person wouldn't endorse outcomes of change on reflection. I'm still considering if just noting that certain behavior is possible is a nudge significant enough to be disadvantaged (downvoted or like).

*I'd stop believing in that if commitment-based cultures had higher rate of partners failing on their promises to care than marriage-based; would be interested in some evidence either way.

Nicely written!

A nitpick: I believe "Voluntary cooperation" shouldn't always be equal to "Pareto preferred". Consider an Ultimatum game, where two people have 10 goodness tokens to split; the first person suggests a split (just once), then the second may accept or reject (when rejecting, all tokens are discarded). 9+1 is Pareto superior to 0+0 but one shouldn't [100%] accept 9+1 lest that becomes anything they are ever suggested. Summarizable with "Don't do unto yourself what you wouldn't want to be done unto you", or something like that.