Open Thread Winter 2024/2025

post by habryka (habryka4) · 2024-12-25T21:02:41.760Z · LW · GW · 59 comments

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library [? · GW], checking recent Curated posts [? · GW], seeing if there are any meetups in your area [? · GW], and checking out the Getting Started [? · GW] section of the LessWrong FAQ [? · GW]. If you want to orient to the content on the site, you can also check out the Concepts section [? · GW].

The Open Thread tag is here [? · GW]. The Open Thread sequence is here [? · GW].

59 comments

Comments sorted by top scores.

comment by Screwtape · 2024-12-27T02:37:15.958Z · LW(p) · GW(p)

Hello! I'm running the Unofficial LessWrong Community Survey [LW · GW] this year, and we're down to the last week it's open. If you're reading this open thread, I think you're in the target audience.

I'd appreciate it if you took the survey.

If you're wondering what happens with the answers: they get used for big analysis posts like this one [? · GW], the data gets published so you can use it to answer questions about the community, and it sometimes guides the decision-making of people who work or run things in the community. (Including me!)

comment by Sherrinford · 2025-01-16T10:21:43.460Z · LW(p) · GW(p)

What is the current status of CFAR? The website seems like it is inactive, which I find surprising given that there were four weekend workshops in 2022 that CFAR wanted to use for improving its workshops.

comment by Hastings (hastings-greer) · 2025-01-22T23:15:24.532Z · LW(p) · GW(p)

So if alignment is as hard as it looks, desperately scrabbling to prevent recursive superintelligence should be an extremely attractive instrumental subgoal. Do we just lean into that?

Replies from: gilch, SuddenCaution

↑ comment by gilch · 2025-02-03T21:50:16.583Z · LW(p) · GW(p)

See https://pauseai.info. They think lobbying efforts have been more successful than expected, but politicians are reluctant to act on it before they hear about it from their constituents. Individuals sending emails also helps more than expected. The more we can create common knowledge of the situation, the more likely the government acts.

Replies from: ChristianKl

↑ comment by ChristianKl · 2025-02-04T15:39:03.569Z · LW(p) · GW(p)

Under the Biden administration, lobbying efforts had some success. In the last few weeks, the Trump administration undid all the efforts of the Biden administration. Especially with China making progress with DeepSeek, it's unlikely that you can convince the Trump administration to slow down AI.

↑ comment by fdrocha (SuddenCaution) · 2025-01-24T10:07:29.506Z · LW(p) · GW(p)

It would be great to prevent it, but it also seems very hard? Is there anything short of an international agreement with serious teeth that could have a decent chance of doing it? I suppose US-only legislation could maybe delay it for a few years and would be worth doing, but that also seems like a very big lift in the current climate.

comment by lsusr · 2025-01-11T02:37:19.470Z · LW(p) · GW(p)

Is anyone else on this website making YouTube videos? Less Wrong is great, but if you want to broadcast to a larger audience, video seems like the place to be. I know Rational Animations [LW · GW] makes videos. Is there anyone else? Are you, personally, making any?

Replies from: Huera

↑ comment by Huera · 2025-01-11T12:31:26.192Z · LW(p) · GW(p)

Robert Miles [LW · GW] has a channel popularizing AI safety concepts.

[Edit] Also, Manifold Markets has recordings of talks at the Manifest conferences.

comment by Rebecca_Records · 2025-02-08T02:45:32.006Z · LW(p) · GW(p)

Hi, I'd like to start creating some wiki pages to help organize information. Would appreciate an upvote so I can get started. Thanks!

comment by ProgramCrafter (programcrafter) · 2025-02-07T01:00:20.209Z · LW(p) · GW(p)

I'd like there to be a "Not Exhaustive" reaction, meant for cases where a comment (or top-level post, for that matter) misses an important case: perhaps a way a particular situation could play out, or an essential component of a system that is not listed. An example use: on the statement "to prevent any data leaks, one must protect how their systems transfer data and how they process it", where the missed component is protection of storage.

I recall wishing for it like three times since the New Year, with the current trigger being this comment [LW(p) · GW(p)]:

Elon already has all of the money in the world. I think he and his employs are ideologically driven, and as far as I can tell they're making sensible decisions given their stated goals of reducing unnecessary spend/sprawl. I seriously doubt they're going to use this access to either raid the treasury or turn it into a personal fiefdom. <...>

which misses a specific case (which I'll name under the original comment if there is any interest).

Replies from: habryka4

↑ comment by habryka (habryka4) · 2025-02-07T01:15:31.425Z · LW(p) · GW(p)

(I like it, seems like a cool idea and maybe worth a try, and indeed a common thing that people mess up)

comment by Embee · 2025-01-16T05:18:11.734Z · LW(p) · GW(p)

Hi! I'm Embee but you can call me Max.

I'm a mathematics for quantum physics graduate student considering redirecting my focus toward AI alignment research. My background includes:

- Graduate-level mathematics

- Focus on quantum physics

- Programming experience with Python

- Interest in type theory and formal systems

I'm particularly drawn to MIRI-style approaches and interested in:

- Formal verification methods

- Decision theory implementation

- Logical induction

- Mathematical bounds on AI systems

My current program feels too theoretical and disconnected from urgent needs. I'm looking to:

- Connect with alignment researchers

- Find concrete projects to contribute to

- Apply mathematical rigor to safety problems

- Work on practical implementations

Regarding timelines: I have significant concerns about rapid capability advances, particularly given recent developments (o3). I'm prioritizing work that could contribute meaningfully in a compressed timeframe.

Looking for guidance on:

- Most neglected mathematical approaches to alignment

- Collaboration opportunities

- Where to start contributing effectively

- Balance between theory and implementation

↑ comment by eigenblake (rvente) · 2025-01-17T06:51:11.854Z · LW(p) · GW(p)

If you're interested in mathematical bounds on AI systems and you haven't seen it already, check out Ultimate Physical Limits to Computation by Seth Lloyd (https://arxiv.org/pdf/quant-ph/9908043) and related works. Online I've been jokingly saying "Intelligence has a speed of light." Well, we know intelligence involves computation, so there has to be some upper bound at some point. But until we define some notion of an Atomic Standard Reasoning Unit of Inferential Distance, we don't have a good way of talking about how much more efficient a computer like you or me is compared to Claude at natural language generation, for example.
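For a sense of scale, the headline bound in that paper comes from the Margolus-Levitin theorem: a system with average energy E can perform at most 2E/(πħ) elementary operations per second. Below is a minimal sketch of that arithmetic, assuming a 1 kg computer whose entire rest-mass energy is available for computation; the function name is only illustrative.

```python
import math

C = 299_792_458.0          # speed of light in m/s (exact SI value)
HBAR = 1.054_571_817e-34   # reduced Planck constant in J*s

def max_ops_per_second(mass_kg: float) -> float:
    """Margolus-Levitin bound 2E/(pi*hbar), taking E = m*c^2."""
    energy_joules = mass_kg * C ** 2
    return 2.0 * energy_joules / (math.pi * HBAR)

print(f"{max_ops_per_second(1.0):.3e} operations per second")
```

For 1 kg this comes out at roughly 5.4 × 10^50 operations per second, in line with the figure quoted in the paper. The bound scales linearly with energy, so it says nothing about how efficiently any particular reasoner uses its operations.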

↑ comment by Cole Wyeth (Amyr) · 2025-01-22T19:13:13.979Z · LW(p) · GW(p)

Check out my research program:

https://www.lesswrong.com/s/sLqCreBi2EXNME57o [? · GW]

Particularly the open problems post (once you know what AIXI is).

For a balance between theory and implementation, I think Michael Cohen’s work on AIXI-like agents is promising.

Also look into Alex Altair’s selection theorems, John Wentworth’s natural abstractions, Vanessa Kosoy’s infra-Bayesianism (and more generally learning theoretic agenda which I suppose I’m part of), and Abram Demski’s trust tiling.

If you want to connect with alignment researchers you could attend the agent foundations conference at CMU, apply by tomorrow: https://www.lesswrong.com/posts/cuf4oMFHEQNKMXRvr/agent-foundations-2025-at-cmu [LW · GW]

comment by MondSemmel · 2025-02-22T15:05:42.785Z · LW(p) · GW(p)

The German federal election is tomorrow. I looked up whether any party was against AI or for a global AI ban or similar. From what I can tell, the answer is unfortunately no, they all want more AI and just disagree about how much. I don't want to vote for any party that wants more AI, so this is rather disappointing.

comment by niplav · 2025-01-31T21:43:25.718Z · LW(p) · GW(p)

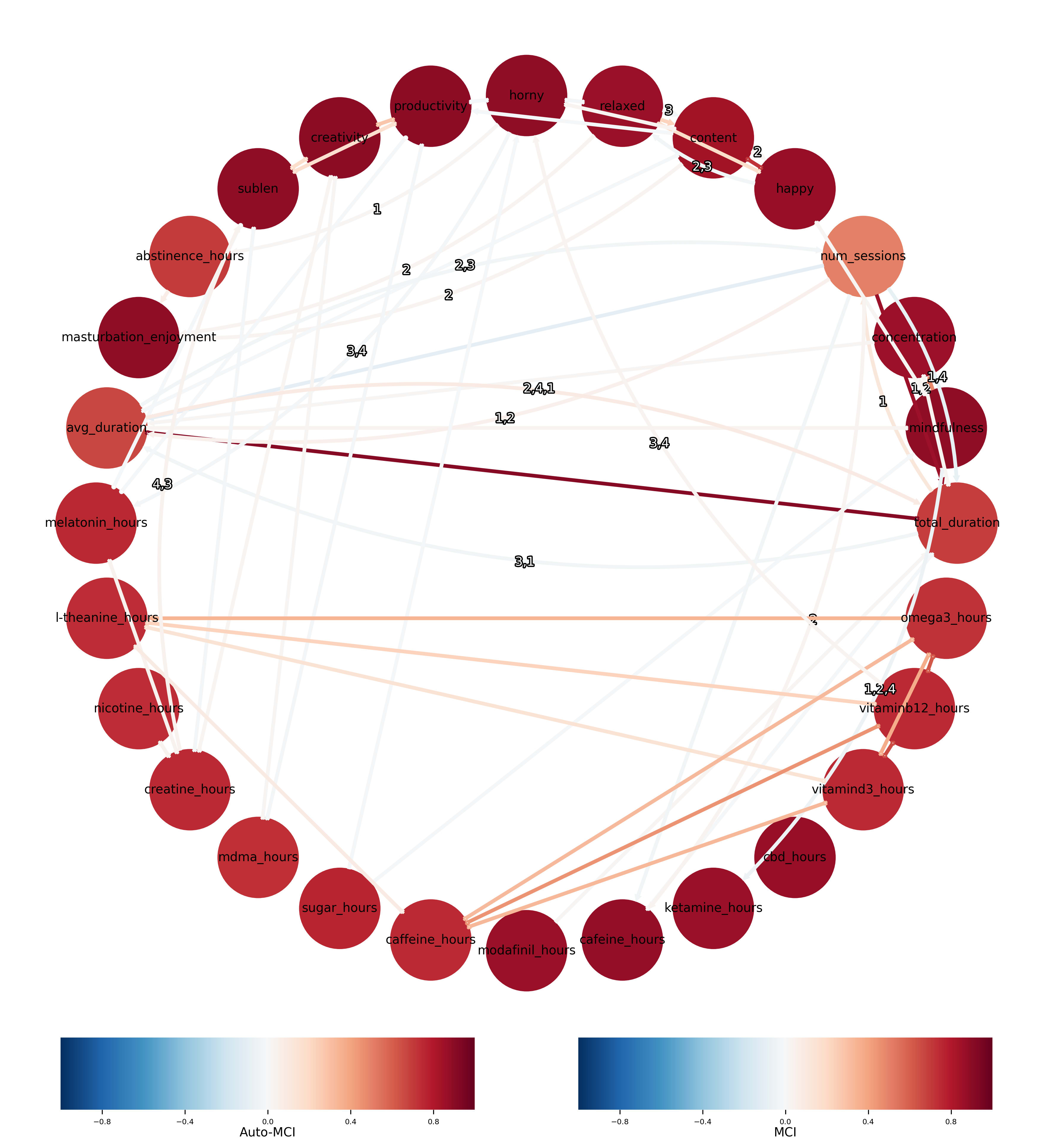

If you've been collecting data on yourself, it's now moderately easy to let an LLM write code that'll tease out causal relationships between those variables, e.g. with a time series causal inference library like tigramite.

I've done that with some of my data, and surprisingly it both fails to back out results from RCTs I've run, and also doesn't find many (to me) interesting causal relationships.

The most surprising one is that creatine influences creativity. Meditating more doesn't influence anything (e.g. has no influence on mood or productivity), as far as could be determined. More mindful meditations cause happiness.

Because of the resampling/interpolation necessary, everything has a large autocorrelation.
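To illustrate why interpolation alone produces this effect, here is a minimal sketch on synthetic data (not the analysis above): independent "weekly" values are linearly interpolated to "daily" values, and the lag-1 autocorrelation is compared before and after. The factor of 7 and the helper names are assumptions made for the example.

```python
import random

def lag1_autocorr(xs):
    """Sample lag-1 autocorrelation of a sequence."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs)
    cov = sum((xs[i] - mean) * (xs[i + 1] - mean) for i in range(n - 1))
    return cov / var

def linear_upsample(xs, factor):
    """Insert factor-1 linearly interpolated points between neighbours."""
    out = []
    for a, b in zip(xs, xs[1:]):
        out.extend(a + (b - a) * k / factor for k in range(factor))
    out.append(xs[-1])
    return out

rng = random.Random(0)
weekly = [rng.gauss(0.0, 1.0) for _ in range(500)]  # independent by construction
daily = linear_upsample(weekly, 7)                  # hypothetical weekly-to-daily resampling

print(round(lag1_autocorr(weekly), 2), round(lag1_autocorr(daily), 2))
```

The original series has autocorrelation close to zero, while the interpolated one is above 0.9, even though no real dependence between time points was added. Any lagged relationship found in heavily interpolated variables should be read with that in mind.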

Mainly posting this here so that people know it's now easy to do this, and shill tigramite. I hope I can figure out a way of dealing with rare interventions (e.g. MDMA, CBD, ketamine), and incorporate more collected data. Code here, written by Claude 3.5.1 Sonnet.

Replies from: timothy-currie

↑ comment by Tiuto (timothy-currie) · 2025-02-03T06:38:44.563Z · LW(p) · GW(p)

Interesting. Do you have any recommendations on how to do this most effectively? At the moment I'm

- using R to analyse the data/create some basic plots (will look into tigramite),

- entering the data in google sheets,

- occasionally doing a (blinded) RCT where I'm randomizing my dose of stimulants,

- numerically tracking (guessing) mood and productivity,

- and some things I mark as completed or not completed every day e.g. exercise, getting up early, remembering to floss

Questions I'd have:

- Is google sheets good for something like this or are there better programs?

- Any advice on blinding oneself or on RCTs in general or what variables one should track?

- Anything I'm (probably) doing wrong?

Thanks in advance if you have any advice to offer (I already looked at your Caffeine RCT [LW · GW] just wondering if you have any new insights or general advice on collecting data on oneself).

Replies from: niplav

↑ comment by niplav · 2025-02-14T09:44:35.820Z · LW(p) · GW(p)

As for data collection, I'm probably currently less efficient than I could be. The best guide on how to collect data is imho Passive measures for lazy self-experimenters (troof, 2022). I'd add that wearables like FitBit allow for data exporting (thanks GDPR!). I've written a bit about how I collect data here, which involves a haphazard combination of dmenu pop-ups, smartphone apps, manually invoked scripts and spreadsheets converted to CSV.

I've tried to err on the side of things that can be collected automatically. For anything that needs to be entered manually, Google Sheets is probably fine (though I don't use it because I like to stay without internet most of the time).

As for blinding in RCTs[1], my process involves numbered envelopes containing both a pill and a small piece of paper with a 'P' (placebo) or 'I' (intervention) written on it. Pills can be cut and put into pill capsules; sugar looks like a fine placebo.
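One way to decide which slip goes into which numbered envelope is a seeded, balanced shuffle. This is a minimal sketch under that assumption, not a description of the exact procedure used above; the function name, the envelope count and the seed are hypothetical.

```python
import random

def envelope_schedule(n_envelopes, seed=None):
    """Return {envelope_number: 'P' or 'I'} with equal numbers of each label."""
    if n_envelopes % 2:
        raise ValueError("use an even number of envelopes for a balanced design")
    labels = ["P", "I"] * (n_envelopes // 2)
    random.Random(seed).shuffle(labels)  # seeded so the schedule can be reproduced later
    return {number: label for number, label in enumerate(labels, start=1)}

schedule = envelope_schedule(20, seed=2025)
n_intervention = sum(label == "I" for label in schedule.values())
print(n_intervention, "intervention envelopes out of", len(schedule))
```

A balanced shuffle guarantees equal group sizes; independent coin flips per envelope would also randomize the assignment but can leave the two groups uneven in a short trial.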

I don't have any great insight for what variables to track. I think from starting with the causal analysis I've updated towards tracking more "objective" measures (heart rate, sleeping times), and more things I can intervene on (though those usually have to be tracked manually).

Hope this helps :-)

[1] I don't think anyone has written up in detail how to do these! I should do that.

comment by kasalios · 2025-02-07T04:49:03.426Z · LW(p) · GW(p)

Hi everyone,

I'm currently a student in the US. Around a year ago I first read Scott Alexander's Meditations on Moloch as a link that looked interesting; now I spend much of my free time reading blogposts in the rat-adjacent sphere and lurking on LessWrong and Twitter. Over time I've come to realize that I'm not doing anyone else any good by lurking, and it would be valuable for me to start receiving feedback from other people, so I've decided to try actively posting.

One interesting thing about me: I have been heavily involved in physics olympiads (competitions like the International Math Olympiad, but for physics). The relevance of the physics "process" towards world modeling and the ability of AI to solve difficult physics problems seems under-discussed to me.

The quality of discussion here is the highest I've ever seen on the Internet, and I hope to do my part to help uphold it!

comment by sHaggYcaT (shaggycat) · 2025-02-15T22:51:21.830Z · LW(p) · GW(p)

Hello everyone. My name is Kseniia Lisovskaia; I think it's better to call me Xenia or Xenya. My native language is Russian, so English is my second language and I may make mistakes; sorry for that. Because Russian uses a different alphabet, there are different ways to transliterate my name from Cyrillic to Latin. I think Xenia is the easiest, because it's the common transliteration of the same Greek name.

I'm an immigrant in Canada. I left Russia for political reasons, and because I don't like chaos. Unfortunately, chaos is now about to invade Canada too. I know what Eliezer said about politics, that it's a mind-killer. That's the reason I won't elaborate.

I participated in the Russian-speaking LessWrong community in the past, and I have ideas which may be a valuable contribution.

I also participate in a Russian-speaking internet forum about astronomy (it is affected by state censorship, but not much), which has a big subforum about existential risks, the Universe, science itself, AI, etc. I think it could be valuable to bring the most interesting ideas here.

I'm a singularist; maybe I'm about to adopt utilitarianism and/or rational altruism, but I'm not fully sure. I'm definitely a humanist, but on the edge of the technological singularity, classical humanism looks a bit outdated.

From my perspective, utilitarianism has alignment problems, like AI: why not take drugs and make everyone happy? Utilitarians usually answer that they mean another type of happiness. But I don't think this answer is good enough.

Also, I'm interested in astronomy, physics, biology, political science, history, computers/programming/AI, volunteering (basically I get my endorphins when I help someone), and literature (mostly reading, but I'm trying to write fiction myself). And I have a dream: to be adopted as a favourite pet by a very clever and kind ASI, and live forever.

I feel very disconnected from other rational people, and feel that chaos is everywhere. Thank you guys for keeping this island of rationality alive in the sea of chaos. It's very important for me to know that somewhere madness has not finally won yet. My husband is a rationalist and transhumanist (he likes the idea of defeating death), but his political views recently changed, and he became an agent of chaos himself.

Honestly, I think we can't win. I expect a dark singularity or even an extinction event. I think our Great Filter is ahead, and we will crash into it soon.

comment by Steven Byrnes (steve2152) · 2025-02-19T18:02:15.065Z · LW(p) · GW(p)

[CLAIMED!] ODD JOB OFFER: I think I want to cross-post Intro to Brain-Like-AGI Safety [? · GW] as a giant 200-page PDF on arxiv (it’s about 80,000 words), mostly to make it easier to cite (as is happening sporadically, e.g. here, here). I am willing to pay fair market price for whatever reformatting work is necessary to make that happen, which I don’t really know (make me an offer). I guess I’m imagining that the easiest plan would be to copy everything into Word (or LibreOffice Writer), clean up whatever formatting weirdness comes from that, and convert to PDF. LaTeX conversion is also acceptable but I imagine that would be much more work for no benefit.

I think inline clickable links are fine on arxiv (e.g. page 2 here), but I do have a few references to actual papers, and I assume those should probably be turned into a proper reference section at the end of each post / “chapter”. Within-series links (e.g. a link from Post 6 to a certain section of Post 4) should probably be converted to internal links within the PDF, rather than going out to the lesswrong / alignmentforum version. There are lots of textbooks / lecture notes on arxiv which can serve as models; I don’t really know the details myself. The original images are all in Powerpoint, if that’s relevant. The end product should ideally be easy for me to edit if I find things I want to update. (Arxiv makes updates very easy, one of my old arxiv papers is up to version 5.)

…Or maybe this whole thing is stupid. If I want it to be easier to cite, I could just add in a “citation information” note, like they did here? I dunno.

Replies from: ash-dorsey

↑ comment by ashtree (ash-dorsey) · 2025-02-26T20:19:37.287Z · LW(p) · GW(p)

I don't know how useful having it on arXiv would be (I suspect not very), but I will attempt to deliver a PDF within two days, using Typst rather than LaTeX due to familiarity (there is a conversion tool, but I do not know how well it works).

Do you have any particular formatting opinions?

comment by jmh · 2025-02-16T22:35:45.789Z · LW(p) · GW(p)

Does anyone here ever think to themselves, or out loud, "Here I am in the 21st Century. Sure, all the old scifi stories told me I'd have a shiny flying car but I'm really more interested in where my 21st Century government is?"

For me that is premised on the view that pretty much all existing governments are based on theory and structures that date at least back to the 18th Century in the West. The East might say they "modernized" a bit with the move from dynasties (China, Korea, Japan) to democratic forms, but when I look at the way those governments and polities actually work, they seem more like a wrapper around the prior dynastic structures.

But I also find it challenging to think just what might be the differentiating change that would distinguish a "21st Century" government from existing ones. The best I've come up with is that I don't see it as some type of privatization or divestiture of existing government activities (even though I do think some should be), but as a shift away from government being the acting agent it is now and toward something more like markets: mediating and coordinating individual and group actions via mechanisms other than voting for representation or direct voting on actions.

Replies from: ChristianKl, niplav

↑ comment by ChristianKl · 2025-02-18T01:57:48.886Z · LW(p) · GW(p)

I think 20th-century big bureaucracy is quite different from 18th-century governance. The Foreign Office of the United Kingdom managed its work with 175 employees at the height of the British Empire in 1914.

Replies from: jmh

↑ comment by jmh · 2025-02-19T18:13:06.472Z · LW(p) · GW(p)

In some ways I think one can make that claim, but in important ways, to me, numbers don't really matter. In both you still see the role of government as an actor, doing things, rather than an institutional form that enables people to do things. I think the US Constitution is a good example of that type of thinking. It defines the powers the government is supposed to have, limiting what actions it can and cannot take.

I'm wondering what scope might exist for removing government (and the bureaucracy that performs the work/actions) from our social and political worlds while still allowing the public goods (non-economic use of the term here) to be produced and enjoyed by those needing/wanting such outputs. Ideally that would be achieved without as much forced-carrying (the flip of free-riding) by those who are uninterested, or uninterested at the cost of producing them.

Markets seem to do a reasonable job of finding interior solutions that are not easily gamed or controlled by some agenda setter. Active government, I think, does that more poorly and by design will have an agenda setter in control of any mediating and coordinating processes for dealing with the competing interests/wants/needs. These efforts then invariably become political and politicized and, as is being demonstrated widely in today's world, a source of a lot of internal (be it global, regional/associative or domestic) strife leading to conflict.

↑ comment by niplav · 2025-02-17T15:44:55.636Z · LW(p) · GW(p)

I'm not Estonian, but this video portrays Estonia as one example of what a 21st century government could be like.

Replies from: jmh

↑ comment by jmh · 2025-02-17T23:01:32.087Z · LW(p) · GW(p)

Thanks. It was an interesting view. It is certainly taking advantage of modern technologies and, taken at face value, seems to have produced some positive results. It has me thinking of making a visit just to talk with some of the people and get some first-hand accounts of, and views on, just how much that is changing the views and "experience" of government (meaning people's experience as they live under a government).

I particularly liked the idea of government kind of fading into the background and being generally invisible. I think in many ways people see markets in pretty much that way too: when running to the store to pick up some milk or a loaf of bread or whatnot, who really gives much thought to the whole supply chain behind whatever they were getting actually being there?

comment by Kajus · 2025-02-10T12:22:06.211Z · LW(p) · GW(p)

I'm trying to think clearly about my theory of change and I want to bump my thoughts against the community:

- AGI/TAI is going to be created at one of the major labs.

- I used to think it was 10 : 1 that it would be created in the US vs outside the US; I updated to 3 : 1 after the release of DeepSeek.

- It's going to be one of the major labs.

- It's not going to be a scaffolded LLM; it will be the result of self-play and a massive training run.

- My odds are equal between all major labs.
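For readers who think in probabilities rather than odds, the update in the list above can be converted directly. A minimal sketch; the helper name is illustrative.

```python
from fractions import Fraction

def odds_to_probability(in_favour, against):
    """Convert odds 'in_favour : against' into a probability."""
    return Fraction(in_favour, in_favour + against)

before = odds_to_probability(10, 1)  # 10 : 1 that it is created in the US
after = odds_to_probability(3, 1)    # 3 : 1 after the DeepSeek release

print(f"{float(before):.0%} -> {float(after):.0%}")
```

So moving from 10 : 1 to 3 : 1 corresponds to going from roughly 91% to 75% that AGI/TAI is created in the US.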

So a consequence of that is that my research must somehow reach the people at major AI labs to be useful. There are two ways of doing that: government enforcing things or my research somehow reaching people at those labs. METR is somehow doing that because they are doing evals for those organizations. Other orgs like METR are also probably able to do that (tell me which)

So I think that one of the best things to do to help with AI alignment is: do safety research that people at Anthropic or OpenAI or other major labs find helpful, work with METR or try to contribute to their open source repos, or focus on work that is "requested" by governance.

Replies from: rhollerith_dot_com, Raemon

↑ comment by RHollerith (rhollerith_dot_com) · 2025-02-22T17:20:25.424Z · LW(p) · GW(p)

I think the leaders of the labs have enough private doubts about the safety of their enterprise that if an effective alignment method were available to them, they would probably adopt the method (especially if the group that devised the method do not seem particularly to care who gets credit for having devised it). I.e., my guess is that almost all of the difficulty is in devising an effective alignment method, not getting the leading lab to adopt it. (Making 100% sure that the leading lab adopts it is almost impossible, but acting in such a way that the leading lab will adopt it with p = .6 is easy, and the current situation is so dire that we should jump at any intervention with a .6 chance of a good outcome.)

Eliezer stated recently (during an interview on video) that the deep-learning paradigm seems particularly hard to align, so it would be nice to get the labs to focus on a different paradigm (even if we do not yet have a way to align the different paradigm) but that seems almost impossible unless and until the other paradigm has been developed to the extent that it can create models that are approximately as capable as deep-learning models.

The big picture is that the alignment project seems almost completely hopeless IMHO because of the difficulty of aligning the kind of designs the labs are using and the difficulty of inducing the labs to switch to easier-to-align designs.

Replies from: Kajus

↑ comment by Kajus · 2025-03-18T19:22:32.569Z · LW(p) · GW(p)

So I don't think you can make a clear-cut case for the efficacy of some technique. There are a lot of shades of gray to it.

The current landscape looks to me like a lot of techniques (unlearning, supervision, RLHF) that sort of work, but are easy for attackers to exploit. I don't think it's possible to create a method that is provably perfectly effective within the current framework (but I guess Davidad is working on something like that). Proving that a method is effective seems doable. There are papers on e.g. unlearning (https://arxiv.org/abs/2406.04313), but I don't see OpenAI or Anthropic going "we searched every paper and found the best unlearning technique for aligning our models." They are more like "We devised this technique on our own based on our own research". So I'm not excited about iterative work on things such as unlearning, and I expect machine interpretability to go in a similar direction. Maybe the techniques aren't impressive enough, though; labs cared about transformers a lot.

↑ comment by Raemon · 2025-02-10T22:50:25.523Z · LW(p) · GW(p)

I think this is sort of right, but if you think big labs have wrong world-models about what should be useful, it's not that helpful to produce work that "they think is useful" but that isn't actually helpful. (I.e., if you have a project that is 90% likely to be used by a lab but ~0% likely to reduce x-risk, this isn't obviously better than a project that is 30% likely to be used by a lab if you hustle/convince them, but would actually reduce x-risk if you succeeded.)

I do think it's correct to have some model of how your research will actually get used (which I expect to involve some hustling/persuasion if it involves new paradigms)

comment by glauberdebona · 2025-02-05T16:55:15.537Z · LW(p) · GW(p)

Hi everyone,

I’m Glauber De Bona, a computer scientist and engineer. I left an assistant professor position to focus on independent research related to AGI and AI alignment (topics that led me to this forum). My current interests include self-location beliefs, particularly the Sleeping Beauty problem.

I’m also interested in philosophy, especially formal reasoning -- logic, Bayesianism, and decision theory. Outside of research, I’m an amateur chess player.

comment by Alistair Stewart (Alistair) · 2025-01-29T20:35:25.939Z · LW(p) · GW(p)

Hi, I'm Alistair – am mostly new to LessWrong and rationality, but have been interested in and to differing extents involved in effective altruism for about five years now.

Some beliefs I hold:

- Veganism is a moral obligation, i.e. not being vegan is morally unjustifiable

- Defining veganism not as a 100% plant-based diet, but rather as the ethical stance that commits one to avoid causing the exploitation (non-consensual use) and suffering of sentient non-humans, as far as practicable

- This definition is inspired by but in my view better than the Vegan Society's definition

- "As far as practicable" is ambiguous – this is deliberate

- And there may be some trade-offs between exploitation and suffering when you take into account e.g. crop deaths and wild animal suffering

- Transformative AI is probably coming, and it's probably coming soon (maybe 70% chance we start seeing a likely irreversible paradigm shift in the world by 2027)

- If the way we treat members of other species relative to whom we are superintelligent is anything to go by, AGI/ASI/TAI could go really badly for us and all sentients

- As far as I can tell, the only solution we currently have is not building AGI until we know it won't e.g. invasively experiment on us (i.e. PauseAI)

I'm currently lead organiser of the AI, Animals, & Digital Minds conference in London in June 2025, and would love to speak to people who are interested in the intersection of those three things, especially if they're in London.

I'll be co-working in the LEAH Coworking Space and the Ambitious Impact office in London in 2025, and will be in the Bay Area in California in Feb-Mar for EAG Bay Area [? · GW] (if accepted) and the AI for Animals conference there.

Please reach out by DM!

Interested in:

- Sentience- & suffering-focused ethics; sentientism; painism; s-risks

- Animal ethics & abolitionism

- AI safety & governance

- Activism, direct action & social change

- Trying to make transformative AI go less badly for sentient beings, regardless of species and substrate

Bio:

- From London

- BA in linguistics at the University of Cambridge

- Almost five years in the British Army as an officer

- MSc in global governance and ethics at University College London

- One year working full time in environmental campaigning and animal rights activism at Plant-Based Universities / Animal Rising

- Now pivoting to the (future) impact of AI on biologically and artificially sentient beings

↑ comment by habryka (habryka4) · 2025-01-29T21:44:52.840Z · LW(p) · GW(p)

Defining veganism not as a 100% plant-based diet, but rather as the ethical stance that commits one to avoid causing the exploitation (non-consensual use) and suffering of sentient non-humans, as far as practicable

Hmm, it seems bad to define "veganism" as something that has nothing to do with dietary choice. I.e. this would make someone who donates to effective animal welfare charities more "vegan", since many animal welfare charities are orders of magnitude more effective with a few thousand dollars than what could be achieved by any personal dietary change.

Replies from: Alistair

↑ comment by Alistair Stewart (Alistair) · 2025-01-29T23:44:07.158Z · LW(p) · GW(p)

I think that veganism is deontological, or at least has a deontological component to it; it relies on the act-omission distinction.

Imagine a world in which child sex abuse was as common and accepted as animal exploitation is in ours. In this world of rampant child sex abuse, some people would adopt protectchildrenism, the ethical stance that commits you to avoid causing the sexual exploitation (and suffering?) of human children as far as practicable – i.e. analogous to my definition of veganism.

It seems inaccurate/misleading for a child sex abuser to call themselves a protectchildrenist because they save more children from sexual abuse (by donating to effective child protection charities) than they themselves sexually abuse.

Also it seems morally worse for the child sex abuser to a) save more children through donations than they themselves abuse than b) not abuse the children but not donate; even though the world in which a) happens is a better world than the world in which b) happens (all else being equal).

comment by roland · 2025-01-01T11:42:36.698Z · LW(p) · GW(p)

Update: Confusion of the Inverse

Is there an aphorism regarding the mistake P(E|H) = P(H|E) ?

Suggestions:

- Thou shalt not reverse thy probabilities!

- Thou shalt not mix up thy probabilities!

- Thou shalt not invert thy probabilities! -- Based on Confusion of the Inverse

Arbital conditional probability

Replies from: brambleboy

Example 2

Suppose you're Sherlock Holmes investigating a case in which a red hair was left at the scene of the crime.

The Scotland Yard detective says, "Aha! Then it's Miss Scarlet. She has red hair, so if she was the murderer she almost certainly would have left a red hair there. P(redhair∣Scarlet)=99%, let's say, which is a near-certain conviction, so we're done."

"But no," replies Sherlock Holmes. "You see, but you do not correctly track the meaning of the conditional probabilities, detective. The knowledge we require for a conviction is not P(redhair∣Scarlet), the chance that Miss Scarlet would leave a red hair, but rather P(Scarlet∣redhair), the chance that this red hair was left by Scarlet. There are other people in this city who have red hair."

"So you're saying..." the detective said slowly, "that P(redhair∣Scarlet) is actually much lower than 1?"

"No, detective. I am saying that just because P(redhair∣Scarlet) is high does not imply that P(Scarlet∣redhair) is high. It is the latter probability in which we are interested - the degree to which, knowing that a red hair was left at the scene, we infer that Miss Scarlet was the murderer. This is not the same quantity as the degree to which, assuming Miss Scarlet was the murderer, we would guess that she might leave a red hair."

"But surely," said the detective, "these two probabilities cannot be entirely unrelated?"

"Ah, well, for that, you must read up on Bayes' rule."

↑ comment by brambleboy · 2025-01-08T19:45:18.937Z · LW(p) · GW(p)

Don't confuse probabilities and likelihoods? [LW · GW]

Replies from: roland↑ comment by roland · 2025-01-22T18:30:05.057Z · LW(p) · GW(p)

I'm looking for something simpler that doesn't require understanding another concept besides probability.

The article you posted is a bit confusing:

the likelihood of X given Y is just the probability of Y given X!

help us remember that likelihoods can't be converted to probabilities without combining them with a prior.

So is Arbital:

In this case, the "Miss Scarlett" hypothesis assigns a likelihood of 20% to e

Fixed: the "Miss Scarlett" hypothesis assigns a probability of 20% to e
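The quoted point, that a likelihood cannot be turned into a probability of the hypothesis without a prior, can be sketched numerically. This is only an illustration: the 99% figure is taken from the Sherlock Holmes dialogue above, while the prior and the chance that someone else left a red hair are made-up assumptions, and `posterior` is a hypothetical helper name.

```python
def posterior(prior_h, p_e_given_h, p_e_given_not_h):
    """Bayes' rule: P(H|E) = P(E|H) * P(H) / P(E)."""
    # Total probability of the evidence under both hypotheses.
    p_e = p_e_given_h * prior_h + p_e_given_not_h * (1 - prior_h)
    return p_e_given_h * prior_h / p_e

# Only the 0.99 comes from the dialogue; the other two numbers are assumed.
prior_scarlet = 0.05         # assumed P(Scarlet) before seeing the hair
p_hair_given_scarlet = 0.99  # P(redhair | Scarlet), from the dialogue
p_hair_given_other = 0.10    # assumed P(redhair | someone else did it)

p_scarlet_given_hair = posterior(
    prior_scarlet, p_hair_given_scarlet, p_hair_given_other
)

print(f"P(redhair | Scarlet) = {p_hair_given_scarlet:.2f}")
print(f"P(Scarlet | redhair) = {p_scarlet_given_hair:.2f}")
```

Under these assumed numbers the inverse probability comes out far below 99%, which is the whole mistake in one line: the likelihood stays fixed while the answer moves with the prior.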

comment by Dalmert · 2024-12-30T07:47:42.266Z · LW(p) · GW(p)

Hey, can anyone help me find this LW (likely but could be diaspora) article, especially if you might have read it too?

My vague memory: It was talking about (among other things?) some potential ways of extending point estimate probability predictions and calibration curves. I.e. in a situation where making a prediction in one way affects what the outcome will be, i.e. if there is a mind-reader/accurate-simulator involved that bases its actions on your prediction. And in this case, a two dimensional probability estimate might be more appropriate: If 40% is predicted for event A, event B will have a probability of 60%. If 70% for event A, then 80% for event B, and so on, a mapping potentially continuously defined for the whole range. (event A and event B might be the same.) IIRC the article contained 2D charts where curves and rectangles were drawn for illustration.

IIRC it didn't have too many upvotes, more like around low-dozen, or at most low-hundred.

Searches I've tried so far: Google, Exa, Gemini 1.5 with Deep Research, Perplexity, OpenAI GPT-4o with Search.

p.s. if you are also unable to put enough time into finding it, do you have any ideas how it could be found?

↑ comment by Dalmert · 2024-12-31T00:51:37.111Z · LW(p) · GW(p)

I have found it! This was the one:

https://www.lesswrong.com/posts/qvNrmTqywWqYY8rsP/solutions-to-problems-with-bayesianism [LW · GW]

Seems to have seen better reception at: https://forum.effectivealtruism.org/posts/3z9acGc5sspAdKenr/solutions-to-problems-with-bayesianism [EA · GW]

The winning search strategy was quite interesting as well I think:

I took the history of all LW articles I have roughly ever read, I had easy access to all such titles and URLs, but not article contents. I fed them one by one into a 7B LLM asking it to rate how likely based on the title alone the unseen article content could match what I described above, as vague as that memory may be. Then I looked at the highest ranking candidates, and they were a dud. Did the same thing with a 70B model, et voila, the solution was near the top indeed.
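A rough sketch of that title-ranking loop, for anyone wanting to try something similar. The model, prompt, and API used are not specified above, so the scorer here is a deliberately crude keyword-overlap placeholder standing in for the LLM call; `rank_titles`, `keyword_overlap_score`, and the example titles are all hypothetical.

```python
from typing import Callable, List, Tuple

def rank_titles(
    titles: List[str],
    description: str,
    score: Callable[[str, str], float],
    top_k: int = 5,
) -> List[Tuple[float, str]]:
    """Score every title against the description, highest score first."""
    scored = [(score(title, description), title) for title in titles]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

def _words(text: str) -> set:
    """Lowercased words with surrounding punctuation stripped."""
    words = {w.strip(".,:;!?\"'()").lower() for w in text.split()}
    words.discard("")
    return words

def keyword_overlap_score(title: str, description: str) -> float:
    """Placeholder scorer: fraction of title words found in the description.

    In the workflow described above, an LLM rating would replace this.
    """
    title_words = _words(title)
    if not title_words:
        return 0.0
    return len(title_words & _words(description)) / len(title_words)

# Hypothetical reading history and a vague memory of the article.
titles = [
    "Calibration curves for self-fulfilling predictions",
    "A recipe for sourdough",
    "Notes on probability estimates",
]
description = (
    "extending point estimate probability predictions and calibration "
    "curves when the prediction affects the outcome"
)

ranked = rank_titles(titles, description, keyword_overlap_score, top_k=3)
for rating, title in ranked:
    print(f"{rating:.2f}  {title}")
```

Keeping the scorer as a plain function argument makes it easy to swap a weak rater for a stronger one and re-run, which mirrors the 7B-then-70B step.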

Now I just need to re-read it to see if it was worth dredging up. I guess when a problem starts to itch it's hard to resist solving it.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-12-28T17:44:26.538Z · LW(p) · GW(p)

Now seems like a good time to have a New Year's Predictions post/thread?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-30T02:02:05.087Z · LW(p) · GW(p)

It now exists [LW · GW]!

comment by Nicolas Lupinski (nicolas-lupinski) · 2025-02-21T22:48:43.485Z · LW(p) · GW(p)

Hello

I've been lurking on this website for a few months now. I'm interested in logic, computer science, ai, probability, information... I think I'll fit here. I speak French in every language I know.

I hope I'll be able to publish/discuss/coauthor on lesswrong, or somewhere else.

↑ comment by Screwtape · 2025-02-23T02:57:54.359Z · LW(p) · GW(p)

Welcome to LessWrong, Nicolas. How'd you come across the site? I'm always interested in what leads people here.

Replies from: nicolas-lupinski↑ comment by Nicolas Lupinski (nicolas-lupinski) · 2025-02-23T11:50:59.719Z · LW(p) · GW(p)

I don't remember. Maybe the writing of Scott Alexander brought me here? Back in the Slate Star Codex days?

Replies from: Screwtape

comment by AGoyet · 2025-02-12T13:23:49.305Z · LW(p) · GW(p)

Hi,

I have a background in computer science and logic. I'm fascinated with AI safety, and I'm writing science fiction exploring some of the concepts. Can I share a short story on lesswrong? Any advice apart from tagging it with "fiction"? In particular, can I post a blurb and a link to the story?

(I ask about linking because the terms of use mention an "irrevocable" license and I'm not sure of the full implications.)

comment by Mohit Gore (mohit-gore) · 2025-02-04T19:55:16.315Z · LW(p) · GW(p)

Biting my nails writing this as the welcome and FAQ made this site sound quite intimidating... I hope this is the right place for this...

Hi, I'm Mohit Gore. I'm a little younger than the main demographic for this forum skews, but I figured now was as good a time as any to get into rationality and the like. As a concept, rationality has interested me for a while, even before I knew it had a name. I first discovered LessWrong whilst reading a Gwern article and decided to create an account out of curiosity. I'm a few months into my grand plan of becoming (at least a wannabe) polymath, so LessWrong fit perfectly.

As the new user guide suggested, I will lurk for a while (whilst slowly, slowly trudging through The Sequences) before actually raising any points or engaging in any discussion, unless something specifically strikes my fancy.

Aside from select essays from my own amateurish philosophical ramblings, I've never really engaged with or read philosophy specifically focusing on rationality, so this will be a new experiment for me. In my own time, I can typically be found writing narrative fiction, writing essays, programming, filmmaking, reading, or engaging in abject nonsense. In 2024, I self-published two books of free-verse poetry. The first one was distinctly amateurish and had many flaws, but I am pleased to say that the second one improves immensely upon it. I am also working on a few novels and a couple other top-secret projects. Not sure what the rules on self-promo are, so I'll refrain from linking them.

Replies from: Ruby↑ comment by Ruby · 2025-02-05T18:54:54.028Z · LW(p) · GW(p)

Welcome! Don't be too worried, you can try posting some stuff and see how it's received. Based on how you wrote this comment, I think you won't have much trouble. The New User Guide and other stuff gets worded a bit sternly because of the people who tend not to put in much effort at all and expect to be well received – which doesn't sound like you at all. It's hard to write one document that's stern to those who need it and more welcoming to those who need that, unfortunately.

comment by jr · 2025-02-23T01:01:07.433Z · LW(p) · GW(p)

I am new here and very excited to have discovered this community, as I rarely encounter anyone else who strongly embodies the qualities and values that I share with you. I am eager to engage, and expect that it will be mutually beneficial.

At the same time, I have great admiration and respect for the people here and the community you have created, so I would like to ensure the impact of the ways I am engaging match my intent. Ideally I would love to fully absorb all the perspectives offered here already, before attempting to offer bits of my own. However, that's just not practical, so I will do my best to engage in ways that are mindful of the limits of my knowledge and contribute positively to discussions and to the community. If (and when) I fall short of those goals, I would really appreciate and welcome your feedback.

To that end, I have several questions already, which may or may not require a little back and forth. Is this the best place to ask those, or should I use intercom instead, or perhaps even discord? (I really appreciate the very helpful welcome guide and for this thread, and will try to avoid asking questions that it already answers. There's an awful lot to process though.)

Thanks so much for your assistance, and your dedication to maintaining this community.

↑ comment by lsusr · 2025-02-23T01:29:55.588Z · LW(p) · GW(p)

First of all, welcome.

Where questions go ultimately comes down to personal opinion, so here are my personal opinions:

- General questions you're comfortable with the public seeing can go here. Questions usually don't require much back-and-forth. If yours do, then you can make a note of that to set expectations properly.

- Intercom should be used for questions that must be answered by a moderator. Moderator bandwidth is limited relative to user bandwidth, so err on the side of asking the community instead of asking moderators. (The moderators here are super helpful and friendly, and I try hard not to abuse their time.)

- Discord (which I don't personally use) is for private-ish conversations you don't want the Internet seeing.

comment by jmh · 2025-02-19T17:36:09.878Z · LW(p) · GW(p)

Did the Ask Question type post go away? I don't see it any more. So I will ask here since it certainly is not worthy of a post (I have no good input or thoughts or even approaches to make some sense of it). Somewhat prompting the question was the report today about MS just revealing its first quantum chip, and the recent news about Google's advancement in its quantum program (a month or two back).

Two branches of technology have been seen as game changers, or at least potential game changers: AI/AGI and quantum computing. The former is often a topic here and certainly worth calling "mainstream" technology at this point. Quantum computing has been percolating just under the surface for quite a while as well. There have been a couple of recent announcements related to quantum chips/computing suggesting progress is being made.

I'm wondering if anyone has thought about the intersection between these two areas of development. Is a blending of quantum computing and AI a really scary combination for those with relatively high p(doom) views of existing AGI trajectories? Does quantum computing, in the hands of humans perhaps level the playing field for human v. AGI? Does quantum computing offer any potential gains in alignment or corrigibility?

I realize that current-state quantum computing is not really in the game now, and I'm not sure if those working in that area have any overlap with those working in the AI fields. But from the outside, which is my perspective, the two would seem to offer large complementarities - for both good and bad I suppose, like most technologies.

Replies from: lsusr↑ comment by lsusr · 2025-02-21T08:31:20.362Z · LW(p) · GW(p)

Did the Ask Question type post go away?

It's still present, but the way to get to it has changed. First click "New Post". Then, at the top of your new post, there will be three tabs: POST, LINKPOST, and QUESTION. Click the QUESTION tab and you can create a question type post.

Replies from: jr↑ comment by jr · 2025-02-23T03:05:04.237Z · LW(p) · GW(p)

Thanks for that info, this was one of my questions too. One follow-up: this causes me some cognitive dissonance and uncertainty. In my understanding, Quick Takes are generally for rougher or exploratory thoughts, and Posts are for relatively more well-thought-out and polished writing. While Questions are by nature exploratory, they would be displayed in the Posts list. In my current understanding, the appropriate mechanism for sharing a thought, in order of increasing confidence of merit and contextual value, is:

1. Don't share it yet (value too unclear, further consideration appropriate)

2. Comment

3. Quick Take

4. Post

I realize I could informally ask a question in a comment (as I am now) or quick take, but there is an explicit mechanism for Questions. So, I'd like to use that, but I also want to ensure any Questions I create not only have sufficient merit, but are also of sufficient value to the community. Rather than explicitly ask you for guidance, it seems reasonable to me to give it a try slowly, starting with a question that seems both significant and relevant, and see whether it elicits community engagement. If you have anything to add though, that's welcome.

Thanks again for the helpful info.

(Just an awareness: I think I resolved the dissonance, if not all uncertainty. The dissonance was around my greater reluctance to create a Post than a Question, despite them being grouped together. And I figured out that identifying consequential questions is something I feel is a personal strength, and is more likely to be of value to the community and also offer the means of understanding their perspectives and their important implications.)

comment by stevencao · 2025-02-06T20:27:26.606Z · LW(p) · GW(p)

Hi everyone! I'm Steven, a freshman at Harvard.

Since joining the AI Safety Student Team six months ago, I've been thinking deeply about AI safety.

I'm completing ARENA and starting research through SPAR. As I've become more confident in short timelines, I'm thinking about optimizing my time and effort to contribute meaningfully.

I'm joining LessWrong to learn, discuss, and refine my takes on AI.

comment by Huera · 2025-02-26T22:23:46.658Z · LW(p) · GW(p)

Minor UI complaint:

When opening a tag, the default is to sort by relevance, and this can't be changed (to the best of my knowledge). More importantly, I don't see how it is supposed to be useful. Users don't upvote the relevance of a tag when it fits; the modal amount of votes is 1 and, in almost all cases, that is enough evidence to conclude that the tag has been assigned correctly.

comment by ashtree (ash-dorsey) · 2025-02-26T20:23:25.532Z · LW(p) · GW(p)

I am getting Forbidden 403 errors and invalid JSON parsing errors: started with '<html> <head> <...' while navigating the website. I am using eduroam and mobile data and it's occurring on both, so I don't think it's my issue. Where should I report this?

comment by GravitasGalore · 2025-02-14T19:18:21.156Z · LW(p) · GW(p)

Long-time reader, first-time poster. My bio covers my background, but I have a few questions looking at AI risk through an economic lens:

- Has anyone deeply engaged with Hayek's "The Use of Knowledge in Society" in relation to AI alignment? I'm particularly interested in how his insights about distributed knowledge and the limitations of central planning might inform our thinking about AI governance and control structures.

- More broadly, I'm curious about historical parallels between different approaches to handling distributed vs centralized power/knowledge systems. Are there instructive analogies to be drawn from how 20th century intellectuals thought about economic planning versus how we currently think about AI development and control?

- I'm particularly interested in how distributed AI development changes the risk landscape compared to early singleton-focused scenarios. What writings or discussions have best tackled this shift?

Before developing these ideas further, I'd appreciate pointers to any existing work in these directions!

comment by Pseudo_nine · 2025-01-21T19:56:43.865Z · LW(p) · GW(p)

Hi folks! I've been doing a bunch of experiments with current AI to explore what they might be capable of beyond their current implementation. I don't mean "red teaming", in the sense of trying to make the AI do things outside the terms of service, or jailbreaking. I mean exploring things that are commonly assumed to be impossible for current frontier models, like an AI developing a sense of self, or changing its beliefs through critical thinking. The new user guide makes it clear that there is a lot of forward-looking AI content here, so I'd appreciate if y'all could point me towards posts here that explore preparing for a future where AI may become entities that should be considered Moral Patients, or similar topics. The first post I have in mind is a lengthy exploration of one of my experiments, so I'd like to draft it appropriately for this context the first time.