| 0 |

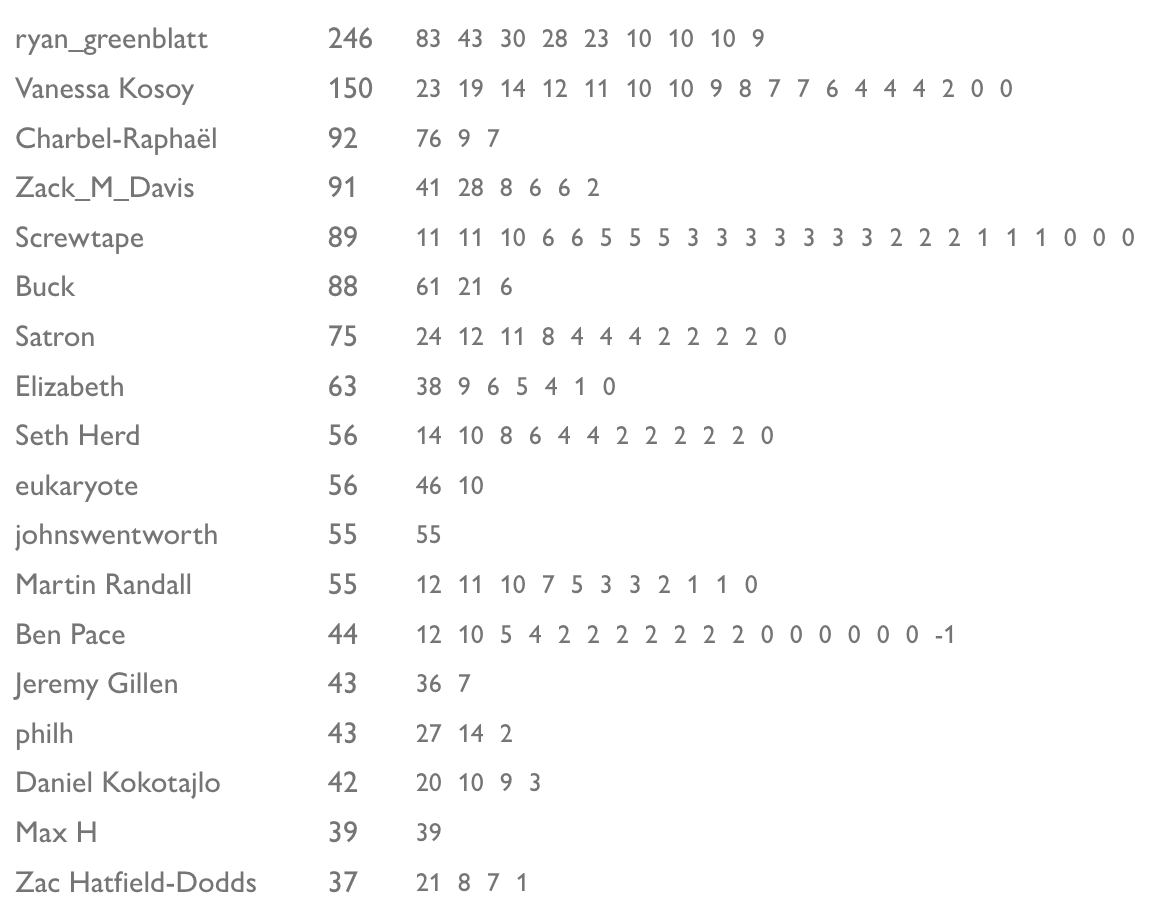

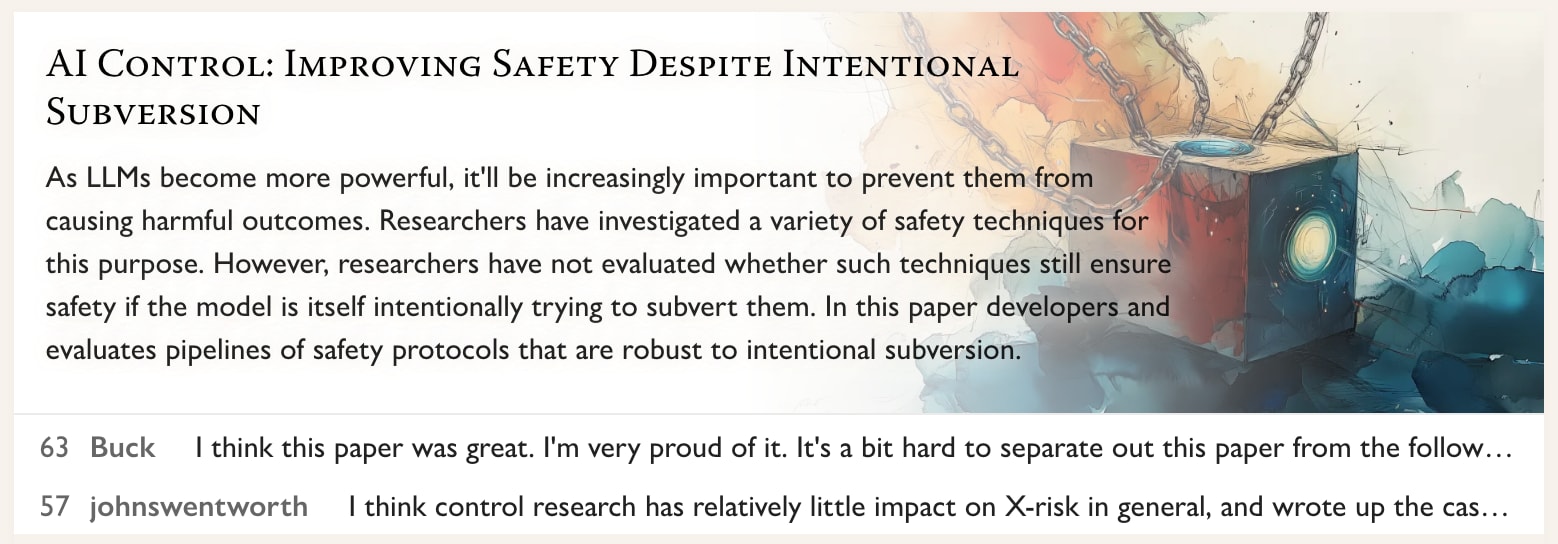

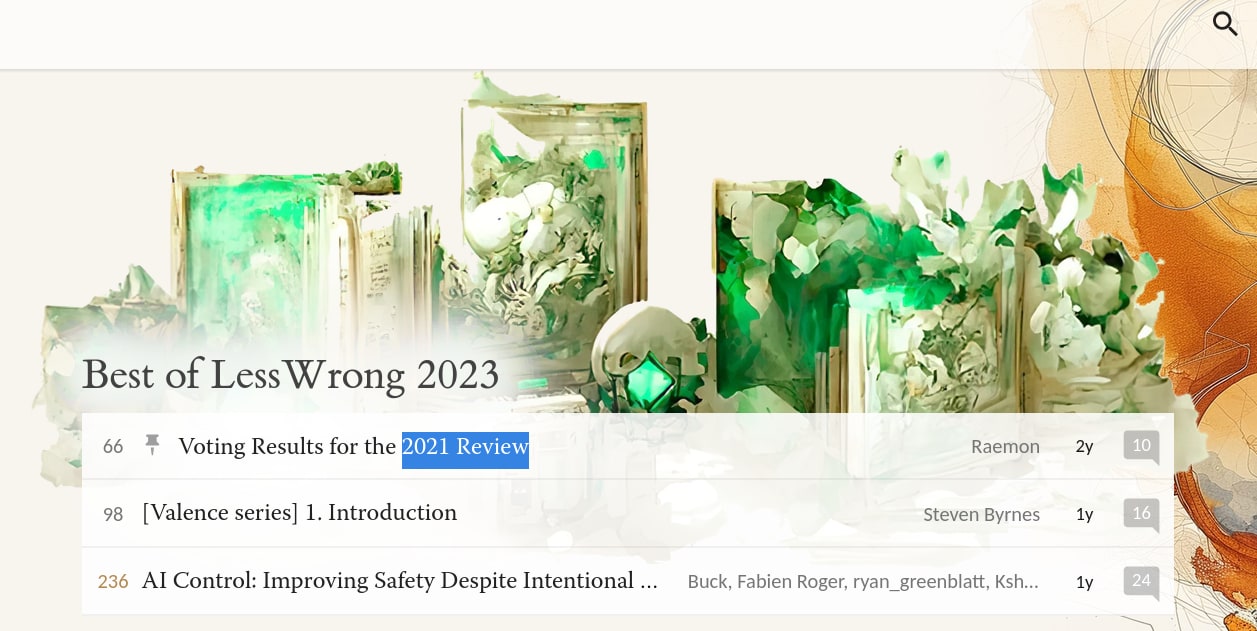

AI Control: Improving Safety Despite Intentional Subversion

Buck

|

|

| 1 |

Focus on the places where you feel shocked everyone's dropping the ball

So8res

|

|

| 2 |

Significantly Enhancing Adult Intelligence With Gene Editing May Be Possible

GeneSmith

|

|

| 3 |

Social Dark Matter

Duncan Sabien (Deactivated)

|

|

| 4 |

Statement on AI Extinction - Signed by AGI Labs, Top Academics, and Many Other Notable Figures

Dan H

|

|

| 5 |

Pausing AI Developments Isn't Enough. We Need to Shut it All Down

Eliezer Yudkowsky

|

|

| 6 |

Please don't throw your mind away

TsviBT

|

|

| 7 |

How much do you believe your results?

Eric Neyman

|

|

| 8 |

AI Timelines

habryka

|

|

| 9 |

Basics of Rationalist Discourse

Duncan Sabien (Deactivated)

|

|

| 10 |

Things I Learned by Spending Five Thousand Hours In Non-EA Charities

jenn

|

|

| 11 |

What a compute-centric framework says about AI takeoff speeds

Tom Davidson

|

|

| 12 |

How to have Polygenically Screened Children

GeneSmith

|

|

| 13 |

Natural Abstractions: Key claims, Theorems, and Critiques

LawrenceC

|

|

| 14 |

Alignment Implications of LLM Successes: a Debate in One Act

Zack_M_Davis

|

|

| 15 |

Model Organisms of Misalignment: The Case for a New Pillar of Alignment Research

evhub

|

|

| 16 |

Natural Latents: The Math

johnswentworth

|

|

| 17 |

Steering GPT-2-XL by adding an activation vector

TurnTrout

|

|

| 18 |

SolidGoldMagikarp (plus, prompt generation)

Jessica Rumbelow

|

|

| 19 |

The ants and the grasshopper

Richard_Ngo

|

|

| 20 |

Feedbackloop-first Rationality

Raemon

|

|

| 21 |

Deep Deceptiveness

So8res

|

|

| 22 |

EA Vegan Advocacy is not truthseeking, and it’s everyone’s problem

Elizabeth

|

|

| 23 |

Davidad's Bold Plan for Alignment: An In-Depth Explanation

Charbel-Raphaël

|

|

| 24 |

Fucking Goddamn Basics of Rationalist Discourse

LoganStrohl

|

|

| 25 |

Against Almost Every Theory of Impact of Interpretability

Charbel-Raphaël

|

|

| 26 |

Guide to rationalist interior decorating

mingyuan

|

|

| 27 |

New report: "Scheming AIs: Will AIs fake alignment during training in order to get power?"

Joe Carlsmith

|

|

| 28 |

Predictable updating about AI risk

Joe Carlsmith

|

|

| 29 |

[Fiction] A Disneyland Without Children

L Rudolf L

|

|

| 30 |

The Talk: a brief explanation of sexual dimorphism

Malmesbury

|

|

| 31 |

"Carefully Bootstrapped Alignment" is organizationally hard

Raemon

|

|

| 32 |

Tuning your Cognitive Strategies

Raemon

|

|

| 33 |

Enemies vs Malefactors

So8res

|

|

| 34 |

The Parable of the King and the Random Process

moridinamael

|

|

| 35 |

GPTs are Predictors, not Imitators

Eliezer Yudkowsky

|

|

| 36 |

Labs should be explicit about why they are building AGI

peterbarnett

|

|

| 37 |

Cultivating a state of mind where new ideas are born

Henrik Karlsson

|

|

| 38 |

Discussion with Nate Soares on a key alignment difficulty

HoldenKarnofsky

|

|

| 39 |

Loudly Give Up, Don't Quietly Fade

Screwtape

|

|

| 40 |

We don’t trade with ants

KatjaGrace

|

|

| 41 |

Neural networks generalize because of this one weird trick

Jesse Hoogland

|

|

| 42 |

My views on “doom”

paulfchristiano

|

|

| 43 |

Change my mind: Veganism entails trade-offs, and health is one of the axes

Elizabeth

|

|

| 44 |

Lessons On How To Get Things Right On The First Try

johnswentworth

|

|

| 45 |

Shallow review of live agendas in alignment & safety

technicalities

|

|

| 46 |

The Learning-Theoretic Agenda: Status 2023

Vanessa Kosoy

|

|

| 47 |

Improving the Welfare of AIs: A Nearcasted Proposal

ryan_greenblatt

|

|

| 48 |

Book Review: Going Infinite

Zvi

|

|

| 49 |

Speaking to Congressional staffers about AI risk

Zuko's Shame

|

|

| 50 |

Why it's so hard to talk about Consciousness

Rafael Harth

|

|

| 51 |

Acausal normalcy

Andrew_Critch

|

|

| 52 |

Towards Developmental Interpretability

Jesse Hoogland

|

|

| 53 |

Dear Self; we need to talk about ambition

Elizabeth

|

|

| 54 |

Thoughts on “AI is easy to control” by Pope & Belrose

Steven Byrnes

|

|

| 55 |

Thoughts on sharing information about language model capabilities

paulfchristiano

|

|

| 56 |

FixDT

abramdemski

|

|

| 57 |

My Model Of EA Burnout

LoganStrohl

|

|

| 58 |

Accidentally Load Bearing

jefftk

|

|

| 59 |

Comp Sci in 2027 (Short story by Eliezer Yudkowsky)

sudo

|

|

| 60 |

The Plan - 2023 Version

johnswentworth

|

|

| 61 |

When is Goodhart catastrophic?

Drake Thomas

|

|

| 62 |

Evaluating the historical value misspecification argument

Matthew Barnett

|

|

| 63 |

Contrast Pairs Drive the Empirical Performance of Contrast Consistent Search (CCS)

Scott Emmons

|

|

| 64 |

Bing Chat is blatantly, aggressively misaligned

evhub

|

|

| 65 |

Cyborgism

NicholasKees

|

|

| 66 |

Shell games

TsviBT

|

|

| 67 |

Ten Levels of AI Alignment Difficulty

Sammy Martin

|

|

| 68 |

Introducing Fatebook: the fastest way to make and track predictions

Adam B

|

|

| 69 |

Modal Fixpoint Cooperation without Löb's Theorem

Andrew_Critch

|

|

| 70 |

How to (hopefully ethically) make money off of AGI

habryka

|

|

| 71 |

AI: Practical Advice for the Worried

Zvi

|

|

| 72 |

[Valence series] 1. Introduction

Steven Byrnes

|

|

| 73 |

Towards Monosemanticity: Decomposing Language Models With Dictionary Learning

Zac Hatfield-Dodds

|

|

| 74 |

Responses to apparent rationalist confusions about game / decision theory

Anthony DiGiovanni

|

|

| 75 |

Why and How to Graduate Early [U.S.]

Tego

|

|

| 76 |

Updates and Reflections on Optimal Exercise after Nearly a Decade

romeostevensit

|

|

| 77 |

Getting Started With Naturalism

LoganStrohl

|

|

| 78 |

Why Not Subagents?

johnswentworth

|

|

| 79 |

Meta-level adversarial evaluation of oversight techniques might allow robust measurement of their adequacy

Buck

|

|

| 80 |

A case for AI alignment being difficult

jessicata

|

|

| 81 |

Consciousness as a conflationary alliance term for intrinsically valued internal experiences

Andrew_Critch

|

|

| 82 |

The 101 Space You Will Always Have With You

Screwtape

|

|

| 83 |

Coup probes: Catching catastrophes with probes trained off-policy

Fabien Roger

|

|

| 84 |

Preventing Language Models from hiding their reasoning

Fabien Roger

|

|

| 85 |

Teleosemantics!

abramdemski

|

|

| 86 |

My Objections to "We’re All Gonna Die with Eliezer Yudkowsky"

Quintin Pope

|

|

| 87 |

Why Not Just Outsource Alignment Research To An AI?

johnswentworth

|

|

| 88 |

Childhoods of exceptional people

Henrik Karlsson

|

|

| 89 |

How to Catch an AI Liar: Lie Detection in Black-Box LLMs by Asking Unrelated Questions

JanB

|

|

| 90 |

The Soul Key

Richard_Ngo

|

|

| 91 |

Never Drop A Ball

Screwtape

|

|

| 92 |

Cohabitive Games so Far

mako yass

|

|

| 93 |

The salt in pasta water fallacy

Thomas Sepulchre

|

|

| 94 |

Untrusted smart models and trusted dumb models

Buck

|

|

| 95 |

Some Rules for an Algebra of Bayes Nets

johnswentworth

|

|

| 96 |

How to Bounded Distrust

Zvi

|

|

| 97 |

Yudkowsky vs Hanson on FOOM: Whose Predictions Were Better?

1a3orn

|

|

| 98 |

Before smart AI, there will be many mediocre or specialized AIs

Lukas Finnveden

|

|

| 99 |

A Golden Age of Building? Excerpts and lessons from Empire State, Pentagon, Skunk Works and SpaceX

Bird Concept

|

|

| 100 |

Apocalypse insurance, and the hardline libertarian take on AI risk

So8res

|

|

| 101 |

A freshman year during the AI midgame: my approach to the next year

Buck

|

|

| 102 |

Aumann-agreement is common

tailcalled

|

|

| 103 |

grey goo is unlikely

bhauth

|

|

| 104 |

Iron deficiencies are very bad and you should treat them

Elizabeth

|

|

| 105 |

Dark Forest Theories

Raemon

|

|

| 106 |

Connectomics seems great from an AI x-risk perspective

Steven Byrnes

|

|

| 107 |

Views on when AGI comes and on strategy to reduce existential risk

TsviBT

|

|

| 108 |

The 'Neglected Approaches' Approach: AE Studio's Alignment Agenda

Cameron Berg

|

|

| 109 |

Thinking By The Clock

Screwtape

|

|

| 110 |

Killing Socrates

Duncan Sabien (Deactivated)

|

|

| 111 |

You don't get to have cool flaws

Neil

|

|

| 112 |

Noting an error in Inadequate Equilibria

Matthew Barnett

|

|

| 113 |

Assume Bad Faith

Zack_M_Davis

|

|

| 114 |

Measuring and Improving the Faithfulness of Model-Generated Reasoning

Ansh Radhakrishnan

|

|

| 115 |

When can we trust model evaluations?

evhub

|

|

| 116 |

Benchmarks for Detecting Measurement Tampering [Redwood Research]

ryan_greenblatt

|

|

| 117 |

Learning-theoretic agenda reading list

Vanessa Kosoy

|

|

| 118 |

Exercise: Solve "Thinking Physics"

Raemon

|

|

| 119 |

Why Simulator AIs want to be Active Inference AIs

Jan_Kulveit

|

|

| 120 |

What I would do if I wasn’t at ARC Evals

LawrenceC

|

|

| 121 |

Evaluations (of new AI Safety researchers) can be noisy

LawrenceC

|

|

| 122 |

The King and the Golem

Richard_Ngo

|

|

| 123 |

Love, Reverence, and Life

Elizabeth

|

|

| 124 |

Auditing failures vs concentrated failures

ryan_greenblatt

|

|

| 125 |

Elements of Rationalist Discourse

Rob Bensinger

|

|

| 126 |

Evolution provides no evidence for the sharp left turn

Quintin Pope

|

|

| 127 |

Latent variables for prediction markets: motivation, technical guide, and design considerations

tailcalled

|

|

| 128 |

UFO Betting: Put Up or Shut Up

RatsWrongAboutUAP

|

|

| 129 |

Some background for reasoning about dual-use alignment research

Charlie Steiner

|

|

| 130 |

Scalable Oversight and Weak-to-Strong Generalization: Compatible approaches to the same problem

Ansh Radhakrishnan

|

|

| 131 |

The Base Rate Times, news through prediction markets

vandemonian

|

|

| 132 |

Mob and Bailey

Screwtape

|

|

| 133 |

Pretraining Language Models with Human Preferences

Tomek Korbak

|

|

| 134 |

Touch reality as soon as possible (when doing machine learning research)

LawrenceC

|

|

| 135 |

POC || GTFO culture as partial antidote to alignment wordcelism

lc

|

|

| 136 |

A to Z of things

KatjaGrace

|

|

| 137 |

OpenAI, DeepMind, Anthropic, etc. should shut down.

Tamsin Leake

|

|

| 138 |

Model, Care, Execution

Ricki Heicklen

|

|

| 139 |

Symbol/Referent Confusions in Language Model Alignment Experiments

johnswentworth

|

|

| 140 |

Careless talk on US-China AI competition? (and criticism of CAIS coverage)

Oliver Sourbut

|

|

| 141 |

Alexander and Yudkowsky on AGI goals

Scott Alexander

|

|

| 142 |

Deception Chess: Game #1

Zane

|

|

| 143 |

Ethodynamics of Omelas

dr_s

|

|

| 144 |

Here's Why I'm Hesitant To Respond In More Depth

DirectedEvolution

|

|

| 145 |

Shutting Down the Lightcone Offices

habryka

|

|

| 146 |

shoes with springs

bhauth

|

|

| 147 |

Would You Work Harder In The Least Convenient Possible World?

Firinn

|

|

| 148 |

Bayesian Networks Aren't Necessarily Causal

Zack_M_Davis

|

|

| 149 |

One Day Sooner

Screwtape

|

|

| 150 |

"Publish or Perish" (a quick note on why you should try to make your work legible to existing academic communities)

David Scott Krueger (formerly: capybaralet)

|

|

| 151 |

Anthropic's Responsible Scaling Policy & Long-Term Benefit Trust

Zac Hatfield-Dodds

|

|

| 152 |

Think carefully before calling RL policies "agents"

TurnTrout

|

|

| 153 |

Going Crazy and Getting Better Again

Evenstar

|

|

| 154 |

The benevolence of the butcher

dr_s

|

|

| 155 |

Nietzsche's Morality in Plain English

Arjun Panickssery

|

|

| 156 |

Cryonics and Regret

MvB

|

|

| 157 |

RSPs are pauses done right

evhub

|

|

| 158 |

Giant (In)scrutable Matrices: (Maybe) the Best of All Possible Worlds

1a3orn

|

|

| 159 |

Why I’m not into the Free Energy Principle

Steven Byrnes

|

|

| 160 |

The ‘ petertodd’ phenomenon

mwatkins

|

|

| 161 |

Which personality traits are real? Stress-testing the lexical hypothesis

tailcalled

|

|

| 162 |

Green goo is plausible

anithite

|

|

| 163 |

Rational Unilateralists Aren't So Cursed

SCP

|

|

| 164 |

Competitive, Cooperative, and Cohabitive

Screwtape

|

|

| 165 |

Have Attention Spans Been Declining?

niplav

|

|

| 166 |

Hell is Game Theory Folk Theorems

jessicata

|

|

| 167 |

Reducing sycophancy and improving honesty via activation steering

Nina Panickssery

|

|

| 168 |

Are there cognitive realms?

TsviBT

|

|

| 169 |

"Rationalist Discourse" Is Like "Physicist Motors"

Zack_M_Davis

|

|

| 170 |

Being at peace with Doom

Johannes C. Mayer

|

|

| 171 |

Recreating the caring drive

Catnee

|

|

| 172 |

The God of Humanity, and the God of the Robot Utilitarians

Raemon

|

|

| 173 |

Agentized LLMs will change the alignment landscape

Seth Herd

|

|

| 174 |

Ability to solve long-horizon tasks correlates with wanting things in the behaviorist sense

So8res

|

|

| 175 |

What's up with "Responsible Scaling Policies"?

habryka

|

|

| 176 |

The Real Fanfic Is The Friends We Made Along The Way

Eneasz

|

|

| 177 |

New User's Guide to LessWrong

Ruby

|

|

| 178 |

We don't understand what happened with culture enough

Jan_Kulveit

|

|

| 179 |

Seth Explains Consciousness

Jacob Falkovich

|

|

| 180 |

[Valence series] 2. Valence & Normativity

Steven Byrnes

|

|

| 181 |

Fifty Flips

abstractapplic

|

|

| 182 |

So you want to save the world? An account in paladinhood

Tamsin Leake

|

|

| 183 |

A Playbook for AI Risk Reduction (focused on misaligned AI)

HoldenKarnofsky

|

|

| 184 |

Moral Reality Check (a short story)

jessicata

|

|

| 185 |

Takeaways from calibration training

Olli Järviniemi

|

|

| 186 |

Efficiency and resource use scaling parity

Ege Erdil

|

|

| 187 |

Spaciousness In Partner Dance: A Naturalism Demo

LoganStrohl

|

|

| 188 |

[Valence series] 3. Valence & Beliefs

Steven Byrnes

|

|

| 189 |

Fighting without hope

Zuko's Shame

|

|

| 190 |

On Tapping Out

Screwtape

|

|

| 191 |

Beware of Fake Alternatives

silentbob

|

|

| 192 |

Truth and Advantage: Response to a draft of "AI safety seems hard to measure"

So8res

|

|

| 193 |

AI #1: Sydney and Bing

Zvi

|

|

| 194 |

The shape of AGI: Cartoons and back of envelope

boazbarak

|

|

| 195 |

Underwater Torture Chambers: The Horror Of Fish Farming

omnizoid

|

|

| 196 |

Finding Neurons in a Haystack: Case Studies with Sparse Probing

wesg

|

|

| 197 |

How should TurnTrout handle his DeepMind equity situation?

habryka

|

|

| 198 |

When do "brains beat brawn" in Chess? An experiment

titotal

|

|

| 199 |

There are no coherence theorems

Dan H

|

|

| 200 |

The Power of High Speed Stupidity

robotelvis

|

|

| 201 |

Book Review: Consciousness Explained (as the Great Catalyst)

Rafael Harth

|

|

| 202 |

Large language models learn to represent the world

gjm

|

|

| 203 |

Ruining an expected-log-money maximizer

philh

|

|

| 204 |

A problem with the most recently published version of CEV

ThomasCederborg

|

|

| 205 |

In Defense of Parselmouths

Screwtape

|

|

| 206 |

Five Worlds of AI (by Scott Aaronson and Boaz Barak)

mishka

|

|

| 207 |

When Omnipotence is Not Enough

lsusr

|

|

| 208 |

Why You Should Never Update Your Beliefs

Arjun Panickssery

|

|

| 209 |

Responsible Scaling Policies Are Risk Management Done Wrong

simeon_c

|

|

| 210 |

A Way To Be Okay

Duncan Sabien (Deactivated)

|

|

| 211 |

"Justice, Cherryl."

Zack_M_Davis

|

|

| 212 |

Large Language Models can Strategically Deceive their Users when Put Under Pressure.

ReaderM

|

|

| 213 |

[Link] A community alert about Ziz

DanielFilan

|

|