Book Review: Consciousness Explained (as the Great Catalyst)

post by Rafael Harth (sil-ver) · 2023-09-17T15:30:33.295Z

Contents

- 1. The Framing and Thesis
- 2. Heterophenomenology and the Catharsis of someone finally making sense
- 3. The View from the Other Side
- 4. The Substance
  - The Apparent Unity of Consciousness
  - Blindsight
  - The Color Phi Phenomenon
  - The Rest
- 5. Closing Thoughts

[Thanks to wilkox for helpful discussion, as well as Charlie Steiner, Richard Kennaway, and Said Achmiz for feedback on a previous version. Extra special thanks to the Long-Term Future Fund for funding research related to this post.]

Consciousness Explained is a book by philosopher and University Professor Daniel Dennett. It's over 30 years old but still the most frequently referenced book about consciousness on LessWrong. It was previously reviewed by Charlie Steiner.

I was interested in the book because of its popularity. I wanted to understand why so many people reference it and what role it plays in the discourse. To answer these questions, I think the two camps model I suggested in "Why it's so hard to talk about Consciousness" is relevant because the book seems to act as a catalyst for Camp #1 thinking. So this review will be all about applying the model to the book in more detail, to see why it's so popular among Camp #1, but also what it has to offer to Camp #2.

Reading the two camps post first is recommended, but here's a one-paragraph summary. It seems to be the case that almost everyone has intuitions about consciousness that fall into one of two camps, which I call Camp #1 and Camp #2. For Camp #1, consciousness is an ordinary process and hence fully reducible to its functional role, which means that it can be empirically studied without introducing new metaphysics. For Camp #2, there is an undeniable fact of experience that's conceptually distinct from information processing, and its relationship to physical states or processes remains an open question. The solutions Camp #2 people propose are all over the place; the only thing they agree on is that the problem is real.

The review is structured into four main sections (plus one paragraph in section 5):

- Section 1 is about the book's overall thesis and how it frames the issue.
- Section 2 is about how the book takes sides and why it's popular.
- Section 3 is about the book's treatment of the opposing camp.
- Section 4 is about what remains of the book if we subtract the core intuition. I.e., if you are opposed to or undecided about the Camp #1 premise, how much punch do the arguments still have?

Sections #1-3 (~1500 words) are the core of the review; after that, you can pick and choose which parts of section #4 (~4000 words) you find interesting.

1. The Framing and Thesis

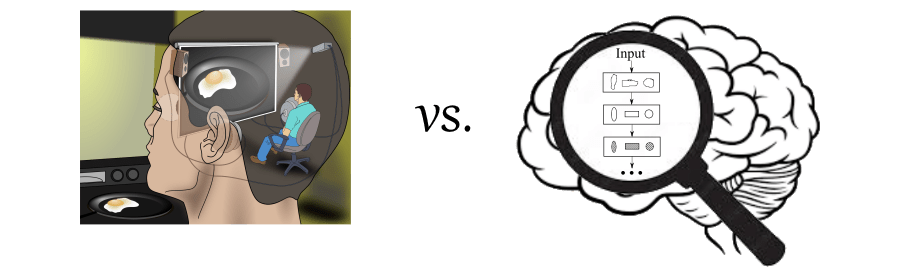

The book's central conflict is framed as a choice between two options called The Cartesian Theater and The Multiple Drafts Model.

The Cartesian Theater is defined as a single place in the brain where "it all comes together and consciousness happens". This isn't a theory of consciousness so much as a property that a theory may or may not postulate. Many theories agree that a Cartesian Theater exists, though they usually won't describe it as such.

Conversely, the Multiple Drafts Model is what Dennett champions throughout the book. Under this model, inputs undergo several drafts during processing, and there's no fact of the matter as to which draft is the correct one – there simply are different versions of inputs at different times/locations throughout your brain.

A good analogy is information delivered to a country at war. In most cases, things will work out well enough, creating the illusion that "received information" is a crisply defined concept, but it's easy to come up with examples where this isn't true. E.g.:

- An officer dismisses the information as fake. Now, it only ever reaches a small part of the country.
- A general thinks the information reflects poorly on him, so he lies to his superior. Now, different parts of the country receive different versions of the news.

Even if the information propagates without change, it's still received by different parts at different times. So the core property of this model is that consciousness does not have a ground truth (unlike in the Cartesian Theater). There is no fact of the matter as to which stage of the processing pipeline (or which "draft") is the true representative of consciousness, and there certainly is nothing above and beyond the processing pipeline that could help settle the issue.

In the book, Dennett introduces both models by first talking about dualism, which he defines as the idea that consciousness has causal power operating outside the laws of physics.[1] In a dualist world, the Cartesian Theater comes naturally as the place where the non-physical causal action originates. Dennett then immediately denounces dualism but keeps the Cartesian Theater around as essentially the non-dualist remnant of the naïve view. He writes:

But as we shall see, the persuasive imagery of the Cartesian Theater keeps coming back to haunt us — laypeople and scientists alike — even after its ghostly dualism has been denounced and exorcized.

(See also the footnote[2] for a more extensive quote.) Having established the Cartesian Theater as problematic, Dennett introduces the Multiple Drafts Model as an alternative.

In any case, whether consciousness has a ground truth is a genuine crux between both options,[3] so at the very least, our central conflict is well-defined.

2. Heterophenomenology and the Catharsis of someone finally making sense

In the ears of Camp #1-ers, Camp #2 people say a lot of wacky stuff, and they usually don't realize how wacky they sound. Worst of all, they arguably comprise a majority of philosophers. All of this can make engagement with the topic exceedingly annoying, and it practically begs for someone like Dennett to come in and cut through all the noise with a simple and compelling proposal.

Dennett takes sides explicitly by championing heterophenomenology (the concept we've already covered in the two camps post), which is the idea that experience claims should be treated like fictional worldbuilding, with the epistemic status of the experience always up for debate. Heterophenomenology immediately closes the door to Camp #2 style reasoning, which is all about leveraging the fact of experience as a phenomenon above and beyond our reports of it.

The heart of the book is Dennett going through various cases of ostensibly conscious processing to explain how they can be dealt with without any special ingredients. Most of these will only make sense if you accept heterophenomenology, but once you do, you're in for a good time. For instance, have you ever been annoyed at how some people make human vision out to be mysterious, taking the idea of "images in the head" way too far? Dennett demonstrates how such talk of "seeing" naturally arises from building even rudimentary image processing systems. (And besides, much of what you supposedly see is surprisingly illusory – you can't even determine whether a playing card is clubs or spades if you hold it in your peripheral vision.) Or what about reasoning – mustn't there be a central meaner somewhere in the brain? Not so; Dennett shows how a model of many distributed "demons" working together makes a lot more sense than any central control.

As Charlie Steiner put it, "[t]his book might have also been called '455 Pages Of Implications Of There Being No Homuncular Observer Inside The Brain'".

It's remarkable that the book never really feels technical. It has more of a conversational vibe, and that's probably one of the greatest compliments you could pay an academic book written by a philosophy professor. It's also not a difficult read, at least for the most part; Dennett's arguments are simple and well-explained enough that an attentive reader can get them on the first try.

The book concludes with five chapters devoted to various philosophical issues (Mary's Room, What it's Like to be a Bat, Epiphenomenalism, etc.), explaining how the Multiple Drafts Model handles them. As before, if you accept heterophenomenology, you're unlikely to take issue with the material here. Those annoyed by philosophers gushing over, e.g., the mystery of Mary's Room, will likely appreciate Dennett's more sober commentary.

3. The View from the Other Side

We've talked about how the book does a great job elaborating on the Camp #1 intuition. But how well does it justify those ideas? That's the question that will matter for Camp #2 readers: if you're not already predisposed to agree, then explaining the opposing intuitions isn't enough; you want to hear why your current intuitions are wrong.

Here is where it gets harder to give Dennett credit. Even though he opens the book with a discussion of hallucinations and has an entire chapter devoted to the proper way to do phenomenology, he skirts around the key issues (for Camp #2 people) in both cases.[4] Even his argument against dualism is surprisingly weak.[5] (Details in footnotes.)

So the punchline is that the book almost entirely appeals to Camp #1 people, which matches the anecdotal evidence I've seen.[6] It would also take a remarkably high-minded Camp #2 person to come away with a positive feeling because Dennett is surprisingly dismissive of his intellectual opponents. He constantly implies that the opposing side is unsophisticated or in denial, such as when discussing the visual blindspot:

This idea of filling in is common in the thinking of even sophisticated theorists, and it is a dead giveaway of vestigial Cartesian materialism. What is amusing is that those who use the term often know better, but since they find the term irresistible, they cover themselves by putting it in scare-quotes.

He also labels people who disagree with him qualophiles...

But curiously enough, qualophiles (as I call those who still believe in qualia) will have none of it

... and he even implies that people who take the philosophical zombie argument seriously (like David Chalmers) are motivated by bigotry, literally comparing them to Nazis:

It is time to recognize the idea of the possibility of zombies for what it is: not a serious philosophical idea but a preposterous and ignoble relic of ancient prejudices. Maybe women aren't really conscious! Maybe Jews! What pernicious nonsense.

At various points in the book, Dennett has a back-and-forth with a fictional character named Otto, who is introduced to represent the opposing view. These dialogues may have been the time to address Camp #2, but Otto is stuck in a strange limbo between both camps that offers little fuel for resistance. He's condemned to keep opposing Dennett but never disputes heterophenomenology, so his resistance is bound to fail. He will usually reference some internal state, which Dennett will inevitably explain away, leaving Otto bruised and defeated.

Meanwhile, heterophenomenology is sold not as one side of a hotly contested debate but as the only reasonable option. Dennett prefaces it by declaring the approach universal...

Such a theory will have to be constructed from the third-person point of view, since all science is constructed from that

... and a later subchapter is titled "The Neutrality of Heterophenomenology".

In the end, none of these details really make a difference. Once you've burnt the bridge for cross-camp appeal, further outgroup bashing can't cause any more damage. Talking only to your own side may not be the prettiest way to write a book, but it works – in fact, getting only your camp on board is enough to have the most popular book in the literature.

4. The Substance

With the core take out of the way, let's get into some of the substance. Since the book is over 500 pages long, this will inevitably include some picking and choosing. Note that these sections have no dependence on each other.

- The Apparent Unity of Consciousness: The core feature of the Cartesian Theater is that consciousness has a singular, unified ground truth, but a central theme of the book is how the brain's processing is distributed in time and space. How strong is this as an argument against the Theater?
- Blindsight: Dennett spends over half of a chapter on the phenomenon where supposedly blind people can solve visual tasks. But what does Blindsight actually show?
- The Color Phi Phenomenon: There is a perceptual experiment said to debunk the Cartesian Theater. This may be the least interesting section because I think Dennett's argument ultimately just doesn't work, but I've included it in the review because Dennett puts a lot of stock in it.
- The Rest: I briefly go into what I didn't talk about and why.

The Apparent Unity of Consciousness

The Cartesian Theater asserts a unified consciousness, yet information is (both spatially and temporally) smeared throughout the brain. This conflicting state of affairs is probably the most important argument made in the book, seeing as it's the core motivation behind the Multiple Drafts Model. As Charlie Steiner put it in his review:

Dennett spends a lot of time defending the distributed nature of the brain, and uses it to do a lot of heavy philosophical lifting, but it's always in a slightly new context, and it feels worthwhile each time.

In the book, it is implicitly assumed that such distributed processing disproves the Cartesian Theater outright. That's because for Camp #1, the labels "consciousness" and "physical information processing in the brain" refer to the same thing; hence, if one is distributed, so is the other. Here's a longer quote from Dennett where you can notice this equivocation clearly:

The Cartesian Theater is a metaphorical picture of how conscious experience must sit in the brain. It seems at first to be an innocent extrapolation of the familiar and undeniable fact that for everyday, macroscopic time intervals, we can indeed order events into the two categories "not yet observed" and "already observed." We do this by locating the observer at a point and plotting the motions of the vehicles of information relative to that point. But when we try to extend this method to explain phenomena involving very short time intervals, we encounter a logical difficulty: If the "point" of view of the observer must be smeared over a rather large volume in the observer's brain, the observer's own subjective sense of sequence and simultaneity must be determined by something other than "order of arrival," since order of arrival is incompletely defined until the relevant destination is specified. If A beats B to one finish line but B beats A to another, which result fixes subjective sequence in consciousness? (Cf. Minsky, 1985, p. 61.) Pöppel speaks of the moments at which sight and sound become "centrally available" in the brain, but which point or points of "central availability" would "count" as a determiner of experienced order, and why? When we try to answer this question, we will be forced to abandon the Cartesian Theater and replace it with a new model.

The book sticks with this perspective throughout, so it may offer little to people who don't share it. But we're free to expand on it here, so let's do that – let's see how much punch the argument has for people who accept the Camp #2 axioms. Specifically, say we assume that

- The unity of consciousness is real; and
- Consciousness is conceptually distinct from the processes that give rise to it.

Given these assumptions, our problem becomes that of explaining how such a unified consciousness can arise from a spatially distributed implementation. In academic philosophy, this is called the Binding Problem (BP),[7] and it remains a subject of heavy debate. The BP only makes sense to Camp #2, which is why it's glossed over in the book, but Dennett's emphasis on spatial distribution can be recast as an argument against Camp #2 by appealing to the impossibility of binding. I.e., "if there was a Cartesian Theater, then you would have to solve the BP, but the BP is obviously impossible, so there is no Cartesian Theater."

Let's take a few paragraphs to understand the BP and why it is hard. If you're in Camp #1, the first and most difficult step here will just be to grasp the problem conceptually. There are a bunch of different processing streams in the brain, and some of them have cross-modal integration – doesn't seem like a problem, does it? But again, remember that we are assuming consciousness is conceptually separate from these processes. Just for one section, you must treat our experience at any time as a single, genuinely unified object that our brain gives rise to.

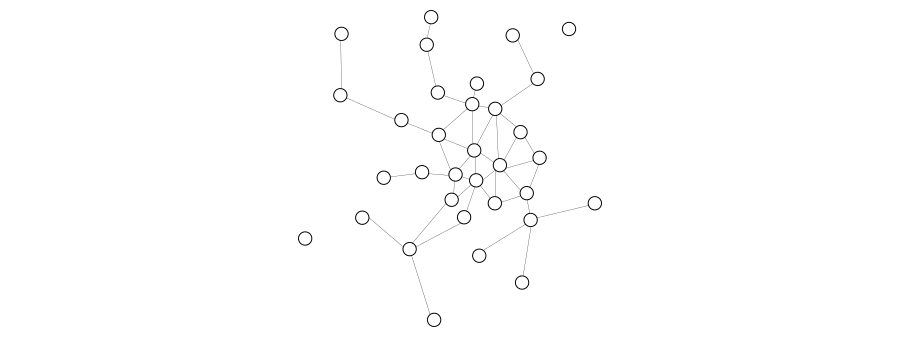

Moreover, we're not entertaining any kind of substance dualism, so there is no mind stuff that can come to the rescue. Instead, we'll assume our brain can be approximately modeled by a causal graph of neurons, like so (only much larger):

This is a model we've already used in the two camps post as an example of how the camps can influence research, and I've mentioned that Camp #1 can get away with some rather hand-wavy answers. E.g., they can vaguely hint at a part of the network, or even just at some of the activity of the entire network. But now we're assuming a Camp #2 perspective, so we require a precise answer – and unless we want to claim that non-synaptic connections are doing some extraordinary work here, that means identifying a (precise!) subset of the network that gives rise to consciousness. Furthermore, it must be a proper subset because many processes in the brain are unconscious (like speech generation), and also because other people can influence your actions, which technically makes them a part of the causal network that implements your behavior.

So, how could we go about selecting such a subset? Well, Global Workspace Theory is the most popular theory in the literature, and this set of neurons over there sort of looks like a densely connected part of the network, so perhaps that is our global workspace, and hence our consciousness?

You can probably see why this approach is problematic. Whether a part of a graph is "densely connected" (or whether a node is "globally accessible") isn't a well-defined thing with crisp boundaries – it's a fuzzy property that lives on a spectrum. Since we're looking for a precise rule here, this is bad news.
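To make the fuzziness concrete, here is a minimal Python sketch. The toy graph, the `membership_score` function, and the thresholds are all made up for illustration; nothing here is a model of real neurons or of Global Workspace Theory. It only shows that a rule like "include every node that is connected enough" yields a different subset for every threshold, and nothing in the graph singles out one threshold as the right one.

```python
# Toy undirected graph: node -> set of neighbours (entirely hypothetical).
GRAPH = {
    "a": {"b", "c", "d", "e"},
    "b": {"a", "c", "d"},
    "c": {"a", "b", "d"},
    "d": {"a", "b", "c", "e"},
    "e": {"a", "d", "f"},
    "f": {"e", "g"},
    "g": {"f"},
}

def membership_score(node):
    """Fraction of the other nodes that `node` is directly connected to."""
    return len(GRAPH[node]) / (len(GRAPH) - 1)

def workspace(threshold):
    """All nodes whose score meets the (arbitrary) threshold."""
    return {n for n in GRAPH if membership_score(n) >= threshold}

# Each cutoff draws the boundary of the "workspace" somewhere else.
for threshold in (0.3, 0.5, 0.6):
    print(threshold, sorted(workspace(threshold)))
```

Swapping in a different score (say, counting two-step connections) changes the details but not the situation: the score is graded, so any binary boundary has to come from a cutoff chosen by hand.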

Of course, Global Workspace Theory is just one theory, and it probably wasn't intended as a serious Camp #2 proposal in the first place (although some people do treat it as such). Moreover, we've ignored temporal binding and made several simplifying assumptions, so none of this is meant to be a serious breakdown of the problem. But even if we don't take this particular proposal seriously, it still gives you a sense of the kind of difficulty that any approach must overcome: it has to map a crisp, binary property (whether or not a neuron contributes to an experience) to a vague, fuzzy property. You can try to choose a different property than how connected the graph is, but whatever you choose will probably be fuzzy. There simply aren't a lot of crisp, binary properties available that (a) differ from one part of a graph to the other and (b) make any sense as a criterion for what counts toward consciousness.

And note that the above only deals with the easiest parts of the problem. All we've asked for is a rule that passes a surface-level examination – which is a much lower bar than actually being correct! Remember that the physical substrate is, in fact, spatially distributed, so if you really drill down on the causality, it seems like nothing unified ever happens. So how on earth is the consciousness object unified, and why? And if consciousness operates within the laws of physics, how the heck do you make sense of the causality? Those questions are the hard part of the BP.

The upshot is that Dennett was most certainly onto something, even if the argument isn't spelled out in the book. The distributed nature of processing doesn't directly rule out a Cartesian Theater, but it leaves Camp #2 with a serious, perhaps insurmountable problem... much unlike Camp #1, which can simply deny the apparent unity of consciousness and be done with it.

Finally, it's worth noting that the term "Cartesian Theater" is somewhat derogatory since the theater metaphor implies that experience is generated for the benefit of a non-physical observer. The definition also isn't entirely restricted to the unity of experience in the book; other ideas, like dualism or the existence of a self, are often implied to be part of the package. A serious Camp #2 thinker may balk at the conflation of these ideas, which is why we're ignoring them throughout the review. So when I mentioned in section 1 that many theories agree a Cartesian Theater exists, I only meant that they accept the unity of consciousness as real.

Blindsight

Blindsight is a medical condition where affected people claim to be blind yet can solve visual tasks with above-chance accuracy. Here's a summary by GPT-4:

Dennett motivates the topic as follows:

What is going on in Blindsight? Is it, as some philosophers and psychologists have urged, visual perception without consciousness — of the sort that a mere automaton might exhibit? Does it provide a disproof (or at any rate a serious embarrassment) to functionalist theories of the mind by exhibiting a case where all the functions of vision are still present, but all the good juice of consciousness has drained out? It provides no such thing.

He then spends three subchapters on Blindsight, making the following argument:

- There's a significant behavioral difference between blindsight patients and healthy people, which is that blindsight patients need to be prompted to solve visual tasks. They seem to be blind, but if you ask them, "Is the shape we're showing you a square or a circle?", they usually get it right.
- It's conceivable that a blindsight subject could be trained to volunteer guesses, thus narrowing the behavioral gap.
- When people play games like "locate the thimble", they often stare directly at an object before recognizing it. Dennett discusses this fact a bit and concludes that (1) attention is about ability to act ("Getting something into the forefront of your consciousness is getting it into a position where it can be reported on") and (2) other forms of conscious awareness are undefined ("[...] but [...] what further notice must your brain take of it — for the object to pass from the ranks of the merely unconsciously responded to into the background of conscious experience? The way to answer these "first-person point of view" stumpers is to ignore the first-person point of view and examine what can be learned from the third-person point of view. [...]"). Therefore, if Blindsight patients can act on visual stimuli, the difference from healthy people narrows.
- If a Blindsight patient were successfully trained to volunteer guesses to the point that they could, e.g., read a magazine by themselves, Dennett postulates that they would most likely report seeing things, just like healthy people. (He does acknowledge that this claim is a guess about an empirical question.)
- Cases like prosthetic vision, where blind people "see" things based on devices that communicate visual scenes to them via tactile patterns, further show that there is no fact of the matter regarding what is and isn't seeing from the first-person perspective.

If you want to flex your philosophical muscles, you can take this opportunity to think about your reaction – was Dennett successful in arguing that Blindsight doesn't undermine his position? Perhaps it even strengthens it?

.

.

.

.

.

.

.

.

.

.

If you did think about it and had ideas different from mine, you're more than welcome to drop a comment. I'm very curious to read other people's takes on this argument.

My main reaction is that it's unclear what exactly Dennett's model says about this topic and what would constitute genuine evidence for or against it. (And I don't think it's because I've missed important points in my summary – you can check out this alternative summary by Claude if you want to look for missing parts.) We vaguely understand that fuzziness in the observed data is more in line with the Camp #1 side of the issue, whereas crisp boundaries are more in line with Camp #2, but that's about it.

This lack of conceptual clarity isn't just a minor annoyance – I think it's the biggest general obstacle when discussing consciousness, and the primary reason why most successful posts tend to stay within their camp. It's probably not an accident that Dennett never spells out what kind of observation would constitute evidence for or against his position and why. By keeping it vague, he can argue at length for his position without ever really sticking his neck out.

In this case, there seems to be no possible experimental result that would falsify the Multiple Drafts Model. Even if the data conclusively showed that blindsight patients (a) can be trained to match healthy adult performance and (b) claim to still be blind, that still wouldn't clinch the case. Camp #1 people could simply assert that the talk about seeing depends on a specific module of the brain, which happens to be damaged in Blindsight patients. In general, any combination of (1) capability and (2) manner-of-reporting-on-that-capability can be instantiated in an artificial system, so there is no outcome that falsifies the Camp #1 view.

Moreover, if we consider a stronger claim, something like...

For each modality (vision, audio, etc.), if we ask people what it feels like to process inputs in that modality, we always get the same kind of description. I.e., talk about "seeing" necessarily falls out of processing visual inputs, talk about "hearing" necessarily falls out of processing auditory inputs, and so on.

... then this seems falsified by Synesthesia, which is widely recognized as a real effect in which precisely these two things come apart. Even though Blindsight and Synesthesia are generally considered separate phenomena, for our purposes, they seem to be in the same category of "cases where people can do processing in category X but describe it in ways atypical for category X". So it is unclear what the discussion on Blindsight shows – but again, this doesn't falsify the Camp #1 view because the Camp #1 view doesn't rely on the above claim.

I think the most interesting aspect of this section is not the case of Blindsight itself but the more general question of what type of observations constitute evidence for one camp over the other. I will talk more about this in future posts.

The Color Phi Phenomenon

In chapter 5, Dennett talks about cases where people misreport events – e.g., you meet a woman without glasses but later report having met a woman with glasses. He differentiates two ways such false reports can come about:

- Orwellian. You initially perceived the right thing, but your memory was corrupted later. (E.g., you initially saw the woman without glasses, but your brain mixed up this memory with that of a different woman who was wearing glasses.)
- Stalinesque. The error was already present when you perceived the thing for the first time. (E.g., you thought the woman was wearing glasses when you first saw her.)

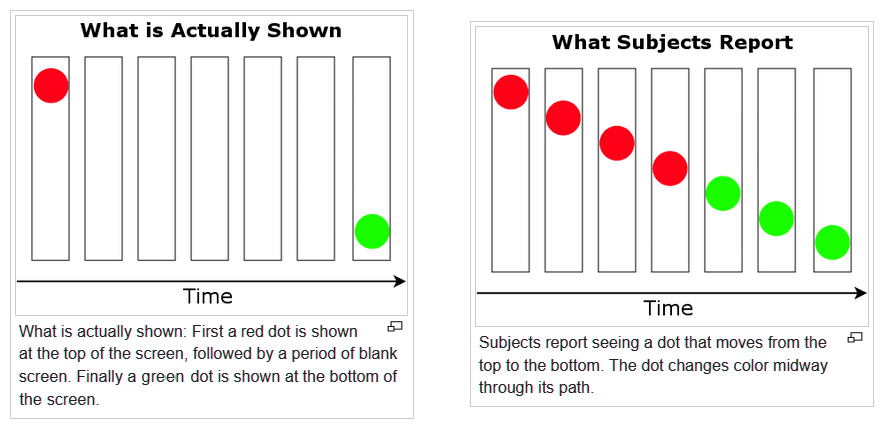

Note that Orwellian and Stalinesque are both Camp #2 ideas since they both claim that there is a fact of the matter as to what you experienced at each point in time. The way to get into Camp #1 territory is if the distinction breaks down, i.e., if there is no fact of the matter as to whether a false report was Orwellian or Stalinesque. According to Dennett, this is precisely what happens in the Color Phi Phenomenon. Here's a great explanation from Wikipedia (except I changed the colors to make it consistent with the book).

(You can try it here, although the website warns that it doesn't work for everyone, and I personally couldn't for the life of me see any movement. I still think the experiment is real, though; at least GPT-4 claims it has been replicated a bunch.)

The critical point here is that subjects not only falsely report a moving dot, but they report [seeing it changing color before the green dot was shown]. (Note the brackets in the last sentence; the report happens after the fact, but what they report is seeing the dot earlier.)

Dennett first argues that this revision cannot be Stalinesque because the brain can't fake illusory motion until the green dot is observed. The only way around this would be if the brain somehow waits 200ms to construct any conscious image, but this hypothesis can be ruled out by asking subjects to press a button as soon as they see the first dot. They can do this in less than 200ms, hence before the green dot is shown.

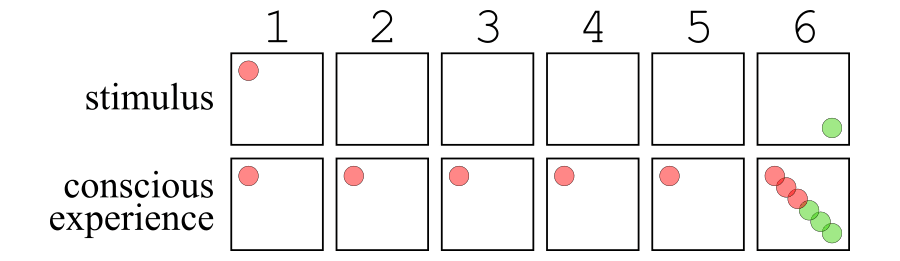

As far as I can see, this reasoning seems correct, which would rule out Stalinsesque explanations. However, Orwellian explanations seem unaffected. For example, suppose the brain integrates visual information from some past time interval – say, the last 0.2 seconds – and uses this to construct a single object, which is then used for further computation (to do object recognition, detect movement, and ultimately output motor commands). Here's how this kind of "Backward Integration" would handle the color phi phenomenon:

After time step 6, the memories of steps 2-5 are overwritten to invent a memory of movement. And the button doesn't matter here, but if the subject was asked to press a button after seeing a red spot, they might do so on, e.g., time step 4.
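The Backward Integration hypothesis can also be sketched as a toy simulation. Everything in it is an assumption made for illustration: the eight time steps, the red dot at step 2 and the green dot at step 6, the two-step button delay, and all names. It is not a model of the brain; it only shows how a button press can be driven by the original content while the later report is built from overwritten memory.

```python
# Hypothetical stimulus timeline: red dot at step 2, green dot at step 6.
STIMULUS = {2: "red dot, left", 6: "green dot, right"}
STEPS = range(1, 9)

def run_trial(button_delay=2):
    memory = {}          # step -> what the subject remembers of that step
    button_step = None
    for t in STEPS:
        memory[t] = STIMULUS.get(t, "nothing")
        # The button press is driven by the original, unrevised content.
        seen_earlier = memory.get(t - button_delay, "")
        if button_step is None and seen_earlier.startswith("red"):
            button_step = t
        # Once the green dot has arrived, steps 2-5 are rewritten as motion.
        if t == 6:
            memory[2] = "red dot starts moving"
            memory[3] = "red dot moving right"
            memory[4] = "dot turns green mid-path"
            memory[5] = "green dot moving right"
    return button_step, memory

button_step, memory = run_trial()
print("button pressed at step", button_step)
for t in STEPS:
    print(t, memory[t])
```

In this sketch the button press happens before the green dot appears, yet the final memory contains a color change at an earlier step, which is the pattern subjects report.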

I don't know if this is how it works, but I think the hypothesis is reasonable enough that anyone arguing against a ground truth must refute it. Unfortunately, Dennett never really gets there. He begins by rescuing the Stalinesque version (a necessary step to argue that Stalinesque and Orwellian are indistinguishable):

The defender of the Stalinesque alternative is not defeated by this, however. Actually, he insists, the subject responded to the red spot before he was conscious of it! The directions to the subject (to respond to a red spot) had somehow trickled down from consciousness into the editing room, which (unconsciously) initiated the button-push before sending the edited version [...] up to consciousness for "viewing." The subject's memory has played no tricks on him; he is reporting exactly what he was conscious of, except for his insistence that he consciously pushed the button after seeing the red spot; his "premature" button-push was unconsciously (or preconsciously) triggered.

He then observes that the subjects' verbal reports can't differentiate between both hypotheses, which does seem right, and finishes his argument from there:

Your retrospective verbal reports must be neutral with regard to two presumed possibilities, but might not the scientists find other data they could use? They could if there was a good reason to claim that some nonverbal behavior (overt or internal) was a good sign of consciousness. But this is just where the reasons run out. Both theorists agree that there is no behavioral reaction to a content that couldn't be a merely unconscious reaction — except for subsequent telling. On the Stalinesque model there is unconscious button-pushing (and why not?). Both theorists also agree that there could be a conscious experience that left no behavioral effects. On the Orwellian model there is momentary consciousness of a stationary red spot which leaves no trace on any later reaction (and why not?).

Both models can deftly account for all the data — not just the data we already have, but the data we can imagine getting in the future. They both account for the verbal reports: One theory says they are innocently mistaken, while the other says they are accurate reports of experienced mistakes.

Moreover, we can suppose, both theorists have exactly the same theory of what happens in your brain; they agree about just where and when in the brain the mistaken content enters the causal pathways; they just disagree about whether that location is to be deemed pre-experiential or post-experiential. [...]

The problem is that he just jumps to the conclusion in the first sentence of the last paragraph. Everything up to that point seems correct, but also consistent with the Orwellian/Backward Integration hypothesis... and then he just assumes the conclusion in the next sentence.

Perhaps you could argue that, if there is no observable difference, it stands to reason that there is no physical difference in the brain? But that doesn't seem right – the only reason there is no observable difference is that Dennett carefully engineered a process in which the observable difference is minimized. It's a bit like if you wrote a code snippet like this...

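from time import sleep

x = 7         # x briefly holds 7

sleep(0.001)  # one millisecond passes

x = 42        # the earlier value is overwritten

print(x)      # prints 42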

... and then declared that, because there is no detectable difference between [the case where x was first 7 then 42] and [the case where it was 42 all along], there is thus no fact of the matter about the question. But that would be false – the set of flip-flops in the processor that encode the value of x had different physical states in the two cases (and this difference must also leave a trace in the present; it's just too small to be detectable). Similarly, if Backward Integration is true, then subjects did first see the red dot, and this fact did leave a physical trace in the brain. It's just that the effect is minimized because the brain then immediately overwrote that memory, just as the Python program immediately overwrote the value of x.
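To make the analogy concrete, here is a minimal sketch of the same point. It is only an illustration: `TracedVariable`, `overwritten`, and `constant` are hypothetical names, and the `history` list is an assumed stand-in for the physical trace, not a model of anything in the brain. The two runs are indistinguishable if you only look at the final value, yet they differ in what actually happened.

```python
class TracedVariable:
    """Hypothetical variable that remembers every value ever assigned to it."""

    def __init__(self, value):
        self.value = value
        self.history = [value]  # assumed stand-in for the physical trace

    def assign(self, value):
        self.value = value
        self.history.append(value)


def overwritten():
    """x is first 7, then immediately overwritten with 42."""
    x = TracedVariable(7)
    x.assign(42)
    return x


def constant():
    """x is 42 all along."""
    return TracedVariable(42)


a, b = overwritten(), constant()
print(a.value == b.value)      # True: the final readout is identical
print(a.history == b.history)  # False: the past states differ
print(a.history, b.history)    # [7, 42] [42]
```

The final readout plays the role of the verbal report, which cannot tell the two cases apart; the history plays the role of a fact about the past that exists whether or not anyone can read it out.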

Anyway, if there is a genuine argument against the Orwellian hypothesis here, I don't see it. Regardless, Dennett certainly does a good job explaining what the Multiple Drafts Model says, and the model he proposes is reasonable. He just doesn't rule out the alternative.

The Rest

Which parts of the book did we not cover, and why?

- Language Generation. I briefly mentioned in section 2 that Dennett has an entire chapter on language generation, arguing for a distributed generation mechanism. The problem is that language generation happens unconsciously, which means the Cartesian Theater is probably not involved even if it exists. This fact renders the topic mostly irrelevant to the central conflict.

- The Evolution of Consciousness. This chapter also seems uncontroversial, but it's unlikely to be news to LessWrongians.

- Shape Rotation. Dennett briefly discusses people's apparent ability to rotate shapes in their heads. I mostly skipped this because my commentary is too similar to that on Blindsight.

- Philosophical Issues. The thought experiments discussed near the end of the book do put a neat bow on everything, but they're also mostly trivial given the model. Once you've accepted the Camp #1 approach, "What's it like to be a bat?" ceases to be a philosophically exciting question, and Mary's Room has already received [LW · GW] a much more thorough treatment than what's found in the book.

5. Closing Thoughts

So, should you read this book? Unless it's for catharsis/fun, I'd say probably not. Dennett isn't the best at treating opposing views fairly, so if you want to genuinely get into the topic, you can probably do better. Plus, between Charlie's review [LW · GW] and this one, we've probably covered most of the substance. But whether you read it or not, it's certainly a book to be aware of.

People familiar with the terminology would probably call this idea "substance dualism". Traditionally, other flavors of dualism don't necessarily assert such causal power. ↩︎

[...] our natural intuition is that the experience of the light or sound happens between the time the vibrations hit our sense organs and the time we manage to push the button signaling that experience. And it happens somewhere centrally, somewhere in the brain on the excited paths between the sense organ and the finger. It seems that if we could say exactly where, we could say exactly when the experience happened. And vice versa: if we could say exactly when it happened, we could say where in the brain conscious experience was located. Let's call the idea of such a centered locus in the brain Cartesian materialism, since it's the view you arrive at when you discard Descartes's dualism but fail to discard the imagery of a central (but material) Theater where "it all comes together." ↩︎

In the two camps post, I mentioned the difficulty of formulating a well-defined crux between Camp #1 and Camp #2 (i.e., a claim that everyone in one camp will agree with and everyone in the other will disagree with). Is "consciousness does not have a ground truth" a solution? Unfortunately, not quite – the problem is that you can be in Camp #1 and still think consciousness has a ground truth. (In fact, Global Workspace Theory is arguably in the "consciousness in the human brain has a ground truth but is not metaphysically special" bucket.) So the claim is too strong: it will get universal disagreement from Camp #2 but only partial agreement from Camp #1. But it's still one of the better ways to frame the debate. ↩︎

Camp #2 people usually start with the assumption that qualia must exist because they perceive them, and if someone wants to convince them otherwise, it's their job to find good arguments. Such arguments usually come down to whether or not one can be mistaken about perceiving qualia. The primary objection to the idea that qualia can be hallucinated is one TAG [LW · GW] made in a comment [LW(p) · GW(p)] on the two camps post:

Saying that qualia dont really exist, but only appear to, solves nothing. For one thing qualia are definitionally appearances , so you haven't eliminated them.

i.e., the appearance itself is the thing to explain, so calling it a hallucination doesn't solve the problem. This is the key argument Camp #1 people must overcome to make progress, but Dennett doesn't go there. He instead talks about the practical difficulty of believably simulating enough details to make it convincing and uses his conclusions as a setup for his later claims about neuroscience.

The question of how to judge phenomenology comes down to the same crux. Camp #2 will usually argue that it is unscientific to doubt the existence of qualia because they are directly perceived. If that's true, heterophenomenology is the wrong way to do it because it ignores this fact.

Accepting qualia as real still leaves a lot of room for human error, and you could even argue that most of heterophenomenology remains. Nonetheless, the difference is crucial. ↩︎

His case against (substance) dualism boils down to the following two arguments:

- The concept seems contradictory. The substance in question would simultaneously have to not interact with matter (otherwise, it'd be material) but also interact with matter (otherwise, it wouldn't do anything). He draws an analogy to a ghost who can go through walls but also pick up stuff when it wants to.

- Interaction would violate conservation of energy. The idea that physical energy is conserved is widely accepted, and an additional, non-material force would contradict this.

(& he also mentions theories of new kinds of material stuff but says they don't count as dualism).

It's pretty easy to get around both objections; one can simply hold that the non-material substance only interacts with matter through a few specific channels & that the interactions are all energy-neutral on net.

Having read both books, I think David Chalmers, despite being a Camp #2 person, has a much stronger case against substance dualism in his book The Conscious Mind (but I won't cover it here). ↩︎

People who refer to Dennett in discussions about consciousness tend to say stuff like,

- "I've read the book and immediately agreed with everything in it"; or

- "What other people said never made sense to me, then I read Dennett, and it all seemed obviously true"; or

- "I haven't read the entire book, but my views seem similar to those of Dennett".

But I've never seen anyone claim that the book changed their view (though I'm sure there's an exception somewhere). In fairness, people don't tend to change their minds a lot in general, especially not about this topic. ↩︎

sometimes also "Boundary Problem" or "Combination Problem" ↩︎

14 comments


comment by reconstellate · 2023-09-18T04:20:38.542Z · LW(p) · GW(p)

Thanks for the review! I remember your last post.

I'm definitely a Camp 2 person, though I have several Camp 1 beliefs. Consciousness pretty obviously has to be physical, and it seems likely that it's evolved. I'm in a perpetual state of aporia trying to reconcile this with Camp 2 intuitions. Treating my own directly-apprehensible experience as fictional worldbuilding seems nonsensical, as any outside evidence is going to be running through that experience, and without a root of trust in my own experiences there's no way out of Cartesian doubt.

Probably relevant is that I have very mild synesthesia, which was mentioned in the post as challenging to Dennett's arguments. Most concepts have a color and/or texture for me - for example, nitrogen is a glassy navy blue, the concept of "brittle" is smooth chrome lavender, the number 17 is green and a little rough. Unlike more classical synesthetes, this has never been overwhelming to me, but for as far back as I can remember I've associated textures and colors with most things. When drugs move around my synesthetic associations, it becomes very hard to think about or remember anything; it's like the items around my house have all been relocated and everything needs to be searched for from scratch. Having such a relationship with qualia, it's pretty inconceivable for me to deny their existence. For me, qualia are synonymous with the concept of "internal experiences", by which I mean "experiences when there is something it is like to have them".

Being unable to accept the premise that qualia don't exist, my stumbling block with Camp 1 explanations is that they never hit on why internal experience would come out of only some small subset of physical reactions. It seems very arbitrary! Information transfer is happening everywhere all at once! And the alternative to believing internal experience is the result of some arbitrary subset of process-space is panpsychism, which comes with countless problems of its own (namely, the combination problem and the fact that it's incompatible with consciousness as an evolved trait).

I would welcome any attempts to help me detangle this, it's been one of the more persistently frustrating issues I've thought about.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2023-09-18T18:46:42.211Z · LW(p) · GW(p)

I'm definitely a Camp 2 person, though I have several Camp 1 beliefs. Consciousness pretty obviously has to be physical, and it seems likely that it's evolved. I'm in a perpetual state of aporia trying to reconcile this with Camp 2 intuitions.

I wouldn't call those Camp #1 beliefs. It's true that virtually all of Camp #1 would agree with this, but plenty of Camp #2 does as well. Like, you can accept physicalism and be Camp #2, deny physicalism and be Camp #2, or accept physicalism and be Camp #1 -- those are basically the three options, and you seem to be in the first group. Especially based on your second-last paragraph, I think it's quite clear that you conceptually separate consciousness from the processes that exhibit it. I don't think you'll ever find a home with Camp #1 explanations.

I briefly mentioned in the post that the way Dennett frames the issue is a bit disingenuous since the Cartesian Theater has a bunch of associations that Camp #2 people don't have to hold.

Being a physicalist and Camp #2 of course leaves you with not having any satisfying answer for how consciousness works. That's just the state of things.

The synesthesia thing is super interesting. I'd love to know how strong the correlation is between having this condition, even if mild, and being Camp #2.

comment by TAG · 2023-09-18T12:04:29.559Z · LW(p) · GW(p)

Heterophenomenology immediately closes the door to Camp #2 style reasoning, which is all about leveraging the fact of experience as a phenomenon above and beyond our reports of it.

One can report that one's experiences are not fully describable in one's reports, and people often do. Heterophenomenology as an exclusive approach also implies refusing to introspect one's own consciousness, which seems highly question-begging to Camp 2. In the ears of Camp #2-ers, Camp #1 people say a lot of wacky stuff, and they usually don't realize how wacky they sound.

-It's obvious that conscious experience exists.

-Yes, it sure looks like the brain is doing a lot of non-parallel processing that involves several spatially distributed brain areas at once, so

-You mean, it looks from the outside. But I'm not just talking about the computational process, which I am not even aware of as such, I am talking about qualia, conscious experience.

-Define qualia

-Look at a sunset. The way it looks is a quale. Taste some chocolate. The way it tastes is a quale.

-Well, I got my experimental subject to look at a sunset and taste some chocolate, and wrote down their reports. What's that supposed to tell me?

-No, I mean you do it.

-OK, but I don't see how that proves the existence of non-material experience stuff!

-I didn't say it does!

-But you qualophiles are all the same -- you're all dualists and you all believe in zombies!

-Sigh....!

for Camp #1, the labels "consciousness" and "physical information processing in the brain" refer to the same thing;

That's neurologically false: most information processing in the brain is unconscious.

In the two camps post, I mentioned the difficulty of formulating a well-defined crux between Camp #1 and Camp #2 (i.e., a claim that everyone in one camp will agree with and everyone in the other will disagree with).

I would have thought it was "subjective evidence is evidence, too". Or the stronger form "all evidence is fundamentally subjective evidence, perceptions by subjects, and then a subset is counted as objective on basis of measurability, intersubjective agreement, etc"

Remember that the physicalist case against qualia is that qualia are not found on the physical map --which is presumed to be a complete description of the territory -- so they do not exist in the territory. The counterargument is that physics is based on incomplete evidence (see above) and is not even trying to model anything subjective.

Many theories agree that a Cartesian Theater exists, though they usually won't describe it as such. Conversely, the Multiple Drafts Model is what Dennett champions throughout the book.

OK. So what? If there isn't a CT, then... there isn't a single definitive set of qualia. That includes the idea that there are no qualia at all, but doesn't necessitate it. Most of CE just isn't relevant to qualophilia, providing you take a lightweight view of qualia.

What is all the fuss about? Dennett takes it that qualia have a conjunction of properties, and if any one is missing, so much for qualia.

"Some philosophers (e.g., Dennett 1987, 1991) use the term ‘qualia’ in a still more restricted way so that qualia are intrinsic properties of experiences that are also ineffable, nonphysical, and ‘given’ to their subjects incorrigibly (without the possibility of error). "

But what the fuss is about is materialism, versus dualism, etc. From that point of view, not all the properties of qualia are equal -- and, in fact, it is the ineffability/subjectivity that causes the problems, not the directness/certainty.

(I find it striking, BTW, that neither review quotes Dennett's anti-qualia argument.)

Camp #2 people usually start with the assumption that qualia must exist because they perceive them, and if someone wants to convince them otherwise, it's their job to find good arguments. Such arguments usually come down to whether or not one can be mistaken about perceiving qualia.

"Exist enough to need explaining". If you want to say they are illusions, you need to explain how the illusion is generated. "Enough to need explaining" is quite compatible with MD.

It's conceivable that a blindsight subject could be trained to volunteer guesses, thus narrowing the behavioral gap

But, speculation aside, blindsight, vision without qualia, is worse than normal vision. Also, synaesthesia can be an advantage in problem solving. So there are two phenomena which are only describable in terms of some kind of phenomenality or qualia -- and where qualia make a difference.

Mary's Room has already received a much more thorough treatment than what's found in the book.

That's a relative rather than absolute claim. The article has pushback from camp 2 [LW(p) · GW(p)]

Note that if you accept that Mary wouldn't be able to tell what red looks like, you have admitted that physicalism is wrong in one sense, i.e., it isn't a complete description of everything; and if you think the answer is that Mary needs to instantiate the brain state, then you imply that it is false in another sense, that there are fundamentally subjective states.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2023-09-18T16:54:23.461Z · LW(p) · GW(p)

That's a relative rather than absolute claim. The article has pushback from camp 2

Yeah -- I didn't mean to imply that orthonormal was or wasn't successful in dissolving the thought experiment, only that his case (plus that of some of the commenters who agreed with him) is stronger than what Dennett provides in the book.

comment by Nathaniel Monson (nathaniel-monson) · 2023-09-17T16:55:29.385Z · LW(p) · GW(p)

Do you have candidates for intermediate views? Many-drafts which seem convergent, or fuzzy Cartesian theatres? (Maybe graph-theoretically translating to nested subnetworks of neurons where we might say "this set is necessarily core, this larger set is semicore/core in frequent circumstances, this still larger set is usually un-core, but changeable, and outside this is nothing"?)

Replies from: TAG, sil-ver↑ comment by TAG · 2023-09-21T22:01:40.731Z · LW(p) · GW(p)

There's an argument that a distributed mind needs to have some sort of central executive, even if fuzzily defined, in order to make decisions about actions... just because it ultimately has one body to control... and it can't do contradictory things, and it can't rest in endless indecision.

Consider the Lamprey:

"How does the lamprey decide what to do? Within the lamprey basal ganglia lies a key structure called the striatum, which is the portion of the basal ganglia that receives most of the incoming signals from other parts of the brain. The striatum receives “bids” from other brain regions, each of which represents a specific action. A little piece of the lamprey’s brain is whispering “mate” to the striatum, while another piece is shouting “flee the predator” and so on. It would be a very bad idea for these movements to occur simultaneously – because a lamprey can’t do all of them at the same time – so to prevent simultaneous activation of many different movements, all these regions are held in check by powerful inhibitory connections from the basal ganglia. This means that the basal ganglia keep all behaviors in “off” mode by default. Only once a specific action’s bid has been selected do the basal ganglia turn off this inhibitory control, allowing the behavior to occur. You can think of the basal ganglia as a bouncer that chooses which behavior gets access to the muscles and turns away the rest. This fulfills the first key property of a selector: it must be able to pick one option and allow it access to the muscles."

(Scott Alexander)

But how can a selector make a decision on the basis of multiple drafts which are themselves equally weighted? If inaction is not an option, a coin needs to be flipped. Maybe it's flipped in the theatre, maybe it's cast in the homunculus, maybe there is no way of telling.

But you can tell it works that way because of things like the Necker Cube illusion... your brain, as they say, can switch between two interpretations, but can't hover in the middle.
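As a rough illustration of the selector described in the quoted passage, plus the coin flip for equally weighted drafts, here is a minimal sketch. Everything in it is an assumption made for illustration: `select_action` is a hypothetical name, bids are modeled as plain numbers, and ties are broken uniformly at random. It makes no claim about how the basal ganglia actually compute anything.

```python
import random


def select_action(bids, rng=None):
    """Pick exactly one action from a dict mapping action name -> bid strength.

    All actions are inhibited by default; only the winner is released.
    Ties are broken by a coin flip, on the assumption that inaction is
    not an option.
    """
    if not bids:
        raise ValueError("at least one bid is required")
    rng = rng or random.Random()
    strongest = max(bids.values())
    tied = [action for action, strength in bids.items() if strength == strongest]
    winner = rng.choice(tied)
    # Every action stays "off" except the single selected one.
    return {action: (action == winner) for action in bids}


gates = select_action({"mate": 0.2, "flee the predator": 0.9, "feed": 0.4})
print([action for action, released in gates.items() if released])
# ['flee the predator']

tie = select_action({"draft A": 0.5, "draft B": 0.5}, rng=random.Random(0))
print(sum(tie.values()))  # 1: exactly one draft wins, even when equally weighted
```

Note that nothing in the output reveals whether the winner was chosen by strength or by the coin, which is one way of reading the "no way of telling" remark above.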

↑ comment by Rafael Harth (sil-ver) · 2023-09-17T17:05:13.861Z · LW(p) · GW(p)

I think the philosophical component of the camps is binary, so intermediate views aren't possible. On the empirical side, the problem is that it's not clear what evidence for one side over the other looks like. You kind of need to solve this first to figure out where on the spectrum a physical theory falls.

Replies from: TAG↑ comment by TAG · 2023-09-20T14:28:57.946Z · LW(p) · GW(p)

The camps as you have defined them differ on what the explanation of consciousness is, and also on what the explanandum is. The latter is much more of a binary than the former. There are maybe 11 putative explanations of mind-body relationship, ranging from eliminativism to idealism, with maybe 5 versions of dualism in the middle. But there is a fairly clear distinction between the people who think consciousness is exemplified by what they, as a subject, are/have; and the people who think consciousness is a set of capacities and functions exemplified by other entities.

Looking at it that way, it's difficult to see what your argument for camp 1 is. You don't seem to believe you personally are an experienceless zombie, and you also don't seem to think that camp 2 are making a semantic error in defining consciousness subjectively. And you can't argue that camp 1 have the right definition of consciousness because they have the right ontology, since a) they don't have a single ontology and b) the right ontology depends on the right explanation, which depends on the right definition.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2023-09-20T15:32:28.425Z · LW(p) · GW(p)

I fully agree with your first paragraph, but I'm confused by the second. Where am I making an argument for camp #1?

comment by Noosphere89 (sharmake-farah) · 2025-01-20T23:49:58.075Z · LW(p) · GW(p)

For this review, I'd probably give it a +4, mostly for summarizing the book well, but also because Daniel Dennett made some very useful, productive mistakes and identified a very important property that has to be explained no matter what theory you choose. I'll describe it here.

The important property is that the brain is distributed, and this matters.

For the most likely theory by far on how consciousness actually works in the general case, see my review of Anil Seth's theory. The summary is that Anil Seth's theory broadly solves the hard problem by reducing consciousness into specific components and focusing on the specific properties instead of the general cloud of properties that consciousness invokes; the cases where it's wrong aren't central, and are generalizable/patchable for more situations.

More here:

https://www.lesswrong.com/posts/FQhtpHFiPacG3KrvD/seth-explains-consciousness#7ncCBPLcCwpRYdXuG [LW(p) · GW(p)]

I basically agree with Global Workspace Theory as an explanation of why consciousness has the weird properties it does in humans, beyond the fact that we are conscious.

But Anil Seth's model isn't the focus, Daniel Dennett's model is, so I'll do that here.

On section 1, Rafael Harth argues that Dennett is making a distinction between two models of consciousness. The difference comes down to whether consciousness is more like a Cartesian Theater, where there's a single unitary actor, or like the Multiple Drafts Model, where consciousness is essentially a draft we always rewrite throughout our lives, there's no ground truth to the matter, and the inputs go through a distributed processing system. Dennett favors the latter model of consciousness.

On section 2, the review shifts to a discussion of Heterophenomenology, where the premise is that consciousness debates should be treated like fictional works, where things are always in principle epistemically questionable (except for stuff that is impossible by pure logic), and Rafael Harth notes that this seemingly closes the door to Camp #2 style reasoning, though TAG has a counter argument here:

https://www.lesswrong.com/posts/naEDcbicBBykiTFwi/book-review-consciousness-explained-as-the-great-catalyst#N4JhNNqLupbX8maRx [LW(p) · GW(p)]

Also, it talks about how a distributed model is better than a unitary model, and how the Multiple Drafts model can solve quite a number of philosophical problems.

For more on distributed decisions, read here:

https://www.lesswrong.com/posts/32sm7diYTky5KhF6w/distributed-decisions [LW · GW]

On section 3, I agree with Rafael Harth that Dennett doesn't really justify his ideas well. I found the quote below especially alarming in how badly he failed theory of mind for the intellectual views he disagrees with. While I myself believe the Cartesian theater doesn't exist, and tend to agree way more with Camp 1 than with Camp 2, the quote is still indefensibly bad, so I'll reproduce it:

It is time to recognize the idea of the possibility of zombies for what it is: not a serious philosophical idea but a preposterous and ignoble relic of ancient prejudices. Maybe women aren't really conscious! Maybe Jews! What pernicious nonsense.

I have to agree with this explanation for why he doesn't do well justifying consciousness:

At various points in the book, Dennett has a back-and-forth with a fictional character named Otto, who is introduced to represent the opposing view. These dialogues may have been the time to address Camp #2, but Otto is stuck in a strange limbo between both camps that offers little fuel for resistance. He's condemned to keep opposing Dennett but never disputes heterophenomenology, so his resistance is bound to fail. He will usually reference some internal state, which Dennett will inevitably explain away, leaving Otto bruised and defeated.

For Section 4, we get treated to a few different topics.

One of them is about the distributed nature of the brain, but also about how a unified consciousness can emerge from this approach, if at all.

My own take is that I think 1 is partially true, in the sense that there is a way to synchronize consciousness into an approximately unified state, but that 2 is false, in that the unitary consciousness is not conceptually distinct from the parts that give rise to consciousness.

The binding problem is essentially solvable, but a lot of the specialness of the problem gets removed, IMO, because consciousness is at best approximately unified and never perfectly unified. The reason we perceive our consciousness as unitary is that the human body is small enough, and latency is optimized enough, that you can almost immediately get conscious awareness of something: there are hard latency constraints from nature, and if you fail, you get eaten (BTW, this also explains why humans use so much energy, since latency needs to be optimized hard, and thus you have to go deep into unfavorable territory to use a brain in an external environment):

https://www.lesswrong.com/posts/zTDkhm6yFq6edhZ7L/inference-cost-limits-the-impact-of-ever-larger-models#ZHdTBrezWFagrAbkF [LW(p) · GW(p)]

On Blindsight, Harth realizes that Dennett's arguments are unfortunately not very falsifiable, and the natural strengthening of the claim is basically false as an empirical matter, which is a problem here.

On the Color Phi phenomenon, Harth is correct to question Dennett's certainty. This is a problem that the book as a whole shares: while the models it's arguing against are pretty cartoonishly wrong, it's too certain of its own conclusions.

Overall, I view Dennett as someone who made productive mistakes on the way to a better theory of consciousness, but who was also too certain of himself in retrospect. That, combined with the bad theory of mind, makes me agree with Harth that the book should not be read, and that the reviews should be read instead.

However, the productive mistakes were productive enough, and the review is good enough at its job, that I do have to give it a +4 here.

comment by Caerulea-Lawrence (humm1lity) · 2023-09-17T17:28:22.619Z · LW(p) · GW(p)

Hi Rafael Harth,

I did remember reading, Why it's so hard to talk about Consciousness [LW · GW], and shrinking back from the conflict that you wrote as an example of how the two camps usually interact.

Reading this review was interesting. I do feel like I wouldn't necessarily want to read the book, but reading this was worth it. I also drew a parallel between the contentious point about consciousness, and the Typical Mind/psych Fallacy (Generalizing from One Example [LW · GW] - by Scott Alexander here on LW).

One connection I see is that, similar to the Color Phi phenomenon (I only see two dots), people seem to have different kinds of abilities or skills - but I don't know of anyone having 'All' of them. Other skills involving sight that I know of are the out-of-body experiences of NDEs (near-death experiences), seeing 'remnants/ghosts', and also the ability to see some sort of 'light' when tracking, as seen by 'modern' animal communicators and tribalist hunters (30:08 and 31:15).

Since we seem to be unaware of the different sets of skills a human might possess, how they can be used, and how different they are 'processed', it kind of seems like Camp 1 and Camp 2 are fighting over a Typical Mind Fallacy - that one's experience is generalized to others, and this view seen as the only one possible.

Personally, I seem to fit into the Camp 2, but at the same time, I don't disagree with Camp 1. I mean, if both people in your example [LW · GW] are trustworthy, the only reasonable thing to me seems to believe that both of them are right about something.

If there is some Typical Mind Fallacy at play, there should be some underlying skills/mechanisms that those in each group have/have developed, each giving them certain advantages, possibilities or simply different perceptions.

On a side-note, for those of us interested in that, I wonder if others thought these two camps align well with Sensors and Intuitives in Myers-Briggs?

Thanks for writing this.

Kindly,

Caerulea-Lawrence

↑ comment by Rafael Harth (sil-ver) · 2023-09-17T18:02:38.875Z · LW(p) · GW(p)

I did remember reading, Why it's so hard to talk about Consciousness, and shrinking back from the conflict that you wrote as an example of how the two camps usually interact.

Thanks for saying that. Yeah hmm I could have definitely opened the post in a more professional/descriptive/less jokey way.

Since we seem to be unaware of the different sets of skills a human might possess, how they can be used, and how different they are 'processed', it kind of seems like Camp 1 and Camp 2 are fighting over a Typical Mind Fallacy - that one's experience is generalized to others, and this view seen as the only one possible.

I tend to think the camps are about philosophical interpretations and not different experiences [LW(p) · GW(p)], but it's hard to know for sure. I'd be skeptical about correlations with MBTI for that reason, though it would be cool.

(I only see two dots)

At this point, I've heard this from so many people that I'm beginning to wonder if the phenomenon perhaps simply doesn't exist. Or I guess maybe the site doesn't do it right.

Replies from: humm1lity↑ comment by Caerulea-Lawrence (humm1lity) · 2023-09-18T18:38:56.582Z · LW(p) · GW(p)

Thanks for the answer,

Still, I know I read somewhere about intuitives using a lot of their energy on reflection, and so I gathered that that kind of dual-action might explain part of the reason why someone would talk about 'qualia' as something you are 'aware' of as it happens.

I mean, if most of one's energy is focused on a process not directly visible/tangible to one's 'sense perception', I don't see why people wouldn't feel that there was something separate from their sense perception alone. Whereas with sensors, and it being flipped, it seems more reasonable to assume that since the direct sense perceptions are heavily focused on, the 'invisible/intangible' process gets more easily disregarded.

The thing is, there have been instances where I didn't 'feel' my usual way around qualia. In situations that were very physically demanding, repetitive or very painful/dangerous, I was definitely 'awake', but I just 'was'. These situations are pretty far between, so it isn't something that I'm that familiar with - but I'm pretty certain that if I was in that space most of the time, and probably learned how to maneuver things there better - I would no longer talk about being in Camp 2, but in Camp 1.

I would be very surprised if I could see the color Phi phenomenon, as I just think that I would have noticed it already. But, as with many such things, maybe it simply is hidden in plain sight?

comment by dirk (abandon) · 2025-01-21T03:10:35.221Z · LW(p) · GW(p)

You can try it here, although the website warns that it doesn't work for everyone, and I personally couldn't for the life of me see any movement.

Thanks for the link! I can only see two dot-positions, but if I turn the inter-dot speed up and randomize the direction it feels as though the red dot is moving toward the blue dot (which in turn feels as though it's continuing in the same direction to a lesser extent). It almost feels like seeing illusory contours but for motion; fascinating experience!