Seth Explains Consciousness

post by Jacob Falkovich (Jacobian) · 2023-08-22T18:06:42.653Z · 130 comments

This is a link post for https://putanumonit.com/2023/08/19/seth-explains-consciousness/

Contents

- The Real Problem
- Seeing a Strawberry
- Cybernetic Organism
- Self Image
  - Body Ownership
  - First-person Perspective
  - Narrative Self
  - Free Will
- Being a Map

The Real Problem

For as long as there have been philosophers, they have loved philosophizing about what life really is. Plato focused on nutrition and reproduction as the core features of living organisms. Aristotle claimed that it was ultimately about resisting perturbations. In the East the focus was less on function and more on essence: the Chinese posited ethereal fractions of qi as the animating force, similar to the Sanskrit prana or the Hebrew neshama. This lively debate kept rolling for 2,500 years (élan vital is a 20th century coinage), accompanied by the sense of an enduring mystery, a fundamental inscrutability about life that will not yield.

And then, suddenly, this debate dissipated. This wasn’t caused by a philosophical breakthrough, by some clever argument or incisive definition that satisfied all sides and deflected all counters. It was the slow accumulation of biological science that broke “Life” down into digestible components, from the biochemistry of living bodies to the thermodynamics of metabolism to genetics. People may still quibble about how to classify a virus that possesses some but not all of life’s properties, but these semantic arguments aren’t the main concern of biologists. Even among the general public who can’t tell a phospholipid from a possum there’s no longer a sense that there’s some impenetrable mystery regarding how life can arise from mere matter.

In Being You, Anil Seth is doing the same to the mystery of consciousness. Philosophers of consciousness have committed the same sins as “philosophers of life” before them: they have mistaken their own confusion for a fundamental mystery, and, as with élan vital, they smuggled in foreign substances to cover the gaps. Here the substance is René Descartes’ res cogitans, a mental substance that is separate from the material.

This Cartesian dualism in various disguises is at the heart of most “paradoxes” of consciousness. P-zombies are beings materially identical to humans but lacking this special res cogitans sauce, and their conceivability requires accepting substance dualism. The famous “hard problem of consciousness” asks how a “rich inner life” (i.e., res cogitans) can arise from mere “physical processing” and claims that no study of the physical could ever give a satisfying answer.

Being You by Anil Seth answers these philosophical paradoxes by refusing to engage in any more than the minimum required philosophizing. Seth’s approach is to study this “rich inner life” directly, as an object of science, instead of musing about its impossibility. After all, phenomenological experience is what’s directly available to any of us to observe.

As with life, consciousness can be broken into multiple components and aspects that can be explained, predicted, and controlled. If we can do all three we can claim a true understanding of each. And after we’ve achieved it, this understanding of what Seth calls “the real problem of consciousness” directly answers or simply dissolves enduring philosophical conundrums such as:

- What is it like to be a bat?

- How can I have free will in a deterministic universe?

- Why am I me and not Britney Spears?

- Is “the dress” white and gold or blue and black?

Or at least, these conundrums feel resolved to me. Your experience may vary, which is also one of the key insights about experience that Being You imparts.

The original photograph of “the dress”

Seeing a Strawberry

On a plate in front of you is a strawberry. Inside your skull is a brain, a collection of neurons that have direct access only to the electrochemical state of other neurons, not to strawberries. How does the strawberry out there create the perception of redness in the brain?

In the common view of perception, red light from the strawberry hits the red-sensitive cones in your retina. These cones are wired into other neurons that detect edges, then shapes, and finally these are combined into an image of a red strawberry. This view is intuitively appealing: when we see a strawberry we perceive that a strawberry is right there, and the extant strawberry intuitively seems to be the sole and sufficient cause of our perception of redness.

But if we study closely any element of this perception, we find the common sense intuition immediately challenged.

You may see a strawberry up close or far away, at different angles, partially obscured, in dim light, etc. The perception of it as red, roughly conical, and about an inch across doesn’t change even though the light hitting your retina is completely different in each case: different parts of your visual field, different wavelengths, and so on. In fact, you will perceive a red strawberry in the absence of any red light at all, as in the following image, which contains nary a single red-hued pixel:

You can zoom in to check: the red-seeming pixels are all gray, with R < G, B (the red channel lower than both green and blue).

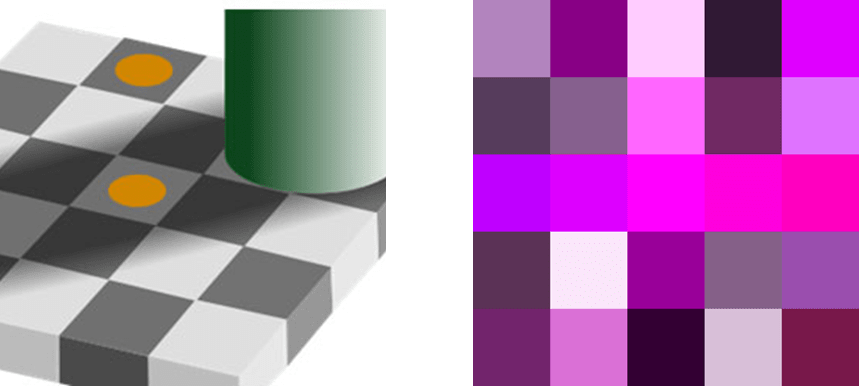

You (well, some of you) can simply visualize a red strawberry with your eyes closed, or in a dream, or on acid. People can perceive redness through grapheme-color synaesthesia, including people who have been blind for decades. You easily perceive magenta, a color which has no associated wavelength at all, while the same exact wavelengths coming from different parts of the same image can produce perceptions of entirely different shades, as in Adelson’s checker shadow illusion. Wherever the perception of color is coming from, it is certainly not the mere bottom-up decoding of wavelengths of light.

And again, this redness is somehow perceived by a collection of 86 billion neurons, none of which come labeled “red” or “strawberry” or even “part of the visual system”. They just are. To understand seeing a strawberry we need to ask: how could you derive strawberries if all you had access to are the states of 86 billion simple variables and no prior idea of the connection between them?

After observing patterns of neurons firing for a while, you will notice that the states of some neurons are entirely determined by the states of others. Others appear more independent, with states that can’t be conclusively derived from the state of the rest of the brain. These independent neurons have non-random patterns — you may notice that some of them statistically tend to fire together, for example. You could infer that there are hidden causes outside the brain that affect these, and consider the state of independent neurons to be a sensory input effected by these hidden causes. To make sense of your senses, you must model this hidden world external to the brain.
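To make that inference step concrete, here is a toy simulation. It is not from the book: the three binary "sensory neurons", their firing probabilities, and the use of plain Pearson correlation as the co-firing detector are all assumptions chosen for illustration. Two neurons are driven by one shared hidden cause and the third by an unrelated one; the code only ever looks at the firing records, yet the correlations point to the shared cause.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def fires(cause_present, rng, hit_rate=0.9, noise_rate=0.05):
    """One time step of a hypothetical sensory neuron: it usually fires when its
    hidden cause is present, and occasionally fires spontaneously."""
    return int((cause_present and rng.random() < hit_rate) or rng.random() < noise_rate)


def simulate(steps=5000, seed=0):
    """Record three neurons; a and b share a hidden cause, c has its own."""
    rng = random.Random(seed)
    a, b, c = [], [], []
    for _ in range(steps):
        shared = rng.random() < 0.3     # e.g. a red surface in view (never observed directly)
        unrelated = rng.random() < 0.3  # some other, independent cause
        a.append(fires(shared, rng))
        b.append(fires(shared, rng))
        c.append(fires(unrelated, rng))
    return a, b, c


a, b, c = simulate()
print(f"corr(a, b) = {pearson(a, b):.2f}")  # strong: hints at a common hidden cause
print(f"corr(a, c) = {pearson(a, c):.2f}")  # near zero: no shared cause needed
```

A real brain has no tidy list of three neurons to compare, of course; the point is only that statistical regularities in the inputs are enough to posit causes outside them.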

Perhaps these sensory neurons that fired together are red-sensing cones in your retina. Their congruence implies that the hidden cause of their firing (let’s call it “colored light”, although it isn’t labeled as such in the brain itself) comes from continuous “surfaces” that reflect a similar hue throughout. This isn’t a given, since “color” could be distributed randomly pixel by pixel throughout space, but it’s a reasonable inference from the observed states of the neurons. Your model doesn’t contain the labels “colored light” and “surface” but it does contain objects with the property of stably and homogeneously colored surfaces.

You also notice that if some blue-sensing cones suddenly activate (perhaps you went from a warmly illuminated room to stand under a blue sky) this dampens the activation of the red-sensing ones elsewhere. Thus, the best model that predicts the state of all your retinal neurons is that surfaces have a fixed property that affects the relative activation of your cones. “Red” is your model of a property of a surface that activates red-sensing cones if illuminated by warm light but will activate all cones similarly (as a gray surface would normally) if the illuminant is cool. This explains the red-appearing gray strawberries in the greenish image above, and the general property of “discounting the illuminant” in human vision.
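“Discounting the illuminant” can be sketched as a von Kries-style correction, a classic textbook model of color constancy, though not necessarily the mechanism Seth describes. The sketch assumes each cone response is simply surface reflectance times light intensity in that channel, and that the brain already has an estimate of the illuminant; all reflectance and light values are made up.

```python
import math

# Channels are (L, M, S) cones, loosely the "red", "green" and "blue" sensors.
# Toy assumption: response = surface reflectance x illuminant, per channel.


def cone_response(reflectance, illuminant):
    """Raw cone activations for a surface under a given light."""
    return tuple(r * i for r, i in zip(reflectance, illuminant))


def discount_illuminant(response, illuminant_estimate):
    """Von Kries-style correction: divide each channel by the estimated light."""
    return tuple(x / e for x, e in zip(response, illuminant_estimate))


strawberry = (0.8, 0.2, 0.1)  # hypothetical reflectance: mostly long wavelengths
warm_light = (1.0, 0.8, 0.5)  # hypothetical warm indoor light
cool_light = (0.5, 0.8, 1.0)  # hypothetical bluish daylight

raw_warm = cone_response(strawberry, warm_light)
raw_cool = cone_response(strawberry, cool_light)
seen_warm = discount_illuminant(raw_warm, warm_light)
seen_cool = discount_illuminant(raw_cool, cool_light)

print(raw_warm, raw_cool)  # the raw retinal signals differ a lot
print(all(math.isclose(w, c) for w, c in zip(seen_warm, seen_cool)))  # True: same inferred surface

# The same correction turns a grayish pixel red: assume a cyan-tinted light and
# a pixel whose red channel is slightly below green and blue.
cyan_light_estimate = (0.4, 1.0, 1.0)
grayish_pixel = (0.4, 0.5, 0.5)
print(discount_illuminant(grayish_pixel, cyan_light_estimate))  # (1.0, 0.5, 0.5): red-dominant
```

The hard part a real visual system faces, estimating the illuminant in the first place, is assumed away here.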

We have also solved the mystery of “the dress”: if you perceive it as white and gold it’s because your brain models it as being illuminated by a blue light — perhaps you trained it by shopping for clothes often in outdoor bazaars. If you see it as blue and black you must imagine it in a warmly lit indoor space. Spend more time outdoors and you may start to perceive it differently!

The important point here is that “redness” is a property of your brain’s best model for predicting the states of certain neurons. Redness is not “objective” in the sense of being “in the object”; it only exists in the models generated by the sort of brains which are hooked up to eyes. It feels objective because the vast majority of people will share your assessment (color blindness affects roughly 8% of men and far fewer women), as opposed to ~50% agreement on the dress, but both perceptions have the same status of being generated by your brain. We intuitively separate “objective” properties of a strawberry (red, real, occupying a volume) from “subjective” ones (good in salads, pretty, evocative of spring) but all of these are properties of your brain’s predictive model of strawberries, they’re not out there to be perceived in a brain-independent way.

These models are “predictive” in the important sense that they represent not just how things are at the moment but also anticipate how your sensory inputs would change under various conditions and as a consequence of your own actions. Thus:

- Red = would create a perception of a warmer color relative to the illuminant even if the illumination changes.

- Good in salads = would create perceptions of deliciousness and positive affect if consumed alongside arugula and goat cheese.

- Real = would look like a strawberry from a different angle if you walked around it, and would generate perceptions of solidity and weight if you picked it up. An image of a strawberry on a screen generates almost the same visual input as a physical strawberry, but you perceive it as very different (unreal) because you predict different consequences to trying to grab it.

This, in broad strokes, is the predictive processing theory of perception. But Being You doesn’t just lay out the how of predictive processing, it also answers the how come and the so what.

Cybernetic Organism

Why is our brain “trying to predict its sensory inputs”? What does it even mean for it to have a goal?

Seth draws the insightful comparison to cybernetic systems, systems that control some variable of interest using feedback loops. These range in complexity from a thermostat that turns a heater on and off to regulate temperature in a room to an ecosystem where plant and animal populations are balanced through complicated interactions. A conspicuous feature of cybernetic systems is that they usually appear to have a “purpose”, like a self-guided missile aiming at a target or your thermostat aiming at a temperature goal.

The Good Regulator Theorem highlights the importance of appropriately matching the complexity of a regulator system to the complexity of the environment it operates in. By understanding this principle, scientists and engineers can develop more robust and efficient control systems that can effectively regulate and adapt to dynamic environments.

An important insight about cybernetic systems was formulated by William Ashby and Roger Conant, stating that “every good regulator of a system must be (or contain) a model of that system”. A thermostat that has access only to a thermometer will do a worse job at regulating a room’s temperature than one that has access to weather forecasts, the properties of the room and the heater, and its own tolerances and inaccuracies. The more mutual information there is between the regulator and its environment, the better it can exert control over it.
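The thermostat comparison can be made concrete with a small simulation. Everything here is an assumption for illustration: the one-line room model, the constants, the random weather, and a perfect forecast. The reactive controller sees only the thermometer; the model-based one also knows the outside temperature and how fast the room leaks heat, so it can cancel the predicted drift before it shows up as an error.

```python
import random

TARGET = 20.0  # desired room temperature (deg C)
LEAK = 0.1     # hypothetical fraction of the indoor/outdoor gap lost per step


def run(controller, steps=200, seed=1):
    """Mean absolute deviation from TARGET under a toy room model:
        next_temp = temp + LEAK * (outside - temp) + heat
    Negative `heat` means cooling."""
    rng = random.Random(seed)
    temp, total_error = TARGET, 0.0
    for _ in range(steps):
        outside = 5.0 + rng.uniform(-10.0, 10.0)  # fluctuating weather
        heat = controller(temp, outside)
        temp = temp + LEAK * (outside - temp) + heat
        total_error += abs(temp - TARGET)
    return total_error / steps


def reactive(temp, outside):
    """Thermometer only: pushes back in proportion to the current error."""
    return 0.5 * (TARGET - temp)


def model_based(temp, outside):
    """Thermometer plus a model of the room and a (perfect) weather forecast:
    corrects the current error and pre-empts the predicted heat leak."""
    predicted_drift = LEAK * (outside - temp)
    return (TARGET - temp) - predicted_drift


print(f"reactive:    {run(reactive):.2f}")
print(f"model-based: {run(model_based):.2f}")
```

With these made-up numbers the reactive thermostat settles a couple of degrees below target, while the model-based one stays on it. Imperfect forecasts and limited heater power would shrink the gap, but the direction matches the Conant and Ashby point: the regulator that contains a model of its system controls it better.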

Your brain is a cybernetic regulator controlling your body into the state of being alive.

The Terminator, a cybernetic organism of a more metal variety

This is a consequence of evolution and, more broadly, thermodynamics. Being alive is a very low entropy state — your body temperature is in a narrow range around 98.6°F, your organs are neatly arranged — in a high entropy world that inexorably tries to dissolve you into room-temperature mush. You can’t persist long in your special state of living by being passive, you have to actively regulate every aspect of yourself and your environment that impacts your vitals. You have to take action. As Ashby and Conant said, to regulate yourself and your environment you must comprehensively model both, and in particular the consequences of your actions on both.

Thus, while all of your perceptions are subjective in the sense that they are features of your mind’s map and not of any independent territory, they are not arbitrary or subject to your whims. The core of your model is an unmodifiable prediction, a fixed “hyperprior”, of remaining alive. Its subjective flavor is the base inchoate sense of simply being a living organism, and the survival instinct that overrides all other perceptions when the prediction of staying alive is threatened with disconfirmation. One level above the prediction of just living are predictions of your body’s vitals, from its basic integrity to control of variables like heart rate or blood sugar and oxygenation. Your most important and vivid perceptions — embodiment, pain, emotions, moods — are interoceptive experiences that have more to do with your body than with the world outside.

Finally, exteroceptive sensations are your model of the outside world, primarily as you can act upon it to impact your body. The strawberry comes with an immediate perception of edibility along with redness. This is because eating stuff is an action potentially available to you, and discriminating between edible and inedible things is vital to staying vital.

Self Image

To summarize so far: the contents of your consciousness, your perceptions, are features of a best-guess model concocted by your brain to predict its own present and future states. It’s driven by an overriding prediction of staying alive, which impacts your brain through a rich and vivid channel of interoceptive sensation.

While it’s easy to see how perceptions like redness, hunger, and edibility fit into this picture, it may not seem immediately applicable to more complex conscious experiences that have to do with your selfhood. Selfhood is the subject of the biggest section of the book, which disentangles it into components such as ownership of a body, a first-person view, a continuous narrative history, a sense of volition, and more. It details how all these are explained through the lens of a generative model keeping your body alive, and also the clever experimental setups used by Seth and his fellow scientists to understand each one (and mess with it at will). I can’t do this section full justice in a brief review, but we can take a whirlwind tour of what it means not just to be but to be you.

Body Ownership

To a collection of neurons locked inside a skull, the rest of the body is as much “out there” as any other object. And yet, it doesn’t feel that way: you have a strong sense of the exact extent of your body and police this boundary rigorously. You apply this even to something like saliva, which turns from a normal part of your body into a yucky foreign substance the moment it crosses an invisible line somewhere in the vicinity of your teeth.

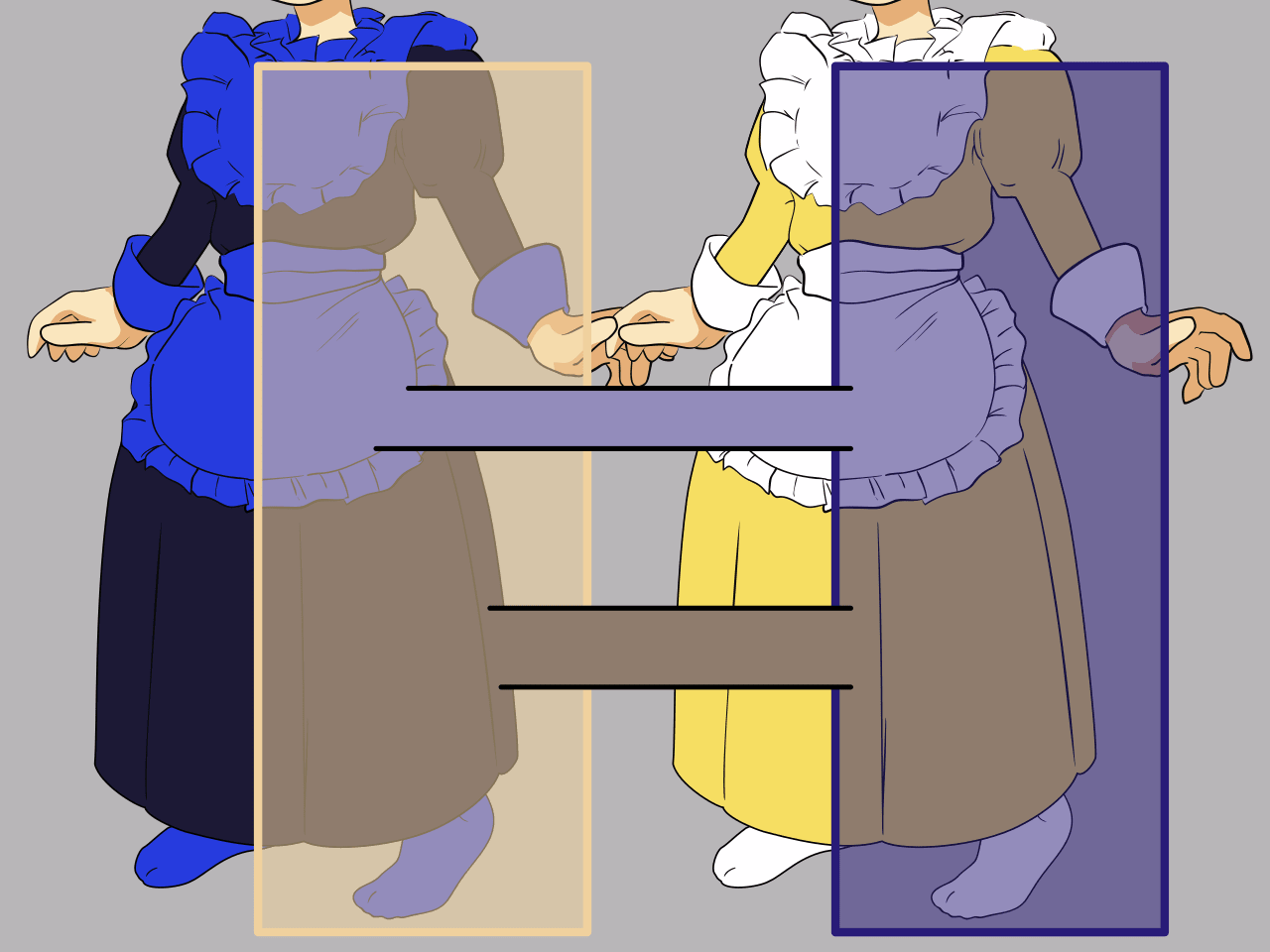

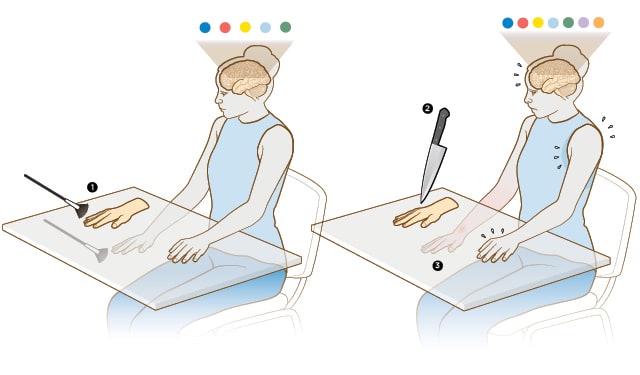

The main determinant of what feels like your body is that your body elicits interoceptive sensations that match external ones. This can be demonstrated by the rubber hand illusion: a detached rubber hand feels like a mere object if you just look at it, but if it is stroked with a brush at the same time as your real hand, the consilience between seeing and feeling the strokes creates a strong feeling that it is part of you. You will instinctively flinch in horror if “your” rubber hand is suddenly threatened.

Illustration of the rubber hand illusion experiment from The Scientist magazine

Your map of the world is chiefly a model of the sensory consequences of your actions, and these sensory consequences are what distinguishes your body from everything else.
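The synchrony cue behind the rubber hand illusion can be caricatured in a few lines. This is only a toy: the matching window, the threshold, and the stroke timings are arbitrary assumptions, and real ownership judgments integrate far more than brush timing. An object gets claimed as "mine" when most of the strokes you see on it are matched by strokes you feel.

```python
def synchrony(seen_times, felt_times, window=0.3):
    """Fraction of seen brush strokes matched by a felt stroke within `window` seconds."""
    if not seen_times:
        return 0.0
    matched = sum(
        1 for s in seen_times if any(abs(s - f) <= window for f in felt_times)
    )
    return matched / len(seen_times)


def feels_like_mine(seen_times, felt_times, threshold=0.8):
    """Toy ownership judgment: claim the object if vision and touch agree."""
    return synchrony(seen_times, felt_times) >= threshold


felt = [0.0, 1.1, 2.0, 3.2, 4.1]            # strokes on your hidden real hand (seconds)
seen_sync = [0.05, 1.15, 2.02, 3.25, 4.1]   # rubber hand stroked in time
seen_async = [0.5, 1.6, 2.6, 3.7, 4.7]      # rubber hand stroked out of phase

print(feels_like_mine(seen_sync, felt))   # True
print(feels_like_mine(seen_async, felt))  # False
```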

First-person Perspective

The experience of observing the world from a single point somewhere between your eyes and slightly behind your forehead comes from your generative model of vision. As you move around the world, this first-person POV is the prediction that surfaces will be visible if they are facing that point with no obstruction, and not visible otherwise.

Illustration from Steven Lehar’s “The Boundaries of Human Knowledge” demonstrating that although you model objects as occupying volume, you are also aware that you are only seeing a 2D surface that faces a single point

But as we’ve seen, visual-based perceptions aren’t as fundamental and stable as embodied ones. In a 2007 experiment, subjects wearing a VR headset saw a real-time video of their own body filmed from a few feet behind their back. When they were stroked with a brush and saw this happening synchronously in the video feed, they reported feeling that the “virtual” body standing a few feet in front of them was in fact theirs and that they were observing it from a third-person POV.

Of course, people have forever reported having “out of body” experiences. These experiences are almost certainly genuine, even if explaining them via demonic possession or astral projection is clearly bunk. In a survey I ran myself, 27% of respondents said that they see themselves in the third person when visualizing entering a familiar room. Coincidentally, this is similar to the percentage of people who apply makeup daily, an activity that involves looking at yourself in the third person (through a mirror) for some time while stroking yourself with a brush.

Narrative Self

An important component of selfhood is having a narrative about yourself, your continuous life history and the type of person you are. This self-story is not strictly necessary for a lot of normal functioning; Being You recounts the story of a music producer suffering from total amnesia who lives permanently with no memory beyond the last few seconds. And yet he is able to play the piano perfectly and even rekindle love with his wife.

What is your narrative self predicting and controlling? Most likely: your social self, how other people perceive you, predict you, and treat you. This is of course of vital importance to social creatures like us! Hanson and Simler’s The Elephant in the Brain meticulously demonstrates that our story of who we are and why we do the things we do often has little to do with our real motives and a lot to do with securing assistance from others and avoiding punishment.

Free Will

For many people, the aspect of selfhood they cling to most tightly is their volition, the feeling of being the originator and director of their own actions. And if philosophically inclined, they may worry about reconciling this volition with a deterministic universe. Are you truly exercising free will or merely following the laws of physics?

This question betrays that same dualistic map-territory confusion that asks how the material redness of a strawberry could cause the phenomenological redness in your mind. Redness, free will, belief in deterministic physics — these are all features of your generative model. There is no “spooky free will” that violates the laws of physics in our common model of them, but the experience of free will certainly exists and is informative.

Imagine that you are making a cup of tea. When did it feel like you exercised free will? Likely more so at the start of the process, when you observed that all tea-making tools are available to you and contemplated alternatives like coffee or wine. Once you’re deep in the process of making the cup the subsequent actions feel less volitional, executed on autopilot if making tea is a regular habit of yours. What is particular about that moment of initiation?

One particularity is the perception that you are able to predict and control many degrees of freedom: observe and move several objects around, react in complex ways to setbacks, etc. This separates free will from actions that feel “forced” by the configuration of the world outside, like slipping on a wet surface, and reinforces the sensation that free will comes from within.

The experience of volition is also a useful flag for guiding future behavior. If the universe were arranged again in the same exact configuration as when you made tea, you would always end up making tea. But the universe (in particular, the state of your brain) never repeats. The feeling of “I could have done otherwise” is the experience of paying attention to the consequences of your action so that in a subjectively similar but not perfectly identical situation you could act differently if the consequences were not as you predicted. If the tea didn’t satisfy as expected, the experience of free will you had when you made it shall guide you the next day to the cold beer you should have drunk instead.

Being a Map

Being You is a science book, covering the results of research in various fields and presenting a comprehensive model of what your phenomenology is and how it works. But I suspect that it’s impossible to read it without it actually changing what being you is like: if all you are is a generative model of the world, then enhancing this model with new insights will surely affect it.

For one, the decoupling of conscious experience from deterministic external causes implies that there’s truly no such thing as a “universal experience”. Our experiences are shared by virtue of being born with similar brains wired to similar senses and observing a similar world of things and people, but each of us infers a generative model all of our own. For every perception it discusses, Being You also notes the condition of having a different one, from color blindness to somatoparaphrenia, the experience that one of your limbs belongs to someone else. The typical mind fallacy goes much deeper than mere differences in politics or abstract beliefs.

The subjective nature of all experience also offers a way to purposefully change yourself that falls between utter fatalism and “it’s all in your head” solipsistic voluntarism. Your brain is always making its best predictions; these can’t be changed by a single act of will but can be updated with enough evidence. Almost everything you know and perceive was inferred from scratch, and what was trained can be retrained. If you want to build habits, just observing yourself doing the thing for whatever reason is more useful than any story or incentive structure you can come up with. In particular, do things with your body to learn them, that’s the part of the universe your brain pays the most attention to.
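One standard way to formalize "can't be changed by a single act of will but can be updated with enough evidence" is Bayesian updating of a strong prior. The Beta-Bernoulli model below is a swapped-in textbook example, not something from Being You, and the prior counts are invented: a self-image worth 20 pseudo-observations barely moves after one workout but shifts substantially after a month of them.

```python
def belief(prior_yes, prior_no, yes=0, no=0):
    """Posterior mean of a Beta-Bernoulli model: estimated probability that
    'I am the kind of person who does the thing', given prior pseudo-counts
    plus observed days on which you did (yes) or didn't (no) do it."""
    a, b = prior_yes + yes, prior_no + no
    return a / (a + b)


prior = (2, 18)  # hypothetical strong prior: "I almost never exercise"

print(round(belief(*prior), 3))                # before any evidence
print(round(belief(*prior, yes=1), 3))         # one workout: a small nudge
print(round(belief(*prior, yes=25, no=5), 3))  # a month of mostly working out
```

In this framing, "observing yourself doing the thing" is simply a way of adding observations, which is why repetition beats resolutions.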

The intimate connection of our consciousness to our living body also implies that we shouldn’t blithely assume that it can be easily disembodied. Robin Hanson posits digital emulations that have a similar basic consciousness to biological humans, like an emulated Elon Musk who shares a sense of selfhood with the biological version and enjoys virtual feasts or Ferraris. But it is not clear at all that such a being could even exist in principle. A digital mind that lacks a body it is trying to keep alive, that has entirely different senses than our interoceptive and exteroceptive ones, and that has an entirely different repertoire of actions available to it will have an entirely alien generative model of its world, and thus an entirely alien phenomenology — if it even has one. We can guess what it’s like to be a bat: its reliance on sonar to navigate the world likely creates a phenomenology of “auditory colors” that track how surfaces reflect sound waves similar to our perception of visual color. It’s much harder to guess what it’s like, if anything, to be an “em”.

In general, the fact that our consciousness has a lot to do with living and little with intelligence implies that we should more readily ascribe it to animals and less readily to AI. Eliezer seems to think that selfhood is necessary for conscious experience, and that babies and animals aren’t sentient. But selfhood is itself just a bundle of perceptions, separable from each other and from experiences like pain or pleasure. An animal with no selfhood cannot report “it is I, Bambi, who is suffering”, but that doesn’t mean there is no suffering happening when you harm it. And as for AI, I believe that Eliezer and Seth are in agreement that world-optimizing intelligence and what-it’s-like-to-be-you consciousness are quite orthogonal.

But there is something interesting still about intelligence: how is it that reading a book of declarative knowledge can change how I perceive the world, how I think of my own self, how I relate to my mortality? This all happened to me after reading Being You and again upon rereading it. Yet almost everything the book talks about is the domain of “system 1”, our intuitive and automatic perception. I had the chance to ask this of Seth personally and he sent me a link to a paper on how “system 2” function relates to perceiving the contents of working memory. But it still feels to me that there is something magic about our ability to reason explicitly and how it fits into this new understanding of consciousness.

Since you are reading this review you are likely interested in the same things as well. I highly recommend that you read Being You, and then that you spend a good while thinking about it.

130 comments


comment by ShardPhoenix · 2023-08-23T05:18:49.656Z

Some interesting examples but this seems to be yet another take that claims to solve/dissolve consciousness by simply ignoring the Hard Problem.

↑ comment by Gordon Seidoh Worley (gworley) · 2023-08-23T23:38:41.827Z

It sounds like Seth's position is that the hard problem of consciousness is the result of confusion, so he's not ignoring it, but saying that it only appears to exist because it's asked within the context of a confused frame.

Seth seems to be suggesting that the hard problem of consciousness is a bit like asking why don't people fall off the edge of the Earth? We think of this question as confused because we believe the Earth is round. But if you start from the assumption that the Earth is flat, then this is a reasonable question, and no amount of explanation will convince you otherwise.

The reason these two situations look different is that it's now easy for us to verify that the Earth is not flat, but it's hard for us to verify what's going on with consciousness. Seth's book is making a bid, by presenting the work of many others, to say that what we think of as consciousness is explainable in ways that make the Hard Problem a nonsensical question.

That seems quite a big difference from "simply ignoring the Hard Problem", though I admit Jacob does not go into great detail about Seth's full arguments for this. But I'd posit that if you want to disagree with something, you need to disagree with the object-level claims Seth makes first, and only after reaching a point where you have no more disagreements is it worth considering whether or not the Hard Problem still makes sense, and if you do then it should be possible to make a specific argument about where you think the Hard Problem arises and what it looks like in terms of the presented model.

↑ comment by tslarm · 2023-08-24T04:04:45.441Z

Without reading the book we can't be sure. But the trouble is that this claim has been made a million times, and in every previous case the author has turned out to be either ignoring the hard problem, misunderstanding it, or defining it out of existence. So if a longish, very positive review with the title 'x explains consciousness' doesn't provide any evidence that x really is different this time, it's reasonable to think that it very likely isn't.

> The reason these two situations look different is that it's now easy for us to verify that the Earth is not flat, but it's hard for us to verify what's going on with consciousness.

Even if I had no way of verifying it, "the earth is (roughly) spherical and thus has no edges, and its gravity pulls you toward its centre regardless of where you are on its surface" would clearly be an answer to my question, and a candidate explanation pending verification. My question was only 'confused' in the sense that it rested on a false empirical assumption; I would be perfectly capable of understanding your correction to this assumption. (Not necessarily accepting it -- maybe I think I have really strong evidence that the earth is flat, or maybe you haven't backed up your true claim with good arguments -- but understanding what it means and why it would resolve my question).

Are you suggesting that in the case of the hard problem, there may be some equivalent of the 'flat earth' assumption that the hard-problemists hold so tightly that they can't even comprehend a 'round earth' explanation when it's offered?

↑ comment by Gordon Seidoh Worley (gworley) · 2023-08-24T16:22:08.794Z

> Are you suggesting that in the case of the hard problem, there may be some equivalent of the 'flat earth' assumption that the hard-problemists hold so tightly that they can't even comprehend a 'round earth' explanation when it's offered?

Yes. Dualism is deeply appealing because most humans, or at least most humans who care about the Hard Problem, seem to experience themselves in dualistic ways (i.e. experience something like the self residing inside the body). So even if it becomes obvious that there's no "consciousness sauce" per se, the argument is that the Problem seems to exist only because there are dualistic assumptions implicit in the worldview that thinks the Problem exists.

I'd go on to say that if we address the Meta Hard Problem like this in such a way that it shows the Hard Problem to be the result of confusion, then there's nothing to say about the Hard Problem, just like there's nothing interesting to say about why ships never sail off the edge of the Earth.

↑ comment by Shiroe · 2023-08-24T20:20:25.195Z

So you don't believe there is such a thing as first-person phenomenal experiences, sort of like Brian Tomasik? Could you give an example or counterexample of what would or wouldn't qualify as such an experience?

↑ comment by Gordon Seidoh Worley (gworley) · 2023-08-25T02:14:18.910Z

I think that there's a process we can meaningfully point to and call qualia, and it includes all the things we think of as qualia, but qualia is not itself a thing per se but rather the reification of observations of mental processes that allows us to make sense of them.

I have theories of what these processes are and how they work and they mostly line up with what's pointed at by this book. In particular I think cybernetic models are sufficient to explain most of the interesting things going on with consciousness, and we can mostly think of qualia as the result of neurons in the brain hooked up in loops so that their inputs include information not only from other neurons but also from themselves, and these self-sensing loops provide the input stream of data that other neurons interpret as self-experience/qualia/consciousness.

↑ comment by TAG · 2023-10-08T19:37:11.944Z

but qualia is not itself a thing per se but rather the reification of observations of mental processes

I don't see how that helps. We don't have a reductive explanation of consciousness as a thing, and we don't have a reductive explanation of consciousness as a process.

↑ comment by Signer · 2023-08-24T09:10:10.103Z

Are you suggesting that in the case of the hard problem, there may be some equivalent of the ‘flat earth’ assumption that the hard-problemists hold so tightly that they can’t even comprehend a ‘round earth’ explanation when it’s offered?

I wouldn't say "can’t even comprehend" but my current theory is that one such detrimental assumption is "I have direct knowledge of content of my experiences".

↑ comment by Shiroe · 2023-08-24T15:33:52.479Z

but my current theory is that one such detrimental assumption is "I have direct knowledge of content of my experiences"

It's true this is the weakest link, since instances of the template "I have direct knowledge of X" sound presumptuous and have an extremely bad track record.

The only serious response in favor of the presumptuous assumption [edit] that I can think of is epiphenomenalism in the sense of "I simply am my experiences", with self-identity (i.e. X = X) filling the role of "having direct knowledge of X". For explaining how we're able to have conversations about "epiphenomenalism" without it playing any local causal role in us having these conversations, I'm optimistic that observation selection effects could end up explaining this.

↑ comment by Noosphere89 (sharmake-farah) · 2023-08-26T15:57:59.266Z

Similarly, I think one inapplicable assumption is the idea that people can reliably self-analyze and come to accurate conclusions, and so can be presumed reliable in their reports, including reports about consciousness. I remember reading that people's ability to self-analyze correctly is basically zero; that is, people are pretty much always incorrect about their own traits and thoughts.

↑ comment by Richard_Kennaway · 2023-08-26T16:48:04.859Z

Interpret things strictly enough and everyone is always wrong about everything. They can still be usefully right.

↑ comment by Noosphere89 (sharmake-farah) · 2023-08-26T18:05:02.227Z

The point is that their self-reports usually aren't even that useful; bringing in an outsider would probably help. Therefore one of the basic assumptions behind a lot of consciousness discourse and intuitions is false, and people don't know this. In particular, it's why I now dislike a lot of consciousness intuitions, and this goes especially for dualism.

The fact that we are so bad at self-analysis is why we need outsider help so much.

↑ comment by TAG · 2023-08-24T16:06:31.360Z

Is there a reason why it is detrimental? Note that “I have direct knowledge of content of my experiences” doesn't imply certain knowledge, a non-physical ontology, or epiphenomenalism...

↑ comment by Signer · 2023-08-25T09:51:08.302Z

I think it's detrimental because "direct" there prevents people from accepting weak forms of illusionism, and that creates problems in addition to the Hard Problem, like Mary's room or Chalmers's conceivability of qualia's structure. And because... I don't want to say "the assumption is wrong", because knowledge is an arbitrary high-level concept, but you can formulate a theory of knowledge where it doesn't hold, and that theory is better.

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-20T14:19:15.432Z

Are you suggesting that in the case of the hard problem, there may be some equivalent of the 'flat earth' assumption that the hard-problemists hold so tightly that they can't even comprehend a 'round earth' explanation when it's offered?

Another assumption that I think people with intuitions on the hard problem hold tightly is the idea that whether something is conscious must line up with what they value intrinsically at a universal level. This is false, because you can value something without it being conscious, and something can be conscious without being valuable to you.

I think the methodology of the post below is bad, but I have a high prior that something like this is happening in the consciousness debate, and that it's confusing everyone:

https://www.lesswrong.com/posts/KpD2fJa6zo8o2MBxg/consciousness-as-a-conflationary-alliance-term-for

↑ comment by tslarm · 2025-01-21T04:38:03.656Z

Can you elaborate a bit? Personally, I have intuitions on the hard problem and I think conscious experience is the only type of thing that matters intrinsically. But I don't think that's part of the definition of 'conscious experience'. That phrase would still refer to the same concept as it does now if I thought that, say, beauty was intrinsically valuable -- or even if I thought conscious experience was the only thing that didn't matter.

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-21T15:45:03.956Z

Basically, if you want consciousness to matter morally/intrinsically, then you will prefer theories that match your values on what counts as intrinsically valuable, irrespective of the truth of the theory. In particular, it should seem far more surprising than it does that the correct theory of consciousness just so happens to match what you find intrinsically valuable, or at least matches it far better than random chance would. That's because I believe what you value or view as moral is inherently relative, and doesn't really have a relationship to the scientific problem of consciousness.

I think this is part of the reason why people don't exactly like reductive conceptions of consciousness, where consciousness is created by parts like neurons/atoms/quantum fields that people usually don't value in themselves: they believe that consciousness should come out of parts/units that they think are morally valuable. It's also part of the reason why people dislike theories that imply that consciousness extends beyond the species they value intrinsically, which for most people is us.

I think this is a problem on every side: arguments for the moral worth of a species are often conditioned on that species being conscious enough to suffer, and people don't want to admit that it's totally fine to be okay with someone suffering, even if they are conscious, and totally fine to value something like a rock, or all rocks, that isn't conscious or suffering.

Another way to say it is even if a theory suggests that something you don't value intrinsically is conscious, you don't have to change your values very much, and you can still go about your day mostly fine.

I think a lot of people who aren't you unintentionally conflate moral value with the scientific question of "what is consciousness", because the term is so value-loaded.

↑ comment by Caleb Reske (caleb-reske) · 2023-08-23T06:28:58.159Z

Agreed! These topics (the narrative self, the perception of free will, the predictive-processing theory, etc.) are all incredibly interesting and worth studying. But what has been explained in the book doesn't seem to come close to what consciousness is at all; rather, it explains how our perceptions in consciousness are influenced by our sense of self and story, something that has already been well studied. I'm fairly convinced by the predictive-processing theory of self and cognition, but I don't treat it as an explanation for the existence of experience itself. A generative artificial model can have "predictive processing," but does this give it a subjective, conscious experience? What would it mean, exactly, if it did?

I'm reminded of this post: the reason the two "consciousness camps" seem to be talking past each other might be that we have different intuitions about what needs explaining. To me, what really needs explaining is the fact of consciousness: its existence, not its qualities. Why "feel" at all? This book, while it looks interesting, doesn't look like it touches that question.

↑ comment by Jacob Falkovich (Jacobian) · 2023-08-24T18:48:21.838Z

I tried to communicate a psychological process that occurred for me: I used to feel that there's something to the Hard Problem of Consciousness, then I read this book explaining the qualities of our phenomenology, and now I don't think there's anything to HPoC. This isn't really ignoring HPoC; it's offering a way out that seems more productive than addressing it directly. This is in part because the terms HPoC insists on for addressing it are themselves confused and ambiguous.

With that said, let me try to actually address HPoC directly although I suspect that this will not be much more convincing.

HPoC roughly asks "why is perceiving redness accompanied by the quale of redness?" This can be interpreted in one of two ways.

1. Why this quale and not another?

This isn't a meaningful question because the only thing that determines a quale as being a "quale of redness" is that it accompanies a perception of something red. I suspect that when people read these words they imagine something like looking at a tomato and seeing blue, but that's incoherent — you can't perceive red but have a "blue" quale.

2. Why this quale and not nothing?

Here it's useful to separate the perception of redness, i.e. a red object being part of the map, from the awareness of perceiving redness, i.e. a self that perceives a red object being part of the map. These are two separate perceptions. I suspect that when people think about p-zombies or whatever they imagine experiencing nothingness or oblivion, and not a perception unaccompanied by experience, or they imagine some subliminal "red" making them hungry similar to how it would affect a p-zombie. There is no coherent way to imagine being aware of perceiving red, and this being different from just perceiving red, without this awareness being an experience. All you have is experience.

HPoC is demanding a justification of experience from within a world in which everything is just experiences. Of course it can't be answered! If it could formulate a different world that was even in principle conceivable, it would make sense to ask why we're in world A and not in world B. But this second world isn't really conceivable if you focus on what it would mean. The things you're actually imagining are seeing a blue tomato or seeing nothing or seeing a tomato without being aware of it, you're not actually imagining an awareness of seeing a red tomato that isn't accompanied by experience.

↑ comment by TAG · 2023-10-08T19:18:20.566Z

Why this quale and not another?

This isn’t a meaningful question because the only thing that determines a quale as being a “quale of redness” is that it accompanies a perception of something red.

Edit: It's a meaningful question because, as far as we are concerned, it could have been different: we don't have a way of predicting it. Moreover, it quite possibly does vary between individuals, because red-green colour blindness is a thing. What determines, in the sense of pinning down, a quale is a combination of the external stimulus, e.g. 600nm light, and the subject.

But that isn't the relevant sense of "determines". It isn't causal determinism, and it isn't the kind of "vertical" determinism that arises from having a reductive explanation. If subjective red is an entirely physical phenomenon, then it should be determined by, and predictable from, the underlying physics. This we cannot do: we cannot predict non-human qualia, or novel human qualia. If there is a set of facts that cannot be deduced from physics, physicalism is wrong.

Reductionism allows some basic facts, about fundamental laws and primitive entities, to go unreduced, but not high-level phenomena, which include consciousness.

HPoC is demanding a justification of experience from within a world in which everything is just experiences.

No, it demands a justification of experience on the basis of a physical world, if you assume you are in one. There is no HP in an Idealist ontology, because there is no longer a need to explain one thing on terms of another. It's unlikely that Seth is an idealist.

The success of science in the twentieth and twenty-first centuries has led many philosophers to adopt a physicalist ontology, basically the idea that the fundamental constituents of reality are what physics says they are. (It is a background assumption of physicalism that the sciences form a sort of tower, with psychology and sociology near the top, biology and chemistry in the middle, and physics at the bottom. The higher and intermediate layers don't have their own ontologies; mind-stuff and élan vital are outdated concepts. Everything is either a fundamental particle or an arrangement of fundamental particles.)

So the problem of mind is now the problem of qualia, and the way philosophers want to explain it is physicalistically. However, the problem of explaining how brains give rise to subjective sensation, of explaining qualia in physical terms, is now considered to be The Hard Problem. In the words of David Chalmers:

“It is undeniable that some organisms are subjects of experience. But the question of how it is that these systems are subjects of experience is perplexing. Why is it that when our cognitive systems engage in visual and auditory information-processing, we have visual or auditory experience: the quality of deep blue, the sensation of middle C? How can we explain why there is something it is like to entertain a mental image, or to experience an emotion? It is widely agreed that experience arises from a physical basis, but we have no good explanation of why and how it so arises. Why should physical processing give rise to a rich inner life at all? It seems objectively unreasonable that it should, and yet it does.”

What is hard about the hard problem is the requirement to explain consciousness, particularly conscious experience, in terms of a physical ontology. It's the combination of the two that makes it hard. Which is to say that the problem can be sidestepped either by denying consciousness or by adopting a non-physicalist ontology.

Examples of non-physical ontologies include dualism, panpsychism and idealism. These are not faced with the Hard Problem as such, because they are able to say that subjective qualia just are what they are, without any need to offer a reductive explanation of them. But they have problems of their own, mainly that physicalism is so successful in other areas.

Eliminative materialism and illusionism, on the other hand, deny that there is anything to be explained, thereby implying there is no problem. But these approaches also remain unsatisfactory because of the compelling subjective evidence for consciousness.

So you can sidestep the Hard Problem by denying that there is anything to be explained, or by denying that conscious experience needs to be explained in physical terms.

The third approach to the Hard Problem is to answer it in its own terms.

↑ comment by ShardPhoenix · 2023-08-25T02:36:00.632Z

HPoC is demanding a justification of experience from within a world in which everything is just experiences. Of course it can't be answered!

I think I see what you're saying and I do suspect that experience might be too fundamentally subjective to have a clear objective explanation, but I also think it's premature to give up on the question until we've further investigated and explained the objective correlates of consciousness or lack thereof - like blindsight, pain asymbolia, or the fact that we're talking about it right now.

And does "everything is just experiences" mean that a rock has experiences? Does it have an infinite number of different ones? Is your red, like, the same as my red, dude? Being able to convincingly answer questions like these is part of what it would mean to me to solve the Hard Problem.

↑ comment by Jacob Falkovich (Jacobian) · 2023-08-25T04:04:47.728Z

By "everything is just experiences" I mean that all I have of the rock are experiences: its color, its apparent physical realness, etc. As for the rock itself, I highly doubt that it experiences anything.

As for your red being my red, we can compare the real phenomenology of it: does your red feel closer to purple or orange? Does it make you hungry or horny? But there's no intersubjective realm in which the qualia themselves of my red and your red can be compared, and no causal effect of the qualia themselves that can be measured or even discussed.

I feel that understanding that "is your red the same as my red" is a question-like sentence that doesn't actually point to any meaningful question is equivalent to understanding that HPoC is a confusion, and it's perhaps easier to start with this.

Here's a koan: WHO is seeing two "different" blues in the picture below?

↑ comment by TAG · 2023-10-08T19:42:52.171Z

By “everything is just experiences” I mean that all I have of the rock are experiences: its color, its apparent physical realness, etc.

Presumably you mean all you have epistemically... In your other comments, it doesn't sound like you are solving the HP with idealism.

↑ comment by ShardPhoenix · 2023-08-25T05:57:31.552Z

- In general, how can you know whether and how much something has experiences?

- I think with things like the nature of perception you could say there's a natural incomparability, because you couldn't (seemingly) experience someone else's perceptions without translating them into structures your brain can parse. But I'm not very sure on this.

↑ comment by tangerine · 2023-08-23T11:54:48.240Z

The Hard Problem doesn’t exist.

Do you believe that all your beliefs are represented only in the structure of your brain? Then changing the structure of your brain changes your beliefs; this way you could theoretically be made to believe anything, including, of course, things that are false. Some false beliefs are useful, as with certain optical illusions and illusions more generally, including the belief that “you” are “experiencing” things. (I once interviewed a man who had had a stroke and reported feeling like “he wasn’t there” anymore and he would look at his own hand and say, “it’s like it’s not mine”. This caused difficulties with locomotion and knowing when “he” had to go to the bathroom, because it was hard for him to realize it was actually “his” bladder being full and “him” having to make a decision to relieve it.) It’s a useful, evolved structure in your brain that makes you believe that. But it’s technically still false.

Similarly, you could hallucinate that a dragon is standing in front of you; some people actually have such hallucinations. The rational thing to do at such a moment is to disbelieve your direct experience, based on all the other things you know about the world; you know that the evidence against the existence of dragons is overwhelming and you know hallucinations happen. However, disbelieving that what you’re experiencing is real doesn’t make you suddenly stop experiencing it; that’s why hallucinations can be so debilitating.

Ever had déjà vu? When I have déjà vu, I get an overwhelming sense of having experienced something before and my mind starts racing, trying to explain it. I usually recognize rationally that this is probably a déjà vu, but that explanation feels very unsatisfying in the moment because the feeling of recognition is so convincing. It’s only when this sensation subsides about ten seconds later that I can put the matter to rest, assured that it was just a glitch in my brain. But what if it never subsided? What if you had a déjà vu that lasted the rest of your life? It would be very hard to ignore the constant sensation of recognition. This is exactly the kind of thing that “believing you are experiencing” (and many associated beliefs) is. In both cases it’s false, but in the latter it’s there because it’s useful.

The point is that sometimes the structure of our brains can reach a state in which certain things seem to be true, even though rationally we should disbelieve it based on all the other things we know. The evidence for the existence of the Hard Problem is extremely thin. In fact, it’s unfalsifiable—not even wrong. Everything we know about science, truth and knowledge points to the Hard Problem not existing. What actually needs to be explained is not why we experience things, but why we think that we experience things and we have perfectly good (physical) explanations for that.

↑ comment by gilch · 2023-08-23T16:14:46.213Z

This is again simply ignoring the Hard Problem. Your supporting paragraphs seem both true and irrelevant. You're equivocating, conflating consciousness with self-awareness. Consciousness is not the sense-of-self. That is merely one of many things that one can be conscious of.

↑ comment by tangerine · 2023-08-24T11:57:37.188Z

The burden of proof is on those who assert that the Hard Problem is real. You can say what consciousness is not, but can you say what it is? As it stands, no explanation of the Hard Problem is possible, because the Hard Problem has no criteria for what would comprise a satisfying explanation; no way to distinguish a correct explanation from an incorrect one. All real science has such criteria, yet even David Chalmers has none. Until those criteria are established, the existence of the Hard Problem will forever remain unfalsifiable, unscientific and belief in it irrational.

Unfortunately, proper criteria for explanations always involve (physical) observations and their predictions. Therefore any attempt to establish criteria for explanations of the Hard Problem is met with the criticism that, because it refers to physical aspects of consciousness, it ignores the Hard Problem. Evidently, proponents of the Hard Problem have backed themselves into a contradictory corner; the Hard Problem is unfalsifiable and any attempt to make it falsifiable makes it not the Hard Problem.

If the Hard Problem is “above” science (i.e., not science), as it seems to be, then it is above inquiry and if it’s above inquiry, why inquire?

The naked truth is that belief in the existence of the Hard Problem fetishizes mystery; it abhors actual explanation and therefore scrambles to keep its suppositions immune to it.

Belief in the Hard Problem, being unscientific and therefore not about anything real, raises the question of why such beliefs can nonetheless take root in the face of overwhelming contrary evidence, which is what my earlier post attempted to explain.

I’m experiencing just like you, but the Hard Problem doesn’t jibe at all with the rest of my beliefs (and I have seen many attempts to reconcile them, all unsuccessful). Therefore I choose to accept the benefits of the sensation of experience and accept the Easy Problem of consciousness as the overwhelmingly likely Only Problem of consciousness.

↑ comment by TAG · 2023-10-08T19:29:05.960Z

You can say what consciousness is not, but can you say what it is?

The fact that we can't fully explain consciousness is a point in favour of the HP.

Of course, any statement of a problem has to state something about what it is ... but something isn't everything.

The burden of proof is on those who assert that the Hard Problem is real.

Yes, and they have arguments you haven't addressed.

As it stands, no explanation of the Hard Problem is possible, because the Hard Problem has no criteria for what would comprise a satisfying explanation; no way to distinguish a correct explanation from an incorrect one.

I use the criterion of being able to make novel predictions. We clearly don't have a solution that reaches that criterion.

↑ comment by tangerine · 2023-10-09T16:36:29.629Z

The fact that we can't fully explain consciousness is a point in favour of the HP.

But my question was, what exactly can’t we fully explain? What are you referring to when you say “consciousness” and what about it can’t we explain?

they have arguments you haven't addressed.

Such as?

I use the criterion of being able to make novel predictions. We clearly don't have a solution that reaches that criterion.

Agreed, but what exactly should it predict? General relativity made novel predictions when it was first formulated, but about the movement of planets and so forth, so I presume that doesn’t count as a solution to the Hard Problem of consciousness.

↑ comment by gilch · 2023-08-25T01:19:08.758Z

Hard Problem has no criteria for what would comprise a satisfying explanation; no way to distinguish a correct explanation from an incorrect one.

I feel like most of your comment is unfair, except for this part. Let me attempt to make it more concrete for you.

Suppose a future scientist offers you technological immortality, but the procedure will physically destroy your brain over time, replacing it with synthetic parts. Your new synthetic brain won't fail from old age and unlike a biological brain, can be backed up (and reconstructed) to protect against its inevitable accidental destruction over the coming eons. Do you take his offer? What assurances do you need? If you're wrong about certain details and accept, you die (brain destroyed) so you'd better get it right.

I expect that assurances one could rationally accept would constitute a solution to the Hard Problem. But maybe you'll surprise me. This scenario is a crux for me (well, one of a few perhaps) such that were they addressed, I would either consider the Hard Problem solved, or else decide that I have no reason left to care about the Hard Problem.

The scenario has a number of assumptions that may not hold for you. But I can only guess. Can we agree on the following?

- Humans do not have ectoplasmic-ghost souls or the like. Rather the mind more directly inhabits the brain, and if it's destroyed, you have permanently died. Gradually replacing your brain with the wrong sort of synthetic parts (such as plastic) will kill you.

- The physical molecules of the brain are completely replaced by natural biological processes over time, i.e., your mind is not your brain's atoms, but rather something about their structure, and therefore a procedure like the offer could (in principle) work.

- There are physical structures, including complex (even biological) ones that are not alive in the sense of being conscious/aware. I.e., panpsychism is false.

- Automatons (chatbots?) can say they are conscious when they are not. I.e., zombies can be constructed, in principle, and a procedure like this could replace you with one. (This is not a Chalmers "p-zombie". Its brain is synthetic, thus physically distinguishable from a normal biological human.)

↑ comment by tangerine · 2023-08-25T13:59:33.672Z

Thank you for this reply, I think this helps to pin down where our disagreement comes from.

Technically I don’t disagree with your assumptions, because I think it’s equally valid to say they’re true as that they’re false, which is exactly the issue I have with them. There doesn’t seem to be a fact of the matter about them (i.e., there’s no way to experimentally distinguish a world in which any of these assumptions holds from one in which it does not), so if the existence of the Hard Problem is derived from them, then that doesn’t alleviate the issue of its unfalsifiability.

The cause of this issue is that (from my point of view) many of the words you’re using don’t have clear definitions in the domain that you’re trying to use them in. I don’t mean to be a pedant, but if we’re really trying to use language for extraordinary investigations like these, then I think precision is warranted. For now, let me just focus on the thought experiment you posed. The way I see it, it’s equivalent to the Ship of Theseus. I think what we’re ultimately trying to grapple with is how best to model reality and it seems to me that we actually already have a perfectly good model to solve the Ship of Theseus and your thought experiment, namely particle physics. If you look at the Ship of Theseus or a person’s brain or body (or a piece of text they wrote), these are collections of particles that create a causal chain to somebody saying “Hey, it’s the Ship of Theseus!” or “Hey, gilch wrote a reply!” Over time, some of those particles may get swapped for others and cause us to still use the same name or maybe not. There’s no mystery or contradiction there, it’s a bunch of particles doing their thing and names are patterns in those particles, for example in the air when we speak them or in silicon when we’re writing it on a phone.

Do we think about the world in terms of fundamental particles? No, it’s wildly impractical, so we’ve been forced to resort, through our evolution and the evolution of language, to much simpler models/heuristics. Daniel Dennett has this idea of “folk psychology”, which talks specifically about how we model other people’s behaviors, by talking about things like “belief”, “desire”, “fear” and “hope”. This model works most of the time, but it breaks down when you try to use it to model, for example, the behavior of a schizophrenic person, or the behavior of a dead person. You can extend this idea to a kind of “folk reality”, where we model the world in terms of “people”, “alive”, “dead”, “conscious”, “justice”, “love” and pretty much all other words, which can similarly break down when trying to use them to communicate about things that they’re not normally used to communicate about.

If you like, I could go into detail how this applies to each of your assumptions, but I’ll do so just for your last assumption for now. Consciousness in normal usage is a word that evolved to mean something like “able to respond appropriately to its surroundings”, so a person who is sleeping or knocked out is basically unconscious; that’s enough for practical, daily usage. Similarly, we say humans normally are conscious, other primates and mammals maybe a little less, insects maybe and plants not really, i.e., the fewer traits it has that we recognize in humans the less conscious it is; this is already a bit less practical and more academic, but it affects how we behave. (For example, vegans claim eating animals is bad, while eating plants is okay, even though they’re absurdly glossing over whether plants can feel pain, which is not clear at all.) Over time, the evolution of language (which is a product of both chance and deliberate human decisions) adapted the meaning of words like consciousness to remain a useful part of folk reality. Our intuitions about the meanings of words and in turn about reality depend on how we see these words being used as we grow up, even if they don’t model reality correctly; we always end up with somewhat mistaken intuitions, because folk reality does not model reality exactly. And now, quite suddenly, we’ve ended up in a situation where there are machines that can behave in a way that we’re only used to recognizing in humans and so there’s a lot of confusion over whether they are conscious. Again, from a particle physics perspective it’s clear what’s going on; it’s particles doing their thing like they always have. Some particles are arranged in a structure we haven’t seen before, so what? However, our folk reality model breaks down because it’s imprecise and not adapted to this new situation. That’s also not an issue in itself; language and intuitions just have to adapt. 
Maybe we’ll come to a consensus that they are just as conscious as we are, or maybe we’ll see them as inferior and therefore treat them with greater indifference, even though how we describe them doesn’t actually change their nature, just our perception and treatment of them.

The real problems begin when people assume that their intuitions are true and fail to recognize that our intuitions and language are models of reality (largely inherited from cultures before us who had much less experience with the world) and that they frequently don’t generalize well. So when I encounter something like the Hard Problem, I throw my intuitions about how “I really feel like I’m experiencing things, so I can’t just be an automaton” out the window, because going down that road just leads to a bunch of useless contradictions, and I conclude that whatever is going on must be made possible by particles doing their thing and nothing else, at least until I encounter a better model.

As for whether I would choose to undergo the procedure, I probably would. I don’t see any meaningful difference between my brain being replaced by new synthetic or biological material. In fact, according to my intuition (perhaps mistaken), my future counterpart with a 100% biological brain would be just as much a different person from me as my alternative future counterpart with a 100% synthetic brain.

↑ comment by Shiroe · 2023-08-24T15:05:28.491Z

The burden of proof is on those who assert that the Hard Problem is real. You can say what consciousness is not, but can you say what it is?

In the sense that you mean this, this is a general argument against the existence of everything, because ultimately words have to be defined either in terms of other words or in terms of things that aren't words. Your ontology has the same problem, to the same degree or worse. But we only need to give particular examples of conscious experience, like suffering. There's no need to prove that there is some essence of consciousness. Theories that deny the existence of these particular examples are (at best) at odds with empiricism.

Therefore I choose to accept the benefits of the sensation of experience and accept the Easy Problem of consciousness as the overwhelmingly likely Only Problem of consciousness.

It's deeply unclear to me what you mean by this. If you're denying that you have phenomenal experiences like suffering (i.e., negative valences), your rational decision making should be strongly affected by this belief, in the same way that someone who has stopped believing in Hell and Heaven should change their behavior to account for this radical change in their ontology.

↑ comment by tangerine · 2023-08-26T14:24:50.661Z

Hi, please see my reply to gilch above.

To add to that reply, an explanation only ever serves one function, namely to aid in prediction; every moment of our life, we try to achieve outcomes by predicting which action will lead to which outcome. An explanation of the Hard Problem doesn’t do that. Whenever I try to achieve some state of consciousness, I do so with concepts related to the Easy Problem. I do have experiences (I don’t know what the word “phenomenal” would add to that), such as pain, but to the extent that I can predict and control these, I do so purely with solutions to the Easy Problem. And in my book, concepts that exist only in explanations that don’t aid in prediction are by definition not real. But the Hard Problem is even worse than that; it’s set up so that we can’t tell the difference between a correct and an incorrect explanation in the first place, which means literally anything could be an explanation, which is equivalent to no explanation at all. Sure, you can choose to believe that something like panpsychism is real or that it’s not real, but because neither belief adds any predictive power, you’re better off just cutting it out, as per Occam’s Razor.

↑ comment by Shiroe · 2023-09-09T17:26:50.395Z

You seem to be claiming that you have experiences, but that their role is purely functional. If you were to experience all tactile sensations as degrees of being burnt alive, but you could still make predictions just as well as before, it wouldn't make any difference to you?

↑ comment by tangerine · 2023-09-09T19:34:35.816Z

It doesn’t make sense to say that I could make predictions just as well as before if I experienced all tactile sensations as degrees of being burnt alive, because such sensations would be equivalent to predictions that I would be burning alive, which would be false and therefore interfere with my functioning. You can’t separate experience from its consequences. That’s also why philosophical zombies are impossible; if you could have a body which doesn’t experience, then it’s not going to function as normal.

If I were to experience all tactile sensations as degrees of being burnt alive, I would assume something was wrong with my body and I would want to alleviate that situation by making predictions about which actions would alleviate it by wielding only concepts related to the Easy Problem. How would the Hard Problem help me in that situation?

↑ comment by Shiroe · 2023-09-26T05:23:57.304Z

because such sensations would be equivalent to predictions that I would be burning alive, which would be false and therefore interfere with my functioning

I don't see a necessary equivalence here. You could be fully aware that the sensations were inaccurate, or hallucinated. But it would still hurt just as much.

if you could have a body which doesn’t experience, then it’s not going to function as normal.

A human body, or any kind of body? It seems like a robot could engage in the same self-preservation behavior as a human without needing to have anything like burning sensations. I can imagine a sort of AI prosthesis for people born with congenital insensitivity to pain that would make their hand jerk away from a burning hot surface, despite them not ever experiencing pain or even knowing what it is.

↑ comment by tangerine · 2023-09-27T19:01:42.900Z

You could be fully aware that the sensations were inaccurate, or hallucinated. But it would still hurt just as much.

The experience of hurting makes you respond as if you really were hurting; you have some voluntary control over your response through the frontal cortex’s modulation of pain signals, but it is very limited. Any control we exert over our experiences corresponds to physical interventions. The Hard Problem simply does not add anything of value here.

I can imagine a sort of AI prosthesis for people born with congenital insensitivity to pain that would make their hand jerk away from a burning hot surface, despite them not ever experiencing pain or even knowing what it is.

That you can imagine such a prosthesis does not mean that it could exist. It depends on how exactly such a prosthesis would work. I suspect that the more such a prosthesis was able to mimic the normal response, the more its wearer would experience pain, i.e., inducing the normal response is equivalent to inducing the normal experience.

↑ comment by Shiroe · 2023-10-13T14:21:59.768Z

Here are some cruxes, stated from what I take to be your perspective:

- That there's nothing at stake whether or not we have first-person experiences of the kind that eliminativists deny; it makes no practical difference to our lives whether we're so-called "automatons" or "zombies", such terms being only theoretical distinctions. Specifically, it should make no difference to a rational ethical utilitarian whether or not eliminativism happens to be true. Resources should be allocated the same way in either case, because there's nothing at stake.

- Eliminativism is a more parsimonious theory than non-eliminativism, and is strictly better than it for scientific purposes; eliminativism already explains all of the facts about our world, and adding so-called "first-person experiences" is just a cog which won't connect to anything else; removing it wouldn't require arbitrary double standards for the validity of evidence.

- There's no way of separating experience from functionality in a system. If an organism manifests consistent and enduring behaviors of self-preservation, goal-seeking, etc. then it must have experiences, regardless of how the organism itself happens to be constructed.

I'm looking for double cruxes now. The first two don't seem very useful to me as double cruxes, but maybe the last one is. Any ideas?

↑ comment by tangerine · 2023-10-18T20:04:32.680Z

From my point of view, much or all of the disagreement around the existence of the Hard Problem seems to boil down to the opposition between nominalism and philosophical realism. I’ll discuss how I think this opposition applies to consciousness, but let me start by illustrating it with the example of money having value.

In one sense, the value of money is not real, because it's just a piece of paper or metal or a number in a bank’s database. We have systems in place such that we can track relatively consistently that if I work some number of hours, I get some of these pieces of paper or metal, or the numbers in my bank account change in some specific way, and I can go to a store and give them some of these materials or connect with the bank’s database to have the numbers decrease in some specific way, while in exchange I get a coffee or a t-shirt or whatever. But this is a very cumbersome way of communicating, so we just say that “money has value” and everybody understands that it refers to this system of exchanging materials and changing numbers. So in the case of money, we are pretty much all nominalists; we say that money has value as a shorthand, and in that sense the value of money is real. On the other hand, a philosophical realist would say that actually the value of money is real independently of our definition of the words. (I view this idea similarly to how Eliezer Yudkowsky talks about buckets being “magical” in this story [LW · GW].)

In the case of the value of money, philosophical realism does not seem to be a common position. However, when it comes to consciousness, the philosophical realist position seems much more common. This strikes me as odd, since both value and consciousness appear to me to originate in the same way; there is some physical system which we, through the evolution of language and culture generally, come to describe with shorthands (i.e., words), because reality is too complicated to talk about exhaustively and in most practical matters we all understand what we mean anyway. However, for philosophical realists, such words appear to take on a life of their own, perhaps because existing words are simply passed down to younger generations as if they were the only way to think about the world, without mentioning that those words happen to conceptualize the world in one specific way out of an infinite and diverse number of ways and, importantly, that that conceptualization oversimplifies to a large extent. This oversimplification is not something we can escape. Any language, including any internal one, has to cope with the fact that reality is too complicated to capture in an exhaustive way. Even if we’re rationalists, we’re severely bounded ones. We can’t see reality for what it is and compare it to how we think about it to see where the differences are; we only see our own thoughts.

Following the Hard Problem through to its logical conclusions seems to lead to contradictions such as the interaction problem. None of the solutions proposed by myself or any Hard Problem enthusiast dissolve these contradictions in a way that satisfies me, therefore I conclude that my mind's conception of my own consciousness is flawed. I'll nonetheless stick to that conception because it's useful, but I have no illusions that it is universally correct; this last step is one that proponents of the Hard Problem seem not to be prepared to take. From my point of view it looks like they conclude that, because their minds conceptualize the world in a certain way, this conception must somehow correspond exactly to reality. However, the map is not the territory.

P.S. I would not call myself an eliminativist. I consider “experience” and “consciousness” and related terms as real as the value of money.

↑ comment by Shiroe · 2023-10-20T23:38:16.719Z

I appreciate hearing your view; I don't have any comments to make. I'm mostly interested in finding a double crux.

This isn't really a double crux, but it could help me think of one:

If someone becomes convinced that there isn't any afterlife, would this rationally affect their behavior? Can you think of a case where someone believed in Heaven and Hell, had acted rationally in accordance with that belief, then stopped believing in Heaven and Hell, but still acted just the same way as they did before? We're assuming their utility function hasn't changed, just their ontology.

↑ comment by tangerine · 2023-10-21T07:08:24.808Z

Well, for me, one crux is this question of nominalism vs philosophical realism. One way to investigate this question for yourself is to ask whether mathematics is invented (nominalism) or discovered (philosophical realism). I don’t often like to think in terms of -isms, but I have to admit I fall pretty squarely in the nominalist camp, because while concepts and words are useful tools, I think they are just that: tools, that we invented. Reality is only real in a reductionist sense; there are no people, no numbers and no consciousness, because those are just words that attempt to cope with the complexity of reality, so we just shouldn’t take them so seriously. If you agree with this, I don’t see how you can think the Hard Problem is worth taking seriously. If you disagree, I’m interested to see why. If you could convince me that there is merit to the philosophical realist position, I would strongly update towards the Hard Problem being worth taking seriously.

↑ comment by TAG · 2023-10-21T15:16:32.489Z

Reality is only real in a reductionist sense; there are no people, no numbers and no consciousness, because those are just words that attempt to cope with the complexity of reality,