
You're making a great point: an important function of the female approach is to filter out guys who think most women are "completely fucking idiotic".

Kudos for getting this interview and posting it! Extremely based.

As for Metz himself, nothing here changed my mind from what I wrote about him three years ago:

But the skill of reporting by itself is utterly insufficient for writing about ideas, to the point where a journalist can forget that ideas are a thing worth writing about. And so Metz stumbled on one of the most prolific generators of ideas on the internet and produced 3,000 words of bland gossip. It’s lame, but it’s not evil.

He just seems not bright or open-minded enough to understand norms of discussion and epistemology other than those in the NYT employee handbook. It's not dangerous to talk to him (which I did back in 2020, before he pivoted the story to be about Scott). It's just kinda frustrating and pointless.

I feared other women not being into me was a sign she should focus on mating with other guys

When my wife and I just opened up, I did feel jealous quite regularly and eventually realized that the specific thing I was feeling was basically this. It felt like an ego/competitive/status loss thing as opposed to an actual fear of her infidelity or intent to leave me. And then after four years together it went away and never came back.

Now I actually find it kinda fun to not explicitly address "might we fuck?" with some friends, just leave it at the edge of things as a fun wrinkle and a permission to fantasize. A little monogamous frisson, as a treat.

This article made me realize a truth that should've been obvious to me a long time ago: the main benefit I get from polyamory is close female friends (where I don't have to worry about attraction ruining the friendship); sex and romance are secondary.

I spent a long time figuring out the same thing about women's beauty, and came to roughly similar conclusions: https://putanumonit.com/2022/12/13/why-are-women-hot/

We already established that men will happily have sex with women who aren’t optimizing for sexiness, and date women who aren’t the most sexually desirable, and persist in long-term relationships independent of the woman’s looks, and will care about their woman’s beauty in large part to the extent that they care about its effects on their status in the hierarchy of men. And so my answer to the original question is:

Women are hot to see themselves, through the internalized standards of society’s judgment, as worthy of their relationships and their happiness.

The honor system sucks ass. Men want to fight for fun or to defend their tribe (I did both!), but not to be compelled into a fight by any random moron who insults them, on pain of social repercussions from within their own people.

Countercounterpoint: I just wanted to fight in rationalist fight club and it was great fun. I don't really care about winning (and not much about training).

By "everything is just experiences" I mean that all I have of the rock are experiences: its color, its apparent physical realness, etc. As for the rock itself, I highly doubt that it experiences anything.

As for your red being my red, we can compare the real phenomenology of it: does your red feel closer to purple or orange? Does it make you hungry or horny? But there's no intersubjective realm in which the qualia themselves of my red and your red can be compared, and no causal effect of the qualia themselves that can be measured or even discussed.

I feel that understanding that "is your red the same as my red" is a question-like sentence that doesn't actually point to any meaningful question is equivalent to understanding that HPoC is a confusion, and it's perhaps easier to start with this.

Here's a koan: WHO is seeing two "different" blues in the picture below?

I tried to communicate a psychological process that occurred for me: I used to feel that there's something to the Hard Problem of Consciousness, then I read this book explaining the qualities of our phenomenology, and now I don't think there's anything to HPoC. This isn't really ignoring HPoC; it's offering a way out that seems more productive than addressing it directly. This is in part because the terms HPoC insists on for addressing it are themselves confused and ambiguous.

With that said, let me try to actually address HPoC directly, although I suspect that this will not be much more convincing.

HPoC roughly asks "why is perceiving redness accompanied by the quale of redness?" This can be interpreted in one of two ways.

1. Why this quale and not another?

This isn't a meaningful question because the only thing that determines a quale as being a "quale of redness" is that it accompanies a perception of something red. I suspect that when people read these words they imagine something like looking at a tomato and seeing blue, but that's incoherent — you can't perceive red but have a "blue" quale.

2. Why this quale and not nothing?

Here it's useful to separate the perception of redness, i.e. a red object being part of the map, and the awareness of perceiving redness, i.e. a self that perceives a red object being part of the map. These are two separate perceptions. I suspect that when people think about p-zombies or whatever they imagine experiencing nothingness or oblivion, and not a perception unaccompanied by experience, or they imagine some subliminal "red" making them hungry similar to how it would affect a p-zombie. There is no coherent way to imagine being aware of perceiving red, and this being different from just perceiving red, without this awareness being an experience. All you have is experience.

HPoC is demanding a justification of experience from within a world in which everything is just experiences. Of course it can't be answered! If one could formulate a different world that was even in principle conceivable, it would make sense to ask why we're in world A and not in world B. But this second world isn't really conceivable if you focus on what it would mean. The things you're actually imagining are seeing a blue tomato, or seeing nothing, or seeing a tomato without being aware of it; you're not actually imagining an awareness of seeing a red tomato that isn't accompanied by experience.

I understand where you're coming from, but I think that norms about, e.g., warning people about writing from an objectionable frame only make sense for personal blogs, and they're not a very reasonable expectation for a forum like LessWrong. These things are always very subjective (the three women I sent this post to for review certainly didn't feel that it assumed a male audience!). While a single author can create a shared expectation with their readers of what they mean by, e.g., "warning: sexualizing", I don't think a whole community can or should try to formalize this as a norm.

Which means that it's on the reader to look out for themselves. I'm not going to put content warnings on my writing, but if you decide based on this post that you will not read anything written by me that's tagged "sex and gender" that's fair.

This was a very interesting read. Aside from just illuminating history and how people used to think differently, I think this story has a lot of implications for policy questions today.

The go-to suggestion for pretty much any structural ill in the world today is to "raise awareness" and "appoint someone". These two things often make the problem worse. "Raising awareness" mostly acts to give activists moral license to do nothing practical about the problem, and can even backfire by making the problem a political issue. For example, a campaign to raise awareness of HPV vaccines in Texas lowered the number of teenage girls getting the vaccine because it made the vaccine a signal of affiliation with the Democratic Party. Appointing a "dealing with problem X officer" often means creating an office of people who work tirelessly to perpetuate problem X, lest they lose their livelihood.

So what was different with factory safety? This post does a good job highlighting the two main points:

• The problem was actually solvable

• The people who could actually solve it were given a direct financial incentive to solve it

This is a good model to keep in mind both for optimistic activists who believe in top down reforms, and for cynical economists and public choice theorists. Now how can we apply it to AI safety?

The best compliment I can give this post is that the core idea seems so obviously true that it seems impossible that I haven't thought of or read it before. And yet, I don't think I have.

Aside from the core idea that it's scientifically useful to determine the short list of variables that fully determine or mediate an effect, the secondary claim is that this is the main type of science that is useful and that the "hypothesis rejection" paradigm is a distraction. This is repeated a few times but not really proven, and it's not hard to think of counterexamples: most medical research tries to find out whether a single molecule or intervention will ameliorate a single condition. While we know that most medical conditions depend on a whole slew of biological, environmental, and behavioral factors, that's not the most relevant thing for treatment. I don't think this is a huge weakness of the post, but it's certainly a direction to follow up on.

Finally, the post is clear and well written. I'm not entirely sure what the purpose of the digression about mutual information was, but everything else was concise and very readable.

This seems very obviously incorrect. Googling "how to make boyfriend happy" brings up a lot of articles about showing trust, making romantic gestures, giving compliments, doing extra chores, etc.

That's true, but I think this sort of thing isn't usually given as "dating advice" for women and many would bristle at the suggestion that the girl has to do and practice all those things to find a happy relationship. A girl who's googling "how to make boyfriend happy" instead of "how to get boyfriend" is already on the right track.

And again, I'm not saying that women don't contribute to relationships or marriages. They clearly do, and you can make the fair argument that it's more than 50% on average. I'm saying that they don't signal their abilities and willingness to contribute in the early phases of mating and courting, and none of the advice about how to find a boyfriend talks about that at all.

Jack Sparrow is clearly recognized as a man by me, you, and everyone we know. Maybe where you grew up all men were limited in their gender expression to be somewhere between Jack Sparrow and John Rambo, in which case you really wouldn't need more than two genders. But that doesn't begin to cover the range of gender expression we see, not in some abstract thought experiment or rare medical edge case but in our very own community.

Just as a data point for you: I made the conscious decision to spend 100 hours on Elden Ring the day I bought it, and have spent almost none of these 100 hours feeling conflicted or shamed. Writing this post was also fun — was writing the comment fun for you?

I don't want to go into a discussion of all the topics this touches on, from self-coercion to time management to AI timelines to fun. This is just a reminder to be careful about typical minding.

>if you hung out talking to people at a random bar, or on a random Discord server, or at work

The difference is that the Twitter ingroup has much more variety and quality (as evidenced by the big LW contingent) than your local bar, since it selects from a huge pool of people in large part for the ability to come up with cool ideas and takes. It's also much more conducive to open conversation on any and every topic whatsoever in a way that your workplace clearly isn't (nor should be, you have work to do!)

Of course, your local bar or server or workplace may just happen to be a unique scene that's even better; I'm not claiming that the Twitter ingroup is somehow ideal or optimal. But most people's local bars aren't like that, while Twitter is easily available for everyone everywhere to try out.

>Who do I follow, what buttons do I click...

Twitter shows you not only what someone posted, but also who they follow and a list of the tweets they liked. You can start from there for me or the people I linked to, find enough follows to at least entertain you while you learn the norms and see if you like the vibe enough to stay long-term. You won't find a clearer set of instructions for joining something as nebulous as the Twitter ingroup than what I wrote up here.

Yes. I think it ultimately wasn't a momentous historical event, especially in the short-term, but it was hard to know at the time and that's good practice for staying rational as history is happening (or not) as well.

Kind of a dark thought, but: there's always a baby boom after a war, fertility shoots way up. Putin has tried to prop up the Russian birth rate for many years to no avail...

I think it's extremely useful practice to follow momentous live events, try to figure out what's happening, and make live bets (which you can do for example by trading Russian/European stock indices and commodities). When the event of historic importance happens at your doorstep there will be even more FUD to deal with as you're looking for critical information to make decisions, and even more emotions to control.

I know this sounds kinda morbid, but I often ask myself the following question: what would I have done if I was a rich Jew in Vienna in 1936? This is my personal bar for my own rationality. I think it is quite likely that I will face at least one decision of this magnitude in my life, and my ability to be rational then will outweigh almost everything else I do. I know that life will only give me a few practice sessions for this event, like November 2016 and February 2020. I think it's quite worth taking a couple of days to immerse yourself in the news because it's hard to do right now.

This is a useful clarification. I normally use "edge" to include both the difference between the probabilities of winning and losing and the payout ratio. I think this usage is intuitive: if you're betting at 5:1 that a particular number will NOT come up on a six-sided die, no one would say you have a 66.7% "edge" just because you win 5/6 of the time and lose only 1/6 of the time. It's clear that the payout ratio offsets the probability ratio.

Anyway, I don't want to clunk up the explanation so I just added a link to the precise formula on Wikipedia. If this essay gets selected on condition that I clarify the math, I'll make whatever edits are needed.
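A minimal sketch of the distinction in the die example. It assumes "5:1" means risking 5 units to win 1 on the bet that a chosen face does not come up, and it defines edge as expected profit per unit risked. This is only the simplest expected-value version, not necessarily the formula linked on Wikipedia; the function names are illustrative.

```python
from fractions import Fraction


def naive_edge(p_win: Fraction) -> Fraction:
    """Probability of winning minus probability of losing, ignoring payouts."""
    return p_win - (1 - p_win)


def expected_edge(p_win: Fraction, win_amount: Fraction, loss_amount: Fraction) -> Fraction:
    """Expected profit per unit risked, counting both probabilities and payouts."""
    expected_profit = p_win * win_amount - (1 - p_win) * loss_amount
    return expected_profit / loss_amount


if __name__ == "__main__":
    # Betting that a chosen face does NOT come up on a fair six-sided die,
    # at 5:1 odds against you: risk 5 to win 1.
    p = Fraction(5, 6)
    print("naive edge:", naive_edge(p))  # 2/3, i.e. the misleading 66.7%
    print("expected edge:", expected_edge(p, Fraction(1), Fraction(5)))  # 0
```

The naive measure reports a large "edge", while the payout-aware measure shows the bet is exactly fair.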

I feel like I don't have a good sense of what China is trying to do by locking down millions of people for weeks at a time and how they're modeling this. Some possibilities:

- They're just looking to keep a lid on things until the Chinese New Year (2/1) and the Olympics (2/4 - 2/20) at which point they'll relax restrictions and just try to flatten the top in each city.

- They legit think they're going to keep omicron contained forever (or until omicron-targeting vaccines?) and will lock down hard wherever it pops out.

- Everyone knows they're merely delaying the inevitable, but "zero COVID" is now the official party line and no one at any level of governance can ever admit otherwise, so by the spring they're likely to have draconian lockdowns and exponential omicron.

Ironically, if the original SARS-CoV-2 looked like a bioweapon targeted at the West (which wasn't disciplined enough about lockdowns), omicron really looks like a bioweapon targeted at China (which is too disciplined about even hopeless lockdowns).

I was in a few long-term relationships in my early twenties, when I myself wasn't mature/aware enough for selfless dating. Then, after a 4-year relationship based on very explicit rules had ended, I went on about 25 first dates in the space of about a year before meeting my wife. Basically all of those 25 didn't work out because of a lack of mutual interest, not because we both tried to make it a long-term thing but failed to hunt stag.

If I was single today, I would date not through OkCupid as I did back in 2014 but through the intellectual communities I'm part of now. And with the sort of women I would like to date in these communities I would certainly talk about things like selfless dating (and dating philosophy in general) on a first date. Of course, I am unusually blessed in the communities I'm part of (including Rationality).

A lot of my evidence comes from hearing other people's stories, both positive and negative. I've been writing fairly popular posts on dating for half a decade now, and dozens of people, from close friends to anonymous online strangers, have shared their dating stories and struggles with me. For people who seem generally in a good place to go in the selfless direction, the main pitfalls seem to be insecurity spirals and forgetting to communicate.

The former is when people are unable to give their partner the benefit of the doubt on a transgression, which usually stems from their own insecurity. Then they act more selfishly themselves, which causes the partner to be more selfish in turn, and the whole thing spirals.

The latter is when people who hit a good spot stop talking about their wants and needs. As those change they end up with a stale model of each other. Then they inevitably end up making bad decisions and don't understand why their idyll is deteriorating.

To address your general tone: I am lucky in my dating life, and my post (as I wrote in the OP itself) doesn't by itself constitute enough evidence for an outside-view update that selfless relationships are better. If this speaks to you intuitively, hopefully this post is an inspiration. If it doesn't, hopefully it at least informs you of an alternative. But my goal isn't to prove anything to a rationalist standard, in part because I think this way of thinking is not really helpful in the realm of dating where every person's journey must be unique.

As a note, I've spoken many times about the importance of having empathy for romanceless men, who are a common punching bag, and I have written about incel culture specifically. The fact that the absolute worst and most aggravating commenters on my blog identify as incels doesn't make me anti-incel; it just makes me anti those commenters.

I should've written "capitulated to consumerism" but "capitulate to capital" just sounds really cool if you say it out loud.

"Bitcoin" comes from the old Hebrew "Beit Cohen", meaning "house of the priest" or "temple". Jesus cleansed the temple in Jerusalem by driving out the money lenders. The implications of this on Bitcoin vis-à-vis interchangeable fiat currencies are obvious and need no elaboration.

The full text of John 2 proves this connection beyond any doubt. "𝘏𝘦 𝘴𝘤𝘢𝘵𝘵𝘦𝘳𝘦𝘥 𝘵𝘩𝘦 𝘤𝘩𝘢𝘯𝘨𝘦𝘳'𝘴 𝘤𝘰𝘪𝘯𝘴 𝘢𝘯𝘥 𝘰𝘷𝘦𝘳𝘵𝘩𝘳𝘦𝘸 𝘵𝘩𝘦𝘪𝘳 𝘵𝘢𝘣𝘭𝘦𝘴" (John 2:15) refers to overthrowing the database tables of the centralized ledger and "scattering" the record of transactions among decentralized nodes on the blockchain.

"𝘔𝘢𝘯𝘺 𝘱𝘦𝘰𝘱𝘭𝘦 𝘴𝘢𝘸 𝘵𝘩𝘦 𝘴𝘪𝘨𝘯𝘴 𝘩𝘦 𝘸𝘢𝘴 𝘱𝘦𝘳𝘧𝘰𝘳𝘮𝘪𝘯𝘨 𝘢𝘯𝘥 𝘣𝘦𝘭𝘪𝘦𝘷𝘦𝘥 𝘪𝘯 𝘩𝘪𝘴 𝘯𝘢𝘮𝘦. 𝘉𝘶𝘵 𝘑𝘦𝘴𝘶𝘴 𝘸𝘰𝘶𝘭𝘥 𝘯𝘰𝘵 𝘦𝘯𝘵𝘳𝘶𝘴𝘵 𝘩𝘪𝘮𝘴𝘦𝘭𝘧 𝘵𝘰 𝘵𝘩𝘦𝘮." (John 2:23-24) couldn't be any clearer as to the identity of Satoshi Nakamoto.

And finally, "𝘏𝘦 𝘥𝘪𝘥 𝘯𝘰𝘵 𝘯𝘦𝘦𝘥 𝘢𝘯𝘺 𝘵𝘦𝘴𝘵𝘪𝘮𝘰𝘯𝘺 𝘢𝘣𝘰𝘶𝘵 𝘮𝘢𝘯𝘬𝘪𝘯𝘥, 𝘧𝘰𝘳 𝘩𝘦 𝘬𝘯𝘦𝘸 𝘸𝘩𝘢𝘵 𝘸𝘢𝘴 𝘪𝘯 𝘦𝘢𝘤𝘩 𝘱𝘦𝘳𝘴𝘰𝘯." (John 2:25) explains that there's no need for the testimony of any trusted counterparty when you can see what's in each person's submitted block of Bitcoin transactions.

And if you think of shorting Bitcoin, remember John 2:19: "𝘑𝘦𝘴𝘶𝘴 𝘢𝘯𝘴𝘸𝘦𝘳𝘦𝘥 𝘵𝘩𝘦𝘮, '𝘋𝘦𝘴𝘵𝘳𝘰𝘺 𝘵𝘩𝘪𝘴 𝘵𝘦𝘮𝘱𝘭𝘦, 𝘢𝘯𝘥 𝘐 𝘸𝘪𝘭𝘭 𝘳𝘢𝘪𝘴𝘦 𝘪𝘵 𝘢𝘨𝘢𝘪𝘯 𝘪𝘯 𝘵𝘩𝘳𝘦𝘦 𝘥𝘢𝘺𝘴.'"

The ordering is based on measures of neuro-correlates of the level of consciousness like neural entropy or perturbational complexity, not on how groovy it subjectively feels.

Copying from my Twitter response to Eliezer:

Anil Seth usefully breaks down consciousness into 3 main components:

1. level of consciousness (anesthesia < deep sleep < awake < psychedelic)

2. contents of consciousness (qualia — external, interoceptive, and mental)

3. consciousness of the self, which can further be broken down into components like feeling ownership of a body, narrative self, and a 1st person perspective.

He shows how each of these can be quite independent. For example, the selfhood of body-ownership can be fucked with using rubber arms and mirrors, narrative-self breaks with amnesia, 1st person perspective breaks in out-of-body experiences which can be induced in VR, even the core feeling of the reality of self can be meditated away.

Qualia such as pain are also very contextual: the same physical sensation can be interpreted positively in the gym or a BDSM dungeon, and as acute suffering if it's unexpected and believed to be caused by injury. Being a self, or thinking about yourself, is also just another perception (a product of your brain's generative model of reality), just like color or pain. I believe enlightened monks who say they experience selfless bliss, and I think it's equally likely that chickens experience selfless pain.

Eliezer seems to believe that self-reflection or some other component of selfhood is necessary for the existence of the qualia of pain or suffering. A lot of people believe this simply because they use the word "consciousness" to refer to both (and 40 other things besides). I don't know if Eliezer is making such a basic mistake, but I'm not sure why else he would believe that selfhood is necessary for suffering.

The "generalist" description is basically my dream job right until

>The team is in Berkeley, California, and team members must be here full-time.

Just yesterday I was talking to a friend who wants to leave his finance job to work on AI safety and one of his main hesitations is that whichever organization he joins will require him to move to the Bay. It's one thing to leave a job, it's another to leave a city and a community (and a working partner, and a house, and a family...)

This also seems somewhat inefficient in terms of hiring. There are many qualified AI safety researchers and Lightcone-aligned generalists in the Bay, but there are surely even more outside it. So all the Bay-based orgs are competing for the same people, all complaining about being talent-constrained above anything else. At the same time, NYC, Austin, Seattle, London, etc. are full of qualified people with nowhere to apply.

I'm actually not suggesting you should open this particular job to non-Berkeley people. I want to suggest something even more ambitious. NYC and other cities are crying out for a salary-paying organization that will do mission-aligned work and would allow people to change careers into this area without uprooting their entire lives, potentially moving on to other EA organizations later. Given that a big part of Lightcone's mission is community building, having someone start a non-Bay office could be a huge contribution that will benefit the entire EA/Rationality ecosystem by channeling a lot of qualified people into it.

And if you decide to go that route you'll probably need a generalist who knows people...

I'm not sure what's wrong, it works for me. Maybe change the https to http?

https://quillette.com/2021/05/13/the-sex-negative-society/

Googling "sex negative society quillette" should bring it up in any case.

rationality is not merely a matter of divorcing yourself from mythology. Of course, doing so is necessary if we want to seek truth...

I think there's a deep error here, one that's also present in the sequences. Namely, the idea that "mythology mindset" is something one should or can just get rid of, a vestige of silly stories told by pre-enlightenment tribes in a mysterious world.

I think the human brain does "mythological thinking" all the time, and it serves an important individual function of infusing the world with value and meaning alongside the social function of binding a tribe together. Thinking that you can excise mythological thinking from your brain only blinds you to it. The paperclip maximizer is a mythos, and the work it does in your mind of giving shape and color to complex ideas about AGI is no different from the work Biblical stories do for religious people. "Let us for the purpose of thought experiment assume that in the land of Uz lived a man whose name was Job and he was righteous and upright..."

The key to rationality is recognizing this type of thinking in yourself and others as distinct from Bayesian thinking. It's the latter that's a rare skill that can be learned by some people in specialized dojos like LessWrong. When you really need to get the right answer to a reality-based question you can keep the mythological thinking from polluting the Bayesian calculation — if you're trained at recognizing it and haven't told yourself "I don't believe in myths".

PS5 scalpers redistribute consoles away from those willing to burn time to those willing to spend money. Normally this would be a positive — time burned is just lost, whereas the money is just transferred from Sony to the scalpers who wrote the quickest bot. However, you can argue that gaming consoles in particular are more valuable to people with a lot of spare time to burn than to people with day jobs and money!

Disclosure: I'm pretty libertarian and have a full-time job, but because there weren't any good exclusives in the early months I decided to ignore the scalpers. I followed https://twitter.com/PS5StockAlerts and got my console at base price in April, just in time for Returnal. Returnal is excellent and worth getting the PS5 for even if it costs you a couple of hours or an extra $100.

Empire State of Mind

I want to second Daniel and Zvi's recommendation of New York culture as an advantage for Peekskill. An hour away from NYC is not so different from being in NYC — I'm in a pretty central part of Brooklyn and regularly commute an hour to visit friends uptown or further east in BK and Queens. An hour in traffic sucks, an hour on the train is pleasant. And being in NYC is great.

A lot of the Rationalist-adjacent friends I made online in 2020 have either moved to NYC in the last couple of months or are thinking about it, as rents have dropped by up to 20% in some neighborhoods and everyone is eager to rekindle their social life. New York is also a vastly better dating market for male nerds, given a slightly female-majority sex ratio and thousands of the smartest and coolest women on the planet, compared to the smaller and male-skewed Bay Area.

Peekskill is also 2 hours from Philly and 3 from Boston, which is not too much for a weekend trip. That could make it the Schelling point for East Coast megameetups/conferences/workshops since it's as easy to get to as NYC and a lot cheaper to rent a giant AirBnB in.

Won't Someone Think of the Children

I love living in Brooklyn, but the one thing that could make us move in the next year or two is a community of people from my tribe who are willing to help each other with childcare, from casual babysitting to homeschooling pods. I'm keenly following the news of where Rationalist groups are settling, especially those who plan to have kids (like us) or already have them. A critical mass of Rationalist parents in Peekskill may be enticing enough for us to move there, since we could have the combined benefits of living space, proximity to NYC, and the community support we would love.

I don't think that nudgers are consequentialists who also try to accurately account for public psychology. I think 99% of the time they are doing something for non-consequentialist reasons, and using public psychology as a rationalization. Ezra Klein pretty explicitly cares about advancing various political factions above mere policy outcomes, IIRC on a recent 80,000 Hours podcast Rob was trying to talk about outcomes and Klein ignored him to say that it's bad politics.

I understand; I think we have an honest disagreement here. I'm not saying that the media is cringe in an attempt to make it so, as a meta move. I honestly think that the current prestige media establishment is beyond reform, a pure appendage of power. Its impact can grow weaker or stronger, but it will not acquire honesty as a goal (and in fact, it seems to be giving up even on credibility).

In any case, this disagreement is beyond the scope of your essay. What I learn from it is to be more careful about calling things cringe or whatever in my own speech, and to see this sort of thing as an attack on the social reality plane rather than an honest report of objective reality.

Other people have commented here that journalism is in the business of entertainment, or in the business of generating clicks etc. I think that's wrong. Journalism is in the business of establishing the narrative of social reality. Deciding what's a gaffe and who's winning, who's "controversial" and who's "respected", is not a distraction from what they do. It's the main thing.

So it's weird to frame this as "politics is way too meta". Too meta for whom? Politicians care about being elected, so everything they say is by default simulacrum level 3 and up. Journalists care about controlling the narrative, so everything they say is by default simulacrum level 3 and up. They didn't aim at level 1 and miss; they only brush against level 1 on rare occasions, by accident.

Here are some quotes from our favorite NY Times article, Silicon Valley's Safe Space:

the right to discuss contentious issues

The ideas they exchanged were often controversial

even when those words were untrue or could lead to violence

sometimes spew hateful speech

step outside acceptable topics

turned off by the more rigid and contrarian beliefs

his influential, and controversial, writings

push people toward toxic beliefs

These aren't accidental. Each one of the bolded words just means "I think this is bad, and you better follow me". They're the entire point of the article — to make it so that it's social reality to think that Scott is bad.

So I think there are two takeaways here. One is for people like us, EAs discussing charity impact or Rationalists discussing life-optimization hacks. The takeaway for us is to spend less time writing about the meta and more about the object level. And then there's a takeaway about them, journalists and politicians and everyone else who lives entirely in social reality. And the takeaway is to understand that almost nothing they say is about objective reality, and that's unlikely to change.

I agree that advertising revenue is not an immediate driving force, something like "justifying the use of power by those in power" is much closer to it and advertising revenue flows downstream from that (because those who are attracted to power read the Times).

I loved the rest of Viliam's comment though, it's very well written and the idea of the eigen-opinion and being constrained by the size of your audience is very interesting.

Here's my best model of the current GameStop situation, after nerding out about it for two hours with smart friends. If you're enjoying the story as a class warfare morality play you can skip this, since I'll mostly be talking finance. I may look really dumb or really insightful in the next few days, but this is a puzzle I wanted to figure out. I'm making this public so posterity can judge my epistemic rationality skillz. I don't have a real financial stake either way.

Summary: The longs are playing the short game, the shorts are playing the long game.

At $300, GameStop is worth about $21B. A month ago it was worth $1B, so there's $20B at stake between the long-holders and short sellers.

Who's long right now? Some combination of WSBers on a mission, FOMOists looking for a quick buck, and institutional money (i.e., other hedge funds). The WSBers don't know fear, only rage and loss aversion. A YOLOer who bought at $200 will never sell at $190, only at $1 or the moon. FOMOists will panic, but they're probably a majority and today's move shook them off. The hedgies care more about risk; they may hedge with put options or trust that they'll dump the stock faster than the retail traders if the line breaks.

The interesting question is who's short. Shorts can probably expect to need a margin equal to ~twice the current share price, so anyone who shorted too early or for 50% of their bankroll (like Melvin and Citron) got squeezed out already. But if you shorted at $200 and for 2% of your bankroll, you can hold for a long time. The current borrowing fee is 31% APR, or roughly 0.1% a day. I think most of the shorts are in the latter category. Here's why:
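To make the "small short can hold for a long time" point concrete, here is a rough sketch of carrying costs using the 31% APR and ~2x margin figures above. It assumes simple (non-compounding) daily accrual on the short's current value and treats the position size as a fraction of bankroll at today's price; the function name `short_position_stats` is hypothetical.

```python
def short_position_stats(short_fraction: float, apr: float = 0.31,
                         margin_multiple: float = 2.0, days: int = 30):
    """Rough cost of carrying a short.

    short_fraction: current value of the short as a fraction of bankroll.
    Returns (daily borrow rate, borrow fees over `days` as a fraction of
    bankroll, margin tied up as a fraction of bankroll).
    Assumes simple daily accrual at the current price (no compounding).
    """
    daily_rate = apr / 365
    fee_cost = short_fraction * daily_rate * days
    margin = short_fraction * margin_multiple
    return daily_rate, fee_cost, margin


# A small short (2% of bankroll) vs. an oversized one (50% of bankroll).
for fraction in (0.02, 0.50):
    daily, fees, margin = short_position_stats(fraction)
    print(f"{fraction:.0%} short: {daily:.3%}/day, "
          f"30-day fees {fees:.2%} of bankroll, margin {margin:.0%} of bankroll")
```

Under these assumptions the 2% short pays on the order of 0.05% of bankroll per month in fees and ties up ~4% in margin, while the 50% short already needs its entire bankroll as margin, so any further rise forces it out.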

Short interest has stayed at 71M shares even as this week saw more than 500M shares change hands. I think this means that new shorts are happy to take the places of older shorts who cash out; they're only constrained by the fact that ~71M are all that's available to borrow. Naked shorts aren't really a thing, forget about that. So everyone short $GME now is short because they want to be; if they wanted to get out they could. In a normal short squeeze the available float is constrained, but this hasn't really happened with $GME.

WSBers can hold the line but can't push higher without new money: either money that takes some of these 71M shares out of borrowing circulation, or money that pushes the price up so fast that the shorts get margin-called or panic. For the longs to win, they probably need something dramatic to happen soon.

One dramatic thing that could happen is that people who sold the huge amount of call options expiring Friday aren't already hedged and will need to buy shares to deliver. It's unclear if that's realistic; most option sellers are market makers who don't stay exposed for long. I don't think there were options sold above the current price of $320, so there's no gamma left to squeeze.

I think $GME getting taken off retail brokerages really hurt the WSBers. It didn't cause panic, but it slowed the momentum they so dearly needed and scared away FOMOists. By the way, I don't think brokers did it to screw with the small people; those are their clients, after all. It just became too expensive for brokerages to make the trade because they need to post clearing collateral for two days. They were dumb not to anticipate this, but I don't think they were bribed by Citadel or anything.

For the shorts to win, they just need to wait it out and not get over-greedy. Eventually the longs will either get bored or turn on each other: with no squeeze, this becomes just a pyramid scheme. If the shorts aren't knocked out tomorrow morning by a huge flood of FOMO retail buys, I think they'll win over the next weeks.

This is a self-review, looking back at the post after 13 months.

I have made a few edits to the post, including three major changes:

1. Sharpening my definition of what counts as "Rationalist self-improvement" to reduce confusion. This post is about improved epistemics leading to improved life outcomes, which I don't want to conflate with some CFAR techniques that are basically therapy packaged for skeptical nerds.

2. Addressing Scott's "counterargument from market efficiency" that we shouldn't expect to invent easy self-improvement techniques that haven't been tried.

3. Talking about selection bias, which was the major part missing from the original discussion. My 2020 post The Treacherous Path to Rationality is somewhat of a response to this one, concluding that we should expect Rationality to work mostly for those who self-select into it and that we'll see limited returns to trying to teach it more broadly.

The past 13 months also provided more evidence in favor of epistemic Rationality being ever more instrumentally useful. In 2020 I saw a few Rationalist friends found successful startups and several friends cross the $100k mark for cryptocurrency earnings. And of course, LessWrong led the way on early and accurate analysis of most COVID-related things. One result of this has been increased visibility and legitimacy, and of course another is that Rationalists have a much lower number of COVID cases than all other communities I know.

In general, this post is aimed at someone who discovered Rationality recently but is lacking the push to dive deep and start applying it to their actual life decisions. I think the main point still stands: if you're Rationalist enough to think seriously about it, you should do it.

Trade off to a promising start :P

There's a whole lot to respond to here, and it may take the length of Surfing Uncertainty to do so. I'll point instead to one key dimension.

You're discussing PP as a possible model for AI, whereas I posit PP as a model for animal brains. The main difference is that animal brains are evolved and occur inside bodies.

Evolution is the answer to the dark room problem. You come with prebuilt hardware that is adapted to a certain niche, which is equivalent to modeling it. Your legs are a model of the shape of the ground and the size of your evolutionary territory. Your color vision is a model of berries in a bush, and your fingers are a model of picking them. Your evolved body is a hyperprior you can't update away. In a sense, you're predicting all the things that are adaptive: being full of good food, in the company of allies and mates, being vigorous and healthy, learning new things. Lying hungry in a dark room creates a persistent error in your highest-order predictive models (the evolved ones) that you can't change.

Your evolved prior supposes that you have a body, and that the way you persist over time is by using that body. You are not a disembodied agent learning things for fun or getting scored on some limited test of prediction or matching. Everything your brain does is oriented towards acting on the world effectively.

You can see that perception and action rely on the same mechanism in many ways, starting with the simple fact that when you look at something you don't receive a static picture. Rather, you constantly saccade and shift your eyes, contract and dilate your pupils, adjust the focus of your lenses, move your head around, and also automatically compensate for all of this motion. None of this is relevant to an AI that processes images fed to it "out of the void", and whose main objective function is something other than maintaining homeostasis of a living, moving body.

Zooming out, Friston's core idea is a direct consequence of thermodynamics: for any system (like an organism) to persist in a state of low entropy (e.g. 98°F) in an environment that is higher entropy but contains some exploitable order (e.g. calories aren't uniformly spread in the universe but concentrated in bananas), it must exploit this order. Exploiting it is equivalent to minimizing surprise, since if you're surprised there's some pattern of the world that you failed to make use of (free energy).
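The link between free energy and surprise can be stated compactly using the standard variational formulation. The notation here is an assumption, not taken from the comment: $o$ is an observation, $s$ the hidden states of the world, $p(o, s)$ the organism's generative model, and $q(s)$ its current best guess about those states.

$$
F[q] \;=\; \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big]
\;=\; D_{\mathrm{KL}}\big[\,q(s)\,\|\,p(s \mid o)\,\big] \;-\; \ln p(o)
\;\ge\; -\ln p(o)
$$

Since the KL divergence is never negative, free energy is an upper bound on surprise, $-\ln p(o)$. On one common reading, updating models or perception lowers $F$ by shrinking the KL term, while acting on the world changes $o$ itself so that it becomes less surprising.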

Now apply this basic principle to your genes persisting over an evolutionary time scale and your body persisting over the time scale of decades, and this sets the stage for PP applied to animals.

Off the top of my head, here are some new things it adds:

1. You have 3 ways of avoiding prediction error: updating your models, changing your perception, acting on the world. Those are always in play and you often do all three in some combination (see my model of confirmation bias in action).

2. Action is key, and it shapes and is shaped by perception. The map you build of any territory is prioritized and driven by the things you can act on most effectively. You don't just learn "what is out there" but "what can I do with it".

3. You care about prediction over the lifetime scale, so there's an explore/exploit tradeoff between potentially acquiring better models and sticking with the old ones.

4. Prediction goes from the abstract to the detailed. You perceive specifics in a way that aligns with your general model, rarely in contradiction.

5. Updating always goes from the detailed to the abstract. This is Kuhn's paradigm shifts, but for everything: you don't change your general theory and then update the details, you accumulate error in the details and then the general theory switches all at once to slot them into place.

6. In general, your underlying models are a distribution but perception is always unified, whatever your leading model is. So when perception changes it does so abruptly.

7. Attention is driven in a Bayesian way, to the places that are most likely to confirm/disconfirm your leading hypothesis, balancing the accuracy of perceiving the attended detail correctly and the leverage of that detail to your overall picture.

8. Emotions through the lens of PP.

9. Identity through the lens of PP.

10. The above is fractal, applying at all levels from a small subconscious module to a community of people.
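Points 5 and 6 can be illustrated with a toy sketch. It assumes just two competing hypotheses, A (the current paradigm, prior 0.9) and B, and a stream of anomalous details that are each twice as likely under B. The numbers and the name `perceive` are hypothetical, and this is plain Bayesian updating, not a full predictive-processing model: the belief distribution shifts gradually, but the "perceived" hypothesis (the leading one) flips all at once.

```python
import math


def perceive(prior_a: float, likelihood_a: float, likelihood_b: float,
             n_details: int):
    """Return (step, P(A), perceived hypothesis) after each anomalous detail."""
    log_odds = math.log(prior_a / (1 - prior_a))  # log of P(A) / P(B)
    step_update = math.log(likelihood_a / likelihood_b)  # each detail's evidence
    history = []
    for step in range(1, n_details + 1):
        log_odds += step_update
        p_a = 1 / (1 + math.exp(-log_odds))  # posterior probability of A
        perceived = "A" if p_a > 0.5 else "B"  # perception = leading model only
        history.append((step, p_a, perceived))
    return history


for step, p_a, perceived in perceive(0.9, 0.3, 0.6, 5):
    print(f"detail {step}: P(A) = {p_a:.2f}, perceived: {perceived}")
```

P(A) drifts down steadily with every detail, yet the perceived hypothesis stays A for three details and then switches to B in a single step.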

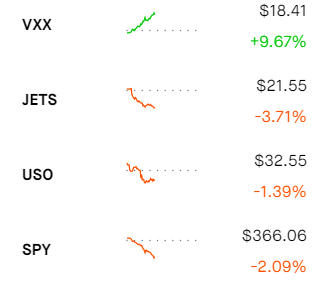

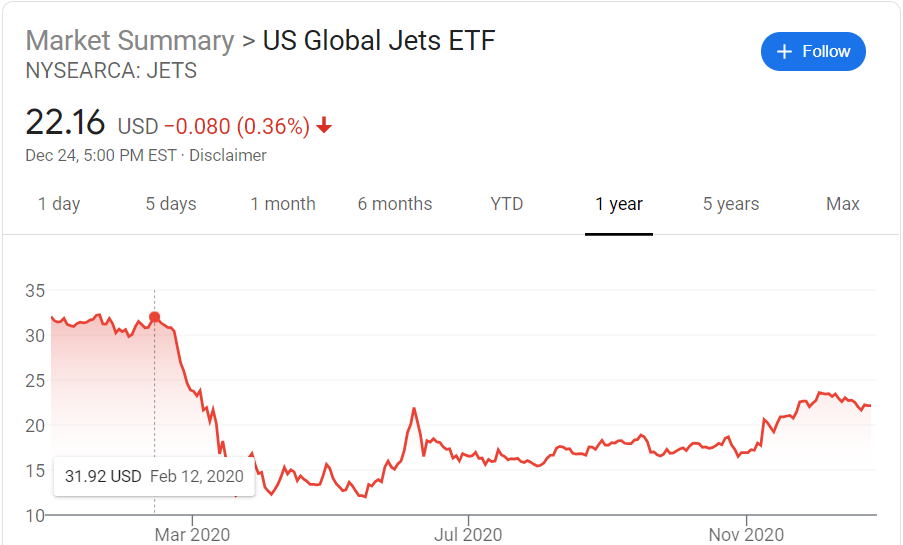

The new strain has been confirmed in the US and the vaccine rollout is still sluggish and messed up, so the above are in effect. The trades I made so far are buying out-of-the-money calls on VXX (volatility) and puts on USO (oil) and JETS (airlines), all expiring in February-March. I'll hold until the market has a clear, COVID-related drop or until these options all expire worthless and I take the capital-loss write-off. And I'm HODLing all crypto, although that's not particularly related to COVID. I'm not in any way confident that this is wise/useful, but people asked.
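The shape of these bets can be seen from a simple payoff-at-expiry sketch. It assumes standard US equity options with a 100-share multiplier and ignores fees, taxes, and early exercise; the strike and premium below are hypothetical, not the actual trades. An out-of-the-money option loses its whole premium unless the underlying moves past the strike by more than the premium paid.

```python
# Assumption: standard US equity options, 100 shares per contract.
CONTRACT_MULTIPLIER = 100


def long_put_pnl(spot_at_expiry: float, strike: float, premium: float,
                 contracts: int = 1) -> float:
    """P/L at expiry of buying puts: profitable only below strike - premium."""
    intrinsic = max(strike - spot_at_expiry, 0.0)
    return (intrinsic - premium) * contracts * CONTRACT_MULTIPLIER


def long_call_pnl(spot_at_expiry: float, strike: float, premium: float,
                  contracts: int = 1) -> float:
    """P/L at expiry of buying calls: profitable only above strike + premium."""
    intrinsic = max(spot_at_expiry - strike, 0.0)
    return (intrinsic - premium) * contracts * CONTRACT_MULTIPLIER


# Hypothetical put: strike $30, bought for $1.50 per share.
for spot in (35.0, 30.0, 28.5, 25.0):
    print(f"spot ${spot:.2f}: P/L ${long_put_pnl(spot, 30.0, 1.5):+.2f}")
```

The loss is capped at the premium (the "expire worthless" case), while the gain grows the further the underlying falls below break-even, which is what makes these trades a cheap bet on a sharp COVID-related drop.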

I don't think it was that easy to get to the saturated end with the old strain. As I remember, the chance of catching COVID from a sick person in your household was only around 20-30%, and at superspreader events it was still just a small minority of total attendees that were infected.

The VXX is basically at multi-year lows right now, so one of the following is true:

1. Markets think that the global economy is very calm and predictable right now.

2. I'm misunderstanding an important link between "volatility = unpredictability of world economics" and "volatility = premium on short-term SP500 options".

Some options and their 1-year charts:

JETS - Airline ETF

XLE - Energy and oil company ETF

AWAY - Travel tech (Expedia, Uber) ETF

Which would you buy put options on, and with what expiration?

Those are good points. I think competition (real and potential) is always at least worth considering in any question of business, and I was surprised the OP didn't even mention it. But yes, I can imagine situations where you operate with no relevant competition.

But this again would make me think that pricing and the story you tell a client is strictly secondary to finding these potential clients in the first place. If they were the sort of people who go out seeking help, you'd have competition, so that means you have to find people who don't advertise their need. That seems to be the main thing the author is doing and the value they're providing: finding people who need recruitment help and don't realize it.

This pricing makes sense if your only competition is your client just going at it by themselves, in which case you clearly demonstrate that you offer a superior deal. But job seekers have a lot of consultants/agencies/headhunters they can turn to and I'd imagine your price mostly depends on the competition. In the worst case, you not only lose good clients to cheaper competition, but get an adverse selection of clients who would really struggle to find a job in 22 weeks and so your services are cheap/free for them.

This statement for example:

> Motivating you to punish things is what that part of your brain does, after all; it’s not like it can go get another job!

I'm coming more from a predictive processing / bootstrap learning / constructed emotion paradigm in which your brain is very flexible about building high-level modules like moral judgment and punishment. The complex "moral brain" that you described is not etched into our hardware and it's not universal, it's learned. This means it can work quite differently or be absent in some people, and in others it can be deconstructed or redirected — "getting another job" as you'd say.

I agree that in practice lamenting the existence of your moral brain is a lot less useful than dissolving self-judgment case-by-case. But I got a sense from your description that you see it as universal and immutable, not as something we learned from parents/peers and can unlearn.

P.S.

Personal bias alert — I would guess that my own moral brain is perhaps in the 5th percentile of judginess and desire to punish transgressors. I recently told a woman about EA and she was outraged about young people taking it on themselves to save lives in Africa when billionaires and corporations exist who aren't helping. It was a clear demonstration of how different people's moral brains are.

I've come across a lot of discussion recently about self-coercion, self-judgment, procrastination, shoulds, etc. Having just read it, I think this post is unusually good at offering a general framework applicable to many of these issues (i.e., that of the "moral brain" taking over). It's also peppered with a lot of nice insights, such as why feeling guilty about procrastination is in fact moral licensing that enables procrastination.

While there are many parts of the post that I quibble with (such as the idea of the "moral brain" as an invariant specialized module), this post is a great standalone introduction and explanation of a framework that I think is useful and important.