The Origin of Consciousness Reading Companion, Part 1

Post by Jacob Falkovich (Jacobian), 2020-04-06


Cross-posted, as always, from Putanumonit.

As I threatened two weeks ago, the Bicameral Book Club met for its first session to discuss the first few chapters of The Origin of Consciousness in the Breakdown of the Bicameral Mind by Julian Jaynes. The book club will meet weekly, and every week I’ll write up a summary of the conversation and my follow-up thoughts. I have a lot of thoughts about consciousness; this post is over 10% as long as the book chapters it covers.

This is not intended to be a review of the book but rather a reading companion: if you can’t join our book club live, you can follow along or read this whenever you read the book.

I’m not going to track who came up with each thought below on the book club calls. Everything below is the result of a collective effort, and I’m grateful to all the participants.

Introduction: The Problem of Consciousness

Jaynes goes over the history of failed scientific conceptions of consciousness in a series of vignettes on views ranging from Associationism to Skinnerian Behaviorism. There’s a strong whiff of straw about this section, but it’s an important reminder that for most of its modern history academic psychology refused to take the study of consciousness seriously. Christof Koch writes in his own book on the topic that “in the late 1980s, writing about consciousness was taken as a sign of cognitive decline.” I think that one of Jaynes’ historic contributions is mainstreaming consciousness as a topic of interest for both science and pop science. The Origin, published in 1976, may have paved the way for the work of Koch, Dennett, Sacks, et al.

Foreshadowing the rest of the book, the historical review notes how every age used metaphors from whichever science was prominent at the time to describe consciousness. To the ancient Greeks, it was an open space like the sea. When geology was popular, consciousness was a structure of hidden layers, and in the steam age it was “a boiler of straining energy”.

A survey of the book club revealed that, without exception, the prevailing metaphor today is the brain as a computer. While this metaphor surely introduces its own biases, at least the world of computing is rich enough to provide variety: algorithms, global workspace theory, CPU allocation, input/output, etc.

Speaking of bias, it’s notable that Jaynes’ first scientific foray into consciousness research was trying to demonstrate consciousness-as-learning in mimosa plants and protozoa. Three decades after his failure to demonstrate that consciousness extends even to plants, Jaynes is arguing for a maximally opposed view, denying consciousness not just to all animals but also to Homer.

What bias do I bring to the topic? Perhaps my main one is a Rationalist’s faith in reductionism and a failure to be moved by the hardness of the Hard Problem of Consciousness. I’m not sure how David Chalmers, having agreed that all ontologies are fake, can claim that no scientific discovery or conceptual advance could ever bridge the gap in understanding consciousness. My instinct is to follow the discoveries and the concepts; I bet that as they accumulate, the mysteriousness of consciousness will dissolve just as the mysteriousness of light, life, etc. did before it.

Chapter 1: The Consciousness of Consciousness

Is Jaynes the worst ever at naming books and chapters? I’m getting tired of typing out the word “consciousness”, and he wrote the book in pencil.

Throughout this chapter, I felt that Jaynes is pulling a fast one on the readers. He demonstrates the irrelevance of consciousness to tasks like judging which hand holds a heavier weight by asking if you remember making the determination consciously. But this is asking two things of your consciousness: to judge, and also to remember judging. Those are different tasks! Blackout drunk people presumably have subjective experience in the moment, even though their hungover consciousness can’t recall which of the poor judgments made the night before it contributed to.

The experiencing self is not the remembering self. They’re barely even friends.

Jaynes makes the case, which informed introspection confirms, that consciousness is not on 100% of the time, and it’s not doing 100% of the work. But “not 100%” doesn’t mean zero, and it’s very hard to tell where the truth lies in between the two.

The book club estimated being conscious between 0% and 30% of the time while doing zone-out tasks like driving, and 60% to 90% of the time for engaging tasks. But even while driving a familiar road, one is conscious of some things once in a while, like a memory triggered by the song on the radio. If consciousness “checked in” for a brief moment every few seconds, would we even notice or remember?

We’ve known for a long time that tasks requiring precise body movements like playing tennis or the piano are performed best when one thinks about their movement the least. Jaynes draws the causal arrow in the intuitive direction: lack of awareness -> good performance. But what if it’s the other way around?

Predictive processing tells us that the only information actually propagated bottom-up through our neurons is prediction error. When a musician sits down to play a piano piece, he knows exactly how it should sound, and so he would only snap into awareness if he missed a note. Poor performance -> conscious awareness, and once awareness is turned on it can reflect and fix the situation.

Why would consciousness inhibit the performance of tasks like tennis or piano? Here’s my half-baked hypothesis.

A large role of consciousness, and a possible reason for its evolution, may be the allocation of System 2-type thinking to various tasks. System 2 thinking is detail-oriented, serial, demanding of working memory, and slow. It’s very resource-intensive, and so our brains generally prefer not to do it. Once in a while, some stimulus or subconscious process generates enough energy to reach conscious awareness, which can then allocate some hard thinking to deal with the issue at hand. System 2 is also the only one that can do cognitive decoupling: imagining logical counterfactuals abstracted from their current context.

But System 2 is way too slow to figure out where your left finger should go in the middle of playing an allegro passage. Anyone can play a concerto at a tempo of one note every 3 seconds. What makes a physical skill impressive is the speed of precise movement, and speed requires training your System(s) 1.

I probably like this hypothesis more than most because I feel like I came up with it independently, so take it with a grain of salt. This theory also implies a pretty restricted domain of consciousness: ancient Greeks had it, but most animals don’t since they don’t demonstrate anything that looks like System 2 thinking.

The section titled Consciousness Not a Copy of Experience is ironic because my own experience is nothing at all like a copy of Jaynes’.

Consider the following problems: Does the door of your room open from the right or the left? Which is your second longest finger? At a stoplight, is it the red or the green that is on top? How many teeth do you see when brushing your teeth? What letters are associated with what numbers on a telephone dial? […]

I think you will be surprised how little you can retrospect in consciousness on the supposed images you have stored from so much previous attentive experience.

I had absolutely no problem answering any of the questions above, and I’m not a particularly observant person, as my wife can attest, to her frustration.

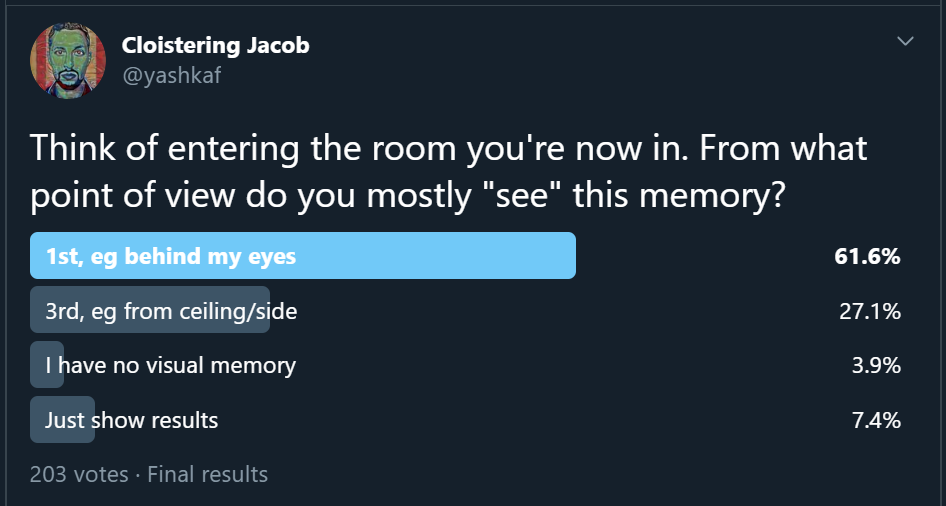

Think, if you will, of when you entered the room you are now in. Are the images of which you have copies the actual sensory fields? Don’t you have an image of yourself coming through one of the doors, perhaps even a bird’s-eye view of one of the entrances?

No. No, I fucking don’t. What kind of sick pervert “remembers” themselves from a third-person view? I’ve seen hundreds of minutes of myself on camera, played hundreds of hours of third-person video games, and yet my memories are all very much in the first-person.

OK, it turns out that roughly a quarter of all people are third-person perverts. I wonder how aware Jaynes was that the flavor of his subjective experience is far from universal, and that he may be completely missing out on some universal experiences. I should keep this in mind for the rest of the book.

This section is arguing against a strawman version of consciousness-as-tape-recorder. We know that memory is imperfect, changes over time, and gets mixed up with confabulations. It also has a lot of gaps by necessity, remembering only bits and pieces of your moment-to-moment sensory experience. And what if I remembered something I imagined or hallucinated, is that a “real” memory or a false one?

But since a lot of Jaynes’ arguments in this chapter rely on a lack of memory to disprove the role of consciousness, the fallibility of memory weakens those arguments rather than strengthening them.

Chapter 2: Consciousness

This chapter sketches out an argument in three parts:

- All language is based on metaphor, on analogizing something new to something familiar based on similarities. Metaphor is not only the main way we communicate, it is the main way we understand reality.

- Consciousness is a metaphor-based analog of the real world, a space where we can play out mental actions that correspond to behavior in reality.

- 1+2 = Consciousness is based on language, and it must have come after language.

Let’s address these in turn.

Language

I found the idea of language being based on metaphors very compelling. The foundational blocks of today’s languages come from ancient metaphors (“to be” comes from the Sanskrit “bhu” which means “to grow”), and almost all the new concepts our language accrues are metaphors (“coronavirus” means “the thing that is like poison surrounded by spikes like a crown”).

But if you use “metaphor” itself as a metaphor and stretch it to include all kinds of understanding-by-analogy, it becomes obvious that metaphor is not limited to language. Consider visual metaphors: a caveman drawing a bat as a mouse with wings applies the same metaphor as a modern German calling it Fledermaus (“flutter-mouse”). Even a simple gesture like showing your palms to defuse conflict is a metaphor for a situation where your hands could’ve held a rock.

What separates language from other systems of metaphors? The two relevant differences I can think of are:

- Language is used to communicate with other people, not just to organize things in your own mind. Why would this make it the key to consciousness? Well, we know that a lot of simple associative learning and reasoning-by-similarity happens unconsciously based on repeated experience. But another person can’t transmit direct experience, only words. And inferring from those words someone else’s experience, one that is novel to you personally, requires consciousness.

- Unlike a picture or a gesture, language uses pure symbols (words) that have no inherent connection to their referent. This is what allows language to evolve and grow in complexity. Perhaps this is the step that requires consciousness — linking observable objects to purely mental constructs.

Analogs

Jaynes explains the difference between a model (like Bohr’s solar system-like atom, where no particular electron corresponds to a particular planet) and an analog (like a map, where every region corresponds to a particular plot of land). He writes that consciousness is an analog of the real world, not a model. Some people use the metaphor of the map and the territory to say “map” when they mean “model”, but I think the distinction is important.

Models can clearly live in your subconscious, but how about analogs? Think of mimicking the motions of a humanoid cartoon character on TV. You can do so automatically, with your subconscious brain holding an analog between your body parts and the shapes created by pixels on a screen.

But the main argument presented isn’t that all mental analogs are conscious, but that consciousness is an analog of reality. In Jaynes’ words:

We have said that consciousness is an operation rather than a thing, a repository, or a function. It operates by way of analogy, by way of constructing an analog space with an analog “I” that can observe that space, and move metaphorically in it. It operates on any reactivity, excerpts relevant aspects, narratizes and conciliates them together in a metaphorical space where such meanings can be manipulated like things in space. Conscious mind is a spatial analog of the world and mental acts are analogs of bodily acts.

Consider the mental act of multiplying 37 by 12. It seems to me that multiplication doesn’t have to be “seen in physical space”, doesn’t contain an observing self, is free of narrative, and isn’t directly analogous to any physical behavior. When I multiply I don’t think of myself stacking blocks in towers, I just manipulate mental objects with no context. And yet multiplying numbers in my head is an intensely conscious process, requiring full awareness and attention on the task. I can’t multiply in my head while distracted, and the process always leaves a trace in memory at least in the short-term.

Of course, a pocket calculator can multiply two numbers too. Presumably what makes me conscious is that there is something it is like to be me while I’m doing the math, and nothing it is like to be the calculator. But I’m having a hard time seeing how this fits into Jaynes’ theory.

If anything, it seems that mental objects can fall on a ladder of abstraction, going from direct sensory experiences (the weight of a rock in your hand), to language (the word “rock” standing in for a category of things), to things like math and logic. Throwing a rock requires little consciousness, talking about it requires more, deriving the formula of its flight takes the most.

Consciousness Following Language

I challenged the book club to either steelman Jaynes’ assertion that #3 follows from #1 and #2 or point out where they think I mischaracterized the chapter’s argument. No one did either. When we polled how much credence we give Jaynes’ assertion that consciousness appeared in humans after language, the median was my own 35%, with a range of 1% to 80%. After writing this post, I’m down to 20% at most.

A good test of the theory would be a feral child growing up without language, someone like Genie, a girl who spent the first 13 years of her life locked alone in a room by her father before being discovered in 1970. It’s hard to assess how conscious a teenager is as they’re learning simple words for the first time, but Genie’s case can provide some clues.

On the pro-Jaynes side, Genie didn’t react to temperature, even at extremes, until several years after rejoining society. She also had trouble distinguishing herself from others, as evidenced by her ongoing confusion with the pronouns “you” and “I”. If bodily sensations and a sense of self are core markers of consciousness, Genie was deficient in both in her pre-lingual state. But does that mean that a monk in the ninth jhana, having detached from bodily sensations and dissolved their sense of self, is unconscious?

On the opposing side is the fact that upon learning rudimentary language, Genie recalled and retold some of her experiences from her pre-lingual days. Unless she was completely confabulating, this would point to conscious memories being created in the absence of language. A similar thing can be observed in deaf kids who grow up in a family that doesn’t use sign language and learn a language only later in life.

The assertion that consciousness follows language also implies that you can have language without consciousness. Jaynes’ own argument that real language is built on conceptual metaphors would seem to contradict that.

Consider a concept like “decision tree”. You can think of a tree and automatically come up with various associations you have with the term. Same with the idea of making decisions. But understanding the phrase for the first time, realizing how the process of making discrete decisions is analogous to a squirrel running up ever-narrower branches, seems to me to require consciousness.

Perhaps these conceptual, consciousness-requiring metaphors are a recent addition to human language and thought, and that would be the book’s argument. But on the evidence presented by the book so far, metaphoric language requires consciousness more than the other way around.

I started this post by noting that Jaynes’ greatest contribution may have been paving the way for serious scientific inquiry into consciousness in the last few decades. And perhaps that could only be achieved through a genius-level application of Cunningham’s Law. Jaynes’ conclusions are provocative, and if his arguments are wrong, they are wrong in subtle enough ways that refuting them requires serious thought and research. So far I’m not convinced of Jaynes’ theory, but I’ve also never spent as much time thinking hard about consciousness as I have in the last week.

1 comment

Comment by mako yass (MakoYass), 2020-04-06

I look forward to seeing attentive scrutiny turned to the later chapters, the claims of evidence of shocking historical neurodiversity. When people criticise the book, they're usually talking about the first few chapters; to me, that is not half as interesting as the claims about the strata of the human mind. If deliberative thought really has only been relatively recently evolved and then shunted (by further evolution? Or by a rapidly spreading global psycholinguistic monoculture?) into a unified self-transparent system, that would explain a lot of things.