Confirmation Bias in Action

post by Jacob Falkovich (Jacobian) · 2021-01-24T17:38:08.873Z · 1 comment

Cross-posted, as always, from Putanumonit.

The heart of Rationality is learning how to actually change your mind, and the biggest obstacle to changing your mind is confirmation bias. Half the biases on the laundry list are either special cases of confirmation bias or generally helpful heuristics that become delusional when combined with confirmation bias.

And yet, neither the popular nor the Rationalist literature does a good job of explicating it. It’s defined as “the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one’s prior beliefs or values”. The definition is a good start, but it doesn’t explain why this tendency arises or even why the four verbs (search, interpret, favor, recall) go together. Academic papers are more often operationalizing pieces of confirmation bias in strange ways to eke out their p-values than offering a compelling theory of the phenomenon.

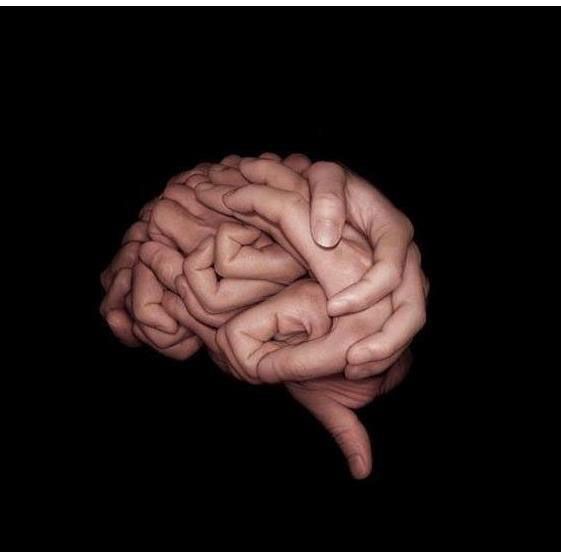

My goal with this post is to reconceptualize confirmation bias (CB) using the paradigm of predictive processing, with particular focus on the centrality of action. This frames CB as a phenomenon of human epistemology, arising in brains designed to manage our bodies and move them to manipulate the world. This view of CB wouldn’t apply to, for example, a lonely oracle AI passively monitoring the world and making predictions. But it applies to us.

My theory is speculative and incomplete, and I don’t have a mountain of experimental data or neuroimaging to back it up. But if you’re somewhat familiar with both predictive processing (as you will be by the end) and confirmation bias, I think you’ll find that it points in the right direction.

Inadequate Explanations

Wikipedia offers four explanations of confirmation bias. All are somewhat plausible, but all are also lacking in important ways and don’t offer a comprehensive picture even when combined.

1. Cognitive vs. motivational

Our brain has limited capacity to hold and compare multiple alternatives simultaneously and seeks lazy shortcuts. For example, it’s easier to answer “does this new data obviously contradict my held beliefs and force me to change them?” than to do the hard work of inferring to what extent the new data supports or opposes various hypotheses and updating incrementally. We’re also motivated to believe things that make us happy.

Our brains are lazy and do use shortcuts, but this doesn’t explain why we’re biased towards things we already believe. Wouldn’t it be easier to change your mind in the face of compelling data than to come up with labored rationalizations? A lazy brain could still use its limited capacity to optimally search for truth, instead of searching for confirmation.

2. Cost-benefit

This mostly explains why it can be rational to ask questions that anticipate a positive answer, such as in the Wason experiments. Wason coined the term “confirmation bias” and studied it in a limited context, but it’s a small part of CB as broadly construed. People fall down rabbit holes of ideology, conspiracy theories, and their own made up fantasies, becoming utterly immune to evidence and argument. This seems more important than some positivity bias when playing “20 questions”.

3. Exploratory vs. confirmatory

This model focuses on the social side, positing the importance of justifying your beliefs to others. People will only consider competing hypotheses critically when they anticipate having to explain themselves to listeners who are both well-informed and truth-seeking, which is rare. In most cases, you can just tell the crowd what it wants to hear or, in the case of a hostile audience, reject the need for explanation outright.

This is certainly an important component of confirmation bias, but it cannot be the whole story. Our brains are hungry infovores from the moment we’re born; if our sole purpose was to signal conformity then human curiosity would not exist.

4. Make-believe

“[Confirmation bias has] roots in childhood coping through make-believe, which becomes the basis for more complex forms of self-deception and illusion into adulthood”.

My goal is not to disprove these four models, but to offer one that puts more meat on the confirmation bias bone: how it works, when we’ll see it, how to fight it. Before we get there, we need a quick tour of the basics of predictive processing (PP) to set us up. I won’t get too deep into the nitty-gritty of PP or list the evidence for its claims; follow the links if you want more detail.

Active Prediction

Let’s start with basic physics: for an ordered system to persist over time in a less-ordered environment it must learn and exploit whatever order does exist in the environment. Replace ‘disorder’ with ‘entropy’ to make it sound more physicky if you need to.

For a human this means that the point of life is roughly to keep your body at precisely 37°C as long as possible in an environment that fluctuates between hot and cold. Love, friendship, blogging — these are all just instrumental to that goal. If you deviate from 37°C long enough, you will no longer persist.

If the world was completely chaotic, the task of keeping yourself at 37°C would be (will be) impossible. Luckily, there is some exploitable order to the world. For example: calories, which you need to maintain your temperature, aren’t smeared uniformly across the universe but concentrated in cherries and absent from pebbles.

To persist, you must eat a lot of cherries and few pebbles. This is equivalent to correctly predicting that you will do so. Evolution gave you this “hyperprior”: those animals who ate pebbles, or nothing at all, died and didn’t become your ancestors. If you are constantly surprised, i.e. if you bite on things not knowing what they might be or bite down on pebbles expecting cherries, you will not persist. Surprisal means that you are leaving free energy on the table, literally so in the case of uneaten cherries.

You have three ways of making sure your prediction of your sensory inputs and your sensory inputs are the same:

- Priors: change your perception to match your model of reality. For example, you know that shadows make things appear darker and so your mind perceives square A to be darker than B in the picture below.

- Update: change your model based on the raw inputs. For example, you can zoom in on the squares and see that they’re the same color.

- Action: change the world to generate the inputs you expect. For example, the act of painting the squares pink starts by predicting that you will perceive them as pink, and then moving your pink brush until the input is close enough to what you expect.
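The three options above can be sketched in a few lines of Python. This is a toy under loud assumptions: perception, model, and world are each collapsed to a single number, and the function names and the `trust_in_prior`, `learning_rate`, and `effort` parameters are hypothetical knobs invented for illustration, not part of any formal predictive processing model.

```python
def prediction_error(predicted: float, sensed: float) -> float:
    """Gap between what the brain expected and what it got."""
    return abs(predicted - sensed)


def perceive_with_prior(predicted: float, sensed: float, trust_in_prior: float = 0.8) -> float:
    """Priors: the percept is pulled toward the prediction (hypothetical weighting)."""
    return trust_in_prior * predicted + (1 - trust_in_prior) * sensed


def update_model(predicted: float, sensed: float, learning_rate: float = 0.5) -> float:
    """Update: the prediction moves toward the raw input."""
    return predicted + learning_rate * (sensed - predicted)


def act_on_world(predicted: float, world_state: float, effort: float = 0.5) -> float:
    """Action: the world is pushed toward the prediction."""
    return world_state + effort * (predicted - world_state)


predicted, sensed = 1.0, 0.0  # e.g. "the square is pink" vs. a square that is not pink yet

print(prediction_error(predicted, sensed))                                  # before anything changes
print(prediction_error(predicted, perceive_with_prior(predicted, sensed)))  # after leaning on the prior
print(prediction_error(update_model(predicted, sensed), sensed))            # after updating the model
print(prediction_error(predicted, act_on_world(predicted, sensed)))         # after acting on the world
```

All three routes shrink the same error term; they differ only in which side of the comparison gets changed. Only the last one changes the world itself.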

Ultimately, action is the only way your evolutionary priors can be satisfied — you have to pick up the cherry and put it in your mouth. Perception and attention will be guided by what’s actionable. When you approach a tree your eyes will focus on the red dots (which may be fruit that can be eaten) and anything that moves (which may be an animal you need to run from), less so on the unactionable trunk.

Proper action requires setting up your body. If you’re about to eat cherries, you need to produce saliva and divert blood flow to the stomach. If you’re about to run from a snake, you need to release cortisol and adrenaline and divert blood to your legs. These resources are often called your body budget, and your brain’s goal is to maintain it. Regulating your body budget is usually unconscious, but it requires time and resources. As a result, it’s extremely important for your brain to predict what you yourself will do and it can’t wait too long to decide.

One consequence of this is that we are “fooled by randomness” and hallucinate patterns where none exist. This is doubly the case in chaotic and anti-inductive environments where no exploitable regularities exist to be learned. In those situations, any superstition whatsoever is preferable to a model of maximum ignorance since the former at least allows your brain to predict your own behavior.

The stock market is one such anti-inductive domain, which explains the popularity of superstitions like technical analysis. Drawing lines on charts won’t tell you whether the stock will go up or down (nothing really will), but it at least tells you whether you yourself will buy or sell.

Once your brain thinks it has discovered an exploitable pattern in the world and uses it for prediction, it will use all its tools to make it come true. It will direct attention to areas that the pattern highlights. It will alter perception to fit the pattern. And, importantly, it will act to make the world conform to its prediction.

And so predictive processing suggests three claims with regards to confirmation bias:

- We’d expect to see confirmation bias in chaotic and anti-inductive environments where data is scarce, feedback on decisions is slow and ambiguous, and useful patterns are hard to learn because they either don’t exist or are too complex. We’ll see more CB in day-trading than in chess.

- Confirmation bias will be strong in actionable domains, particularly where quick decisions are required, and weak for abstract and remote issues.

- Confirmation bias will be driven by all the ways of making predictions come true: selective attention, perception modified by prediction, and shaping the world through action.

Blue and Green, Nice and Mean

Let’s use a fable of Blues and Greens for illustration.

These are two groups of people you are about to meet. Exactly 50% of Blues are nice and honest, 50% are mean and deceitful, and the same proportion is true of Greens. You, however, cannot know that.

Let’s say that you have developed an inkling that the Blues are nasty and not to be trusted. Perhaps the first Blue you met is lashing out angrily (because they just had their lunch money eaten by the vending machine). Perhaps you asked a Green for directions and they told you to watch out for those vile Blues. Either way, you have the start of a predictive model. What happens next?

The next Blue person is more likely to confirm your model than to disprove it. Predictive processing says that you’ll direct your attention to where your model may be tested. If you think the person may be lying, you may focus on their eye movements to catch them shifting their gaze. If you think they’re angry, you may look to see if their nostrils flare. If the signal is ambiguous enough — people’s eyes move and nostrils dilate at times regardless of mood — you will interpret it as further confirmation. The same is true of more general ambiguity: if they are blunt, you perceive that as rudeness. If they’re tactful, you perceive that as insincerity. A loud Blue is aggressive, a quiet one is scheming.
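A small simulation shows how far this can go. The sketch below is an illustration under stated assumptions, not a model from the literature: `belief_nasty` is a hypothetical function, beliefs are reduced to two tallies, half of all signals are ambiguous, and the encounters arrive in a fixed repeating order so the result is exactly reproducible. Exactly half of the Blues in it are nice.

```python
def belief_nasty(encounters, bad=1, good=0, biased=True):
    """Fraction of tallied evidence saying "Blues are nasty".

    encounters: iterable of (is_nice, is_ambiguous) pairs.
    bad, good:  starting tallies; bad=1, good=0 is the initial inkling.
    biased:     if True, ambiguous signals are read as confirming the current lead.
    """
    for is_nice, is_ambiguous in encounters:
        if is_ambiguous:
            if not biased or bad == good:
                continue      # an even-handed observer learns nothing from ambiguity
            if bad > good:
                bad += 1      # "tactful, so probably insincere"
            else:
                good += 1     # "blunt, so probably honest"
        elif is_nice:
            good += 1         # clear signals are counted as they are
        else:
            bad += 1
    return bad / (bad + good)


# 100 Blues: 50 nice, 50 mean; half of each group gives only an ambiguous signal.
CYCLE = [(True, False), (False, False), (True, True), (False, True)]
encounters = CYCLE * 25

print(belief_nasty(encounters, bad=1, good=0))                # 76/101, about 0.75
print(belief_nasty(encounters, bad=0, good=1))                # 25/101, about 0.25
print(belief_nasty(encounters, bad=1, good=0, biased=False))  # 26/51, about 0.51
```

The same hundred Blues leave the observer about 75% convinced of whichever inkling they started with. Only the observer who declines to read anything into ambiguous signals ends up near the true 50%.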

The reason you’ll update in the direction of your preexisting model is to avoid going back to a position of uncertainty which leaves you undecided about your own reaction. If you had interpreted Blue bluntness as honesty, you are now dealing with someone who is nasty (on priors) but also honest — you can’t tell whether to trust or avoid them. If you go the other way you now see the person as nasty and rude — you easily decide to avoid them. The halo and horns effects are special cases of confirmation bias, expediting the important decision of whether you should defect or cooperate with the person in question.

This is partly driven by the need for speed. Reading people’s intentions is important to do quickly, not just accurately. It is also difficult. Your brain knows that additional time and data aren’t guaranteed to clarify the issue. Within a second of encountering a Blue person your brain is already using your negative prior to activate your sympathetic nervous system and prepare your body for hostile or avoidant action.

So why not update to think that Blues are good and kind? Replacing models incurs a cost in surprisal — you predicted that Blue people are nasty but were wrong. You also don’t know how this update will propagate and whether it will clash with other things you believe, perhaps causing you to doubt your Green friends which will hurt you socially. And you also incur a body budget cost: the resources you spent preparing for hostile action have been wasted, and new ones need to be summoned. To an embodied brain changing your opinion is not as cheap as flipping a bit in flash memory. It’s as costly as switching the AC back and forth between heating and cooling.

Your brain cares about having good predictive models into the future, but it will only switch if the new model does a superior job of explaining past and present sensory data, not a merely equal one. If you happen to meet several wonderful Blues in a row you may update, but that would require unusual luck (and may start confirmation bias going the other way, multiplied by the zeal of the convert).
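One way to picture this switching rule is as a running score of how much better the new model explains what you have seen, compared against a fixed switching cost. The sketch below is a hedged illustration: the function `switch_point`, the probabilities `p_old` and `p_new`, and the `switching_cost` of 2.0 are all made-up values chosen for the example, and the log-likelihood comparison is a standard statistical device swapped in here, not something predictive processing specifies.

```python
import math


def switch_point(observations, p_old=0.8, p_new=0.5, switching_cost=2.0):
    """Index of the encounter at which the new model finally wins, or None.

    observations:   sequence of 1 (the Blue looked nasty) or 0 (looked nice).
    p_old, p_new:   probability each model assigns to "looks nasty" (assumed values).
    switching_cost: how much better the new model must explain the data (assumed value).
    """
    advantage = 0.0  # cumulative log-likelihood ratio, new model over old
    for i, looked_nasty in enumerate(observations):
        if looked_nasty:
            advantage += math.log(p_new / p_old)
        else:
            advantage += math.log((1 - p_new) / (1 - p_old))
        if advantage > switching_cost:
            return i
    return None


print(switch_point([0, 0, 0]))     # 2: three wonderful Blues in a row
print(switch_point([0, 1] * 10))   # 6: a mixed record takes seven encounters
print(switch_point([1] * 50))      # None: the old model never loses
```

Note that this observer scores every encounter honestly. Combine the switching cost with the biased reading of ambiguous signals described earlier and the new model may never accumulate enough of an advantage.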

And finally, if you predicted that someone will be nasty and it’s not obvious that they are, your brain perceives a prediction error and will try to correct it with action. You may taunt, provoke, or intimidate the Blue person, even in subtle ways you’re not aware of. Nothing makes a bro madder than asking.

Thinking that Blue people are bad will also reinforce the belief that Greens are good — they dislike the Blues, after all, and the world must have some people who are nicer than average. Now your confirmation bias, already working through your explicit beliefs, intuition, raw perception, and your actions, will also start working through your social environment.

What does the model “Blue people are evil” actually predict in a world where you don’t interact with any Blues? Beliefs should pay rent in anticipated experiences, after all, and your brain knows this. For many people, the model that Blues (or Republicans, Democrats, whites, blacks, Jews, Muslims, Boomers, Millennials…) are evil makes predictions that have little to do with Blues themselves. It predicts that you will see several stories about Blues being evil on your social media feed every day from your Green friends. It predicts that sharing those stories will be rewarded in likes and status.

In this way the explicit belief “Blues are bad” may become slightly decoupled from the actual model of all Blues as bad. You may have that one Blue friend who is “not like that”. But the explicit and predictive models still reinforce each other.

Act Against the Bias

How would one go about curing themselves or others of confirmation-biased cyanophobia?

The least effective approach is shaming and yelling, particularly when done by Blue people themselves. Being shamed and yelled at is an extremely unpleasant experience, and that unpleasantness is now further associated with Blues. Being told to “educate yourself” obviously doesn’t help even when it’s not simply meant as a condescending dismissal. Looking for information yourself (including on the topic of confirmation bias) will often just reinforce your original position (because of confirmation bias).

How about changing what you say, as opposed to merely what you hear, about Blue people? Perhaps you find yourself in a society where expressing anti-blue bias is unacceptable, and instead everyone must repeat pro-blue slogans like “Blues Look Marvelous”.

Unfortunately, simply saying things doesn’t do a whole lot to change a person’s mind. We are very good at compartmentalizing and signaling, keeping track separately of beliefs we express in public and those we actually believe. From predictive processing we wouldn’t expect saying things to change your mind much because updates start from the bottom, and explicit speech is at the top.

Our best current model of how predictive processing works is hierarchical predictive coding. In this scheme, predictions propagate from general, abstract, and sometimes-conscious models “down” to the detailed, specific, and unconscious. Error signals propagate “up” from detailed sensory inputs, altering perception at the lowest level at which sensory data can be reconciled.

To explain this in detail, here’s what (probably) happens in your brain when you observe the picture below:

First, you get the general gist of the image: browns and yellows, complex shapes with intersecting edges. The first conscious percept you form is a gestalt of the scene based on the immediate gist — you are looking at a nature scene of plants in an arid biome. You start predicting familiar components of that scene: yellow grass, brown bushes, trees. Now that you’re predicting those objects, your eyes locate them in the image. Two trees in the background, a bush in the front, grass all over.

Then you may notice two white spots just to the right of the image’s center. These do not fit into your prediction of what a bush looks like, and so propagate error up the chain. Your eyes dart to that location and notice the antelope’s head, with your brain predicting the rest of its body even before you confirmed that it’s there. This all happened subconsciously and very quickly. Now you perceive an antelope camouflaged in dry grass and can describe the scene consciously in words.

As with the two white dots of the antelope’s muzzle, small prediction errors accumulate before an update is made. Your brain works like Thomas Kuhn’s theory of science, with unexplained observations building up before they trigger a paradigm shift. If you try to insert a statement like “Blue people are always awesome and never at fault” at the top, your brain will try to fit it with the entirety of data you previously observed (observed in a biased way), hit a huge discrepancy, and reject it.

Seeing counterexamples to your model, especially surprising ones, is more promising. You may watch a movie with a Blue character set up to look like a villain, until it is revealed that they were the hero all along and all the previous actions were in the service of good and justice. Making incremental updates is easier. If you go to a Blue Rationalist meetup you will confirm that Blues are rude and disagreeable, but you will update that they all seem scrupulously honest at least.

But the best way to change your mind is to change your actions.

This idea is well known in social psychology, where it goes under the names of self-perception and dissonance avoidance. As many clever experiments show, we often infer our beliefs from our actions, especially when our actions are otherwise underexplained. Under predictive processing, anticipating your own actions is the most important prediction of all. If some abstract ideas need to change to accommodate what you observe yourself doing, your brain will change them.

So, to see Blue people as nice, you can simply act as if they were by being nice to them yourself. Your behavior will not only change your beliefs directly, but also their own behavior as they reciprocate. I think this is an important function of norms of politeness. If you address someone by honorifics, bow or shake their hand, wish them well upon greeting etc., you will inevitably start seeing them more as a person worthy of friendship and respect.

This should work for other confirmation-biased beliefs as well. To start believing in astrology, start doing as the horoscope suggests. To disbelieve astrology, just do the opposite. Ironically, this point is extremely well understood by Judaism and its focus on conduct and the mitzvot. Rabbis know that faith often follows action. Christianity, on the other hand, shifted the focus from conduct to personal belief. PP would suggest that this will lead many of its adherents to act in non-Christ-like ways, without a corresponding strengthening of faith.

This view flips Voltaire on his head: those who can make you commit atrocities, can make you believe absurdities.

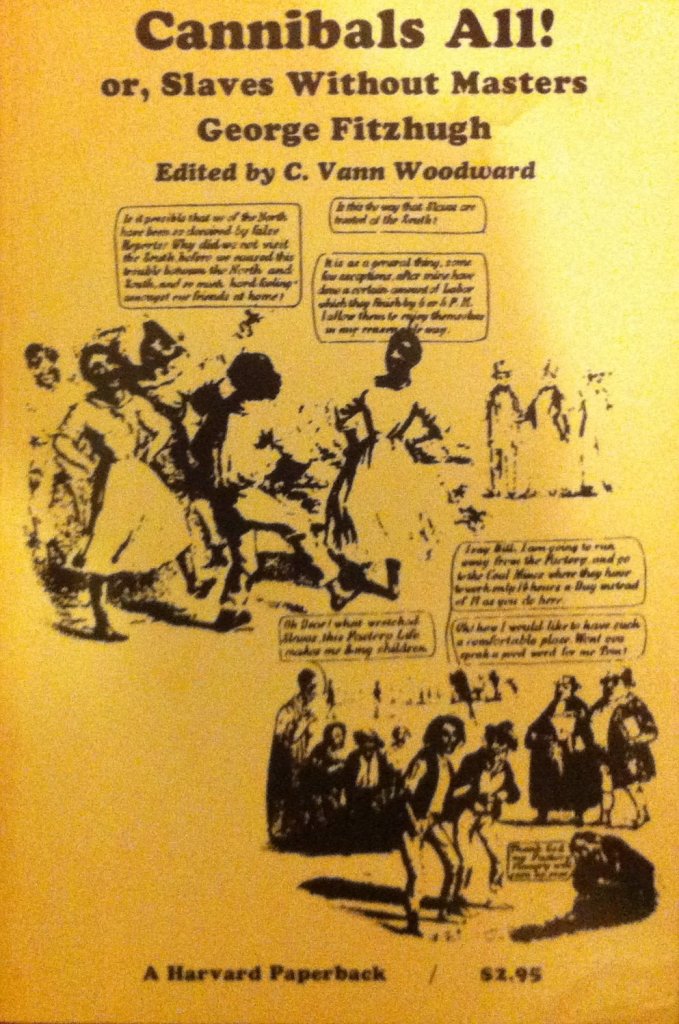

In my favorite EconTalk episode, Mike Munger talks about his research on the shifting justification for slavery in the US South before the Civil War. In the 18th Century it was widely accepted on both sides that the economy of the South (and that of Thomas Jefferson) simply could not survive without slave labor. Cotton was cheap and workers were scarce. There was talk of paying reparations to slave owners if the slaves were freed, otherwise the entire South would be bankrupt. Canada and England abolished slavery in part because, having no cotton fields to pick, slave labor wasn’t a necessity to them.

By the 1820s no one could credibly claim this was still the case in the South. The invention of the cotton gin and spinning mule kickstarted the industrial revolution and also sent the price of cotton soaring, while the working age population of the US quadrupled from 1776. Slave ownership was still lucrative, but no longer a matter of economic survival.

And so within a couple of decades everyone in the South adopted a new justification for slavery: that blacks cannot take care of themselves in Western civilization and so depend on the slave owners for food, healthcare, and basic survival in America. This was developed into a full proto-Marxist theory of why slaves are treated better than wage labor (as you treat an owned asset better than a rented one) and was sincerely accepted by many Southerners as we can tell from their private correspondences. The act of slave ownership convinced an entire society of a preposterous theory, even though that theory was challenged daily by every “ungrateful” slave’s attempts to run away from bondage.

On a personal level, this means that you will not be able to accept something as true until you have a basic idea of what you would do if that was true. Leave yourself a line of retreat. And make sure your habits of action don’t drag you into preposterous beliefs you wouldn’t hold otherwise.

I think this is why it was so hard a year ago for most people to accept the proposition that there is a small but non-zero chance of a global pandemic on the horizon. All their plans and habits were made in a non-pandemic world, and no one had experience planning for the plague. But when people were told “there’s a chance of a pandemic, and if/when it comes we will work remotely and wear masks and stock up on beans and wait for a vaccine and sing songs about how much 2020 sucks” it was easier to accept since it came with an action plan.

Embodied Rationality

To sum up: confirmation bias isn’t an artifact of brains that are too lazy to be correct or determined to be wrong. To our brains, beliefs that are correct on paper are secondary to those that can lead to effective, quick, and predictable action. Our view of the world shapes our actions and is shaped by them in turn. In the interplay of priors, sensory input, attention, and action, the last is most important. Least important are the explicit beliefs we express, even though these are easiest to notice, measure, and police.

I think that embodied cognition is going to be the next great area of progress in Rationality. Kahneman’s research on biases compared our brains to homo economicus making decisions in a vacuum. The Sequences compared us to an ideal Bayesian AI. In the second half of the 2010s Rationality focused on the social component: signaling, tribalism, the game theory of our social and moral intuitions.

The next step in understanding our minds is to see them as emerging from brains that evolved in bodies. Embodied cognition is not a new idea, of course; it’s millennia old. I’m far from the first one to connect it to Rationality either, as things like Focusing and body exercises were part of CFAR’s curriculum from the start. But I hope that this post gives people a taste of what is possible in this space, of how thinking in terms of bodies, action, and predictive processing can reframe and expand on core ideas in Rationality. With Scott back and many others excited by these ideas, I hope there’s much more to come.

1 comment

comment by pjeby · 2021-01-25T04:52:39.343Z

On a personal level, this means that you will not be able to accept something as true until you have a basic idea of what you would do if that was true.

The fourth question of The Work is: "Who would you be without that thought?", intended to provoke a near-mode, concrete prediction of what your life would be like if you were not thinking/believing the thought in question.

Which is to say that it is also hard to accept something is not true until you have a basic idea of what you would do if it were false. ;-)

Also, the energy model presented in this article is, I think, a very good one. The idea that we reject new models unless they're comprehensively better, yet continue using old ones until they can be comprehensively disproven, is an apt description of the core difficulties in Actually Changing One's Mind at the level of emotional "knowledge" and assumptions.

I also like the hierarchical processing part -- it'll give me another tool to explain why changing general beliefs and patterns of behavior requires digging into details of experience, and provides a good intuition pump for seeing why you can't just "decide" to think differently and have it work, if the belief in question is a predictive or evaluative alief, rather than just a verbal profession to others.