Is Rationalist Self-Improvement Real?

post by Jacob Falkovich (Jacobian) · 2019-12-09T17:11:03.337Z · LW · GW · 78 comments

Cross-posted from Putanumonit where the images show up way bigger. I don't know how to make them bigger on LW.

Basketballism

Imagine that tomorrow everyone on the planet forgets the concept of training basketball skills.

The next day everyone is as good at basketball as they were the previous day, but this talent is assumed to be fixed. No one expects their performance to change over time. No one teaches basketball, although many people continue to play the game for fun.

Geneticists explain that some people are born with better hand-eye coordination and are thus able to shoot a basketball accurately. Economists explain that highly-paid NBA players have a stronger incentive to hit shots, which explains their improved performance. Psychologists note that people who take more jump shots each day hit a higher percentage and theorize a principal factor of basketball affinity that influences both desire and skill at basketball. Critical theorists claim that white men’s under-representation in the NBA is due to systemic oppression.

Papers are published, tenure is awarded.

New scientific disciplines emerge and begin studying jump shots more systematically. Evolutionary physiologists point out that our ancestors threw stones more often than they tossed basketballs, which explains our lack of adaptation to that particular motion. Behavioral kinesiologists describe systematic biases in human basketball, such as the tendency to shoot balls with a flatter trajectory and a lower release point than is optimal.

When asked by aspiring basketball players if jump shots can be improved, they all shake their heads sadly and say that it is human nature to miss shots. A Nobel laureate behavioral kinesiologist tells audiences that even after he wrote a book on biases in basketball his shot did not improve much. Someone publishes a study showing that basketball performance improves after a one-hour training session with schoolchildren, but Shott Ballexander writes a critical takedown pointing out that the effect wore off after a month and could simply be random noise. The field switches to studying “nudges”: ways to design systems so that players hit more shots at the same level of skill. They recommend that the NBA adopt larger hoops.

Papers are published, tenure is awarded.

Then, one day, a woman sits down to read those papers who is not an academic, just someone looking to get good at basketball. She realizes that the lessons of behavioral kinesiology can be used to improve her jump shot, and practices releasing the ball at the top of her jump from above the forehead. Her economist friend reminds her to give the ball more arc. As the balls start swooshing in, more people gather at the gym to practice shooting. They call themselves Basketballists.

Most people who walk past the gym sneer at the Basketballists. “You call yourselves Basketballists and yet none of you shoots 100%”, they taunt. “You should go to grad school if you want to learn about jump shots.” Some of the Basketballists themselves begin to doubt the project, especially since switching to the new shooting techniques lowers their performance at first. “Did you hear what the Center for Applied Basketball is charging for a training camp?” they mutter. “I bet it doesn’t even work.”

Within a few years, some dedicated Basketballists start talking about how much their shot percentage improved and the pick-up tournaments they won. Most people say it’s just selection bias, or dismiss them by asking why they can’t outplay Kawhi Leonard for all their training.

The Basketballists insist that the training does help, that they really get better by the day. But how could they know?

AsWrongAsEver

A core axiom of Rationality is that it is a skill that can be improved with time and practice. The very names Overcoming Bias and Less Wrong reflect this: rationality is a vector, not a fixed point.

A core foundation of Rationality is the research on heuristics and biases led by Daniel Kahneman. The very first book [? · GW] in The Sequences is in large part a summary of Kahneman’s work.

Awkwardly for Rationalists, Daniel Kahneman is hugely skeptical of any possible improvement in rationality, especially for whole groups of people. In an astonishing interview with Sam Harris, Kahneman describes bias after bias in human thinking, emotions, and decision making. For every one, Sam asks: how do we get better at this? And for every one, Daniel replies: we don’t, we’ve been telling people about this for decades and nothing has changed, that’s just how people are.

Daniel Kahneman is familiar with CFAR but as far as I know, he has not put as much effort himself into developing a community and curriculum dedicated to improving human rationality. He has discovered and described human irrationality, mostly to an audience of psychology undergrads. And psychology undergrads do worse than pigeons at learning a simple probabilistic game so we shouldn’t expect them to learn rationality just by reading about biases [LW · GW]. Perhaps if they started reading Slate Star Codex…

Alas, Scott Alexander himself is quite skeptical of Rationalist self-improvement. He certainly believes that Rationalist thinking can help you make good predictions and occasionally distinguish truth from bullshit, but he’s unconvinced that the underlying ability can be improved upon. Scott is even more skeptical of Rationality’s use for life-optimization [LW · GW].

I told Scott that I credit Rationality with a lot of the massive improvements in my financial, social, romantic, and mental life that happened to coincide with my discovery of LessWrong. Scott argued that I would do equally well in the absence of Rationality by finding other self-improvement philosophies to pour my intelligence and motivation into and that these two are the root cause of my life getting better. Scott also seems to have been doing very well since he discovered LessWrong, but he credits Rationality with not much more than being a flag that united the community he’s part of.

So: on one side are Yudkowsky, CFAR, and several Rationalists, sharing the belief that Rationality is a learnable skill that can improve the lives of most seekers who step on the path. On the other side are Kahneman, Scott, several other Rationalists, and all anti-Rationalists, who disagree.

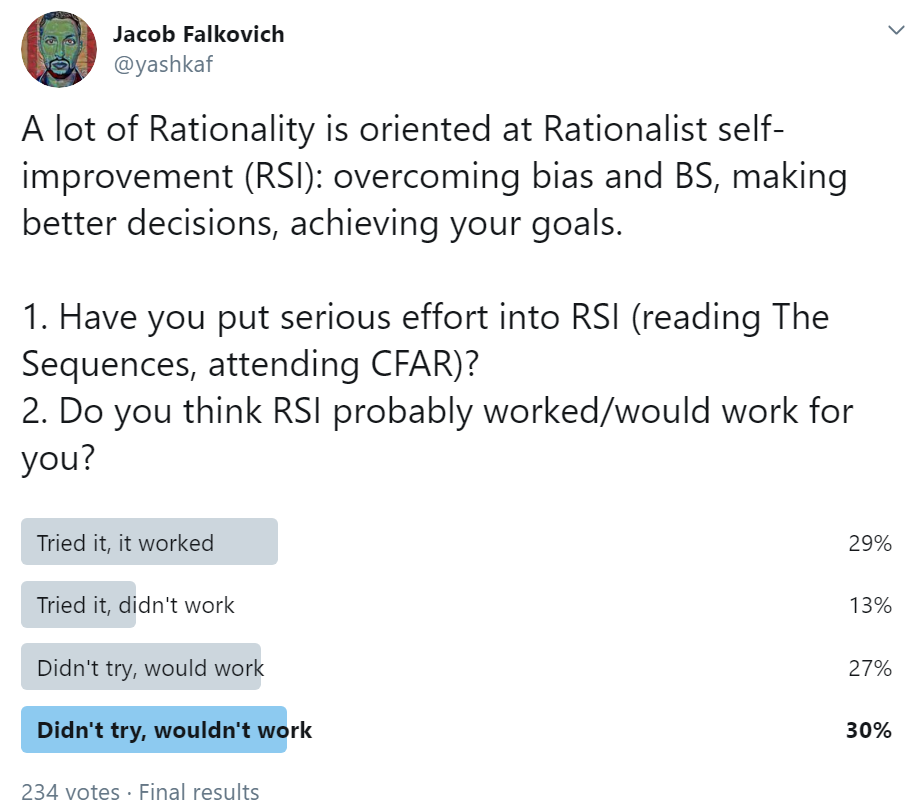

When I surveyed my Twitter followers, the results distributed somewhat predictably:

The optimistic take is that RSI works for most people if they only tried it. The neutral take is that people are good at trying self-improvement philosophies that would work for them. The pessimistic take is that Rationalists are deluded by sunk cost and confirmation bias.

Who’s right? Is Rationality trainable like jump shots or fixed like height? Before reaching any conclusions, let’s try to figure out why so many smart people who are equally familiar with Rationality disagree so strongly about this important question.

Great Expectations

An important crux of disagreement between me and Scott is in the question of what counts as successful Rationalist self-improvement. We can both look at the same facts and come to very different conclusions regarding the utility of Rationality.

Here’s how Scott parses the fact that 15% of SSC readers who were referred by LessWrong have made over $1,000 by investing in cryptocurrency [LW · GW] and 3% made over $100,000:

The first mention of Bitcoin on Less Wrong, a post called Making Money With Bitcoin [? · GW], was in early 2011 – when it was worth 91 cents. Gwern predicted [? · GW] that it could someday be worth “upwards of $10,000 a bitcoin”. […]

This was the easiest test case of our “make good choices” ability that we could possibly have gotten, the one where a multiply-your-money-by-a-thousand-times opportunity basically fell out of the sky and hit our community on its collective head. So how did we do?

I would say we did mediocre. […]

Overall, if this was a test for us, I give the community a C and me personally an F. God arranged for the perfect opportunity to fall into our lap. We vaguely converged onto the right answer in an epistemic sense. And 3 – 15% of us, not including me, actually took advantage of it and got somewhat rich.

Here’s how I would describe it:

Of the 1289 people who were referred to SSC from LessWrong, two thirds are younger than 30, a third are students/interns or otherwise yet to start their careers, and many are for other reasons too broke for it to be actually rational to risk even $100 on something that you saw recommended on a blog. Of the remainder, the majority were not around in the early days when cryptocurrencies were discussed — the median “time in community” on LessWrong surveys is around two years. In any case, “invest in crypto” was never a major theme or universally endorsed in the Rationalist community.

Of those who were around and had the money to invest early enough, a lot lost it all when Mt. Gox was hacked, when Bitcoin crashed in late 2013 and didn’t recover until 2017, or through several other contingencies.

If I had to guess the percent of Rationalists who were even in a position to learn about crypto on LessWrong and make more than $1,000 by following Rationalist advice, I’d say it’s certainly less than 50%. Maybe not much larger than 15%.

Only 8% of Americans own cryptocurrency today. At the absolute highest end estimate, 1% of Americans, and 0.1% of people worldwide, made >$1,000 from crypto. So Rationalists did at least an order of magnitude better than the general population, almost as well as they could’ve done in a perfect world, and also funded MIRI and CFAR with Bitcoin for years ahead. I give the community an A and myself an A.

In an essay called Extreme Rationality: It’s Not That Great [LW · GW] Scott writes:

Eliezer writes:

The novice goes astray and says, “The Art failed me.”

The master goes astray and says, “I failed my Art.”

Yet one way to fail your Art is to expect more of it than it can deliver.

Scott means to say that Eliezer expects too much of the art in demanding that great Rationalist teachers be great [LW · GW] at other things as well. But I think that expecting 50% of LessWrongers filling out a survey to have made thousands of dollars from crypto is setting the bar far higher than Eliezer’s criterion [LW · GW] of “Being a math professor at a small university who has published a few original proofs, or a successful day trader who retired after five years to become an organic farmer, or a serial entrepreneur who lived through three failed startups before going back to a more ordinary job as a senior programmer.”

Akrasia

Scott blames the failure of Rationality to help primarily on akrasia.

One factor we have to once again come back to [? · GW] is akrasia. I find akrasia in myself and others to be the most important limiting factor to our success. Think of that phrase “limiting factor” formally, the way you’d think of the limiting reagent in chemistry. When there’s a limiting reagent, it doesn’t matter how much more of the other reagents you add, the reaction’s not going to make any more product. Rational decisions are practically useless without the willpower to carry them out. If our limiting reagent is willpower and not rationality, throwing truckloads of rationality into our brains isn’t going to increase success very much.

I take this paragraph to imply a model that looks like this:

[Alex reads LessWrong] -> [Alex tries to become less wrong] -> [akrasia] -> [Alex doesn’t improve].

I would make a small change to this model:

[Alex reads LessWrong] -> [akrasia] -> [Alex doesn’t try to become less wrong] -> [Alex doesn’t improve].

A lot of LessWrong is very fun to read, as is all of SlateStarCodex. A large number of people on these sites, as on Putanumonit, are just looking to procrastinate during the workday, not to change how their mind works. Only 7% of the people who were engaged enough to fill out the last LessWrong survey [LW · GW] have attended a CFAR workshop. Only 20% ever wrote a post, which is some measure of active rather than passive engagement with the material.

In contrast, one person wrote a sequence on trying out applied rationality for 30 days straight: Xiaoyu “The Hammer” He [? · GW]. And he was quite satisfied with the result.

I’m not sure that Scott and I disagree much, but I didn’t get the sense that his essay was saying “just reading about this stuff doesn’t help, you have to actually try”. It also doesn’t explain why he was so skeptical about me crediting my own improvement to Rationality.

Akrasia is discussed a lot on LessWrong, and applied rationality has several tools that help with it. What works for me and my smart friends is not to try and generate willpower but to use lucid moments to design plans that take a lack of willpower into account. Other approaches work for other people. But of course, if someone lacks the willpower to even try and take Rationality improvement seriously, a mere blog post will not help them.

3% LessWrong

Scott also highlights the key sentence in his essay:

I think it may help me succeed in life a little, but I think the correlation between x-rationality and success is probably closer to 0.1 than to 1.

What he doesn’t ask himself is: how big is a correlation of 0.1?

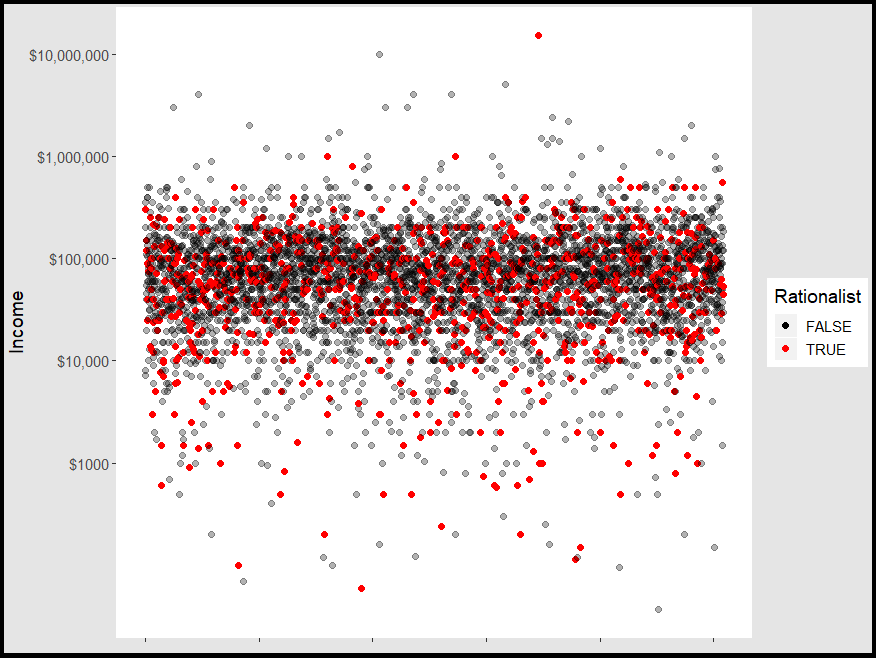

Here’s the chart of respondents to the SlateStarCodex survey, by self-reported yearly income and whether they were referred from LessWrong (Scott’s criterion for Rationalists).

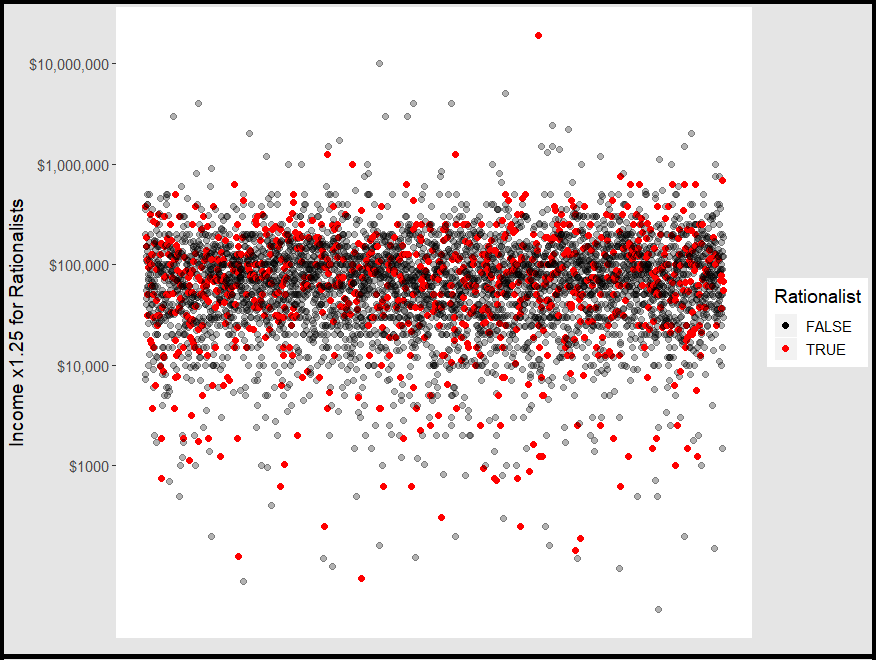

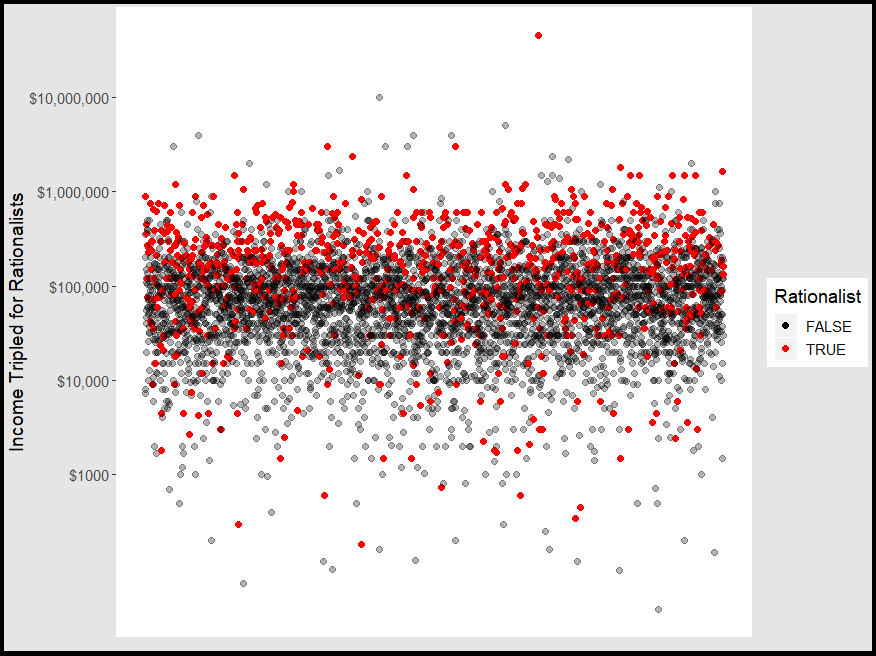

And here’s the same chart with a small change. Can you notice it?

For the second chart, I increased the income of all rationalists by 25%.

The following things are both true:

- When you eyeball the group as a whole, the charts look identical. A 25% improvement for a quarter of the people in a group you observe is barely noticeable. The rich stayed rich, the poor stayed poor.

- If your own income increased 25% you would certainly notice it. And if the increase came as a result of reading a few blog posts and coming to a few meetups, you would tell everyone you know about this astounding life hack.

The correlation between Rationality and income in Scott’s survey is -0.01. That number goes up to a mere 0.02 after the increase. A correlation of 0.1 is absolutely huge: it would require tripling the income of all Rationalists.
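To get a feel for why a 25% boost barely moves a correlation, here is a rough Python sketch. It does not use the survey data: the incomes are synthetic draws from a log-normal distribution, and the sample size, seed, and distribution parameters are all assumptions picked for illustration. Only the one-quarter share of Rationalists and the 25% boost come from the text above.

```python
import math
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)  # fixed seed so reruns agree; the seed itself is arbitrary
N = 4000                # hypothetical number of respondents

# 1 marks a "Rationalist" (about a quarter of the group), 0 everyone else.
is_rationalist = [1 if rng.random() < 0.25 else 0 for _ in range(N)]

# Synthetic heavy-tailed incomes; mu and sigma are made-up parameters.
income = [rng.lognormvariate(11.0, 1.5) for _ in range(N)]

# Give every Rationalist a 25% raise and leave everyone else unchanged.
boosted = [x * 1.25 if r else x for x, r in zip(income, is_rationalist)]

r_before = pearson(is_rationalist, income)
r_after = pearson(is_rationalist, boosted)
print(f"correlation before boost: {r_before:+.3f}")
print(f"correlation after boost:  {r_after:+.3f}")
```

The exact numbers depend entirely on the made-up parameters, but with incomes this skewed the coefficient should move only a little, even though each boosted individual would certainly notice a 25% raise.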

The point isn’t to nitpick Scott’s choice of “correlation = 0.1” as a metaphor. But every measure of success we care about, like impact on the world or popularity or enlightenment, is probably distributed like income is on the survey. And so if Rationality made you 25% more successful it wouldn’t be as obviously visible as Scott thinks it would be — especially since everyone pursues a different vision of success. In this 25% world, the most and least successful people would still be such for reasons other than Rationality. And in this world, Rationality would be one of the most effective self-improvement approaches ever devised. 25% is a lot!

Of course, the 25% increase wouldn’t happen immediately. Most people who take Rationality seriously have been in the community for several years. You get to 25% improvement by getting 3% better each year for 8 years.
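The arithmetic is easy to check in a few lines of Python. The function name here is just an illustrative choice, and the sketch assumes the 3% compounds once per year.

```python
def compound_growth(rate, years):
    """Total multiplier after compounding `rate` once per year for `years` years."""
    return (1 + rate) ** years


multiplier = compound_growth(0.03, 8)
print(f"{(multiplier - 1) * 100:.1f}% better after 8 years")  # prints "26.7% better after 8 years"
```

Without compounding, eight years at 3% would add up to 24%; compounding is what nudges the total past the 25% mark.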

Here’s what 3% improvement feels like:

You know what feels crappy? 3% improvement. You busted your ass for a year, trying to get better at dating, at being less of an introvert, at self-soothing your anxiety – and you only managed to get 3% better at it.

If you worked a job where you put in that much time at the office and they gave you a measly 3% raise, you would spit in your boss’s face and walk the fuck out.

And, in fact, that’s what most people do: quit. […]

The model for most self-improvement is usually this:

* You don’t have much of a problem

* You found The Breakthrough that erased all the issues you had

* When you’re done, you’ll be the opposite of what you were. Used to be bad at dating? Now you’ll have your own personal harem. Used to be useless at small talk? Now you’re a fluent raconteur.

Which, when you’ve agonized to scrape together a measly 3% improvement, feels like crap. If you’re burdened with such social anxiety that it takes literally everything you have to go out in public for twenty minutes, make one awkward small talk, and then retreat home to collapse in embarrassment, you think, “Well, this isn’t worth it.”

But most self-improvement isn’t immediate improvement, my friend.

It’s compound interest.

I think that Rationalist self-improvement is like this. You don’t get better at life and rationality after taking one class with Prof. Kahneman. After 8 years of hard work, you don’t stand out from the crowd even as the results become personally noticeable. And if you discover Rationality in college and stick with it, by the time you’re 55 you will be three times better than what you would have been if you hadn’t compounded these 3% gains year after year, and everyone will notice that.
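The "three times better by 55" figure can be sanity-checked by solving the compounding formula for the number of years. This is a minimal sketch: the function name is hypothetical, and reading "in college" as a starting age of roughly 18 is my assumption, not something the text states.

```python
import math


def years_to_reach(target_multiplier, rate):
    """Years of compounding at `rate` per year needed to hit `target_multiplier`."""
    return math.log(target_multiplier) / math.log(1 + rate)


years = years_to_reach(3.0, 0.03)
print(f"about {years:.1f} years to triple")  # prints "about 37.2 years to triple"
```

Starting around age 18, roughly 37 years of 3% annual gains lands at about 55, which matches the claim.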

What’s more, the outcomes don’t scale smoothly with your level of skill. When rare, high leverage opportunities come around, being slightly more rational can make a huge difference. Bitcoin was one such opportunity; meeting my wife was another such one for me. I don’t know what the next one will be: an emerging technology startup? a political upheaval? cryonics? I know that the world is getting weirder faster, and the payouts to Rationality are going to increase commensurately.

Here’s what Scott himself wrote in response to a critic of Bayesianism:

Probability theory in general, and Bayesianism in particular, provide a coherent philosophical foundation for not being an idiot.

Now in general, people don’t need coherent philosophical foundations for anything they do. They don’t need grammar to speak a language, they don’t need classical physics to hit a baseball, and they don’t need probability theory to make good decisions. This is why I find all the “But probability theory isn’t that useful in everyday life!” complaining so vacuous.

“Everyday life” means “inside your comfort zone”. You don’t need theory inside your comfort zone, because you already navigate it effortlessly. But sometimes you find that the inside of your comfort zone isn’t so comfortable after all (my go-to grammatical example is answering the phone “Scott? Yes, this is him.”) Other times you want to leave your comfort zone, by for example speaking a foreign language or creating a conlang.

When David says that “You can’t possibly be an atheist because…” doesn’t count because it’s an edge case, I respond that it’s exactly the sort of thing that should count because it’s people trying to actually think about an issue outside their comfort zone which they can’t handle on intuition alone. It turns out when most people try this they fail miserably. If you are the sort of person who likes to deal with complicated philosophical problems outside the comfortable area where you can rely on instinct – and politics, religion, philosophy, and charity all fall in that area – then it’s really nice to have an epistemology that doesn’t suck.

If you’re the sort of person for whom success in life means stepping outside the comfort zone that your parents and high school counselor charted out for you, if you’re willing to explore spaces of consciousness and relationships that other people warn you about, if you compare yourself only to who you were yesterday and not to who someone else is today… If you’re weird like me, and if you’re reading this you probably are, I think that Rationality can improve your life a lot.

But to get better at basketball, you have to actually show up to the gym.

See also: The Martial Art of Rationality [? · GW].

78 comments

comment by Scott Alexander (Yvain) · 2019-12-09T20:18:56.707Z · LW(p) · GW(p)

I have some pretty complicated thoughts on this, and my heart isn't really in responding to you because I think some things are helpful for some people, but a sketch of what I'm thinking:

First, a clarification. Some early claims - like the ones I was responding to in my 2009 essay - were that rationalists should be able to basically accomplish miracles, become billionaires with minimal work, unify physics with a couple of years of study, etc. I still occasionally hear claims along those lines. I am still against those, but I interpret you as making weaker claims, like that rationalists can be 10% better at things than nonrationalists, after putting in a decent amount of work. I'm less opposed to those claims, especially if "a decent amount of work" is interpreted as "the same amount of work you would need to get good at those things through other methods". But I'm still a little bit concerned about them.

First: I'm interpreting "rationalist self-help" to mean rationalist ideas and practices that are helpful for getting common real-life goals like financial, social, and romantic success. I'm not including things like doing charity better, for reasons that I hope will become clear later.

These are the kinds of things most people want, which means two things. First, we should expect a lot of previous effort has gone into optimizing them. Second, we should expect that normal human psychology is designed to optimize them. If we're trying to do differential equations, we're outside our brain's design specs; if we're trying to gain status and power, we're operating exactly as designed.

When the brain fails disastrously, it tends to be at things outside the design specs, that don't matter for things we want. For example, you quoted me describing some disastrous failures in people understanding some philosophy around atheism, and I agree that sort of thing happens often. But this is because it's outside of our common sense. I can absolutely imagine a normal person saying "Since I can't prove God doesn't exist, God must exist", but it would take a much more screwed-up person to think "Since I can't prove I can't fly, I'm going to jump off this cliff."

Another example: doctors fail miserably on the Bayes mammogram problem, but usually handle actual breast cancer diagnosis okay. And even diagnosing breast cancer is a little outside common sense and everyday life. Faced with the most chimpish possible version of the Bayes mammogram problem - maybe something like "This guy I met at a party claims he's the king of a distant country, and admittedly he is wearing a crown, but what's the chance he's *really* a king?" my guess is people are already near-optimal.

If you have this amazing computer perfectly-tuned for finding strategies in a complex space, I think your best bet is just to throw lots and lots of training data at it, then try navigating the complex space.

I think it's ironic that you use practicing basketball as your example here, because rationalist techniques very much are *not* practice. If you want to become a better salesman, practice is going out and trying to make lots of sales. I don't think this is a "rationalist technique" and I think the kind of self-help you're arguing for is very different (though it may involve better ways to practice). We both agree that practice is useful; I think our remaining disagreement is on whether there are things other than practice that are more useful to do, on the margin, than another unit of practice.

Why do I think this is unlikely?

1. Although rationalists have done pretty well for themselves, they don't seem to have done too remarkably well. Even lots of leading rationalist organizations are led by people who haven't put particular effort into anything you could call rationalist self-help! That's really surprising!

2. Efficient markets. Rationalists developed rationalist self-help by thinking about it for a while. This implies that everyone else left a $100 bill on the ground for the past 4000 years. If there were techniques to improve your financial, social, and romantic success that you could develop just by thinking about them, the same people who figured out the manioc detoxification techniques, or oracle bone randomization for hunting, or all the other amazingly complex adaptations they somehow developed, would have come up with them. Even if they only work in modern society, one of the millions of modern people who wanted financial, social, and romantic success before you would have come up with them. Obviously this isn't 100% true - someone has to be the first person to discover everything - but you should expect the fruits here to be very high up, high enough that a single community putting in a moderate amount of effort shouldn't be able to get too many of them.

(some of this becomes less relevant if your idea of rationalist self-help is just collecting the best self-help from elsewhere and giving it a stamp of approval, but then some of the other considerations apply more.)

3. Rationalist self-help starts looking a lot like therapy. If we're trying to make you a more successful computer programmer using something other than studying computer programming, it's probably going to involve removing mental blocks or something. Therapy has been pretty well studied, and the most common conclusion is that it is mostly nonspecific factors and the techniques themselves don't seem to have any special power. I am prepared to suspend this conclusion for occasional miracles when extremely charismatic therapists meet exactly the right patient and some sort of non-scaleable flash of lightning happens, but this also feels different from "the techniques do what they're supposed to". If rationalists are trying to do therapy, they are competing with a field of tens of thousands of PhD-level practitioners with all the resources of the academic and health systems who have worked on the problem for decades. This is not the kind of situation that encourages me we can make fast progress. See https://slatestarcodex.com/2019/11/20/book-review-all-therapy-books/ for more on this.

4. General skepticism of premature practical application. It took 300 years between Harvey discovering the circulatory system and anyone being very good at treating circulatory disease. It took 50 years between Pasteur discovering germ theory and anyone being very good at treating infections. It took 250 years between Newton discovering gravity and anyone being very good at flying. I have a lower prior than you on good science immediately translating into useful applications. And I am just not too impressed with the science here. Kahneman and Tversky discovered a grab bag of interesting facts, some of which in retrospect were false. I still don't think we're anywhere near the deep understanding of rationality that would make me feel happy here.

This doesn't mean I think rationality is useless. I think there are lots of areas outside our brain's normal design specs where rationality is really useful. And because these don't involve getting sex or money, there's been a lot less previous exploration of the space and the low hanging fruits haven't been gobbled up. Or, when the space has been explored, people haven't done a great job formalizing their insights, or they haven't spread, or things like that. I am constantly shocked by how much really important knowledge there is sitting around that nobody knows about or thinks about because it doesn't have an immediate payoff.

Along with all of this, I'm increasingly concerned that anything that has payoff in sex or money is an epistemic death zone. Because you can make so much money teaching it, it attracts too much charlatanry to navigate easily, and it subjects anyone who enters to extreme pressure to become charlatan-adjacent. Because it touches so closely on our emotions and sense of self-worth, it's a mind-killer in the same way politics are. Because everybody is so different, there's almost irresistible pressure to push the thing that saved your own life, without checking whether it will help anyone else. Because it's such a noisy field and RCTs are so hard, I don't trust us to be able to check our intuitions against reality. And finally, I think there are whole things lurking out there of the approximate size and concerningness of "people are homeostasis-preserving control systems which will expend their entire energy on undoing any change you do to them" that we just have no idea about even though they have the potential to make everything in this sphere useless if we don't respond to them.

I actually want to expand on the politics analogy. If someone were to say rationality was great at figuring out whether liberalism or conservatism was better, I would agree that this is the sort of thing rationality should be able to do, in principle. But it's such a horrible topic that has the potential to do so much damage to anyone trying to navigate it that I would be really nervous about it - about whether we were really up to the task, and about what it would do to our movement if we tried. These are some of my concerns around self-help too.

↑ comment by Jacob Falkovich (Jacobian) · 2019-12-10T05:12:14.034Z · LW(p) · GW(p)

Thank you for the detailed reply. I'm not going to reply point by point because you made a lot of points, but also because I don't disagree with a lot of it. I do want to offer a couple of intuitions that run counter to your pessimism.

While you're right that we shouldn't expect Rationalists to be 10x better at starting companies because of efficient markets, the same is not true of things that contribute to personal happiness. For example: how many people have a strong incentive in helping you build fulfilling romantic relationships? Not the government, not capitalism, not most of your family or friends, often not even your potential partners. Even dating apps make money when you *don't* successfully seduce your soulmate. But Rationality can be a huge help: learning that your emotions are information, learning about biases and intuitions, learning about communication styles, learning to take 5-minute timers to make plans — all of those can 10x your romantic life.

Going back to efficient markets, I get the sense that a lot of things out there are designed by the 1% most intelligent and ruthless people to take advantage of the 95% and their psychological biases. Outrage media, predatory finance, conspicuous brand consumption and other expensive status ladders, etc. Rationality doesn't help me design a better YouTube algorithm or finance scam, but at least it allows me to escape the 95% and keeps me away from outrage and in index funds.

Finally, I do believe that the world is getting weirder faster, and the thousands of years of human tradition are becoming obsolete at a faster pace. We are moving ever further from our "design specs". In this weirding world, I already hit jackpot with Bitcoin and polyamory, two things that couldn't really exist successfully 100 years ago. Rationality guided me to both. You hit jackpot with blogging— can you imagine your great grand uncle telling you that you'll become a famous intellectual by writing about cactus people and armchair sociology for free? And we're both still very young.

For any particular achievement like basketball or making your first million, there are more dedicated practices that help you to your goal faster than Rationality. But for taking advantage of unknown unknowns, the only two things I know that work are Rationality and making friends.

↑ comment by Scott Alexander (Yvain) · 2019-12-10T09:09:25.894Z · LW(p) · GW(p)

Thanks, all good points.

I think efficient markets don't just suggest we can't do much better at starting companies. They also mean we can't do much better at providing self-help, which is a service that can make people lots of money and status if they do it well.

I'm not sure if you're using index fund investing as an example of rationalist self-help, or just as a metaphor for it. If you're using it as an example, I worry that your standards are so low that almost any good advice could be rationalist self-help. I think if you're from a community where you didn't get a lot of good advice, being part of the rationalist community can be really helpful in exposing you to it (sort of like the theory where college makes you successful because it inducts you into the upper-middle class). I think I got most of my "invest in index funds" level good advice before entering the rationalist community, so I didn't count that.

Being part of the rationalist community has definitely improved my life, partly through giving me better friends and partly through giving me access to good ideas of the "invest in index funds" level. I hadn't counted that as part of our discussion, but if I do, then I agree it is great. My archetypal idea of "rationalist self-help" is sitting around at a CFAR workshop trying very hard to examine your mental blocks. I'm not sure if we agree on that or if I'm caricaturing your position.

I'm not up for any gigantic time commitment, but if you want to propose some kind of rationalist self-help exercise that I should try (of the order of 10 minutes/day for a few weeks) to see if I change my mind about it, I'm up for that, though I would also believe you if you said such a halfhearted commitment wouldn't be a good test.

↑ comment by Jacob Falkovich (Jacobian) · 2019-12-10T15:53:41.670Z · LW(p) · GW(p)

I have several friends in New York who are a match to my Rationalist friends in age, class, intelligence etc. and who:

- Pick S&P 500 stocks based on CNBC and blogs because their intuition tells them they've beat the market (but they don't check or track it, just remember the winners).

- Stay in jobs they hate because they don't have a robust decision process for making such a switch (I used goal factoring, Yoda timer job research, and decision matrices to decide where to work).

- Go so back asswards about dating that it hurts to watch (because they can't think about it systematically).

- Retweet Trump with comment.

- Throw the most boring parties.

- Spend thousands of dollars on therapists but would never do a half-hour debugging session with a friend because "that would be weird".

- In general, live mostly within "social reality" where the only question is "is this weird/acceptable" and never "is this true/false".

Now perhaps Rationalist self-improvement can't help them, but if you're reading LessWrong you may be someone who may snap out of social reality long enough for Rationality to change your life significantly.

> if you want to propose some kind of rationalist self-help exercise that I should try

Different strokes for different folks. You can go through alkjash's Hammertime Sequence [? · GW] and pick one, although even there the one that he rates lowest (goal factoring) is the one that was the most influential in my own life. You must be friends with CFAR instructors/mentors who know your personality and pressing issues better than I do and can recommend and teach a useful exercise.

Replies from: romeostevensit, ChristianKl, panashe-fundira

↑ comment by romeostevensit · 2019-12-10T21:46:00.154Z · LW(p) · GW(p)

Agreed, I see a major problem with an argument that seems to imply that since advice exists elsewhere/wasn't invented by rationality techniques, a meta-heuristic for aggregating trustworthy sources isn't hugely valuable.

↑ comment by ChristianKl · 2019-12-10T17:09:27.548Z · LW(p) · GW(p)

> In general, live mostly within "social reality" where the only question is "is this weird/acceptable" and never "is this true/false".

It seems to me like people who primarily think in terms of weird/acceptable never join the rationality community in the first place. Or do you believe that our community has taught people who used to think in those terms to think otherwise?

Replies from: Jacobian

↑ comment by Jacob Falkovich (Jacobian) · 2019-12-10T18:23:00.848Z · LW(p) · GW(p)

As I said, someone who is 100% in thrall to social reality will probably not be reading this. But once you peek outside the bubble there is still a long way to enlightenment: first learning how signaling, social roles, tribal impulses etc. shape your behavior [LW · GW] so you can avoid their worst effects, then learning to shape the rules [LW · GW] of social reality to suit your own goals. Our community is very helpful for getting the first part right, it certainly has been for me. And hopefully we can continue fruitfully exploring the second part too.

↑ comment by Panashe Fundira (panashe-fundira) · 2019-12-11T21:06:18.537Z · LW(p) · GW(p)

> Retweet Trump with comment.

What is the error that you're implying here?

Replies from: pas

↑ comment by Viliam · 2019-12-10T21:43:43.139Z · LW(p) · GW(p)

> It also means we can't do much better at providing self-help, which is a service that can make people lots of money and status if they do it well.

Maybe the incentives are all wrong here, and the most profitable form of "self-help" is one that doesn't provide long term improvement, so that customers return for more and more books and seminars.

In that case, we can easily do better -- better for the "customers", but less profitable for the "gurus".

↑ comment by zby · 2019-12-14T11:35:06.966Z · LW(p) · GW(p)

> through giving me access to good ideas of the "invest in index funds" level

For me the point is about getting *consistently* good ideas, getting reliable ideas where applying the scientific method is too hard. It is much less about self-improvement than about community improvement in the face of a more and more connected (and thus weirder) world. Rationality is epistemology for the internet era.

↑ comment by orthonormal · 2019-12-14T23:22:15.638Z · LW(p) · GW(p)

> Even lots of leading rationalist organizations are led by people who haven't put particular effort into anything you could call rationalist self-help! That's really surprising!

Indeed, I'm surprised to read that, because for the leading Berkeley rationalist organizations (MIRI, CFAR, CEA) I can think of at least one person in the top part of their org chart whom I personally know has done a rationalist self-help push for at least a couple of months before taking said role. (In two of those cases it's the top person.)

Can you say what organizations you're thinking of?

↑ comment by orthonormal · 2019-12-14T23:47:27.627Z · LW(p) · GW(p)

Also, yes rationalists do more curating of good advice than invention of it, just as we do with philosophy. But there's a huge value-add in sorting out the good advice in a domain from the bad advice, and this I think the community does in a more cross-domain way than I see elsewhere.

↑ comment by Rob Bensinger (RobbBB) · 2019-12-10T01:33:05.892Z · LW(p) · GW(p)

I'm not sure how much we disagree; it sounds like I disagree with you, but maybe most of that is that we're using different framings / success thresholds.

> Efficient markets. Rationalists developed rationalist self-help by thinking about it for a while. This implies that everyone else left a $100 bill on the ground for the past 4000 years. If there were techniques to improve your financial, social, and romantic success that you could develop just by thinking about them, the same people who figured out the manioc detoxification techniques, or oracle bone randomization for hunting, or all the other amazingly complex adaptations they somehow developed, would have come up with them.

If you teleported me 4000 years into the past and deleted all of modernity and rationalism's object-level knowledge of facts from my head, but let me keep as many thinking heuristics and habits of thought as I wanted, I think those heuristics would have a pretty large positive effect on my ability to pursue mundane happiness and success (compared to someone with the same object-level knowledge but more normal-for-the-time heuristics).

The way you described things here feels to me like it would yield a large overestimate of how much deliberate quality-adjusted optimization (or even experimentation and random-cultural-drift-plus-selection-for-things-rationalists-happen-to-value) human individuals and communities probably put into discovering, using, and propagating "rationalist skills that work" throughout all of human history.

Example: implementation intentions / TAPs are an almost comically simple idea. AFAIK, it has a large effect size that hasn't fallen victim to the replication crisis (yet!). Humanity crystallized this idea in 1999. A well-calibrated model of "how much optimization humanity has put into generating, using, and propagating rationality techniques" shouldn't strongly predict that an idea this useful and simple will reach fixation in any culture or group throughout human history before the 1990s, since this in fact never happened. But your paragraph above seems to me like it would predict that many societies throughout history would have made heavy use of TAPs.

I'd similarly worry that the "manioc detoxification is the norm + human societies are as efficient at installing mental habits and group norms as they are at detoxifying manioc" model should predict that the useful heuristics underlying the 'scientific method' (e.g., 'test literally everything', using controls, trying to randomize) reach fixation in more societies earlier.

Plausibly science is more useful to the group than to the individual; but the same is true for manioc detoxification. There's something about ideas like science that caused societies not to converge on them earlier. (And this should hold with even more force for any ideas that are hard to come up with, deploy, or detect-the-usefulness-of without science.)

Another thing that it sounds like your stated model predicts: "adopting prediction markets wouldn't help organizations or societies make money, or they'd already have been widely adopted". (Of course, what helps the group succeed might not be what helps the relevant decisionmakers in that organization succeed. But it didn't sound like you expected rationalists to outperform common practice or common sense on "normal" problems, even at the group level.)

Replies from: Yvain

↑ comment by Scott Alexander (Yvain) · 2019-12-10T09:19:49.090Z · LW(p) · GW(p)

> I'd similarly worry that the "manioc detoxification is the norm + human societies are as efficient at installing mental habits and group norms as they are at detoxifying manioc" model should predict that the useful heuristics underlying the 'scientific method' (e.g., 'test literally everything', using controls, trying to randomize) reach fixation in more societies earlier.

I'd disagree! Randomized controlled trials have many moving parts, removing any of which makes them worse than useless. Remove placebo control, and your trials are always positive and you do worse than intuition. Remove double-blinding, same. Remove power calculations, and your trials give random results and you do worse than intuition. Remove significance testing, same. Even in our own advanced civilization, if RCTs give a result different than common sense it's a 50-50 chance which is right; a primitive civilization who replaced their intuitions with the results of proto-RCTs would be a disaster. This ends up like the creationist example where evolution can't use half an eye so eyes don't evolve; obviously this isn't permanently true with either RCTs or eyes, but in both cases it took a long time for all the parts to evolve independently for other reasons.
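To make the point about power calculations concrete, here is a minimal simulation sketch. Every number in it (a 50% control success rate, a 60% treatment success rate, the arm sizes, the 1.96 cutoff) is an illustrative assumption and does not come from any real trial; the function names are hypothetical. It estimates how often a two-arm trial detects a real effect at a given sample size.

```python
import math
import random


def trial_is_significant(n_per_arm, p_control, p_treatment, rng):
    """Simulate one two-arm trial and apply a two-proportion z-test."""
    control = sum(rng.random() < p_control for _ in range(n_per_arm))
    treated = sum(rng.random() < p_treatment for _ in range(n_per_arm))
    pooled = (control + treated) / (2 * n_per_arm)
    se = math.sqrt(2 * pooled * (1 - pooled) / n_per_arm)
    if se == 0:
        return False  # all outcomes identical: nothing to test
    z = ((treated - control) / n_per_arm) / se
    return abs(z) > 1.96  # conventional two-sided 5% threshold


def estimated_power(n_per_arm, p_control=0.5, p_treatment=0.6,
                    n_trials=300, seed=0):
    """Fraction of simulated trials that detect the (real) effect."""
    rng = random.Random(seed)
    hits = sum(
        trial_is_significant(n_per_arm, p_control, p_treatment, rng)
        for _ in range(n_trials)
    )
    return hits / n_trials


if __name__ == "__main__":
    for n in (20, 1000):
        print(f"n per arm = {n:4d}: estimated power = {estimated_power(n):.2f}")
```

Under these assumptions the small trial misses the real effect most of the time, while the large one almost always finds it. That is one way to read "your trials give random results": without a power calculation you cannot tell which of the two situations you are in.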

Also, you might be underestimating inferential distance - tribes that count "one, two, many" are not going to be able to run trials effectively. Did you know that people didn't consistently realize you could take an average of more than two numbers until the Middle Ages?

Also, what would these tribes use RCTs to figure out? Whether their traditional healing methods work? St. John's Wort is a traditional healing method, there have now been about half a dozen high-quality RCTs investigating it, with thousands of patients, and everyone is *still* confused. I am pretty sure primitive civilizations would not really have benefited from this much.

I am less sure about trigger-action plans. I think a history of the idea of procrastination would be very interesting. I get the impression that ancient peoples had very confused beliefs around it. I don't feel like there is some corpus of ancient anti-procrastination techniques from which TAPs are conspicuously missing, but why not? And premodern people seem weirdly productive compared to moderns in a lot of ways. Overall I notice I am confused here, but this could be an example where you're right.

I'm confused about how manioc detox is more useful to the group than the individual - each individual self-interestedly would prefer to detox manioc, since they will die (eventually) if they don't. This seems different to me than the prediction market example, since (as Robin has discussed) decision-makers might self-interestedly prefer not to have prediction markets so they can keep having high status as decision-makers.

Replies from: orthonormal, Richard_Kennaway, RobbBB

↑ comment by orthonormal · 2019-12-14T23:44:01.230Z · LW(p) · GW(p)

> Randomized controlled trials have many moving parts, removing any of which makes them worse than useless.

I disagree with this. For one thing, they caught on before those patches were known, and still helped make progress. The patches help you discern smaller effects, with less bias, and better understanding of whether the result is a fluke; but the basic version of a randomized trial between two interventions is still vastly superior to human intuition when it comes to something with a large but not blindingly obvious effect size.

↑ comment by Richard_Kennaway · 2019-12-10T12:45:41.717Z · LW(p) · GW(p)

> And premodern people seem weirdly productive compared to moderns in a lot of ways.

I am curious. Could you expand on this?

↑ comment by Rob Bensinger (RobbBB) · 2019-12-12T21:49:28.355Z · LW(p) · GW(p)

> I'm confused about how manioc detox is more useful to the group than the individual - each individual self-interestedly would prefer to detox manioc, since they will die (eventually) if they don't.

Yeah, I was wrong about manioc.

Something about the "science is fragile" argument feels off to me. Perhaps it's that I'm not really thinking about RCTs; I'm looking at Archimedes, Newton, and Feynman, and going "surely there's something small that could have been tweaked about culture beforehand to make some of this low-hanging scientific fruit get grabbed earlier by a bunch of decent thinkers, rather than everything needing to wait for lone geniuses". Something feels off to me when I visualize a world where all the stupidly-simple epistemic-methods-that-are-instrumentally-useful fruit got plucked 4000 years ago, but where Feynman can see big gains from mental habits like "look at the water" (which I do think happened).

Your other responses make sense. I'll need to chew on your comments longer to see how much I end up updating overall toward your view.

Replies from: johnswentworth

↑ comment by johnswentworth · 2019-12-12T23:06:43.145Z · LW(p) · GW(p)

> Something about the "science is fragile" argument feels off to me. Perhaps it's that I'm not really thinking about RCTs; I'm looking at Archimedes, Newton, and Feynman, and going "surely there's something small that could have been tweaked about culture beforehand to make some of this low-hanging scientific fruit get grabbed earlier by a bunch of decent thinkers, rather than everything needing to wait for lone geniuses".

I'd propose that there's a massive qualitative difference between black-box results (like RCTs) and gears-level model-building (like Archimedes, Newton, and Feynman). The latter are where basically all of the big gains are, and it does seem like society is under-invested in building gears-level models. One possible economic reason for the under-investment is that gears-level models have very low depreciation rates, so they pay off over a very long timescale.

Replies from: Vaniver

↑ comment by Vaniver · 2019-12-13T06:52:25.452Z · LW(p) · GW(p)

> One possible economic reason for the under-investment is that gears-level models have very low depreciation rates, so they pay off over a very long timescale.

I would suspect it's the other way around, that they have very high depreciation rates; we no longer have Feynman's gears-level models, for example.

↑ comment by Zack_M_Davis · 2019-12-09T20:41:18.158Z · LW(p) · GW(p)

> about whether we were really up to the task, and about what it would do to our movement if we tried

Does your outlook change at all if you try dropping the assumption that there is a "we" who cares about "our movement"? I've certainly found a lot of the skills I learned from reading the Sequences useful in my subsequent thinking about politics and social science. (Which thinking, obviously, mostly does not take the form of asking whether "liberalism" or "conservatism" is "better"; political spectra are dimensionality reductions.) I'm trying. I'm not sure how much I've succeeded. But I expect my inquiries to be more fruitful than those of people who make appeals to consequences to someone's "movement" before trying to think about something.

Replies from: Yvain

↑ comment by Scott Alexander (Yvain) · 2019-12-09T23:02:57.950Z · LW(p) · GW(p)

You're right in catching and calling out the appeal to consequences there, of course.

But aside from me really caring about the movement, I think part of my thought process is that "the movement" is also the source of these self-help techniques. If some people go into this space and then report later with what they think, I am worried that this information is less trustworthy than information that would have come from these same people before they started dealing with this question.

↑ comment by Tenoke · 2019-12-10T12:07:01.299Z · LW(p) · GW(p)

> Even if they only work in modern society, one of the millions of modern people who wanted financial, social, and romantic success before you would have come up with them.

Nobody is claiming that everything around rationalist circles is completely new or invented by them. It's often looked to me more like separating the more and less useful stuff with various combinations of bottom-up and top-down approaches.

Additionally, I'd like to identify as someone who is definitely in a much, much better place now because they discovered LW almost a decade ago, even though I also struggle with akrasia and do less to improve myself than I'd like. I'm very sure that just going to therapy wouldn't have improved my outcomes in so many areas, especially financially.

↑ comment by SebastianG (JohnBuridan) · 2019-12-15T04:26:59.954Z · LW(p) · GW(p)

There are so many arguments trying to be had at once here that it's making my head spin.

Here's one. What do we mean by self-help?

I think by self-help Scott is thinking about becoming psychologically a well-adjusted person. But what I think Jacobian means by "rationalist self-help" is coming to a gears level understanding of how the world works to aid in becoming well-adapted. So while Scott is right that we shouldn't expect rationalist self-help to be significantly better than other self-help techniques for becoming a well-adjusted person, Jacobian is right that rationalist self-help is an attempt to become both a well-adjusted person AND a person who participates in developing an understanding of how the world works.

So perhaps you want to learn how to navigate the space of relationships, but you also have this added constraint that you want the theory of how to navigate relationships to be part of a larger understanding of the universe, and not just some hanging chad of random methods without satisfactory explanations of how or why they work. That is to say, you are not willing to settle for unexamined common sense. If that is the case, then rationalist self-help is useful in a way that standard self-help is not.

A little addendum. This is not a new idea. Socrates thought of philosophy as a way of life, and tried to found a philosophy which would not only help people discover more truths, but also make them better, braver, and more just people. Stoics and Epicureans continued the tradition of philosophy as a way of life. Since then, there have always been people who have made a way of life out of applying the methods of rationality to normal human endeavors, and human society since then has pretty much always been complicated enough to marginally reward them for the effort.

↑ comment by ChristianKl · 2019-12-11T07:58:41.652Z · LW(p) · GW(p)

> If someone were to say rationality was great at figuring out whether liberalism or conservatism was better, I would agree that this is the sort of thing rationality should be able to do, in principle.

I think the best answer would be that rationality 101 is tabooing terms and not having the discussion on the level of "is liberalism or conservatism better".

OpenPhil does invest money into individual political decisions such as prison reform and I would count them to be part of our rationalist project.

↑ comment by ChristianKl · 2019-12-10T13:01:48.287Z · LW(p) · GW(p)

> If rationalists are trying to do therapy, they are competing with a field of tens of thousands of PhD-level practitioners with all the resources of the academic and health systems who have worked on the problem for decades.

Part of the "resources" is working inside a bureaucratic system where it's necessary to spend a lot of time jumping through hoops. There's another large chunk of time invested in intellectual analysis. Most of the people inside academia spend relatively little time in deliberate practice to build skills to do intervention. It's not surprising to me to have outsiders outperform academia by a large margin.

↑ comment by ChristianKl · 2019-12-10T08:34:38.954Z · LW(p) · GW(p)

> Another example: doctors fail miserably on the Bayes mammogram problem, but usually handle actual breast cancer diagnosis okay.

If doctors were good at breast cancer diagnosis, the US wouldn't have raised the age at which testing is done under the Obama administration.

We live in a world where doctors performed so many unnecessary operations after diagnosis that we decided we should do less testing.
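For readers who have not seen the Bayes mammogram problem mentioned above, here is a minimal worked sketch. The figures (1% prevalence, 80% sensitivity, 9.6% false-positive rate) are the ones commonly used in textbook statements of the problem. They are assumptions for illustration, not clinical data and not numbers given in this thread; the function name is hypothetical.

```python
def posterior_given_positive(prior, sensitivity, false_positive_rate):
    """P(cancer | positive test), computed with Bayes' theorem."""
    true_positives = prior * sensitivity
    false_positives = (1.0 - prior) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Illustrative textbook figures (assumptions, not data from this thread).
p = posterior_given_positive(prior=0.01, sensitivity=0.80,
                             false_positive_rate=0.096)
print(f"P(cancer | positive mammogram) = {p:.3f}")
```

With these inputs the posterior comes out a little under 8%, far below the 70-80% that people often guess. That gap is what "failing the mammogram problem" refers to.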

Replies from: gjm

↑ comment by gjm · 2019-12-10T10:37:48.323Z · LW(p) · GW(p)

I don't know what the actual causal story is here, but it's at any rate not obviously right that if doctors were good at it then there'd be no reason to increase the age, for a few reasons.

- Changing the age doesn't say anything about who's how good at what, it says that something has changed.

- What's changed could be that doctors have got worse at breast cancer diagnosis, or that we've suddenly discovered that they're bad. But it could also be, for instance:

  - That patients have become more anxious and therefore (1) more harmed directly by a false-positive result and (2) more likely to push their doctors for further procedures that would in expectation be bad for them.

  - That we've got better or worse, or discovered we're better or worse than we thought, at treating certain kinds of cancers at certain stages, in a way that changes the cost/benefit analysis around finding things earlier.

    - E.g., I've heard it said (but I don't remember by whom and it might be wrong, so this is not health advice) that the benefits of catching cancers early are smaller than they used to be thought to be, because actually the reason why earlier-caught cancers kill you less is that ones you catch when they're smaller are more likely to be slower-growing ones that were less likely to kill you whenever you caught them; if that's true and a recent discovery then it would suggest reducing the amount of screening you do.

  - That previous protocols were designed without sufficient attention to the downsides of testing.
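The selection mechanism in the "E.g." item above (slow-growing tumors are over-represented among those found by screening) can be illustrated with a toy simulation. This is only a sketch of the mechanism, not evidence that it holds for real cancers. The half-and-half mix of slow and fast tumors, the 10-year and 1-year detectable windows, and the single screening date are all made-up assumptions, and the function name is hypothetical.

```python
import random


def slow_share_among_screen_detected(n_tumors=20000, screen_time=50.0,
                                     horizon=100.0, slow_window=10.0,
                                     fast_window=1.0, seed=0):
    """Share of screen-detected tumors that are slow-growing.

    Each tumor becomes detectable at a uniformly random onset time and
    stays detectable-but-symptomless for a window that is long for slow
    tumors and short for fast ones. One screen happens at screen_time.
    """
    rng = random.Random(seed)
    detected_slow = 0
    detected_fast = 0
    for _ in range(n_tumors):
        is_slow = rng.random() < 0.5  # assumed 50/50 mix in the population
        onset = rng.uniform(0.0, horizon)
        window = slow_window if is_slow else fast_window
        if onset <= screen_time < onset + window:
            if is_slow:
                detected_slow += 1
            else:
                detected_fast += 1
    return detected_slow / (detected_slow + detected_fast)


if __name__ == "__main__":
    share = slow_share_among_screen_detected()
    print(f"Slow-growing share among screen-detected tumors: {share:.2f}")
```

Even though slow and fast tumors are equally common in this toy population, the screen mostly catches slow ones, simply because they spend longer in the detectable window. Screen-detected cases would then look less deadly regardless of whether early treatment helps.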

↑ comment by ChristianKl · 2019-12-10T12:36:15.311Z · LW(p) · GW(p)

You had a situation where the number of people who died from cancer was roughly the same in the US and Europe.

At the same time, the US started diagnosis earlier and had a higher rate of curing people who were diagnosed with cancer. This suggests that women were diagnosed with cancer in the US who would not have been diagnosed in Europe, with its lower testing rates, but that whether or not they were treated ultimately had little effect on breast cancer mortality.

If someone is good at making treatment decisions then he should get better outcomes if he gets more testing data. The fact that this didn't seem to happen suggests a problem with the decision making of the cancer doctors.

It's not definite but at the same time I don't see evidence that the doctors are actually good at making decisions.

comment by johnswentworth · 2020-12-29T02:41:23.588Z · LW(p) · GW(p)

Looking back, I have quite different thoughts on this essay (and the comments) than I did when it was published. Or at least much more legible explanations; the seeds of these thoughts have been around for a while.

On The Essay

The basketballism analogy remains excellent. Yet searching the comments, I'm surprised that nobody ever mentioned the Fosbury Flop or the Three-Year Swim Club. In sports, from time to time somebody comes along with some crazy new technique and shatters all the records.

Comparing rationality practice to sports practice, rationality has not yet had its Fosbury Flop.

I think it's coming. I'd give ~60% chance that rationality will have had its first Fosbury Flop in another five years, and ~40% chance that the first Fosbury Flop of rationality is specifically a refined and better-understood version of gears-level modelling. It's the sort of thing that people already sometimes approximate by intuition or accident, but has the potential to yield much larger returns once the technique is explicitly identified and intentionally developed.

Once that sort of technique is refined, the returns to studying technique become much larger.

On The Comments - What Does Rationalist Self-Improvement Look Like?

Scott's prototypical picture of rationalist self-improvement "starts looking a lot like therapy". A concrete image:

> My archetypal idea of "rationalist self-help" is sitting around at a CFAR workshop trying very hard to examine your mental blocks.

... and I find it striking that people mostly didn't argue with that picture, so much as argue that it's actually pretty helpful to just avoid a lot of socially-respectable stupid mistakes.

I very strongly doubt that the Fosbury Flop of rationality is going to look like therapy. It's going to look like engineering. There will very likely be math.

Today's "rationalist self-help" does look a lot like therapy, but it's not the thing which is going to have impressive yields from studying the techniques.

On The Comments - What Benefits Should Rationalist Self-Improvement Yield?

This is one question where I didn't have a clear answer a year ago, but now I feel ready to put a stake in the ground. Money cannot buy expertise [LW · GW]. Whenever expertise is profitable, there will be charlatans, and the charlatans win the social status game at least as often as not. 2020 has provided ample evidence of this, and in places where actually making the map match the territory matters.

The only way to reliably avoid the charlatans is to learn enough oneself, model enough of the world oneself, acquire enough expertise oneself, to distinguish the truth from the bullshit.

That is a key real-world skill - arguably the key real-world skill - at which rationalists should outperform the rest of the world, especially as a community. Rationalist self-improvement should make us better at modelling the world, better at finding the truth in a pile of bullshit. And 2020 gave us a great problem on which to show off that skill. We did well compared to the rest of the world, and there's a whole lot of room left to grow.

Replies from: romeostevensit, mr-hire, TAG

↑ comment by romeostevensit · 2023-12-28T23:19:03.693Z · LW(p) · GW(p)

It has now been 4 years since this post, and 3 years since your prediction. Two thoughts:

- Prediction markets have really taken off in the past few years and this has been a substantial upgrade in keeping abreast of what's going on in the world.

- The Fosbury Flop of rationality might have already happened: Korzybski's consciousness of abstraction. It's just not used because of the dozens to hundreds of hours it takes.

↑ comment by Matt Goldenberg (mr-hire) · 2020-12-29T02:51:30.997Z · LW(p) · GW(p)

> I think it's coming. I'd give ~60% chance that rationality will have had its first Fosbury Flop in another five years, and ~40% chance that the first Fosbury Flop of rationality is specifically a refined and better-understood version of gears-level modelling.

The Fosbury Flop succeeded because there are very clear win conditions for the high jump, and it beat everyone else.

I think it's much harder to have a Fosbury Flop for rationality because even if the technique is better, that's not immediately obvious, and the technique is therefore far less memetic.

Replies from: johnswentworth

↑ comment by johnswentworth · 2020-12-29T14:33:59.482Z · LW(p) · GW(p)

Missing some other necessary conditions here, but I think your point is correct.

This is a big part of why having a rationalist community matters. Presumably people had jumping competitions in antiquity, and probably at some point someone tried something Fosbury-like (and managed to not break their spine in the process). But it wasn't until we had a big international sporting community that the conditions were set for it to spread.

Now we have a community of people who are on the lookout for better learning/modelling/problem-solving techniques, and have some decent (though far from perfect) epistemic tools in place to distinguish such things from self-help bullshit. Memetically, a Fosbury flop of rationality probably won't be as immediately obvious a success as the Fosbury flop, since we don't have a rationality Olympics (and if we did, it would be Goodharted). On the other hand, we have the internet, we have much faster diffusion of information, and we have a community of people who actively experiment with this sort of stuff, so it's not obvious whether a successful new technique would spread more quickly or less on net.

Replies from: Yoav Ravid, alexander-gietelink-oldenziel

↑ comment by Yoav Ravid · 2021-01-23T15:39:41.485Z · LW(p) · GW(p)

The Fosbury Flop is a good analogy. Where I think it falls short is that rationality is indeed a much more complex thing than jumping. You would need more than just the invention and application of a technique by one person for a paradigm shift. It would at least also require distilling the technique well, learning how to teach it well, and changing the rationality canon in light of it.

I think a paradigm shift would happen when a new rationality canon is created and adopted that outperforms the current Sequences (very likely also containing new techniques), and I think that's doable (for a start, see the flaws in the Sequences that Eliezer himself described in the preface [LW · GW]).

This isn't low-hanging fruit, as it would require a lot of effort from skilled and knowledgeable people, but I would say it's at least visible fruit, so to speak.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2021-12-21T11:18:57.616Z · LW(p) · GW(p)

The obvious candidate for the Rationalist Fosbury flop is the development of good Forecasting environment/software/culture/theory etc.

↑ comment by TAG · 2023-08-15T12:49:34.039Z · LW(p) · GW(p)

If the central claim of rationality is that there are a small number of generic techniques that can make you better at a wide range of things, then the basketball analogy is misleading because basketball is a specific skill. The central claim of rationality was that there is such a small number of generic techniques, i.e. remove biases and use Bayes. Bayes (Bayes!, Bayes!) was considered the Fosbury Flop for everything. But that seems not to have worked, and to have been quietly dropped. All the defences of rationalism in this article implicitly use a toolbox approach, although law thinking is explicitly recommended. [LW · GW]

comment by Viliam · 2019-12-10T23:04:47.691Z · LW(p) · GW(p)

Jacobian and Scott, you should do the adversarial collaboration thing!

I suspect that the debate about rationalist self-improvement has a problem similar to the "nature vs nurture" debates: it depends on the population. Take a population of literally clones, and all their differences can be explained by environment. Take a population that lives in a uniform environment, and their differences will be mostly genetic.

Similarly, if you already lived in a subculture that gave you the good answers and good habits, all that rationality can do is to give you a better justification for what you already know, and maybe somewhat prepare you for situations when you might meet something you don't know yet. On the other hand, if your environment got many things wrong, and you already kinda suspect it but the peer pressure is strong, learning the right answers and finding the people who accept them is a powerful change.

Scott makes a good point that the benefits of rationality not only fail to live up to expectation based on the rationalist fictional evidence, but often seem invisible. (It's not just that I fail to be Anasûrimbor Kellhus and change the world, but I mostly fail to overcome my own procrastination. And even if I feel an improvement initially, my life seven years after finding Less Wrong doesn't seem visibly different from outside.) Jacobian makes a good point that in a world with already existing great differences, a significant improvement for an individual is still invisible in the large scale. (Doubling my income and living in greater peace is a huge thing for me, but people counting the number of successful startups will be unimpressed.)

It is also difficult to see how x-rationality changed my life, because I cannot control for all the other things that happened during recent years. The things I found on LW, I could have found elsewhere. I can't see the things that didn't happen to me thanks to LW. I can't even be sure about the impact on myself, so how could I talk about the community as a whole?

comment by jollybard · 2019-12-10T03:09:31.632Z · LW(p) · GW(p)

I've said it elsewhere, but wringing your hands and crying "it's because of my akrasia!" is definitely not rational behavior; if anything, rationalists should be better at dealing with akrasia. What good is a plan if you can't execute it? It is like a program without a compiler.

Your brain is part of the world. Failing to navigate around akrasia is epistemic failure.

Replies from: strangepoop, ChristianKl↑ comment by a gently pricked vein (strangepoop) · 2019-12-12T12:42:10.952Z · LW(p) · GW(p)

While you're technically correct, I'd say it's still a little unfair (in the sense of connoting "haha you call yourself a rationalist how come you're failing at akrasia").

Two assumptions that can, I think you'll agree, take away from the force of "akrasia is epistemic failure":

- if modeling and solving akrasia is, like diet, a hard problem that even "experts" barely have an edge on, and importantly, things that do work seem to be very individual-specific making it quite hard to stand on the shoulders of giants

- if a large percentage of people who've found and read through the sequences etc have done so only because they had very important deadlines to procrastinate

...then on average you'd see akrasia over-represented in rationalists. Add to this the fact that akrasia itself makes manually aiming your rationality skills at what you want harder. That can leave it stable even under very persistent efforts.

↑ comment by ChristianKl · 2019-12-10T12:33:38.432Z · LW(p) · GW(p)

That's irrelevant to the question of whether interventions such as reading the sequences or going to a CFAR workshop improve people's outcomes.

It's useful for this discussion to see "rationalist self improvement" as being about the current techniques instead of playing motte-and-bailey.

comment by romeostevensit · 2019-12-09T20:31:53.550Z · LW(p) · GW(p)

The search space is multiplicative

Most people have a serious problem with doubling down on the things they're already good at rather than improving the areas they are bad at. This behavior interfaces well with the need to develop comparative advantage in a tribe of 150. It is misfiring badly in the modern context with massive peer groups.

Being embarrassingly bad at things is really difficult past the identity formation stage of adolescence where people calcify around whichever reward signals they invested a few hundred hours in, thus getting over the hump. People build an acceptable life out of whatever skills they have available and avoid areas of life that will provide evidence of incompetence. Midlife crises are often about remembering this forgotten thing when context changes enough to highlight it.

Much of the variance in the outcomes people most care about isn't very controlled by skill; this inculcates learned helplessness in other domains.

Replies from: ESRogs↑ comment by ESRogs · 2019-12-10T07:55:43.145Z · LW(p) · GW(p)

This behavior interfaces well with the need to develop comparative advantage in a tribe of 150. It is misfiring badly in the modern context with massive peer groups.

Wouldn't the modern context make comparative advantage even more important? It seems to me that the bigger a society you're operating in, the greater the returns to specialization.

Replies from: romeostevensit↑ comment by romeostevensit · 2019-12-10T21:49:08.721Z · LW(p) · GW(p)

Ultra high returns and positive externalities in the tails. Really bad internalities to personal quality of life if some basic thresholds aren't met. I am reminded of David Foster Wallace talking about how the sports press tries to paper over the absurd lifestyles that elite athletes actually live and tries to make them seem relatable because that's the story the public wants.

What good is it to become a famous rich athlete if you lose all your money and wind up with brain damage because you never learned to manage any risks?

Replies from: ESRogs

comment by FactorialCode · 2019-12-11T06:07:06.548Z · LW(p) · GW(p)

I think most of the strength of rationalism hasn't come from the skill of being rational, but rather from the rationality memeplex that has developed around it. Forming accurate beliefs from scratch is a lot more work and happens very slowly compared to learning them from someone else. Compare how much an individual PhD student achieves in their doctorate with the body of knowledge that they learn before making an original contribution. Likewise, someone who's practised the art of rationality will be instinctively better at distilling information into accurate beliefs. As a result they might have put together slightly more accurate beliefs about the world given what they've seen, but that slight increase in knowledge due to rationality isn't going to hold a candle to the massive pile of cached ideas and arguments that have been debated to hell and back in the rat-sphere.

To paint a clearer picture of what I'm getting at, suppose we model success as:

[Success] ~ ([Knowledge]*[Effort/Opportunities])

I.e. success is proportional to how much you know, times how many opportunities you have to make use of it and how much effort you make to perform actions that you have chosen based on that knowledge. I think this could be further broken down into:

[Success] ~ ([Local Cultural Knowledge]+[Personal Knowledge])*[Effort/Opportunities]

I.e. some knowledge is culturally transmitted, and some knowledge is acquired by interacting with the world. Then being a good practitioner of rationality outside the rationality community corresponds to maybe this:

[Success] ~ (1.05*[Local Cultural Knowledge]+1.05*[Personal Knowledge])*[Effort/Opportunities]

In other words, a good rationalist will be better than the equivalent person at filtering through their cultural knowledge to identify the valuable aspects, and they'll be better at taking in all the information that they have personally observed and using it to form beliefs. However, humans already need to be good at this, and evolution has spent a large amount of time honing heuristics for making this happen. So any additional gains in information efficiency will be hard won, and possibly Pyrrhic, coming only after extensive practice.

However, I think it's better to model an individual in the rationalist community as:

[Success] ~ ([Local Cultural Knowledge]+[Personal Knowledge]+[Rationalist Cultural Knowledge])*[Effort/Opportunities]

LessWrong and the adjacent rat-sphere have fostered a community of individuals who care about forming accurate beliefs on a very broad set of topics, and who also focus on the topic of forming accurate beliefs itself. I think that because of this, the cultural knowledge that has been put together by the rationalist community has exceptionally high "quality" compared to what you would find locally.

Anyone who comes to LW will be exposed to a very large pile of new cultural knowledge, and will be able to put this knowledge into practice when the opportunity comes or they put in effort. I think that the value of this cultural knowledge vastly exceeds any gains that one could reasonably make by being a good rationalist.
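To make the comparison concrete, here is a minimal Python sketch of the toy model above. The function name, the parameter names, and every number in it are illustrative assumptions rather than measurements, and folding the 1.05 multiplier and the extra knowledge term into one function is just a convenience for comparing the cases side by side.

```python
def success(local, personal, effort, rationalist=0.0, skill_multiplier=1.0):
    """Toy proportional model: (sum of knowledge terms) * effort/opportunities.

    skill_multiplier scales local and personal knowledge (the 1.05 case);
    rationalist is the extra community knowledge term. All units are arbitrary.
    """
    knowledge = skill_multiplier * (local + personal) + rationalist
    return knowledge * effort


# Hypothetical inputs, chosen only to illustrate the shape of the argument.
LOCAL, PERSONAL, EFFORT = 10.0, 5.0, 2.0

baseline = success(LOCAL, PERSONAL, EFFORT)
# A skilled rationalist outside the community: about 5% better use of what they know.
skilled = success(LOCAL, PERSONAL, EFFORT, skill_multiplier=1.05)
# A community member: no skill bonus, but access to an extra pool of knowledge.
community = success(LOCAL, PERSONAL, EFFORT, rationalist=8.0)

print(f"baseline={baseline:.2f} skilled={skilled:.2f} community={community:.2f}")
```

With these made-up inputs, the skill multiplier moves the result by about 5%, while the added knowledge term moves it by much more. How large the rationalist term really is relative to the others is exactly the open question, so the sketch shows the structure of the claim, not evidence for it.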

A rationalist benefiting from LW doesn't look like someone going over a bunch of information and pulling out better conclusions or ideas than an equivalent non-rationalist. Rather, it looks like someone remembering some concept or idea they read in a blog post, and putting it into practice or letting it influence their decision when the opportunity shows itself.

Off the top of my head, some of the ideas that I've invoked or that have influenced me IRL to positive effect have been rationalist taboo, meditation, CO2 levels, everything surrounding AI risk, and the notion of effective altruism. To a lesser extent, I would say that rat fiction has also influenced me. I can't produce any evidence that I've benefited from it, but characters like HJPEV and the Comet King have become something like role models for me and this has definitely influenced my personality and behaviour.

The question I think we should ask ourselves, then, is: has the art of rationality allowed us to put together a repository of cultural knowledge with an unusually high quality compared to what you would see in daily life?

Replies from: Viliam↑ comment by Viliam · 2019-12-11T19:52:13.337Z · LW(p) · GW(p)

The question I think we should ask ourselves, then, is: has the art of rationality allowed us to put together a repository of cultural knowledge with an unusually high quality compared to what you would see in daily life?

Yep. A group can usually collect much more knowledge than an individual. But the individual can (a) choose the group, and (b) contribute to its knowledge. From a selfish perspective, the most important result of rationality is recognizing other rational people and choosing to learn from them. Luckily, information is free, so more people taking the knowledge doesn't increase the costs for those who generate it.

comment by Jacob Falkovich (Jacobian) · 2021-01-10T18:56:25.881Z · LW(p) · GW(p)

This is a self-review, looking back at the post after 13 months.

I have made a few edits to the post, including three major changes:

1. Sharpening my definition of what counts as "Rationalist self-improvement" to reduce confusion. This post is about improved epistemics leading to improved life outcomes, which I don't want to conflate with some CFAR techniques that are basically therapy packaged for skeptical nerds.

2. Addressing Scott's "counterargument from market efficiency" that we shouldn't expect to invent easy self-improvement techniques that haven't been tried.

3. Talking about selection bias, which was the major part missing from the original discussion. My 2020 post The Treacherous Path to Rationality [LW · GW] is somewhat of a response to this one, concluding that we should expect Rationality to work mostly for those who self-select into it and that we'll see limited returns to trying to teach it more broadly.

The past 13 months also provided more evidence in favor of epistemic Rationality being ever more instrumentally useful. In 2020 I saw a few Rationalist friends fund successful startups and several friends cross the $100k mark for cryptocurrency earnings. And of course, LessWrong led the way on early and accurate analysis of most COVID-related things. One result of this has been increased visibility and legitimacy, and of course another is that Rationalists have a much lower number of COVID cases than all other communities I know.

In general, this post is aimed at someone who discovered Rationality recently but is lacking the push to dive deep and start applying it to their actual life decisions. I think the main point still stands: if you're Rationalist enough to think seriously about it, you should do it.

comment by alkjash · 2020-12-03T02:23:43.006Z · LW(p) · GW(p)

Nominating this post because it asks a very important question - it seems worth considering that rationalists should get out of self-improvement altogether and only focus on epistemics - and gives a balanced picture of the discourse. The section on akrasia seems particularly enlightening and possibly the crux on whether or not techniques work, though I still don't have too much clarity on this. This post also gives me the push necessary to write a long overdue retrospective on my CFAR and Hammertime experience.

comment by norswap · 2020-12-10T03:31:43.560Z · LW(p) · GW(p)

Nominating because the idea that rationalists should win (which we can loosely define as "be better at achieving their goals than non-rationalists") has been under fire in the community (see for instance Scott's comment on this post).