Coupling for Decouplers

post by Jacob Falkovich (Jacobian) · 2025-04-07T15:40:30.743Z · LW · GW · 2 comments


Previously in sequence: Moonlight Reflected

Cross-posted from SecondPerson.dating

Rationalism has a dating problem. I don’t mean simply the fact that a lot of rationalists are single, which may be adequately explained by age, sex ratio, and an unusual combination of slow life history with fears of an imminent apocalypse. A young guy once approached my friend at a party in Lighthaven:

Guy: I recognize you, I read your date-me doc.

My friend: Did you fill out the application?

Guy: Ah, well, I don’t think your family plans will work with my AI timelines.

We’ll come back to this guy eventually, but today I want to talk about rationality as a philosophy, not just a collection of single men. What do I mean by “a problem”? The last popular post on LessWrong tagged “Relationships (Interpersonal)” is from 3 years ago. It’s titled “Limerence Messes Up Your Rationality Real Bad, Yo”.

Flirting, crushes, sex, dating — something about these doesn’t jibe with Bay Area indigenous ways of knowing. Despite their broad curiosity about human nature and behavior, major rationalist writers like Eliezer, Scott, and Zvi have written very little about dating, and almost nothing about their own romantic lives. When I get feedback from rationalist readers, I often get a sense of deep resistance not to some particular claim I’ve made, but to Second Person’s entire way of thinking about dating.

Here’s an email from a longtime reader of my old rationalist blog, emphasis mine:

I had high hopes, but I'm a bit disappointed so far. Putanumonit was often quite good, but Second Person so far is not living up to it. Where are the numbers???

Of course markets are a useful frame to start with, but how about some example Fermi estimates of supply and demand? Or you could walk through some "backprop from the incentive gradient" examples - i.e. if I tighten/relax requirement X, how does that impact supply (as a Fermi estimate)? If I change what I'm offering in manner Y, how does that impact demand?

It mostly feels like exhortations about how to relate to the world, rather than fleshing out my models of the world.

There’s the rub: models of the dating world do you little good on the page. They need to be in your flesh. And it is these affairs of the flesh, physically and metaphorically, that rationalism prefers to ignore.

Bayesian Brain

The posterior odds are equal to the prior odds multiplied by the ratio of likelihoods. This is the entire Bayesian Torah, the rest is commentary.

Rationalists are really excited about Bayes’ Rule — accusations that LessWrong is a Bayesian cult predate accusations of it being a sex cult, a right-wing cult, a shrimp cult, a Bitcoin cult, or a doomsday cult. The excitement is understandable: start with any prior distribution that’s at least open-minded enough to contain the true hypothesis, keep applying Bayes’ Rule to all evidence, and you’ll walk the most efficient and reliable path to true belief. Test yourself by making predictions of the world, and reap the rewards when your Bayes-informed predictions come true!
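For readers who want the odds form of the rule spelled out, here is a minimal Python sketch. The function names and all the numbers are hypothetical, chosen only for illustration, and the sketch assumes each piece of evidence is independent given the hypothesis, which real evidence rarely is.

```python
def posterior_odds(prior_odds: float, p_if_true: float, p_if_false: float) -> float:
    """Odds form of Bayes' rule: posterior odds = prior odds * likelihood ratio.

    p_if_true  -- probability of seeing the evidence if the hypothesis is true
    p_if_false -- probability of seeing it if the hypothesis is false (must be > 0)
    """
    likelihood_ratio = p_if_true / p_if_false
    return prior_odds * likelihood_ratio


def update_on_all(prior_odds: float, evidence: list[tuple[float, float]]) -> float:
    """Apply the rule once per piece of evidence, feeding each posterior in as the next prior.

    Assumes the pieces of evidence are conditionally independent (an illustrative simplification).
    """
    odds = prior_odds
    for p_if_true, p_if_false in evidence:
        odds = posterior_odds(odds, p_if_true, p_if_false)
    return odds


def odds_to_probability(odds: float) -> float:
    """Convert odds in favor (e.g. 0.75 means 3:4) into a probability."""
    return odds / (1.0 + odds)


# Hypothetical example: prior odds of 1:4, then evidence three times
# likelier under the hypothesis than under its negation.
odds = posterior_odds(prior_odds=0.25, p_if_true=0.75, p_if_false=0.25)
print(odds)                       # 0.75, i.e. odds of 3:4
print(odds_to_probability(odds))  # roughly 0.43
```

Note what the sketch leaves out: it scores one isolated proposition from the outside, with the likelihoods handed over in advance.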

So you can only imagine the excitement when, a few years after LessWrong was established, we found that literally all every part of your brain does is make Bayesian updates to minimize prediction errors. Scott Alexander wrote several excited articles about it. I wrote several excited articles about it. And most everyone else said ok cool story we’re gonna ignore it and just do AI posting now.

It turns out that the Bayesian brain is not quite a rationalist homunculus sitting in your head doing math. It doesn’t evaluate separate propositions, it updates grand models of everything that don’t split neatly into discrete parts. These models are never impartial, they’re always centered in you. Crucially, your brain is all about that action: it minimizes prediction error by making the prediction come true with your body, not by passively updating.

The rationalist tries to use their brain for something quite different:

Stanovich talks about “cognitive decoupling”, the ability to block out context and experiential knowledge and just follow formal rules, as a main component of both performance on intelligence tests and performance on the cognitive bias tests that correlate with intelligence. Cognitive decoupling is the opposite of holistic thinking. It’s the ability to separate, to view things in the abstract, to play devil’s advocate.

Speculatively, we might imagine that there is a “cognitive decoupling elite” of smart people who are good at probabilistic reasoning and score high on the cognitive reflection test and the IQ-correlated cognitive bias tests. These people would be more likely to be male, more likely to have at least undergrad-level math education, and more likely to have utilitarian views. Speculating a bit more, I’d expect this group to be likelier to think in rule-based, devil’s-advocate ways, influenced by economics and analytic philosophy. I’d expect them to be more likely to identify as rational.

Cognitive decoupling is very useful, but we didn’t inherit our brains from ancestors chosen for their performance on IQ-correlated cognitive bias tests. We inherited them from ancestors who fucked. Fucking takes more of what our brain does naturally, less of what rationalists bend it towards.

Inside View

To decouple means to separate. To separate evaluation from action, global truths from personal context, finding out from fucking around. It works great in domains where there’s a universal truth, a lot of data, and personal biases preventing other smart people from putting it all together. Epidemiology turned out to be such a domain, and rationalists got basically everything right about COVID weeks ahead of “experts” and years ahead of journalists.

This success came from applying the “outside view” of a detached observer who reasons about the problem by analogy to an appropriate reference class. The “inside view” pollutes reasoning about what is true with impertinent subjectivity: what you wish to be true, what you’re scared to admit, whether it feels cringe to be seen caring about COVID in February 2020.

In dating, there’s only the inside view.

The question everyone wants to answer in dating is: are we compatible? The outside view can’t answer it. There are no reference classes fine-grained enough to guarantee romantic compatibility and thank goodness — you wouldn’t want a partner who’s indifferent between you and anyone else in your reference class.

The “subjective biases” of the inside view alone can answer the question of compatibility. Do you really wish to be compatible? That wish itself can make it happen through your actions. Are you scared you aren’t? The fear will make it so. Waiting for outsiders to decide for you? You’re already doomed. The inside view is immodest, like the immodesty it takes to believe that you alone are special to your partner and they to you. If you both believe that, population statistics be damned, then you’re compatible.

The desire to stand outside, objective and impartial, is deeply rooted in rationalism. It explains the draw of utilitarianism, an ethics that promises an objective answer to any moral choice that doesn’t depend on who you are and doesn’t ask itself how come you’re there to make that choice. It’s a philosophy of nobody in particular.

But your dating life is particularly yours. There’s no standing outside it, no replacing you with some arbitrary observer. Dating can’t be utilitarian, impersonal, or objective.

Do or Do not

Rationalism isn’t a philosophy of everything, but it covers two main things: knowing and doing. Or, in rationalist speak: epistemology and decision theory.

The two coexist somewhat uneasily within rationality, careful not to mix: like oil and water, or like quanta and gravity. Rationalist epistemology asks that you take care not to break anything by jumping to conclusions and doing something. Decision theory asks that you learn all that’s certain and put a probability on all that isn’t before deciding.

In dating, learning can’t be separated from action.

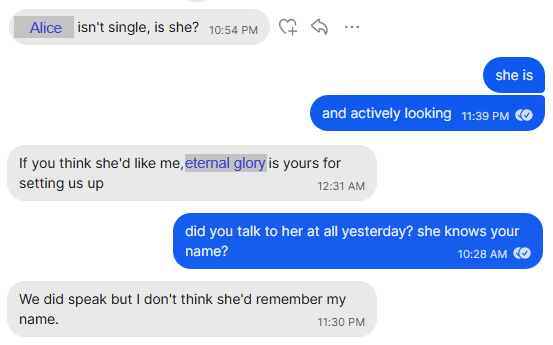

A few weeks ago, I hosted a brunch where my rationalist friend “Bob” spotted the attractive “Alice”. The next day, Bob texted me:

Rationalism teaches that when you reach a decision point that hinges on a simple question, you should find out the answer before proceeding. This sounds trivial but it isn’t: laziness, confirmation bias, and wishful thinking send many people driving into trees that could be avoided by asking a simple question first. Bob had a simple question: whether Alice is single, and an easy way to find out: asking me.

It turns out that whether Alice is single is far from the only question affecting the outcome. It matters just as much whether Bob finds this out by asking a friend, or asking her directly, or infers her status from hints she drops in conversation. It matters a lot how Bob treats Alice when he doesn’t know for sure if she’s single, whether he’s bold or timid, friendly or reserved, interested or aloof.

If Bob approached Alice with confidence, delighted her in conversation, and paid enough attention to glean who she is and what she cares about, it would have a much greater impact on their future prospects than whether she’s “single”. Maybe Alice’s relationship status is “complicated”. Maybe she wasn’t single but will soon be. Maybe she’s married but will introduce Bob, who impressed her, to her single friends.

Since Bob did none of those things, it didn’t matter at all that Alice was single. I asked her if I could give Bob her number; she replied with a curt “no thank you”.

At its best, Rationalism is a generalization of the scientific method that takes into account the biases of the scientist. But science can’t tell you shit about your dating life.

“Is Alice single?” is a general, scientific question. In principle, it could be answered by me, or Alice, or Bob himself and we should arrive at the same answer. That’s why it’s the wrong question. “Will Alice go out with me?” is a personal, contextual question. The answer depends on who you are and how you ask and whether Sagittarius is in retrograde. Bob can act to make a date happen or fail to do so; taking action must have priority over asking questions.

The Void

A rationalist objected to the woman in my example setting the stage for her attraction to develop instead of directly pursuing the man she’s interested in:

if that's how your brain works, if that's the only way you can be attracted to someone, yowza. good luck with that, i guess i just can't understand it on some level. something in my intuition is telling me something about this is wrong […]

on further reflection the thing that repulses me about the behavior described in the screenshot is that it's like, the opposite of the nameless virtue

One could raise an objection in a similar spirit to what I’ve written in this essay: “All you’re saying is that dating is a hard system to model: dynamic, anti-inductive, and observer-dependent. Perhaps this makes rationalist techniques less reliable, or difficult to implement. But the techniques were always just means, not the end. You know what you want in dating — let this alone guide you and don’t worry about the methods.”

I don’t think you know what you want in dating. I asked you, and you responded with a mix of true desires, mimetic artifacts, self-reflection, and confusion. In retrospect, I shouldn’t have expected anything different. I did this exercise myself, a long time ago, and got the same mixed results.

Does Bob “know what he wants” if he wants a date with Alice? It’s a desire he didn’t have 10 minutes before he saw her, and one that would transform to something different 10 minutes into the date. This certainly doesn’t seem like the sort of unfaltering focus on a fixed target that the “nameless virtue” implies.

Would he “know what he wants” if he had a comprehensive list of dating desiderata, all of which indeed correlated with his relationship satisfaction? Such a list would guide him to compile databases and derive correlations, not to flirt with a girl at brunch about whom he knows almost nothing. Yet, as we discussed, it’s the latter that has any chance of leading him anywhere.

A romantic relationship changes who you are and what you want. You cannot truly connect with another person if you’re unwilling to budge from where you stood. You cannot love them if you only see them through a checklist you came up with before you met them. Dating involves making yourself less predictable and legible to yourself, a motion that is contrary to all rationalist instinct.

Episteme? I Hardly Know Him

Scott Alexander explains why rationalists don’t write about dating in his seminal review of Seeing Like a State:

[James] Scott often used the word “rationalism” to refer to the excesses of High Modernism, and I’ve deliberately kept it. What relevance does this have for the LW-Yudkowsky-Bayesian rationalist project? I think the similarities are more than semantic; there certainly is a hope that learning domain-general skills will allow people to leverage raw intelligence and The Power Of Science to various different object-level domains. I continue to be doubtful that this will work in the sort of practical domains where people have spent centuries gathering metis in the way Scott describes; this is why I’m wary of any attempt of the rationality movement to branch into self-help. […]

I also think that a good art of rationality would look a lot like metis, combining easily teachable mathematical rules with more implicit virtues which get absorbed by osmosis.

This also explains how I, a card-carrying, honored rationalist veteran, am writing a dating blog.

I am trying to be helpful by writing the opposite of self-help. I’m combining teachable mathematical rules with trying to describe virtues that can only be slowly absorbed and practiced. And in this post I have used rationality to point at the limits of the rationalist approach. Dating is the realm where episteme fails and metis reigns. Rationalism has a lot to contribute to it — but only if it can get over itself.

2 comments


comment by johnswentworth · 2025-04-07T17:17:01.696Z

(Context: I've been following the series in which this post appeared, and originally posted this comment on the substack version.)

Overcompressed summary of this post: "Look, man, you are not bottlenecked on models of the world, you are bottlenecked on iteration count. You need to just Actually Do The Thing a lot more times; you will get far more mileage out of iterating more than out of modeling stuff."

I definitely buy that claim for at least some people, but it seems quite false in general for relationships/dating.

Like, sure, most problems can be solved by iterating infinitely many times. The point of world models is to not need so many damn iterations. And because we live in a very high-dimensional world, naive iteration will often not work at all without a decent world model; one will never try the right things without having some prior idea of where to look.

Example: Aella's series on how to be good in bed. I was solidly in the audience for that post: I'd previously spent plenty of iterations becoming better in bed, ended up with solid mechanics, but consistently delivering great orgasms does not translate to one's partner wanting much sex. Another decade of iteration would not have fixed that problem; I would not have tried the right things, my partner would not have given the right feedback (indeed, much of her feedback was in exactly the wrong direction). Aella pointed in the right vague direction, and exploring in that vague direction worked within only a few iterations. That's the value of models: they steer the search so that one needs fewer iterations.

That's the point of all the blog posts. That's where the value is, when blog posts are delivering value. And that's what's been frustratingly missing from this series so far. (Most of my value of this series has been from frustratedly noticing the ways in which it fails to deliver, and thereby better understanding what I wish it would deliver!) No, I don't expect to e.g. need 0 iterations after reading, but I want to at least decrease the number of iterations.

And in regards to "I don’t think you know what you want in dating"... the iteration problem still applies there! It is so much easier to figure out what I want, with far fewer iterations, when I have better background models of what people typically want. Yes, there's a necessary skill of not shoving yourself into a box someone else drew, but the box can still be extremely valuable as evidence of the vague direction in which your own wants might be located.

comment by Said Achmiz (SaidAchmiz) · 2025-04-08T00:55:33.336Z

So you can only imagine the excitement when, a few years after LessWrong was established, we found that *literally all every part of your brain does is make Bayesian updates to minimize prediction errors.* Scott Alexander wrote several excited articles about it. I wrote several excited articles about it. And most everyone else said ok cool story we’re gonna ignore it and just do AI posting now.

Did we “find” this? That’s not what seems to me to have happened.

As far as I can tell, this is a speculative theory that some people found to be very interesting. Others said “well, perhaps, this is certainly an interesting way of thinking about some things, although there seem to be some hiccups, and maybe it has some correspondence to actual reality, maybe not—in any case do you have a more concrete model and stronger evidence?” and got the answer “more research is needed”. Alright, cool, by all means, proceed, research more.

And then nothing much came of it, and so of course it was thereafter mostly ignored. What is there to say about it or do with it?