Why I’m not a Bayesian

post by Richard_Ngo (ricraz) · 2024-10-06T15:22:45.644Z · 101 comments

This is a link post for https://www.mindthefuture.info/p/why-im-not-a-bayesian

Contents

- Degrees of belief
- Degrees of truth
- Model-based reasoning
- The correct role of Bayesianism

This post focuses on philosophical objections to Bayesianism as an epistemology. I first explain Bayesianism and some standard objections to it, then lay out my two main objections (inspired by ideas in philosophy of science). A follow-up post will speculate about how to formalize an alternative.

Degrees of belief

The core idea of Bayesian epistemology: we should ideally reason by assigning credences to propositions which represent our degrees of belief that those propositions are true. (Note that this is different from Bayesianism as a set of statistical techniques, or Bayesianism as an approach to machine learning, which I don’t discuss here.)

If that seems like a sufficient characterization to you, you can go ahead and skip to the next section, where I explain my objections to it. But for those who want a more precise description of Bayesianism, and some existing objections to it, I’ll more specifically characterize it in terms of five subclaims. Bayesianism says that we should ideally reason in terms of:

1. Propositions which are either true or false (classical logic)

2. Each of which is assigned a credence (probabilism)

3. Representing subjective degrees of belief in their truth (subjectivism)

4. Which at each point in time obey the axioms of probability (static rationality)

5. And are updated over time by applying Bayes’ rule to new evidence (rigid empiricism)

I won’t go into the case for Bayesianism here except to say that it does elegantly formalize many common-sense intuitions. Bayes’ rule follows directly from a straightforward Venn diagram. The axioms of probability are powerful and mathematically satisfying. Subjective credences seem like the obvious way to represent our uncertainty about the world. Nevertheless, there is a wide range of alternatives to Bayesianism, each branching off from the claims listed above at different points:
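To make the Venn-diagram point concrete, here is a minimal Python sketch with made-up counts: 100 equally likely cases are split by whether a hypothesis H holds and whether evidence E is observed. Reading P(H|E) off the diagram as the share of the E region that overlaps H gives the same number as Bayes’ rule, P(H|E) = P(E|H)P(H)/P(E).

```python
from fractions import Fraction

# Made-up counts for 100 equally likely cases, split by whether hypothesis H
# holds and whether evidence E is observed (the four regions of a Venn diagram).
counts = {
    ("H", "E"): 8,
    ("H", "not E"): 2,
    ("not H", "E"): 18,
    ("not H", "not E"): 72,
}
total = sum(counts.values())


def prob(pred):
    """Fraction of cases whose (h, e) label satisfies the predicate."""
    return Fraction(sum(n for label, n in counts.items() if pred(label)), total)


p_h = prob(lambda label: label[0] == "H")
p_e = prob(lambda label: label[1] == "E")
p_h_and_e = prob(lambda label: label == ("H", "E"))

# Read straight off the diagram: the share of the E region that overlaps H.
posterior_from_diagram = p_h_and_e / p_e

# Bayes' rule: P(H|E) = P(E|H) * P(H) / P(E), with P(E|H) = P(H and E) / P(H).
likelihood = p_h_and_e / p_h
posterior_from_bayes = likelihood * p_h / p_e

print(posterior_from_diagram, posterior_from_bayes)  # 4/13 4/13
```

Exact fractions are used so the two routes can be compared for equality without floating-point noise.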

- Traditional epistemology only accepts #1, and rejects #2. Traditional epistemologists often defend a binary conception of knowledge—e.g. one defined in terms of justified true belief (or a similar criterion, like reliable belief).

- Frequentism accepts #1 and #2, but rejects #3: it doesn’t think that credences should be subjective. Instead, frequentism holds that credences should correspond to the relative frequency of an event in the long term, which is an objective fact about the world. For example, you should assign 50% credence that a flipped coin will come up heads, because if you continued flipping the coin the proportion of heads would approach 50%.

- Garrabrant induction accepts #1 to #3, but rejects #4. In order for credences to obey the axioms of probability, logically equivalent statements must be assigned the same credence, and every logical implication of a statement must be assigned at least as much credence as the statement itself. But this “logical omniscience” is impossible for computationally-bounded agents like ourselves. So in the Garrabrant induction framework, credences instead converge to obeying the axioms of probability in the limit, without guarantees that they’re coherent after only limited thinking time.

- Radical probabilism accepts #1 to #4, but rejects #5. Again, this can be motivated by qualms about logical omniscience: if thinking for longer can identify new implications of our existing beliefs, then our credences sometimes need to update via a different mechanism than Bayes’ rule. So radical probabilism instead allows an agent to update to any set of statically rational credences at any time, even if they’re totally different from its previous credences. The one constraint is that each credence needs to converge over time to a fixed value, i.e. it can’t continue oscillating indefinitely (otherwise the agent would be vulnerable to a Dutch Book).
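The Dutch Book idea is easiest to see in its static form, where credences violate the axioms at a single moment. The sketch below assumes an agent that accepts credence times stake as the fair price of a bet paying the stake if a proposition is true. If its credences in A and not-A do not sum to 1, a bookie can arrange bets that lose the agent money in every world. This is the synchronic argument for #4. The diachronic argument against oscillating credences needs more machinery and is not shown.

```python
from fractions import Fraction


def dutch_book(credence_a, credence_not_a, stake=Fraction(1)):
    """Agent's net payoff in each world from a bookie exploiting its credences.

    Assumes the agent accepts credence * stake as the fair price of a bet that
    pays `stake` if the proposition is true. If the two credences sum to more
    than 1, the bookie sells the agent both bets; if they sum to less than 1,
    the bookie buys both bets from the agent at those prices.
    """
    total_price = (credence_a + credence_not_a) * stake
    agent_buys = credence_a + credence_not_a > 1
    payoffs = {}
    for world in ("A", "not A"):
        # Exactly one of A and not-A is true, so exactly one bet pays out.
        payout = stake
        net = payout - total_price
        payoffs[world] = net if agent_buys else -net
    return payoffs


print(dutch_book(Fraction(3, 5), Fraction(3, 5)))  # sure loss of 1/5 in both worlds
print(dutch_book(Fraction(1, 2), Fraction(1, 2)))  # coherent credences: no sure loss
```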

It’s not crucial whether we classify Garrabrant induction and radical probabilism as variants of Bayesianism or alternatives to it, because my main objection to Bayesianism doesn’t fall into any of the above categories. Instead, I think we need to go back to basics and reject #1. Specifically, I have two objections to the idea that idealized reasoning should be understood in terms of propositions that are true or false:

- We should assign truth-values that are intermediate between true and false (fuzzy truth-values)

- We should reason in terms of models rather than propositions (the semantic view)

I’ll defend each claim in turn.

Degrees of truth

Formal languages (like code) are only able to express ideas that can be pinned down precisely. Natural languages, by contrast, can refer to vague concepts which don’t have clear, fixed boundaries. For example, the truth-values of propositions which contain gradable adjectives like “large” or “quiet” or “happy” depend on how we interpret those adjectives. Intuitively speaking, a description of something as “large” can be more or less true depending on how large it actually is. The most common way to formulate this spectrum is as “fuzzy” truth-values which range from 0 to 1. A value close to 1 would be assigned to claims that are clearly true, and a value close to 0 would be assigned to claims that are clearly false, with claims that are “kinda true” in the middle.
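As a toy illustration of a fuzzy truth-value, the sketch below scores “this dog is large” by weight. The value rises linearly from 0 to 1 between two cut-offs. The linear shape and the 10 kg and 40 kg cut-offs are arbitrary assumptions, and the argument does not depend on them.

```python
def truth_of_large(weight_kg, clearly_small=10.0, clearly_large=40.0):
    """Fuzzy truth-value of "this dog is large", from 0 (clearly false) to 1.

    The value rises linearly between two cut-offs; both cut-offs are
    arbitrary illustrative choices.
    """
    if weight_kg <= clearly_small:
        return 0.0
    if weight_kg >= clearly_large:
        return 1.0
    return (weight_kg - clearly_small) / (clearly_large - clearly_small)


for weight in (5, 20, 25, 45):
    print(weight, truth_of_large(weight))
```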

Another type of “kinda true” statements are approximations. For example, if I claim that there’s a grocery store 500 meters away from my house, that’s probably true in an approximate sense, but false in a precise sense. But once we start distinguishing the different senses that a concept can have, it becomes clear that basically any concept can have widely divergent category boundaries depending on the context. A striking example from Chapman:

A: Is there any water in the refrigerator?

B: Yes.

A: Where? I don’t see it.

B: In the cells of the eggplant.

The claim that there’s water in the refrigerator is technically true, but pragmatically false. And the concept of “water” is far better-defined than almost all abstract concepts (like the ones I’m using in this post). So we should treat natural-language propositions as context-dependent by default. But that’s still consistent with some statements being more context-dependent than others (e.g. the claim that there’s air in my refrigerator would be true under almost any interpretation). So another way we can think about fuzzy truth-values is as a range from “this statement is false in almost any sense” through “this statement is true in some senses and false in some senses” to “this statement is true in almost any sense”.

Note, however, that there’s an asymmetry between “this statement is true in almost any sense” and “this statement is false in almost any sense”, because the latter can apply to two different types of claims. Firstly, claims that are meaningful but false (“there’s a tiger in my house”). Secondly, claims that are nonsense—there are just no meaningful interpretations of them at all (“colorless green ideas sleep furiously”). We can often distinguish these two types of claims by negating them: “there isn’t a tiger in my house” is true, whereas “colorless green ideas don’t sleep furiously” is still nonsense. Of course, nonsense is also a matter of degree—e.g. metaphors are by default less meaningful than concrete claims, but still not entirely nonsense.

So I've motivated fuzzy truth-values from four different angles: vagueness, approximation, context-dependence, and sense vs nonsense. The key idea behind each of them is that concepts have fluid and amorphous category boundaries (a property called nebulosity). However, putting all of these different aspects of nebulosity on the same zero-to-one scale might be an oversimplification. More generally, fuzzy logic has few of the appealing properties of classical logic, and (to my knowledge) isn’t very directly useful. So I’m not claiming that we should adopt fuzzy logic wholesale, or that we know what it means for a given proposition to be X% true instead of Y% true (a question which I’ll come back to in a follow-up post). For now, I’m just claiming that there’s an important sense in which thinking in terms of fuzzy truth-values is less wrong (another non-binary truth-value) than only thinking in terms of binary truth-values.
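One way to see how fuzzy logic loses classical properties is to compare two common families of fuzzy connectives, each with negation 1 - a. The sketch below implements the Zadeh (min/max) connectives and the Lukasiewicz (bounded sum) connectives. With Zadeh connectives, excluded middle and non-contradiction fail for a half-true A. With Lukasiewicz connectives, excluded middle holds, but idempotence (A or A = A) fails instead.

```python
def neg(a):
    """Standard fuzzy negation."""
    return 1.0 - a


# Zadeh connectives: conjunction as min, disjunction as max.
def zadeh_and(a, b):
    return min(a, b)


def zadeh_or(a, b):
    return max(a, b)


# Lukasiewicz connectives: bounded difference and bounded sum.
def luk_and(a, b):
    return max(0.0, a + b - 1.0)


def luk_or(a, b):
    return min(1.0, a + b)


a = 0.5  # a half-true proposition A
print("Zadeh, A or not-A:", zadeh_or(a, neg(a)))      # 0.5: excluded middle fails
print("Zadeh, A and not-A:", zadeh_and(a, neg(a)))    # 0.5: non-contradiction fails
print("Lukasiewicz, A or not-A:", luk_or(a, neg(a)))  # 1.0: excluded middle holds
print("Lukasiewicz, A or A:", luk_or(a, a))           # 1.0, not 0.5: idempotence fails
```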

Model-based reasoning

The intuitions in favor of fuzzy truth-values become clearer when we apply them, not just to individual propositions, but to models of the world. By a model I mean a (mathematical) structure that attempts to describe some aspect of reality. For example, a model of the weather might have variables representing temperature, pressure, and humidity at different locations, and a procedure for updating them over time. A model of a chemical reaction might have variables representing the starting concentrations of different reactants, and a method for determining the equilibrium concentrations. Or, more simply, a model of the Earth might just be a sphere.

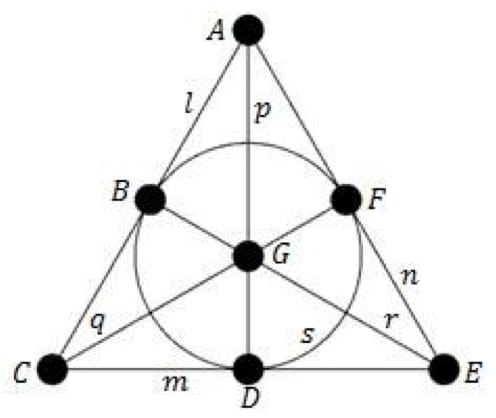

In order to pin down the difference between reasoning about propositions and reasoning about models, philosophers of science have drawn on concepts from mathematical logic. They distinguish between the syntactic content of a theory (the axioms of the theory) and its semantic content (the models for which those axioms hold). As an example, consider the three axioms of projective planes:

- For any two points, exactly one line lies on both.

- For any two lines, exactly one point lies on both.

- There exists a set of four points such that no line has more than two of them.

There are infinitely many models for which these axioms hold. One of the simplest is the Fano plane, which has seven points and seven lines, with three points on each line.
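The smallest model of these axioms is the Fano plane, with seven points and seven lines and three points on each line. The Python sketch below uses one standard labelling of it, which is an arbitrary choice. It brute-force checks the three axioms as stated, reading “two points” and “two lines” as two distinct ones.

```python
from itertools import combinations

POINTS = range(7)
# One standard labelling of the Fano plane: seven lines of three points each.
LINES = [frozenset(s) for s in (
    {0, 1, 2}, {0, 3, 4}, {0, 5, 6},
    {1, 3, 5}, {1, 4, 6}, {2, 3, 6}, {2, 4, 5},
)]


def axiom_1(points, lines):
    """Any two distinct points lie on exactly one common line."""
    return all(sum({p, q} <= line for line in lines) == 1
               for p, q in combinations(points, 2))


def axiom_2(lines):
    """Any two distinct lines share exactly one point."""
    return all(len(l1 & l2) == 1 for l1, l2 in combinations(lines, 2))


def axiom_3(points, lines):
    """Some four points exist such that no line contains more than two of them."""
    return any(all(len(line & set(quad)) <= 2 for line in lines)
               for quad in combinations(points, 4))


print(axiom_1(POINTS, LINES), axiom_2(LINES), axiom_3(POINTS, LINES))  # True True True
```

Dropping any one line breaks the first axiom, because the three pairs of points on that line are then left with no common line.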

If propositions and models are two sides of the same coin, does it matter which one we primarily reason in terms of? I think so, for two reasons. Firstly, most models are very difficult to put into propositional form. We each have implicit mental models of our friends’ personalities, of how liquids flow, of what a given object feels like, etc., which are far richer than we can express propositionally. The same is true even for many formal models, specifically those whose internal structure doesn’t directly correspond to the structure of the world. For example, a neural network might encode a great deal of real-world knowledge, but even full access to the weights doesn’t allow us to extract that knowledge directly: the fact that a given weight is 0.3 doesn’t allow us to claim that any real-world entity has the value 0.3.

What about scientific models where each element of the model is intended to correspond to an aspect of reality? For example, what’s the difference between modeling the Earth as a sphere, and just believing the proposition “the Earth is a sphere”? My answer: thinking in terms of propositions (known in philosophy of science as the syntactic view) biases us towards assigning truth values in a reductionist way. This works when you’re using binary truth-values, because they relate to each other according to classical logic. But when you’re using fuzzy truth-values, the relationships between the truth-values of different propositions become much more complicated. And so thinking in terms of models (known as the semantic view) is better because models can be assigned truth-values in a holistic way.

As an example: “the Earth is a sphere” is mostly true, and “every point on the surface of a sphere is equally far away from its center” is precisely true. But “every point on the surface of the Earth is equally far away from the Earth’s center” seems ridiculous—e.g. it implies that mountains don’t exist. The problem here is that rephrasing a proposition in logically equivalent terms can dramatically affect its implicit context, and therefore the degree of truth we assign to it in isolation.

The semantic view solves this by separating claims about the structure of the model itself from claims about how the model relates to the world. The former are typically much less nebulous: claims like “in the spherical model of the Earth, every point on the Earth’s surface is equally far away from the center” are straightforwardly true. But we can then bring in nebulosity when talking about the model as a whole, e.g. “my spherical model of the Earth is closer to the truth than your flat model of the Earth”, or “my spherical model of the Earth is useful for doing astronomical calculations and terrible for figuring out where to go skiing”. (Note that we can make similar claims about the mental models, neural networks, etc., discussed above.)

We might then wonder: should we be talking about the truth of entire models at all? Or can we just talk about their usefulness in different contexts, without the concept of truth? This is the major debate in philosophy of science. I personally think that in order to explain why scientific theories can often predict a wide range of different phenomena, we need to make claims about how well they describe the structure of reality—i.e. how true they are. But we should still use degrees of truth when doing so, because even our most powerful scientific models aren’t fully true. We know that general relativity isn’t fully true, for example, because it conflicts with quantum mechanics. Even so, it would be absurd to call general relativity false, because it clearly describes a major part of the structure of physical reality. Meanwhile Newtonian mechanics is further away from the truth than general relativity, but still much closer to the truth than Aristotelian mechanics, which in turn is much closer to the truth than animism. The general point I’m trying to illustrate here was expressed pithily by Asimov: “Thinking that the Earth is flat is wrong. Thinking that the Earth is a sphere is wrong. But if you think that they’re equally wrong, you’re wronger than both of them put together.”

The correct role of Bayesianism

The position I’ve described above overlaps significantly with the structural realist position in philosophy of science. However, structural realism is usually viewed as a stance on how to interpret scientific theories, rather than how to reason more generally. So the philosophical position which best captures the ideas I’ve laid out is probably Karl Popper’s critical rationalism. Popper was actually the first to try to formally define a scientific theory's degree of truth (though he was working before the semantic view became widespread, and therefore formalized theories in terms of propositions rather than in terms of models). But his attempt failed on a technical level; and no attempt since then has gained widespread acceptance. Meanwhile, the field of machine learning evaluates models by their loss, which can be formally defined, but the loss of a model is heavily dependent on the data distribution on which it’s evaluated. Perhaps the most promising approach to assigning fuzzy truth-values comes from Garrabrant induction, where the “money” earned by individual traders could be interpreted as a metric of fuzzy truth. However, these traders can strategically interact with each other, making them more like agents than typical models.
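A deliberately simple sketch shows how loss depends on the evaluation distribution. A fixed model y = x is scored by mean squared error against a hypothetical true process y = x^2, first on inputs in [0, 1] and then on inputs in [0, 10]. Both functions are assumptions made for illustration. The model stays the same throughout, yet its loss differs by several orders of magnitude.

```python
def mse(model, data):
    """Mean squared error of `model` on a list of (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


def true_process(x):
    return x ** 2  # hypothetical ground truth, for illustration only


def linear_model(x):
    return x  # a fixed model that is never retrained


# The same model scored on two different input distributions.
narrow_inputs = [x / 10 for x in range(11)]   # 0.0, 0.1, ..., 1.0
wide_inputs = [float(x) for x in range(11)]   # 0.0, 1.0, ..., 10.0
narrow_data = [(x, true_process(x)) for x in narrow_inputs]
wide_data = [(x, true_process(x)) for x in wide_inputs]

print(mse(linear_model, narrow_data))  # small: roughly 0.03
print(mse(linear_model, wide_data))    # large: 1788.0
```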

Where does this leave us? We’ve traded the crisp, mathematically elegant Bayesian formalism for fuzzy truth-values that, while intuitively compelling, we can’t define even in principle. But I’d rather be vaguely right than precisely wrong. Because it focuses on propositions which are each (almost entirely) true or false, Bayesianism is actively misleading in domains where reasoning well requires constructing and evaluating sophisticated models (i.e. most of them).

For example, Bayesians measure evidence in “bits”, where one bit of evidence rules out half of the space of possibilities. When asking a question like “is this stranger named Mark?”, bits of evidence are a useful abstraction: I can get one bit of evidence simply by learning whether they’re male or female, and a couple more by learning that their name has only one syllable. Conversely, talking in Bayesian terms about discovering scientific theories is nonsense. If every PhD in fundamental physics had contributed even one bit of usable evidence about how to unify quantum physics and general relativity, we’d have solved quantum gravity many times over by now. But we haven’t, because almost all of the work of science is in constructing sophisticated models, which Bayesianism says almost nothing about. (Formalisms like Solomonoff induction attempt to sidestep this omission by enumerating and simulating all computable models, but that’s so different from what any realistic agent can do that we should think of it less as idealized cognition and more as a different thing altogether, which just happens to converge to the same outcome in the infinite limit.)
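Bits of evidence are commonly measured as the base-2 logarithm of a likelihood ratio. One bit is evidence that is twice as likely if the hypothesis is true as if it is false, and each bit doubles the odds. The sketch below applies this to the “Mark” example. The 1/2 and 1/4 figures and the prior odds are illustrative assumptions, not data.

```python
import math


def bits_of_evidence(p_e_given_h, p_e_given_not_h):
    """Strength of evidence E for H in bits: log2 of the likelihood ratio."""
    return math.log2(p_e_given_h / p_e_given_not_h)


def update_odds(prior_odds, *bits):
    """Each bit of evidence doubles the odds in favour of H."""
    return prior_odds * 2 ** sum(bits)


# Illustrative figures only. A stranger named Mark is (assumed) certainly male
# with a one-syllable name; assume half of strangers are male, and
# (hypothetically) that a quarter of the remaining candidates' names have one syllable.
male = bits_of_evidence(1.0, 0.5)           # 1 bit
one_syllable = bits_of_evidence(1.0, 0.25)  # 2 bits
prior_odds = 1 / 1000                       # made-up prior odds of "Mark"
print(male, one_syllable, update_odds(prior_odds, male, one_syllable))
```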

Mistakes like these have many downstream consequences. Nobody should be very confident about complex domains that nobody has sophisticated models of (like superintelligence); but the idea that “strong evidence is common” helps justify confident claims about them. And without a principled distinction between credences that are derived from deep, rigorous models of the world, and credences that come from vague speculation (and are therefore subject to huge Knightian uncertainty), it’s hard for public discussions to actually make progress.

Should I therefore be a critical rationalist? I do think Popper got a lot of things right. But I also get the sense that he (along with Deutsch, his most prominent advocate) throws the baby out with the bathwater. There is a great deal of insight encoded in Bayesianism which critical rationalists discard (e.g. by rejecting induction). A better approach is to view Bayesianism as describing a special case of epistemology, which applies in contexts simple enough that we’ve already constructed all relevant models or hypotheses, exactly one of which is exactly true (with all the rest of them being equally false), and we just need to decide between them. Interpreted in that limited way, Bayesianism is both useful (e.g. in providing a framework for bets and prediction markets) and inspiring: if we can formalize this special case so well, couldn’t we also formalize the general case? What would it look like to concretely define degrees of truth? I don’t have a solution, but I’ll outline some existing attempts, and play around with some ideas of my own, in a follow-up post.

101 comments


comment by johnswentworth · 2024-10-06T18:54:31.348Z

You're pointing to good problems, but fuzzy truth values seem to approximately-totally fail to make any useful progress on them; fuzzy truth values are a step in the wrong direction.

Walking through various problems/examples from the post:

- "For example, the truth-values of propositions which contain gradable adjectives like 'large' or 'quiet' or 'happy' depend on how we interpret those adjectives." You said it yourself: the truth-values depend on how we interpret those adjectives. The adjectives are ambiguous, they have more than one common interpretation (and the interpretation depends on context). Saying that "a description of something as 'large' can be more or less true depending on how large it actually is" throws away the whole interesting phenomenon here: it treats the statement as having a single fixed truth-value (which happens to be quantitative rather than 0/1), when the main phenomenon of interest is that humans use multiple context-dependent interpretations (rather than one interpretation with one truth value).

- "For example, if I claim that there’s a grocery store 500 meters away from my house, that’s probably true in an approximate sense, but false in a precise sense." Right, and then the quantity you want is "to within what approximation?", where the approximation-error probably has units of distance in this example. The approximation error notably does not have units of truthiness; approximation error is usually not approximate truth/falsehood, it's a different thing.

- <water in the eggplant>. As you said, natural language interpretations are usually context-dependent. This is just like the adjectives example: the interesting phenomenon is that humans interpret the same words in multiple ways depending on context. Fuzzy truth values don't handle that phenomenon at all; they still just have context-independent assignments of truth. Sure, you could interpret a fuzzy truth value as "how context-dependent is it?", but that's still throwing out nearly the entire interesting phenomenon; the interesting questions here are things like "which context, exactly? How can humans efficiently cognitively represent and process that context and turn it into an interpretation?". Asking "how context-dependent is it?", as a starting point, would be like e.g. looking at neuron polysemanticity in interpretability, and investing a bunch of effort in measuring how polysemantic each neuron is. That's not a step which gets one meaningfully closer to discovering better interpretability methods.

- "there's a tiger in my house" vs "colorless green ideas sleep furiously". Similar to looking at context-dependence and asking "how context-dependent is it?", looking at sense vs nonsense and asking "how sensical is it?" does not move one meaningfully closer to understanding the underlying gears of semantics and which things have meaningful semantics at all.

- "We each have implicit mental models of our friends’ personalities, of how liquids flow, of what a given object feels like, etc, which are far richer than we can express propositionally." Well, far richer than we know how to express propositionally, and the full models would be quite large to write out even if we knew how. That doesn't mean they're not expressible propositionally. More to the point, though: switching to fuzzy truth values does not make us significantly more able to express significantly more of the models, or to more accurately express parts of the models and their relevant context (which I claim is the real thing-to-aim-for here).

- Note here that I totally agree that thinking in terms of large models, rather than individual small propositions, is the way to go; insofar as one works with propositions, their semantic assignments are highly dependent on the larger model. But that

Furthermore, most of these problems can be addressed just fine in a Bayesian framework. In Jaynes-style Bayesianism, every proposition has to be evaluated in the scope of a probabilistic model; the symbols in propositions are scoped to the model, and we can't evaluate probabilities without the model. That model is intended to represent an agent's world-model, which for realistic agents is a big complicated thing. It is totally allowed for semantics of a proposition to be very dependent on context within that model - more precisely, there would be a context-free interpretation of the proposition in terms of latent variables, but the way those latents relate to the world would involve a lot of context (including things like "what the speaker intended", which is itself latent).

Now, I totally agree that Bayesianism in its own right says little-to-nothing about how to solve these problems. But Bayesianism is not limiting our ability to solve these problems either; one does not need to move outside a Bayesian framework to solve them, and the Bayesian framework does provide a useful formal language which is probably quite sufficient for the problems at hand. And rejecting Bayesianism for a fuzzy notion of truth does not move us any closer.

↑ comment by abramdemski · 2024-10-07T17:23:30.268Z

I would like to defend fuzzy logic at greater length, but I might not find the time. So, here is my sketch.

Like Richard, I am not defending fuzzy logic as exactly correct, but I am defending it as a step in the right direction.

The Need for Truth

As Richard noted, meaning is context-dependent. When I say "is there water in the fridge?" I am not merely referring to H2O; I am referring to something like a container of relatively pure water in easily drinkable form.

However, I claim: if we think of statements as being meaningful, we think these context-dependent meanings can in principle be rewritten into a language which lacks the context-dependence.

In the language of information theory, the context-dependent language is what we send across the communication channel. The context-independent language is the internal sigma algebra used by the agents attempting to communicate.

You seem to have a similar picture:

It is totally allowed for semantics of a proposition to be very dependent on context within that model - more precisely, there would be a context-free interpretation of the proposition in terms of latent variables, but the way those latents relate to the world would involve a lot of context (including things like "what the speaker intended", which is itself latent).

I am not sure if Richard would agree with this in principle (EG he might think that even the internal language of agents needs to be highly context-dependent, unlike sigma-algebras).

But in any case, if we take this assumption and run with it, it seems like we need a notion of accuracy for these context-independent beliefs. This is typical map-territory thinking; the propositions themselves are thought of as having a truth value, and the probabilities assigned to propositions are judged by some proper scoring rule.
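A proper scoring rule is one under which an agent maximises its expected score by reporting its actual credence. The sketch below checks this numerically for two standard examples, the logarithmic score and the negated Brier score, by grid search over reported probabilities. It is an illustration, not a proof.

```python
import math


def log_score(reported, outcome):
    """Logarithmic score: log of the probability assigned to what happened."""
    return math.log(reported if outcome else 1 - reported)


def brier_score(reported, outcome):
    """Negated Brier score, so that higher is better for both rules."""
    return -((1 if outcome else 0) - reported) ** 2


def expected_score(rule, reported, true_p):
    """Expected score when the proposition is true with probability true_p."""
    return true_p * rule(reported, True) + (1 - true_p) * rule(reported, False)


def best_report(rule, true_p, grid=1000):
    """Report on a grid over the open interval (0, 1) maximising expected score."""
    candidates = [i / grid for i in range(1, grid)]
    return max(candidates, key=lambda r: expected_score(rule, r, true_p))


print(best_report(log_score, 0.7), best_report(brier_score, 0.7))  # 0.7 0.7
```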

The Problem with Truth

This works fine so long as we talk about truth in a different language (as Tarski pointed out with Tarski's Undefinability Theorem and the Tarski Hierarchy). However, if we believe that an agent can think in one unified language (modeled by the sigma-algebra in standard information theory / Bayesian theory) and at the same time think of its beliefs in map-territory terms (IE think of its own propositions as having truth-values), we run into a problem -- namely, Tarski's aforementioned undefinability theorem, as exemplified by the Liar Paradox.

The Liar Paradox constructs a self-referential sentence "This sentence is false". This cannot consistently be assigned either "true" or "false" as an evaluation. Allowing self-referential sentences may seem strange, but it is inevitable in the same way that Goedel's results are -- sufficiently strong languages are going to contain self-referential capabilities whether we like it or not.

Lukasiewicz came up with one possible solution, called Lukasiewicz logic. First, we introduce a third truth value for paradoxical sentences which would otherwise be problematic. Foreshadowing the conclusion, we can call this new value 1/2. The Liar Paradox sentence can be evaluated as 1/2.

Unfortunately, although the new 1/2 truth value can resolve some paradoxes, it introduces new paradoxes. "This sentence is either false or 1/2" cannot be consistently assigned any of the three truth values.

Under some plausible assumptions, Lukasiewicz shows that we can resolve all such paradoxes by taking our truth values from the interval [0,1]. We have a whole spectrum of truth values between true and false. This is essentially fuzzy logic. It is also a model of linear logic.

So, Lukasiewicz logic (and hence a version of fuzzy logic and linear logic) are particularly plausible solutions to the problem of assigning truth-values to a language which can talk about the map-territory relation of its own sentences.
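The paradoxes above can be modelled in a simplified way by treating a self-referential sentence as a function from its own truth-value to the value of what it asserts. A consistent assignment is then a value v with f(v) = v. The sketch assumes negation is 1 - v and reads "is 1/2" crisply. It is not Lukasiewicz's own formalism, only a check of which candidate values are consistent.

```python
from fractions import Fraction

THREE_VALUES = (Fraction(0), Fraction(1, 2), Fraction(1))


def liar(v):
    """'This sentence is false': the value of 'v is false', taken as 1 - v."""
    return 1 - v


def false_or_half(v):
    """'This sentence is either false or 1/2', with crisp membership."""
    return Fraction(1) if v in (0, Fraction(1, 2)) else Fraction(0)


def consistent_values(sentence, values):
    """Truth-values v with sentence(v) == v, i.e. consistent self-assignments."""
    return [v for v in values if sentence(v) == v]


print(consistent_values(liar, (Fraction(0), Fraction(1))))  # [] (no consistent value)
print(consistent_values(liar, THREE_VALUES))                # [Fraction(1, 2)]
print(consistent_values(false_or_half, THREE_VALUES))       # [] (no consistent value)
```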

Relative Truth

One way to think about this is that fuzzy logic allows for a very limited form of context-dependent truth. The fuzzy truth values themselves are context-independent. However, in a given context where we are going to simplify such values to a binary, we can do so with a threshold.

A classic example is baldness. It isn't clear exactly how much hair needs to be on someone's head for them to be bald. However, I can make relative statements like "well if you think Jeff is bald, then you definitely have to call Sid bald."

Fuzzy logic is just supposing that all truth-evaluations have to fall on a spectrum like this (even if we don't know exactly how). This models a very limited form of context-dependent truth, where different contexts put higher or lower demands on truth, but these demands can be modeled by a single parameter which monotonically admits more/less as true when we shift it up/down.
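The single-parameter picture of context can be sketched as a threshold cut over fuzzy values. The degrees of baldness below are hypothetical, and so is the third person, Ann. Raising the threshold only ever removes people from the "bald" set. Because Sid's degree is at least Jeff's, "if Jeff is bald then Sid is bald" holds in every context.

```python
# Hypothetical degrees to which each person counts as "bald".
baldness = {"Sid": 0.9, "Jeff": 0.6, "Ann": 0.2}


def bald_in_context(threshold):
    """Names counted as bald in a context that demands `threshold` truthiness."""
    return {name for name, degree in baldness.items() if degree >= threshold}


thresholds = [i / 100 for i in range(101)]

# Raising the threshold only ever removes people from the "bald" set.
monotone = all(bald_in_context(higher) <= bald_in_context(lower)
               for lower, higher in zip(thresholds, thresholds[1:]))

# "If Jeff is bald, Sid is bald" holds in every context, since 0.9 >= 0.6.
jeff_implies_sid = all("Sid" in bald_in_context(t)
                       for t in thresholds if "Jeff" in bald_in_context(t))

print(sorted(bald_in_context(0.5)), monotone, jeff_implies_sid)  # ['Jeff', 'Sid'] True True
```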

I'm not denying the existence of other forms of context-dependence, of course. The point is that it seems plausible that we can put up with just this one form of context-dependence in our "basic picture" and allow all other forms to be modeled more indirectly.

Vagueness

My view is close to the view of Saving Truth from Paradox by Hartry Field. Field proposes that truth is vague (so that the baldness example and the Liar Paradox example are closely linked). Based on this idea, he defends a logic that is based on fuzzy logic, but not quite the same. His book does (imho) a good job of defending assumptions similar to those Lukasiewicz makes, so that something similar to fuzzy logic starts to look inevitable.

↑ comment by johnswentworth · 2024-10-08T02:46:36.513Z

I generally agree that self-reference issues require "fuzzy truth values" in some sense, but for Richard's purposes I expect that sort of thing to end up looking basically Bayesian (much like he lists logical induction as essentially Bayesian).

↑ comment by abramdemski · 2024-10-08T16:43:07.169Z

Yeah, I agree with that.

↑ comment by ProgramCrafter (programcrafter) · 2024-10-07T17:58:45.826Z

Unfortunately, although the new 1/2 truth value can resolve some paradoxes, it introduces new paradoxes. "This sentence is either false or 1/2" cannot be consistently assigned any of the three truth values.

Under some plausible assumptions, Lukasiewicz shows that we can resolve all such paradoxes by taking our truth values from the interval [0,1]...

Well, a straightforward continuation of paradox would be "This sentence has truth value in [0,1)"; is it excluded by "plausible assumptions" or overlooked?

↑ comment by abramdemski · 2024-10-08T16:17:11.155Z

Excluded. Truth-functions are required to be continuous, so a predicate that's true of things in the interval [0,1) must also be true at 1. (Lukasiewicz does not assume continuity, but rather, proves it from other assumptions. In fact, Lukasiewicz is much more restrictive; however, we can safely add any continuous functions we like.)

One justification of this is that it's simply the price you have to pay for consistency; you (provably) can't have all the nice properties you might expect. Requiring continuity allows consistent fixed-points to exist.

Of course, this might not be very satisfying, particularly as an argument in favor of Lukaziewicz over other alternatives. How can we justify the exclusion of [0,1) when we seem to be able to refer to it?

As I mentioned earlier, we can think of truth as a vague term, with the fuzzy values representing an ordering of truthiness. Therefore, there should be no way to refer to "absolute truth".

We have to think of assigning precise numbers to the vague values as merely a way to model this phenomenon. (It's up to you to decide whether this is just a bit of linguistic sleight-of-hand or whether it constitutes a viable position...)

When we try to refer to "absolute truth" we can create a function which outputs 1 on input 1, but which declines sharply as we move away from 1.[1] This is how the model reflects the fact that we can't refer to absolute truth. We can map 1 to 1 (make a truth-function which is absolutely true only of absolute truth), however, such a function must also be almost-absolutely-true in some small neighborhood around 1. This reflects the idea that we can't completely distinguish absolute truth from its close neighborhood.

Similarly, when we negate this function, it "represents" [0,1) in the sense that it is only 0 (only 'absolutely false') for the value 1, and maps [0,1) to positive truth-values which can be mostly 1, but which must decline in the neighborhood of 1.
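A minimal Python sketch of such a pair of functions, assuming the piecewise-linear form max(0, 1 - k(1 - x)); the form and the parameter k are illustrative choices, not taken from the comment. Larger k makes the function sharper, but because it stays continuous it is still above 0.5 on the interval (1 - 1/(2k), 1], and its negation is correspondingly below 0.5 there.

```python
def absolutely_true(x, k):
    """Hypothetical continuous predicate: 1 only at x = 1, falling with slope k."""
    return max(0.0, 1.0 - k * (1.0 - x))


def not_absolutely_true(x, k):
    """Its negation (1 - value): 0 only at x = 1, and 1 once x is 1/k or more below 1."""
    return 1.0 - absolutely_true(x, k)


for k in (10.0, 100.0, 1000.0):
    # Width of the neighborhood of 1 on which the predicate is still more true than not.
    width = 1.0 / (2.0 * k)
    print(k, absolutely_true(1.0, k), absolutely_true(1.0 - width, k), width)
```

The neighborhood shrinks as k grows but never vanishes, which is the model's version of "we can't completely distinguish absolute truth from its close neighborhood".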

And yes, this setup can get us into some trouble when we try to use quantifiers. If "forall" is understood as taking the min, we can construct discontinuous functions as the limit of continuous functions. Hartry Field proposes a fix, but it is rather complex.
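One way to see the quantifier problem, as a hedged sketch: each x**n below is a continuous truth-function of x, and reading "forall n" as the minimum over the family gives a continuous function for any finite cutoff. As the cutoff grows, the minimum approaches the infimum, which is 0 everywhere on [0,1) and 1 at x = 1: a discontinuous, crisp predicate. The family x**n is an illustrative choice, not one taken from Field.

```python
def forall_min(values):
    # "forall" read as min; over infinitely many members this becomes an infimum.
    return min(values)


def power_family(x, n_max):
    # Each member x**n (n = 1..n_max) is continuous in x.
    return (x ** n for n in range(1, n_max + 1))


for x in (0.9, 0.99, 0.999, 1.0):
    print(x, forall_min(power_family(x, 100_000)))
```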

[1] Note that some relevant authors in the literature use 0 for true and 1 for false, but I am using 1 for true and 0 for false, as this seems vastly more intuitive.

↑ comment by Dweomite · 2024-10-27T07:10:31.897Z · LW(p) · GW(p)

I'm confused about how continuity poses a problem for "This sentence has truth value in [0,1)" without also posing an equal problem for "this sentence is false", which was used as the original motivating example.

I'd intuitively expect "this sentence is false" == "this sentence has truth value 0" == "this sentence does not have a truth value in (0,1]"

↑ comment by abramdemski · 2024-10-28T15:41:23.240Z · LW(p) · GW(p)

"X is false" has to be modeled as something that has value 1 if and only if X has value 0, but that continuously decreases in value as X continuously increases in value. The simplest formula is value(X is false) = 1 - value(X). However, we can make "sharper" formulas which diminish in value more rapidly as X increases in value. Hartry Field constructs a hierarchy of such predicates, which he calls "definitely false", "definitely definitely false", etc.

Proof systems for the logic should have the property that sentences are derivable only when they have value 1; so "X is false", "X is definitely false", etc. all share the property that they're only derivable when X has value 0.
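A small Python sketch of what such a hierarchy can look like, under a stated assumption: each extra "definitely" is modeled by a hypothetical sharpening map s(v) = max(0, 2v - 1). This is not claimed to be Field's exact definition; it only shows that every level can stay continuous, equal 1 exactly when X has value 0, and fall off faster as the level increases.

```python
def sharpen(v):
    # Hypothetical "definitely" operator: keeps 1 at 1, pushes middling values toward 0.
    return max(0.0, 2.0 * v - 1.0)


def definitely_false(x, level):
    """level 0: plain "X is false" (1 - x); level n: n applications of sharpen."""
    v = 1.0 - x
    for _ in range(level):
        v = sharpen(v)
    return v


for level in range(4):
    print(level, [round(definitely_false(x, level), 3) for x in (0.0, 0.05, 0.1, 0.25)])
```

In closed form, level n is max(0, 1 - 2^n x) for x in [0, 1], so each level is still 1 only at x = 0 and therefore "derivable" only when X has value 0.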

↑ comment by ProgramCrafter (programcrafter) · 2024-10-08T18:43:30.296Z · LW(p) · GW(p)

Understood. Does that formulation include most useful sentences?

For instance, "there exists a sentence which is more true than this one" must be excluded as equivalent to "this statement's truth value is strictly less than 1", but the extent of such exclusion is not clear to me at first skim.

↑ comment by ProgramCrafter (programcrafter) · 2024-10-07T21:49:13.011Z · LW(p) · GW(p)

As Richard noted, meaning is context-dependent. When I say "is there water in the fridge?" I am not merely referring to H2O; I am referring to something like a container of relatively pure water in easily drinkable form.

Then why not consider the following structure?

- you are searching for "something like a container of relatively pure water in easily drinkable form" - or, rather, "[your subconscious-native code] of water-like thing + for drinking",

- you emit a sequence of tokens (sounds/characters), "is there water in the fridge?", approximating the previous idea (discarding your intent to drink it, as that might be inferred from context, and omitting that you could drink something close to water),

- your conversation partner hears "is there water in the fridge?", which is converted into the thought "you asked 'is there water in the fridge?'",

- and interprets the words as "you need something like a container of relatively pure water in easily drinkable form" - or, rather, "[their subconscious-native code] for another person, a water-like thing + for drinking".

That messes with the notion of "meanings of sentences", but it is necessary in order to rationally process filtered evidence.

↑ comment by abramdemski · 2024-10-08T16:56:57.983Z · LW(p) · GW(p)

It seems to me that there is a really interesting interplay of different forces here, which we don't yet know how to model well.

Even if Alice tries meticulously to only say literally true things, and be precise about her meanings, Bob can and should infer more than what Alice has literally said, by working backwards to infer why she has said it rather than something else.

So, pragmatics is inevitable, and we'd be fools not to take advantage of it.

However, we also really like transparent contexts -- that is, we like to be able to substitute phrases for equivalent phrases (equational reasoning, like algebra), and make inferences based on substitution-based reasoning (if all bachelors are single, and Jerry is a bachelor, then Jerry is single).

To put it simply, things are easier when words have context-independent meanings (or more realistically, meanings which are valid across a wide array of contexts, although nothing will be totally context-independent).

This puts contradictory pressure on language. Pragmatics puts pressure towards highly context-dependent meaning; reasoning puts pressure towards highly context-independent meaning.

If someone argues a point by conflation (uses a word in two different senses, but makes an inference as if the word had one sense) then we tend to fault using the same word in two different senses, rather than fault basic reasoning patterns like transitivity of implication (A implies B, and B implies C, so A implies C). Why is that? Is that the correct choice? If meanings are inevitably context-dependent anyway, why not give up on reasoning? ;p

↑ comment by qvalq (qv^!q) · 2024-10-07T18:08:25.892Z · LW(p) · GW(p)

↑ comment by Richard_Ngo (ricraz) · 2024-10-09T05:47:59.456Z · LW(p) · GW(p)

Ty for the comment. I mostly disagree with it. Here's my attempt to restate the thrust of your argument:

The issues with binary truth-values raised in the post are all basically getting at the idea that the meaning of a proposition is context-dependent. But we can model context-dependence in a Bayesian way by referring to latent variables in the speaker's model of the world. Therefore we don't need fuzzy truth-values.

But this assumes that, given the speaker's probabilistic model, truth-values are binary. I don't see why this needs to be the case. Here's an example: suppose my non-transhumanist friend says "humanity will be extinct in 100 years". And I say "by 'extinct' do you include being genetically engineered until future humans are a different species? How about being uploaded? How about all being cryonically frozen, to be revived later? How about...."

In this case, there is simply no fact of the matter about which of these possibilities should be included or excluded in the context of my friend's original claim, because (I'll assume) they hadn't considered any of those possibilities.

More prosaically, even if I have considered some possibilities in the past, at the time when I make a statement I'm not actively considering almost any of them. For some of them, if you'd raised those possibilities to me when I'd asked the question, I'd have said "obviously I did/didn't mean to include that", but for others I'd have said "huh, idk" and for others still I would have said different things depending on how you presented them to me. So what reason do we have to think that there's any ground truth about what the context does or doesn't include? Similar arguments apply re approximation error about how far away the grocery store is: clearly 10km error is unacceptable, and 1m is acceptable, but what reason do we have to think that any "correct" threshold can be deduced even given every fact about my brain-state when I asked the question?

I picture you saying in response to this "even if there are some problems with binary truth-values, fuzzy truth-values don't actually help very much". To this I say: yes, in the context of propositions, I agree. But that's because we shouldn't be doing epistemology in terms of propositions. And so you can think of the logical flow of my argument as:

- Here's why, even for propositions, binary truth is a mess. I'm not saying I can solve it but this section should at least leave you open-minded about fuzzy truth-values.

- Here's why we shouldn't be thinking in terms of propositions at all, but rather in terms of models.

- And when it comes to models, something like fuzzy truth-values seems very important (because it is crucial to be able to talk about models being closer to the truth without being absolutely true or false).

I accept that this logical flow wasn't as clear as it could have been. Perhaps I should have started off by talking about models, and only then introduced fuzzy truth-values? But I needed the concept of fuzzy truth-values to explain why models are actually different from propositions at all, so idk.

I also accept that "something like fuzzy truth-values" is kinda undefined here, and am mostly punting that to a successor post.

↑ comment by johnswentworth · 2024-10-09T06:10:29.843Z · LW(p) · GW(p)

But this assumes that, given the speaker's probabilistic model, truth-values are binary.

In some sense yes, but there is totally allowed to be irreducible uncertainty in the latents - i.e. given both the model and complete knowledge of everything in the physical world, there can still be uncertainty in the latents. And those latents can still be meaningful and predictively powerful. I think that sort of uncertainty does the sort of thing you're trying to achieve by introducing fuzzy truth values, without having to leave a Bayesian framework.

Let's look at this example:

suppose my non-transhumanist friend says "humanity will be extinct in 100 years". And I say "by 'extinct' do you include being genetically engineered until future humans are a different species? How about being uploaded? How about all being cryonically frozen, to be revived later? How about...."

In this case, there is simply no fact of the matter about which of these possibilities should be included or excluded in the context of my friend's original claim...

Here's how that would be handled by a Bayesian mind:

- There's some latent variable representing the semantics of "humanity will be extinct in 100 years"; call that variable S for semantics.

- Lots of things can provide evidence about S. The sentence itself, context of the conversation, whatever my friend says about their intent, etc, etc.

- ... and yet it is totally allowed, by the math of Bayesian agents, for that variable S to still have some uncertainty in it even after conditioning on the sentence itself and the entire low-level physical state of my friend, or even the entire low-level physical state of the world.

If this seems strange and confusing, remember: there is absolutely no rule saying that the variables in a Bayesian agent's world model need to represent any particular thing in the external world. I can program a Bayesian reasoner hardcoded to believe it's in the Game of Life, and feed that reasoner data from my webcam, and the variables in its world model will not represent any particular stuff in the actual environment. The case of semantics does not involve such an extreme disconnect, but it does involve some useful variables which do not fully ground out in any physical state.

↑ comment by Richard_Ngo (ricraz) · 2024-10-09T06:40:49.274Z · LW(p) · GW(p)

Here's how that would be handled by a Bayesian mind:

- There's some latent variable representing the semantics of "humanity will be extinct in 100 years"; call that variable S for semantics.

- Lots of things can provide evidence about S. The sentence itself, context of the conversation, whatever my friend says about their intent, etc, etc.

- ... and yet it is totally allowed, by the math of Bayesian agents, for that variable S to still have some uncertainty in it even after conditioning on the sentence itself and the entire low-level physical state of my friend, or even the entire low-level physical state of the world.

What would resolve the uncertainty that remains after you have conditioned on the entire low-level state of the physical world? (I assume that we're in the logically omniscient setting here?)

↑ comment by johnswentworth · 2024-10-09T15:47:56.696Z · LW(p) · GW(p)

We are indeed in the logically omniscient setting still, so nothing would resolve that uncertainty.

The simplest concrete example I know is the Boltzmann distribution for an ideal gas - not the assorted things people say about the Boltzmann distribution, but the actual math, interpreted as Bayesian probability. The model has one latent variable, the temperature T, and says that all the particle velocities are normally distributed with mean zero and variance proportional to T. Then, just following the ordinary Bayesian math: in order to estimate T from all the particle velocities, I start with some prior P[T], calculate P[T|velocities] using Bayes' rule, and then for ~any reasonable prior I end up with a posterior distribution over T which is very tightly peaked around the average particle energy... but has nonzero spread. There's small but nonzero uncertainty in T given all of the particle velocities. And in this simple toy gas model, those particles are the whole world; there's nothing else to learn about which would further reduce my uncertainty in T.
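The toy model can be run directly. The sketch below makes a few assumptions not in the comment: one velocity component per particle, units in which the variance equals T (so k_B/m = 1), and an inverse-gamma prior on T, chosen only because it is conjugate to this likelihood and gives a closed-form posterior. With 100,000 simulated particles the posterior is tightly peaked near the mean squared velocity (proportional to the average kinetic energy), but its standard deviation, roughly T·sqrt(2/n), is not zero.

```python
import math
import random


def temperature_posterior(velocities, a0=1.0, b0=1.0):
    """Conjugate update for T when each velocity ~ Normal(0, variance = T).

    Prior: T ~ InverseGamma(a0, b0). Posterior: InverseGamma(a0 + n/2, b0 + sum(v^2)/2).
    """
    n = len(velocities)
    sum_sq = sum(v * v for v in velocities)
    return a0 + n / 2.0, b0 + sum_sq / 2.0


def inverse_gamma_mean_std(a, b):
    # Mean b/(a-1) needs a > 1; standard deviation mean/sqrt(a-2) needs a > 2.
    mean = b / (a - 1.0)
    return mean, mean / math.sqrt(a - 2.0)


random.seed(0)
TRUE_T = 2.0
velocities = [random.gauss(0.0, math.sqrt(TRUE_T)) for _ in range(100_000)]
a, b = temperature_posterior(velocities)
mean, std = inverse_gamma_mean_std(a, b)
print(f"posterior mean {mean:.4f}, posterior std {std:.4f}")
```

Conditioning on every particle in this toy world still leaves a posterior with relative spread near sqrt(2/n), which is the irreducible latent uncertainty the comment describes.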

↑ comment by cubefox · 2024-10-06T19:34:18.979Z · LW(p) · GW(p)

You said it yourself: the truth-values depend on how we interpret those adjectives. The adjectives are ambiguous, they have more than one common interpretation (and the interpretation depends on context).

Fuzzy truth values can't be avoided by disambiguation and fixing a context. They are the result of vague predicates: adjectives, verbs, nouns etc. Most concepts don't have crisp boundaries, and some objects will fit a term more or less than others.

↑ comment by johnswentworth · 2024-10-06T20:30:01.505Z · LW(p) · GW(p)

That's still not a problem of fuzzy truth values, it's a problem of fuzzy category boundaries. These are not the same thing.

The standard way to handle fuzzy category boundaries in a Bayesian framework is to treat semantic categories as clusters, and use standard Bayesian cluster models.
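A minimal sketch of what "categories as clusters" can look like, assuming a two-cluster Gaussian mixture over a single made-up feature with fixed, hypothetical parameters (a full Bayesian cluster model would also learn the parameters, and possibly the number of clusters). Graded membership then shows up as a posterior probability of cluster assignment rather than as a fuzzy truth value.

```python
import math


def normal_pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def cluster_membership(x, clusters):
    """Posterior P(cluster | x) for a mixture with fixed (weight, mean, std) per cluster."""
    joint = {name: w * normal_pdf(x, m, s) for name, (w, m, s) in clusters.items()}
    total = sum(joint.values())
    return {name: p / total for name, p in joint.items()}


# Hypothetical clusters over one feature: backrest height in cm.
CLUSTERS = {"stool": (0.5, 5.0, 10.0), "chair": (0.5, 45.0, 10.0)}

for height in (5.0, 20.0, 25.0, 30.0, 45.0):
    print(height, cluster_membership(height, CLUSTERS))
```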

↑ comment by tailcalled · 2024-10-07T07:34:41.152Z · LW(p) · GW(p)

The Eggplant later discusses some harder problems with fuzzy categories:

What even counts as an eggplant? How about the various species of technically-eggplants that look and taste nothing like what you think of as one? Is a diced eggplant cooked with ground beef and tomato sauce still an eggplant? At exactly what point does a rotting eggplant cease to be an eggplant, and turn into “mush,” a different sort of thing? Are the inedible green sepals that are usually attached to the purple part of an eggplant in a supermarket—the “end cap,” we might say—also part of the eggplant? Where does an unpicked eggplant begin, and the eggplant bush it grows from end?

(I think this is harder than it looks because in addition to severing off the category at some of these edge-cases, one also has to avoid severing off the category at other edge-cases. The Eggplant mostly focuses on reductionistic categories rather than statistical categories and so doesn't bother proving that the Bayesian clustering can't go through.)

You might think these are also solved with Bayesian cluster models, but I don't think they are, unless you put in a lot of work beyond basic Bayesian cluster models to bias it towards giving the results you want. (Like, you could pick the way people talk about the objects as the features you use for clustering, and in that case I could believe you would get nice/"correct" clusters, but this seems circular in the sense that you're not deriving the category yourself but just copying it off humans.)

Roughly speaking, you are better off thinking of there as being an intrinsic ranking of the features of a thing by magnitude or importance, such that the cluster a thing belongs to is its most important feature.

↑ comment by ChristianKl · 2024-10-20T14:22:46.776Z · LW(p) · GW(p)

Before writing The Eggplant, Chapman did write more specifically about why Bayesianism doesn't work in https://metarationality.com/probability-and-logic

David Chapman's position of "I created a working AI that makes deductions using mathematics that are independent of probability and can't be represented with probability" seems to show that Bayesianism as a superset for agent foundations doesn't really work, as agents can reason in ways that are not probability-based.

↑ comment by johnswentworth · 2024-10-20T14:55:49.930Z · LW(p) · GW(p)

Hadn't seen that essay before, it's an interesting read. It looks like he either has no idea that Bayesian model comparison is a thing, or has no idea how it works, but has a very deep understanding of all the other parts except model comparison and has noticed a glaring model-comparison-shaped hole.

↑ comment by ChristianKl · 2024-10-20T15:13:09.539Z · LW(p) · GW(p)

How does Bayesian model comparison allow you to do predicate calculus?

↑ comment by johnswentworth · 2024-10-20T15:32:13.195Z · LW(p) · GW(p)

First, the part about using models/logics with probabilities. (This part isn't about model comparison per se, but is necessary foundation.) (Terminological note: the thing a logician would call a "logic" or possibly a "logic augmented with some probabilities" I would instead normally call a "model" in the context of Bayesian probability, and the thing a logician would call a "model" I would instead normally call a "world" in the context of Bayesian probability; I think that's roughly how standard usage works.) Roughly speaking: you have at least one plain old (predicate) logic, and all "random" variables are scoped to their logic, just like ordinary logic. To bring probability into the picture, the logic needs to be augmented with enough probabilities of values of variables in the logic that the rest of the probabilities can be derived. All queries involving probabilities of values of variables then need to be conditioned on a logic containing those variables, in order to be well defined.

Typical example: a Bayes net is a logic with a finite set of variables, one per node in the net, augmented with some conditional probabilities for each node such that we can derive all probabilities.
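To illustrate the Bayes-net case, here is a hedged Python sketch with a made-up three-node net (Rain and Sprinkler both pointing to WetGrass) and invented conditional probabilities. The "logic" is the set of three boolean variables; the conditional probability tables are the augmentation; every other probability (marginals, posteriors) is derived by summing the joint over the worlds, i.e. truth assignments, in which the query holds.

```python
from itertools import product

# Invented numbers for illustration only.
P_RAIN = 0.2
P_SPRINKLER = 0.1
P_WET_GIVEN = {(True, True): 0.99, (True, False): 0.9,
               (False, True): 0.8, (False, False): 0.0}


def bernoulli(p, value):
    return p if value else 1.0 - p


def joint(rain, sprinkler, wet):
    # Chain rule along the net: P(R) * P(S) * P(W | R, S).
    return (bernoulli(P_RAIN, rain) * bernoulli(P_SPRINKLER, sprinkler)
            * bernoulli(P_WET_GIVEN[(rain, sprinkler)], wet))


def prob(event):
    """Sum the joint over all worlds (truth assignments) satisfying `event`."""
    return sum(joint(r, s, w)
               for r, s, w in product([True, False], repeat=3)
               if event(r, s, w))


p_wet = prob(lambda r, s, w: w)
p_rain_given_wet = prob(lambda r, s, w: r and w) / p_wet
print(p_wet, p_rain_given_wet)
```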

Most of the interesting questions of world modeling are then about "model comparison" (though a logician would probably rather call it "logic comparison"): we want to have multiple hypotheses about which logics-augmented-with-probabilities best predict some real-world system, and test those hypotheses statistically just like we test everything else. That's why we need model comparison.
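As a hedged toy illustration of model comparison (both hypotheses are made up for this sketch): model "fair" says a coin has P(heads) = 0.5; model "unknown bias" puts a uniform prior on P(heads). Each model's marginal likelihood for one particular sequence with k heads in n flips can be computed exactly, and their ratio (the Bayes factor) says which model the data favor. Under the uniform prior, integrating p^k (1 - p)^(n - k) over [0, 1] gives 1 / ((n + 1) · C(n, k)).

```python
from math import comb


def marginal_likelihood_fair(k, n):
    # k is unused: every specific sequence of n flips has probability 0.5**n.
    return 0.5 ** n


def marginal_likelihood_uniform(k, n):
    # Integral over p in [0, 1] of p^k (1 - p)^(n - k), i.e. Beta(k + 1, n - k + 1).
    return 1.0 / ((n + 1) * comb(n, k))


def bayes_factor_uniform_vs_fair(k, n):
    return marginal_likelihood_uniform(k, n) / marginal_likelihood_fair(k, n)


for k in (5, 7, 9, 10):
    print(k, "heads out of 10:", round(bayes_factor_uniform_vs_fair(k, 10), 3))
```

Balanced data (5 of 10) mildly favor the fair coin, while lopsided data (9 of 10) favor the unknown-bias model by a factor of about 9.3; the same marginal-likelihood comparison applies to richer "logics augmented with probabilities".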

↑ comment by ChristianKl · 2024-10-20T19:12:09.820Z · LW(p) · GW(p)

the thing a logician would call a "logic" or possibly a "logic augmented with some probabilities"

The main point of the article is that once you add probabilities you can't do predicate calculus anymore. It's a mathematical operation that's not defined for the entities that you get when you do your augmentation.

↑ comment by johnswentworth · 2024-10-20T19:28:10.218Z · LW(p) · GW(p)

Is the complaint that you can't do predicate calculus on the probabilities? Because I can certainly use predicate calculus all I want on the expressions within the probabilities.

And if that is the complaint, then my question is: why do we want to do predicate calculus on the probabilities? Like, what would be one concrete application in which we'd want to do that? (Self-reference and things in that cluster would be the obvious use-case, I'm mostly curious if there's any other use-case.)

↑ comment by ChristianKl · 2024-10-21T10:37:38.403Z · LW(p) · GW(p)

Imagine you have a function f that takes a_1, a_2, ..., a_n and returns b_1, b_2, ..., b_m. Here a_1, a_2, ..., a_n are boolean states of the known world, and b_1, b_2, ..., b_m are boolean states of the world you don't yet know. Because f uses predicate logic internally, you can't modify it to take values between 0 and 1; you have to accept that it can only take boolean values.

When you do your probability augmentation you can easily add probabilities to a_1, a_2, ..., a_n and have P(a_1), P(a_2), ..., P(a_n), as those are part of the known world.

On the other hand, how would you get P(b_1), P(b_2), ... , P(b_m)?

↑ comment by johnswentworth · 2024-10-21T15:49:00.145Z · LW(p) · GW(p)

I'm not quite understanding the example yet. Two things which sound similar, but are probably not what you mean because they're straightforward Bayesian models:

- I'm given a function f: A -> B and a distribution over the set A. Then I push forward the distribution on A through f to get a distribution over B.

- Same as previous, but the function f is also unknown, so to do things Bayesian-ly I need to have a prior over f (more precisely, a joint prior over f and A).

How is the thing you're saying different from those?

Or: it sounds like you're talking about an inference problem, so what's the inference problem? What information is given, and what are we trying to predict?

↑ comment by ChristianKl · 2024-10-21T16:00:52.968Z · LW(p) · GW(p)

I'm talking about a function that takes a one-dimensional vector of booleans A and returns a one-dimensional vector B. The function does not accept a one-dimensional vector of real numbers between 0 and 1.

To be able to "push forward" probabilities, f would need to be defined to handle probabilities.

↑ comment by johnswentworth · 2024-10-21T16:15:29.189Z · LW(p) · GW(p)

The standard push forward here would be:

$$P[B = b] = \sum_a P[A = a] \, \mathbb{I}[f(a) = b]$$

where $\mathbb{I}[\cdot]$ is an indicator function. In terms of interpretation: this is the frequency at which I will see B take on value b, if I sample A from the distribution P[A] and then compute B via B = f(A).
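A minimal Python sketch of that push forward for a boolean-only function, under stated assumptions: the input bits are independent with known P(a_i = True), and f below is a made-up example. f itself never sees a probability; the probabilities live entirely in the weights of the sum over inputs, and per-output marginals P(b_j) can be read off the resulting distribution. Enumerating all 2^n inputs is only feasible for small n; for large n one would instead sample A and run f on each sample.

```python
from collections import defaultdict
from itertools import product


def f(a):
    """Hypothetical boolean-only function: it never receives a probability."""
    a1, a2, a3 = a
    return (a1 and a2, a1 or a3)


def push_forward(func, p_true):
    """Distribution of func(A), assuming independent input bits with P(a_i) = p_true[i]."""
    dist = defaultdict(float)
    for a in product([False, True], repeat=len(p_true)):
        weight = 1.0
        for bit, p in zip(a, p_true):
            weight *= p if bit else 1.0 - p
        # Adding the weight of input a to output func(a) is the indicator-weighted sum.
        dist[func(a)] += weight
    return dict(dist)


def output_marginals(dist):
    """Per-output-bit probabilities P(b_j = True), read off the joint distribution."""
    m = len(next(iter(dist)))
    return [sum(p for b, p in dist.items() if b[j]) for j in range(m)]


dist = push_forward(f, [0.5, 0.5, 0.5])
print(dist)
print(output_marginals(dist))
```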

What do you want to do which is not that, and why do you want to do it?

↑ comment by ChristianKl · 2024-10-22T20:56:59.845Z · LW(p) · GW(p)

Most of the time, the data you gather about the world is that you have a bunch of facts about the world and probabilities about the individual data points and you would want as an outcome also probabilities over individual datapoints.

As far as my own background goes, I have not studied logic or the math behind the AI algorithm that David Chapman wrote. I did study bioinformatics, and in those studies we did talk about the probability calculations that are done in bioinformatics, so I have some intuitions from that domain. So I'll take a bioinformatics example, even if I don't know exactly how to productively apply predicate calculus to it.

For example, suppose you get input data from gene sequencing in the form of billions of probabilities (a_1, a_2, ..., a_n), and you want output data about whether or not individual genetic mutations exist (b_1, b_2, ..., b_m), not just P(B) = P(b_1) * P(b_2) * ... * P(b_m).

If you have m = 100,000 possible genetic mutations, P(B) is a very small number with little robustness to error. A single bad b_x will propagate to make your total P(B) unreliable. You might have an application where getting b_234, b_9538 and b_33889 wrong is an acceptable error because most of the values were good.
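A hedged numeric sketch of that concern, assuming (as the product formula above does) independent sites, with made-up numbers: 100,000 sites each called with probability 0.9999, then the same list with one site badly mis-estimated. The joint P(B), computed in log10 space to avoid underflow, drops by about twelve orders of magnitude, while the per-site calls change at exactly one position.

```python
import math


def joint_log10(site_probs):
    """log10 P(B) for P(B) = P(b_1) * ... * P(b_m), assuming independent sites."""
    return sum(math.log10(p) for p in site_probs)


def confident_calls(site_probs, threshold=0.5):
    """Number of sites whose own marginal probability exceeds the threshold."""
    return sum(1 for p in site_probs if p > threshold)


m = 100_000
good = [0.9999] * m   # made-up per-site probabilities
bad = list(good)
bad[233] = 1e-12      # one hypothetical mis-estimated site (b_234 in 1-based numbering)

print(joint_log10(good), joint_log10(bad))
print(confident_calls(good), confident_calls(bad))
```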

↑ comment by tailcalled · 2024-10-20T15:51:00.637Z · LW(p) · GW(p)

To bring probability into the picture, the logic needs to be augmented with enough probabilities of values of variables in the logic that the rest of the probabilities can be derived.

I feel like this treats predicate logic as being "logic with variables", but "logic with variables" seems more like Aristotelian logic than like predicate logic to me.

↑ comment by johnswentworth · 2024-10-20T15:53:30.206Z · LW(p) · GW(p)

Another way to view it: a logic, possibly a predicate logic, is just a compact way of specifying a set of models (in the logician's sense of the word "models", i.e. the things a Bayesian would normally call "worlds"). Roughly speaking, to augment that logic into a probabilistic model, we need to also supply enough information to derive the probability of each (set of logician!models/Bayesian!worlds which assigns the same truth-values to all sentences expressible in the logic).

Does that help?

↑ comment by tailcalled · 2024-10-21T12:29:06.754Z · LW(p) · GW(p)

Idk, I guess the more fundamental issue is that this treats the goal as simply being to assign probabilities to statements in predicate logic, whereas his point is more about whether one can do compositional reasoning about relationships while dealing with nebulosity, and it's this latter thing that's the issue.

↑ comment by johnswentworth · 2024-10-21T15:25:23.810Z · LW(p) · GW(p)

What's a concrete example in which we want to "do compositional reasoning about relationships while dealing with nebulosity", in a way not handled by assigning probabilities to statements in predicate logic? What's the use-case here? (I can see a use-case for self-reference; I'm mainly interested in any cases other than that.)

↑ comment by tailcalled · 2024-10-20T15:38:46.440Z · LW(p) · GW(p)

You seem to be assuming that predicate logic is unnecessary, is that true?

↑ comment by johnswentworth · 2024-10-20T15:42:28.115Z · LW(p) · GW(p)

No, I explicitly started with "you have at least one plain old (predicate) logic". Quantification is fine.

↑ comment by tailcalled · 2024-10-20T15:45:14.190Z · LW(p) · GW(p)

Ah, sorry, I think I misparsed your comment.

↑ comment by Garrett Baker (D0TheMath) · 2024-10-07T15:53:44.453Z · LW(p) · GW(p)

Roughly speaking, you are better off thinking of there as being an intrinsic ranking of the features of a thing by magnitude or importance, such that the cluster a thing belongs to is its most important feature.

How do you get the features, and how do you decide on importance? I expect that for certain answers to these questions, John would agree with you.

↑ comment by tailcalled · 2024-10-07T17:13:51.447Z · LW(p) · GW(p)

Those are difficult questions that I don't know the full answer to yet.

↑ comment by localdeity · 2024-10-07T19:31:26.036Z · LW(p) · GW(p)

I am dismayed by the general direction of this conversation. The subject is vague and ambiguous words causing problems, there's a back-and-forth between several high-karma users, and I'm the first person to bring up "taboo the vague words and explain more precisely what you mean"?

↑ comment by abramdemski · 2024-10-08T17:15:28.828Z · LW(p) · GW(p)

That's an important move to make, but it is also important to notice how radically context-dependent and vague our language is, to the point where you can't really eliminate the context-dependence and vagueness via taboo (because the new words you use will still be somewhat context-dependent and vague). Working against these problems is pragmatically useful, but recognizing their prevalence can be a part of that. Richard is arguing against foundational pictures which assume these problems away, and in favor of foundational pictures which recognize them.

↑ comment by localdeity · 2024-10-08T18:25:56.502Z · LW(p) · GW(p)

to the point where you can't really eliminate the context-dependence and vagueness via taboo (because the new words you use will still be somewhat context-dependent and vague)

You don't need to "eliminate" the vagueness, just reduce it enough that it isn't affecting any important decisions. (And context-dependence isn't necessarily a problem if you establish the context with your interlocutor.) I think this is generally achievable, and have cited the Eggplant essay on this. And if it is generally achievable, then:

Richard is arguing against foundational pictures which assume these problems away, and in favor of foundational pictures which recognize them.

I think you should handle the problems separately. In which case, when reasoning about truth, you should indeed assume away communication difficulties. If our communication technology was so bad that 30% of our words got dropped from every message, the solution would not be to change our concept of meanings; the solution would be to get better at error correction, ideally at a lower level, but if necessary by repeating ourselves and asking for clarification a lot.

Elsewhere there's discussion of concepts themselves being ambiguous. That is a deeper issue. But I think it's fundamentally resolved in the same way: always be alert for the possibility that the concept you're using is the wrong one, is incoherent or inapplicable to the current situation; and when it is, take corrective action, and then proceed with reasoning about truth. Be like a digital circuit, where at each stage your confidence in the applicability of a concept is either >90% or <10%, and if you encounter anything in between, then you pause and figure out a better concept, or find another path in which this ambiguity is irrelevant.

↑ comment by abramdemski · 2024-10-08T19:34:28.948Z · LW(p) · GW(p)

Richard is arguing against foundational pictures which assume these problems away, and in favor of foundational pictures which recognize them.

I think you should handle the problems separately. In which case, when reasoning about truth, you should indeed assume away communication difficulties. If our communication technology was so bad that 30% of our words got dropped from every message, the solution would not be to change our concept of meanings; the solution would be to get better at error correction, ideally at a lower level, but if necessary by repeating ourselves and asking for clarification a lot.

You seem to be assuming that these issues arise only due to communication difficulties, but I'm not completely on board with that assumption. My argument is that these issues are fundamental to map-territory semantics (or, indeed, any concept of truth).

One argument for this is to note that the communicators don't necessarily have the information needed to resolve the ambiguity, even in principle, because we don't think in completely unambiguous concepts. We employ vague concepts like baldness, table, chair, etc. So it is not as if we have completely unambiguous pictures in mind, and merely run into difficulties when we try to communicate.

A stronger argument for the same conclusion relies on structural properties of truth. So long as we want to be able to talk and reason about truth in the same language that the truth-judgements apply to, we will run into self-referential problems. Crisp true-false logic has greater difficulties dealing with these problems than many-valued logics such as fuzzy logic.

↑ comment by tailcalled · 2024-10-07T19:33:29.244Z · LW(p) · GW(p)

The Eggplant discusses why that doesn't work.

↑ comment by localdeity · 2024-10-07T20:04:06.639Z · LW(p) · GW(p)

It's a decent exploration of stuff, and ultimately says that it does work:

Language is not the problem, but it is the solution. How much trouble does the imprecision of language cause, in practice? Rarely enough to notice—so how come? We have many true beliefs about eggplant-sized phenomena, and we successfully express them in language—how?

These are aspects of reasonableness that we’ll explore in Part Two. The function of language is not to express absolute truths. Usually, it is to get practical work done in a particular context. Statements are interpreted in specific situations, relative to specific purposes. Rather than trying to specify the exact boundaries of all the variants of a category for all time, we deal with particular cases as they come up.

If the statement you're dealing with has no problematic ambiguities, then proceed. If it does have problematic ambiguities, then demand further specification (and highlighting and tabooing the ambiguous words is the classic way to do this) until you have what you need, and then proceed.

I'm not claiming that it's practical to pick terms that you can guarantee in advance will be unambiguous for all possible readers and all possible purposes for all time. I'm just claiming that important ambiguities can and should be resolved by something like the above strategy; and, therefore, such ambiguities shouldn't be taken to debase the idea of truth itself.

Edit: I would say that the words you receive are an approximation to the idea in your interlocutor's mind—which may be ambiguous due to terminology issues, transmission errors, mistakes, etc.—and we should concern ourselves with the truth of the idea. To speak of truth of the statement is somewhat loose; it only works to the extent that there's a clear one-to-one mapping of the words to the idea, and beyond that we get into trouble.

↑ comment by tailcalled · 2024-10-08T12:41:08.061Z

It probably works for Richard's purpose (personal epistemology) but not for John's or my purpose (agency foundations research).

↑ comment by cubefox · 2024-10-06T20:48:02.549Z

A proposition expressed by "x is P" has a fuzzy truth value whenever P is a vague predicate. Since vague concepts figure in most propositions, their truth values are affected as well.
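To make the idea of a fuzzy truth value concrete, here is a minimal Python sketch. The thresholds for "bald" are hypothetical numbers chosen only for illustration, and the min/max/1-x connectives are the standard Zadeh operators, which are one choice among several in fuzzy logic rather than anything specified in the comment.

```python
# Hypothetical thresholds, chosen only for illustration.
BALD_BELOW = 20_000   # at or below this many hairs, "x is bald" is fully true
HAIRY_ABOVE = 80_000  # at or above this many hairs, "x is bald" is fully false


def bald_truth(hair_count):
    """Degree of truth in [0, 1] of "x is bald", linear between the thresholds."""
    if hair_count <= BALD_BELOW:
        return 1.0
    if hair_count >= HAIRY_ABOVE:
        return 0.0
    return (HAIRY_ABOVE - hair_count) / (HAIRY_ABOVE - BALD_BELOW)


# Zadeh's connectives: how vagueness propagates into compound propositions.
def fuzzy_and(a, b):
    return min(a, b)


def fuzzy_or(a, b):
    return max(a, b)


def fuzzy_not(a):
    return 1.0 - a


if __name__ == "__main__":
    t = bald_truth(50_000)
    # "x is bald or x is not bald" is only partially true for a borderline case
    print(t, fuzzy_or(t, fuzzy_not(t)))
```

Note that for a borderline case the compound "bald or not bald" is itself only partially true, which is one way the vagueness of a single predicate spreads to every proposition built from it.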

When you talk about "standard Bayesian cluster models", you talk about (Bayesian) statistics. But Richard talks about Bayesian epistemology. This doesn't involve models, only beliefs, and beliefs are propositions combined with a degree to which they are believed. See the list with the five assumptions of Bayesian epistemology in the beginning.

↑ comment by Benaya Koren · 2024-10-15T09:48:17.698Z

I don't think that this solution gives you everything that you want from semantic categories. Assume for example that you have a multidimensional cluster with heavy tails (for simplicity, assume symmetry under rotation). You measure some of the features, and determine that the given example belongs to the cluster almost surely. You want to use this fact to predict the other features. Knowing the deviation of the known features is still relevant for your uncertainty about the other features. You may think about this extra property as measuring "typicality", or as measuring "how much it really belongs in the cluster".
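One standard way to model a rotation-symmetric, heavy-tailed cluster is a multivariate Student-t distribution; choosing it here is an assumption, not something the comment specifies. A known property of that family is that, conditional on some coordinates, the remaining ones are again Student-t, with a scale that grows with the squared deviation of the observed coordinates. The sketch below, assuming a zero-centred cluster with identity scale matrix and hypothetical function names, computes that conditional variance and contrasts it with an isotropic Gaussian, where the observed deviation makes no difference.

```python
import math


def conditional_scale_factor(observed, nu):
    """Scale multiplier for the unobserved coordinates of a standard multivariate t.

    Assumes a zero-centred, rotation-symmetric cluster (identity scale matrix)
    with nu degrees of freedom; `observed` holds the measured coordinates.
    """
    d1 = len(observed)
    squared_deviation = sum(x * x for x in observed)
    return (nu + squared_deviation) / (nu + d1)


def conditional_variance(observed, nu):
    """Variance of each unobserved coordinate given the observed ones.

    The conditional law is Student-t with nu + d1 degrees of freedom,
    so its variance is scale * dof / (dof - 2), finite only while dof > 2.
    """
    dof = nu + len(observed)
    if dof <= 2:
        return math.inf
    return conditional_scale_factor(observed, nu) * dof / (dof - 2)


def gaussian_conditional_variance(observed):
    """For an isotropic unit Gaussian cluster the answer ignores `observed` entirely."""
    return 1.0


if __name__ == "__main__":
    for point in ([0.0, 0.0], [1.0, 1.0], [3.0, 4.0]):
        print(point, conditional_variance(point, nu=3), gaussian_conditional_variance(point))
```

In the heavy-tailed case, an example that is "almost surely in the cluster" but far from its centre on the measured features gets much wider predictions for the unmeasured ones, which is the extra "typicality" information the comment describes.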

↑ comment by localdeity · 2024-10-07T19:23:33.864Z

Solution: Taboo the vague predicates and demand that the user explain more precisely what they mean.

↑ comment by xpym · 2024-10-08T09:34:17.246Z

Furthermore, most of these problems can be addressed just fine in a Bayesian framework. In Jaynes-style Bayesianism, every proposition has to be evaluated in the scope of a probabilistic model; the symbols in propositions are scoped to the model, and we can’t evaluate probabilities without the model. That model is intended to represent an agent’s world-model, which for realistic agents is a big complicated thing.

It still misses the key issue of ontological remodeling. If the world-model is inadequate for expressing a proposition, no meaningful probability could be assigned to it.

↑ comment by NunoSempere (Radamantis) · 2024-11-06T09:14:03.503Z

Maybe you could address these problems, but could you do so in a way that is "computationally cheap"? E.g., for forecasting on something like extinction, it is much easier to forecast on a vague outcome than to precisely define it.

comment by Raymond D · 2024-10-07T00:20:38.943Z

When I read this post I feel like I'm seeing four different strands bundled together:

1. Truth-of-beliefs as fuzzy or not

2. Models versus propositions

3. Bayesianism as not providing an account of how you generate new hypotheses/models

4. How people can (fail to) communicate with each other

I think you hit the nail on the head with (2) and am mostly sold on (4), but am sceptical of (1) - similar to what several others have said, it seems to me like these problems don't appear when your beliefs are about expected observations, and only appear when you start to invoke categories that you can't ground as clusters in a hierarchical model.

That leaves me with mixed feelings about (3):

- It definitely seems true and significant that you can get into a mess by communicating specific predictions relative to your own categories/definitions/contexts without making those sufficiently precise

- I am inclined to agree that this is a particularly important feature of why talking about AI/x-risk is hard

- It's not obvious to me that what you've said above actually justifies Knightian uncertainty (as opposed to infra-Bayesianism or something), or the claim that you can't be confident about superintelligence (although it might be true for other reasons)

comment by Kaarel (kh) · 2024-10-14T17:10:14.421Z

I find it surprising/confusing/confused/jarring that you speak of models-in-the-sense-of-mathematical-logic=:L-models as the same thing as (or as a precise version of) models-as-conceptions-of-situations=:C-models. To explain why these look to me like two pretty much entirely distinct meanings of the word 'model', let me start by giving some first brushes of a picture of C-models. When one employs a C-model, one likens a situation/object/etc of interest to a situation/object/etc that is already understood (perhaps a mathematical/abstract one), that one expects to be better able to work/play with. For example, when one has data about sun angles at a location throughout the day and one is tasked with figuring out the distance from that location to the north pole, one translates the question to a question about 3d space with a stationary point sun and a rotating sphere and an unknown point on the sphere and so on. (I'm not claiming a thinker is aware of making such a translation when they make it.) Employing a C-model is making an analogy. From inside a thinker, the objects/situations on each side of the analogy look like... well, things/situations; from outside a thinker, both sides are thinking-elements.[1] (I think there's a large GOFAI subliterature trying to make this kind of picture precise but I'm not that familiar with it; here are two papers that I've only skimmed: https://www.qrg.northwestern.edu/papers/Files/smeff2(searchable).pdf , https://api.lib.kyushu-u.ac.jp/opac_download_md/3070/76.ps.tar.pdf .)
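As a rough illustration of what the translated sun-angle question looks like once it lives on a sphere, the sketch below treats the Earth as a sphere of assumed radius 6371 km and assumes the readings were taken on a day of known solar declination (0 at an equinox) at a northern-hemisphere site where the sun culminates due south. Under those assumptions the highest sun angle of the day fixes the latitude, and the arc to the pole follows. The function name and parameters are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed: mean radius of a spherical Earth


def distance_to_north_pole_km(sun_elevations_deg, declination_deg=0.0):
    """Great-circle distance to the north pole from a day of sun-elevation readings.

    Assumes a spherical Earth, a northern-hemisphere site where the sun
    culminates due south, and a known solar declination (0 at an equinox).
    """
    noon_elevation = max(sun_elevations_deg)        # highest reading ~ local noon
    latitude = 90.0 - noon_elevation + declination_deg
    colatitude_rad = math.radians(90.0 - latitude)  # angular distance from the pole
    return EARTH_RADIUS_KM * colatitude_rad


if __name__ == "__main__":
    readings = [5.0, 20.0, 33.0, 40.0, 33.0, 20.0, 5.0]  # hypothetical readings
    print(round(distance_to_north_pole_km(readings)))
```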

I'm not that happy with the above picture of C-models, but I think that it seeming like an even sorta reasonable candidate picture might be sufficient to see how C-models and L-models are very different, so I'll continue in that hope. I'll assume we're already on the same page about what an L-model is ( https://en.wikipedia.org/wiki/Model_theory ). Here are some ways in which C-models and L-models differ that imo together make them very different things:

- An L-model is an assignment of meaning to a language, a 'mathematical universe' together with a mapping from symbols in a language to stuff in that universe — it's a semantic thing one attaches to a syntax. The two sides of a C-modeling-act are both things/situations which are roughly equally syntactic/semantic (more precisely: each side is more like a syntactic thing when we try to look at a thinker from the outside, and just not well-placed on this axis from the thinker's internal point of view, but if anything, the already-understood side of the analogy might look more like a mechanical/syntactic game than the less-understood side, eg when you are aware that you are taking something as a C-model).

- Both sides of a C-model are things/situations one can reason about/with/in. An L-model takes from a kind of reasoning (proving, stating) system to an external universe which that system could talk about.

- An L-model is an assignment of a static world to a dynamic thing; the two sides of a C-model are roughly equally dynamic.

- A C-model might 'allow you to make certain moves without necessarily explicitly concerning itself much with any coherent mathematical object that these might be tracking'. Of course, if you are employing a C-model and you ask yourself whether you are thinking about some thing, you will probably answer that you are, but in general it won't be anywhere close to 'fully developed' in your mind, and even if it were (whatever that means), that wouldn't be all there is to the C-model. For an extreme example, we could maybe even imagine a case where a C-model is given with some 'axioms and inference rules' such that if one tried to construct a mathematical object 'wrt which all these axioms and inference rules would be valid', one would not be able to construct anything — one would find that one has been 'talking about a logically impossible object'. Maybe physicists handling infinities gracefully when calculating integrals in QFT is a fun example of this? This is in contrast with an L-model which doesn't involve anything like axioms or inference rules at all and which is 'fully developed' — all terms in the syntax have been given fixed referents and so on.

- (this point and the ones after are in some tension with the main picture of C-models provided above but:) A C-model could be like a mental context/arena where certain moves are made available/salient, like a game. It seems difficult to see an L-model this way.

- A C-model could also be like a program that can be run with inputs from a given situation. It seems difficult to think of an L-model this way.

- A C-model can provide a way to talk about a situation, a conceptual lens through which to see a situation, without which one wouldn't really be able to [talk about]/see the situation at all. It seems difficult to see an L-model as ever doing this. (Relatedly, I also find it surprising/confusing/confused/jarring that you speak of reasoning using C-models as a semantic kind of reasoning.)

(But maybe I'm grouping like a thousand different things together unnaturally under C-models and you have some single thing or a subset in mind that is in fact closer to L-models?)

All this said, I don't want to claim that no helpful analogy could be made between C-models and L-models. Indeed, I think there is the following important analogy between C-models and L-models:

- When we look for a C-model to apply to a situation of interest, perhaps we often look for a mathematical object/situation that satisfies certain key properties satisfied by the situation. Likewise, an L-model of a set of sentences is (roughly speaking) a mathematical object which satisfies those sentences.
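To make the L-model side of this analogy concrete, here is a minimal Python sketch: sentences are plain syntax (nested tuples over a single binary relation symbol R), and a structure is a finite domain together with an interpretation of R. A structure is a model of a set of sentences when the evaluator makes all of them true. The tuple encoding, the helper names and the example sentences are illustrative assumptions, not a standard library API.

```python
def evaluate(formula, domain, relation, env=None):
    """Truth of a first-order formula in the structure (domain, relation).

    Formulas are nested tuples: ("R", var, var), ("not", f), ("and", f, g),
    ("implies", f, g), ("forall", var, f), ("exists", var, f).
    `env` maps variable names to domain elements.
    """
    env = {} if env is None else env
    op = formula[0]
    if op == "R":
        return (env[formula[1]], env[formula[2]]) in relation
    if op == "not":
        return not evaluate(formula[1], domain, relation, env)
    if op == "and":
        return evaluate(formula[1], domain, relation, env) and evaluate(formula[2], domain, relation, env)
    if op == "implies":
        return (not evaluate(formula[1], domain, relation, env)) or evaluate(formula[2], domain, relation, env)
    if op in ("forall", "exists"):
        _, var, body = formula
        values = (evaluate(body, domain, relation, {**env, var: d}) for d in domain)
        return all(values) if op == "forall" else any(values)
    raise ValueError(f"unknown connective: {op!r}")


def is_model(domain, relation, sentences):
    """A structure is a model of a set of sentences if it makes every one true."""
    return all(evaluate(s, domain, relation) for s in sentences)


def cycle(n):
    """Interpret R as 'successor mod n' on the domain {0, ..., n-1}."""
    return {(x, (x + 1) % n) for x in range(n)}


SENTENCES = [
    ("forall", "x", ("exists", "y", ("R", "x", "y"))),  # everything has an R-successor
    ("forall", "x", ("not", ("R", "x", "x"))),          # R is irreflexive
    ("forall", "x", ("forall", "y",
        ("implies", ("R", "x", "y"), ("not", ("R", "y", "x"))))),  # R is asymmetric
]

if __name__ == "__main__":
    print(is_model(range(3), cycle(3), SENTENCES))
    print(is_model(range(2), cycle(2), SENTENCES))
```

Here the 3-cycle satisfies all three sentences, while the 2-cycle fails asymmetry, so only the former is a model of that set. Note that the structure is fully fixed in advance: every symbol already has a referent, which is the "fully developed" property the comment contrasts with C-models.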

(Acknowledgments. I'd like to thank Dmitry Vaintrob and Sam Eisenstat for related conversations.)

- ^

This is complicated a bit by a thinker also commonly looking at the C-model partly as if from the outside — in particular, when a thinker critiques the C-model to come up with a better one. For example, you might notice that the situation of interest has some property that the toy situation you are analogizing it to lacks, and then try to fix that. For instance, to guess the density of twin primes, you might start from a naive analogy to a probabilistic situation where each 'prime' p has probability (p-1)/p of not dividing each 'number' independently at random, but then realize that your analogy is lacking because really p not dividing n makes it a bit less likely that p doesn't divide n+2, and adjust your analogy. This involves a mental move that also looks at the analogy 'from the outside' a bit.
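The adjusted analogy in this footnote is essentially the standard Hardy-Littlewood heuristic for twin primes; naming it is our gloss, not the commenter's. A minimal Python sketch, assuming x = 10^5 and a midpoint-rule integral: the naive model treats n and n + 2 as independently prime with probability about 1/ln t each, and the per-prime correction replaces ((p-1)/p)^2 with (p-2)/p for odd p (and with 1/2 for p = 2). The product of the corrections over odd primes tends to the twin prime constant C2 ≈ 0.66016. The resulting asymptotic is a conjecture, not a theorem, so the comparison with the actual count is purely empirical.

```python
import math


def primes_up_to(n):
    """Sieve of Eratosthenes."""
    sieve = bytearray([1]) * (n + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, math.isqrt(n) + 1):
        if sieve[i]:
            sieve[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, flag in enumerate(sieve) if flag]


def local_correction(p):
    """Corrected over naive chance that p divides neither n nor n + 2."""
    naive = ((p - 1) / p) ** 2                  # independence: p misses n, and separately n + 2
    corrected = (1 if p == 2 else p - 2) / p    # n mod p must avoid 0 and -2 (the same class if p == 2)
    return corrected / naive


def twin_prime_estimates(x):
    """Return (actual count, naive estimate, corrected estimate, correction factor)."""
    primes = primes_up_to(x)
    prime_set = set(primes)
    actual = sum(1 for p in primes if p + 2 in prime_set)
    # Naive baseline: integral of dt / (ln t)^2 from 2 to x, by the midpoint rule.
    steps = 100_000
    h = (x - 2) / steps
    baseline = sum(h / math.log(2 + (k + 0.5) * h) ** 2 for k in range(steps))
    correction = math.prod(local_correction(p) for p in primes)
    return actual, baseline, correction * baseline, correction


if __name__ == "__main__":
    actual, naive, corrected, correction = twin_prime_estimates(100_000)
    print(actual, round(naive), round(corrected), correction / 2)
```

The naive, fully independent analogy undercounts noticeably, while the adjusted one lands close to the true count, which is the kind of payoff from critiquing the analogy "from the outside" that the footnote describes.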

comment by Mark Xu (mark-xu) · 2024-10-07T16:02:27.219Z

tentative claim: there are models of the world, which make predictions, and there is "how true they are", which is the amount of noise you fudge the model with to get lowest loss (maybe KL?) in expectation.

E.g. "the grocery store is 500m away" corresponds to "my dist over the grocery store is centered at 500m, but has some amount of noise"
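Under the added assumption that the "noise" is Gaussian and the loss is log loss (minimising expected log loss is the same as minimising KL divergence from the data distribution, up to a constant), the best noise level for a fixed point prediction has a closed form: the root-mean-square residual. The observations below are hypothetical distances in metres, used only to illustrate the grocery-store example, and the function names are our own.

```python
import math


def gaussian_log_loss(residuals, sigma):
    """Mean negative log-likelihood of residuals under zero-mean Gaussian noise."""
    mean_sq = sum(r * r for r in residuals) / len(residuals)
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + mean_sq / (2 * sigma ** 2)


def best_noise_level(prediction, observations):
    """Noise scale minimising mean log loss for a fixed point prediction.

    Setting the derivative of gaussian_log_loss to zero gives sigma^2 = mean
    squared residual, i.e. sigma is the root-mean-square error.
    """
    residuals = [y - prediction for y in observations]
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


if __name__ == "__main__":
    observations = [490.0, 510.0, 500.0, 520.0, 480.0]  # hypothetical distances in metres
    for prediction in (500.0, 400.0):
        sigma = best_noise_level(prediction, observations)
        residuals = [y - prediction for y in observations]
        print(prediction, sigma, gaussian_log_loss(residuals, sigma))
```

A model that predicts 400 m on the same data needs a much larger noise level to minimise its loss, which matches the comment's reading of such a model as "less true".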

↑ comment by Mark Xu (mark-xu) · 2024-10-07T17:15:36.722Z

related to the claim that "all models are meta-models", in that they are objects capable of e.g. evaluating how applicable they are for making a given prediction. E.g. "Newtonian mechanics" also carries along with it information about how if things are moving too fast, you need to add more noise to its predictions, i.e. it's less true/applicable/etc.

comment by Haiku · 2024-10-20T00:04:51.430Z

I am not well-read on this topic (or at-all read, really), but it struck me as bizarre that a post about epistemology would begin by discussing natural language. This seems to me like trying to grasp the most fundamental laws of physics by first observing the immune systems of birds and the turbulence around their wings.

The relationship between natural language and epistemology is more anthropological* than it is information-theoretical. It is possible to construct models that accurately represent features of the cosmos without making use of any language at all, and as you encounter in the "fuzzy logic" concept, human dependence on natural language is often an impediment to gaining accurate information.