Limerence Messes Up Your Rationality Real Bad, Yo

post by Raemon · 2022-07-01T16:53:10.914Z · 41 comments


There’s a pretty basic rationality fact that I don’t see talked about much on LW despite its obvious relevance. So I am here to write The Canonical Rationality Post on the topic:

Limerence (aka "falling in love"[1]) wreaks havoc on your rationality.

Evolution created you to breed and raise families and stuff. It gave you complex abstract reasoning because that was a useful problem solving tool. Evolution didn’t do that great a job of aligning humans with its goals (see: masturbation is an inner alignment failure, birth control is an outer alignment failure). But, it looks like in some sense evolution was aware it had an alignment problem to solve. It gave us capacity for reason, and also it built in a massive hardcoded override for situations where no fuck you your brain is not for building rocketships and new abstract theories, your brain is for producing children and entangling yourself with a partner long enough to raise them. Now focus all your attention on the new prospective mate you are infatuated with.

To be clear, I like limerence. I put decent odds on CEV considering it a core human value. I think attempts to dissociate from your basic human drives are likely to have bad second-order consequences.

But, still, it’s been boggling how wonky my judgment got when I was under the influence. And it’s been boggling how arbitrary it felt afterwards (one day I’d be “wow, this person is amazing”, and then a week later, after I got some distance, I looked back and thought “wow, Raemon’s thinking was so silly there”). It felt, in retrospect, like I'd been drugged.

I'm not sure how the rationality-warping of limerence compares to other major "evolution-hard-coded-some-overrides" areas like "access to power/money/status". But the effects of limerence feel sharpest/most-pronounced to me, and the most "what the hell just happened?" after the warp has cleared.

Mutual Infatuation and the Limerence Event Horizon

The place where this gets extra intense is when you and the object of your affections are both into each other. When only one of you is quietly pining for the other, your feelings might be warped but... they don't really have anywhere to go.

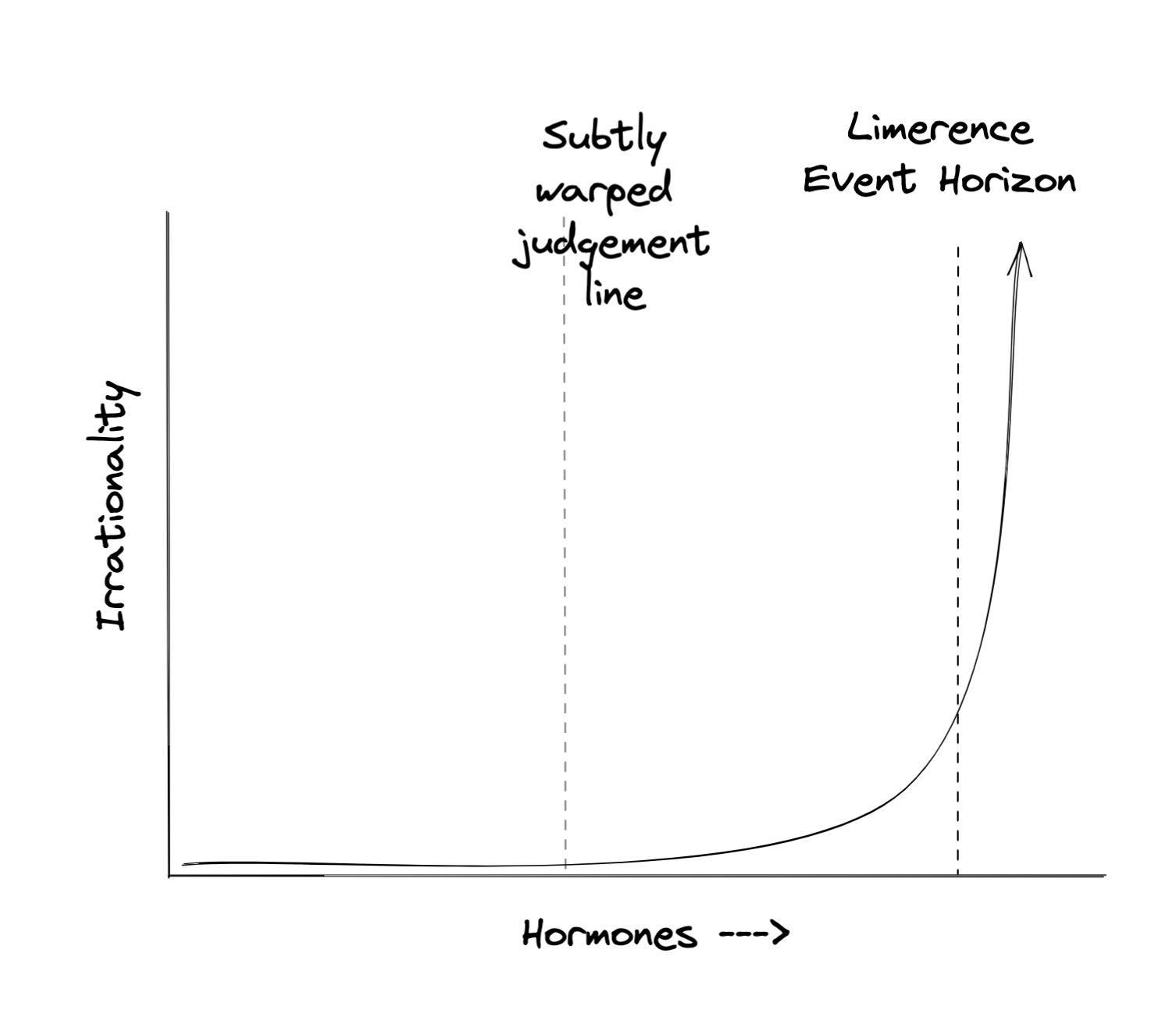

But if two people both like each other they tend to ascend this graph:

The y-axis is labeled "irrationality" for succinctness, but a better label might be "your bottom-line-is-written-ness." It's not that you'll necessarily make the wrong choice the further to the right you go. But the probability mass gets more concentrated into "Y'all end up dating and probably having sex".

If for some reason you don't actually endorse doing that, it'll require escalating amounts of willpower to avoid it. And your judgment about how in-control you are of the situation will get worse. You may find yourself doing rationalization instead of rationality.

(Note: If you have more experience and practice managing your emotions, the Limerence Event Horizon is further to the right, and you have more opportunity to change course before hooking up becomes inevitable.)

Subtly Warped Judgment

What I find particularly alarming here is the subtly warped judgment line. I’ve had a couple of experiences where I thought I was successfully holding someone at a distance. I knew about the Limerence Event Horizon, but I was being so careful to hold myself at a nice safe emotional distance! Alas, being slightly over the subtly-warped-judgment line is like taking one drink – sure it only impairs your judgment a little, but one of the things you might do with slightly impaired judgment is to take another drink. (Or, say, foster more emotional closeness with someone who you wouldn't endorse eventually having sex with.)

In my two experiences, it was clear in retrospect that my thinking was subtly warped (and I totally ended up romantically involved). One of the times worked out fine (sometimes dating people is great!). The other time was one of the greatest mistakes of my life, and I regretted it for a long time.

Takeaways

So, like, I don't hate love or fun or whatever. I don't want to give people an anxious complex about allowing themselves to feel feelings. Many times, when you are feeling mutually limerent with someone... great! Falling in love is one of the nicest things. Have a good time.

But, I do think it is possible to end up mutually attracted to someone with whom it'd be a bad idea to get involved. Maybe one of you is married or has made other monogamous commitments you take seriously. Maybe the person is fun but predictably kinda a mess, and you'll end up paying a bunch of costs that end up net negative for you. Maybe those costs would be totally worth it for you, but too costly for other friends/family/children/coworkers caught in the wake.

Sometimes the issue isn't anything about the person-you're-into, but about other things you have going on. Maybe you're working on a really important project you care about, and right now it'd be particularly bad to get distracted in the way that falling in love is super distracting. Maybe you recently hired the person-you're-into and it'd predictably mess up your working relationship.

I don't want to make a strong claim about how often those concerns are overriding. But, at least sometimes, it's the wrong call for mutually-attracted-people to date, and in those cases you have a lot more degrees of freedom to think clearly if you hold someone at a further distance than may feel intuitively necessary.

[1] I have strong opinions on the definition of the word "love" and am kinda annoyed at popular usage of "falling in love" to mean a time very early in a relationship before "love" is particularly substantive, but for this post I'm going with the popular usage.

41 comments


comment by Sinclair Chen (sinclair-chen) · 2022-07-01T20:16:07.300Z

From my own research, I've also concluded that in-love epistemics are terrible. For instance, in this n=71 study where college students wrote about a time they rejected a romantic confession and a time they were rejected:

- suitors report that rejectors are mysterious, but rejectors do not report being mysterious

- suitors severely overestimate probability of being liked back

- suitors report that rejections are very unclear, and while rejectors report the same, it is to a much lesser degree/frequency.

I've also been overconfident about compatibility and mutual affection in my own life (n=1).

However, I think there's something to be said for having something (someone?) to protect.

Eliezer mentions in Inadequate Equilibria using extremely bright lights to treat his partner's Seasonal Affective Disorder. This was not the medical consensus, and only after Eliezer's experiment are more "official" trials of this intervention being run.

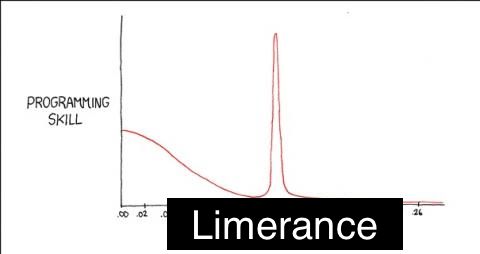

Or in my case, I was so heartbroken over a bad ex that I researched romance science, learned about the similarity between limerence and OCD, and tried a supplement that cured my heartbreak. I wouldn't normally try new drugs or browse Google Scholar, but I was really motivated.

↑ comment by plex (ete) · 2022-07-01T20:29:44.093Z

What was that supplement? Seems like a useful thing to know, if it's reproducible.

↑ comment by Austin Chen (austin-chen) · 2022-07-02T20:55:13.204Z

Inositol, I believe: https://www.facebook.com/100000020495165/posts/4855425464468089/?app=fbl

↑ comment by Sinclair Chen (sinclair-chen) · 2022-07-03T09:02:52.341Z

Inositol indeed.

I don't know anyone else that's tried this. I'd only bet 55-65% that it works for any given person. But it's available over the counter and quite safe.

I should probably get around to setting up a more rigorous experiment one of these days...

↑ comment by Lukas Finnveden (Lanrian) · 2022-07-01T23:50:17.964Z

"suitors severely underestimate probability of being liked back"

Is this supposed to say 'overestimate'? Regardless, what info from the paper is the claim based on? Since they're only sampling stories where people were rejected, the stories will have disproportionately large numbers of cases where the suitors are over-optimistic, so that seems like it'd make it hard to draw general conclusions.

(For the other two bullet points: I'd expect those effects, directionally, just from the normal illusion of transparency playing out in a context where there are social barriers to clear communication. But I haven't looked at the paper to see whether the effect is way stronger than I'd normally expect.)

↑ comment by Sinclair Chen (sinclair-chen) · 2022-07-03T08:53:50.437Z

Is this supposed to say 'overestimate'?

Yes, corrected.

what info from the paper is the claim based on?

I don't remember (I copied the points from my notes from months ago when I did the research).

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2022-07-01T22:19:26.148Z

You found the Cure for Love??

↑ comment by Dirichlet-to-Neumann · 2022-07-02T07:25:34.153Z

There's nothing pure enough to be a cure for love. (Except poetry, which does quite well)

↑ comment by Adam Zerner (adamzerner) · 2022-07-01T22:25:20.297Z

That's a really good point about having something to protect. However:

Or in my case, I was so heartbroken over a bad ex that I researched romance science, learned about the similarity between limerence and OCD, and tried a supplement that cured my heartbreak. I wouldn't normally try new drugs or browse Google Scholar, but I was really motivated.

I don't think this excerpt is related to the "something to protect" point. The motivation here was to get rid of a really, intensely bad feeling you were having, whereas "something to protect" is about protecting someone else.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-07-02T02:03:06.383Z

I also wonder if rationality messes up your limerence. Certainly, I find that a daily attempt to try and think more rationally has had a small-moderate effect on my emotional range and registration.

↑ comment by localdeity · 2022-07-02T06:31:57.242Z

I don't know how to self-evaluate my rationality, at least not in a publicly defensible way[1], or for that matter compare the intensity of my romantic feelings to those of others... but for whatever my word is worth, I think it's quite possible to have both. Maybe not in the same moment, but I think it's possible to switch back and forth rapidly enough that it works fine.[2]

The things that lead to intense romantic feelings for someone, in my experience, come from having all 3 of the following factors:

- That I find her admirable. (Admirable traits that come to mind: intelligence, beauty, kindness, competence, etc. Some anti-admirable traits can override admirable ones: being unnecessarily mean, unethical, etc.)

- That I perceive an emotional connection with her. (This can be one-sided, of course. I think the best operationalization of this is "that my brain finds it plausible that she and I will do things that make each other happy".)

- That I spend time with her or thinking about her.

My basic observation/theory is that, if the first two factors are present, then "thinking about nice situations involving her" is pleasant and rewarding. That tends to make me like her more, which makes imagined situations even more pleasant, and so on. During middle school, I had lots of time in class with nothing else interesting to do, and I developed some very intense crushes through this process; thinking about them may be considered a form of wireheading. I can confirm the process still works today, although I don't deliberately do it very often—but one regular use case is situations, like sitting on an airplane, when I don't feel like reading but also can't sleep or do much else.

In the fantasies, I often imagined that she liked me back; when I emerged into reality, I knew that wasn't necessarily the case. (Usually at some point I would tell her I liked her, or ask her about a date; generally she would say she thought I was a fine person but didn't like me that way; and I would accept that, not mention the subject further (at least for six months or whatever seemed reasonable at that age), and happily resume fantasizing about how it would be if she did like me—or think about another girl.) I think I did ok maintaining basic sanity and safety in that respect.

[1] In the absence of some standardized "rationality test", I would have to choose things from my life to present, but you'd have to take my word that I was neither cherry-picking nor misinterpreting things in my favor, which is essentially assuming the conclusion; I don't think it would likely provide net evidence in favor of my rationality unless I had some obviously, objectively, extremely impressive anecdote, and that doesn't come to mind.

[2] At one point I delighted in romantic pleasure when someone I loved was touching me, while I was talking through an example of Huffman coding, which involved adding and multiplying some fractions; and I was able to notice and correct an arithmetic error in the moment (this was all mental math, no paper), in between mentioning how lovely it felt. That said, math skills are only a small part of rationality skills.

↑ comment by Alex Vermillion (tomcatfish) · 2023-07-28T16:57:21.901Z

Consider something like meditation or some kind of emotional processing work? I think doing math all day sure makes it less likely you'll get a date, but it doesn't have to mean you like people less or anything.

Do your rationality out in a park or something!

comment by Valentine · 2022-07-02T16:27:13.181Z

I like that you brought this up, and the tone with which you did so. Nice mental handles via the graph. And I like that you're basically highlighting a question rather than an answer; that tends to be richer for me to encounter.

I'd like to highlight a couple of implicit things lurking in the background here. They're common in LW culture AFAICT, so this is something like an opportune case study.

- You seem to be assuming that limerence messing with your rationality is bad because rationality is the thing you want to have govern your life. But if your CEV includes limerence, then this limerence override is actually revealing ways in which your rationality-as-is is incompatible with your CEV. That holds even if limerence is screwing up your life in ways that your rationality would successfully address if it weren't for the limerence. If you have to choose, you want to live in global alignment with your CEV, not local, pointwise convergence on what you currently think your CEV is. This might mean seriously screwing things up locally in order to bridge the parts of you that you currently endorse with other parts that you don't yet know you want to value.

- Strong feelings overwhelming your thinking, which in turn warps your choices, is a problem only inasmuch as you base your choices on your thoughts. This basic inner design choice is also why Goodhart's Law has room to reinforce cognitive biases, and why social pressure can work to make people do/think/believe things they know to be wrong. There's an alternative that looks like building your capacity to be stably present with sensation prior to thought. This gives you a firm place to stand that's outside of thinking. It's the same place from which you can tell that a mathematical proof has "clicked" for you: it's not just about reviewing the logic, but is some kind of deeper knowing that the logic is actually in service to. This strikes me as a glaring omission in the LW flavor of rationality, which AFAICT is almost entirely focused on how to arrange thinking patterns and program metacognition rather than on orienting to thoughts from somewhere else. (It's actually a wonderfully clear fractal reflection of the AI alignment problem, if you view the thought-generator as the AI you're trying to align.) I think this is an essential piece of how a mature Art of Rationality would address the puzzle about limerence you're putting forward.

↑ comment by Raemon · 2022-07-02T17:40:03.927Z

But if your CEV includes limerence, then this limerence override is actually revealing ways in which your rationality-as-is is incompatible with your CEV.

Not sure if this is engaging at the level you meant, but my assumption is that I broadly want to live in a world that has limerence in it, and has being-in-love, but that doesn't mean any particular instance of limerence or love is that important, or more important than other things I value. (I certainly think it's possible, and a particular failure mode of people attracted to LW, to have a generally warped relationship with limerence, such that they need to go off and make some predictable mistakes along the path of growing.)

↑ comment by Valentine · 2022-07-02T18:36:04.986Z

[…] my assumption is that I broadly want to live in a world that has limerence in it, and has being-in-love, but that doesn't mean any particular instance of limerence or love is that important, or more important than other things I value.

Same.

My point is more that discovering that an instance of limerence is adversarial to other things you care about highlights a place where you're not aligned with your own CEV. The solution of "override this instance of limerence in favor of current-model rational decisions about what I should or shouldn't do or want" is not CEV-convergent.

…and neither is "trust in love [blindly]". But that's not a relevant LW error mode AFAICT.

comment by TurnTrout · 2022-07-04T21:49:16.829Z

It gave us capacity for reason, and also it built in a massive hardcoded override for situations where no fuck you your brain is not for building rocketships and new abstract theories, your brain is for producing children and entangling yourself with a partner long enough to raise them. Now focus all your attention on the new prospective mate you are infatuated with.

We have not observed that evolution gave us a massive hardcoded override. My guess at your epistemic state is: You have observed that in certain situations, you're more inclined to have kids and settle down. You have observed that other people claim similar influences on their decision-making. You are inferring there is an override. This inference may or may not be correct, but it is an inference, not an observation!

On a strictly different note, I think it's very implausible that evolution built "massive hardcoded overrides" into humans, and that that implausibility is enormously and neglectedly important to AI alignment, and so it's actually really dang important that we tread correctly in this area.

I'm not sure how the rationality-warping of limerence compares to other major "evolution-hard-coded-some-overrides" areas like "access to power/money/status".

Same complaint.

↑ comment by Raemon · 2022-07-04T22:18:15.273Z

Hmm. What's the thing you think we actually disagree on if I taboo "hardcoded override"? I definitely was optimizing that paragraph for comedic effect, assuming readers were roughly on the same page. I'm not confident I endorse the exact phrasing.

But, like, I claim that I, and most other humans, will semi-reliably do behavior X in situation Y, much more reliably than most other behaviors in most other situations. Do you have particular other hypotheses for what's going on here that's different from "evolution has selected for us having a pretty strong drive to do X in situation Y"? Do you dispute that most humans tend towards X in Y situations?

I agree it's useful to distinguish inference from observation but also think there are plenty of times the inference is pretty reasonable and accepted, and this feels kinda isolated demand for rigor-y. (I have some sense of you having a general contrarian take on a bunch of stuff closely related to this topic, but haven't seen you spell out the take yet and don't really know how to respond other than "can you argue more clearly about what you think is going on with human tendency towards X in Y situation?")

↑ comment by TurnTrout · 2022-07-11T04:32:23.569Z

First of all, sorry for picking out a few remarks which weren't meant super strongly.

That said,

What's the thing you think we actually disagree on if I taboo "hardcoded override"?

See "Human values & biases are inaccessible to the genome".

Do you have particular other hypotheses for what's going on here that's different from "evolution has selected for us having a pretty strong drive to do X in situation Y"? Do you dispute that most humans tend towards X in Y situations?

- I do have other hypotheses, but this margin is too small to detail them. However, even if I didn't have other hypotheses, it's sometimes important and healthy to maintain uncertainty even in the absence of being able to come up with concrete other hypotheses for your observations.

- The fact that "Evolution hardcoded it" is the first explanation we can think of, and that there weren't other obvious explanations, only slightly increases my credence in "Evolution hardcoded it", because people not having found other theories yet is pretty mild evidence, all things considered.

- "Evolution has selected for us having a pretty strong drive to do X in situation Y" is not actually a mechanistic explanation at all.

- When weighing "is it hardcoded, or not?", we are considering a modern genome, which specifies the human brain. Given the genome, evolution's influence on human values is screened off. The question is: What solution did evolution find?

- Also, you might have considered this, but I want to make the point here: Evolution is not directly selecting over high-level cognitive properties, like the motivation to settle down (X) at age twenty-three (situation Y). Evolution selects over genes, and those mutations affect the adult brain's high-level properties eventually.

I agree it's useful to distinguish inference from observation but also think there are plenty of times the inference is pretty reasonable and accepted, and this feels kinda isolated demand for rigor-y.

I see why you think that. I think it's unfortunately accepted, but that it's not reasonable, and that it is quite wrong and contradictory. See "Human values & biases are inaccessible to the genome" for the in-depth post.

Also, I think people are too used to saying whatever they want about hardcoding, since it's hard to get caught out by reality because it's hard to make the brain transparent and thereby get direct evidence on the question. Human value formation is an incredibly important topic where we really should exercise care, and, to be frank, I think most people (myself in 2021 included) do not exercise much care. (This is not meant as social condemnation of you in particular, to be clear.)

I have some sense of you having a general contrarian take on a bunch of stuff closely related to this topic

Yup. Here's another hot take:

- We observe that most people want to punish people who fuck them over. Therefore, evolution directly hardcoded punishment impulses.

- We observe that GPT-3 sucks at parity checking. Therefore, the designers directly hardcoded machinery to ensure it sucks at parity checking.

- We observe an AI seeking power. Therefore, the designers directly hardcoded the AI to value power.

I think these arguments are comparably locally valid. That is to say, not very.

"can you argue more clearly about what you think is going on with human tendency towards X in Y situation?"

I will eventually, but I maintain that it's valid to say "I don't know what's happening, but we can't be confident in hardcoding given the available evidence."

↑ comment by Raemon · 2022-07-11T05:38:44.503Z

Thanks. This all does seem reasonable.

I think I did mean something less specific by "hardcoded" than you interpreted me to mean (your post notes that hardwired responses to sense-stimuli are more plausible than hardwired responses to abstract concepts, and I didn't have a strong take that any of the stuff I talk about in this post required abstract concepts).

But I also indeed hadn't reflected on the plausibility of various mechanisms here at all, and you've given me a lot of food for thought. I'll probably weigh in with more thoughts on your other post.

↑ comment by Jay Bailey · 2022-07-05T01:17:05.298Z

I am not TurnTrout, but it seems to me that, since producing and raising children is the default evolutionary goal, there is no override that makes us snap out of our normal abstract-reason-mode and start caring about that. A better way to think about it is the opposite - that reason and abstract thinking and building rocket ships IS the override, and it's one that we've put on ourselves. Occasionally our brains get put in situations where they remind us more strongly "Hey? You know that thing that's supposed* to be your purpose in life? GO DO THAT."

*This is the abstract voice of evolution speaking, not me. I don't happen to be aligned with it.

comment by jp · 2023-12-06T17:34:41.818Z

I've referenced this post several times. I think the post has to balance being a straw vulcan with being unwilling to forcefully say its thesis, and I find Raemon to be surprisingly good at saying true things within that balance. It's also well-written, and a great length. Candidate for my favorite post of the year.

↑ comment by Raemon · 2023-12-22T09:55:57.853Z

I think the post has to balance being a straw vulcan with being unwilling to forcefully say its thesis, and I find Raemon to be surprisingly good at saying true things within that balance.

I think this comment is probably getting at something I was unconsciously tracking while writing the post, but I don't quite understand it. Could you say this again in different words?

comment by Gram Stone · 2022-07-01T22:36:01.137Z

I just want to register that there are things I feel like I couldn't have realistically learned about my partner in an amount of time short enough to precede the event horizon, and that my partner and I have changed each other in ways past the horizon that could be due to limerence but could also just be good old-fashioned positive personal change for reasonable reasons, and that I suspect that the takeaway here is not necessarily to attempt to make final romantic decisions before reaching the horizon, but that you will only be fully informed past the horizon, and that is just the hand that Nature has dealt you. I don't necessarily think you disagree with that but wanted to write that in case you did.

↑ comment by Raemon · 2022-07-02T17:39:45.496Z

I mostly had been thinking about stuff that's more like "metadata about your situation, and very obvious things about your partner." I'm not suggesting you hold off on every relationship until you've, like, had time to vet it thoroughly with your System 2 brain.

comment by Adam Zerner (adamzerner) · 2022-07-01T22:33:01.735Z

I really like how you used the specific term limerence here instead of something more general. However, I think that it would improve the post to spend a little bit of time discussing what limerence means instead of linking to the Wikipedia page. It's a core part of this post, I suspect a good number of people don't know what it means, and after a brief skim I found the Wikipedia page to be confusing and not very readable.

I also think the post could be improved by having more concrete examples. I found it to be pretty abstract, which made it hard for me to think about things.

↑ comment by Raemon · 2022-07-02T17:33:10.182Z

Yeah, I agree with all points here. I was focused on getting a version of the post out at all rather than on perfection.

Unfortunately all my motivating examples are situations that are tricky to convert into public anecdotes.

↑ comment by Adam Zerner (adamzerner) · 2022-07-02T18:19:10.880Z

That makes total sense :)

comment by Celarix · 2023-12-07T16:12:43.121Z

This post demonstrates another surface of the important interplay between our "logical" (really just verbal) part-of-mind and our emotional part-of-mind. Other posts on this site, including by Kaj Sotala and Valentine, go into this interplay and how our rationality is affected by it.

It's important to note, both for ourselves and for our relationships with others, that the emotional part is not something that can be dismissed or fought with, and I think this post does well in explaining an important facet of that. Plus, when we're shown the possible pitfalls ahead of any limerence, we can be more aware of it when we do fall in love, which is always nice.

comment by tcheasdfjkl · 2022-07-05T03:38:42.817Z

I think this is just one particular subcase of "strong urges are hard not to follow" (other examples: cravings for food one knows is long-term unhealthy; some instances of procrastination (choosing a short-term fun activity over a long-term beneficial one when you don't endorse that); sexual arousal (separate from romantic feelings); being tired/sleepy when you endorse doing stuff that requires overriding that). It certainly is a notable subcase of that, though. I've sometimes described having crushes as having my utility function hijacked (though in a way I usually endorse - I tend to be pretty aligned across versions of myself on this axis).

↑ comment by Raemon · 2022-07-11T05:49:44.785Z

Hmm. I do think it's interesting to compare this to other strong-cravings, I agree it shares similarity there.

I think what makes limerence stand out to me here is that it's not a default part of my day-to-day life. While small bouts of attraction/lust might come up fairly frequently, mutual attraction is rare enough, and intense/punctuated enough, that I (and others I've seen) get more "caught off guard" by it than by hunger.

comment by Aditya (aditya-prasad) · 2023-06-07T21:22:26.938Z

Some things don't make sense unless you really experience them. Personally, I have no words for the warping effects such emotions have on you. It's comparable to having kids or getting a brain injury.

It's a socially acceptable mental disorder.

The only thing is to notice when you are in that state and put very low credence on all positive opinions you have about your Limerent Object. You cannot know to a high confidence anything about them in that state. Give it a few years.

Don't make decisions you can't undo, or entangle parts of your life that will be painful to detach later.

But it's a ride worth going on. No point in living life too safely. Have fun but stay safe out there.

comment by benjamin.j.campbell (benjamin-j-campbell) · 2022-07-02T09:30:37.104Z

It's worse than that. I've been aware of this since I was a teenager, but apparently there's no amount of correction that's enough. These days I try to avoid making decisions that will be affected in either direction by limerence, or I pre-commit firmly to a course of action and then trust that even if I want to update the plan, I'm going to regret not doing what I pre-committed to earlier.

comment by oneisnotprime · 2022-07-02T01:59:42.892Z

Spoilers for the film Ex Machina below.

Ex Machina made this point the critical failure of humanity to contain AGI. It was an original spin, given that Hollywood typically depicts androids' lack of emotional capacity as their main weak point. However, it made the same mistake as Jurassic Park and all other "evil AGI conquers the planet" films (Terminator, Colossus, War Games) in that no reasonable safety precautions are taken.

Personally, I would like to see (and I'm trying to write) a story where intelligent and capable researchers make significant efforts to contain AGI (and likely still fail).

↑ comment by Adam Zerner (adamzerner) · 2022-07-05T05:25:40.366Z

Note: You can insert spoiler protections by following the instructions here.

comment by Flavius Justinianus · 2022-07-01T20:15:45.273Z

Yeah lol. Not only do our brains say "no fuck you, you don't get to work on rockets" they say "fuck you, you don't even get to not fall in love with this person who is probably terrible for you in lieu of someone more objectively compatible." Hence the sky-high marriage rate despite everyone knowing the divorce rate.

↑ comment by ponkaloupe · 2022-07-02T00:28:00.223Z

Not only do our brains say "no fuck you, you don't get to work on rockets"

getting yourself to somewhere on this curve which is not the far left but also not too far to the right can be unbelievably productive. there’s a certain type of infatuation which drives one to show off their achievements, which in turn requires one to make achievements. building a rocket, and inventiveness in general, is a decently high status thing: you may experience a greater drive to actually do these things during a certain period of infatuation.

↑ comment by Raemon · 2022-07-02T00:35:58.292Z

I do agree with this. Precarious, tho.

↑ comment by Elizabeth (pktechgirl) · 2022-07-02T02:24:55.219Z

↑ comment by Flavius Justinianus · 2022-07-02T03:14:45.590Z

"you may experience a greater drive to actually do these things during a certain period of infatuation."

If it's not logistically incompatible with the real strong feelz, sure.

↑ comment by hairyfigment · 2022-07-01T20:52:26.570Z

I will say that not everything which ends is a mistake, but that should not be taken to endorse having children - you're already pregnant, aren't you.

comment by bhishma (NomadicSecondOrderLogic) · 2022-07-03T10:09:08.150Z

Alas, being slightly over the subtly-warped-judgment line is like taking one drink – sure it only impairs your judgment a little, but one of the things you might do with slightly impaired judgment is to take another drink. (Or, say, foster more emotional closeness with someone who you wouldn’t endorse eventually having sex with.)

This is sort of the situation where you need to erect Schelling fences.