Comments

Strong upvote from me, this is a huge issue in my life and I'm sure in many others'. Any mental stuff aside, it seems the brain has strong control systems around not wanting to do too much of stuff it doesn't like, and wanting more of stuff it can't get enough of.

One thing I've always wondered about is how a person's affinities and frustrations are made. Why do some people love to write, so much so that time for writing just appears without conscious effort, whereas others find it a grinding chore they can't wait to be done with? What makes some people feel a calling to be, say, a veterinarian or a plumber? Why do most people dislike exercise but a few really love it and couldn't make themselves stop if they tried?

If we can figure this out, maybe we can figure out how to move stuff between categories. Rather than trying to learn how to live with the suck, maybe we can find ways to make stuff not suck, as much as we can, at least.

Sorry, yes, this is what I was also getting at, that the joke has basis in reality. My comment was not worded very well.

I mean, it's a joke for a reason. SpongeBob had its "daring escape through the perfume department" gag, too.

But somehow it feels wrong to just talk to yourself out loud.

Haha, yeah, totally. Uh... I never talk to myself for extended periods while pacing up and down my apartment... or workplace. Nope, never.

(it helps me think better if I do it out loud. Sorry to anyone who I give off insane vibes to!)

The concept of one-shotting psychological and mental issues is quite intriguing, I must admit. I really do think there's a sizable blindspot around actual solutions for tons of mental issues, and not because I think people are faking or lying about wanting to change. I do it myself, even; when I think about trying to make my life better, I often get caught up in the absurdities of my mind and what it does, and that System-1-FEELS like the correct place to start.

For some stuff, I do think there can be one-neat-trick style fixes, too, but that they're often quite out of left field and hard to synthesize on one's own, which does rather suck. The "and?" example you have above fits that quite well, it's not something someone would ordinarily say (well, out loud, the fear of coming off as rude will stop a lot of people).

Speaking of, maybe one of the biggest obstacles here is making the other person feel heard and understood and not dismissed? You seem to do quite well per your accounts, but man alive I'd say probably >99.5% of Internet discussions I read that involve a) two sides and b) any level of distrust devolve into pointless arguing about what was said, meant, heard, and felt. A real shame, and I think this can come up a lot in therapeutic environments, too, where the therapist knows what they want to say but must not say it because the patient needs to realize it for themselves via gentle questioning and guiding. Communication is really hard and no one's intended message ever makes it 100% over (see https://thezvi.substack.com/p/just-saying-what-you-mean-is-impossible).

Why, the Practical tag (https://www.lesswrong.com/w/practical) has a lot of cool stuff like this.

My opinion is that whatever value of epsilon you pick should be low enough such that it never happens once in your life. "I flipped a coin but it doesn't actually exist" should never happen. Maybe it would happen if you lived for millions of years, but in a normal human lifespan, never once.
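
To put rough numbers on "never once in a lifetime", here is a minimal sketch. It assumes the event gets `n_events` independent chances to happen, each with probability `epsilon`; both names and the example figures are mine, purely for illustration.

```python
import math


def prob_at_least_once(epsilon: float, n_events: int) -> float:
    """Chance that an event with per-trial probability epsilon happens
    at least once in n_events independent trials: 1 - (1 - epsilon)**n."""
    # log1p/expm1 keep precision when epsilon is extremely small.
    return -math.expm1(n_events * math.log1p(-epsilon))


def max_epsilon(target: float, n_events: int) -> float:
    """Largest per-trial probability that keeps the lifetime chance of
    ever seeing the event at or below target."""
    return -math.expm1(math.log1p(-target) / n_events)


# Hypothetical figures: a million "coin flips" in a lifetime, and at most
# a 1% chance of ever seeing the impossible-seeming outcome.
eps = max_epsilon(target=0.01, n_events=10**6)
print(eps, prob_at_least_once(eps, 10**6))
```

For small values the lifetime chance is roughly `n_events * epsilon`, so epsilon has to sit well below one over the number of chances you get.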

She then proceeded to sock puppet it in mock dialogue to the student next to her.

AAAAAAAAAAAAAAAAA

...

Uh, to contribute something useful: good piece! I love the idea of aiming for any goal in a broader direction. Landing even close to an idealized "perfect goal" is probably still OOMs better than trying for the perfect goal, failing, and going "eh, well, guess I'll lay bricks for 40 years".

I also like the section on intrinsic motivation - describing it as "all the things you find yourself doing if left to your own devices". I do fear that, for many, this category contains things that can't be used to support you, though, but then your diagram showing the dots outside the bounds of "video games" but pointing back to it I think nicely resolves that conundrum.

And when all else fails, apply random search.

Hell yes. Speaking from my own experience here, whatever you do, don't get stuck. Random search if you have to, but if you're unhappy, keep moving.

Ah, I think I see what you're pointing at. You're afraid we might be falling prey to the streetlamp effect, thinking that some quality specifically about Western diets is causing obesity, and restricting our thoughts if we accept that as true. I agree, and it's pretty terrifying how little we know and how much conflicting data there is out there about the causes of obesity.

It might very well be that the true cause is outside of the Western diet and has little to do with it, and I could definitely see that being true given how much we've spent and how little we've gotten for research taking the Western diet connection for granted.

Sure, I broadly agree, and I do prefer that people are living longer, even obese, than they would be with severe and long-term malnutrition. I think what you're saying here is "the modern Western diet provides a benefit in that it turns what would have been fatalities by malnutrition into survival with obesity", but please correct me if I'm wrong.

Basically, it is good - very good, one of the greatest human accomplishments - that we have been able to roll back so much suffering from starvation and malnutrition. I think, though, that we can address obesity while also avoiding a return to the days of malnutrition.

Or, in other words, there are three tiers, each better than the last:

- Planes get shot down and pilots die

- Planes get riddled with bullets but return safely

- Planes don't get damaged and pilots can complete mission

We would still have to explain the downsides of obesity, and not just in the long-term health effects like heart disease or diabetes risks, but in the everyday life of having to carry around so much extra weight.

Despite that, I'd still agree that being overweight is better than being underweight.

The visual techniques of TV—cuts, zooms, pans, and sudden noises—all activate the orient response.

Anecdote, but this form of rapid cutting is most assuredly alive and well. I saw a promotional ad for an upcoming MLB baseball game on TBS. In a mere 25 seconds, I counted over 35 different cuts, cuts between players, cuts between people in the studio, cut after cut after cut. It was strangely exhausting.

The thing about Newcomb's problem for me was always the distribution between the two boxes, one being $1,000,000 and the other being $1,000. I'd rather not risk losing $999,000 for a chance at an extra $1,000! I could just one-box for real, take the million, then put it in an index fund and wait for it to go up by 0.1%.

I do understand that the question really comes into play when the amounts vary and Omega's success rate is lower - if I could one-box for $500 and two-box for $1,500 total and Omega is wrong 25% of the time observed, that would be a different play.
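
A quick sketch of the arithmetic, under some assumptions of mine: the function names are made up, the transparent box holds $1,000 in both versions (which is what "$500 one-box, $1,500 two-box" implies), and Omega's hit rate is treated as the chance its prediction matches whatever you actually pick. That last part is the evidential-style calculation; a causal decision theorist would set it up differently.

```python
def expected_values(opaque: float, transparent: float, accuracy: float):
    """Return (one_box_ev, two_box_ev) when Omega predicts your actual
    choice correctly with probability `accuracy`."""
    # One-boxing pays out only if Omega correctly foresaw it.
    one_box = accuracy * opaque
    # Two-boxing always gets the transparent box, plus the opaque one
    # in the cases where Omega wrongly expected a one-boxer.
    two_box = accuracy * transparent + (1 - accuracy) * (opaque + transparent)
    return one_box, two_box


def break_even_accuracy(opaque: float, transparent: float) -> float:
    """Accuracy above which one-boxing has the higher expected value."""
    return (opaque + transparent) / (2 * opaque)


print(expected_values(1_000_000, 1_000, 0.75))  # (750000.0, 251000.0)
print(expected_values(500, 1_000, 0.75))        # (375.0, 1125.0)
print(break_even_accuracy(500, 1_000))          # 1.5
```

With the classic amounts, one-boxing comes out ahead as soon as Omega is right a bit more than half the time. With $500 in the opaque box, the break-even accuracy is above 100%, so on this calculation two-boxing wins no matter how good Omega is.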

I don’t want to spend ten years figuring this out.

A driving factor in my own philosophy around figuring out what to do with my life. Some people spend decades doing or living with something they don't like, even when it's trivially correctable, like living with a cluttered mess for years on end instead of spending one weekend cleaning up the basement.

Hmm. My family and I always let the ice cream sit for about 10 to 15 minutes to let it soften first. Interesting to see the wide range of opinions, wasn't even aware that wasn't a thing.

My thinking is that the more discussed threads would have more value to the user. Small threads with 1 or 2 replies are more likely to be people pointing out typos or just saying +1 to a particular passage.

Of course, there is a spectrum - deeply discussed threads are more likely to be angry back-and-forths that aren't very valuable.

Ooh, nice. I've been wanting this kind of discussion software for a while. I do have a suggestion: maybe, when hovering over a highlighted passage, you could get some kind of indicator of how many child comments are under that section, and/or change the highlight contrast for threads that have more children, so we can tell which branches of the discussion got the most attention.

Noted, thank you. This does raise my confidence in Alcor.

This doesn't really raise my confidence in Alcor, an organization that's supposed to keep bodies preserved for decades or centuries.

I can kind of see the original meme's point in the extremes. Consider a mechanic shop that has had very, very slow business for months and is in serious financial trouble. I can see the owners Moloching their way into "suggesting" that their technicians maybe don't fix it all the way. After all, what's the harm in having a few customers come back a little more often if it means maybe saving the business?

But this is only on the extremes.

Noted, thank you.

Here's Duncan Sabien describing the experience of honing down on a particular felt sense

I'm confused - the original author seems to be Connor Morton?

I mean, sure, but that does kinda answer the question in the question - "if event X happens, should you believe that event X is possible?" Well, yes, because it happened. I guess, in that case, the question could be more measuring something like "I, a Rationalist, would not believe in ghosts because that would lower my status in the Rationalist community, despite seeing strong evidence for it"

Sort of like asking "are you a Rationalist or are you just saying so for status points?"

I kinda disagree - if you see ghosts, almost all the probability space should be moving to "I am hallucinating".

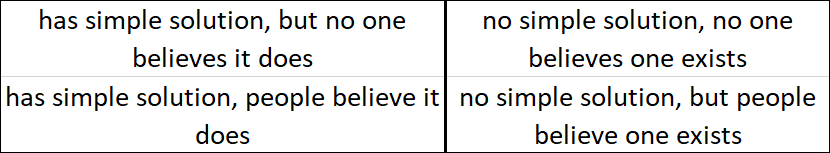

Fair! That's a simple if not easy solution, definitely bottom-left quadrant instead of bottom-right!

Likely true. The sorts of problems I was thinking about for the razor are ones that have had simple solutions for a very long time - walking, talking, sending electrical current from one place to another, illuminating spaces, stuff like that.

Perhaps a 2x2 grid would be helpful?

I feel like this post is standing against the top-left quadrant and would prefer everyone to move to the bottom-left quadrant, which I agree with. My concern is the people in the bottom-right quadrant, which I don't believe lukehmiles is in, but I fear they may use this post as fuel for their belief - i.e. "depression is easy, you attention-seeking loser! just stop being sad, it's a solved problem!"

Yes, so long as one can tell the difference between a problem that is solved (construction, microprocessor design, etc.) and one that is not ("depressed? just stop being sad, it's easy")

Also, we might apply an unnamed razor: If a problem has a simple solution, everyone would already be doing it.

I broadly agree, but I think it's worth it to learn to distinguish scenarios where a simple solution is known from ones where it is not. We have, say, building design and construction down pat, but AGI alignment? A solid cure for many illnesses? The obesity crisis? No simple solution is currently known.

Pretty good overall. My favorite posts are about theories of the human mind that help me build a model of my own mind and the minds of others, especially in how it can go wrong (mental illness, ADHD, etc.)

The AI stuff is way over my head, to the point where my brain just bounces off of the titles alone, but that's fine - not everything is for everyone. Also, reading the acronyms EDT and CDT always makes me think of the timezones, not the decision theories.

About the only complaint I have is that the comments can get pretty dense and recursively meta, which can be a bit hard to follow. Zvi will occasionally talk about a survey of AI safety experts giving predictions about stuff and it just feels like a person talking about people talking about predictions about risks associated with AI. But this is more of a me thing and probably people who can keep up find these things very useful.

This post demonstrates another surface of the important interplay between our "logical" (really just verbal) part-of-mind and our emotional part-of-mind. Other posts on this site, including by Kaj Sotala and Valentine, go into this interplay and how our rationality is affected by it.

It's important to note, both for ourselves and for our relationships with others, that the emotional part is not something that can be dismissed or fought with, and I think this post does well in explaining an important facet of that. Plus, when we're shown the possible pitfalls ahead of any limerence, we can be more aware of it when we do fall in love, which is always nice.

My review mostly concerns the part of this post about SMTM's A Chemical Hunger. RaDVaC was interesting if not particularly useful, but SMTM's series has been noted by many commenters to be a strange theory, possibly damaging, and there was, as of my last check, no response by SMTM to the various rebuttals.

It does not behoove rationalism to have members that do not respond to critical looks at their theories. They stand to do a lot of damage and cost a lot of lives if taken seriously.

Oh, yes, true. However, I still maintain that particularly jerkish people would be happy to misgender in that manner as they'd think that the only good gender is male or somesuch nonsense.

Counterpoint: it could also be because the speaker thinks male is default and automatically thinks of an unknown person as male.

20. Memetic Razor: If you hear news "through the grapevine" or see something on the "popular" feeds of social media, it has likely traveled a long journey of memetic selection to get to you, and is almost certainly modified from the original.

I notice I feel some opposition to this, mostly on the grounds that messing with nature tends to end rather poorly for us. Nature is trillions of deeply interconnected dimensions doing who-knows-what at every layer; there is a small chance that release of these gene drives could be an x-risk. So do we take a guaranteed 600,000 dead every year, or an x% chance of accidentally wiping out all life on Earth? What value of x is acceptably low?

This is good stuff, thank you. I think these are all good ways to avoid the trap of letting others decide your goals for you, and I like the idea of continuously changing your goals if you find they aren't working/have been Goodharted/etc.

Good catch, didn't think of that. Definitely seems like peer pressure is a better way to change minds rather than one-on-one. This is still parasitism, though - I don't know if I'd trust most people to form a group to hold me accountable for changes in my behavior. Seems too easy for them to, intentionally or not, shape my request into ways that benefit them.

For example, I might form a group to help me lose weight. I care very much about my physical wellbeing and reducing discomfort, but they might care more about my ugly appearance and assume that's what I'm going for, too. Worse yet, my discomfort is invisible to them, and my ugliness in their eyes is invisible to me!

Certainly not an insurmountable obstacle, of course, but one to be aware of.

EDIT: I read your paragraph on cults and then completely ignored it when writing my response. Of course you know that peer pressure can be bad, you said it yourself. My mistake.

I do have this feeling from time to time. Some stuff that's helped me:

- Simplify, simplify, simplify. Do your chores with as little effort as possible, per https://mindingourway.com/half-assing-it-with-everything-youve-got/. Buy stuff that does your work for you. Your goal is to complete your chores, not to do work; work is in service to the goal, not the goal itself.

- Success by default. Make the easiest, lowest-energy, laziest way to do the thing also the right way. Have exactly one clothes hamper for dirty clothes. Buy clothes that don't need ironing. Put decorative items on unused surfaces so you don't put random junk on them.

- Suggested to me by ACX commenters: random rewards. Pick something you like (chocolates, kisses from your wife, etc.) and set up a timer that goes off at a randomly selected interval which entitles you to that reward. The randomness might help your brain to associate chores with better feelings.

- Make it satisfying: Power-washers for big jobs, steam cleaners, compressed air, powered dish brushes. There's a lot of cleaning content on /r/oddlysatisfying for a reason.
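
For the random rewards idea above, here's a minimal sketch of how you could generate the schedule. The function name, the exponential gaps, and the example numbers are all my own assumptions; any way of picking unpredictable intervals would do.

```python
import random


def reward_schedule(total_minutes: float, mean_gap_minutes: float, seed=None):
    """Return the minutes-from-start at which a reward is earned during a
    chore session, with unpredictable gaps between rewards."""
    rng = random.Random(seed)
    times = []
    # Exponentially distributed gaps: the next reward is equally likely
    # to arrive at any moment, so you can't anticipate it.
    t = rng.expovariate(1 / mean_gap_minutes)
    while t < total_minutes:
        times.append(round(t, 1))
        t += rng.expovariate(1 / mean_gap_minutes)
    return times


# Hypothetical usage: an hour of chores, one reward every 10 minutes on average.
print(reward_schedule(total_minutes=60, mean_gap_minutes=10))
```

Feed the times into whatever timer app you like. Some sessions will come up empty and some will have several rewards, which is kind of the point.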

This next one's a lot more speculative, feel free to completely disregard. Seriously, I'm suggesting the following because it works for me, but my anxiety response might be way less than yours is and my method might be totally useless for that.

Anyway, it sounds like you have a strong anxiety response when either doing chores or thinking about them. I had a similar issue, and my strategy was flooding, exposure therapy turned up to eleven. Do not recommend.

My real suggestion, when you feel these racing thoughts and fast breathing, is to stop the chore for a moment and stand there. Don't try to distract yourself, because the focus is, for a moment, now on the anxiety response itself.

For me, anxiety is a series of waves of intense physical symptoms like fast heartbeat and breathlessness. There's definitely a sense of "here it comes!" before each next wave. One thing that helped me was realizing that, while the anxiety response can be quite long-lived, each wave was only 10-15 seconds long. Noticing this gave me strength; if I make it through this wave, I'll have a few minutes to work with the anxiety before the next. This may not be true for you, though.

You say that the endless nature of these chores terrifies you. In this moment of standing still, your goal is to dig a bit deeper, to try to ask "why does the fact that these chores are endless scare me so much?"

Possible answers could be:

- Endless chores scare me because they'll take up a huge chunk of my finite life.

- Endless chores scare me because every time I finish them, I have to do them again soon; all my previous work has amounted to nothing.

- Endless chores scare me because my thoughts race and my breathing speeds up.

Or something else entirely! It might take a while to get a good answer, and you might have to think through many of them until one seems to fit.

All this might sound very familiar; you say you're taking anxiety medication, but you may also have heard all this through therapy (Cognitive Behavioral Therapy) or meditation. Again, my advice works for me but may not work for you! Please don't feel bad if this doesn't apply!

Not a full answer, but Kaj Sotala's Multiagent Models of Mind (https://www.lesswrong.com/s/ZbmRyDN8TCpBTZSip) is a great sequence that introduces some of these concepts.

Cool, thank you!

Oh HELL yeah. I tried Metaculus's private predictions for this, but they needed just as much detail as the public ones did, at least in terms of "this field is required". They seem to be aiming more for the superforecaster/people who actually give their predictions some thought camp, which is perfectly fine, but not suited for me, who just wants something quick and simple.

Signup was easy, I love how it watches for dates in the question and automatically sets them in the resolve field. Posting a comment containing a link by itself (https://www.cnbc.com/2023/04/24/bitcoin-btc-price-could-hit-100000-by-end-2024-standard-chartered.html) on one of my private predictions seems to have posted a blank comment, though. (p.s. my odds on 1 BTC = $100,000 are about 15%)

Absolutely love this one. I've already migrated my (admittedly few) existing predictions over. Thanks for this!

EDIT: "Each day I’ll write down whether I want to leave or stay in my job. After 2 months, will I have chosen ‘leave’ on >30 days?" is such a good suggested question, both to demonstrate details about the site and also to get people to think about what prediction can do for them, personally! And I love how you can just click the suggestion and have it auto-populate into the title field.

That's actually one I wanted to link but I just could not remember the title for the life of me. Thanks!

Sounds about right! Thanks for these links, I look forward to reading them. Pulling sideways is an underappreciated life skill - sometimes you have to question the playing field, not just the game.

This is why it is important that the 'spirit of cricket' is never properly codified into laws. If it was, then players would simply game the rules and find the most successful strategy that operates within the laws of the game and the process would be Goodharted.

This is a fascinating take! Ambiguity, and things different people see differently, as a defense against Moloch and Goodhart. I think there are a lot of people in this community, myself very much included, who don't like ambiguity and would prefer if everything had a solid, clear, objective answer.

I'd say kind of... you definitely have to keep your attention and wits about you on the road, but if you're relying on anxiety and unease to help you drive, you're probably actually doing a bit worse than optimal safety - too quick to assume that something bad will happen, likely to overcorrect and possibly cause a crash.

I'm afraid I don't have the time for a full writeup, but the Stack Exchange community went through a similar problem: should the site have a place to discuss the site? Jeff Atwood, cofounder, said [no](https://blog.codinghorror.com/meta-is-murder/) initially, but the community wanted a site-to-discuss-the-site so badly, they considered even a lowly phpBB instance. Atwood eventually [realized he was wrong](https://blog.codinghorror.com/listen-to-your-community-but-dont-let-them-tell-you-what-to-do/) and endorsed the concept of Meta StackExchange.

I think if you model things as just "an internet community" this will give you the wrong intuitions.

This, plus Vaniver's comment, has made me update. Some of what LW has been doing is pretty confusing if you look at it as a traditional Internet community, but makes more sense if you look at it as a professional community, perhaps akin to many of the academic pursuits of science and high-level mathematics. The high dollar figures quoted in many posts confused me until now.

Yeah, that does seem like what LW wants to be, and I have no problem with that. A payout like this doesn't really fit neatly into my categories of what money paid to a person is for, and that may be on my assumptions more than anything else. Said could be hired, contracted, paid for a service he provides or a product he creates, paid for the rights to something he's made, paid to settle a legal issue... the idea of a payout to change part of his behavior around commenting on LW posts was just, as noted on my reply to habryka, extremely surprising.

The amount of moderator time spent on this issue is both very large and sad, I agree, but I think it causes really bad incentives to offer money to users with whom moderation has a problem. Even if only offered to users in good standing over the course of many years, that still represents a pretty big payday if you can play your cards right and annoy people just enough to fall in the middle between "good user" and "ban".

I guess I'm having trouble seeing how LW is more than a (good!) Internet forum. The Internet forums I'm familiar with would have just suspended or banned Said long, long ago (maybe Duncan, too, I don't know).

I do want to note that my problem isn't with offering Said money - any offer to any user of any Internet community feels... extremely surprising to me. Now, if you were contracting a user to write stuff on your behalf, sure, that's contracting and not unusual. I'm not even necessarily offended by such an offer, just, again, extremely surprised.

by offering him like $10k-$100k to change his commenting style or to comment less in certain contexts

What other community on the entire Internet would offer 5 to 6 figures to any user in exchange for cleaning up some of their behavior?

how is this even a reasonable-

Isn't this community close in idea terms to Effective Altruism? Wouldn't it be better to say "Said, if you change your commenting habits in the manner we prescribe, we'll donate $10k-$100k to a charity of your choice?"

I can't believe there's a community where, even for a second, having a specific kind of disagreement with the moderators and community (while also being a long-time contributor) results in considering a possibly-six-figure buyout. I've been a member of other sites with users who were both a) long-standing contributors and b) difficult to deal with in moderation terms, and the idea of any sort of payout, even $1, would never have come up.