Open Thread Spring 2024

post by habryka (habryka4) · 2024-03-11T19:17:23.833Z · LW · GW · 160 comments


If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library [? · GW], checking recent Curated posts [? · GW], seeing if there are any meetups in your area [? · GW], and checking out the Getting Started [? · GW] section of the LessWrong FAQ [? · GW]. If you want to orient to the content on the site, you can also check out the Concepts section [? · GW].

The Open Thread tag is here [? · GW]. The Open Thread sequence is here [? · GW].

160 comments

Comments sorted by top scores.

comment by Linch · 2024-05-20T21:38:55.464Z · LW(p) · GW(p)

Do we know if @paulfchristiano [LW · GW] or other ex-lab people working on AI policy have non-disparagement agreements with OpenAI or other AI companies? I know Cullen [EA(p) · GW(p)] doesn't, but I don't know about anybody else.

I know NIST isn't a regulatory body, but it still seems like standards-setting should be done by people who have no unusual legal obligations. And of course, some other people are or will be working at regulatory bodies, which may have more teeth in the future.

To be clear, I want to differentiate between Non-Disclosure Agreements, which are perfectly sane and reasonable in at least a limited form as a way to prevent leaking trade secrets, and non-disparagement agreements, which prevent you from saying bad things about past employers. The latter seems clearly bad to have for anybody in a position to affect policy. Doubly so if the existence of the non-disparagement agreement itself is secretive.

↑ comment by gwern · 2024-05-21T02:24:27.165Z · LW(p) · GW(p)

Sam Altman appears to have been using non-disparagements at least as far back as 2017-2018, even for things that really don't seem to have needed such things at all, like a research nonprofit arm of YC.* It's unclear if that example is also a lifetime non-disparagement (I've asked), but nevertheless, given that track record, you should assume the OA research non-profit also tried to impose it, followed by the OA LLC (obviously), and so Paul F. Christiano (and all of the Anthropic founders) would presumably be bound.

This would explain why Anthropic executives never say anything bad about OA, and refuse to explain exactly what broken promises by Altman triggered their failed attempt to remove Altman and subsequent exodus.

(I have also asked Sam Altman on Twitter, since he follows me, apropos of his vested equity statement, how far back these NDAs go, if the Anthropic founders are still bound, and if they are, whether they will be unbound.)

* Note that Elon Musk's SpaceX does the same thing and is even worse because they will cancel your shares after you leave for half a year, and if they get mad at you after that expires, they may simply lock you out of tender offers indefinitely - which is not much different inasmuch as SpaceX may never IPO. Given the close connections there, Musk may be where Altman picked this bag of tricks up.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-05-21T02:35:06.961Z · LW(p) · GW(p)

It seems really quite bad for Paul to work in the U.S. government on AI legislation without having disclosed that he is under a non-disparagement clause for the biggest entity in the space the regulator he is working at is regulating. And if he signed an NDA that prevents him from disclosing it, then it was IMO his job to not accept a position in which such a disclosure would obviously be required.

I am currently like 50% Paul has indeed signed such a lifetime non-disparagement agreement, so I do think I don't buy that a "presumably" is appropriate here (though I am not that far away from it).

Replies from: gwern, Linch, tao-lin↑ comment by gwern · 2024-05-21T03:26:19.415Z · LW(p) · GW(p)

It would be bad, I agree. (An NDA about what he worked on at OA, sure, but then being required to never say anything bad about OA forever, as a regulator who will be running evaluations etc...?) Fortunately, this is one of those rare situations where it is probably enough for Paul to simply say his OA NDA does not cover that - then either it doesn't and can't be a problem, or he has violated the NDA's gag order by talking about it and when OA then fails to sue him to enforce it, the NDA becomes moot.

↑ comment by Linch · 2024-05-21T19:55:39.984Z · LW(p) · GW(p)

At the very least I hope he disclosed it to the gov't (and then it was voided at least in internal government communications, I don't know how the law works here), though I'd personally want it to be voided completely or at least widely communicated to the public as well.

↑ comment by Tao Lin (tao-lin) · 2024-05-23T18:11:50.391Z · LW(p) · GW(p)

lol Paul is a very non-disparaging person. He always makes his criticism constructive, i don't know if there's any public evidence of him disparaging anyone regardless of NDAs

Replies from: ryan_greenblatt, Linch↑ comment by ryan_greenblatt · 2024-05-23T19:38:56.769Z · LW(p) · GW(p)

Examples of maybe disparagement:

↑ comment by Orpheus16 (akash-wasil) · 2024-05-21T12:53:11.486Z · LW(p) · GW(p)

+1. Also curious about Jade Leung (formerly OpenAI) – she's currently the CTO for the UK AI Safety Institute. Also Geoffrey Irving (formerly DeepMind), who is a research director at the UKAISI.

Replies from: Linch↑ comment by Linch · 2024-05-21T19:58:37.260Z · LW(p) · GW(p)

Geoffrey Irving was one of the first people to publicly say some very aggressive + hard-to-verify things about Sam Altman during the November board fiasco, so hopefully this means he's not bound (or doesn't feel bound) by a very restrictive non-disparagement agreement.

Replies from: akash-wasil↑ comment by Orpheus16 (akash-wasil) · 2024-05-21T20:02:27.550Z · LW(p) · GW(p)

Great point! (Also oops– I forgot that Irving was formerly OpenAI as well. He worked for DeepMind in recent years, but before that he worked at OpenAI and Google Brain.)

Do we have any evidence that DeepMind or Anthropic definitely do not do non-disparagement agreements? (If so then we can just focus on former OpenAI employees.)

↑ comment by Garrett Baker (D0TheMath) · 2024-05-29T02:52:52.966Z · LW(p) · GW(p)

comment by atergitna (greta-goodwin) · 2024-04-03T19:29:17.991Z · LW(p) · GW(p)

Hi! I have been lurking here for over a year but I've been too shy to participate until now. I'm 14, and I've been homeschooled all my life. I like math and physics and psychology, and I've learned lots of interesting things here. I really enjoyed reading the sequences last year. I've also been to some meetups in my city and the people there (despite – or maybe because of – being twice my age) are very cool. Thank you all for existing!

Replies from: nim, kave↑ comment by nim · 2024-05-06T20:09:25.273Z · LW(p) · GW(p)

hey, welcome! Congrats on de-lurking, I think? I fondly remember my own teenage years of lurking online -- one certainly learns a lot about the human condition.

If I was sending my 14-year-old self a time capsule of LW, it'd start with the sequences, and beyond that I'd emphasize the writings of adults examining how their own cognition works. Two reasons -- first, being aware that one is living in a brain as it finishes wiring itself together is super entertaining if you're into that kind of thing, and even more fun when you have better data to guess how it's going to end up. (I got the gist of that from having well-educated and openminded parents, who explained that it's prudent to hold off on recreational drug use until one's brain is entirely done with being a kid, because most recreational substances make one's brain temporarily more childlike in some way and the real thing is better. Now I'm in my 30s and can confirm that's how such things, including alcohol, have worked for me)

Second, my 20s would have been much better if someone had taken kid-me aside and explained some neurodiversity stuff to her: "here's the range of normal, here's the degree of suffering that's not expected nor normal and is worth consulting a professional for even if you're managing through great effort to keep it together", etc.

If you'd like to capitalize on your age for some free internet karma, I would personally enjoy reading your thoughts on what your peers think of technology, how they get their information, and how you're all updating the language at the moment.

I also wish that my 14-year-old self had paid more attention to the musical trends and attempted to guess which music that was popular while I was of high school age would stand the test of time and remain on the radio over the subsequent decades. In retrospect, I'm pretty sure I could probably have taken some decent guesses, but I didn't so now I'll never know whether I would have guessed right :)

Replies from: greta-goodwin↑ comment by atergitna (greta-goodwin) · 2024-06-05T22:20:04.940Z · LW(p) · GW(p)

I really don't know much about popular music, but I'm guessing that music from video games is getting more popular now, because when I ask people what they are humming they usually say it's a song from a game. But maybe those songs are just more humm-able, and the songs that I hear people humming are not a representative sample of all the songs that they listen to.

About updating the language, if you mean the abbreviations and phrases that people use in texts, I think that people do it so that they don't sound overly formal. Sometimes writing a complete sentence in a text would be like speaking to a friend in rhyming verses.

I think the kids I know get most of their information (and opinions) from their parents, and a few things from other places to make them feel grown up (I do this too). I think that because I often hear a friend saying something and then I find out later that that is what their parents think.

(sorry it took me so long to respond)

comment by CronoDAS · 2024-04-27T20:26:03.471Z · LW(p) · GW(p)

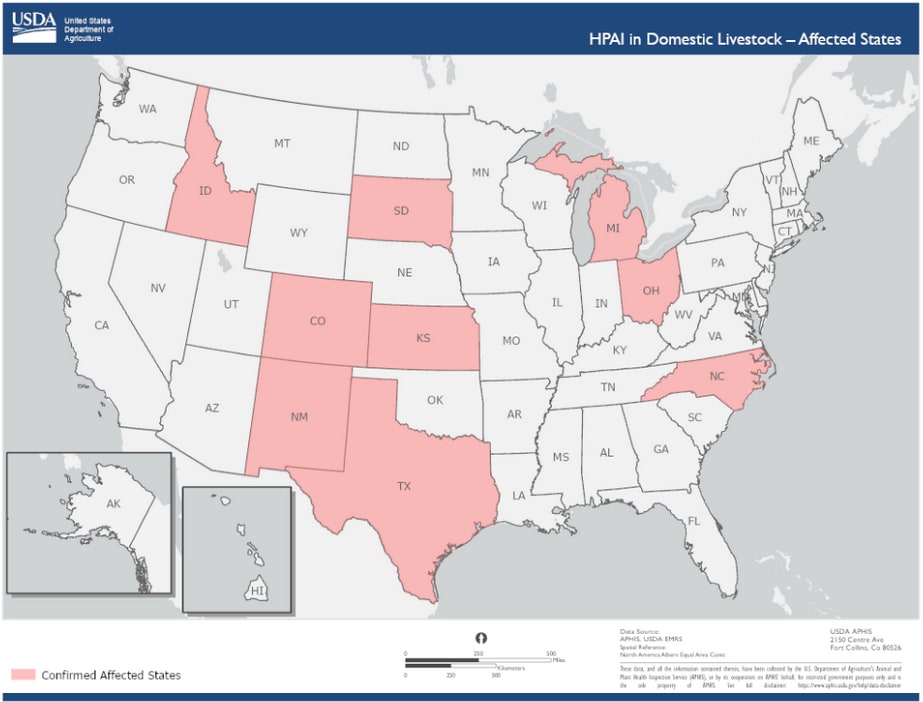

H5N1 has spread to cows. Should I be worried?

Replies from: JenniferRM, wilkox↑ comment by JenniferRM · 2024-05-22T23:44:47.531Z · LW(p) · GW(p)

As of May 16, 2024 an easily findable USDA/CDC report says that widely dispersed cow herds are being detectably infected.

So far, that I can find reports of, only one human dairy worker has been detected as having an eye infection.

I saw a link to a report on twitter from an enterprising journalist who claimed to have gotten some milk directly from small local farms in Texas, and the first lab she tried refused to test it. They asked the farms. The farms said no. The labs were happy to go with this!

So, the data I've been able to get so far is consistent with many possibly real worlds.

The worst plausible world would involve a jump to humans, undetected for quite a while, allowing time for adaptive evolution, and an "influenza normal" attack rate of 5% -10% for adults and ~30% for kids, and an "avian flu plausible" mortality rate of 56%(??) (but maybe not until this winter when cold weather causes lots of enclosed air sharing?) which implies that by June of 2025 maybe half a billion people (~= 7B*0.12*0.56) will be dead???
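The back-of-envelope figure in that worst case can be reproduced directly. A minimal sketch in Python, using only the numbers stated in the comment (7 billion people, a 12% blended attack rate, 56% mortality); the variable names are illustrative, and the 12% is taken as given rather than derived from the adult and child rates:

```python
# Worst-case estimate from the comment: population * attack rate * mortality.
# All three inputs are the comment's own figures; the names are illustrative.
population = 7_000_000_000   # "7B"
attack_rate = 0.12           # blended attack rate used in the comment
mortality = 0.56             # "avian flu plausible" mortality rate

deaths = population * attack_rate * mortality
print(f"{deaths / 1e6:.0f} million")  # roughly half a billion
```

The product comes to about 470 million, which is where "maybe half a billion" comes from. The result scales linearly with each input, so halving either rate halves the estimate.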

But probably not, for a variety of reasons.

However, I sure hope that the (half imaginary?) Administrators who would hypothetically exist in some bureaucracy somewhere (if there was a benevolent and competent government) have noticed that paying two or three people $100k each to make lots of phone calls and do real math (and check each other's math) and invoke various kinds of legal authority to track down the real facts and ensure that nothing that bad happens is a no-brainer in terms of EV.

Replies from: JenniferRM↑ comment by JenniferRM · 2024-05-23T14:59:31.075Z · LW(p) · GW(p)

Also, there's now a second detected human case, this one in Michigan instead of Texas.

Both had a surprising-to-me "pinkeye" symptom profile. Weird!

The dairy worker in Michigan had various "compartments" tested and their nasal compartment (and people they lived with) were all negative. Hopeful?

Apparently and also hopefully this virus is NOT freakishly good at infecting humans and also weirdly many other animals (like covid was with human ACE2, in precisely the ways people have talked about when discussing gain-of-function in years prior to covid).

If we're being foolishly mechanical in our inferences "n=2 with 2 survivors" could get rule of succession treatment. In that case we pseudocount 1 for each category of interest (hence if n=0 we say 50% survival chance based on nothing but pseudocounts), and now we have 3 survivors (2 real) versus 1 dead (0 real) and guess that the worst the mortality rate here would be maybe 1/4 == 25% (?? (as an ass number)), which is pleasantly lower than overall observed base rates for avian flu mortality in humans! :-)
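The pseudocount arithmetic above is Laplace's rule of succession, (events + 1) / (trials + 2). A minimal sketch in Python, assuming the two detected human cases are the whole sample; the function name and parameters are illustrative, not from any library:

```python
from fractions import Fraction


def rule_of_succession(events: int, trials: int) -> Fraction:
    """Laplace's rule: add one pseudocount to each of the two outcomes."""
    return Fraction(events + 1, trials + 2)


# n = 0: nothing observed, so the estimate is 1/2 from pseudocounts alone.
no_data = rule_of_succession(events=0, trials=0)

# n = 2 detected cases, 0 deaths: 1 pseudo-death against 3 survivors (2 real).
mortality = rule_of_succession(events=0, trials=2)

print(no_data, mortality)  # 1/2 1/4
```

With so few cases the pseudocounts dominate the estimate, which is why the comment flags the 25% as a very rough number.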

Naive impressions: a natural virus, with pretty clear reservoirs (first birds and now dairy cows), on the maybe slightly less bad side of "potentially killing millions of people"?

I haven't heard anything about sequencing yet (hopefully in a BSL4 (or homebrew BSL5, even though official BSL5s don't exist yet), but presumably they might not bother to treat this as super dangerous by default until they verify that it is positively safe) but I also haven't personally looked for sequencing work on this new thing.

When people did very dangerous Gain-of-Function research with a cousin of this, in ferrets, over 10 years ago (causing a great uproar among some) the supporters argued that it was worth creating especially horrible diseases on purpose in labs in order to see the details, like a bunch of geeks who would Be As Gods And Know Good From Evil... and they confirmed back then that a handful of mutations separated "what we should properly fear" from "stuff that was ambient".

Four amino acid substitutions in the host receptor-binding protein hemagglutinin, and one in the polymerase complex protein basic polymerase 2, were consistently present in airborne-transmitted viruses. (same source)

It seems silly to ignore this, and let that hilariously imprudent research of old go to waste? :-)

The transmissible viruses were sensitive to the antiviral drug oseltamivir and reacted well with antisera raised against H5 influenza vaccine strains. (still the same source)

(Image sauce.)

Since some random scientists playing with equipment bought using taxpayer money already took the crazy risks back then, it would be silly to now ignore the information they bought so dearly (with such large and negative EV) back then <3

To be clear, that drug worked against something that might not even be the same thing.

All biological STEM stuff is a crapshoot. Lots and lots of stamp-collecting. Lots of guess and check. Lots of "the closest example we think we know might work like X" reasoning. Biological systems or techniques can do almost anything physically possible eventually, but each incremental improvement in repeatability (going from having to try 10 million times to get something to happen to having to try 1 million times (or going from having to try 8 times on average to 4 times on average) due to "progress" ) is kinda "as difficult as the previous increment in progress that made things an order of magnitude more repeatable".

The new flu just went from 1 to 2. I hope it never gets to 4.

comment by Mateusz Bagiński (mateusz-baginski) · 2024-03-15T13:22:43.444Z · LW(p) · GW(p)

Does anybody know what happened to Julia Galef?

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-04-06T19:26:31.022Z · LW(p) · GW(p)

The only thing I can conclude looking around for her is that she's out of the public eye. Hope she's ok, but I'd guess she's doing fine and just didn't feel like being a public figure anymore. Interested if anyone can confirm that, but if it's true I want to make sure to not pry.

comment by Anand Baburajan (anand-baburajan) · 2024-03-13T18:30:22.464Z · LW(p) · GW(p)

Hello! I'm building an open source communication tool with a one-of-a-kind UI for LessWrong kind of deep, rational discussions. The tool is called CQ2 (https://cq2.co). It has a sliding panes design with quote-level threads. There's a concept of "posts" for more serious discussions with many people and there's "chat" for less serious ones, and both of them have a UI crafted for deep discussions.

I simulated some LessWrong discussions there – they turned out to be a lot more organised and easy to follow. You can check them out in the chat channel and direct message part of the demo on the site. However, it is a bit inconvenient – there's horizontal scrolling and one needs to click to open new threads. Since forums need to prioritize convenience, I think CQ2's design isn't good for LessWrong. But I think the inconvenience is worth it for such discussions at writing-first teams, since it helps them hyper-focus on one thing at a time and avoid losing context in order to come to a conclusion and make decisions.

If you have such discussions at work, I would love to learn about your team, your frustrations with existing communication tools, and better understand how CQ2 can help! I would appreciate any feedback or leads! I feel my comment might come off as an ad, but I (and CQ2) strongly share LessWrong's "improving our reasoning and decision-making" core belief and it's open source.

I found LessWrong a few months back. It's a wonderful platform and I particularly love the clean design. I've always loved how writing forces a deeper clarity of thinking and focuses on getting to the right answer.

P.S. I had mistakenly posted this comment in the previous, old Open Thread, hence resharing here.

Replies from: papetoast, anand-baburajan, habryka4, Celarix↑ comment by papetoast · 2024-04-21T08:08:41.697Z · LW(p) · GW(p)

I just stumbled on this website: https://notes.andymatuschak.org/About_these_notes It has a similar UI but for Obsidian-like linked notes. The UI seems pretty good.

↑ comment by Anand Baburajan (anand-baburajan) · 2024-04-23T09:44:27.857Z · LW(p) · GW(p)

I like his UI. In fact, I shared about CQ2 with Andy in February since his notes site was the only other place where I had seen the sliding pane design. He said CQ2 is neat!

Replies from: papetoast↑ comment by Anand Baburajan (anand-baburajan) · 2024-03-27T05:33:18.365Z · LW(p) · GW(p)

Update: now you can create discussions on CQ2! And, here's a demo with an actual LessWrong discussion between Vanessa and Rob: https://cq2.co/demo.

↑ comment by habryka (habryka4) · 2024-03-13T20:26:47.048Z · LW(p) · GW(p)

This is cool!

Two pieces of feedback:

- I think it's quite important that I can at least see the number of responses to a comment before I have to click on the comment icon. Currently it only shows me a generic comment icon if there are any replies.

- I think one of the core use-cases of a comment UI is reading back and forth between two users. This UI currently makes that a quite disjointed operation. I think it's fine to prioritize a different UI experience, but it does feel like a big loss to me.

↑ comment by Anand Baburajan (anand-baburajan) · 2024-03-14T15:30:36.084Z · LW(p) · GW(p)

Thanks for the feedback!

I think it's quite important that I can at least see the number of responses to a comment before I have to click on the comment icon. Currently it only shows me a generic comment icon if there are any replies.

Can you share why you think it's quite important (for a work communication tool)? For a forum, I think it would make sense -- many people prefer reading the most active threads. For a work communication tool, I can't think of any reason why it would matter how many comments a thread has.

I think one of the core use-cases of a comment UI is reading back and forth between two users. This UI currently makes that a quite disjointed operation. I think it's fine to prioritize a different UI experience, but it does feel like a big loss to me.

I thought about this for quite a while and have started to realise that the "posts" UI could be too complicated. I'm going to try out the "chat" and "DMs" UI for posts and see how it goes. Thanks!

Although the "Chat" and "DMs" UI allows an easily followable back and forth between people, I would like to point out that CQ2 advocates for topic-wise discussions, not person-wise. Here's [LW(p) · GW(p)] an example comment from LessWrong. In that comment, it's almost impossible to figure out where the quotes are from -- i.e., what's the context. And what happened next is another person replied to that comment with more quotes. This example was a bit extreme with many quotes but I think my point applies to every comment with quotes. One needs to scroll person-wise through so many topics, instead of topic-wise. I (and CQ2) prefer exploring what people's thoughts are topic-by-topic, not what the thoughts are on all topics simultaneously, person-by-person.

Again, not saying my design is good for LessWrong; I understand forums have their own place. But I think for a tool for work, people would prefer topic-wise over person-wise.

Replies from: jmh, anand-baburajan↑ comment by jmh · 2024-03-15T16:46:23.935Z · LW(p) · GW(p)

My sense, regarding the desire to read the most active thread, is that the most active thread might well be amongst either the team working on some project under discussion or across teams that are involved in or impacted by some project. In such a case I would think knowing where the real discussion is taking place regarding some "corporate discussions" might be helpful and wanted.

I suppose the big question there is what about all the other high volume exchanges: are they more personality driven rather than subject/substance driven? Would the comment count just be a really noisy signal to try keying off?

↑ comment by Anand Baburajan (anand-baburajan) · 2024-03-14T17:32:08.594Z · LW(p) · GW(p)

P.S. I'm open to ideas on building this in collaboration with LessWrong!

↑ comment by Celarix · 2024-04-03T14:26:03.566Z · LW(p) · GW(p)

Ooh, nice. I've been wanting this kind of discussion software for a while. I do have a suggestion: maybe, when hovering over a highlighted passage, you could get some kind of indicator of how many child comments are under that section, and/or change the highlight contrast for threads that have more children, so we can tell which branches of the discussion got the most attention.

Replies from: anand-baburajan↑ comment by Anand Baburajan (anand-baburajan) · 2024-04-05T05:53:28.858Z · LW(p) · GW(p)

Thanks @Celarix [LW · GW]! I've got the same feedback from three people now, so seems like a good idea. However, I haven't understood why it's necessary. For a forum, I think it would make sense -- many people prefer reading the most active threads. For a discussion tool, I can't think of any reason why it would matter how many comments a thread has. Maybe the point is to let a user know if there's any progress in a thread over time, which makes sense.

Replies from: Celarix↑ comment by Celarix · 2024-04-05T15:14:02.010Z · LW(p) · GW(p)

My thinking is that the more discussed threads would have more value to the user. Small threads with 1 or 2 replies are more likely to be people pointing out typos or just saying +1 to a particular passage.

Of course, there is a spectrum - deeply discussed threads are more likely to be angry back-and-forths that aren't very valuable.

Replies from: anand-baburajan↑ comment by Anand Baburajan (anand-baburajan) · 2024-04-08T06:22:01.247Z · LW(p) · GW(p)

would have more value to the user

This feels self and learning focused, as opposed to problem and helping focused, and I'm building CQ2 for the latter.

Small threads with 1 or 2 replies are more likely to be people pointing out typos or just saying +1 to a particular passage.

There could also be important and/or interesting points in a thread with only 1 or 2 replies, and implementing this idea would prevent many people from finding that point, right?

just saying +1 to a particular passage

Will add upvote/downvote.

comment by thornoar · 2024-04-19T17:43:57.819Z · LW(p) · GW(p)

Hello everyone! My name is Roman Maksimovich, I am an immigrant from Russia, currently finishing high school in Serbia. My primary specialization is mathematics, and back in middle school I had enough education in abstract mathematics (from calculus to category theory and topology) to call myself a mathematician.

My other strong interests include computer science and programming (specifically functional programming, theoretical CS, AI, and systems programming such as Linux) as well as languages (specifically Asian languages like Japanese).

I ended up here after reading HP:MOR, which I consider to be an all-time masterpiece. The Sequences are very good too, although not that gripping. Rationality is a very important principle in my life, and so far I found the forum to be very well-organized and the posts to be very informative and well-written, so I will definitely stick around and try to engage in the forum to the best of my ability.

I thought I might do a bit of self-advertising as well. Here's my GitHub: https://github.com/thornoar

If any of you use this very niche mathematical graphics tool called Asymptote, you might be interested to know that I have been developing a cool 6000-line Asymptote library called 'smoothmanifold', which is sort of like a JavaScript framework (an analogy that I do not like) but for drawing abstract mathematical diagrams with Asymptote, whose main problem is the lack of abstraction. In plain Asymptote, you usually have to specify all the coordinates manually and draw objects line by line. In my library I make it so that the code resembles the logical structure of the picture more. You can draw a set as a blob on the plane, and then draw arrows that connect different sets, which would be a nightmare to do manually. And this is only the beginning -- there are a lot more features. If any of this is what interests you, feel free to read the README.md.

I have also written some mathematical papers, the most recent one paired with a software program for strong password creation. If you are interested in cryptography and cybersecurity, and would like to create strong passwords using a hash algorithm, you can take a look at 'pshash', which contains both the algorithm source and binaries, as well as the paper/documentation.

Replies from: nim↑ comment by nim · 2024-05-06T20:32:42.262Z · LW(p) · GW(p)

Congratulations! I'm in today's lucky 10,000 for learning that Asymptote exists. Perhaps due to my not being much of a mathematician, I didn't understand it very clearly from the README... but the examples comparing code to its output make sense! Comparing your examples to the kind of things Asymptote likes to show off (https://asymptote.sourceforge.io/gallery/), I see why you might have needed to build the additional tooling.

I don't think you necessarily have to compare smoothmanifold to a JavaScript framework to get the point across -- it seems to be an abstraction layer that allows one to describe a drawn image in slightly more general terms than Asymptote supports.

I admire how you're investing so much effort to use your talents to help others.

Replies from: thornoar↑ comment by thornoar · 2024-05-06T23:19:55.561Z · LW(p) · GW(p)

Thank you for your kind words! Unfortunately, Asymptote doesn't really have much of a community development platform, but I'll be trying to make smoothmanifold part of the official project in some way or another. Right now the development is so fast that the README is actually out of date... gotta fix that. So far, though, my talents seem less to help others and more to serve as a pleasurable pastime :)

I'm also glad that another person discovered Asymptote and liked it --- it's a language that I cannot stop admiring for the graphical functionality, ease of image creation (pdf's, jpeg's, svg's, etc., all with the same interface), and at the same time amazing programming potential (you can redefine any builtin function, for example, and Asymptote will carry on with your definition).

comment by Nick M (nick-m) · 2024-05-15T09:19:57.452Z · LW(p) · GW(p)

Hey all

I found out about LessWrong through a confluence of factors over the past 6 years or so, starting with Rob Miles' Computerphile videos and then his personal videos, seeing Aella make rounds on the internet, and hearing about Manifold, which all just sorta pointed me towards Eliezer and this website. I started reading the rationality a-z posts about a year ago and have gotten up to the value theory portion, but over the past few months I've started realizing just how much engaging content there is to read on here. I just graduated with my bachelor's and I hope to get involved with AI alignment (but Eliezer paints a pretty bleak picture [LW · GW] for a newcomer like myself (and I know not to take any one person's word as gospel, but I'd be lying if I said it wasn't a little disheartening)).

I'm not really sure how to break into the field of AI safety/alignment, given that college has left me without a lot of money and I don't exactly have a portfolio or degree that screams machine learning. I fear that I would have to go back and get an even higher education to even attempt to make a difference. Maybe, however, this is where my lack of familiarity in the field shows, because I don't actually know what qualifications are required for the positions I'd be interested in or if there's even a formal path for helping with alignment work. Any direction would be appreciated.

↑ comment by Nick M (nick-m) · 2024-05-15T13:15:15.170Z · LW(p) · GW(p)

Additional Context that I realized might be useful for anyone that wants to offer advice:

I'm in my early 20's, so when I say 'portfolio' there's nothing really there outside of hobby projects that aren't that presentable to employers, and my degree is like a mix of engineering and physics simulation. Additionally, I live in Austin, so that might help with opportunities, yet I'm not entirely sure where to look for those.

↑ comment by Screwtape · 2024-05-24T18:10:06.696Z · LW(p) · GW(p)

I'm not an AI safety specialist, but I get the sense that a lot of extra skillsets became useful over the last few years. What kind of positions would be interesting to you?

MIRI was looking for technical writers recently. Robert Miles makes youtube videos. Someone made the P(Doom) question well known enough to be mentioned in the senate. I hope there's a few good contract lawyers looking over OpenAI right now. AISafety.Info is a collection of on-ramps, but it also takes ongoing web development and content writing work. Most organizations need operations teams and accountants no matter what they do.

You might also be surprised how much engineering and physics is a passable starting point. Again, this isn't my field, but if you haven't already done so it might be worth reading a couple recent ML papers and seeing if they make sense to you, or better yet if it looks like you see an idea for improvement or a next step you could jump in or try.

Put your own oxygen mask on though. Especially if you don't have a cunning idea and can't find a way to get started, grab a regular job and get good at that.

Sorry I don't have a better answer.

comment by complicated.world (w_complicated) · 2024-03-28T01:29:59.362Z · LW(p) · GW(p)

Hi LessWrong Community!

I'm new here, though I've been an LW reader for a while. I'm representing the complicated.world website, where we strive to use a similar approach to rationality as here, and we also explore philosophical problems. The difference is that, instead of being a community-driven portal like you, we are a small team that works internally to reach consensus, and only then do we publish our articles. This means that we are not nearly as pluralistic, diverse or democratic as you are, but on the other hand we try to present a single coherent view on all discussed problems, each rooted in basic axioms. I really value the LW community (our entire team does) and would like to start contributing here. I would also like to present a linkpost from our website from time to time - I hope this is ok. We are also a not-for-profit website.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-03-28T04:17:15.606Z · LW(p) · GW(p)

Hey!

It seems like an interesting philosophy. Feel free to crosspost. You've definitely chosen some ambitious topics to try to cover, which I am generally a fan of.

Replies from: w_complicated↑ comment by complicated.world (w_complicated) · 2024-03-28T13:03:53.016Z · LW(p) · GW(p)

Thanks! The key to topic selection is where we find that we most disagree with popular opinion. For example, the number of times I can cope with hearing someone say "I don't care about privacy, I have nothing to hide" is limited. We're trying to have this article out before that limit is reached. But in order to reason about privacy's utility and to ground it in root axioms, we first have to dive into why we need freedom. That, in turn, requires thinking about the mechanisms of a happy society. And that depends on our understanding of happiness, hence that's where we're starting.

comment by P. · 2024-05-25T17:53:48.727Z · LW(p) · GW(p)

Does anyone have advice on how I could work full-time on an alignment research agenda I have? It looks like trying to get an LTFF grant is the best option for this kind of thing, but if, after more time working on it alone, it keeps looking like it could succeed, it would likely become too big for me alone; I would need help from other people, and that looks hard to get. So, any advice from anyone who’s been in a similar situation? Also, how does this compare with getting a job at an alignment org? Is there any org where I would have a comparable amount of freedom if my ideas are good enough?

Edit: It took way longer than I thought it would, but I've finally sent my first LTFF grant application! Now let's just hope they understand it and think it is good.

Replies from: D0TheMath↑ comment by Garrett Baker (D0TheMath) · 2024-05-25T18:31:02.150Z · LW(p) · GW(p)

My recommendation would be to get an LTFF, Manifund, or Survival and Flourishing Fund grant to work on the research, then if it seems to be going well, try getting into MATS, or move to Berkeley & work in an office with other independent researchers like FAR for a while, and use either of those situations to find co-founders for an org that you can scale to a greater number of people.

Alternatively, you can call up your smart & trustworthy college friends to help start your org.

I do think there's just not that much experience or skill around these parts with setting up highly effective & scalable organizations, so what help can be provided won't be that helpful. In terms of resources for how to do that, I'd recommend Y Combinator's How to Start a Startup lecture recordings, and I've been recommended the book Traction: Get a Grip on Your Business.

It should also be noted that if you do want to build a large org in this space, once you get to the large org phase, OpenPhil has historically been less happy to fund you (unless you're also making AGI[1]).

This is not me being salty; the obvious response to "OpenPhil has historically not been happy to fund orgs trying to grow to larger numbers of employees" is "but what about OpenAI or Anthropic?", which I think are qualitatively different from, say, Apollo. ↩︎

comment by metalcrow · 2024-03-26T20:33:05.610Z · LW(p) · GW(p)

Hello! I'm dipping my toes into this forum, coming primarily from the Scott Alexander side of rationalism. Wanted to introduce myself, and share that I'm working on a post about ethics/ethical frameworks I hope to share here eventually!

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-03-27T22:29:34.597Z · LW(p) · GW(p)

Hey metalcrow! Great to have you here! Hope you have a good time and looking forward to seeing your post!

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-05T15:12:28.612Z · LW(p) · GW(p)

Feature request: I'd like to be able to play the LW playlist (and future playlists!) from LW. I found it a better UI than Spotify and Youtube, partly because it didn't stop me from browsing around LW and partly because it had the lyrics on the bottom of the screen. So... maybe there could be a toggle in the settings to re-enable it?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-05T18:50:22.897Z · LW(p) · GW(p)

I was unsure whether people would prefer that, and decided yesterday to instead cut it, but IDK, I do like it. I might clean up the code and find some way to re-activate it on the site.

Replies from: Dagon, whestler↑ comment by whestler · 2024-04-10T09:52:50.230Z · LW(p) · GW(p)

In terms of my usage of the site, I think you made the right call. I liked the feature when listening but I wanted to get rid of it afterwards and found it frustrating that it was stuck there. Perhaps something hidden on a settings page would be appropriate, but I don't think it's needed as a default part of the site right now.

comment by niplav · 2024-04-22T18:51:12.456Z · LW(p) · GW(p)

There are several sequences which are visible on the profiles of their authors, but haven't yet been added to the library [? · GW]. Those are:

- «Boundaries» Sequence (Andrew Critch) [? · GW]

- Maximal Lottery-Lotteries (Scott Garrabrant) [? · GW]

- Geometric Rationality (Scott Garrabrant) [? · GW]

- UDT 1.01 (Diffractor) [? · GW]

- Unifying Bargaining (Diffractor) [? · GW]

- Why Everyone (Else) Is a Hypocrite: Evolution and the Modular Mind (Kaj Sotala) [? · GW]

- The Sense Of Physical Necessity: A Naturalism Demo (LoganStrohl) [? · GW]

- Scheming AIs: Will AIs fake alignment during training in order to get power? (Joe Carlsmith) [? · GW]

I think these are good enough to be moved into the library.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-22T21:08:26.706Z · LW(p) · GW(p)

This probably should be made more transparent, but the reason these aren't in the library is that they don't have images for the sequence-item. We display all sequences that people create that have proper images on the library (otherwise we just show them on users' profiles).

Replies from: nim, nim↑ comment by nim · 2024-05-06T20:35:34.629Z · LW(p) · GW(p)

Can random people donate images for the sequence-items that are missing them, or can images only be provided by the authors? I notice that I am surprised that some sequences are missing out on being listed just because images weren't uploaded, considering that I don't recall having experienced other sequences' art as particularly transformative or essential.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-05-06T22:01:48.764Z · LW(p) · GW(p)

Only the authors (and admins) can do it.

If you paste some images here that seem good to you, I can edit them unilaterally, and will message the authors to tell them I did that.

Replies from: Lorxus↑ comment by Lorxus · 2024-05-09T20:46:32.082Z · LW(p) · GW(p)

I'm neither of these users, but for temporarily secret reasons I care a lot about having the Geometric Rationality and Maximal Lottery-Lotteries sequences be slightly higher-quality. Warning: these are AI-generated, if that's a problem. It's that, an abstract pattern, or programmer art from me.

Two options for Maximal Lottery-Lotteries:

Two options for Geometric Rationality:

↑ comment by nim · 2024-05-09T23:39:30.177Z · LW(p) · GW(p)

I am delighted that you chimed in here; these are pleasingly composed and increase my desire to read the relevant sequences. Your post makes me feel like I meaningfully contributed to the improvement of these sequences by merely asking a potentially dumb question in public, which is the internet at its very best.

Artistically, I think the top (fox face) image for lotteries cropped for its bottom 2/3 would be slightly preferable to the other, and the bottom (monochrome white/blue) for geometric makes a nicer banner in the aspect ratio that they're shown as.

Replies from: habryka4, Lorxus↑ comment by habryka (habryka4) · 2024-05-10T02:05:39.036Z · LW(p) · GW(p)

Uploaded them both!

Replies from: Lorxus↑ comment by Lorxus · 2024-05-10T02:01:30.997Z · LW(p) · GW(p)

Your post makes me feel like I meaningfully contributed to the improvement of these sequences by merely asking a potentially dumb question in public, which is the internet at its very best.

IMO you did! Like I said in my comment, for reasons that are secret temporarily I care about those two sequences a lot, but I might not have thought to just ask whether they could be added to the library, nor did I know that the blocker was suitable imagery.

↑ comment by nim · 2024-05-09T23:46:45.120Z · LW(p) · GW(p)

I notice that I am confused: an image of lily pads appears on https://www.lesswrong.com/s/XJBaPPEYAPeDzuAsy [? · GW] when I load it, but when I expand all community sequences on https://www.lesswrong.com/library [? · GW] (a show-all button might be nice....) and search the string "physical" or "necessity" on that page, I do not see the post appearing. This seems odd, because I'd expect that having a non-default image display when the sequence's homepage is loaded and having a good enough image to appear in the list should be the same condition, but it seems they aren't identical for that one.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-05-09T23:48:34.327Z · LW(p) · GW(p)

There are two images provided for a sequence, the banner image and the card image. The card image is required for it to show up in the Library.

comment by Blacknsilver · 2024-03-19T08:29:14.567Z · LW(p) · GW(p)

Post upvotes are at the bottom but user comment upvotes are at the top of each comment. Sometimes I'll read a very long comment and then have to scroll aaaaall the way back up to upvote it. Is there some reason for this that I'm missing or is it just an oversight?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-03-19T16:12:15.161Z · LW(p) · GW(p)

Post upvotes are at both the bottom and the top, but repeating them for comments at the bottom looks far too cluttered. Having them at the top is IMO more important since you want to be able to tell how good something is before you read it.

comment by niplav · 2024-04-08T17:44:56.895Z · LW(p) · GW(p)

Obscure request:

Short story by Yudkowsky, on a reddit short fiction subreddit, about a time traveler coming back to the 19th century from the 21st. The time traveler is incredibly distraught about the red tape in the future, screaming about molasses and how it's illegal to sell food on the street.

Nevermind, found it.

comment by IlluminateReality · 2024-05-28T09:41:40.773Z · LW(p) · GW(p)

Hi everyone!

I found lesswrong at the end of 2022, as a result of ChatGPT’s release. What struck me fairly quickly about lesswrong was how much it resonated with me. Much of the ways of thinking discussed on lesswrong were things I was already doing, but without knowing the name for it. For example, I thought of the strength of my beliefs in terms of probabilities, long before I had ever heard the word “bayesian”.

Since discovering lesswrong, I have been mostly just vaguely browsing it, with some periods of more intense study. But I’m aware that I haven’t been improving my world model at the rate I would like. I have been spending far too much time reading things that I basically already know, or that only give me a small bit of extra information. Therefore, I recently decided to pivot to optimising for the accuracy of my map of the territory. Some of the areas I want to gain a better understanding of are perhaps some of the more ”weird” things discussed on lesswrong, such as quantum immortality or the simulation hypothesis.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-05-28T19:41:57.401Z · LW(p) · GW(p)

Welcome! I hope you have a good time here!

comment by latterframe · 2024-04-29T18:26:49.686Z · LW(p) · GW(p)

Hey everyone! I work on quantifying and demonstrating AI cybersecurity impacts at Palisade Research with @Jeffrey Ladish [LW · GW].

We have a bunch of exciting work in the pipeline, including:

- demos of well-known safety issues like agent jailbreaks or voice cloning

- replications of prior work on self-replication and hacking capabilities

- modelling of above capabilities' economic impact

- novel evaluations and tools

Most of my posts here will probably detail technical research or announce new evaluation benchmarks and tools. I also think a lot about responsible release, offence/defence balance, and general governance to flesh out my work's theory of change; some of that might also slip in.

See you around 🙃

comment by skybluecat · 2024-04-06T14:00:34.425Z · LW(p) · GW(p)

Hi! I have lurked for quite a while and wonder if I can/should participate more. I'm interested in science in general, speculative fiction and simulation/sandbox games among other stuff. I like reading speculations about the impact of AI and other technologies, but find many of the alignment-related discussions too focused on what the author wants/values rather than what future technologies can really cause. Also, any game recommendations with a hard science/AI/transhumanist theme that are truly simulation-like and not narratively railroading?

Replies from: nim↑ comment by nim · 2024-05-06T20:41:33.516Z · LW(p) · GW(p)

Welcome! If you have the emotional capacity to happily tolerate being disagreed with or ignored, you should absolutely participate in discussions. In the best case, you teach others something they didn't know before, or get a misconception of your own corrected. In the worst case, your remarks are downvoted or ignored.

Your question on games would do well fleshed out into at least a quick take, if not a whole post, answering:

- What games you've ruled out for this and why

- what games in other genres you've found to capture the "truly simulation-like" aspect that you're seeking

- examples of game experiences that you experience as narrative railroading

- examples of ways that games that get mostly there do a "hard science/AI/transhumanist theme" in the way that you're looking for

- perhaps what you get from it being a game that you miss if it's a book, movie, or show?

If you've tried a lot of things and disliked most, then good clear descriptions of what you dislike about them can actually function as helpful positive recommendations for people with different preferences.

comment by cubefox · 2024-04-03T13:36:34.308Z · LW(p) · GW(p)

Is it really desirable to have the new "review bot" in all the 100+ karma comment sections? To me it feels like unnecessary clutter, similar to injecting ads.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-03T16:28:07.162Z · LW(p) · GW(p)

Where else would it go? We need a minimum level of saliency to get accurate markets, and I care about the signal from the markets a good amount.

Replies from: Dagon, neel-nanda-1↑ comment by Dagon · 2024-04-06T16:05:17.241Z · LW(p) · GW(p)

I haven't noticed it (literally at all - I don't think I've seen it, though I'm perhaps wrong). Based on this comment, I just looked at https://www.lesswrong.com/users/review-bot?from=search_autocomplete [LW · GW] and it seems a good idea (and it points me to posts I may have missed - I tend to not look at the homepage, just focusing on recent posts and new comments on posts on https://www.lesswrong.com/allPosts [? · GW]).

I think putting a comment there is a good mechanism to track, and probably easier and less intrusive than a built-in site feature. I have no clue if you're actually getting enough participation in the markets to be useful - it doesn't look like it at first glance, but perhaps I'm wrong.

It does seem a little weird (and cool, but mostly in the "experiment that may fail, or may work so well we use it elsewhere" way) to have yet another voting mechanism for posts. I kind of like the explicitness of "make a prediction about the future value of this post" compared to "loosely-defined up or down".

↑ comment by Neel Nanda (neel-nanda-1) · 2024-05-21T10:30:38.352Z · LW(p) · GW(p)

I only ever notice it on my own posts when I get a notification about it

comment by spencerkaplan (spencermkaplan) · 2024-05-20T20:48:47.844Z · LW(p) · GW(p)

Hi everyone! I'm new to LW and wanted to introduce myself. I'm from the SF Bay Area and working on my PhD in anthropology. I study AI safety, and I'm mainly interested in research efforts that draw methods from the human sciences to better understand present and future models. I'm also interested in AI safety's sociocultural dynamics, including how ideas circulate in the research community and how uncertainty figures into our interactions with models. All thoughts and leads are welcome.

This work led me to LW. Originally all the content was overwhelming but now there's much I appreciate. It's my go-to place for developments in the field and informed responses. More broadly, learning about rationality through the sequences and other posts is helping me improve my work as a researcher and I'm looking forward to continuing this process.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-05-20T21:16:19.015Z · LW(p) · GW(p)

Welcome! I hope you have a good time here!

comment by Nevin Wetherill (nevin-wetherill) · 2024-05-09T18:59:09.849Z · LW(p) · GW(p)

Hey, I'm new to LessWrong and working on a post - however at some point the guidelines which pop up at the top of a fresh account's "new post" screen went away, and I cannot find the same language in the New Users Guide or elsewhere on the site.

Does anyone have a link to this? I recall a list of suggestions like "make the post object-level," "treat it as a submission for a university," "do not write a poetic/literary post until you've already gotten a couple object-level posts on your record."

It seems like a minor oversight if it's impossible to find certain moderation guidelines/tips and tricks if you've already saved a draft/posted a comment.

I am not terribly worried about running headfirst into a moderation filter, as I can barely manage to write a comment which isn't as high effort of an explanation as I can come up with - but I do want that specific piece of text for reference, and now it appears to have evaporated into the shadow realm.

Am I just missing a link that would appear if I searched something else?

(Edit: also, sorry if this is the wrong place for this, I would've tried the "intercom" feature, but I am currently on the mobile version of the site, and that feature appears to be entirely missing there - and yes, I checked my settings to make sure it wasn't "hidden")

Replies from: T3t, habryka4, Benito↑ comment by RobertM (T3t) · 2024-05-09T21:37:13.237Z · LW(p) · GW(p)

EDIT: looks like habryka got there earlier and I didn't see it.

https://www.lesswrong.com/posts/zXJfH7oZ62Xojnrqs/#sLay9Tv65zeXaQzR4 [LW · GW]

Intercom is indeed hidden on mobile (since it'd be pretty intrusive at that screen size).

Replies from: nevin-wetherill↑ comment by Nevin Wetherill (nevin-wetherill) · 2024-05-10T03:52:21.381Z · LW(p) · GW(p)

Thanks anyway :)

Also, yeah, makes sense. Hopefully this isn't a horribly misplaced thread taking up people's daily scrolling bandwidth with no commensurate payoff.

Maybe I'll just say something here to cash out my impression of the "first post" intro-message in question: its language has seemed valuable to my mentality in writing a post so far.

Although, I think I got a mildly misleading first-impression about how serious the filter was. The first draft for a post I half-finished was a fictional explanatory dialogue involving a lot of extended metaphors... After reading that I had the mental image of getting banned immediately with a message like "oh, c'mon, did you even read the prompt?"

Still, that partially-mistaken mental frame made me go read more documentation on the editor and take a more serious approach to planning a post. A bit like a very mild temperature-drop shock to read "this is like a university application."

I grok the intent, and I'm glad the community has these sorta norms. It seems likely to help my personal growth agenda on some dimensions.

↑ comment by habryka (habryka4) · 2024-05-09T20:07:14.239Z · LW(p) · GW(p)

It's not the most obvious place, but the content lives here: https://www.lesswrong.com/posts/zXJfH7oZ62Xojnrqs/lesswrong-moderation-messaging-container?commentId=sLay9Tv65zeXaQzR4 [LW(p) · GW(p)]

Replies from: nevin-wetherill↑ comment by Nevin Wetherill (nevin-wetherill) · 2024-05-09T20:57:04.928Z · LW(p) · GW(p)

Thanks! :)

Yeah, I don't know if it's worth it to make it more accessible. I may have just failed a Google + "keyword in quotation marks" search, or failed to notice a link when searching via LessWrong's search feature.

Actually, an easy fix would just be for Google to improve their search tools, so that I can locate any link regardless of how specific for any public webpage just by ranting at my phone.

Anyway, thanks as well to Ben for tagging those mod-staff people.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-05-02T20:51:59.051Z · LW(p) · GW(p)

Some features I'd like:

a 'mark read' button next to posts so I could easily mark as read posts that I've read elsewhere (e.g. ones cross-posted from a blog I follow)

a 'not interested' button which would stop a given post from appearing in my latest or recommended lists. Ideally, this would also update my recommended posts so as to recommend fewer posts like that to me. (Note: the hide-from-front-page button could be this if A. It worked even on promoted/starred posts, and B. it wasn't hidden in a three-dot menu where it's frustrating to access)

a 'read later' button which will put the post into a reading list for me that I can come back to later.

a toggle button for 'show all' / 'show only unread' so that I could easily switch between the two modes.

These features would help me keep my 'front page' feeling cleaner and more focused.

Replies from: Alex_Altair↑ comment by Alex_Altair · 2024-05-11T05:54:12.198Z · LW(p) · GW(p)

You can "bookmark" a post, is that equivalent to your desired "read later"?

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-05-11T18:28:19.789Z · LW(p) · GW(p)

Yeah, I should use that. I'd need to remember to unbookmark after reading it I suppose.

comment by niplav · 2024-04-29T16:42:18.639Z · LW(p) · GW(p)

Someone strong-downvoted a post/question of mine [LW · GW] with a downvote strength of 10, if I remember correctly.

I had initially just planned to keep silent about this, because that's well within their rights, if they think the post is bad or harmful.

But since the downvote, I can't shake off the curiosity of why that person disliked my post so strongly—I'm willing to pay $20 for two/three paragraphs of explanation by the person why they downvoted it.

Replies from: ektimo↑ comment by ektimo · 2024-04-29T19:30:18.191Z · LW(p) · GW(p)

Maybe because somebody didn't think your post qualified as a "Question"? I don't see any guidelines on what qualifies as a "question" versus a "post" -- and personally I wouldn't have downvoted because of this -- but your question seems a little long/opinionated.

Replies from: niplav, Linch, ektimo↑ comment by ektimo · 2024-04-29T19:38:48.459Z · LW(p) · GW(p)

Btw, I really appreciate if people explain downvotes, and it would be great if there was some way to still allow unexplained downvotes while incentivizing adding explanations. Maybe a way (attached to the post) for people to guess why other people downvoted?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-29T19:50:33.911Z · LW(p) · GW(p)

Yeah, I feel kind of excited about having some strong-downvote and strong-upvote UI which gives you one of a standard set of options for explaining your vote, or allows you to leave it unexplained, all anonymous.

comment by gilch · 2024-04-17T21:33:14.218Z · LW(p) · GW(p)

PSA: Tooth decay might be reversible! The recent discussion around the Lumina [LW · GW] anti-cavity prophylaxis reminded me of a certain dentist's YouTube channel I'd stumbled upon recently, claiming that tooth decay can be arrested and reversed using widely available over-the-counter dental care products. I remember my dentist from years back telling me that if regular brushing and flossing doesn't work, and the decay is progressing, then the only treatment option is a filling. I wish I'd known about alternatives back then, because I definitely would have tried that first. Remineralization wouldn't have helped in the case when I broke a tooth, but I maybe could have avoided all my other fillings. I am very suspicious of random health claims on the Internet, but this one seemed reasonably safe and cheap to try, even if it ultimately doesn't work.

Replies from: nathan-helm-burger, abandon↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-04-24T17:08:27.824Z · LW(p) · GW(p)

I've been using a remineralization toothpaste imported from Japan for several years now, ever since I mentioned reading about remineralization to a dentist from Japan. She recommended the brand to me. The FDA is apparently bogging down release in the US, but it's available on Amazon anyway. It seems to have slowed, but not stopped, the formation of cavities. It does seem to result in faster plaque build-up around my gumline, like the bacterial colonies are accumulating some of the minerals not absorbed by the teeth. The brand I use is Apagard. [Edit: I'm now trying the mouthwash CloSys, as the link above recommended, using it before brushing, and using Listerine after. The CloSys seems quite gentle and pleasant as a mouthwash. Listerine is harsh, but does leave my teeth feeling cleaner for much longer. I'll try this for a few years and see if it changes my rate of cavity formation.]

Replies from: gilch↑ comment by gilch · 2024-05-15T21:04:40.078Z · LW(p) · GW(p)

That dentist on YouTube also recommended a sodium fluoride rinse (ACT) after the Listerine and mentioned somewhere that if you could get your teenager to use only one of the three rinses, that it should be the fluoride. (I've heard others suggest waiting 20 minutes after brushing before rinsing out the toothpaste to allow more time for the fluoride in the toothpaste to work.) She also mentioned that the brands involved sell multiple formulations with different concentrations and even different active ingredients (some of which may even be counterproductive), and she can't speak to the efficacy of the treatment if you don't use the exact products that she has experience with.

↑ comment by dirk (abandon) · 2024-04-18T08:39:10.440Z · LW(p) · GW(p)

I apologize for my lack of time to find the sources for this belief, so I could well be wrong, but my recollection of looking up a similar idea is that I found it to be reversible only in the very earliest stages, when the tooth has weakened but not yet developed a cavity proper.

Replies from: gilch↑ comment by gilch · 2024-04-18T16:40:04.135Z · LW(p) · GW(p)

I didn't say "cavity"; I said, "tooth decay". No-one is saying remineralization can repair a chipped, cracked, or caved-in tooth. But this dentist does claim that the decay (caries) can be reversed even after it has penetrated the enamel and reached the dentin, although it takes longer (a year instead of months), by treating the underlying bacterial infection and promoting mineralization. It's not clear to me if the claim is that a small hole can fill in on its own. A larger one probably won't, although the necessary dental treatment (filling) in that case will be less invasive if the surrounding decay has been arrested.

I am not claiming to have tested this myself. This is hearsay. But the protocol is cheap to try and the mechanism of action seems scientifically plausible given my background knowledge.

comment by jmh · 2024-03-17T13:55:25.150Z · LW(p) · GW(p)

How efficient are equity markets? No, not in the EMH sense.

My take is that market efficiency viewed from economics/finance is about total surplus maximization -- the area between the supply and demand curves. Clearly when S and D are order schedules and P and Q correspond to the S&D intersection one maximizes the area of the triangle defined in the graph.

But existing equity markets don't work off an ordered schedule but largely match trades in a somewhat random order -- people place orders (bids and offers) throughout the day and as they come in during market hours trades occur.

Given these are pure pecuniary markets the total surplus represents something of a total profit in the market for the day's activities (clearly something different than the total profits to the actual share owners who sold so calling it profit might be a bit confusing). One might think markets should be structured to maximize that area but clearly that is not the case.

It would be a very unusual case for the daily order flow to perfectly match the implied daily demand and supply curves that would represent the bids and offers (let's call them the "real" bids and offers, though I'm not entirely sure how to distinguish those from other bids and offers that will start evaporating as soon as they become the market bid or offer). So would a settlement structure like that of mutual funds produce a better outcome for equities?

Maybe. In other words, rather than putting a market order in and having it executed right then, or putting a limit order in and having it execute if the market moves to that price, all orders get put in the order book, and then at market close the clearing price is determined and those trades that actually make sense occur. What is prevented is the case of buyers above the clearing price getting paired with sellers that are also above the clearing price, or the reverse, buyers bidding below the clearing price pairing with sellers who are also willing to sell below the clearing price. That eliminates both the inframarginal and extramarginal trades that represent low-value exchange pairings.

One thing I wonder about here is what information might be lost/masked and if the informational value might outweigh the reduction in surplus captured. But I'm also not sure that whatever information might be seen in the current structure is not also present in the end of day S&D schedules and so fully reflected in the price outcomes.
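The comparison above, between matching orders as they arrive and clearing everything once at the close, can be made concrete with a toy calculation. The sketch below is only an illustration under strong assumptions that are not in the comment: every order is for one share, each limit price is treated as the trader's true valuation, and the function names `batch_surplus` and `continuous_surplus` are hypothetical. It is not a model of how any real exchange matches orders.

```python
def batch_surplus(bids, asks):
    """Total surplus if all orders clear at once (assumed single-unit orders).

    Highest bids are paired with lowest asks until they no longer cross,
    which maximizes the sum of (bid - ask) over executed pairs.
    """
    total = 0
    for bid, ask in zip(sorted(bids, reverse=True), sorted(asks)):
        if bid < ask:
            break
        total += bid - ask
    return total


def continuous_surplus(arrivals):
    """Total surplus if orders trade in arrival order.

    `arrivals` is a list of (side, price) tuples. Each incoming order trades
    with the best resting order on the other side if the prices cross;
    otherwise it rests in the book.
    """
    resting_bids, resting_asks = [], []
    total = 0
    for side, price in arrivals:
        if side == "buy":
            if resting_asks and min(resting_asks) <= price:
                best = min(resting_asks)
                resting_asks.remove(best)
                total += price - best
            else:
                resting_bids.append(price)
        else:
            if resting_bids and max(resting_bids) >= price:
                best = max(resting_bids)
                resting_bids.remove(best)
                total += best - price
            else:
                resting_asks.append(price)
    return total


# Hypothetical day: four buyers and four sellers, arriving in an unlucky order
# that pairs each buyer with a seller priced just below them.
arrivals = [("sell", 9), ("buy", 10), ("sell", 3), ("buy", 4),
            ("sell", 7), ("buy", 8), ("sell", 5), ("buy", 6)]
bids = [p for side, p in arrivals if side == "buy"]
asks = [p for side, p in arrivals if side == "sell"]

print(batch_surplus(bids, asks))     # 10: only (10, 3) and (8, 5) trade
print(continuous_surplus(arrivals))  # 4: all four pairs trade, each worth 1
```

In this made-up arrival order the continuous book executes twice as many trades but captures less than half the surplus, which is the inframarginal/extramarginal pairing problem described above. Other arrival orders do better, and no arrival order can beat the batch figure in this toy setup.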

Replies from: thomas-kwa↑ comment by Thomas Kwa (thomas-kwa) · 2024-03-17T18:58:48.629Z · LW(p) · GW(p)

In practice it is not as bad as uniform volume throughout the day would be for two reasons:

- Market-makers narrow spreads to prevent any low-value-exchange pairings that would be predictable price fluctuations. They do extract some profits in the process.

- Volume is much higher near the open and close.

I would guess that any improvements of this scheme would manifest as tighter effective spreads, and a reduction in profits of HFT firms (which seem to provide less value to society than other financial firms).

Replies from: jmh↑ comment by jmh · 2024-03-17T19:43:01.299Z · LW(p) · GW(p)

I had perhaps a bit unjustly tossed the market maker role into that "not real bid/offer" bucket. I also agree they do serve to limit the worst case matches. But such a role would simply be unnecessary, so I still wonder about the cost in terms of the profits captured by the market makers. Is that a necessary cost in today's world? Not sure.

And I do say that as someone who is fairly active in the markets and has taken advantage of thin markets in the off-market-hours sessions where spreads can widen up a lot.

comment by Drake Morrison (Leviad) · 2024-04-17T20:18:35.953Z · LW(p) · GW(p)

Feature Suggestion: add a number to the hidden author names.

I enjoy keeping the author names hidden when reading the site, but find it difficult to follow comment threads when there isn't a persistent id for each poster. I think a number would suffice while keeping the hiddenness.

comment by Mateusz Bagiński (mateusz-baginski) · 2024-04-10T05:52:44.125Z · LW(p) · GW(p)

Any thoughts on Symbolica? (or "categorical deep learning" more broadly?)

All current state of the art large language models such as ChatGPT, Claude, and Gemini, are based on the same core architecture. As a result, they all suffer from the same limitations.

Extant models are expensive to train, complex to deploy, difficult to validate, and infamously prone to hallucination. Symbolica is redesigning how machines learn from the ground up.

We use the powerfully expressive language of category theory to develop models capable of learning algebraic structure. This enables our models to have a robust and structured model of the world; one that is explainable and verifiable.

It’s time for machines, like humans, to think symbolically.

- How likely is it that Symbolica [or sth similar] produces a commercially viable product?

- How likely is it that Symbolica creates a viable alternative for the current/classical DL?

- I don't think it's that different from the intentions behind Conjecture's CoEms proposal. [LW · GW] (And it looks like Symbolica have more theory and experimental results backing up their ideas.)

- Symbolica don't use the framing of AI [safety/alignment/X-risk], but many people behind the project are associated with the Topos Institute that hosted some talks from e.g. Scott Garrabrant or Andrew Critch.

- What is the expected value of their research for safety/verifiability/etc?

- How likely is it that whatever Symbolica produces meaningfully contributes to doom (e.g. by advancing capabilities research without at the same time sufficiently/differentially advancing interpretability/verifiability of AI systems)?

(There's also PlantingSpace but their shtick seems to be more "use probabilistic programming and category theory to build a cool Narrow AI-ish product" whereas Symbolica want to use category theory to revolutionize deep learning.)

Replies from: D0TheMath, faul_sname↑ comment by Garrett Baker (D0TheMath) · 2024-04-16T23:44:42.750Z · LW(p) · GW(p)

Hi John,

thank you for sharing the job postings. We’re starting something really exciting, and as research leads on the team, we - Paul Lessard and Bruno Gavranović - thought we’d provide clarifications.

Symbolica was not started to improve ML using category theory. Instead, Symbolica was founded ~2 years ago, with its $2M seed funding round aimed at tackling the problem of symbolic reasoning, but at the time, its path to getting there wasn’t via categorical deep learning (CDL). The original plan was to use hypergraph rewriting as a means of doing learning more efficiently. That approach, however, was eventually shown to be unviable.

Symbolica’s pivot to CDL started about five months ago. Bruno had just finished his Ph.D. thesis laying the foundations for the topic and we reoriented much of the organization towards this research direction. In particular, we began: a) refining a roadmap to develop and apply CDL, and b) writing a position paper, in collaboration with researchers at Google DeepMind, which you’ve cited below.

Over these last few months, it has become clear that our hunches about applicability are actually exciting and viable research directions. We’ve made fantastic progress, even doing some of the research we planned to advocate for in the aforementioned position paper. Really, we discovered just how much Taking Categories Seriously gives you in the field of Deep Learning.

Many advances in DL are about creating models which identify robust and general patterns in data (see the Transformers/Attention mechanism, for instance). In many ways this is exactly what CT is about: it is an indispensable tool for many scientists, including ourselves, to understand the world around us: to find robust patterns in data, but also to communicate, verify, and explain our reasoning.

At the same time, the research engineering team of Symbolica has made significant, independent, and concrete progress implementing a particular deep learning model that operates on text data, but not in an autoregressive manner as most GPT-style models do.

These developments were key signals to Vinod and other investors, leading to the closing of the $31M funding round.

We are now developing a research programme merging the two, leveraging insights from theories of structure, e.g. categorical algebra, as a means of formalising the process by which we find structure in data. This has a twofold consequence: pushing models to identify more robust patterns in data, but also interpretable and verifiable ones.

In summary:

a) The push to apply category theory was not based on a singular whim, as the post might suggest,

but that instead

b) Symbolica is developing a serious research programme devoted to applying category theory to deep learning, not merely hiring category theorists

All of this is to add extra context for evaluating the company, its team, and our direction, which does not come across in the recently published tech articles.

We strongly encourage interested parties to look at all of the job ads, which we’ve tailored to particular roles. Roughly, in the CDL team, we’re looking for either

1) expertise in category theory, and a strong interest in deep learning, or

2) expertise in deep learning, and a strong interest in category theory.

at all levels of seniority.

Happy to answer any other questions/thoughts.

Bruno Gavranović,

Paul Lessard

↑ comment by faul_sname · 2024-04-13T00:55:32.733Z · LW(p) · GW(p)

I'd bet against anything particularly commercially successful. Manifold could give better and more precise predictions if you operationalize "commercially viable".

Replies from: D0TheMath↑ comment by Garrett Baker (D0TheMath) · 2024-04-13T01:55:21.051Z · LW(p) · GW(p)

Is this coming from deep knowledge about Symbolica's method, or just from outside view considerations like "usually people trying to think too big-brained end up failing when it comes to AI"?

Replies from: faul_sname↑ comment by faul_sname · 2024-04-13T03:53:17.557Z · LW(p) · GW(p)

Outside view (bitter lesson).

Or at least that's approximately true. I'll have a post on why I expect the bitter lesson to hold eventually, but it is likely to be a while. If you read this blog post you can probably predict my reasoning for why I expect "learn only clean composable abstraction where the boundaries cut reality at the joints" to break down as an approach.

Replies from: D0TheMath↑ comment by Garrett Baker (D0TheMath) · 2024-04-13T16:33:19.019Z · LW(p) · GW(p)

I don’t think the bitter lesson strictly applies here. Since they’re doing learning, and the bitter lesson says “learning and search is all that is good”, I think they’re in the clear, as long as what they do is compute scalable.

(this is different from saying there aren’t other reasons an ignorant person (a word I like more than outside view in this context since it doesn’t hide the lack of knowledge) may use to conclude they won’t succeed)

Replies from: faul_sname↑ comment by faul_sname · 2024-04-13T18:40:54.558Z · LW(p) · GW(p)

By building models which reason inductively, we tackle complex formal language tasks with immense commercial value: code synthesis and theorem proving.

There are commercially valuable uses for tools for code synthesis and theorem proving. But structured approaches of that flavor don't have a great track record of e.g. doing classification tasks where the boundary conditions are messy and chaotic, and similarly for a bunch of other tasks where gradient-descent-lol-stack-more-layer-ML shines.

comment by Steven Byrnes (steve2152) · 2024-04-02T17:00:40.285Z · LW(p) · GW(p)

I’m in the market for a new productivity coach / accountability buddy, to chat with periodically (I’ve been doing one ≈20-minute meeting every 2 weeks) about work habits, and set goals, and so on. I’m open to either paying fair market rate, or to a reciprocal arrangement where we trade advice and promises etc. I slightly prefer someone not directly involved in AGI safety/alignment—since that’s my field and I don’t want us to get nerd-sniped into object-level discussions—but whatever, that’s not a hard requirement. You can reply here, or DM or email me. :) update: I’m all set now

comment by Mickey Beurskens · 2024-06-11T20:39:02.586Z · LW(p) · GW(p)

Hi everyone, my name is Mickey Beurskens. I've been reading posts for about two years now, and I would like to participate more actively in the community, which is why I'll take the opportunity to introduce myself here.

In my daily life I am doing independent AI engineering work (contracting mostly). About three years ago a (then) colleague introduced me to HPMOR, which was a wonderful introduction to what would later become some quite serious deliberations on AI alignment and LessWrong! After testing out rationality principles in my life I was convinced this was a skill I wanted to pursue, which naturally led to me reading posts here regularly.

Unfortunately I've been quite stupid on the topic of AI alignment and safety. I actually set up an association in the Netherlands with some friends for students to learn AI through competitions and project work, so I was always interested in the topic, yet it took an introduction to LessWrong after my studies had finished to even consider if building fully general autonomous systems would be a safe thing to just go ahead and do. Now that the initial shock has settled I'm quite interested in alignment in particular, doing some of my own research and dedicating some serious time to understand the issue. Any recommendations would be highly appreciated.

Other than that I love exploring psychological topics and am generally broadly interested. I'm looking forward to engaging more, thanks for creating such an awesome place to connect with!

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-06-11T22:16:03.152Z · LW(p) · GW(p)

Hello and welcome! I also found all of this stuff via HPMoR many years ago. Hope you have a good time commenting more!

Replies from: Mickey Beurskens↑ comment by Mickey Beurskens · 2024-06-11T22:26:00.873Z · LW(p) · GW(p)

It took some time to go from reading to commenting, so I appreciate the kind words!

comment by Marius Adrian Nicoară · 2024-05-19T08:27:26.527Z · LW(p) · GW(p)

Hello, I'm Marius, an embedded SW developer looking to pivot into AI and machine learning.

I've read the Sequences and am reading ACX somewhat regularly.

Looking forward to fruitful discussions.

Best wishes,

Marius Nicoară

↑ comment by habryka (habryka4) · 2024-05-19T18:01:09.798Z · LW(p) · GW(p)

Welcome! Looking forward to having you around!

comment by Lorxus · 2024-05-10T16:03:42.816Z · LW(p) · GW(p)

I'm neither of these users, but for temporarily secret reasons I care a lot about having the Geometric Rationality and Maximal Lottery-Lottery sequences be slightly higher-quality.

The reason is not secret anymore! I have finished and published a two [LW · GW]-post [LW · GW] sequence on maximal lottery-lotteries.

comment by jenn (pixx) · 2024-05-05T02:08:59.225Z · LW(p) · GW(p)

I'm trying to remember the name of a blog. The only things I remember about it are that it's at least a tiny bit linked to this community, and that there is some sort of automatic decaying endorsement feature. Like, there was a subheading indicating the likely percentage of claims the author no longer endorses based on the age of the post. Does anyone know what I'm talking about?

Replies from: Raemon↑ comment by Raemon · 2024-05-05T03:16:03.421Z · LW(p) · GW(p)

The ferrett.

Replies from: pixx↑ comment by jenn (pixx) · 2024-05-06T19:18:05.106Z · LW(p) · GW(p)

That's it! Thank you.

comment by michael_mjd · 2024-04-25T19:17:41.756Z · LW(p) · GW(p)

Is there a post in the Sequences about when it is justifiable to not pursue going down a rabbit hole? It's a fairly general question, but the specific context is a tale as old as time. My brother, who has been an atheist for decades, moved to Utah. After 10 years, he now asserts that he was wrong and his "rigorous pursuit" of verifying with logic and his own eyes, leads him to believe the Bible is literally true. I worry about his mental health so I don't want to debate him, but felt like I should give some kind of justification for why I'm not personally embarking on a bible study. There's a potential subtext of, by not following his path, I am either not that rational, or lack integrity. The subtext may not really be there, but I figure if I can provide a well thought out response or summarize something from EY, it might make things feel more friendly, e.g. "I personally don't have enough evidence to justify spending the time on this, but I will keep an open mind if any new evidence comes up."

Replies from: nim, kromem, gilch↑ comment by nim · 2024-05-06T20:57:53.785Z · LW(p) · GW(p)

More concrete than your actual question, but there's a couple options you can take:

-