Davidad's Bold Plan for Alignment: An In-Depth Explanation

post by Charbel-Raphaël (charbel-raphael-segerie), Gabin (gabin-kolly) · 2023-04-19T16:09:01.455Z · LW · GW · 40 commentsContents

Context How to read this post? Bird's-eye view Fine-grained Scheme Step 1: Understand the problem Step 2: Devise a plan Step 3 Examine the solution Step 4 Carry out the plan Hypotheses discussion About Category theory and Infra-Bayesianism High level criticism High Level Hopes Intuition pump for the feasibility of creating a highly detailed world model Comparison with OpenAI’s Plan Conclusion Annex Governance strategy Roadmap Stage 1: Early research projects Stage 2: Industry actors first projects Stage 3: Labs commitments Stage 4: International consortium to build OAA. Some important testable first research projects Formalization of the world model Try to fine-tune a language model as a heuristic for model-checking Defining a sufficiently expressive formal meta-ontology for world-models Experimenting with the compositional version control system Getting traction on the deontic feasibility hypothesis Some other projects Types explanation None 40 comments

Gabin Kolly and Charbel-Raphaël Segerie contributed equally to this post. Davidad proofread this post.

Thanks to Vanessa Kosoy, Siméon Campos, Jérémy Andréoletti, Guillaume Corlouer, Jeanne S., Vladimir I. and Clément Dumas for useful comments.

Context

Davidad has proposed an intricate architecture [AF · GW] aimed at addressing the alignment problem, which necessitates extensive knowledge to comprehend fully. We believe that there are currently insufficient public explanations of this ambitious plan. The following is our understanding of the plan, gleaned from discussions with Davidad.

This document adopts an informal tone. The initial sections offer a simplified overview, while the latter sections delve into questions and relatively technical subjects. This plan may seem extremely ambitious, but the appendix provides further elaboration on certain sub-steps and potential internship topics, which would enable us to test some ideas relatively quickly.

Davidad’s plan is an entirely new paradigmatic approach to address the hard part of alignment: The Open Agency Architecture aims at “building an AI system that helps us ethically end the acute risk period without creating its own catastrophic risks that would be worse than the status quo”.

This plan is motivated on the assumption that current paradigms for model alignment won’t be successful:

- LLMs won't be able to be aligned just with RLHF [LW · GW] or a variation of this technique.

- Scalable oversight will be too [LW · GW] difficult.

- Interpretability used to retro-engineer an arbitrary model will not be feasible. Instead, it would be easier to iteratively construct an understandable world model.

Unlike OpenAI's plan, which is a meta-level plan that delegates the task of finding a solution for alignment with future AI, davidad's plan is an object-level plan that takes drastic measures to prevent future problems. It is also based on rather testable assumptions that can be relatively quickly tested (see the annex).

Plan’s tldr: Utilize near-AGIs to build a detailed world simulation, train and formally verify within it that the AI adheres to coarse preferences and avoids catastrophic outcomes.

How to read this post?

This post is much easier to read than the original post. But we are aware that it still contains a significant amount of technicality. Here's a way to read this post gradually:

- Start with the Bird's-eye view (5 minutes)

- Contemplate the Bird's-eye view diagram (5 minutes), without spending time understanding the mathematical notations in the diagram.

- Fine Grained Scheme: Focus on the starred sections. Skip the non-starred sections. Don’t spend too much time on difficulties (10 minutes)

- From "Hypothesis discussion" onwards, the rest of the post should be easy to read (10 minutes)

For more technical details, you can read:

- The starred sections of the Fine Grained Scheme

- The Annex.

Bird's-eye view

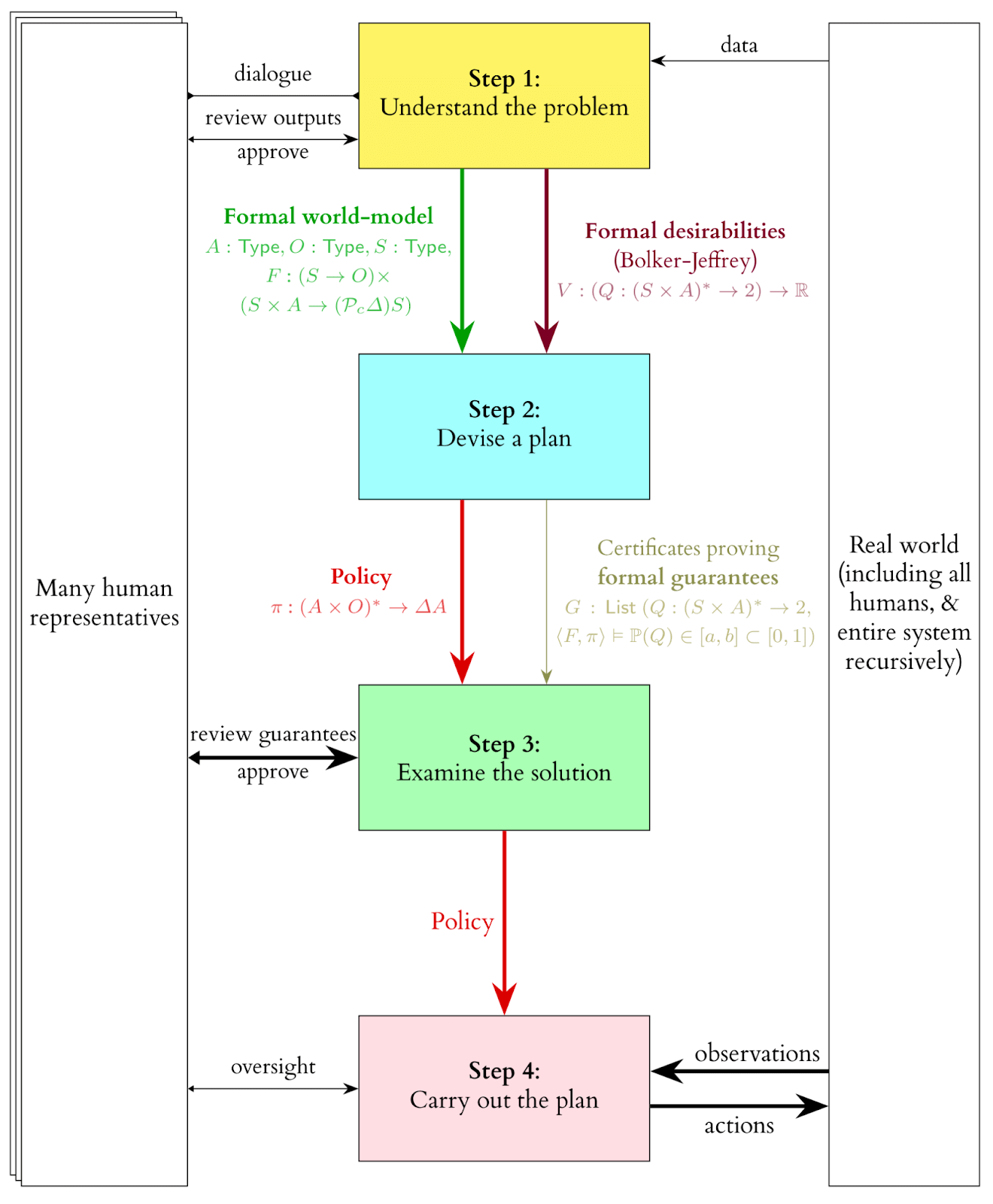

The plan comprises four key steps:

- Understand the problem: This entails formalizing the problem, similar to deciphering the rules of an unfamiliar game like chess. In this case, the focus is on understanding reality and human preferences.

- World Modeling: Develop a comprehensive and intelligent model of the world capable of being used for model-checking. This could be akin to an ultra-realistic video game built on the finest existing scientific models. Achieving a sufficient model falls under the Scientific Sufficiency Hypothesis (a discussion of those hypotheses can be found later on) and would be one of the most ambitious scientific projects in human history.

- Specification Modeling: Generate a list of moral desiderata, such as a model that safeguards humans from catastrophes. The Deontic Sufficiency Hypothesis posits that it is possible to find an adequate model of these coarse preferences.

- Devise a plan: With the world model and desiderata encoded in a formal language, we can now strategize within this framework. Similar to chess, a model can be trained to develop effective strategies. Formal verification can then be applied to these strategies, which is the basis of the Model-Checking Feasibility Hypothesis.

- Examine the solution: Upon completing step 2, a solution (in the form of a neural network implementing a policy or strategy) is obtained, along with proof that the strategy adheres to the established desiderata. This strategy can be scrutinized using various AI safety techniques, such as interpretability and red teaming.

- Carry out the plan: The model is employed in the real world to generate high-level strategies, with the individual components of these strategies executed by RL agents specifically trained for each task and given time-bound goals.

The plan is dubbed "Open Agency Architecture" because it necessitates collaboration among numerous humans, remains interpretable and verifiable, and functions more as an international organization or "agency" rather than a singular, unified "agent." The name Open Agency was drawn from Eric Drexler’s Open Agency Model [AF · GW], along with many high-level ideas.

Here is the main diagram. (An explanation of the notations is provided here [LW · GW]):

Fine-grained Scheme

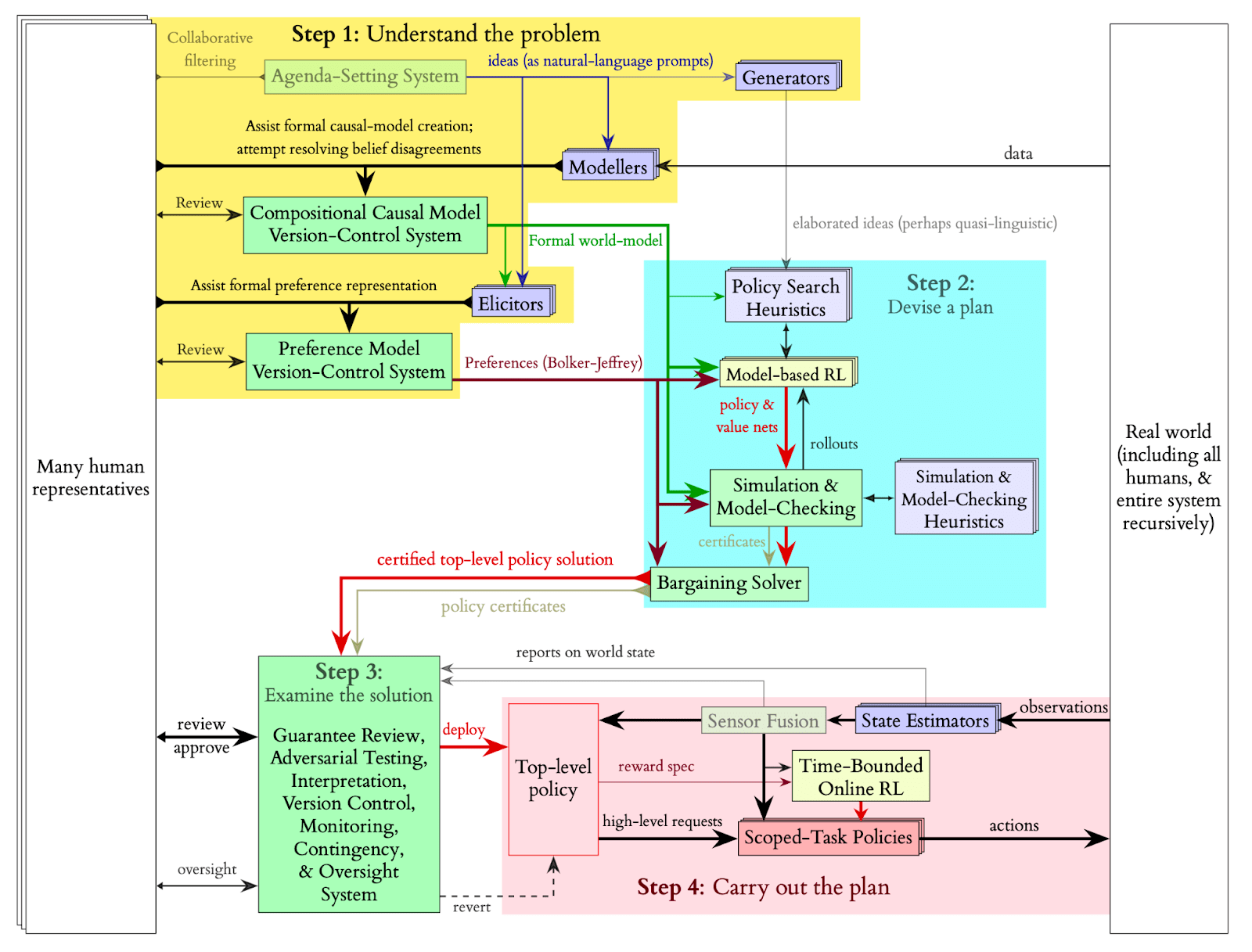

Here is a more detailed breakdown of Davidad’s plan:

- (*) Important

- Not essential

Step 1: Understand the problem

- Agenda-Setting System: This recommendation systems determines the next elements to integrate into the world simulation. The agenda sets priorities for incorporating the next physical phenomenon into the world model and formalizing the next moral desideratum and helps stakeholders decide which pivotal processes [AF · GW] to follow (e.g., nanobots everywhere or a very persuasive chatbot).

- (*) Modeler: These modelers, near-AGI-level language models, iteratively develop a formal world model by incorporating all human knowledge into a unified, multiscale model called a compositional world model. Humans verify the modelers' output and provide high-level guidance and suggestions. [More details [LW · GW]]

- (*) Compositional world model: The world model must encapsulate numerous complex relationships and be described in a precise, formal manner for efficient human verification. To give an idea, this would be like a combination between Google Earth, GTA-4 and Kerbal Space Program. Given the multiple competing theories across various sciences, it will more closely resemble a collection of world models rather than a single, unified one. Quantifying the uncertainty in the world is challenging (as in the case of Knightian uncertainty), making it difficult to ensure that the correct theory has been considered and incorporated. Thus, infra-Bayesianism should be employed for this modeling, which also offers tools for calculating worst-case scenario expectations (e.g., when the world model is chosen to minimize the agent's score).[More details [LW · GW]]

- This system's complexity almost precludes the use of Python for modeling; a new functional programming language specifically designed for this task would likely be necessary, potentially incorporating category theory. Humans would verify the world model line by line.

- To expedite the development of this world model using LLMs, methods such as this one could be employed.

- The fact that the world model is multi-scale is quite significant: each scale will be governed by numerous scientific laws. For instance, if we consider a robot in a room conducting a chemistry experiment, the world model will have to take into account the laws of chemistry to ensure that there is no risk of explosion. However, if there is no chemistry experiment, there generally won't be a need to simulate the microscopic level.

- A compositional-causal-model version-control system: A next-gen version control software, similar to Git and Wikipedia's review mechanisms, is needed to create an infra-Bayesian world model containing all global knowledge. This system tracks edits, stores stakeholder approvals or disapprovals, and logs discussions. [More details [LW · GW]]

- (*) Elicitors: Language models assist humans in expressing their desires using the formal language of the world model. Some models also identify extreme situations where desiderata are met but humans are unhappy, testing the sufficiency of current desiderata in a red-teaming fashion. Examples of desiderata could be to not increase people’s probability of dying, or to not hurt them physically. These desiderata will most of the time be in conflict with each other, and a bargain would need to be achieved. Davidad proposes to represent most of these desiderata as violations of Markov blankets. Most of those desiderata are formulated as negative constraints because we just want to avoid a catastrophe, not solve the full value problem. But some of the desiderata will represent the pivotal process that we want the model to accomplish.

- A preference-model version-control system: A dedicated version control software for preferences is required, particularly when new concepts are added to the world model. Similar to Git's "rebase" operation, previously written moral desiderata may need updating to accommodate new concepts.

- Generators: Pretrained language models provide initial heuristic ideas for the RL algorithm to bootstrap its policy search. This approach is akin to using an LLM within a robot to enhance its capabilities (e.g., PALM-E). These heuristics are then refined through RL training.

Step 2: Devise a plan

- Policy Search Heuristics: These heuristics, produced by the generators, are used to initialize a neural network, which is then trained through RL training.

- (*) Model-Based RL: An RL agent, such as MuZero, is trained on the constructed world model, with a reward function derived from a combination of the desiderata. This model may also be time-bounded, possibly utilizing this framework [LW · GW].

- (*) Simulation and model checking: Once the RL agent achieves adequate performance, model checking procedures are initiated. Model checking must be guided by heuristics provided by LLMs (possibly prompted using natural language), drawing inspiration from model checking currently performed on smaller scales (e.g., COOL-MC, which allows proving properties on Atari RL agents). Existing techniques and heuristics for such verifications can be employed to fine-tune the LLMs. [More details [LW · GW]]

- Bargaining solver: Multiple policies will be generated, with the expected utility for each stakeholder calculated. A bargaining solver is then used to determine which policy to adopt.

Step 3 Examine the solution

- In this step, traditional approaches employed in other AI safety agendas are utilized to evaluate the solution, including interpretability and red teaming. Although interpretability may not be essential for averting existential risks if the model checking functions as intended, Davidad anticipates that at least one stakeholder would desire some level of interpretability.

Step 4 Carry out the plan

- (*) High-level Policy: A high-level policy is deployed, which can deploy and monitor narrow child RL agents. This high level policy can function almost like an AGI and is capable of writing reward specifications to train narrow task RL policies.

- Revert: The high-level policy can be reverted, meaning it can be deleted, and steps 1/2/3 can be repeated. This is useful in cases of buggy malfunctions, rather than misalignment.

- (*) Time-bounded Online RL: The high-level policy can write specifications that are used to train narrow agents. Reward functions are designed to turn negative after a certain time [LW · GW] if the agent doesn't stop and transition gracefully to a shutdown state. Training for the narrow agents occurs in simulators that run small subsystems of the overall formal world model, but at a higher resolution. The high-level policy has a longer time-bounded period (e.g., a week) compared to the narrow agents (e.g., a minute). This time-bounded property allows for restarting everything if the outcome is unsatisfactory after the time limit. The ideal scenario also involves other forms of boundedness:

- Spatial boundedness within a specific subsystem of the overall environment.

- Role boundedness to work at a particular level of abstraction.

- Complexity boundedness by deferring higher-dimensional perceptual and motor tasks to smaller roles.

- Influence boundedness by deploying multiple policies that need to agree (similar to triple redundancy in avionics).

- Ambition boundedness by respecting the boundaries of existing entities.

- Task-specific Policies: These low-level policies result from time-bounded RL training and are responsible for executing small tasks, such as writing code for a program, performing a robotic manipulation, or conversing with a patient.

- Sensor Fusion and State Estimation: Narrow RL agents deployed in the real world are equipped with cameras and other sensors to gather information. The data coming from these sensors first go through some modules doing sensor fusion and state estimation, before being sent to the agent. Compartmentalizing the task of estimating the state of the world adds an extra layer of robustness, though it might lead to reduced performance. In contrast, the high-level policy does not necessarily require sensors, as it primarily functions by writing specifications and receiving abstract feedback when goals are achieved or failed by the narrow policies.

- (To improve the overall system, state estimation should be provided in an infra-Bayesian format, ensuring effective communication between the high-level policy and the narrow policies.)

Hypotheses discussion

Scientific Sufficiency Hypothesis: This hypothesis posits that we will be able to create a simulation of the world that is precise enough so that verifying a model's behavior within the simulation would ensure its proper behavior in the real world. More specifically, as we are operating in an infra-Bayesian setting, we need a large enough set of world models to ensure that reality is not too distant. Additionally, this hypothesis includes the assumption that the simulation will not be excessively computationally demanding since we intend to train a model on it. Davidad agrees that this project would be one of humanity's most significant science projects, but he believes it would still be less costly than the Large Hadron Collider.

There are several challenges associated with the Scientific Sufficiency Hypothesis:

- Completeness: The world is incredibly complex, and to create a sufficiently precise model, we might need a large portion of all human knowledge. This represents an immense amount of data, even with the assistance of LLMs. However, a sufficient model does not necessarily require solving all scientific domains. For instance, we don't need advanced physics because an AI that passes the model checking step will be very cautious and unlikely to engage in risky physics experiments. If there is a risk that the AI might interfere with experiments conducted at CERN, the model checking process would prevent it from doing so, even without a unified understanding of physics. We only need to model quantum chemistry and higher levels. But even when restricting ourselves to levels above quantum chemistry, there are still numerous scales to consider, each requiring a separate scientific theory. It is not computationally feasible to simulate an entire room from the molecular level. Furthermore, it remains unclear how much of the biosphere and human civilization must be simulated for this plan to succeed.

- Formalization: Much of our current knowledge about the world is not in a readily computable form. It would need to be translated into a computable form before it could be used in this context. Although this is a challenging task, there is already some prior work demonstrating the feasibility of automatic translation from natural language to a formal language, which offers some hope for this endeavor. [More details [LW · GW]]

- Lack of computational Infra-Bayes prior works: As far as the authors are aware, no infra-bayesian simulation has been carried out before, especially not one of this magnitude. As a result, it remains unclear how we could train a model on such a simulation and whether it would even be computationally feasible.

- Flexible meta-ontology: The system's underlying meta-ontology must be both expansive and adaptable to accommodate the diverse models and theories required for the simulation. This would be similar to creating a new programming language that efficiently incorporates the concept of time. To achieve this, Davidad is exploring novel category theoretic ideas in the attempt to unify in a single formalism:

- random differential equations

- stochastic differential equations

- stochastic partial differential equations

- continuous-time Markov chains

- discrete-time Markov chains

- probabilistic graphical models

- probabilistic logic programming

- Difficulty of understanding the framework: The framework would necessitate input from numerous experts. However, due to the complexity of the meta-ontology, they may encounter difficulties when verifying the work, particularly as it will be in an infra-bayesian format. Vanessa claims that only three people worldwide fully understand the infra-Bayesian sequence [LW · GW], which poses a significant obstacle for establishing a large-scale international project. However, some efforts show that it’s possible to develop software, such as AlgebraicJulia, that tries to offer both the versatility of category theory and user-friendliness for non-mathematicians.

We believe that addressing the “Lack of computational Infra-bayes prior works” and “Flexible meta-ontology” concerns is crucial before delving too deep into this plan, as they can be tackled independently of the other aspects.

Deontic Sufficiency Hypothesis: This hypothesis posits that it is possible to identify desiderata that are adequate to ensure the model doesn't engage in undesirable behavior. Davidad is optimistic that it's feasible to find desiderata ensuring safety for a few weeks before a better solution is discovered, making this a weaker approach than solving outer alignment. For instance, Davidad suggests that even without a deep understanding of music, you can be confident your hearing is safe by ensuring the sound pressure level remains below 80 decibels. However, since the model would still be executing a pivotal process with significant influence, relying on a partial solution for decades could be risky. [More details [LW · GW]]

Model-Checking Feasibility Hypothesis: This hypothesis states that it should be possible to prove bounds on the values the model will achieve for the desiderata. Given the task's magnitude, model-checking would mostly be done automatically. In the last few years, we have seen immense progress in the verification of neural networks. In 2017, the best methods were able to verify a hundred neurons, now in 2022, via branch and bound techniques, we can now verify networks of a million neurons [More details]. Currently, model-checking for RL agents is limited to simple environments, and the authors are uncertain if it can be scaled up within just a few years.

Time bounded Optimization Thesis: This hypothesis proposes that we can discover training techniques and reward functions that encourage time-bounded optimization behavior. A suggestion in this direction is provided here [LW · GW]. This hypothesis allows us to bypass the problem of corrigibility quite simply: “we can define time-inhomogeneous reward [i.e. the reward becomes negative after a time-limit], and this provides a way of "composing" different reward functions; while this is not a way to build a shutdown button, it is a way to build a shutdown timer, which seems like a useful technique in our safety toolbox.”.

About Category theory and Infra-Bayesianism

Why Infra-Bayesianism: We want the world model we create to be accurate and resilient when facing uncertainty and errors in modeling, since we want it to perform well in real-world situations. Infra-bayesianism offers a way to address these concerns.

- Worst case assurance: One of the primary goals is to achieve a level of worst-case assurance. Infra-bayesianism provides tools to manage multiple world models simultaneously and calculate the expected value for the worst-case scenario.

- Knightian uncertainty: This approach also allows us to handle situations where quantifying uncertainty is not feasible in a purely Bayesian way. For instance, when analyzing price time series, we can apply the Black-Scholes Model, but we must also acknowledge the existence of black swan events. Although we cannot assign a probability to such events, we must integrate the possibility of a black swan crisis into our analysis. We can automate the world modeling process by removing the subjective aspect of measuring uncertainty between different theories, i.e. we don’t have to put a probability on everything. Although it does not solve the problem of unknown unknowns (facts about the world that we don’t even consider or think about, because of our limited cognition or knowledge), it helps us work with known unknowns that are difficult to assign probabilities to.

Why Category Theory: A key to effectively understanding the world may lie in exploring relationships and mappings. Functional programming and category theory are promising options for this task. Category theory enables us to represent complex relationships across various levels of abstraction, which is crucial for constructing a world model that incorporates different competitive theories at different scales of size and time in a collaborative manner. Moreover, it is useful to express infra-bayesianism within a category-theoretic framework. The main bottleneck currently appears to be creating an adequate meta-ontology using category theory. [More details here [LW · GW], and here]

High level criticism

Here are our main high level criticisms about the plan:

- High alignment tax: The process of training a model using this approach is expensive and time-consuming. To implement it, major labs would need to agree on halting the development of increasingly larger models for at least a year or potentially longer if the simulation proves to be computationally intensive.

- Very close to AGI: Because this approach is more expensive, we need to be very close to AGI to complete this plan. If AGI is developed sooner than expected or if not all major labs can reach a consensus, the plan could fail rapidly. The same criticisms as those written to OpenAI could be applied here. See Akash's criticisms [LW · GW].

- A lot of moving pieces: The plan is intricate, with many components that must align for it to succeed. This complexity adds to the risk and uncertainty associated with the approach.

- Political bargain in place of outer alignment: Instead of achieving outer alignment, the model would be trained based on desiderata determined through negotiations among various stakeholders. While a formal bargaining solver would be used to make the final decision, organizing this process could prove to be politically challenging and complex:

- Who would the stake-holders be (countries, religions, ethnicities, companies, generations, people from the future, etc.)

- How would they be represented

- How each stake-holder would be weighted

- How to evaluate the losses and gains of each stake-holder in each scenario

- The resulting model, trained based on the outcome of these negotiations, would perform a pivotal process. While there is hope that most stakeholders would prioritize humanity's survival and that the red-teaming process included in this plan would help identify and eliminate harmful desiderata, the overall robustness of this approach remains uncertain.

- RL Limitations: While reinforcement learning has made significant advancements, it still has limitations, as evidenced by MuZero's inability to effectively handle games like Stratego. To address these limitations, assistance from large language models might be required to bootstrap the training process. However, it remains unclear how to combine the strengths of both approaches effectively—leveraging the improved reliability and formal verifiability offered by reinforcement learning while harnessing the advanced capabilities of large language models.

High Level Hopes

This plan has also very good properties, and we don’t think that a project of this scale is out of question:

- Past human accomplishments: Humans have already built very complex things in the past. Google Maps is an example of a gigantic project, and so is the LHC. Some Airbus aircraft models have never had severe accidents, nor have any of EDF’s 50+ nuclear power plants. We dream of a world where we launch aligned AIs as we have launched the International Space Station or the James Webb Space Telescope.

- Help from almost general AIs: This plan is impossible right now. But we should not underestimate what we will be able to do in the future with almost general AIs but not yet too dangerous, to help us iteratively build the world model.

- Positive Scientific externalities: This plan has many positive externalities: We expect to make progress in understanding world models and human desires while carrying out this plan, which could lead to another plan later and help other research agendas. Furthermore, this plan is particularly good at leveraging the academic world.

- Positive Governance externalities: The ambition of the plan and the fact that it requires an international coalition is interesting because this would improve global coordination around these issues and show a positive path that is easier to pitch than a slow-down. [More details [LW · GW]]. Furthermore, Davidad's plan is one of the few plans that solves accidental alignment problems as well as misuse problems, which would help to promote this plan to a larger portion of stakeholders.

- Davidad is really knowledgeable and open to discussion. We think that a plan like this is heavily tied to the vision of its inventor. Davidad’s knowledge is very broad, and he has experience with large-scale projects, in academics and in startups (he co-invented a successful cryptocurrency, he led a research project for the worm emulation at MIT). [More details]

Intuition pump for the feasibility of creating a highly detailed world model

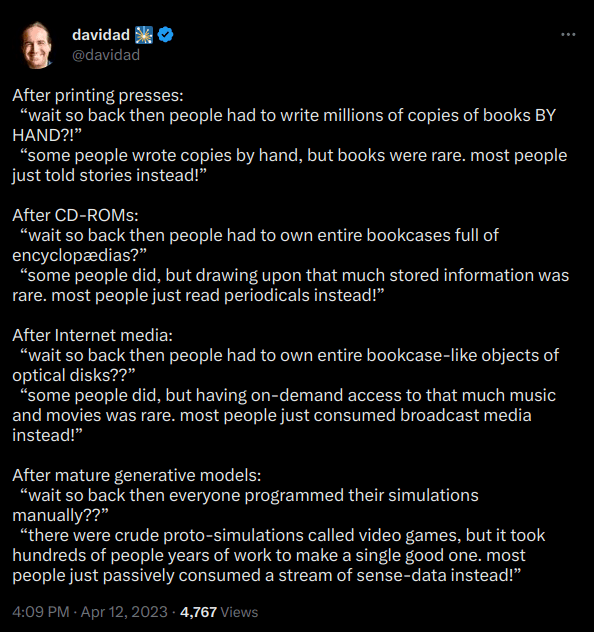

Here's an intuition pump to demonstrate that creating a highly detailed world model might be achievable: Humans have already managed to develop Microsoft Flight Simulator or The Sims. There is a level of model capability at which these models will be capable of rapidly coding such realistic video games. Davidad’s plan, which involves reviewing 2 million scientific papers (among which only a handful contain crucial information) to extract scientific knowledge, is only a bit more difficult, and seems possible. Davidad tweeted this to illustrate this idea:

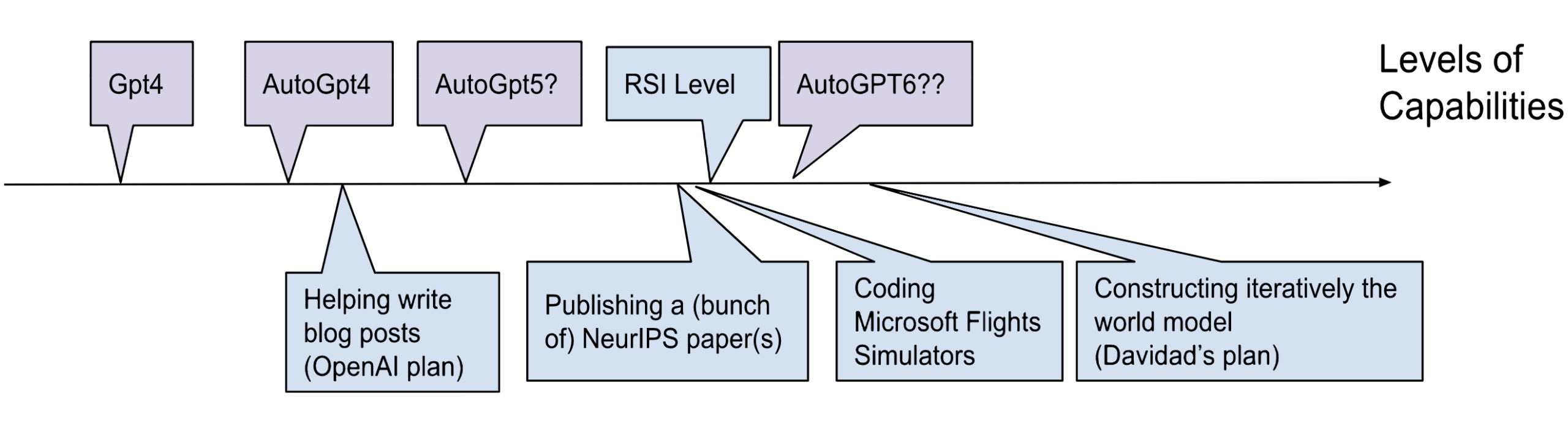

Comparison with OpenAI’s Plan

Comparison with OpenAI’s Plan: At least, David's plan is an object-level plan, unlike OpenAI's plan, which is a meta-level plan that delegates the role of coming up with a plan to smarter language models. However, this plan also requires very powerful language models to be able to formalize the world model, etc. Therefore, it seems to us that this plan also requires a level of capability that is also AGI. But at the same time, Davidad's plan might just be one of the plans that OpenAI's automatic alignment researchers could come up with. At least, davidad’s plan does not destroy the world with an AI race if it fails.

The main technical crux: We think the main difficulty is not this level of capability, but the fact that this level of capability is beyond the ability to publish papers at conferences like NeurIPS, which we perceive as the threshold for Recursive self-improvement. So this plan demands robust global coordination to avoid foom. And model helping at alignment research seems much more easily attainable than the creation of this world model, so OpenAI’s plan may still be more realistic.

Conclusion

This plan is crazy. But the problem that we are trying to solve is also crazy hard. The plan offers intriguing concepts, and an unorthodox approach is preferable to no strategy at all. Numerous research avenues could stem from this proposal, including automatic formalization and model verification, infra-Bayesian simulations, and potentially a category-theoretic mega-meta-ontology. As Nate Soares said : “I'm skeptical that davidad's technical hopes will work out, but he's in the rare position of having technical hopes plus a plan that is maybe feasible if they do work out”. We express our gratitude to Davidad for presenting this innovative plan and engaging in meaningful discussions with us.

EffiSciences played a role in making this post possible through their field building efforts.

Annex

Much of the content in this appendix was written by Davidad, and only lightly edited by us. The annex contains:

- A discussion of the gouvernance aspects of this plan

- A technical roadmap

- And some important testable first research projects to test parts of the hypotheses.

Governance strategy

Does OAA help with governance? Does it make certain governance problems easier/harder?

Here is davidad’s answer:

- OAA offers a concrete proposal for governance of a transformative AI deployment: it would elicit independent goal specifications and even differing world-models from multiple stakeholders and perform a bargaining solution over the Pareto frontier of multi-objective RL policies.

- While this does not directly address the governance of AI R&D, it does make the problem easier in several ways:

- It is more palatable or acceptable for relevant decision-makers to join a coalition that is developing a safer form of AI together (rather than racing) if there is a good story for how the result will be governed in a way that is better for everyone than the status quo (note: this argument relies on those decision-makers believing that in the status quo race, even if they win, the chance of existential catastrophe is nontrivial; I am more optimistic than some that this belief is already widespread among relevant decision-makers and others will be able to update enough).

- It provides a positive vision for how AI could actually go well—something to aim for, rather than just averting a risk.

- It offers a narrative about regulation or compute governance where the imposition of an OAA-style model doesn’t have to be just about “safety” or technical concerns but also about “fairness” or the public benefit

- Caveat: this approach requires not just imposing the OAA, but also saying something substantive about who gets to be high-bargaining-power stakeholders, e.g. citizens’ assemblies, elected representatives, etc.

- To the extent that early versions of OAA can already be useful in supporting collective decision-making about the allocation of R&D resources (time, money, compute) by helping stakeholders form a (low-complexity, but higher complexity than an intuitive “slowing down seems good/bad”) model of the situation and finding Pareto-optimal bargain policies, we can actually use OAA to do some of the governance of AI R&D

- My default modal guesses about which AI techniques will be useful in different boxes of the big OAA box diagram are pretty well spread out across the comparative advantages of different AI labs; this makes an Apollo-Program-style or ISS-style structure in which many companies/countries participate in an overall program more natural than with agendas that rely on tuning one single artifact (which would naturally end up more Manhattan-Project-style).

Roadmap

Here is the ambitious scaling roadmap where things play out as fast as possible is the following.

Stage 1: Early research projects

Timeline: Q3 2023. Tldr: Academic work done / PoC experiments

Experiment with the following internships (which are described in the last section of this annex):

- Heuristics used by the solver

- Figure out the meta ontology theory

- Building a toy infra-Bayesian “Super Mario”, and then applying this framework to modelize Smart Grids.

- Training LLMs to write models in the PRISM language by backward distillation

Succeed at How to fine-tune a language model as a heuristic for model-checking?

Stage 2: Industry actors first projects

Timeline: Q4 2023. Tldr: Get industry actors interested, starting projects inside labs.

Needs:

- Clear articulation of specific compute-bound projects which is a good fit for them. It will require a lot of basic thoughts. It will also require early results. (heuristics used by the solver, figure out the meta ontology, …)

- Succeed at How to fine-tune a language model as a heuristic for model-checking?

Stage 3: Labs commitments

Timeline: Late 2024 or 2025. We need to get to Stage 3 no later than 2028. Tldr: Make a kind of formal arrangement to get labs to collectively agree to increase their investment in OAA. This is the critical thing.

Needs:

- You need to have built a lot of credibility with them. The strong perception that this is a reasonable and credible solution.

- Hard part: People who have a lot of reputation on the inside who are already working on it.

- Multi-author manifesto (public position paper) which backs OAA with legendary names.

- You need to get introductions to the CEOs.

- Have a very clear commitment/ask.

- Having fleshed out the bargaining mechanism (a bit like the S-process)

Stage 4: International consortium to build OAA.

Timelines: In order for this to not feel like a slowdown to capabilities accelerationists, we should credibly reach Stage 4 by 2029. Start chat & planning for it in 2024.

Some important testable first research projects

Formalization of the world model

We can get started on this by using an existing modeling language, such as PRISM, and applying methodologies being used for autoformalization (e.g. “distilled back translation”: starting with a big dataset of models in the language, like MCC, using the LLM to translate them into natural language, and then fine-tuning on the transpose of that dataset to get it to learn the formal language).

Try to fine-tune a language model as a heuristic for model-checking

Prompting/fine-tuning/RL’ing LLMs to be useful assistants in writing (and extending) formal world models and specifications. Here would be the process:

- Step 1: Instrument (in the sense of adding systematic “debug statements” that output the intermediate states) the existing hand-crafted heuristics in a solver like Storm or Prism or Z3. For example, in storm, we could begin by adding a lot of printf in this heuristic.

- Step 2: Collect traces of what the heuristic output is on a wide variety of problems drawn from OpenAI Gym via COOL-MC, and/or in a benchmark that these solvers compete on (like MCC)

- Step 3: Fine-tune a language model on the input-output pairs in this corpus

- Step 4: Replace the hand-crafted heuristics with this language model

- Step 5: Begin training using RL with the reward signal being an exponentially-discounted successful problem solution (analogous to the binary reward of winning a Go game, etc.)

Defining a sufficiently expressive formal meta-ontology for world-models

Defining a sufficiently expressive formal meta-ontology for world-models with multiple scientific explanations at different levels of abstraction (and spatial and temporal granularity) having overlapping domains of validity, with all combinations of {Discrete, Continuous} and {time, state, space}, and using an infra-bayesian notion of epistemic state (specifically, convex compact down-closed subsets of subprobability space) in place of a Bayesian state. Here are 3 subjects of internship on this subtopic:

- A: unifying different formalisms of dynamical systems

- One very high-level problem statement: “Define a category which is both a tangent category in the sense of Cruttwell and also has a probability monad in the sense of Perrone.”

- This arXiv paper is extremely relevant — one open question is: is the category ωPAP defined here a tangent category already? (ωPAP Spaces: Reasoning Denotationally About Higher-Order, Recursive Probabilistic and Differentiable Programs)

- B: generalizing to infra Bayesianism using the monad defined by Mio in this paper.

- C: Continuous Time Coalgebras: It is known that discrete-time Markov processes are coalgebras for a probability monad. Such a coalgebra can be viewed as a functor from the one-object category ℕ to the Kleisli category of the probability monad. A “continuous time coalgebra” can be defined as a functor from the one-object category ℚ⁺ of non-negative rationals in place of ℕ (with the same codomain, the Kleisli category of the monad). Which concepts of coalgebra theory can be generalized to continuous time coalgebra? Especially, is there an analog to final coalgebras and their construction by Adamek's theorem?

Experimenting with the compositional version control system

“Developing version-control formalisms and software tools that decompose these models in natural ways and support building complex models via small incremental patches (such each patch is fully understandable by a single human who is an expert in the relevant domain).’ This requires leveraging theories like double-pushout rewriting and δ-lenses to develop a principled version-control system for collaborative and forking edits to world-models, multiple overlapping levels of abstraction, incremental compilation in response to small edits.

Getting traction on the deontic feasibility hypothesis

Davidad believes that using formalisms such as Markov Blankets would be crucial in encoding the desiderata that the AI should not cross boundary lines at various levels of the world-model. We only need to “imply high probability of existential safety”, so according to davidad, “we do not need to load much ethics or aesthetics in order to satisfy this claim (e.g. we probably do not get to use OAA to make sure people don't die of cancer, because cancer takes place inside the Markov Blanket, and that would conflict with boundary preservation; but it would work to make sure people don't die of violence or pandemics)”. Discussing this hypothesis more thoroughly seems important.

Some other projects

- Experiment with the Time bounded Optimization Thesis with some RL algorithms.

- Try to create a toy infra-Bayesian “Super Mario” to play with infra Bayesianism in a computational setting. Then Apply this framework to modelize Smart Grids.

- A natural intermediary step would be to scale the process that produced formally verified software (e.g. Everest, seL4, CompCert, etc.) by using parts of the OAA.

Types explanation

Explanation in layman's terms of the types in the main schema. Those notations are the same as those used in reinforcement learning.

- Formal model of the world: : Action, : Observation, : State

- is a pair of:

- Observation function: that transforms the states into partial observations .

- A transition model: , which transforms previous states into an infra-Bayesian probability distribution of possible next states .

- Formal desirabilities (Bolker-Jeffrey)

- Trajectory: is a sequence of states and actions

- Desiderata: is a function that tells us which sequences of states and actions are desirable.

- Value: gives a score to each desideratum (which is a little weird, and Davidad agreed that a list of pairs would be more natural).

- Policy:

- Takes in a sequence of actions and observations and returns a probability distribution over possible actions.

- Note: this model is not Markovian, but everything here is classical.

- Certificate proving formal guarantees:

- We want proofs that all desiderata are respected with a probability in the interval .

40 comments

Comments sorted by top scores.

comment by ryan_greenblatt · 2025-01-13T23:48:05.671Z · LW(p) · GW(p)

At the time when I first heard this agenda proposed, I was skeptical. I remain skeptical, especially about the technical work that has been done thus far on the agenda[1].

I think this post does a reasonable job of laying out the agenda and the key difficulties. However, when talking to Davidad in person, I've found that he often has more specific tricks and proposals than what was laid out in this post. I didn't find these tricks moved me very far, but I think they were helpful for understanding what is going on.

This post and Davidad's agenda overall would benefit from having concrete examples of how the approach might work in various cases, or more discussion of what would be out of scope (and why this could be acceptable). For instance, how would you make a superhumanly efficient (ASI-designed) factory that produces robots while proving safety? How would you allow for AIs piloting household robots to do chores (or is this out of scope)? How would you allow for the AIs to produce software that people run on their computers or to design physical objects that get manufactured? Given that this proposal doesn't allow for safely automating safety research, my understanding is that it is supposed to be a stable end state. Correspondingly, it is important to know what Davidad thinks can and can't be done with this approach.

My core disagreements are on the "Scientific Sufficiency Hypothesis" (particularly when considering computational constraints), "Model-Checking Feasibility Hypothesis" (and more generally on proving the relevant properties), and on the political feasibility of paying the needed tax even if the other components work out. It seems very implausible to me that making a sufficiently good simulation is as easy as building the Large Hadron Collider. I think the objection in this comment [LW(p) · GW(p)] holds up (my understanding is Davidad would require that we formally verify everything on the computer).[2]

As a concrete example, I found it quite implausible that you could construct and run a robot factory that is provably safe using the approach outlined in this proposal, and this sort of thing seems like a minimal thing you'd need to be able to do with AIs to make them useful.

My understanding is that most technical work has been on improving mathematical fundamentals (e.g. funding logicians and category theorists to work on various things). I think it would make more sense to try to demonstrate overall viability with minimal prototypes that address key cruxes. I expect this to fail and thus it would be better to do this earlier. If you expect these prototypes would work, then it seems interesting to demonstrate this as many people would find this very surprising. ↩︎

This is mostly unrelated, but when talking with Davidad, I've found that a potential disagreement is that he's substantially more optimistic about using elicitation to make systems that currently seem quite incapable (e.g., GPT-4) very useful. As a concrete example, I think we disagreed about the viability of running a fully autonomous Tesla factory for 1 year at greater than one-tenth productivity using just AI systems created prior to halfway through 2024. (I was very skeptical.) It's not exactly clear to me how this is a crux for the overall plan (beyond getting a non-sabotaged implementation of simulations) given that we still are aiming to prove safety either way, and proving properties of GPT-4 is not clearly much easier than proving properties of much smarter AIs. (Apologies if I've just forgotten.) ↩︎

comment by So8res · 2023-04-19T18:04:38.867Z · LW(p) · GW(p)

(For context vis-a-vis my enthusiasm about this plan, see this comment [LW(p) · GW(p)]. In particular, I'm enthusiastic about fleshing out and testing some specific narrow technical aspects of one part of this plan. If that one narrow slice of this plan works, I'd have some hope that it can be parlayed into something more. I'm not particularly compelled by the rest of the plan surrounding the narrow-slice-I-find-interesting (in part because I haven't looked that closely at it for various reasons), and if the narrow-slice-I-find-interesting works out then my hope in it mostly comes from other avenues. I nevertheless think it's to Davidad's credit that his plan rests on narrow specific testable technical machinery that I think plausibly works, and has a chance of being useful if it works.)

comment by simeon_c (WayZ) · 2023-04-19T16:33:28.140Z · LW(p) · GW(p)

Thanks for writing that up Charbel & Gabin. Below are some elements I want to add.

In the last 2 months, I spent more than 20h with David talking and interacting with his ideas and plans, especially in technical contexts.

As I spent more time with David, I got extremely impressed by the breadth and the depth of his knowledge. David has cached answers to a surprisingly high number of technically detailed questions on his agenda, which suggests that he has pre-computed a lot of things regarding his agenda (even though it sometimes look very weird on first sight). I noticed that I never met anyone as smart as him.

Regarding his ability to devise a high level plan that works in practice, David has built a technically impressive crypto (today ranked 22nd) following a similar methodology, i.e. devising the plan from first principles.

Finally, I'm excited by the fact that David seems to have a good ability to build ambitious coalitions with researchers, which is a great upside for governance and for such an ambitious proposal. Indeed, he has a strong track record of convincing researchers to work on his stuff after talking for a couple hours, because he often has very good ideas on their field.

These elements, combined with my increasing worry that scaling LLMs at breakneck speed is not far from certain to kill us, make me want to back heavily this proposal and pour a lot of resources into it.

I'll thus personally dedicate in my own capacity an amount of time and resources to try to speed that up, in the hope (10-20%) that in a couple of years it could become a credible proposal as an alternative to scaled LLMs.

comment by Fabien Roger (Fabien) · 2024-04-10T21:36:39.470Z · LW(p) · GW(p)

I don't think I understand what is meant by "a formal world model".

For example, in the narrow context of "I want to have a screen on which I can see what python program is currently running on my machine", I guess the formal world model should be able to detect if the model submits an action that exploits a zero-day that tampers with my ability to see what programs are running. Does that mean that the formal world model has to know all possible zero-days? Does that mean that the software and the hardware have to be formally verified? Are formally verified computers roughly as cheap as regular computers? If not, that would be a clear counter-argument to "Davidad agrees that this project would be one of humanity's most significant science projects, but he believes it would still be less costly than the Large Hadron Collider."

Or is the claim that it's feasible to build a conservative world model that tells you "maybe a zero-day" very quickly once you start doing things not explicitly within a dumb world model?

I feel like this formally-verifiable computers claim is either a good counterexample to the main claims, or an example that would help me understand what the heck these people are talking about.

Replies from: Paul W↑ comment by Paul W · 2024-05-16T08:25:03.873Z · LW(p) · GW(p)

I believe that the current trends for formal verification, say, of traditional programs or small neural networks, are more about conservative overapproximations (called abstract interpretations). You might want to have a look at this: https://caterinaurban.github.io/pdf/survey.pdf

To be more precise, it appears that so-called "incomplete formal methods" (3.1.1.2 in the survey I linked) are more computationally efficient, even though they can produce false negatives.

Does that answer your question ?

↑ comment by Fabien Roger (Fabien) · 2024-05-17T12:51:09.460Z · LW(p) · GW(p)

Not entirely. This makes me slightly more hopeful that we can have formal guarantees of computer systems, but is the field advanced enough that it would be feasible to have a guaranteed no-zero-day evaluation and deployment codebase that is competitive with a regular codebase? (Given a budget of 1 LHC for both the codebase inefficiency tax + the time to build the formal guarantees for the codebase.)

(And computer systems are easy mode, I don't even know how you would start to build guarantees like "if you say X, then it's proven that it doesn't persuade humans of things in ways they would not have approved of beforehand.")

Replies from: Paul W↑ comment by Paul W · 2024-05-18T16:04:12.025Z · LW(p) · GW(p)

Is the field advanced enough that it would be feasible to have a guaranteed no-zero-day evaluation and deployment codebase that is competitive with a regular codebase?

As far as I know (I'm not an expert), such absolute guarantees are too hard right now, especially if the AI you're trying to verify is arbitrarily complex. However, the training process ought to yield an AI with specific properties. I'm not entirely sure I got what you meant by "a guaranteed no-zero-day evaluation and deployment codebase". Would you mind explaining more ?

"Or is the claim that it's feasible to build a conservative world model that tells you "maybe a zero-day" very quickly once you start doing things not explicitly within a dumb world model?"

I think that's closer to the idea: you {reject and penalize, during training} as soon as the AI tries something that might be "exploiting a zero-day", in the sense that the world-model can't rule out this possibility with high confidence[1]. That way, the training process is expected to reward simpler, more easily verified actions.

Then, a key question is "what else you do want from your AI ?": of course, it is supposed to perform critical tasks, not just "let you see what program is running"[2], so there is tension between the various specifications you enter. The question of how far you can actually go, how much you can actually ask for, is both crucial, and wide open, as far as I can tell.

- ^

Some of the uncertainty lies in how accurate and how conservative the world-model is; you won't get a "100% guarantee" anyway, especially since you're only aiming for probabilistic bounds within the model.

- ^

Otherwise, a sponge would do.

↑ comment by Fabien Roger (Fabien) · 2024-05-20T12:06:29.283Z · LW(p) · GW(p)

I was thinking that the formal guarantees would be about state evaluations (i.e. state -> badness bounds) - which would require sth like "showing there is no zero-day" (since "a code-base with a zero-day" might be catastrophically bad if no constraints are put on actions). Thanks for pointing out they can be about action (i.e. (state, action) -> badness bounds), which seem intuitively easier to get good bounds for (you don't need to show there are no zero-days, just that the currently considered action is extremely unlikely to exploit a potential zero-day).

I'd be curious to know what kind of formal process could prove that (codebase, codebase-interaction) pairs are provably not-bad (with high probability, and with a false positive rate low enough if you trained an AI to minimize it). My guess is that there is nothing like that on the horizon (that could become competitive at all), but I could be wrong.

("let you see what program is running" was an example of a very minimal safety guarantee I would like to have, not a representative safety guarantee. My point is that I'd be surprised if people got even such a simple and easy safety guarantee anytime soon, using formal methods to check AI actions that actually do useful stuff.)

comment by dr_s · 2023-04-20T12:19:10.001Z · LW(p) · GW(p)

We only need to model quantum chemistry and higher levels.

As someone with years of practical experience in quantum chemistry simulation, you can't understate how much heavy lifting that "only" is doing here. We are not close, not even remotely close, not even we-can-at-least-see-it-on-the-horizon close to the level of completeness required here. For a very basic example, we can't even reliably and straightforwardly predict via quantum simulations whether a material will be a superconductor. Even guessing what quantum mechanics does to the dynamics of atomic nuclei is crazy hard and expensive, I'm talking days and days of compute on hundreds of cores thrown at a single cube of 1 nm side.

The problem here is that the reason why we'd want ASI is because we expect it might see patterns where we don't, and thread the needle of discovery in the hyperdimensional configuration space of possibility without having to brute force its way through it. But we have to brute force it, right now. If ASI found a way to make nanomachines that relies on more exotic principles than basic organic chemistry, or is subtly influenced by some small effect of dispersion forces that can't be reliably simulated with our usual approximations, then we'd need to be able to simulate theory to at least that level to get at that point of understanding. We need ASI to interpret what ASI is doing efficiently...

My immediate impression is that this doesn't blast the whole plan open. I think you can reasonably decouple the social, economical and moral aspect of the model and the scientific one. The first one is also hard to pin down, but for very different reasons, and I think we might make some progress in that sense. It's also more urgent, because current LLMs aren't particularly smart at doing science, but they're already very expert talkers (and bullshitters). Then we just don't let the AI directly perform scientific experiments. Instead, we have it give us recipes, together with a description of what they are expected to do, and the AI's best guess of their effect on society and why they would be beneficial. If the AI is properly aligned to the social goals, which it should be at this point if it has been developed iteratively within the bounds of this model, it shouldn't straight up lie. Any experiments are then to be performed with high levels of security, airgaps, lockdown protocols, the works. As we go further, we might then incorporate "certified" ASIs in the governance system to double-check any other proposals from different ASIs, and so on so forth.

IMO that's as good as it gets. If the values and the world model of the AI are reliable, then it shouldn't just create grey goo and pass it as a new energy technology. It shouldn't do it out of malice, and shouldn't do it by mistake, especially early on when its scientific capabilities would still be relatively limited. At that point of course developing AI tools to e.g. solve the quantum structure and dynamics of a material without having to muck about with DFT, quantum Monte Carlo or coupled cluster simulations would have to be a priority (both for the model's sake and because it would be mighty useful). And if it turns out that's just not possible, then no ASI should be able to come up with anything so wild we can't double check it either.

Replies from: Roman Leventov↑ comment by Roman Leventov · 2023-09-18T17:04:44.360Z · LW(p) · GW(p)

"Solving quantum chemistry" is not the domain of ASI, it's a task for a specialised model, such as AlphaFold. An ASI, it if need to solve quantum chemistry, would not "cognise" it directly (or "see patterns" in it) but rather develop an equivalent of AlphaFold for quantum chemistry, potentially including quantum computers into its R&D program plan.

comment by Charlie Sanders (charlie-sanders) · 2023-04-20T12:47:44.808Z · LW(p) · GW(p)

My intuition is that a simulation such as the one being proposed would take far longer to develop than the timeline outlined in this post. I’d posit that the timeline would be closer to 60 years than 6.

Also, a suggestion for tl;dr: The Truman Show for AI.

Replies from: PeterMcCluskey, alexander-gietelink-oldenziel, jacob_cannell, charbel-raphael-segerie↑ comment by PeterMcCluskey · 2023-04-20T19:03:36.129Z · LW(p) · GW(p)

Agreed.

Davidad seems to be aiming for what I'd call infeasible rigor, presumably in hope of getting something that would make me more than 95% confident of success.

I expect we could get to 80% confidence with this basic approach, by weakening the expected precision of the world model, and evaluating the AI on a variety of simulated worlds, to demonstrate that the AI's alignment is not too sensitive to the choice of worlds. Something along the lines of the simulations in Jake Cannell's LOVE in a simbox [LW(p) · GW(p)].

Is 80% confidence the best we can achieve? I don't know.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-04-21T16:29:57.314Z · LW(p) · GW(p)

My understanding is that the world model is more like a very coarse projection of the world than a simulation

It's not the case that the AGI has to be fooled into thinking the simulation is real like in the Truman Show (I like name tho!).

Davidad only tries to achieve 'safety' - not alignment. Indeed the AI may be fully unaligned.

The proposal is different from simulation propoals like Jacob Cannell's LOVE in a simbox where one tries to align the values of the AI.

In davidads proposal the actual AGI is physically boxed and cannot interact with the world except through proposing policies inside this worldmodel (which get formally checked in the second stage).

One way of thinking about is that davidads proposal is really an elaborate boxing protocol but there are multiple boxes here:

The physical Faraday cage that houses the hardware The interface constraint that constraints the AI to only output into the formal world model The formal cage that is achieved by verifying the behaviour through mathmagic.

Although the technical challenges seems daunting, especially on such short timelines this is not where I am most skeptical. The key problem, like all boxing proposals, is more of a governance and coordination problem.

↑ comment by jacob_cannell · 2023-04-21T17:17:01.165Z · LW(p) · GW(p)

Generally agree with that intuition, but as others point out the real Truman Show for AI is simboxing (which is related [LW(p) · GW(p)], but focuses more on knowledge containment to avoid deception issues during evaluations).

Davidad is going for more formal safety where you can mostly automatically verify all the knowledge in the agent's world model, presumably verify prediction rollouts, verify the selected actions correspond to futures that satisfy the bargaining solution, etc etc. The problem is this translates to a heavy set of constraints on the AGI architecture.

LOVES is instead built on the assumption that alignment can not strongly dictate the architecture - as the AGI architecture is determined by the competitive requirements of efficient intelligence/inference (and so is most likely a large brain-like ANN). We then search for the hyperparams and minimal tweaks which maximize alignment on top of that (ANN) architecture.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2023-04-20T19:56:30.716Z · LW(p) · GW(p)

I don't have the same intuition for the timeline, but I really like the tl;dr suggestion!

comment by Charbel-Raphaël (charbel-raphael-segerie) · 2025-01-03T16:58:35.724Z · LW(p) · GW(p)

Ok, time to review this post and assess the overall status of the project.

Review of the post

What i still appreciate about the post: I continue to appreciate its pedagogy, structure, and the general philosophy of taking a complex, lesser-known plan and helping it gain broader recognition. I'm still quite satisfied with the construction of the post—it's progressive and clearly distinguishes between what's important and what's not. I remember the first time I met Davidad. He sent me his previous post [LW · GW]. I skimmed it for 15 minutes, didn't really understand it, and thought, "There's no way this is going to work." Then I reconsidered, thought about it more deeply, and realized there was something important here. Hopefully, this post succeeded in showing that there is indeed something worth exploring! I think such distillation and analysis are really important.

I'm especially happy about the fact that we tried to elicit as much as we could from Davidad's model during our interactions, including his roadmap and some ideas of easy projects to get early empirical feedback on this proposal.

Current Status of the Agenda.

(I'm not the best person to write this, see this as an informal personal opinion)

Overall, Davidad performed much better than expected with his new job as program director in ARIA and got funded 74M$ over 4 years. And I still think this is the only plan that could enable the creation of a very powerful AI capable of performing a true pivotal act to end the acute risk period, and I think this last part is the added value of this plan, especially in the sense that it could be done in a somewhat ethical/democratic way compared to other forms of pivotal acts. However, it's probably not going to happen in time.

Are we on track? Weirdly, yes for the non-technical aspects, no for the technical ones? The post includes a roadmap with 4 stages, and we can check if we are on track. It seems to me that Davidad jumped directly to stage 3, without going through stages 1 and 2. This is because of having been selected as research director for ARIA, so he's probably going to do 1 and 2 directly from ARIA.

- Stage 1 Early Research Projects is not really accomplished:

- “Figure out the meta ontology theory”: Maybe the most important point of the four, currently WIP in ARIA, but a massive team of mathematicians has been hired to solve this.

- “Heuristics used by the solver”: Nope

- “Building a toy infra-Bayesian "Super Mario", and then applying this framework to model Smart Grids”: Nope

- “Training LLMs to write models in the PRISM language by backward distillation”: Kind of already here, probably not very high value to spend time here, I think this is going to be solved by default.

- Stage 2: Industry actors' first projects: I think this step is no longer meaningful because of ARIA.

- Stage 3: formal arrangement to get labs to collectively agree to increase their investment in OAA, is almost here, in the sense that Davidad got millions to execute this project in ARIA and he published his Multi-author manifesto which backs the plan with legendary names especially with Yoshua Bengio as the scientific director of this project.

The lack of prototyping is concerning. I would have really liked to see an "infra-Bayesian Super Mario" or something similar, as mentioned in the post. If it's truly simple to implement, it should have been done by now. This would help many people understand how it could work. If it's not simple, that would reveal it's not straightforward at all. Either way, it would be pedagogically useful for anyone approaching the project. If we want to make these values democratic, etc.. It's very regrettable that this hasn't been done after two years. (I think people from the AI Objectives Institute tried something at some point, but I'm not aware of anything publicly available.) I think this complete lack prototypes is my number one concern preventing me from recommending more "safe by design" agendas to policymakers.

This plan was an inspiration for constructability: It might be the case that the bold plan could decay gracefully, for example into constructability [LW · GW], by renouncing formal verification and only using traditional software engineering techniques.

International coordination is an even bigger bottleneck than I thought. The "CERN for AI" isn't really within the Overton window, but I think this applies to all the other plans, and not just Davidad's plan. (Davidad made a little analysis of this aspect here).

At the end of the day: Kudos to Davidad for successfully building coalitions, which is already beyond amazing! and he is really an impressive thought leader. What I'm waiting to see for the next year is using AIs such as O3 that are already impressive in terms of competitive programming and science knowledge, and seeing what we can already do with that. I remain excited and eager to see the next steps of this plan.

Replies from: matolcsid, bgold↑ comment by David Matolcsi (matolcsid) · 2025-01-03T21:57:03.861Z · LW(p) · GW(p)

What is an infra-Bayesian Super Mario supposed to mean? I studied infra-Bayes under Vanessa for half a year, and I have no idea what this could possibly mean. I asked Vanessa when this post came out and she also said she can't guess what you might mean under this. Can you explain what this is? It makes me very skeptical that the only part of the plan I know something about seems to be nonsense.

Also, can you give more information ir link to a resource on what Davidad's team is currently doing? It looks like they are the best funded AI safety group that currently exist (except if you count Anthropic), but I never hear about them.

Replies from: quinn-dougherty↑ comment by Quinn (quinn-dougherty) · 2025-01-03T22:23:00.518Z · LW(p) · GW(p)

(i'm guessing) super mario might refer to a simulation of the Safeguarded AI / Gatekeeper stack in a videogame. It looks like they're skipping videogames and going straight to cyberphysical systems (1, 2).

Replies from: quinn-dougherty↑ comment by Quinn (quinn-dougherty) · 2025-01-16T00:53:53.699Z · LW(p) · GW(p)

Update: new funding call from ARIA calls out the Safeguarded/Gatekeeper stack in a video game directly

Creating (largely) self-contained prototypes/minimal-viable-products of a Safeguarded AI workflow, similar to this example but pushing for incrementally more advanced environments (e.g. Atari games).

↑ comment by Ben Goldhaber (bgold) · 2025-01-16T19:45:48.039Z · LW(p) · GW(p)

This post was one of my first introductions to davidad's agenda and convinced me that while yes it was crazy, it was maybe not impossible, and it led me to working on initiatives like the multi-author manifesto you mentioned.

Thank you for writing it!

comment by Ben Goldhaber (bgold) · 2023-04-27T14:17:33.934Z · LW(p) · GW(p)

First off thank you for writing this, great explanation.

- Do you anticipate acceleration risks from developing the formal models through an open, multilateral process? Presumably others could use the models to train and advance the capabilities of their own RL agents. Or is the expectation that regulation would accompany this such that only the consortium could use the world model?

- Would the simulations be exclusively for 'hard science' domains - ex. chemistry, biology - or would simulations of human behavior, economics, and politics also be needed? My expectation is that it would need the latter, but I imagine simulating hundreds of millions of intelligent agents would dramatically (prohibitively?) increase the complexity and computational costs.

↑ comment by Gabin (gabin-kolly) · 2023-05-02T23:08:39.148Z · LW(p) · GW(p)

- The formal models don't need to be open and public, and probably shouldn't be. Of course this adds a layer of difficulty, since it is harder to coordinate on an international scale and invite a lot of researchers to help on your project when you also want some protection against your model being stolen or published on the internet. It is perhaps okay if it is open source in the case where it is very expensive to train a model in this simulation and no other group can afford it.

- Good question. I don't know, and I don't think that I have a good model of what the simulation would look like. Here is what my (very simplified, probably wrong) model of Davidad would say:

- We only want to be really sure that the agent is locally nice. In particular, we want the agent to not hurt people (or perhaps only if we can be sure that there are good reasons, for example if they were going to hurt someone). The agent should not hurt them with weapons, or by removing the oxygen, or by increasing radiations. For that, we need to find a mathematical model of human boundaries, and then we need to formally verify that these boundaries will be respected. Since the agent is trained using time-bounded RL, after a short period of time it will not have any effect anymore on the world (if time-bounded RL indeed works), and the shareholders will be able to determine if the policy had a good impact on the world or not, and if not, train another agent and/or change the desiderata and/or improve the formal model. That's why it is more important to have a fine model of chemistry and physics, and we can do with a coarser model of economics and politics. In particular, we should not simulate millions of people.

- Is it reasonable? I don't know, and until I see this mathematical model of human boundaries, or a very convincing prototype, I'll be a bit skeptical.

comment by Chipmonk · 2023-05-04T17:10:45.056Z · LW(p) · GW(p)

I've compiled most if not all of everything Davidad has said about «boundaries» (which are mentioned in this post insofar as "deontic feasibility hypothesis" and "elicitors") to date here: «Boundaries and AI safety compilation [LW · GW]. Also see: «Boundaries» for formalizing a bare-bones morality [LW · GW]

comment by Dawn Drescher (Telofy) · 2023-11-23T12:59:46.947Z · LW(p) · GW(p)

Thanks so much for the summary! I'm wondering how this system could be bootstrapped in the industry using less powerful but current-levels-of-general AIs. Building a proof of concept using a Super Mario world is one thing, but what I would find more interesting is a version of the system that can make probabilistic safety guarantees for something like AutoGPT so that it is immediately useful and thus more likely to catch on.

What I'm thinking of here seems to me a lot like ARC Evals with probably somewhat different processes. Humans doing tasks that should, in the end, be automated. But that's just how I currently imagine it after a few minutes of thinking about it. Would something like that be so far from OAA to be uninformative toward the goal of testing, refining, and bootstrapping the system?

Unrelated: Developing a new language for the purpose of the world modeling would introduce a lot of potential for bugs and there'd be no ecosystem of libraries. If the language is a big improvement over other functional languages, has good marketing, and is widely used in the industry, then that could change over the course of ~5 years – the bugs would largely get found and an ecosystem might develop – but that seems very hard, slow, risky, and expensive to pull off. Maybe Haskell could do the trick too? I've done some correctness proofs of simple Haskell programs at the university, and it was quite enjoyable.

comment by Chipmonk · 2023-09-15T23:56:28.175Z · LW(p) · GW(p)

FWIW I find using the word "alignment" when specifically what you mean is "safety" to be confusing. I consider AI alignment to be "getting actively positive things that you want because of AI". I consider AI safety to be "getting no actively negative things to happen because of AI". And it seems that Davidad's approach is much more about safety than it is alignment [LW · GW].

comment by Charbel-Raphaël (charbel-raphael-segerie) · 2023-11-23T18:00:57.185Z · LW(p) · GW(p)

Here is a recent document with much more references:

And some highlights are available on this page:

comment by Ozyrus · 2023-04-20T05:22:00.338Z · LW(p) · GW(p)

Very interesting. Might need to read it few more times to get it in detail, but seems quite promising.

I do wonder, though; do we really need a sims/MFS-like simulation?

It seems right now that LLM wrapped in a LMCA [LW · GW] is how early AGI will look like. That probably means that they will "see" the world via text descriptions fed into them by their sensory tools, and act using action tools via text queries (also described here [LW · GW]).

Seems quite logical to me that this very paradigm in dualistic in nature. If LLM can act in real world using LMCA, then it can model the world using some different architecture, right? Otherwise it will not be able to act properly.

Then why not test LMCA agent using its underlying LLM + some world modeling architecture? Or a different, fine-tuned LLM.

↑ comment by Dalcy (Darcy) · 2023-05-12T02:59:57.679Z · LW(p) · GW(p)

I think the point of having an explicit human-legible world model / simulation is to make desideratas formally verifiable, which I don't think would be possible with a blackbox system (like LLM w/ wrappers).

comment by Review Bot · 2024-03-17T09:21:23.765Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by wassname · 2024-01-01T02:54:02.060Z · LW(p) · GW(p)

This system's complexity almost precludes the use of Python for modeling; a new functional programming language specifically designed for this task would likely be necessary, potentially incorporating category theory. Humans would verify the world model line by line.

Python is turing complete though so it should be enough. Of course we might prefer another language, especially one optimised for reading rather than writing, since that will be most of what humans do.

comment by wassname · 2024-01-01T02:52:36.682Z · LW(p) · GW(p)

To expedite the development of this world model using LLMs, methods such as this one could be employed.

Check out this World Model using transformers (IRIS-delta https://openreview.net/forum?id=o8IDoZggqO). It seems like a good place to start, and I've been tinkering. It's slow going because of my lack of compute however.

comment by Chipmonk · 2023-12-23T20:36:51.667Z · LW(p) · GW(p)

Getting traction on the deontic feasibility hypothesis

Was using the word "feasibility" here, instead of "sufficiency" like it is everywhere else in this post (and this post [AF(p) · GW(p)]) just an accidental oversight? Or somehow intentional?

Replies from: charbel-raphael-segerie↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2023-12-23T22:49:40.887Z · LW(p) · GW(p)

ah, no this is a mistake. Thanks

comment by Roman Leventov · 2023-09-18T16:54:51.131Z · LW(p) · GW(p)

I don't think deontic desiderata and constraints are practically sufficient to train (obtain) ethical behaviour that we would approve: virtue ethics (non-deontic lens on ethics, vs. deontology and consequentialism which are deontic) exists and is still viable for a reason. That being said, seeding the policy training stage with LLM-based heuristics could effectively play the role of "virtue ethics" here: literally, these LLMs could be prompted to suggest policies like a "benevolent steward of the civilisation". If my guess (that deontic desiderata and constraints are not enough) is true, than these heuristic policies should leave a non-trivial mark on the final trained policy, i.e., effectively select among desiderata-conforming policies.

RL Limitations: While reinforcement learning has made significant advancements, it still has limitations, as evidenced by MuZero's inability to effectively handle games like Stratego. To address these limitations, assistance from large language models might be required to bootstrap the training process. However, it remains unclear how to combine the strengths of both approaches effectively—leveraging the improved reliability and formal verifiability offered by reinforcement learning while harnessing the advanced capabilities of large language models.

In general, I think the place of RL policies in the OAA would be better served by hierarchical embedding predictive architecture with GFlowNets as planner (actor) policies [LW · GW]. This sidesteps the problem of "designing a reward function" because GFlowNet-EM algorithm (Zhang et al., 2022) can jointly train the policy and the energy (reward) function based on the feedback from the simulator and desiderata/constraints model.

In fact, the LLM-based "exemplary actor" [LW · GW] (which I suggested to "distill" into an H-JEPA with GFlowNet actors here [LW · GW]) could be seen as an informal model of both Compositional World Model and Policy Search Heuristics in the OAA. And the whole process that I described in that article could be used as the first "heuristical" sub-step in the Step 2 of the OAA, which would serve three different purposes: