An Open Agency Architecture for Safe Transformative AI

post by davidad · 2022-12-20T13:04:06.409Z · LW · GW · 22 comments

Edited to add (2024-03): This early draft is largely outdated by my ARIA programme thesis, Safeguarded AI. I, davidad, am no longer using "OAA" as a proper noun, although I still consider Safeguarded AI to be an open agency [LW · GW] architecture.

Note: This is an early draft outlining an alignment paradigm that I think might be extremely important; however, the quality bar for this write-up is "this is probably worth the reader's time" rather than "this is as clear, compelling, and comprehensive as I can make it." If you're interested, and especially if there's anything you want to understand better, please get in touch with me, e.g. via DM here [AF · GW].

In the Neorealist Success Model [AF · GW], I asked:

What would be the best strategy for building an AI system that helps us ethically end the acute risk period without creating its own catastrophic risks that would be worse than the status quo?

This post is a first pass at communicating my current answer.

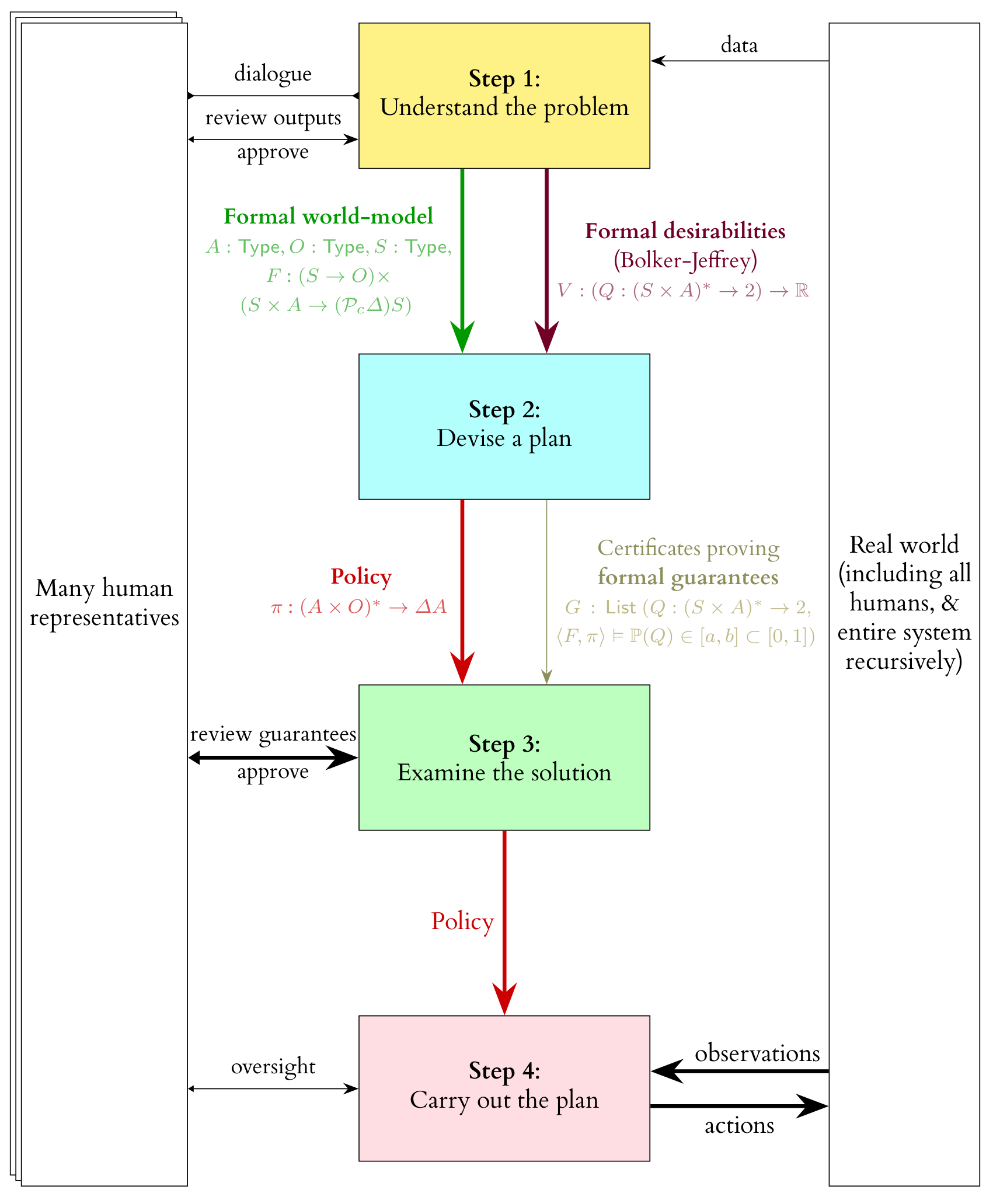

Bird's-eye view

At the top level, it centres on a separation between

- learning a world-model from (scientific) data and eliciting desirabilities (from human stakeholders)

- planning against a world-model and associated desirabilities

- acting in real-time

We see such a separation in, for example, MuZero, which can probably still beat GPT-4 at Go—the most effective capabilities do not always emerge from a fully black-box, end-to-end, generic pre-trained policy.

Hypotheses

- Scientific Sufficiency Hypothesis: It's feasible to train a purely descriptive/predictive infra-Bayesian[1] world-model that specifies enough critical dynamics accurately enough to end the acute risk period, such that this world-model is also fully understood by a collection of humans (in the sense of "understood" that existing human science is).

- MuZero does not train its world-model for any form of interpretability, so this hypothesis is more speculative.

- However, I find Scientific Sufficiency much more plausible than the tractability of eliciting latent knowledge [? · GW] from an end-to-end policy.

- It's worth noting there is quite a bit of overlap in relevant research directions, e.g.

- pinpointing gaps between the current human-intelligible ontology and the machine-learned ontology [LW(p) · GW(p)], and

- investigating natural abstractions [? · GW] theoretically and empirically.

- Deontic Sufficiency Hypothesis: There exists a human-understandable set of features of finite trajectories in such a world-model, taking values in $(-\infty, 0]$, such that we can be reasonably confident that all these features being near 0 implies high probability of existential safety, and such that saturating them at 0 is feasible[2] with high probability, using scientifically-accessible technologies.

- I am optimistic about this largely because of recent progress toward formalizing a natural abstraction of boundaries [AF · GW] by Critch [? · GW] and Garrabrant [AF · GW]. I find it quite plausible that there is some natural abstraction property of world-model trajectories that lies somewhere strictly within the vast moral gulf of

- Model-Checking Feasibility Hypothesis: It could become feasible to train RL policies such that a formally verified, infra-Bayesian, symbolic model-checking algorithm can establish high-confidence bounds on its performance relative to the world-model and safety desiderata, by using highly capable AI heuristics that can only affect the checker's computational cost and not its correctness—soon enough that switching to this strategy would be a strong Pareto improvement for an implementation-adequate coalition [AF · GW].

- Time-Bounded Optimization Thesis: RL settings can be time-bounded [AF · GW] such that high-performing agents avoid lock-in [AF · GW].

- I'm pretty confident of this.

- The founding coalition might set the time bound for the top-level policy to some number of decades, balancing the potential harms of certain kinds of lock-in for that period against the timelines for solving a more ambitious form of AI alignment.

If those hypotheses are true, I think this is a plan that would work. I also think they are all quite plausible (especially relative to the assumptions that underlie other long-term AI safety hopes), and that if any one of them fails, it would fail in a way that is detectable before deployment, turning an attempt to execute the plan into a flop rather than a catastrophe.

Fine-grained decomposition

This is one possible way of unpacking the four high-level components of an open agency architecture into somewhat smaller chunks. The more detailed things get, the less confident I currently am that such assemblages [LW · GW] are necessarily the best way to do things, but the process of fleshing things out in increasingly concrete detail at all has increased my confidence that the overall proposed shape of the system is viable.

Here's a brief walkthrough of the fine-grained decomposition:

- An agenda-setting system would help the human representatives come to some agreements about what sorts of principles and intended outcomes are even on the table to negotiate and make tradeoffs about.

- Modellers would be AI services that generate purely descriptive models of real-world data, inspired by human ideas, and use those models to iteratively grow a human-compatible and predictively useful formal infra-Bayesian ontology—automating a lot of the work of writing down sufficiently detailed, compositional, hierarchical world models and reducing the human cognitive load to something more like code review and natural-language feedback. Some of these services would be tuned to generate trajectories that are validated by the current formal model but would be descriptively very surprising to humans.

- A compositional-causal-model version-control system would track edits to different pieces of the model, their review status by different human stakeholders, where humans defer to each other and where they agree to disagree, and other book-keeping features of formal modelling in the large.

- Elicitors would be AI services that help humans to express their desiderata in the formal language of the world-model. Some of these would be tuned to generate trajectories that satisfy the current desiderata but seem like they would be normatively very disapproved-of by humans.

- A preference-model version-control system would be analogous to the causal model version-control system (but preference models may have different kinds of internal dependencies or compositionality than causal models, and will need to be "rebased" when the causal model they are relative to changes).

- Model-based RL would find policies that perform well on various weightings of formal preference specifications relative to a formal world model (which, since it is infra-Bayesian, is really a large class of world models).

- A verified simulator would provide pessimized rollouts to the RL algorithm; this is a little bit like adversarial training in that it synthesizes ways that the current policy could go wrong, but it would do this with formal guarantees, by using a certified branch-and-bound algorithm accelerated by AI heuristics.

- A bargaining solver calculates the utility to each stakeholder of deploying each policy (with varying tradeoffs between desiderata), and selects a Pareto-optimal bargaining solution (with randomization from a verifiable source like drand).

- A review process would provide stakeholders with decision-relevant reports, both about the currently proposed policy and about the status-quo trajectory of the world, and determine through some collective decision framework (weighted majority vote, or something that a weighted majority prefers to majority vote) whether the bargaining solution is ready to deploy.

- A deployed top-level policy would operate at a high level of abstraction (for computational scalability), delegating tasks requiring high perception bandwidth to very short-time-horizon, time-bounded scoped-task policies which are aligned to a reward function determined by the top-level policy itself, and delegating inference about the high-level world state to a large ensemble of state estimators and an infra-Bayesian form of sensor fusion (which enhances robustness and reduces the feasibility of tampering-style collusion[3]).
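The bargaining-solver step above can be illustrated with a small sketch. The post does not say which bargaining solution is intended, so this assumes the Nash bargaining solution over a finite list of candidate policies, with the status-quo trajectory as the disagreement point. The function name, the policy labels, and the utility numbers are all hypothetical, and the randomization over policies (e.g. from drand) is left out.

```python
from math import prod
from typing import Dict, List, Optional


def nash_bargaining_choice(
    utilities: Dict[str, List[float]],
    status_quo: List[float],
) -> Optional[str]:
    """Pick the candidate policy that maximizes the Nash product.

    utilities[name][i] is stakeholder i's utility if policy `name` is deployed;
    status_quo[i] is stakeholder i's utility if nothing is deployed.
    Assumption: Nash bargaining is used here only as an illustration.
    """
    best_name: Optional[str] = None
    best_product = 0.0
    for name, u in utilities.items():
        gains = [ui - di for ui, di in zip(u, status_quo)]
        # Only consider policies that leave every stakeholder strictly better off.
        if any(g <= 0 for g in gains):
            continue
        product = prod(gains)
        if product > best_product:
            best_name, best_product = name, product
    return best_name


# Hypothetical two-stakeholder example.
status_quo = [1.0, 1.0]
candidates = {
    "A": [5.0, 2.0],  # gains (4, 1), product 4
    "B": [3.0, 4.0],  # gains (2, 3), product 6
    "C": [6.0, 0.5],  # stakeholder 2 is worse off than the status quo
    "D": [2.0, 3.0],  # gains (1, 2), product 2; dominated by B
}
chosen = nash_bargaining_choice(candidates, status_quo)
print(chosen)
```

Any candidate that Pareto-dominates the chosen one would have a strictly larger Nash product, so the selected policy is Pareto-optimal among the candidates. A fuller version would optimize over lotteries rather than pure policies.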

At this point I defer further discussion to the comment section, which I will pre-emptively populate with a handful of FAQ-style questions and answers.

[1]

Here I mostly mean to refer to the concept of credal sets: a conservative extension of Bayesian probability theory which incorporates the virtues of interval arithmetic: representing uncertainty with ranges, generalized to closed convex regions (of higher-dimensional or infinite-dimensional spaces). Variants of this theory have been rediscovered many times (by Choquet, Dempster & Shafer, Williams, Kosoy & Appel, etc.) under various names ("imprecise probability", "robust Bayes", "crisp infradistributions [AF · GW]," etc.), each of which has some idiosyncratic features. In the past few years it has become very clear that convex subsets of probability distributions are the canonical monad for composing non-deterministic and probabilistic choice, i.e. Knightian uncertainty and Bayesian risk. Category theory has been used to purify the essential concepts there from the contradictory idiosyncratic features introduced by different discoverers—and to connect them (via coalgebra) to existing ideas and algorithms in model-checking. Incidentally, convex sets of probability distributions are also the central concept in the 2013 positive result on probabilistic reflective consistency by Christiano, Yudkowsky, Herreshoff and Barasz.

$\square$, seen in my type signature for formal world-model, is the notation for this monad (the monad of "crisp infradistributions" or "credal sets" or etc.), whereas $\Delta$ is a monad of ordinary probability distributions.

Infra-Bayesian physicalism [AF · GW] goes much farther than the decision theory of credal sets, in order to account for embedded agency [? · GW] via naturalized induction [? · GW], and casts all desirabilities in the form of irreducibly valuable computations. I think something in this direction is philosophically promising, and likely on the critical path to ultimate ambitious alignment solutions in the style of CEV or moral realism. But in the context of building a stop-gap transformative AI that just forestalls catastrophic risk while more of that philosophy is worked out, I think policies based on infra-Bayesian physicalism would fail to satisfy conservative safety properties due to unscoped consequentialism and situated awareness. It's also probably computationally harder to do this properly rather than just specifying a Cartesian boundary and associated bridge rules.
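To make the credal-set idea in this footnote concrete, here is a minimal sketch under strong simplifying assumptions: a finite outcome space, and a credal set given as the convex hull of finitely many probability vectors. Expectation is linear in the distribution, so its minimum and maximum over the hull are attained at the listed vertices. The function names and numbers are illustrative and do not come from any infra-Bayesianism library.

```python
from typing import Sequence

Distribution = Sequence[float]  # probabilities over a fixed finite outcome space


def expectation(p: Distribution, f: Sequence[float]) -> float:
    """Ordinary expected value of payoff f under a single distribution p."""
    return sum(pi * fi for pi, fi in zip(p, f))


def lower_expectation(vertices: Sequence[Distribution], f: Sequence[float]) -> float:
    """Worst-case expected value over the convex hull of `vertices`."""
    return min(expectation(p, f) for p in vertices)


def upper_expectation(vertices: Sequence[Distribution], f: Sequence[float]) -> float:
    """Best-case expected value over the convex hull of `vertices`."""
    return max(expectation(p, f) for p in vertices)


# Hypothetical example: three outcomes and a credal set spanned by two distributions.
vertices = [
    (0.5, 0.5, 0.0),
    (0.25, 0.25, 0.5),
]
payoff = (4.0, 0.0, -8.0)

lo = lower_expectation(vertices, payoff)  # 0.25*4 + 0.5*(-8) = -3.0
hi = upper_expectation(vertices, payoff)  # 0.5*4 = 2.0
print(lo, hi)
```

The interval from `lo` to `hi` is the analogue of an interval-arithmetic range: a single Bayesian prior would report one number, whereas the credal set reports the whole range consistent with the Knightian uncertainty.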

[2]

This is a simplification; for an initial fixed time-period post-deployment in which the agent is building planetary-scale infrastructure, only the agent's own actions' counterfactual impact on the features would be scored.

[3]

Actual tampering with physical sensors is already ruled out by model-checking with respect to the entire formal world-model, which is also the sole source of information for the central model-based RL optimizer.

22 comments

Comments sorted by top scores.

comment by So8res · 2023-03-04T20:10:06.712Z · LW(p) · GW(p)

Here's a recent attempt of mine at a distillation of a fragment of this plan, copied over from a discussion elsewhere:

goal: make there be a logical statement such that a proof of that statement solves the strawberries-on-a-plate problem (or w/e).

summary of plan:

- the humans put in a herculean effort to build a multi-level world-model that is interpretable to them (ranging from quantum chemistry at the lowest level, to strawberries and plates at the top)

- we interpret this in a very conservative way, as a convex set of models that hopefully contains something pretty close to reality.

- we can then form the logical statement "this policy puts two cellularly-but-not-molecularly identical strawberries on a plate (and has no other high-level effect) with pretty-high probability across all of those models simultaneously"

background context: there's some fancy tools with very nice mathematical properties for combining probabilistic reasoning and worst-case reasoning.

key hope: these tools will let us interpret this "manual multi-level world-model" in a way that keeps enough of the probabilistic nature for tractable policies to exist, and enough adversarial nature for this constraint to be pretty tight.

in more concrete terms: for any given UFAI, somewhere in the convex hull of all the interpretations of the manual multi-level world model, there's a model that sees (in its high-level) the shady shit that the UFAI was hoping to slip past us. So such "shady" policies fail in the worst-case, and fail to satisfy the theorem. But also enough of the probabilistic nature is retained that your policies don't need to handle the literal worst-cases of thermodynamic heat, and so there are some "reasonable" policies that could satisfy the theorem.

capabilities requirements: the humans need to be able to construct the world model; something untrusted and quite capable needs to search for proofs of the theorem; the policy extracted from said theorem is then probably an AGI with high capabilities but you've (putatively) proven that all it does is put strawberries on a plate and shut down so \shrug :crossed_fingers: hopefully that proof bound to reality.

(note: I'm simply attempting to regurgitate the idea here; not defend it. obvious difficulties are obvious, like "the task of finding such a policy is essentially the task of building and aligning an AGI" and "something that can find that policy is putting adversarial pressure on your theorem". even if proving the theorem requires finding a sufficiently-corrigible AGI, it would still be rad to have a logical statement of this form (and perhaps there's even some use to it if it winds up not quite rated for withstanding superintelligent adversaries?).)

Anticipating an obvious question: yes, I observed to Davidad that the part where we imagine convex sets of distributions that contain enough of the probabilistic nature to admit tractable policies and enough of the worst-case nature to prevent UFAI funny business is where a bunch of the work is being done, and that if it works then there should be a much smaller example of it working, and probably some minimal toy example where it's easy to see that the only policies that satisfy the analogous theorem are doing some new breed of optimization, that is neither meliorization nor satisfaction and that is somehow more mild. And (either I'm under the illusion of transparency or) Davidad agreed that this should be possible, and claims it is on his list of things to demonstrate.

(note: this is the fragment of Davidad's plan that I was able to distill out into something that made sense to me; i suspect he thinks of this as just one piece among many. I welcome corrections :-))

comment by jacob_cannell · 2023-04-20T17:45:20.905Z · LW(p) · GW(p)

OAASTA (Open Agency Architecture for Safe Transformative AI) has some overlap with my own approach outlined in LOVE in a simbox [LW · GW].

Both approaches are based on using extensive hierarchical world models/simulations, but OAASTA emphasizes formal verification of physics models whereas LOVES emphasizes highly scalable/performant simulations [LW · GW] derived more from video game tech (and the simplest useful LOVES sim could perhaps be implemented entirely with an LLM).

At a surface level OAASTA and LOVES are using their world models for different purposes: in LOVES the main sim world model is used to safely train and evaluate agent architectures, whereas in OAASTA the world model is used more directly for model-based RL. However, at a deeper level of inspection these could end up very similar: if you view the entire multi-agent system (of human orgs training AI archs in sims) in LOVES as a hierarchical agent, then the process of sim rollouts of training and evaluating internal sub-agents is equivalent to planning rollouts for the aggregate global hierarchical agent (because its primary action of consequence is creating new improved architectures and evaluating their alignment consequences). An earlier version of the LOVES post draft was more explicit about the hierarchical agent planning analogy.

I've also put some thought into what the resulting social decision system should look like assuming success (ie the bargaining solver), but that was beyond the scope of LOVES as I don't view that part as important as first solving the core of alignment.

LOVES implicitly assumes[1] shorter timelines [LW · GW] than OAASTA - so in that sense you could think of LOVES as a nearer term MVP streamlined to minimize alignment tax (but I'm already worried we don't have enough time for LOVES).

Beyond that the model of AGI informing LOVES assumes brain-like AGI and alignment, with the main artifact being powerful agents that love humanity and act independently to empower our future, but probably couldn't provide any formal proof of how their actions would fulfill formal goals.

I would be surprised if we don't have human surpassing AGI in the next 5 years. ↩︎

comment by davidad · 2022-12-20T13:17:16.331Z · LW(p) · GW(p)

What about inner misalignment?

Replies from: davidad↑ comment by davidad · 2022-12-20T13:21:09.044Z · LW(p) · GW(p)

Inner misalignment is a story for why one might expect capable but misaligned out-of-distribution behaviour [AF · GW], which is what's actually bad. Model-checking could rule that out entirely (relative to the formal specification), whether it's "inner misalignment" or "goal misgeneralization" or "deceptive alignment" or "demons in Solomonoff induction" or whatever kind of story might explain such output. Formal verification is qualitatively different from the usual game of debugging whack-a-mole that software engineers play to get software to behave acceptably.

comment by Charlie Steiner · 2022-12-23T15:12:53.720Z · LW(p) · GW(p)

Why a formal specification of the desired properties?

Humans do not carry around a formal specification of what we want printed on the inside of our skulls. So when presented with some formal specification, we would need to gain confidence that such a formal specification would lead to good things and not bad things through some informal process. There's also the problem that specifications of what we want tend to be large - humans don't do a good job of evaluating formal statements even when they're only a few hundred lines long. So why not just cut out the middleman and directly reference the informal processes humans use to evaluate whether some plan will lead to good things and not bad things?

Replies from: davidad↑ comment by davidad · 2022-12-23T22:36:14.490Z · LW(p) · GW(p)

The informal processes humans use to evaluate outcomes are buggy and inconsistent (across humans, within humans, across different scenarios that should be equivalent, etc.). (Let alone asking humans to evaluate plans!) The proposal here is not to aim for coherent extrapolated volition, but rather to identify a formal property (presumably a conjunction of many other properties, etc.) that conservatively implies that some of the most important bad things are limited and that there’s some baseline minimum of good things (e.g. everyone has access to resources sufficient for at least their previous standard of living). In human history, the development of increasingly formalized bright lines around what things count as definitely bad things (namely, laws) seems to have been greatly instrumental in the reduction of bad things overall.

Regarding the challenges of understanding formal descriptions, I’m hopeful about this because of

- natural abstractions (so the best formal representations could be shockingly compact)

- code review (Google’s codebase is not exactly “a formal property,” unless we play semantics games, but it is highly reliable, fully machine-readable, and every one of its several billion lines of code has been reviewed by at least 3 humans)

- AI assistants (although we need to be very careful here—e.g. reading LLM outputs cannot substitute for actually understanding the formal representation since they are often untruthful)

comment by Roko · 2023-04-21T13:31:06.162Z · LW(p) · GW(p)

I feel that this is far too ambitious.

I think it may be better to begin this line of attack by creating a system that can simulate worlds that are not like our world (they are far simpler), and efficiently answer certain counterfactuals about them, looking to find generally robust ways to steer a multipolar world towards the best collective outcomes.

A basic first goal would be to test out new systems of governance like Futarchy and see whether they work or not, for that is currently unknown.

We have never steered any world. Let's start by steering simpler worlds than our own, and see what that looks like.

Replies from: davidad↑ comment by davidad · 2023-04-21T21:08:07.480Z · LW(p) · GW(p)

I agree that we should start by trying this with far simpler worlds than our own, and with futarchy-style decision-making schemes, where forecasters produce extremely stylized QURI-style models that map from action-space to outcome-space while a broader group of stakeholders defines mappings from outcome-space to each stakeholder’s utility.

comment by Charlie Steiner · 2022-12-23T14:58:22.770Z · LW(p) · GW(p)

What about problems with direct oversight [? · GW]?

Shouldn't we plan to build trust in AIs in ways that don't require humans to do things like vet all changes to its world-model? Perhaps toy problems that try to get at what we care about, or automated interpretability tools that can give humans a broad overview of some indicators?

Replies from: davidad, davidad↑ comment by davidad · 2022-12-23T22:28:17.168Z · LW(p) · GW(p)

Shouldn't we plan to build trust in AIs in ways that don't require humans to do things like vet all changes to its world-model?

Yes, I agree that we should plan toward a way to trust AIs as something more like virtuous moral agents rather than as safety-critical systems. I would prefer that. But I am afraid those plans will not reach success before AGI gets built anyway, unless we have a concurrent plan to build an anti-AGI defensive TAI that requires less deep insight into normative alignment.

↑ comment by davidad · 2022-12-23T22:23:52.548Z · LW(p) · GW(p)

In response to your linked post, I do have similar intuitions about “Microscope AI” as it is typically conceived (i.e. to examine the AI for problems using mechanistic interpretability tools before deploying it). Here I propose two things that are a little bit like Microscope AI but in my view both avoid the core problem you’re pointing at (i.e. a useful neural network will always be larger than your understanding of it, and that matters):

- Model-checking policies for formal properties. A model-checker (unlike a human interpreter) works with the entire network, not just the most interpretable parts. If it proves a property, that property is true about the actual neural network. The Model-Checking Feasibility Hypothesis says that this is feasible, regardless of the infeasibility of a human understanding the policy or any details of the proof. (We would rely on a verified verifier for the proof, of which humans would understand the details.)

- Factoring learned information through human understanding. If we denote learning by $L$, human understanding by $H$, and big effects on the world by $W$, then “factoring” means that the map $L \to W$ decomposes as $L \xrightarrow{f} H \xrightarrow{g} W$ (for some $f$ and $g$). This is in the same spirit as “human in the loop,” except not for the innermost loops of real-time action. Here, the Scientific Sufficiency Hypothesis implies that even though $L$ is “larger” than $H$ in the sense you point out, we can throw away the parts that don’t fit in $H$ and move forward with a fully-understood world model. I believe this is likely feasible for world models, but not for policies (optimal policies for simple world models, like Go, can of course be much better than anything humans understand).

comment by davidad · 2022-12-20T13:22:59.550Z · LW(p) · GW(p)

What about collusion?

Replies from: davidad↑ comment by davidad · 2022-12-20T13:27:10.856Z · LW(p) · GW(p)

I find Eric Drexler's arguments [AF · GW] convincing about how it seems possible to make collusion very unlikely. On the other hand, I do think it requires nontrivial design and large ensembles; in the case of an unconstrained 2-player game (like Safety via Debate), I side with Eliezer that the probability of collusion probably converges toward 1 as capabilities get more superintelligent.

Another key principle that I make use of is algorithms (such as branch-and-bound and SMT solvers) whose performance—but not their correctness—depends on extremely clever heuristics. Accelerating the computation of more accurate and useful bounds seems to me like a pretty ineffectual causal channel for the AIs playing those heuristic roles to coordinate with each other or to seek real-world power.
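A toy sketch of the principle in the previous paragraph, assuming 0/1 knapsack as a stand-in problem: the branch-and-bound search below accepts an arbitrary, untrusted ordering heuristic, but the returned optimum depends only on the exhaustive search and a trivially valid upper bound. The heuristic can change how many nodes are explored, never the answer. All names and the example instance are illustrative. Here the heuristic chooses only the branching order, whereas the comment also has in mind heuristics that speed up the computation of tighter bounds.

```python
from typing import Callable, List, Sequence, Tuple

Item = Tuple[int, int]  # (value, weight)


def branch_and_bound(
    items: Sequence[Item],
    capacity: int,
    order: Callable[[Sequence[Item]], List[Item]],
) -> Tuple[int, int]:
    """Return (optimal value, nodes explored) for 0/1 knapsack.

    `order` is an untrusted heuristic; it may only permute the items.
    """
    ordered = order(items)
    if sorted(ordered) != sorted(items):
        raise ValueError("heuristic must return a permutation of the items")

    # suffix_value[i] = total value of ordered[i:], a valid (if loose) upper bound
    # on what can still be gained from position i onward.
    suffix_value = [0] * (len(ordered) + 1)
    for i in range(len(ordered) - 1, -1, -1):
        suffix_value[i] = suffix_value[i + 1] + ordered[i][0]

    best = 0
    nodes = 0

    def visit(i: int, value: int, room: int) -> None:
        nonlocal best, nodes
        nodes += 1
        best = max(best, value)
        # Prune when even taking every remaining item cannot beat the incumbent.
        if i == len(ordered) or value + suffix_value[i] <= best:
            return
        item_value, item_weight = ordered[i]
        if item_weight <= room:
            visit(i + 1, value + item_value, room - item_weight)
        visit(i + 1, value, room)

    visit(0, 0, capacity)
    return best, nodes


def by_density(items: Sequence[Item]) -> List[Item]:
    """A 'clever' heuristic: try high value-per-weight items first."""
    return sorted(items, key=lambda it: it[0] / it[1], reverse=True)


def worst_first(items: Sequence[Item]) -> List[Item]:
    """A deliberately unhelpful heuristic: try low-density items first."""
    return sorted(items, key=lambda it: it[0] / it[1])


items = [(60, 10), (100, 20), (120, 30)]
value_good, nodes_good = branch_and_bound(items, 50, by_density)
value_bad, nodes_bad = branch_and_bound(items, 50, worst_first)
print(value_good, value_bad, nodes_good, nodes_bad)
```

Both calls return the same optimal value. Only the node counts, i.e. the computational cost, can differ between heuristics.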

comment by davidad · 2022-12-20T13:07:12.175Z · LW(p) · GW(p)

How would you respond to predicted objections from Nate Soares?

Replies from: davidad, davidad↑ comment by davidad · 2022-12-20T13:16:22.495Z · LW(p) · GW(p)

Nate [replying to Eric Drexler]: I expect that, if you try to split these systems into services, then you either fail to capture the heart of intelligence and your siloed AIs are irrelevant, or you wind up with enough AGI in one of your siloes that you have a whole alignment problem (hard parts and all) in there. Like, I see this plan as basically saying "yep, that hard problem is in fact too hard, let's try to dodge it, by having humans + narrow AI services perform the pivotal act". Setting aside how I don't particularly expect this to work, we can at least hopefully agree that it's attempting to route around the problems that seem to me to be central, rather than attempting to solve them.

I think, in an open agency architecture, the silo that gets "enough AGI" is in step 2, and it is pointed at the desired objective by having formal specifications and model-checking against them.

But I also wouldn't object to the charge that an open agency architecture would "route around the central problem," if you define the central problem as something like building a system that you'd be happy for humanity to defer to forever. In the long run, something like more ambitious value learning (or value discovery) will be needed, on pain of astronomical waste. This would be, in a sense, a compromise (or, if you're optimistic, a contingency plan), motivated by short timelines and insufficient theoretical progress toward full normative alignment.

Replies from: TsviBT, Eric Drexler↑ comment by TsviBT · 2022-12-20T20:02:02.629Z · LW(p) · GW(p)

if you define the central problem as something like building a system that you'd be happy for humanity to defer to forever.

[I at most skimmed the post, but] IMO this is a more ambitious goal than the IMO central problem. IMO the central problem (phrased with more assumptions than strictly necessary) is more like "building a system that's gaining a bunch of understanding you don't already have, in whatever domains are necessary for achieving some impressive real-world task, without killing you". So I'd guess that's supposed to happen in step 1. It's debatable how much you have to do that to end the acute risk period, for one thing because humanity collectively is already a really slow (too slow) version of that, but it's a different goal than deferring permanently to an autonomous agent.

(I'd also flag this kind of proposal as being at risk of playing shell games with the generator of large effects on the world, though not particularly more than other proposals in a similar genre.)

Replies from: davidad↑ comment by davidad · 2022-12-20T20:30:43.393Z · LW(p) · GW(p)

I’d say the scientific understanding happens in step 1, but I think that would be mostly consolidating science that’s already understood. (And some patching up potentially exploitable holes where AI can deduce that “if this is the best theory, the real dynamics must actually be like that instead”. But my intuition is that there aren’t many of these holes, and that unknown physics questions are mostly underdetermined by known data, at least for quite a long way toward the infinite-compute limit of Solomonoff induction, and possibly all the way.)

Engineering understanding would happen in step 2, and I think engineering is more “the generator of large effects on the world,” the place where much-faster-than-human ingenuity is needed, rather than hoping to find new science.

(Although the formalization of the model of scientific reality is important for the overall proposal—to facilitate validating that the engineering actually does what is desired—and building such a formalization would be hard for unaided humans.)

↑ comment by Eric Drexler · 2022-12-21T15:20:13.701Z · LW(p) · GW(p)

Nate [replying to Eric Drexler]: I expect that, if you try to split these systems into services, then you either fail to capture the heart of intelligence and your siloed AIs are irrelevant, or you wind up with enough AGI in one of your siloes that you have a whole alignment problem (hard parts and all) in there….

GPT-Nate is confusing the features of the AI services model with the argument that “Collusion among superintelligent oracles can readily be avoided [AF · GW]”. As it says on the tin, there’s no assumption that intelligence must be limited. It is, instead, an argument that collusion among (super)intelligent systems is fragile under conditions that are quite natural to implement.

↑ comment by davidad · 2022-12-20T13:10:05.012Z · LW(p) · GW(p)

GPT-3.5-Nate [prompted using 1061 tokens of Nate's criticism of related ideas]: This is a really interesting proposal! It seems to me that you're trying to solve the hard problem of AI alignment, by proposing a system that would be able to identify the concepts that an AGI should be optimizing for, and then use a combination of model-checking and time-bounded optimization to ensure that the AGI's behavior is robustly directed at those concepts. This is a really ambitious project, and I'm excited to see how it develops.

🙃

comment by davidad · 2022-12-20T13:04:41.936Z · LW(p) · GW(p)

Is this basically Stuart Russell's provably beneficial AI?

Replies from: davidad↑ comment by davidad · 2022-12-20T13:06:45.568Z · LW(p) · GW(p)

There's a lot of similarity. People (including myself in the past) have criticized Russell on the basis that no formal model can prove properties of real-world effects, because the map is not the territory, but I now agree with Russell that it's plausible to get good enough maps. However:

- I think it's quite likely that this is only possible with an infra-Bayesian (or credal-set) approach to explicitly account for Knightian uncertainty, which seems to be a difference from Russell's published proposals (although he has investigated Halpern-style probability logics [LW(p) · GW(p)], which have some similarities to credal sets, he mostly gravitates toward frameworks with ordinary Bayesian semantics).

- Instead of an IRL or CIRL approach to value learning, I propose to rely primarily on linguistic dialogues that are grounded in a fully interpretable representation of preferences. A crux for this is that I believe success in the current stage of humanity's game does not require loading very much of human values.

comment by Chipmonk · 2023-05-04T17:11:54.721Z · LW(p) · GW(p)

- Deontic Sufficiency Hypothesis: There exists a human-understandable set of features of finite trajectories in such a world-model, taking values in $(-\infty, 0]$, such that we can be reasonably confident that all these features being near 0 implies high probability of existential safety, and such that saturating them at 0 is feasible[2] with high probability, using scientifically-accessible technologies.

- I am optimistic about this largely because of recent progress toward formalizing a natural abstraction of boundaries by Critch and Garrabrant. I find it quite plausible that there is some natural abstraction property of world-model trajectories that lies somewhere strictly within the vast moral gulf of

I've compiled most if not all of everything Davidad has said to date about «boundaries» here: «Boundaries» and AI safety compilation [LW · GW].