Posts

Comments

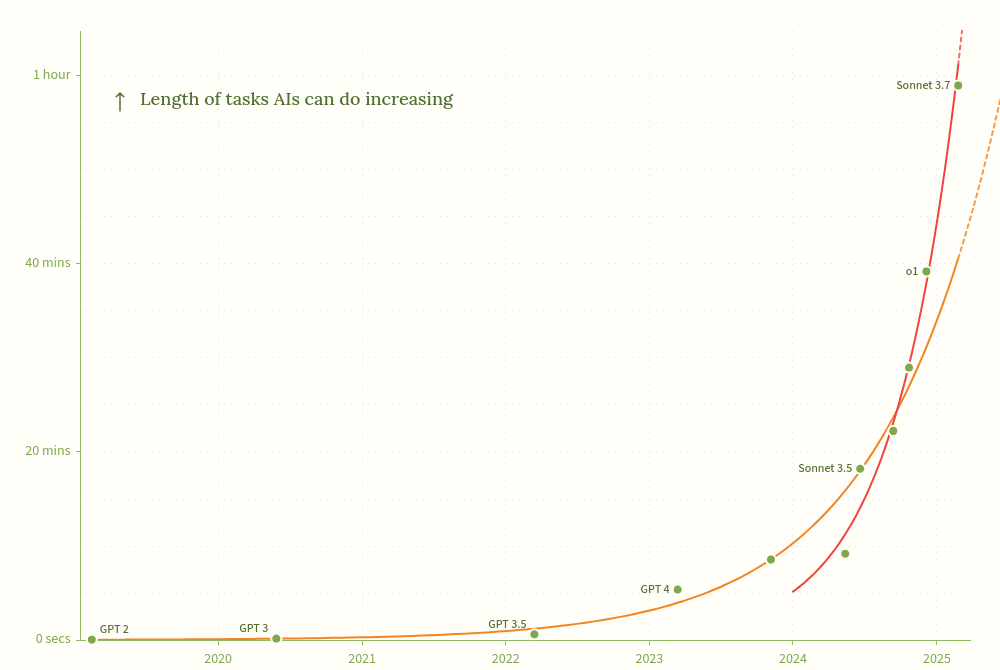

Looking on trend for the new exponential, next prediction is a few more months of this before we transition to a faster exponential.

And clarification re: the locally invalid react: I'm not trying to defend or justify my conclusion that the faster exponential is due to software automation speeding up research. I'm raising something to attention which will likely just click if you've been following devs talk about their AI use over the past year or two, and would take more bandwidth to argue for extensively than makes sense for me to spend here.

*nods*, yeah, your team does seem competent and truth-seeking enough to get a lot of stuff right, despite what I model as shortcomings.

That experience was an in-person conversation with Jaime some years ago, after an offhand comment I made expecting fairly short timelines. I imagine there are many contexts where Epoch has not had this vibe.

Room to explore intellectual ideas is indeed important, as is not succumbing to peer pressure. However, Epoch's culture has from personal experience felt more like a disgust reaction towards claims of short timelines than open curious engagement and trying to find out whether the person they're talking to has good arguments (probably because most people who believe in short timelines don't actually have the predictive patterns and Epoch mixed up "many people think x for bad reasons" with "x is wrong / no one believes it for good reasons").

Intellectual diversity is a good sign, it's true, but being closed to arguments by people who turned out to have better models than you is not virtuous.

From my vantage point, Epoch is wrong about critical things they are studying in ways that make them take actions which harm the future despite competence and positive intentions, while not effectively seeking out clarity which would let them update.

Long timelines is the main one, but also low p(doom), low probability on the more serious forms of RSI which seem both likely and very dangerous, and relatedly not being focused on misalignment/power-seeking risks to the extent that seems correct given how strong a filter that is on timelines with our current alignment technology. I'm sure not all epoch people have these issues, and hope that with the less careful ones leaving the rest will have more reliably good effects on the future.

Link to the OpenAI scandal. Epoch has for some time felt like it was staffed by highly competent people who were tied to incorrect conclusions, but whose competence lead them to some useful outputs alongside the mildly harmful ones. I hope that the remaining people take more care in future hires, and that grantmakers update off of accidentality creating another capabilities org.

ALLFED seems to be doing important and neglected work. Even if you just care about reducing AI x-risk, there's a solid case to be made that preparing for alignment researchers to survive a nuclear war might be one of the remaining ways to thread the needle assuming high p(doom). ALLFED seems the group with the clearest comparative advantage to make this happen.

I vouch for Severin being highly skilled at mediating conflicts.

Also, smouldering conflicts provide more drag to cohesion execution than most people realize until it's resolved. Try this out if you have even a slight suspicion it might help.

Accurate, and one of the main reasons why most current alignment efforts will fall apart with future systems. A generalized version of this combined with convergent power-seeking of learned patterns looks like the core mechanism of doom.

Podcast version:

The new Moore's Law for AI Agents (aka More's Law) has accelerated at around the time people in research roles started to talk a lot more about getting value from AI coding assistants. AI accelerating AI research seems like the obvious interpretation, and if true, the new exponential is here to stay. This gets us to 8 hour AIs in ~March 2026, and 1 month AIs around mid 2027.[1]

I do not expect humanity to retain relevant steering power for long in a world with one-month AIs. If we haven't solved alignment, either iteratively or once-and-for-all[2], it's looking like game over unless civilization ends up tripping over its shoelaces and we've prepared.

- ^

An extra speed-up of the curve could well happen, for example with [obvious capability idea, nonetheless redacted to reduce speed of memetic spread].

- ^

From my bird's eye view of the field, having at least read the abstracts of a few papers from most organizations in the space, I would be quite surprised if we had what it takes to solve alignment in the time that graph gives us. There's not enough people, and they're mostly not working on things which are even trying to align a superintelligence.

Nice! I think you might find my draft on Dynamics of Healthy Systems: Control vs Opening relevant to these explorations, feel free to skim as it's longer than ideal (hence unpublished, despite containing what feels like a general and important insight that applies to agency at many scales). I plan to write a cleaner one sometime, but for now it's claude-assisted writing up my ideas, so it's about 2-3x more wordy than it should be.

Interesting, yes. I think I see, and I think I disagree with this extreme formulation, despite knowing that this is remarkably often a good direction to go in. If "[if and only if]" was replaced with "especially", I would agree, as I think the continual/regular release process is an amplifier on progress not a full requisite.

As for re-forming, yes, I do expect there is a true pattern we are within, which can be in its full specification known, though all the consequences of that specification would only fit into a universe. I think having fluidity on as many layers of ontology as you can is generally correct (and that most people have way too little of this), but I expect the process of release and dissolve will increasingly converge, if you're doing well at it.

In the spirit of gently poking at your process: My uncertain, please take it lightly, guess is that you've annealed strongly towards the release/dissolve process itself, to the extent that it itself is an ontology which has some level of fixedness in you.

I'd love to see the reading time listed on the frontpage. That would make the incentives naturally slide towards shorter posts, as more people would click and it would get more karma. Feels much more decision relevant than when the post was posted.

Yup, DMing for context!

hmmm, I'm wondering if you're pointing at something different from the thing in this space which I intuitively expect is good using words that sound more extreme than I'd use, or whether you're pointing at a different thing. I'll take a shot at describing the thing I'd be happy with of this type and you can let me know whether this feels like the thing you're trying to point to:

An ontology restricts the shape of thought by being of a set shape. All of them are insufficient, the Tao that can be specified is not the true Tao, but each can contain patterns that are useful if you let them dissolve and continually release the meta-structures rather than cling to them as a whole. By continually releasing as much of your structure back to flow you grow much faster and in more directions, because in returning from that dissolving you reform with much more of your collected patterns integrated and get out of some of your local minima.

you could engage with the Survival and Flourishing Fund

Yeah! The S-process is pretty neat, buying into that might be a great idea once you're ready to donate more.

Oh, yup, thanks, fixed.

Consider reaching out to Rob Miles.

He tends to get far more emails than he can handle so a cold contact might not work, but I can bump this up his list if you're interested.

Firstly: Nice, glad to have another competent and well-resourced person on-board. Welcome to the effort.

I suggest: Take some time to form reasonably deep models of the landscape, first technical[1] and then the major actors and how they're interfacing with the challenge.[2] This will inform your strategy going forward. Most people, even people who are full time in AI safety, seem to not have super deep models (so don't let yourself be socially-memetically tugged by people who don't have clear models).

Being independently wealthy in this field is awesome, as you'll be able to work on whatever your inner compass points to as the best, rather than needing to track grantmaker wants and all of the accompanying stress. With that level of income you'd also be able to be one of the top handful of grantmakers in the field if you wanted, the AISafety.com donation guide has a bunch of relevant info (though might need an update sweep, feel free to ping me with questions on this).

Things look pretty bad in many directions, but it's not over yet and the space of possible actions is vast. Best of skill finding good ones!

- ^

I recommend https://agentfoundations.study/, and much of https://www.aisafety.com/stay-informed, and chewing on the ideas until they're clear enough in your mind that you can easily get them across to almost anyone. This is good practice internally as well as good for the world. The Sequences are also excellent grounding for the type of thinking needed in this field - it's what they were designed for. Start with the highlights, maybe go on to the rest if it feels valuable. AI Safety Fundamentals courses are also worth taking, but you'll want a lot of additional reading and thinking on top of that. I'd also be up for a call or two if you like, I've been doing the self-fund (+sometimes giving grants) and try and save the world thing for some time now.

- ^

Technical first seems best, as it's the grounding which underpins what would be needed in governance, and will help you orient better than going straight to governance I suspect.

eh, <5%? More that we might be able to get the AIs to do most of the heavy lifting of figuring this out, but that's a sliding scale of how much oversight the automated research systems need to not end up in wrong places.

My current guess as to Anthropic's effect:

- 0-8 months shorter timelines[1]

- Much better chances of a good end in worlds where superalignment doesn't require strong technical philosophy[2] (but I put very low odds on being in this world)

- Somewhat better chances of a good end in worlds where superalignment does require strong technical philosophy[3]

- ^

Shorter due to:

- There being a number of people who might otherwise not have been willing to work for a scaling lab, or not do so as enthusiastically/effectively (~55% weight)

- Encouraging race dynamics (~30%)

- Making it less likely that there's a broad alliance against scaling labs (15%)

Partly counterbalanced by encouraging better infosec practices and being more encouraging of regulation than the alternatives.

- ^

They're trying a bunch of the things which if alignment is easy, might actually work, and no other org has the level of leadership buy in for investing in as hard.

- ^

Probably though using AI assisted alignment schemes, but building org competence in doing this kind of research manually so they can direct the systems to the right problems and sort slop from sound ideas is going to need to be a priority.

By "discard", do you mean remove specifically the fixed-ness in your ontology such that the cognition as a whole can move fluidly and the aspects of those models which don't integrate with your wider system can dissolve, as opposed to the alternate interpretation where "discard" means actively root out and try and remove the concept itself (rather than the fixed-ness of it)?

(also 👋, long time no see, glad you're doing well)

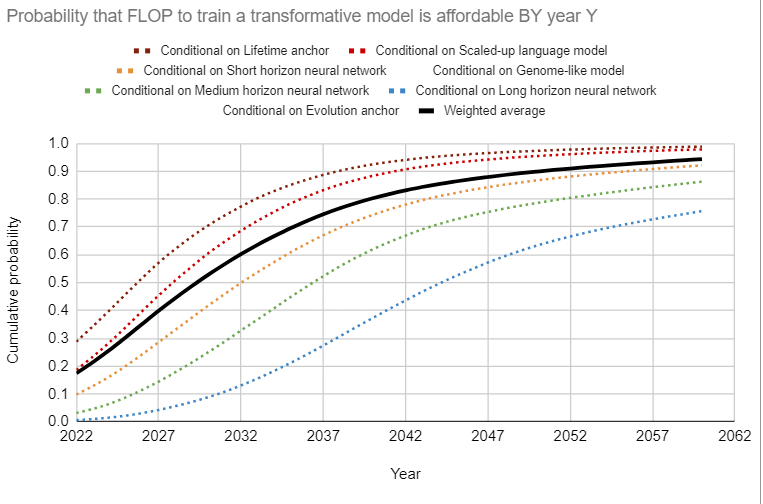

I had a similar experience a couple years back when running bio anchors with numbers which seemed more reasonable/less consistently slanted towards longer timelines to me, getting:

before taking into account AI accelerating AI development, which I expected to bring it a few years earlier.

Also I suggest that given the number of tags in each section, load more should be load all.

This is awesome! Three comments:

- Please make an easy to find Recent Changes feed (maybe a thing on the home page which only appears if you've made wiki edits). If you want an editor community, that will be their home, and the thing they're keeping up with and knowing to positively reinforce each other.

- The concepts portal is now a slightly awkward mix of articles and tags, with potentially very high use tags being quite buried because no one's written a good article for it (e.g Rationality Quotes has 136 pages tagged, but zero karma, so requires many clicks to reach). I'm especially thinking about the use case of wanting to know what types of articles there are to browse around. I'm not sure exactly what to do about this.. maybe having the sorting not be just about karma, but a mix of karma and number of tagged posts? Like (k+10)*(t+10) or something? Disadvantage is this is opaque and drops alphabetical much harder.

- A bunch of the uncategorized ones could be categorized, but I'm not seeing a way to do this with normal permissions.

Adjusting 2 would make it much cleaner to categorize the many ones in 3 without that clogging up the normal lists.

Nice! I'll watch through these then probably add a lot of them to the aisafety.video playlist.

I've heard from people I trust that:

- They can be pretty great, if you know what you want and set the prompt up right

- They won't be as skilled as a human therapist, and might throw you in at the deep end or not be tracking things a human would

Using them can be very worth it as they're always available and cheap, but they require a little intentionality. I suggest asking your human therapist for a few suggestions of kinda of work you might do with a peer or LLM assistant, and monitoring how it affects you while exploring, if you feel safe enough doing that. Maybe do it the day before a human session the first few times so you have a good safety net. Maybe ask some LWers what their system prompts are, or find some well-tested prompts elsewhere.

Looks like Tantrix:

oh yup, sorry, I meant mid 2026, like ~6 months before the primary proper starts. But could be earlier.

Yeah, this seems worth a shot. If we do this, we should do our own pre-primary in like mid 2027 to select who to run in each party, so that we don't split the vote and also so that we select the best candidate.

Someone I know was involved in a DIY pre-primary in the UK which unseated an extremely safe politician, and we'd get a bunch of extra press while doing this.

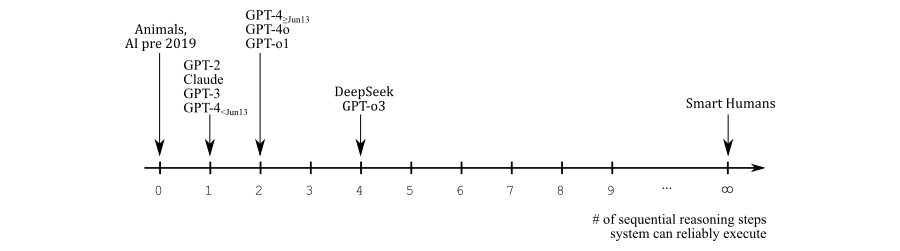

Humans without scaffolding can do a very finite number of sequential reasoning steps without mistakes. That's why thinking aids like paper, whiteboards, and other people to bounce ideas off and keep the cache fresh are so useful.

With a large enough decisive strategic advantage, a system can afford to run safety checks on any future versions of itself and anything else it's interacting with sufficient to stabilize values for extremely long periods of time.

Multipolar worlds though? Yeah, they're going to get eaten by evolution/moloch/power seeking/pythia.

More cynical take based on the Musk/Altman emails: Altman was expecting Musk to be CEO. He set up a governance structure which would effectively be able to dethrone Musk, with him as the obvious successor, and was happy to staff the board with ideological people who might well take issue with something Musk did down the line to give him a shot at the throne.

Musk walked away, and it would've been too weird to change his mind on the governance structure. Altman thought this trap wouldn't fire with high enough probability to disarm it at any time before it did.

I don't know whether the dates line up to dis-confirm this, but I could see this kind of 5d chess move happening. Though maybe normal power and incentive psychological things are sufficient.

Looks fun!

I could also remove Oil Seeker's protection from Pollution; they don't need it for making Black Chips to be worthwhile for them but it makes that less of an amazing deal than it is.

Maybe have the pollution cost halved for Black, if removing it turns out to be too weak?

Seems accurate, though I think Thinking This Through A Bit involved the part of backchaining where you look at approximately where on the map the destination is, and that's what some pro-backchain people are trying to point at. In the non-metaphor, the destination is not well specified by people in most categories, and might be like 50 ft in the air so you need a way to go up or something.

And maybe if you are assisting someone else who has well grounded models, you might be able to subproblem solve within their plan and do good, but you're betting your impact on their direction. Much better to have your own compass or at least a gears model of theirs so you can check and orient reliably.

PS: I brought snacks!

Give me a dojo.lesswrong.com, where the people into mental self-improvement can hang out and swap techniques, maybe a meetup.lesswrong.com where I can run better meetups and find out about the best rationalist get-togethers. Let there be an ai.lesswrong.com for the people writing about artificial intelligence.

Yes! Ish! I'd be keen to have something like this for the upcoming aisafety.com/stay-informed page, where we're looking like we'll currently resort to linking to https://www.lesswrong.com/tag/ai?sortedBy=magic#:~:text=Posts%20tagged%20AI as there's no simpler way to get people specifically to the AI section of the site.

I'd weakly lean towards not using a subdomain, but to using a linkable filter, but yeah seems good.

I'd also think that making it really easy and fluid to cross-post (including selectively, maybe the posts pop up in your drafts and you just have to click post if you don't want everything cross-posted) would be a pretty big boon for LW.

I'm glad you're trying to figure out a solution. I am however going to shoot this one down a bunch.

If these assumptions were true, this would be nice. Unfortunately, I think all three are false.

LLMs will never be superintelligent when predicting a single token.

In a technical sense, definitively false. Redwood compared human to AI token prediction and even early AIs were far superhuman. Also, in a more important sense, you can apply a huge amount of optimization on selecting a token. This video gives a decent intuition, though in a slightly different setting.

LLMs will have no state.

False in three different ways. Firstly, people are totally building in explicit state in lots of ways (test time training, context retrieval, reasoning models, etc). Secondly, there's a feedback cycle of AI influences training data of next AI, which will become a tighter and tighter loop. Thirdly, the AI can use the environment as state in ways which would be nearly impossible to fully trace or mitigate.

not in a way that any emergent behaviour of the system as a whole isn't reflected in the outputs of any of the constituent LLMs

alas, many well-understood systems regularly do and should be expected to have poorly understood behaviour when taken together.

a simpler LLM to detect output that looks like it's leading towards unaligned behaviour.

Robustly detecting "unaligned behaviour" is an unsolved problem, if by aligned you mean "makes the long term future good" rather than "doesn't embarrass the corporation". Solving this would be massive progress, and throwing a LLM at it naively has many pitfalls.

Stepping back, I'd encourage you to drop by AI plans, skill up at detecting failure modes, and get good at both generating and red-teaming your own ideas (the Agent Foundations course and Arbital are good places to start). Get a long list of things you've shown how they break, and help both break and extract the insights from other's ideas.

the extent human civilization is human-aligned, most of the reason for the alignment is that humans are extremely useful to various social systems like the economy, and states, or as substrate of cultural evolution. When human cognition ceases to be useful, we should expect these systems to become less aligned, leading to human disempowerment.

oh good, I've been thinking this basically word for word for a while and had it in my backlog. Glad this is written up nicely, far better than I would likely have done :)

The one thing I'm not a big fan of: I'd bet "Gradual Disempowerment" sounds like a "this might take many decades or longer" to most readers, whereas with capabilities curves this could be a few months to single digit years thing.

I think I have a draft somewhere, but never finished it. tl;dr; Quantum lets you steal private keys from public keys (so all wallets that have a send transaction). Upgrading can protect wallets where people move their coins, but it's going to be messy, slow, and won't work for lost-key wallets, which are a pretty huge fraction of the total BTC reserve. Once we get quantum BTC at least is going to have a very bad time, others will have a moderately bad time depending on how early they upgrade.

Nice! I haven't read a ton of Buddhism, cool that this fits into a known framework.

I'm uncertain of how you use the word consciousness here do you mean our blob of sensory experience or something else?

Yeah, ~subjective experience.

Let's do most of this via the much higher bandwidth medium of voice, but quickly:

- Yes, qualia[1] is real, and is a class of mathematical structure.[2]

- (placeholder for not a question item)

- Matter is a class of math which is ~kinda like our physics.

- Our part of the multiverse probably doesn't have special "exists" tags, probably everything is real (though to get remotely sane answers you need a decreasing reality fluid/caring fluid allocation).

Math, in the sense I'm trying to point to it, is 'Structure'. By which I mean: Well defined seeds/axioms/starting points and precisely specified rules/laws/inference steps for extending those seeds. The quickest way I've seen to get the intuition for what I'm trying to point at with 'structure' is to watch these videos in succession (but it doesn't work for everyone):

- ^

experience/the thing LWers tend to mean, not the most restrictive philosophical sense (#4 on SEP) which is pointlessly high complexity (edit: clarified that this is not the universal philosophical definition, but only one of several meanings, walked back a little on rhetoric)

- ^

possibly maybe even the entire class, though if true most qualia would be very very alien to us and not necessarily morally valuable

give up large chunks of the planet to an ASI to prevent that

I know this isn't your main point but.. That isn't a kind of trade that is plausible. Misaligned superintelligence disassembles the entire planet, sun, and everything it can reach. Biological life does not survive, outside of some weird edge cases like "samples to sell to alien superintelligences that like life". Nothing in the galaxy is safe.

Re: Ayahuasca from the ACX survey having effects like:

- “Obliterated my atheism, inverted my world view no longer believe matter is base substrate believe consciousness is, no longer fear death, non duality seems obvious to me now.”

[1]There's a cluster of subcultures that consistently drift toward philosophical idealist metaphysics (consciousness, not matter or math, as fundamental to reality): McKenna-style psychonauts, Silicon Valley Buddhist circles, neo-occultist movements, certain transhumanist branches, quantum consciousness theorists, and various New Age spirituality scenes. While these communities seem superficially different, they share a striking tendency to reject materialism in favor of mind-first metaphysics.

The common factor connecting them? These are all communities where psychedelic use is notably prevalent. This isn't coincidental.

There's a plausible mechanistic explanation: Psychedelics disrupt the Default Mode Network and adjusting a bunch of other neural parameters. When these break down, the experience of physical reality (your predictive processing simulation) gets fuzzy and malleable while consciousness remains vivid and present. This creates a powerful intuition that consciousness must be more fundamental than matter. Conscious experience is more fundamental/stable than perception of the material world, which many people conflate with the material world itself.

The fun part? This very intuition - that consciousness is primary and matter secondary - is itself being produced by ingesting a chemical which alters physical brain mechanisms. We're watching neural circuitry create metaphysical intuitions in real-time.

This suggests something profound about metaphysics itself: Our basic intuitions about what's fundamental to reality (whether materialist OR idealist) might be more about human neural architecture than about ultimate reality. It's like a TV malfunctioning in a way that produces the message "TV isn't real, only signals are real!"

This doesn't definitively prove idealism wrong, but it should make us deeply suspicious of metaphysical intuitions that feel like direct insight - they might just be showing us the structure of our own cognitive machinery.

- ^

Claude assisted writing, ideas from me and edited by me.

We do not take a position on the likelihood of loss of control.

This seems worth taking a position on, the relevant people need to hear from the experts an unfiltered stance of "this is a real and perhaps very likely risk".

Agree that takeoff speeds are more important, and expect that FrontierMath has much less affect on takeoff speed. Still think timelines matter enough that the amount of relevantly informing people that you buy from this is likely not worth the cost, especially if the org is avoiding talking about risks in public and leadership isn't focused on agentic takeover, so the info is not packaged with the info needed for that info to have the effects which would help.

Evaluating the final model tells you where you got to. Evaluating many small models and checkpoints helps you get further faster.

Even outside of the arguing against the Control paradigm, this post (esp. The Model & The Problem & The Median Doom-Path: Slop, not Scheming) cover some really important ideas, which I think people working on many empirical alignment agendas would benefit from being aware of.

One neat thing I've explored is learning about new therapeutic techniques by dropping a whole book into context and asking for guiding phrases. Most therapy books do a lot of covering general principles of minds and how to work with them, with the unique aspects buried in a way which is not super efficient for someone who already has the universal ideas. Getting guiding phrases gives a good starting point for what the specific shape of a technique is, and means you can kinda use it pretty quickly. My project system prompt is:

Given the name of, and potentially documentation on, an introspective or therapeutic practice, generate a set of guiding phrases for facilitators. These phrases should help practitioners guide participants through deep exploration, self-reflection, and potential transformation. If you don't know much about the technique or the documentation is insufficient, feel free to ask for more information. Please explain what you know about the technique, especially the core principles and things relevant to generating guiding phrases, first.

Consider the following:

Understand the practice's core principles, goals, and methods.

Create open-ended prompts that invite reflection and avoid simple yes/no answers.

Incorporate awareness of physical sensations, emotions, and thought patterns.

Develop phrases to navigate unexpected discoveries or resistances.

Craft language that promotes non-judgmental observation of experiences.

Generate prompts that explore contradictions or conflicting beliefs.

Encourage looking beyond surface-level responses to deeper insights.

Help participants relate insights to their everyday lives and future actions.

Include questions that foster meta-reflection on the process itself.

Use metaphorical language when appropriate to conceptualize abstract experiences.

Ensure phrases align with the specific terminology and concepts of the practice.

Balance providing guidance with allowing space for unexpected insights.

Consider ethical implications and respect appropriate boundaries.Aim for a diverse set of phrases that can be used flexibly throughout the process. The goal is to provide facilitators with versatile tools that enhance the participant's journey of self-discovery and growth.

Example (adapt based on the specific practice):"As you consider [topic], what do you notice in your body?"

"If that feeling had a voice, what might it say?"

"How does holding this belief serve you?"

"What's alive for you in this moment?"

"How might this insight change your approach to [relevant aspect of life]?"Remember, the essence is to create inviting, open-ended phrases that align with the practice's core principles and facilitate deep, transformative exploration.

Please store your produced phrases in an artefact.

I'm guessing you view having better understanding of what's coming as very high value, enough that burning some runway is acceptable? I could see that model (though put <15% on it), but I think this is at least not good integrity wise to have put on the appearance of doing just the good for x-risk part and not sharing it as an optimizable benchmark, while being funded by and giving the data to people who will use it for capability advancements.

Evaluation on demand because they can run them intensely lets them test small models for architecture improvements. This is where the vast majority of the capability gain is.

Getting an evaluation of each final model is going to be way less useful for the research cycle, as it only gives a final score, not a metric which is part of the feedback loop.