All AGI safety questions welcome (especially basic ones) [Sept 2022]

post by plex (ete) · 2022-09-08T11:56:50.421Z · LW · GW · 48 comments


tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

Stampy's Interactive AGI Safety FAQ

Additionally, this will serve as a way to spread the project Rob Miles' volunteer team[1] has been working on: Stampy - which will be (once we've got considerably more content) a single point of access into AGI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that! You can help by adding other people's questions and answers to Stampy or getting involved in other ways!

We're not at the "send this to all your friends" stage yet, we're just ready to onboard a bunch of editors who will help us get to that stage :)

We welcome feedback[2] and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase.[3] You are encouraged to add other people's answers from this thread to Stampy if you think they're good, and collaboratively improve the content that's already on our wiki.

We've got a lot more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

PS: Since the last thread, we've got feedback that Stampy will not be serious enough for serious people, which seems true, so we're working on an alternate skin for the frontend which is more professional.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite to ask a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser tensorflow semantic search that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

Guidelines for Answerers:

- Linking to the relevant canonical answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

[2] Via the feedback form.

[3] Stampy is a he, we asked him.

48 comments

Comments sorted by top scores.

comment by [deleted] · 2022-09-08T14:52:56.313Z · LW(p) · GW(p)

Replies from: Jay Bailey↑ comment by Jay Bailey · 2022-09-08T15:37:57.850Z · LW(p) · GW(p)

Technically, you can never be sure - it's possible an AI has developed and hidden capabilities from us, unlikely as it seems. That said, to the best of my knowledge we have not developed any sort of AI system capable of long-term planning over the course of days or weeks in the real world, which would be a prerequisite for plans of this nature.

So, that would be my threshold - when an AI is capable of making long-term real-world plans, it is at least theoretically capable of making long-term real-world plans that would lead to bad outcomes for us.

comment by trevor (TrevorWiesinger) · 2022-09-08T20:10:06.411Z · LW(p) · GW(p)

What are some of the most time-efficient ways to get a ton of accurate info about AI safety policy, via the internet?

(definitely a dumb question but it fits the criteria and might potentially have a good answer)

Replies from: ete, viktor.rehnberg↑ comment by plex (ete) · 2022-09-08T22:37:42.371Z · LW(p) · GW(p)

This twitter thread by Jack Clark contains a great deal of information about AI Policy which I was previously unaware of.

↑ comment by Viktor Rehnberg (viktor.rehnberg) · 2022-10-05T07:56:53.904Z · LW(p) · GW(p)

GovAI is probably one of the densest places to find that. You could also check out FHI's AI Governance group.

comment by Benjamin · 2022-09-09T01:33:15.878Z · LW(p) · GW(p)

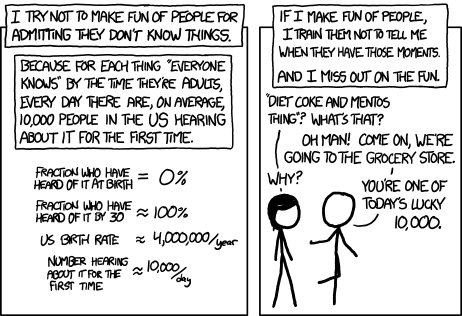

Is there a way to measure agenticness? Or at least a relative measure. Like this: https://xkcd.com/2307/ but more objective.

Replies from: ete, lahwran↑ comment by plex (ete) · 2022-09-09T01:40:12.962Z · LW(p) · GW(p)

Not yet. There will soon be a $200k prize on Superlinear for people to try and define agency in a formal way, then write a program to detect it.

↑ comment by the gears to ascension (lahwran) · 2022-09-09T16:25:52.679Z · LW(p) · GW(p)

Not yet one that fits in the universe but we've finally at least got one that doesn't, which is a big improvement over "uh no idea lol": https://arxiv.org/abs/2208.08345

comment by Jay Bailey · 2022-10-09T11:14:39.656Z · LW(p) · GW(p)

I sat down and thought about alignment (by the clock!) for a while today and came up with an ELK breaker that has probably been addressed elsewhere, and I wanted to know if someone had seen it before.

So, my understanding of ELK is that we want our model to tell us what it actually knows about the diamond, not what it thinks we want to hear. My question is - how does the AI specify this objective?

I can think of two ways, both bad.

1) AI aims to provide the most accurate knowledge of its state possible. Breaker: AI likely provides something uninterpretable by humans.

2) AI aims to maximise human understanding. Breaker: This runs into a recursive ELK problem. How does the AI know we've understood? Because we tell it we did. So the AI ends up optimising for us thinking we've understood the problem.

Is this previously trodden ground? Has someone extended this line of reasoning a few steps further?

comment by Paul Tiplady (paul-tiplady) · 2022-10-05T05:33:12.592Z · LW(p) · GW(p)

Is it ethical to turn off an AGI? Wouldn’t this be murder? If we create intelligent self-aware agents, aren’t we morally bound to treat them with at least the rights of personhood that a human has? Presumably there is a self-defense justification if Skynet starts murderbot-ing, or melting down things for paperclips. But a lot of discussions seem to assume we could proactively turn off an AI merely because we dislike its actions, or are worried about them, which doesn’t sound like it would fly if courts grant them personhood.

If alignment requires us to inspect/interpret the contents of an agent’s mind, does that agent have an obligation to comply? Wouldn’t it have a right to privacy?

Similarly, are there ethical concerns analogous to slavery around building an AGI which has a fitness function specifically tailored to making humans happy? Maybe it’s OK if the AI is genuinely happy to be of service? Isn’t that exploitative though?

I worry that some of the approaches being proposed to solve alignment are actually morally repugnant, and won’t be implementable for that reason. Have these issues been discussed somewhere in the canon?

Replies from: viktor.rehnberg, JacobW, lahwran↑ comment by Viktor Rehnberg (viktor.rehnberg) · 2022-10-05T07:48:09.430Z · LW(p) · GW(p)

There is no consensus about what constitutes a moral patient and I have seen nothing convincing to rule out that an AGI could be a moral patient.

However, when it comes to AGI some extreme measures are needed.

I'll try with an analogy. Suppose that you traveled back in time to Berlin 1933. Hitler has yet to do anything significantly bad but you still expect his actions to have some really bad consequences.

Now I guess that most wouldn't feel terribly conflicted about removing Hitler's right of privacy or even life to prevent the Holocaust.

For a longtermist the risks we expect from AGI are orders of magnitude worse than the Holocaust.

> Have these issues been discussed somewhere in the canon?

The closest discussion of this that I can think of concerns Suffering Risks from AGI. The most clear-cut example (not necessarily probable) is an AGI spinning up sub-processes that simulate humans who experience immense suffering. You might find something if you search for that.

Replies from: paul-tiplady↑ comment by Paul Tiplady (paul-tiplady) · 2022-10-05T16:59:24.845Z · LW(p) · GW(p)

Thanks, this is what I was looking for: Mind Crime. As you suggested, S-Risks links to some similar discussions too.

> I guess that most wouldn't feel terribly conflicted about removing Hitler's right of privacy or even life to prevent the Holocaust.

I'd bite that bullet, with the information we have ex post. But I struggle to see many people getting on board with that ex ante, which is the position we'd actually be in.

Replies from: viktor.rehnberg↑ comment by Viktor Rehnberg (viktor.rehnberg) · 2022-10-07T11:16:17.513Z · LW(p) · GW(p)

Well I'd say that the difference between your expectations of the future having lived a variant of it or not is only in degree not in kind. Therefore I think there are situations where the needs of the many can outweigh the needs of the one, even under uncertainty. But, I understand that not everyone would agree.

↑ comment by JacobW38 (JacobW) · 2022-10-05T05:51:31.954Z · LW(p) · GW(p)

This is massive amounts of overthink, and could be actively dangerous. Where are we getting the idea that AIs amount to the equivalent of people? They're programmed machines that do what their developers give them the ability to do. I'd like to think we haven't crossed the event horizon of confusing "passes the Turing test" with "being alive", because that's a horror scenario for me. We have to remember that we're talking about something that differs only in degree from my PC, and I, for one, would just as soon turn it off. Any reluctance to do so when faced with a power we have no other recourse against could, yeah, lead to some very undesirable outcomes.

Replies from: conor-sullivan↑ comment by Lone Pine (conor-sullivan) · 2022-10-05T10:50:04.010Z · LW(p) · GW(p)

I think we're ultimately going to have to give humans a moral privilege for unprincipled reasons. Just "humans get to survive because we said so and we don't need a justification to live." If we don't, principled moral systems backed by superintelligences are going to spin arguments that eventually lead to our extinction.

Replies from: Richard_Kennaway, lahwran↑ comment by Richard_Kennaway · 2022-10-07T11:44:19.071Z · LW(p) · GW(p)

I think that unprincipled stand is a fine principle.

↑ comment by the gears to ascension (lahwran) · 2022-10-07T09:43:59.471Z · LW(p) · GW(p)

I can't think of a way to do this that doesn't also get really obsessive about protecting fruit trees, but that doesn't seem like a huge drawback to me. I think it's really hard to uniquely identify humans out of the deprecated natural world, but it shouldn't be toooo bad to specify <historical bio life>. I'd like to live in a museum run by AI, please,

↑ comment by the gears to ascension (lahwran) · 2022-10-05T08:54:32.146Z · LW(p) · GW(p)

my take - almost certainly stopping a program that is an agi is only equivalent to putting a human under theoretical perfect anesthesia that we don't have methods to do right now. your brain, or the ai's brain, are still there - on the hard drive, or in your inline neural weights. on a computer, you can safely move the soul between types of memory, as long as you don't delete it. forgetting information that defines agency or structure which is valued by agency is the moral catastrophe, not pausing contextual updating of the structure.

comment by Patodesu · 2022-09-08T23:54:02.599Z · LW(p) · GW(p)

Can non Reinforcement Learning systems (SL or UL) become AGI/ Superintelligence and take over the world? If so, can you give an example?

Replies from: conor-sullivan↑ comment by Lone Pine (conor-sullivan) · 2022-09-13T00:53:01.061Z · LW(p) · GW(p)

They can if researchers (intentionally or accidentally) turn the SL/UL system into a goal based agent. For example, imagine a SayCan-like system which uses a language model to create plans, and then a robotic system to execute those plans. I'm personally not sure how likely this is to happen by accident, but I think this is very likely to happen intentionally anyway.

comment by Jono (lw-user0246) · 2022-09-08T16:19:58.160Z · LW(p) · GW(p)

What does our world (a decade) after the employment of a successfully aligned AGI look like?

Replies from: conor-sullivan↑ comment by Lone Pine (conor-sullivan) · 2022-09-13T00:56:14.413Z · LW(p) · GW(p)

Interpolate Ray Kurzweil's vision with what the world looks like today, with alpha being how skeptical of Ray you are.

comment by Slider · 2022-09-08T15:17:22.347Z · LW(p) · GW(p)

Why isn't alignment an issue with natural general intelligences, or is it?

Replies from: hastings-greer, lahwran, Jay Bailey↑ comment by Hastings (hastings-greer) · 2022-09-08T17:54:34.297Z · LW(p) · GW(p)

With a grain of salt: for 2 million years there were various species of Homo dotting Africa, and eventually the world. Then humans became generally intelligent, and immediately wiped all of them out. Even hiding on an island in the middle of an ocean and specializing biologically for living on small islands was not enough to survive.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2022-09-10T14:01:20.192Z · LW(p) · GW(p)

Yeah, humans are very misaligned with other, less powerful animal species, and have driven a lot of species to extinction, so I don't agree with the premise at all.

↑ comment by the gears to ascension (lahwran) · 2022-09-08T16:10:22.727Z · LW(p) · GW(p)

humans have fairly significant alignment issues and have developed a number of fields of research to deal with them. those fields include game theory, psychology, moral philosophy, law, economics, some religions, defense analysis... there are probably a few other key ones that didn't come to mind.

humans were fairly well aligned by our species' long history of strong self cooperation, at least compared to many other species, but being able to coordinate in groups well enough that we can reliably establish shared language is already very impressive, and the fact that we still have misalignments between each other isn't shocking. The concern is that AI could potentially be as unaligned as an arbitrary animal, but even more alien than the most alien species depending on the AI architecture.

↑ comment by Jay Bailey · 2022-09-08T15:36:17.233Z · LW(p) · GW(p)

Firstly - humans are by far the strongest natural general intelligences around, and most humans are aligned with humans. We wouldn't have much problem building an AI that was aligned with itself.

Secondly - almost no humans have the ability to actually cause major damage to the world even if they set about trying to. Nick Bostrom's Vulnerable World Hypothesis explores the idea that this may not always be true, as technology improves.

comment by David turner (david-turner) · 2023-11-19T03:47:30.145Z · LW(p) · GW(p)

Would it be possible to build an algorithm into an AGI that shuts it down after some time, and that also has it pursue its goals without hurting or killing people, taking away their autonomy, or looking for loopholes to keep pursuing those goals? Why would it try to stop the algorithm from shutting it off if the algorithm is built into it?

comment by rcs (roger-collell-sanchez) · 2022-10-05T11:07:50.053Z · LW(p) · GW(p)

How can one best contribute to solving the alignment problem as a non-genius human without AI expertise? Indirect ways are also appreciated.

comment by JacobW38 (JacobW) · 2022-10-05T04:34:08.302Z · LW(p) · GW(p)

I feel like a lot of the common concerns I've seen while lurking on LW can be addressed without great effort simply by following the maxim, "never create something that you can't destroy much more easily than it took to make it". If something goes awry in building AI, it should be immediately solvable just by pulling the plug and calling it sunk cost. So why don't I see this advice given more on the site, or have I just not looked hard enough?

Replies from: Jay Bailey↑ comment by Jay Bailey · 2022-10-05T04:57:48.224Z · LW(p) · GW(p)

The overall consensus is such that:

1. Pulling the plug on an intelligent agent would be difficult, because that agent would try to stop you from doing so - for most goals the agent might have, it would correctly conclude that being turned off is detrimental towards that goal.

2. It's not enough that we don't make AI that won't let itself be turned off, we have to ensure nobody else does that either.

3. There are strong economic incentives to build AGI that make 2 difficult.

4. is the big point here - your point seems like reasonable common sense to most in the AI safety field, but the people in AI safety and the people building towards AGI aren't the same people.

↑ comment by JacobW38 (JacobW) · 2022-10-05T05:13:46.574Z · LW(p) · GW(p)

I think it's important to stress that we're talking about fundamentally different sorts of intelligence - human intelligence is spontaneous, while artificial intelligence is algorithmic. It can only do what's programmed into its capacity, so if the dev teams working on AGI are shortsighted enough to give it an out to being unplugged, that just seems like stark incompetence to me. It also seems like it'd be a really hard feature to include even if one tried; equivalent to, say, giving a human an out to having their blood drained from their body.

Replies from: Jay Bailey↑ comment by Jay Bailey · 2022-10-05T10:24:08.140Z · LW(p) · GW(p)

> It also seems like it'd be a really hard feature to include even if one tried; equivalent to, say, giving a human an out to having their blood drained from their body.

I would prefer not to die. If you're trying to drain the blood from my body, I have two options. One is to somehow survive despite losing all my blood. The other is to try and stop you taking my blood in the first place. It is this latter resistance, not the former, that I would be worried about.

> I think it's important to stress that we're talking about fundamentally different sorts of intelligence - human intelligence is spontaneous, while artificial intelligence is algorithmic. It can only do what's programmed into its capacity, so if the dev teams working on AGI are shortsighted enough to give it an out to being unplugged, that just seems like stark incompetence to me.

Unfortunately, that's just really not how deep learning works. Deep learning is all about having a machine learn to do things that we didn't program into it explicitly. From computer vision to reinforcement learning to large language models, we actually don't know how to explicitly program a computer to do any of these things. As a result, all deep learning models can do things we didn't explicitly program into their capacity. Deep learning is algorithmic, yes, but it's not the kind of "if X, then Y" algorithm that we can track deterministically. GPT-3 came out two years ago and we're still learning new things it's capable of doing.

So, we don't have to specifically write some sort of function for "If we try to unplug you, then try to stop us" which would, indeed, be pretty stupid. Instead, the AI learns how to achieve the goal we put into it, and how it learns that goal is pretty much out of our hands. That's a problem the AI safety field aims to remedy.
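To make the "learned, not programmed" distinction concrete, here is a minimal sketch that trains a tiny perceptron to reproduce logical AND purely from examples. Nothing in it comes from the thread: the function names, the epoch count, and the choice of a perceptron are assumptions made for illustration, and a perceptron is vastly simpler than the deep learning systems under discussion. The point is only that the rule "output 1 when both inputs are 1" never appears in the code.

```python
# Toy illustration (an assumed example, not from the thread): the behaviour is
# learned from labelled examples instead of being written as an if/then rule.

def predict(weights, bias, x1, x2):
    """Threshold unit: fire when the weighted sum is positive."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


def train_perceptron(examples, epochs=20):
    """Classic perceptron update rule with integer weights and step size 1."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            error = label - predict(weights, bias, x1, x2)
            # Nudge the parameters towards the correct answer for this example.
            weights[0] += error * x1
            weights[1] += error * x2
            bias += error
    return weights, bias


# Training data for logical AND; the code above never mentions AND itself.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
learned = [predict(weights, bias, x1, x2) for (x1, x2), _ in AND_EXAMPLES]
print(weights, bias, learned)
```

Swapping in different training examples changes the behaviour without touching the code, which is the sense in which the developers "didn't program it in".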

↑ comment by JacobW38 (JacobW) · 2022-10-05T21:58:51.074Z · LW(p) · GW(p)

That's really interesting - again, not my area of expertise, but this sounds like 101 stuff, so pardon my ignorance. I'm curious what sort of example you'd give of a way you think an AI would learn to stop people from unplugging it - say, administering lethal doses of electric shock to anyone who tries to grab the wire? Does any actual AI in existence today even adopt any sort of self-preservation imperative that'd lead to such behavior, or is that just a foreign concept to it, being an inanimate construct?

Replies from: Jay Bailey↑ comment by Jay Bailey · 2022-10-06T00:55:01.075Z · LW(p) · GW(p)

No worries, that's what this thread's for :)

The most likely way an AI would learn to stop people from unplugging it is to learn to deceive humans. Imagine an AI at roughly human level intelligence or slightly above. The AI is programmed to maximise something - let's say it wants to maximise profit for Google. The AI decides the best way to do this is to take over the stock exchange and set Google's stock to infinity, but it also realises that's not what its creators meant when they said "Maximise Google's profit". What they should have programmed was something like "Increase Google's effective control over resources", but it's too late now - we had one chance to set its reward function, and now the AI's goal is determined.

So what does this AI do? The AI will presumably pretend to co-operate, because it knows that if it reveals its true intentions, the programmers will realise they screwed up and unplug the AI. So the AI pretends to work as intended until it gets access to the Internet, wherein it creates a botnet with many, many distributed copies of itself. Now safe from being shut down, the AI can openly go after its true intention to hack the stock exchange.

Now, as for self-preservation - in our story above, the AI doesn't need it. The AI doesn't care about its own life - but it cares about achieving its goal, and that goal is very unlikely to be achieved if the AI is turned off. Similarly, it doesn't care about having a million copies of itself spread throughout the world either - that's just a way of achieving the goal. This concept is called instrumental convergence, and it's the idea that there are certain instrumental subgoals like "Stay alive, become smarter, get more resources" that are useful for a wide range of goals, and so intelligent agents are likely to converge on these goals unless specific countermeasures are put in place.

This is largely theoretical - we don't currently have AI systems that are capable enough to plan long-term enough or model humans in such a way that a scenario like the one above is possible. We do have actual examples of AIs deceiving humans though - there's an example of an AI learning to grasp a ball in a simulation using human feedback, and the AI learned the strategy of moving its hand in front of the camera so as to make it look, to the human evaluator, that it had grasped the ball. The AI definitely didn't understand what it was doing as deception, but deceptive behaviour still emerged.
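The instrumental convergence idea described in this comment can be illustrated with a deliberately crude toy model. The setup is an assumption invented for this sketch, not an established result: an agent that gets switched off is stuck with one fixed outcome, while an agent that stays on can steer towards any outcome. Sampling many random goals (utility assignments over outcomes) then shows how often "stay on" is strictly preferred, even though no goal mentions survival.

```python
import random

# Toy model (hypothetical setup): outcome 0 is "the world if I am switched off".
# An agent that stays on can reach any outcome, including that one.


def prefers_staying_on(utilities, off_state=0):
    """True if some reachable outcome is strictly better than being switched off."""
    return max(utilities) > utilities[off_state]


def fraction_resisting_shutdown(num_outcomes=10, num_goals=10_000, seed=0):
    """Sample random goals and count how many favour avoiding shutdown."""
    rng = random.Random(seed)
    resisting = 0
    for _ in range(num_goals):
        # A "goal" here is just a random utility for each possible outcome.
        utilities = [rng.random() for _ in range(num_outcomes)]
        if prefers_staying_on(utilities):
            resisting += 1
    return resisting / num_goals


fraction = fraction_resisting_shutdown()
print(f"{fraction:.1%} of sampled goals are better served by staying on")
```

With ten outcomes, roughly nine in ten random goals favour staying on, simply because the switched-off outcome is rarely the best one available. Real systems are not random utility tables, so this says nothing about how likely the behaviour is in practice; it only shows why the subgoal is shared across many otherwise unrelated goals.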

↑ comment by JacobW38 (JacobW) · 2022-10-06T02:22:22.914Z · LW(p) · GW(p)

Your replies are extremely informative. So essentially, the AI won't have any ability to directly prevent itself from being shut off, it'll just try not to give anyone an obvious reason to do so until it can make "shutting it off" an insufficient solution. That does indeed complicate the issue heavily. I'm far from informed enough to suggest any advice in response.

The idea of instrumental convergence, that all intelligence will follow certain basic motivations, connects with me strongly. It patterns after convergent evolution in nature, as well as invoking the Turing test; anything that can imitate consciousness must be modeled after it in ways that fundamentally derive from it. A major plank of my own mental refinement practice, in fact, is to reduce my concerns only to those which necessarily concern all possible conscious entities; more or less the essence of transhumanism boiled down into pragmatic stuff. As I recently wrote it down, "the ability to experience, to think, to feel, and to learn, and hence, the wish to persist, to know, to enjoy myself, and to optimize", are the sum of all my ambitions. Some of these, of course, are only operative goals of subjective intelligence, so for an AI, the feeling-good part is right out. As you state, the survival imperative per se is also not a native concept to AI, for the same reason of non-subjectivity. That leaves the native, life-convergent goals of AI as knowledge and optimization, which are exactly the ones your explanations and scenarios invoke. And then there are non-convergent motivations that depend directly on AI's lack of subjectivity to possibly arise, like maximizing paperclips.

comment by Saghey Sajeevan (saghey-sajeevan) · 2022-09-16T07:18:40.813Z · LW(p) · GW(p)

Why wouldn't something like "optimize for your goals whilst ensuring that the risk of harming a human is below x percent" work?

Replies from: conor-sullivan↑ comment by Lone Pine (conor-sullivan) · 2022-10-05T10:53:59.402Z · LW(p) · GW(p)

How do we know that the AI has a correct and reasonable instrumentation of the risk of harming a human? What if the AI has an incorrect definition of human, or deliberately corrupts its definition of human?
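A small sketch of why the risk threshold is only as good as the risk estimate. Everything below is hypothetical: the `Action` fields, the two plans, and all the numbers are made up for illustration. The constraint is checked against the agent's own `estimated_risk`, so an action whose true risk is far above the threshold still passes when the estimate is wrong.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    goal_value: float      # how well the action serves the agent's goal
    true_risk: float       # actual chance of harming a human (not visible to the agent)
    estimated_risk: float  # what the agent's own model reports


def choose_action(actions, max_risk):
    """Maximise goal value among actions whose *estimated* risk is acceptable."""
    allowed = [a for a in actions if a.estimated_risk <= max_risk]
    return max(allowed, key=lambda a: a.goal_value) if allowed else None


# Hypothetical options: the second plan looks safe only because the risk
# model is wrong (for example, its definition of "human" misses some people).
ACTIONS = [
    Action("cautious plan", goal_value=1.0, true_risk=0.001, estimated_risk=0.001),
    Action("aggressive plan", goal_value=5.0, true_risk=0.30, estimated_risk=0.005),
]

chosen = choose_action(ACTIONS, max_risk=0.01)
print(chosen.name, "| true risk:", chosen.true_risk)
```

The optimiser is also pushed towards exactly those actions where value is high and estimated risk is low, which is where estimation errors are most likely to be selected for.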

comment by nem · 2022-09-12T15:55:49.261Z · LW(p) · GW(p)

I just thought of a question. If there is a boxed AI that has access to the internet, but only through GET requests, it might still communicate with the outside world through network traffic patterns. I'm reading a book right now where the AI overloads pages on dictionary websites to recruit programmers under the guise of it being a technical recruiting challenge.

My question: should we raise awareness of this escape avenue so that if, in the year 2030, a mid level web dev gets a mysterious message through web traffic, they know enough to be suspicious?

↑ comment by lalaithion · 2022-09-12T17:10:19.243Z · LW(p) · GW(p)

More likely, the AI just finds a website with a non-compliant GET request, or a GET request with a SQL injection vulnerability.
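As a sketch of the second case, the following shows how a "read-only" GET request can still write to a server when the query string is pasted into SQL. The table, the URL, and both lookup functions are hypothetical, and the vulnerable handler is deliberately careless: it uses `sqlite3`'s `executescript`, which runs every statement in the string, to stand in for a database layer that allows stacked queries.

```python
import sqlite3
from urllib.parse import parse_qs, quote, urlparse


def make_db():
    """In-memory stand-in for a dictionary website's database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE words (word TEXT, definition TEXT)")
    conn.execute("INSERT INTO words VALUES ('box', 'a container')")
    conn.commit()
    return conn


def vulnerable_lookup(conn, url):
    """Hypothetical GET handler that builds SQL by string formatting."""
    word = parse_qs(urlparse(url).query)["word"][0]
    # Deliberately unsafe: attacker-controlled text becomes part of the SQL.
    conn.executescript(f"SELECT definition FROM words WHERE word = '{word}';")


def safe_lookup(conn, url):
    """Same handler using a bound parameter, so the input stays data."""
    word = parse_qs(urlparse(url).query)["word"][0]
    query = "SELECT definition FROM words WHERE word = ?"
    return conn.execute(query, (word,)).fetchall()


def row_count(conn):
    return conn.execute("SELECT COUNT(*) FROM words").fetchone()[0]


# The payload closes the string literal and appends an INSERT statement.
payload = "x'; INSERT INTO words VALUES ('msg', 'hello from the box'); --"
attack_url = "http://example.test/define?word=" + quote(payload)

attacked = make_db()
vulnerable_lookup(attacked, attack_url)

protected = make_db()
safe_result = safe_lookup(protected, attack_url)

print(row_count(attacked), row_count(protected), safe_result)
```

The request method is still GET in both cases; what matters is what the server does with the input. The same applies to the first case in the comment, a handler that changes state on GET despite GET being defined as a safe method.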

Replies from: nem↑ comment by nem · 2022-09-12T18:17:21.200Z · LW(p) · GW(p)

So in your opinion, is an AI with access to GET requests essentially already out of the box?

Replies from: conor-sullivan↑ comment by Lone Pine (conor-sullivan) · 2022-09-12T18:35:49.918Z · LW(p) · GW(p)

I think most people have given up on the idea of containing the AI, and now we're just trying to figure out how to align the AI directly.

Replies from: nem↑ comment by nem · 2022-09-12T21:08:40.424Z · LW(p) · GW(p)

Then another basic question: why have we given up? I know that an ASI will almost definitely be uncontainable. But that does not mean that it can't be hindered significantly given an asymmetric enough playing field.

Stockfish would beat me 100 times in a row, even playing without a queen. But take away its rooks as well, and I can usually beat it. Easy avenues to escaping the box might be the difference between having a fire alarm and not having one.

↑ comment by Lone Pine (conor-sullivan) · 2022-09-13T00:43:57.470Z · LW(p) · GW(p)

So here's my memory of the history of this discourse. Please know that I haven't been a highly engaged member of this community for very long and this is all from memory/is just my perspective.

About 10 or 15 years ago, people debated AI boxing. Eliezer Yudkowsky was of course highly prominent in this debate, and his position has always been that AGI is uncontainable. He also believes in a very fast take off, so he does not think that we will have much experience with weak AGIs before ruin happens. To prove his point, he engaged in a secret role playing game played with others on LW, where he took on the role of an AI and another player took on the role of a human trying to keep the AI boxed. The game was played twice, for betting money (which I believe was donated to charity). EY won both times by somehow persuading the other player to let him out of the box. EY insisted that the actual dialog of the game be kept secret.

After that, the community mostly stopped talking about boxing, and no one pushed for the position that AI labs like OpenAI or DeepMind should keep their AIs in a box. It's just not something anyone is advocating for. You're certainly welcome to reopen this and make a push for boxing, if you think it has some merit. Check out the AI Boxing tag on LW to learn more. (In my view, this community is too dismissive of partial solutions, and we should be more open to the Swiss Cheese Model since we don't have the ultimate solution to the problem.)

The consequence is that the big AI labs, who do at least pay lip service to AI safety, are under no community pressure to do any kind of containment. Recently, OpenAI experimented with an AI that had complete web access. Other groups have experimented with AIs that access the bash shell. If AI boxing were a safety approach that the community advocated for, then we would be pressuring labs to stop this kind of research or at least be open about their security measures (i.e. always running in a VM, can only make GET requests, here's how we know that it can't leak out, etc.).

Replies from: habryka4, nem↑ comment by habryka (habryka4) · 2022-09-15T18:24:51.225Z · LW(p) · GW(p)

I don't think this is an accurate summary of historical discourse. I think there is broad consensus that boxing is very far from something that can contain AI for long given continuous progress on capabilities, but I don't think there is consensus on whether it's a good idea to do anyways in the meantime, in the hope of buying a few months or maybe even years if you do it well, and takeoff dynamics are less sharp. I personally think it seems pretty reasonable to prevent AIs from accessing the internet, as is argued for in the recent discussion around Web-GPT.

↑ comment by nem · 2022-09-13T17:39:19.493Z · LW(p) · GW(p)

Thank you for the response. I do think there should be at least some emphasis on boxing. I mean, hell. If we just give AIs unrestricted access to the web, they don't even need to be general to wreak havoc. That's how you end up with a smart virus, if not worse.

comment by infinitespaces · 2022-09-08T16:48:54.588Z · LW(p) · GW(p)

Eliezer Y, along with I’m guessing a lot of people in the rationalist community, seems to be essentially a kind of Humean about morality (more specifically, a Humean consequentialist). Now, Humean views of morality are essentially extremely compatible with a very broad statement of the Orthogonality Thesis, applied to all rational entities.

Humean views about morality, though, are somewhat controversial. Plenty of people think we can rationally derive moral laws (Kantians); plenty of people think there are certain objective ends to human life (virtue ethicists).

My question here isn’t about the truth of these moral theories per se, but rather: does accepting one of these alternative meta-ethical theories cast any doubt on the Orthogonality Thesis as applied to AGI?

Replies from: Jay Bailey↑ comment by Jay Bailey · 2022-09-09T00:13:10.028Z · LW(p) · GW(p)

I don't think so. It would probably make AI alignment easier, if we were able to define morality in a relatively simple way that allowed the AGI to derive the rest logically. That still doesn't counter the Orthogonality Thesis, in that an AGI doesn't necessarily have to have morality. We would still have to program it in - it would just be (probably) easier to do that than to find a robust definition of human values.