
It seems the recent tariff designs are already serious policy.

If the tariffs are revoked, reversed, unenforced, or blocked in some way, that strongly points towards the possibility that they were understood to be unenforceable all along, and that their imposition and failure was just kayfabe set up by the president to look like he tried and to juxtapose himself against his enemies, depicting them as at fault for making the shiny new policy impossible. This is not unique to Trump; it has been a very prevalent practice among presidents and Congress since at least the early Cold War, and possibly long before that (I don't have the energy to determine the exact age, only that it's an old, old practice and very widespread).

What do you mean by "added as a new centerpiece of the tax and spending regime"?

Sorry if I wasn't clear here. Tariffs were a very large portion of government revenue, and therefore spending, until the 1950s, when income tax grew (largely because workers were pretty risk-intolerant, and mass literacy made them more willing and able to file paperwork than to deal with other avenues of taxation) and government as we know it pivoted to revolving around income taxes instead. Tariff-based taxation governments and income-based taxation governments are pretty different government/civilizational paradigms, similar to how distinct the "dark forest" government paradigm in Africa was centuries ago, where villages built near roads were more likely to be enslaved, conscripted, or have forced labor quotas imposed on them, resulting in villages largely being located far from roads.

What are the alternative futures?

In the high-tariff scenario, governments and militaries (in Europe and Asia too, not just the US) have probably predicted that international trade is sufficiently robust, and the participants sufficiently risk-averse, that imports can be milked, at least relative to the risks of continuing to depend so heavily on income taxation (e.g. maybe tax evasion advice gets popular on TikTok or something). This would not surprise me, as many of the best minds in the US and Chinese militaries have spent more than a decade thinking very hard about economics and international trade as warfare, and it seems to me like they at least believe they've developed a solid understanding of what economic collapse contingencies look like and how much stress their economies and trade networks can take. This means international trade will be much more expensive, as it is basically heavily taxed and those tax rates can change on a dime, which greatly increases everyone's risk premiums. But it might also cause governments to prioritize the robustness of international trade if it becomes the main revenue source, in addition to forcing prioritization of the domestic economy because the ground has been burned behind them (investing in industries that depend on imports or exports is riskier and less viable due to the deadweight loss and risk premiums added to international trade). Generally it means broader government control as well as less stability, since income taxes can't really change on a dime in response to a nation's actions or target specific industries, and tariffs can.

Although the risk of frogboiling human rights abuses won't go away anytime soon, it's also important to keep in mind that Trump got popular by doing whatever makes the left condemn him because right-wingers seem to interpret that as a costly credible signal of commitment to them/the right/opposing the left, and his administration has spent a decade following this strategy as consistently as can reasonably be considered possible for a sitting president, most of the time landing on strategies to provoke condemnation from liberals in non-costly or ambiguously costly ways (see Jan 6th).

See Scott Alexander's classic post It's Bad On Purpose To Make You Click; engagement bait has been the soul of Trump's political persona since it emerged in the mid-2010s, and it will be interesting going forward to see whether the recent tariff designs will end up as serious policy and be added as a new centerpiece of the tax and spending regime (which had taken a stable form since the Vietnam War and the end of the Gold Standard[1]).

[1] The case could also be made that the computerization of Wall Street during the late 70s and 80s transformed the economy radically enough that the current tax and spending paradigm could be considered more like 30-40 years old, or you could pin it to the 1950s when the tariff paradigm ended; either way, the modern emergence of massive recessions, predictive analytics, pandemic risk, and international military emphasis on trade geopolitics all indicate potential for elite consensus around unusually large macroeconomic paradigm shifts.

An area where I expect further work to pay off is self-visualization, which is fairly powerful (e.g. visualizing yourself doing something for 10 hours will generally go a really long way toward getting you there; for the 10-hour version, it's more a question of what to do when something goes wrong enough to make the actual events sufficiently different from what you imagined, and how to do it in less than 10 hours).

More like a bin than heuristics, and just attacking/harming (particularly a mutually understood Schelling point for attacking, with partial success being more common and more complicated due to people adversarially aiming for that) rather than dehumanizing, which is a loaded term.

My apologies, this post was pointing/grasping in a general direction and I didn't put much effort into editing it; there was a typo at the beginning where I seem to have used the wrong word to refer to the slot concept. I just fixed it:

Humans seem to have something like an "acceptable target slot" or slots.

Acquiring control over this ~~concept~~ slot, by any means, gives a person or group incredible leeway to steer individuals, societies, and cultures.

Did that help?

Humans seem to have something like an "acceptable target slot" or slots.

Acquiring control over this concept, by any means, gives a person or group incredible leeway to steer individuals, societies, and cultures. These capabilities are sufficiently flexible and powerful that immunity has often already been built up, especially because the historical record shows that overuse is prevalent; this means that methods of taking control include expensive strategies, or strategies complicated enough to be hard to track, like changing the behavior of a targeted individual or demographic or type-of-person in order to more easily depict them as acceptable targets, noticing and selecting the best option for acceptable targets, and/or cleverly chaining acceptable targethood from one established type-of-person to another by drawing attention to similarities that were actually carefully selected for this (or even deliberately induced in one or both of them).

9/11 and Gaza are obvious potential examples, and most wars in the last century feature this to some extent, but acceptable-target-slot exploitation is much broader than that; on a more local scale, most interpersonal conflict involves DARVO to some extent, especially since the human brain ended up wired to lean heavily into that without consciously noticing.

A solution is to take an agent perspective, and pay closer attention (specifically more than the amount that's expected or default) any time a person or institution uses reasoning to justify harming or coercing other people or institutions, and to assume that such situations might have been structured to be cognitively difficult to navigate and should generally be avoided or mitigated if possible. If anyone says that some kind of harm is inevitable, notice if someone is being rewarded for gaining access to the slot; many things that seem inevitable are actually a skill issue and only persist because insufficient optimization power has been pointed at them; the human race is currently pushing the frontier for creating non-toxic spaces which are robust to both internal and external factors and actors.

Base rates of harmful tendencies are high among humans (e.g. easily noticing or justifying opportunities to weaken or harm others, or the mind coming alive while doing so), but higher base rates (of any dynamic, not just things that impact various people's acceptable target slots) also increase the proportion of profoundly strategic people on earth who find that dynamic cognitively available and hold it as a gear in their models and plots.

In the ancestral environment, allies and non-enemies who visibly told better lies probably offered more fitness than allies and non-enemies who visibly made better tools, let alone invented better tools (which probably happened once in 10-1000 generations or something). In this case, lying ability can only be visible, and become a Schelling point that increases the fitness of both the deceiver and the identifier, if it is revealed frequently enough, whether via bragging drive, tribal reputation/rumors, or detection by the people in the tribe who are unusually good at sensing deception.

What ratio of genetic vs memetic (e.g. the line "he's a bastard, but he's our bastard") were you thinking of?

You don't use eloquence for that. Eloquence is more for e.g. waking someone up and making it easier for them to learn and remember ideas that you think they'll be glad to have learned and remembered.

If you want to express how important you think something is, you can make a public prediction that it's important and explain why you made that prediction, and people who know things you don't can put your arguments into the context of their own knowledge and make their own predictions.

I might be wrong, but the phrase "conspiracy theory" seems to be a lot more meaningful to you than it is to me. I recommend maybe reading Cached Thoughts.

A "conspiracy" is something people do when they want something big, because multiple people are necessary to do big things, and stealth is necessary to prevent randos from interfering.

A "theory" is a hypothesis, an abstraction that cannot be avoided by anyone other than people rigidly committed to only thinking about things that they are nearly 100% certain are true. If you want to do thinking when it's hard instead of just when it's easy and anyone can do it, then you need theories.

A "conspiracy theory" is a label for a theory that makes most people believe there is a social consensus against that theory, and makes incompetent internet users take it up as a cause (as a search for truth, which is hopeless for them in particular as they are not competitive in the truthfinding market), which further associates it with internet degeneracy.

NEVER WRITE ON THE CLIPBOARD WHILE THEY ARE TALKING.

If you're interested in how writing on a clipboard affects the data, sure, that's actually a pretty interesting experimental treatment. It should not be considered the control.

Also, the dynamics you described with the protests are conjunctive. These aren't just points of failure, they're an attack surface, because any political system has many moving parts, and a large proportion of the moving parts are diverse optimizers.

"Power fantasies" are actually a pretty mundane phenomenon given how human genetic diversity shook out; most people intuitively gravitate towards anyone who looks and acts like a tribal chief, or towards the possibility that they themselves or someone they meet could become (or already be) a tribal chief, via constructing some abstract route that requires forging a novel path instead of following other people's.

Also a mundane outcome of human genetic diversity is how division of labor shakes out: people notice they were born with savant-level skills and that they can sink thousands of hours into skills like musical instruments, programming, data science, sleight of hand party tricks, social/organizational modelling, painting, or psychological manipulation. I expect the pool to be much larger for power-seeking-adjacent skills than for art, and that some proportion of that larger pool of people managed to get their skills' mental muscle memory honed so intensely that everyone should feel uncomfortable sharing a planet with them.

How to build a lie detector app/program to release to the public (preferably packaged with advice/ideas on ways to use and strategies for marketing the app, e.g. packaging it with an animal body-language to english translator).

Gwern gave a list in his Nootropics megapost.

This got me thinking, how much space would it take up in Lighthaven to print a copy of every lesswrong post ever written? If it's not too many pallets then it would probably be a worthy precaution.

Develop metrics that predict which members of the technical staff have aptitude for world modelling.

In the Sequences post Faster than Science, Yudkowsky wrote:

there are queries that are not binary—where the answer is not "Yes" or "No", but drawn from a larger space of structures, e.g., the space of equations. In such cases it takes far more Bayesian evidence to promote a hypothesis to your attention than to confirm the hypothesis.

If you're working in the space of all equations that can be specified in 32 bits or less, you're working in a space of 4 billion equations. It takes far more Bayesian evidence to raise one of those hypotheses to the 10% probability level, than it requires further Bayesian evidence to raise the hypothesis from 10% to 90% probability.

When the idea-space is large, coming up with ideas worthy of testing, involves much more work—in the Bayesian-thermodynamic sense of "work"—than merely obtaining an experimental result with p<0.0001 for the new hypothesis over the old hypothesis.
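The arithmetic in the quoted passage can be checked directly. This is a minimal sketch that measures evidence in bits (log2 of the likelihood ratio, i.e. of the change in odds) and assumes a uniform prior over all 2^32 bit patterns, treating each as one distinct equation; that prior is an illustrative assumption, not something the quote specifies, and `bits_to_move` is a hypothetical helper name.

```python
import math


def bits_to_move(p_from: float, p_to: float) -> float:
    """Bits of Bayesian evidence (log2 of the odds ratio) needed to move
    a hypothesis from probability p_from to probability p_to."""
    odds_from = p_from / (1 - p_from)
    odds_to = p_to / (1 - p_to)
    return math.log2(odds_to / odds_from)


# Assumption: uniform prior over every equation expressible in 32 bits.
n_hypotheses = 2 ** 32
prior = 1 / n_hypotheses

locate = bits_to_move(prior, 0.10)   # promote from the prior to 10%
confirm = bits_to_move(0.10, 0.90)   # raise from 10% to 90%

print(f"promote to attention (prior -> 10%): {locate:.2f} bits")
print(f"confirm (10% -> 90%):                {confirm:.2f} bits")
```

Under these assumptions, locating the hypothesis costs roughly 28.8 bits while confirming it costs roughly 6.3 bits, which is the asymmetry the quote describes.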

Yudkowsky's point, along with the way that news outlets and high school civics classes describe an alternate reality that looks realistic to lawyers/sales/executive types but is too simple, cartoony, narrative-driven, and unhinged from reality for quant people to feel good about diving into, implies that properly retooling some amount of dev-hours into efficient world-modelling upskilling is low-hanging fruit (e.g. figure out a way to distill and hand them a significance-weighted list of concrete information about the history and root causes of the US government's focus on domestic economic growth as a national security priority).

Prediction markets don't work for this metric, as they measure the final product, not aptitude/expected thinkoomph. For example, a person who feels good thinking/reading about the SEC, and doesn't feel good thinking/reading about the 2008 recession or COVID, will have a worse Brier score on matters related to the root cause of why AI policy is the way it is. But feeling good about reading about e.g. the 2008 recession will not consistently get reasonable people to the point where they grok modern economic warfare and the policies and mentalities that emerge from the ensuing contingency planning. Seeing if you can fix that first is one of a long list of prerequisites for seeing what they can actually do, and handing someone a sheet of paper that streamlines the process of fixing long lists of hiccups like these is one way to do this sort of thing.

Figuring-out-how-to-make-someone-feel-alive-while-performing-useful-task-X is an optimization problem (see Please Don't Throw Your Mind Away). It has substantial overlap with measuring whether someone is terminally rigid/narrow-skilled, or if they merely failed to fully understand the topology of the process of finding out what things they can comfortably build interest in. Dumping extant books, 1-on-1s, and documentaries on engineers sometimes works, but it comes from an old norm and is grossly inefficient and uninspired compared to what Anthropic's policy team is actually capable of. For example, imagine putting together a really good fanfic where HPJEV/Keltham is an Anthropic employee on your team doing everything I've described here and much more, then printing it out and handing it to people whom you, in reality, already predicted to have world-modelling aptitude; assuming it works great and goes really well, I consider that the baseline for what something would look like if sufficiently optimized and novel to be considered par.

The essay is about something I call “psychological charge”, where the idea is that there are two different ways to experience something as bad. In one way, you kind of just neutrally recognize a thing as bad.

Nitpick: a better way to write it is "the idea is there are at least two different ways..." or "major ways" etc. to highlight that those are two major categories you've noticed, but there might be more. The primary purpose of knowledge work is still to create cached thoughts inside someone's mind, and, as in programming, it's best to make your concepts as modular as possible so you and others are primed to refine them further and/or notice more opportunities to apply them.

Interestingly enough, this applies to corporate executives and bureaucracy leaders as well. Many see the world in a very zero-sum way (300 years ago, and for most of history before that, virtually all top intellectuals in virtually all civilizations saw civilizational progress as a myth and history as an endless cycle of power being won and lost by people born/raised to be unusually strategically competitive) but fail to realize that, in aggregate, their contempt for cause-having people ("oh, so you think you're better than me, huh? you think you're hot shit?") has turned into opposition to positive-sum folk, itself a cause of sorts, though one with an aversion to activism and assembly and anything in that narrow brand of audacious display.

It doesn't help that most 'idealistic' causes throughout human history had a terrible epistemic backing.

If you converse directly with LLMs (e.g. instead of through a proxy or some very clever tactic I haven't thought of yet), which I don't recommend, especially not describing how your thought process works, one thing to do is regularly ask it "what does my IQ seem like based on this conversation? I already know this is something you can do. must include number or numbers".

Humans are much smarter and better at tracking results instead of appearances, but feedback from results is pretty delayed, and LLMs have quite a bit of info about intelligence to draw from. Beyond just IQ, copy-pasting paragraphs describing concepts like thinkoomph works great too, and this post seems like something you wouldn't want to exclude from that standard prompt.

One thing that might be helpful is the neurology of executive functioning. Activity in any part of the brain suppresses activity elsewhere; on top of reinforcement, this implies that state and states are one of the core mechanisms for understanding self-improvement and getting better output.

Your effort must scale to be appropriate to the capabilities of the people trying to remove you from the system. You have to know if they're the type of person who would immediately default to checking the will.

More understanding and calibration towards what modern assassination practice you should actually expect is mandatory, because you're dealing with people putting some amount of thinkoomph into making your life plans fail, so your cost of survival is determined by what you expect your attack surface to look like. The appropriate-cost and the cost-you-decided-to-pay vary by OOMs depending on the circumstances, particularly the intelligence, resources, and fixations of the attacker. For example, the fact that this happened 2 weeks after assassination got all over the news is a fact that you don't have the privilege of ignoring if you want the answer, even though that particular fact will probably turn out to be unhelpful, e.g. because the whole thing was probably just a suicide due to the base rates of disease and accidents and suicide being so god damn high.

If this sounds wasteful, it is. It's why our civilization has largely moved past assassination, even though getting-people-out-of-the-way is so instrumentally convergent for humans. We could end up in a cycle where assassination gets popular again after people start excessively standing in each other's way (knowing they won't be killed for it), or in a stable cultural state like the Dune books or the John Wick universe, in which case we've just been living in a long trough where elites aren't physically forced to live their entire lives like mob bosses playing chess games against invisible adversaries.

So don't think that if you only follow the rules of Science, that makes your reasoning defensible.

There is no known procedure you can follow that makes your reasoning defensible.

It was more of a 1970s-90s phenomenon, actually; if you compare the best 90s movies (e.g. Terminator 2) to the best 60s movies (e.g. 2001: A Space Odyssey), it's pretty clear that directors just got a lot better at doing more stuff per second. Older movies are absolutely a window into a higher/deeper culture/way of thinking, but OOMs less efficient than e.g. reading Kant/Nietzsche/Orwell/Asimov/Plato. But I wouldn't be surprised if modern film is severely mindkilling and older film is the best substitute.

The content-per-minute rate is too low; it follows 1960s film standards, when audiences weren't interested in science fiction films unless concepts were introduced to them very, very slowly (at the time they were quite satisfied by this due to lower standards, similar to Shakespeare).

As a result it is not enjoyable (people will be on their phones) unless you spend much of the film either thinking or talking with friends about how it might have affected the course of science fiction as a foundational work in the genre (almost every sci-fi fan and writer at the time watched it).

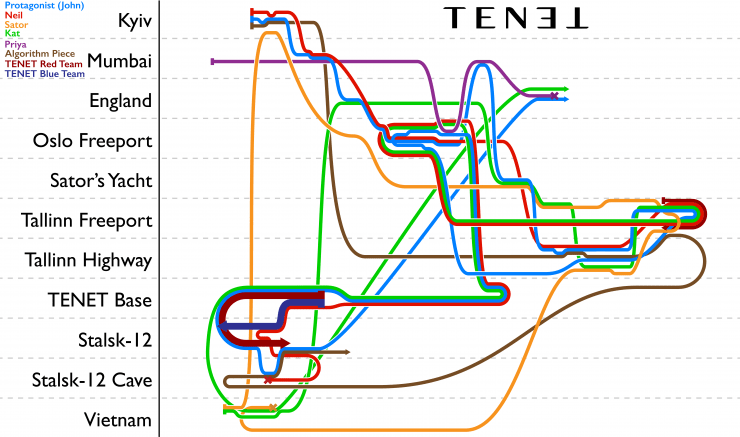

Tenet (2020) by Christopher Nolan revolves around recursive thinking and responding to unreasonably difficult problems. Nolan introduces the time-reversed material as the core dynamic, then iteratively increases the complexity from there, in ways specifically designed to ensure that as much of the audience as possible picks up as much recursive thinking as possible.

This chart describes the movement of all key characters and plot elements through the film; it is actually very easy to follow for most people. But you can also print out a bunch of copies and hand them out before the film (it isn't a spoiler so long as you don't look closely at the key).

Most of the value comes from an Eat the Instructions-style mentality, as both the characters and the viewer pick up on unconventional methods to exploit the time-reversing technology, only to be shown even more sophisticated strategies and walked through how they work and their full implications.

It also ties into scope sensitivity, but it focuses deeply on the angles of interfacing with other agents and their knowledge, and responding dynamically to mistakes and failures (though not anticipating them), rather than simply orienting yourself to mandatory number crunching.

The film touches on cooperation and cooperation failures under anomalous circumstances, particularly the circumstances introduced by the time reversing technology.

The most interesting of these was also the easiest to miss:

The impossibility of building trust between the hostile forces from the distant future and the characters in the story who make up the opposition faction. The antagonist, dying from cancer and selected because his personality was predicted to be hostile to the present and sympathetic to the future, was simply sent instructions and resources from the future, and decided to act as their proxy in spite of ending up with a great life and being unable to verify their accuracy or the true goals of the hostile force. As a result, the protagonists of the story ultimately build a faction that takes on a life of its own and dooms both their friends and the entire human race to death by playing a zero sum survival game with the future faction, due to their failure throughout the film to think sufficiently laterally and their inadequate exploitation of the time-reversing technology.

Screen arrangement suggestion: Rather than everyone sitting in a single crowd and commenting on the film, we split into two clusters, one closer to the screen and one further.

The people in the front cluster hope to watch the film quietly, the people in the back cluster aim to comment/converse/socialize during the film, with the common knowledge that they should aim to not be audible to the people in the front group, and people can form clusters and move between them freely.

The value of this depends on what film is chosen; e.g. "2001: A Space Odyssey" is not watchable without discussing historical context, and "Tenet" ought to have some viewers wanting to better understand the details of what time-travelly thing just happened.

"All the President's Men" by Alan J. Pakula

"Oppenheimer" by Christopher Nolan

"Tenet" by Christopher Nolan

I'm not sure what to think about this. Thomas777's approach is generally a good one, but for both of these examples, a shorter route (that it's cleanly mutually understood to be adding insult to injury as a flex by the aggressor) seems pretty probable. Free speech/censorship might be a better example, as plenty of cultures are less aware of information theory and progress.

I don't know what proportion of the people in the US Natsec community understand 'rigged psychological games' well enough to occasionally read books on the topic, but the bar is pretty low for hopping onto fads, as tricks only require one person to notice or invent them and then they can simply get popular (with all kinds of people with varying capabilities/resources/technology and bandwidth/information/deficiencies hopping on the bandwagon).

I notice that there are just shy of 128 here and they're mostly pretty short, so you can start the day by flipping a coin 7 times to decide which one to read. Not a bisection search; just convert the seven flips to binary and pick the corresponding number. At first, you only have to start over and do another 7 flips if you land on 1111110 (126), 1111111 (127), or 0000000 (treated as 128).

If you drink coffee in the morning, this is a way better way to start the day than social media, as the early phase of the stimulant effect reinforces behavior in most people. Hanson's approach to various topics is a good mentality to try boosting this way.
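The coin-flip routine described above is a form of rejection sampling: 7 flips give a uniformly random number in a fixed range of 128 values, and out-of-range results are discarded and re-flipped, which keeps every remaining post equally likely. A minimal sketch, assuming 125 posts (inferred from the rejected values 126, 127, and 128); `N_POSTS` and `pick_post` are hypothetical names.

```python
import random
from typing import Callable

# Hypothetical: "just shy of 128" posts; rejecting 126, 127 and 128
# implies 125 readable posts.
N_POSTS = 125


def pick_post(flip: Callable[[], int] = lambda: random.randint(0, 1)) -> int:
    """Pick a post number in 1..N_POSTS from batches of 7 fair coin flips."""
    while True:
        bits = [flip() for _ in range(7)]         # 7 flips, each 0 or 1
        value = int("".join(map(str, bits)), 2)   # binary string -> 0..127
        number = 128 if value == 0 else value     # treat 0000000 as 128
        if number <= N_POSTS:
            return number
        # 126, 127 or 128: out of range, so flip 7 more times


print(f"Read post #{pick_post()} today")
```

Each batch of flips succeeds with probability 125/128, so re-flips are rare; if the post count changes, only `N_POSTS` needs updating, as long as it stays at or below 128.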

This reminds me of dath ilan's hallucination diagnosis from page 38 of Yudkowsky and Alicorn's glowfic But Hurting People Is Wrong.

It's pretty far from meeting dath ilan's standard though; in fact an x-ray would be more than sufficient as anyone capable of putting something in someone's ear would obviously vastly prefer to place it somewhere harder to check, whereas nobody would be capable of defeating an x-ray machine as metal parts are unavoidable.

This concern pops up in books on the Cold War (employees at every org and every company regularly suffer from mental illnesses at somewhere around their base rates, but things get complicated at intelligence agencies where paranoid/creative/adversarial people are rewarded and even influence R&D funding) and an x-ray machine cleanly resolved the matter every time.

That's this week, right?

Is reading An Intuitive Explanation of Bayes' Theorem recommended?

I agree that "general" isn't such a good word for humans. But unless civilization was initiated right after the minimum viable threshold was crossed, it seems somewhat unlikely to me that humans were very representative of the minimum viable threshold.

If any evolutionary process other than civilization precursors formed the feedback loop that caused human intelligence, then civilization would hit full swing sooner if that feedback loop continued pushing human intelligence further. Whether Earth took a century or a millennium between the harnessing of electricity and the first computer was heavily affected by economics and genetic diversity (e.g. Babbage, Lovelace, Turing), but afaik a "minimum viable general intelligence" could plausibly have taken millions or even billions of years under ideal cultural conditions to cross that particular gap.

This is an idea and NOT a recommendation. Unintended consequences abound.

Have you thought about sorting into groups based on carefully-selected categories? For example, econ/social sciences vs quant background with extra whiteboard space, a separate group for new arrivals who didn't do the readings from the other weeks (as their perspectives will have less overlap), a separate group for people who deliberately took a bunch of notes and made a concise list vs a more casual easygoing group, etc?

Actions like these leave scars on entire communities.

Do you have any idea how fortunate you were to have so many people in your life who explicitly tell you "don't do things like this"? The world around you has been made so profoundly, profoundly conducive to healing you.

When someone is this persistent in thinking of reasons to be aggressive AND reasons to not evaluate the world around them, it's scary and disturbing. I understand that humans aren't very causally upstream of their decisions, but this is the case for everyone, and situations like these go a long way towards causing people like Duncan and Eliezer to fear meeting their fans.

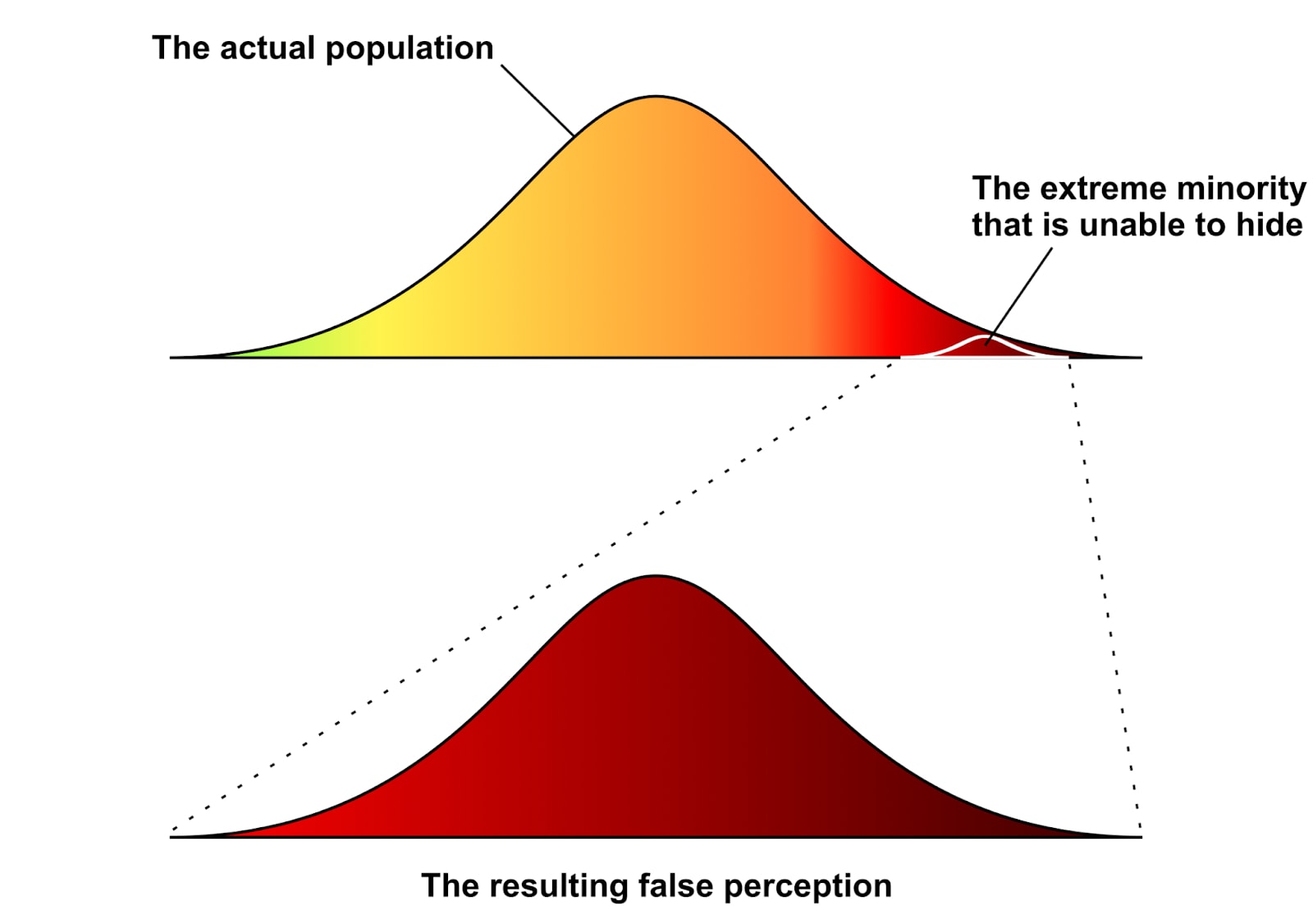

I'm grateful that looking at this case has helped me formalize a concept of oppositional drive: a variable representing the unconscious drive to oppose other humans, with justifications layered on top based on intelligence (a separate variable). Diagnosing children with Oppositional Defiant Disorder is the DSM-5's way of mitigating the harm when a child has an unusually strong oppositional drive for their age, but that's because the DSM puts binary categorizations on traits that are actually better represented as variables, which in most people are so low as to not be noticed (some people are in the middle, and unusually extreme cases get all the attention; this was covered in this section of Social Dark Matter, which was roughly 100% of my inspiration).

Opposition is... a rather dangerous thing for any living being to do, especially if your brain conceals/obfuscates the tendency/drive whenever it emerges, so even most people in the orangey area probably disagree with having this trait upon reflection and would typically press a button to place themselves more towards the yellow. This is derived from the fundamental logic of trust (which in humans must be built as a complex project that revolves around calibration).

This could have been a post so more people could link it (many don't reflexively notice that you can easily get a link to a LessWrong quicktake or a Twitter or Facebook post by mousing over the date between the upvote count and the poster, which also works with tab and hotkey navigation for people like me who avoid using the mouse/touchpad whenever possible).

(The author sometimes says stuff like "US elites were too ideologically committed to globalization", but I don't think he provides great alternative policies.)

Afaik the 1990-2008 period featured government and military elites worldwide struggling to pivot to a post-Cold War era, which was extremely OOD for many leading institutions of statecraft (which for centuries had been constructed around the conflicts of the European wars, then the world wars, then the Cold War).

During the 90's and 2000's, lots of writing and thinking was done about ways the world's militaries and intelligence agencies, fundamentally low-trust adversarial orgs, could continue to exist without intent to bump each other off. Counter-terrorism was possibly one thing that was settled on, but it's pretty well established that global trade ties were deliberately used as a peacebuilding tactic, notably to stabilize the US-China relationship (this started to fall apart after the 2008 recession brought anticipation of American economic/institutional decline scenarios to the forefront of geopolitics).

The thinking of that period might not seem very impressive to us, but foreign policy people mostly aren't intellectuals and for generations had been selected based on office politics where the office revolved around defeating the adversary, so for many of them it felt like a really big shift in perspective and self-image, sort of like a Renaissance. Then US-Russia-China conflict swung right back and got people thinking about peacebuilding as a ploy to gain advantage, rather than as sane civilizational development. The rejection of e.g. US-China economic integration policies had to be aggressive because many elites (and people who care about economic growth) tend to support globalization, whereas many government and especially natsec elites remember that period as naive.

It's not a book, but if you like older movies, the 1944 film Gaslight goes pretty far back (film production standards have improved quite a bit since then, so for a large proportion of people '40s films are barely watchable, which is why I recommend this version over the nearly identical British version and the original play). It was also pretty popular among cultural elites at the time, so it's probably extremely causally upstream of most of the fiction you'd be interested in.

Writing is safer than talking, given the same probability that the timestamped keystrokes and the audio files are kept.

In practice, the best approach is to handwrite your thoughts as notes in a room without smart devices, with a door and walls that are sufficiently sound-absorptive, and then type them out in a different room with the laptop (ideally with a USB keyboard, so you don't have to put your hands on the laptop and the accelerometers on its motherboard while you type).

Afaik this gets the best ratio of revealed thought process to final product, so you get public information exchanges closer to critical mass while simultaneously getting yourself further from being gaslit into believing whatever some asshole rando wants you to believe. The whole paradigm where everyone just inputs keystrokes into their operating system willy-nilly needs to be put to rest ASAP, just like the paradigm of thinking without handwritten notes and the paradigm of inward-facing webcams with no built-in cover or way to break the circuit.

TL;DR "habitually deliberately visualizing yourself succeeding at goal/subgoal X" is extremely valuable, but also very tarnished. It's probably worth trying out, playing around with, and seeing if you can cut out the bullshit and boot it up properly.

Longer:

The universe is allowed to have tons of people intuitively notice that "visualize yourself doing X" is an obviously winning strategy that typically makes doing X a downhill battle if it's possible at all, and to have so many different people pick it up that you first encounter it in an awful way: e.g. in middle/high school you first hear about it, but the speaker says, in the same breath, that you should use it to feel more motivated to do your repetitive math homework for ~2 hours a day.

I'm sure people could find all sorts of improvements, e.g. an entire field of self-visualization-mancy that provably helps a lot of people do stuff, but the important thing I've noticed is to simply not skip that critical step. Eliminate ugh fields around self-visualization, or take whatever means necessary to prevent ugh fields from forming in your idiosyncratic case (also, social media algorithms could have been measurably increasing user retention by boosting content that places ugh fields where they decrease agency/motivation, with or without the devs being aware of this, since they may be looking at inputs and outputs or maybe just outputs; so this could be a lot more adversarial than you were expecting). Notice the possibility that this may have been a core underlying dynamic in Yudkowsky's old Execute by Default post or Scott Alexander's silly hypothetical talent differential comment, without their awareness.

The universe is allowed to give you a brain that so perversely hinges on self-image instead of just taking the action. The brain is a massive kludge of parallel-processing spaghetti code and, regardless of whether or not you see yourself as a very social-status-minded person, the modern human brain was probably heavily wired to gain social status in the ancestral environment, and whatever departures you have from that might be tearing down Chesterton-Schelling fences.

If nothing else, a takeaway from this was that the process of finding the missing piece that changes everything is allowed to be ludicrously hard and complicated, while the missing piece itself is simultaneously allowed to be very simple and easy once you've found it.

"Slipping into a more convenient world" is a good way of putting it; just using the word "optimism" really doesn't account for how it's pretty slippy, nor how the direction is towards a more convenient world.

It was helpful that Ezra noticed and pointed out this dynamic.

I think this concern is probably more a reflection of our state of culture, where people who visibly think in terms of quantified uncertainty are rare and therefore make a strong impression relative to e.g. pundits.

If you look at other hypothetical cultural states (specifically more quant-aware states, e.g. extrapolating the last 100 years of math/literacy/finance/physics/military/computer progress forward another 100 years), trust would pretty quickly default to being based on track record instead of on being one of the few people in the room who's visibly using numbers properly.

Strong upvoted!

Wish I was reading stuff at this level back in 2018. Glad that lots of people can now.

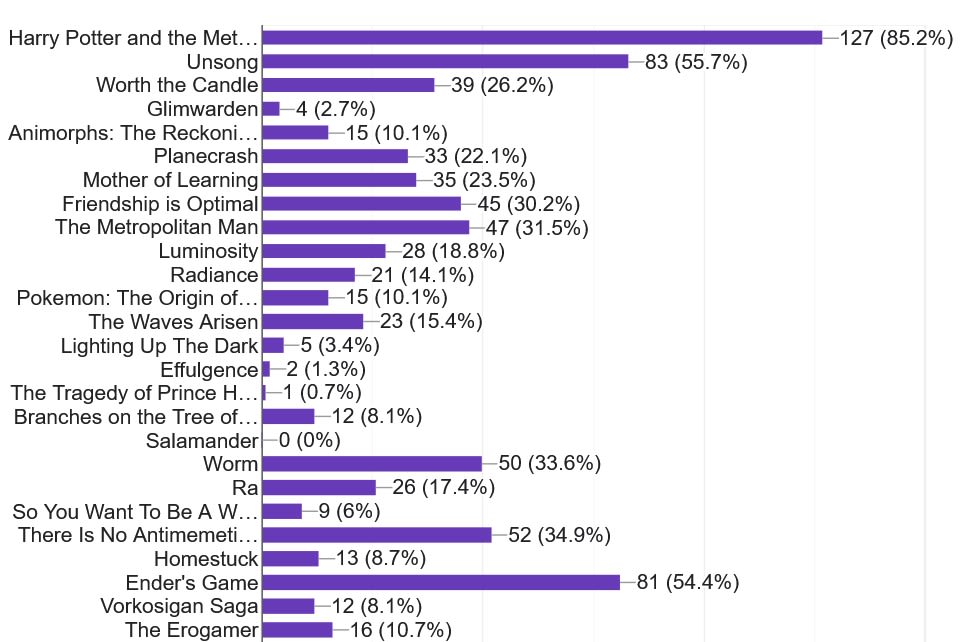

Do Metropolitan Man!

Also, here's a bunch of ratfic to read and review, weighted by the number of 2022 LessWrong survey respondents who read them:

Weird coincidence: I was just thinking about Leopold's bunker concept from his essay. It was a pretty careless paper overall, but the imperative to put alignment research in a bunker makes perfect sense; I don't see the surface as a viable place for people to get serious work done (at least, not in densely populated urban areas; somewhere in the desert would count as a "bunker" in this case so long as it's sufficiently distant from passersby and the sensors and compute in their phones and cars).

Of course, this is unambiguously a necessary evil: a tiny handful of people are going to have to choose to live in a sad, uncomfortable place for a while, and only because there's no other option and it's obviously the correct move for everyone everywhere, including the people in the bunker.

Until the basics of the situation start somehow getting taught in the classrooms or something, we're going to be stuck with a ludicrously large proportion of people satisfied with the kind of bite-sized convenient takes that got us into this whole unhinged situation in the first place (or have no thoughts at all).

I would have liked to write a post that offers one weird trick to avoid being confused by which areas of AI are more or less safe to advance, but I can’t write that post. As far as I know, the answer is simply that you have to model the social landscape around you and how your research contributions are going to be applied.

Another thing that can't be ignored is the threat of Social Balkanization. Divide-and-conquer tactics have been prevalent among military strategists for millennia, and the tactic remains prevalent and psychologically available among the people making up corporate factions and many subcultures (likely including leftist and right-wing subcultures).

It is easy for external forces to notice opportunities to Balkanize a group, making it weaker and its splinters easier to acquire or capture, which in turn provides further opportunity for lateral movement and for spotting more exploits. Since awareness and exploitation of this vulnerability is prevalent, social systems without this specific hardening are very brittle and have dismal prospects.

Sadly, Balkanization can also emerge naturally, as you helpfully pointed out in Consciousness as a Conflationary Alliance Term, so the high base rates make it harder to correctly distinguish attacks from accidents. Inadequately calibrated autoimmune responses are not only damaging, but should be assumed to be anticipated and misdirected by default, including as part of the mundane social dynamics of a group with no external attackers.

There is no way around the loss function.

The only reason I could think of that this would be the "worst argument in the world" is because it strongly indicates low-level thinkers (e.g. low decouplers).

An actual "worst argument in the world" would be whatever maximizes the gap between people's models and accurate models.

Can you expand the list, go into further detail, or list a source that goes into further detail?

At the time, I thought something like "given that the nasal tract already produces NO, it seems possible that humming doesn't increase the NO in the lungs by enough orders of magnitude to make once per hour sufficient", but I never said anything, and eventually a bunch of other people figured it out, along with a bunch of other useful stuff that I was pretty far away from noticing (e.g. considering the rate at which the nasal tract accumulates NO to be released by humming).

Wish I'd said something back when it was still valuable.