Information warfare historically revolved around human conduits

post by trevor (TrevorWiesinger) · 2023-08-28T18:54:27.169Z · LW · GW · 7 comments

Epistemic status: This is a central gear in any model of propaganda, public opinion, information warfare, PR, hybrid warfare, and any other adversarial information environment. Due to fundamental mathematical patterns, such as power-law distributions, not all targets in the battlespace are created equal. This is important for defensive purposes.

______________________________________________

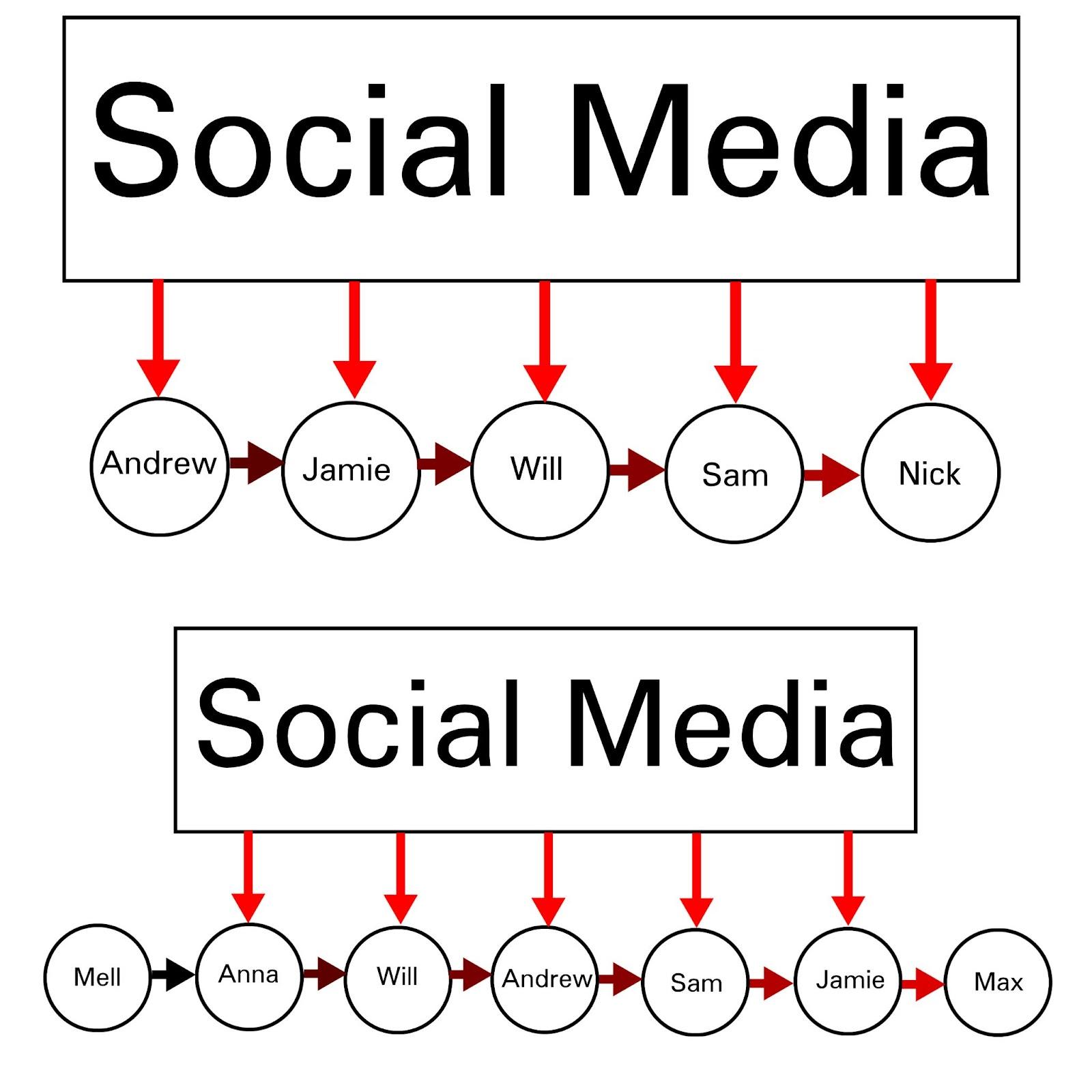

Generally, when people think about propaganda, censorship, public opinion, PR, and social psychology, they tend to think of the human receivers/viewers/readers/listeners as each being the ultimate goal: the message is accepted, ignored, or rejected, either consciously or subconsciously, and each person either gains your side a single point or loses you a point.

This is actually a bad model of large-scale influence. People with better models here are usually more inclined to use phrases like "steering public opinion", because the actual goal is to create human conduits, who internalize the message and spread it to their friends in personal conversations.

Messages that spread this way are personalized influence by default, even if they were initiated by early 20th century technology like radio or propaganda posters. They are also perceived as much more trustworthy when heard directly from the mouth of a friend than when received through one-to-many communication like broadcasting or posters. Broadcasts and posters were unambiguously spread by at least one elite with the resources to do so, someone you are not allowed to meet, even if they seem like a person just like you (although many people develop positive feelings towards messages from a likable or relatable persona, such as bumbling/buffoon politicians or critical voices of justice). Propaganda posters totally fell out of style because it was plain as day that they were there to influence you; radio and television survived, including as a tool of state power, because they did not stick out so badly.

Censorship, on the other hand, prevents this dynamic from emerging in the first place, which likely helps explain why censorship is so widely accepted or tolerated by elites in authoritarian countries.

The battlespace

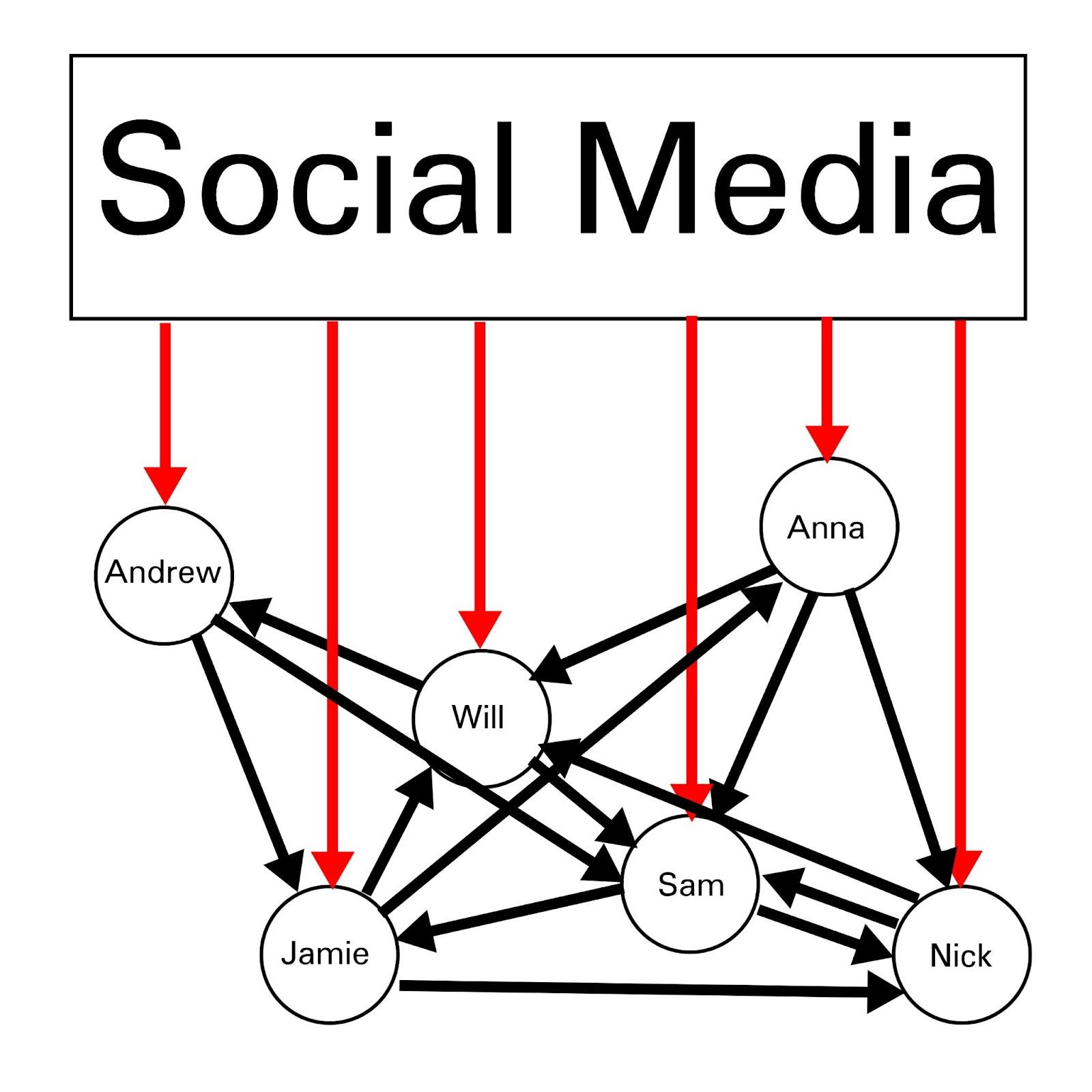

It's important to note that not all human conduits are created equal. This dynamic ultimately results in intelligent minds not merely relaying the message, but also using their human intelligence to add optimization power to the message's spread. On social media, this creates humans who write substantially smarter, more charismatic, eloquent, and persuasive one-liner posts and comments than last-generation LLMs can produce.
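As a rough illustration of why conduits are not created equal, here is a minimal Python sketch. It assumes (this is a toy model, not drawn from any real dataset) that the conduit ranked r by reach has reach proportional to 1/r^alpha, a Zipf-style power law. The function name `top_share`, the population of 10,000 conduits, and the alpha values are all hypothetical choices made for the example.

```python
def top_share(n_conduits: int, alpha: float, top_fraction: float) -> float:
    """Share of total reach held by the top `top_fraction` of conduits.

    Assumption (hypothetical toy model): the conduit ranked r has reach
    proportional to 1 / r**alpha. alpha = 0 means everyone is identical.
    """
    # Reach of each conduit, ordered from highest-ranked to lowest-ranked.
    reach = [1.0 / (rank ** alpha) for rank in range(1, n_conduits + 1)]
    # Number of conduits in the top group (always at least one).
    top_k = max(1, round(n_conduits * top_fraction))
    return sum(reach[:top_k]) / sum(reach)


if __name__ == "__main__":
    for alpha in (0.0, 1.0, 1.5):
        share = top_share(10_000, alpha, 0.01)
        print(f"alpha={alpha}: top 1% of conduits hold {share:.1%} of total reach")
```

With alpha = 0 every conduit is identical and the top 1% hold exactly 1% of the reach. At alpha = 1 the top 1% already hold more than half of it in this toy model, which is the sense in which some targets matter far more than others.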

Furthermore, as an idea becomes more mainstream (and/or as the Overton window shrinks for rejecting the message), the conduits optimizing for the message's spread not only become greater in number, but also gain access to smarter speakers and writers. The NYT claims that Russian intelligence agencies deliberately send agents to the West to facilitate this process, such as by recruiting smart young people and creating grassroots movements that serve Russian interests. I've also previously had conversations with people who made strong arguments for and against the case that a large portion of the Vietnam antiwar movement was caused by Soviet agents orchestrating grassroots movements in person in an almost identical way.

Slow takeoff

Social media and botnets, particularly when acting autonomously due to increasingly powerful integrated AI, might be capable of navigating the information space as a battlespace: autonomously locating and focusing processing power on high-value targets who are predicted to be the best at adding a human touch to any particular message, a human touch that current or future LLMs might not be able to reliably generate on their own.

The technological transformation of the 2010s greatly exceeded that of any other decade, including the transition from radio to television and the transition from the big three television channels (ABC, CBS, and NBC) to the cable TV system in the 80s and 90s, which offered substantially more channels and variety to appeal more strongly to different kinds of people. The emergence of social media and ubiquitous smartphones (which, notably, are filled with sensors and whose operating systems are notoriously insecure) in the 2000s and 2010s was greater still, and many [LW · GW] have [LW · GW] argued [LW · GW] that the next 10 years will be even more transformative due to AI capabilities research.

Instrumental relevance to AI safety

Like any other use of AI for public opinion influence operations or hybrid warfare, these circumstances are notable because of the risk of these capabilities being used to damage or even decimate the AI safety community. That is undoubtedly the kind of thing that could happen during slow takeoff if slow takeoff transforms geopolitical affairs and the balance of power in addition to transforming society (as has been predicted): AI would become a central goalpost for international conflict, and the people involved with AI would be perceived [LW · GW] by their position relative to that goalpost.

"The enemy's gate is down"

-Orson Scott Card, Ender's Game

Information I found helpful:

Large language models will be great for Censorship [LW · GW]

The US is becoming less stable [LW · GW]

7 comments

Comments sorted by top scores.

comment by gull · 2023-08-28T22:42:28.931Z · LW(p) · GW(p)

these circumstances are notable due to the risk of it being used to damage or even decimate the AI safety community, which is undoubtedly the kind of thing that could happen during slow takeoff if slow takeoff transforms geopolitical affairs and the balance of power

Wouldn't it probably be fine as long as no one in AI safety goes about interfering with these applications? I get an overall vibe from people that messing with this kind of thing is more trouble than it's worth. If that were the case, wouldn't it be better to leave it be? What's the goal here?

Replies from: TrevorWiesinger

↑ comment by trevor (TrevorWiesinger) · 2023-08-28T23:20:22.621Z · LW(p) · GW(p)

AI safety goes about interfering with these applications

Yes, definitely don't do this. Perish the thought. That's not what AI safety is about.

I think it's better to know about these dynamics when forming a world model, and potentially very dangerous not to know, because then they will be invisible helicopter blades that you can just walk right into. I'm aware that the tradeoffs for researching this kind of thing are complicated.

It's also a good idea to increase readership of The Sequences [? · GW], HPMOR, the codex [? · GW], and Raemon's rationality paradigm [LW · GW] when it's ready; that will make people depart from being the kinds of targets that these systems are built for. Getting people off social media would also be a big win, of course.

comment by Maxwell Peterson (maxwell-peterson) · 2023-08-30T14:47:14.264Z · LW(p) · GW(p)

“(although idiots might still fall for the "I'm an idiot like you" persona such as Donald Trump, Tucker Carlson, and particularly Alex Jones).”

This line is too current-culture-war for LessWrong. I began to argue with it in this comment, before deleting what I wrote, and limiting myself to this.

Replies from: TrevorWiesinger

↑ comment by trevor (TrevorWiesinger) · 2023-08-30T19:03:56.373Z · LW(p) · GW(p)

Considering that Obama took on an intellectual-themed personality, I think it's not a good enough example anyway, so I'll change it to "idiot/buffoon".

Replies from: maxwell-peterson

↑ comment by Maxwell Peterson (maxwell-peterson) · 2023-08-30T21:24:49.388Z · LW(p) · GW(p)

Thank you!

comment by Nicholas / Heather Kross (NicholasKross) · 2023-08-29T02:51:57.862Z · LW(p) · GW(p)

This is a good core idea for persuasion, macro and micro.

One example: Local churches and community groups are probably run by people who are already into doing community-outreach/coordination things.