Cyborg Periods: There will be multiple AI transitions

post by Jan_Kulveit, rosehadshar · 2023-02-22T16:09:04.858Z · LW · GW · 9 comments

It can be useful to zoom out and talk about very compressed concepts like ‘AI progress’ or ‘AI transition’ or ‘AGI timelines’. But from the perspective of most AI strategy questions, it’s useful to be more specific.

Looking at all of human history, it might make sense to think of ourselves as at the cusp of an AI transition, when AI systems overtake humans as the most powerful actors. But for practical and forward-looking purposes, it seems quite likely there will actually be multiple different AI transitions:

- There will be AI transitions at different times in different domains

- In each of these domains, transitions may move through multiple stages:

| Stage [>> = more powerful than] | Description | Present day examples |
| --- | --- | --- |
| Human period: Humans >> AIs | Humans clearly outperform AIs. At some point, AIs start to be a bit helpful. | Alignment research, high-level organisational decisions… |
| Cyborg period: Human+AI teams >> humans; Human+AI teams >> AIs | Humans and AIs are at least comparably powerful, but have different strengths and weaknesses. This means that human+AI teams outperform either unaided humans, or pure AIs. | Visual art, programming, trading… |
| AI period: AIs >> humans (AIs ~ human+AI teams) | AIs overtake humans. Humans become obsolete and their contribution is negligible to negative. | Chess, go, shogi… |

Some domains might never enter an AI period. It’s also possible that in some domains the cyborg period will be very brief, or that there will be a jump straight to the AI period. But:

- We’ve seen cyborg periods before

- Global supply chains have been in a cyborg period for decades

- Chess and go both went through cyborg periods before AIs became dominant

- Arguably visual art, coding and trading are currently in cyborg periods

- Even if cyborg periods are brief, they may be pivotal

This means that for each domain, there are potentially two transitions: one from the human period into the cyborg period, and one from the cyborg period into the AI period.
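The stage definitions in the table above can be made concrete with a small sketch. The scores, the `margin` threshold for "clearly more powerful" (>>), and the function name `classify_stage` are all illustrative assumptions rather than anything from the post; the point is only to show how the three comparisons (humans vs AIs, teams vs humans, teams vs AIs) jointly determine the stage.

```python
def classify_stage(human: float, ai: float, team: float, margin: float = 0.05) -> str:
    """Classify a domain as 'human', 'cyborg', 'ai', or 'unclear'.

    All scores are hypothetical positive performance numbers (higher is better).
    `margin` is an assumed threshold for "clearly more powerful than" (>>).
    """
    def clearly_beats(a: float, b: float) -> bool:
        return a > b * (1 + margin)

    # Cyborg period: human+AI teams >> humans AND human+AI teams >> AIs.
    if clearly_beats(team, human) and clearly_beats(team, ai):
        return "cyborg"
    # AI period: AIs >> humans, and adding a human no longer clearly helps.
    if clearly_beats(ai, human):
        return "ai"
    # Human period: humans >> AIs; AI help is at most marginal.
    if clearly_beats(human, ai):
        return "human"
    return "unclear"


if __name__ == "__main__":
    # Hypothetical scores, chosen only to exercise each branch.
    print(classify_stage(human=100, ai=20, team=101))
    print(classify_stage(human=100, ai=90, team=130))
    print(classify_stage(human=100, ai=300, team=301))
```

Tracking how the classification for a domain changes over time would surface the two transitions described above: human to cyborg, and cyborg to AI.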

Transitions in some domains will be particularly important

The cyborg period in any domain will correspond to:

- An increase in capabilities (definitionally, as during that period human+AI teams will be more powerful than humans were in the human period)

- An increase in the % of that domain which is automated, and therefore probably an increase in the rate of progress

Some domains where increased capabilities/automation/speed seem particularly strategically important are:

- Research, especially

- AI research

- AI alignment research

- Human coordination

- Persuasion

- Cultural evolution

- AI systems already affect cultural evolution by speeding it up and influencing which memes spread. However, AI doesn’t yet play a significant role in creating new memes (although we are at the very start of this happening). This is similar to the way that humans harnessed the power of natural evolution to create higher yield crops without being able to directly engineer at the genetic level

- Meme generation may also become increasingly automated, until most cultural change happens in silicon rather than in brains, leading to different selection pressures

- Strategic goal seeking

- Currently, broad roles involving long-term planning and open domains like "leading a company" are in the human period

- If this changes, it would give cyborgs additional capabilities on top of the ones listed above

Some other domains which seem less centrally important but could end up mattering a lot are:

- Cybersecurity

- Military strategy

- Nuclear command and control

- Some kinds of physical engineering/manufacture/nanotech/design

- Chip design

- Coding

There are probably other strategically important domains we haven’t listed.

A common feature of the domains listed is that increased capabilities in those domains could lead to large increases in power, for the systems with those capabilities. It will sometimes be helpful to consider power in aggregate, so that we can make direct comparisons about the amount of power which is automated in a given domain.

Clearly, capabilities in these domains interact. In our view, people coming from different backgrounds often perceive large increases in power in their domain of expertise as the decisive transition. For example, it is easy for someone coming from a research background to see how automated research abilities could impact other domains. But the reverse is also true: automated powers of persuasion, or automated cultural evolution, would have a strong impact on research, by making some directions of thinking unpopular, and influencing the allocation of attention and minds.

Note that it isn’t clear that the level of abstraction we’ve picked here is the right one. It’s possible that even more granularity would be more helpful, at least in some situations. For all of the domains we list, you could think of sub-domains, or of particular capabilities which might advance faster or slower than others.

The order of AI transitions in different domains will matter

The timing of transitions in different domains isn’t independent. But the world will look very different depending on which transitions happen first. A few vignettes:

- In a world where cultural evolution and AI research transition first, we may see the window of what's culturally possible opening fast and in unexpected directions:

- Increasing the power of ideologies might cause leading AI research labs to become heavily regulated or nationalised

- Concerns about AI sentience might become a large driving force behind AI research

- In contrast, an ideology might emerge which promotes ceding power to AIs as virtuous and good

- And many other possibilities (predicting future successful ideologies is obviously very hard)

- In a world where human coordination and manufacturing progress faster than other domains, humans might be able to leverage narrower AIs to bargain about the limits of power for AI systems deployed in socio-economic or political contexts, or about other aspects of AI development. Possibly, a "dominant coalition" could become powerful enough to enforce existential safety (cf. Paretotopia).

Importantly, the fact that there are different possible orderings suggests that there are multiple possible winning strategies from the perspective of decreasing existential risk. For example:

- Moving faster on automating coordination than automating power is one possible route to minimising existential risk

- Moving faster on AI alignment research than AI research is another

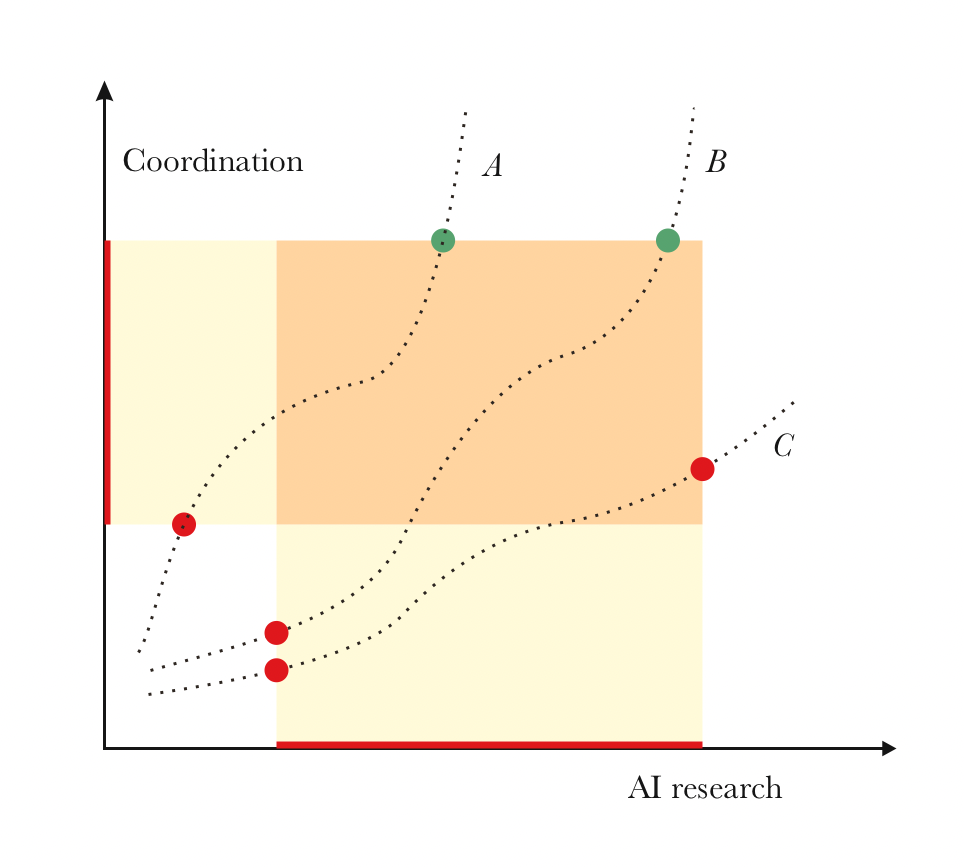

Caption: in trajectories A and B, coordination is automated more quickly than AI research. In trajectory C, AI research is automated more quickly.

What does all of this imply? Tentatively:

- Actions that have the potential to differentially speed up automation in some areas over others could be very valuable. (Yes, differential technological development again.)

- It seems unlikely that we will be able to accurately predict the trajectory we take in advance, with our current levels of understanding of the dynamics.

- Insofar as we will have to rely on our ability to course correct rather than our ability to chart out the ideal trajectory ahead of time, becoming very good at course correcting seems desirable.

‘Cyborg periods’ could be pivotal

Even if cyborg periods are brief, they may be pivotal:

- Humans (via human+AI teams) will be more powerful actors than during human periods, and have more influence over future trajectories

- This could be good, if the increases in power are directed towards risk-reducing things like coordination and alignment

- It could also be bad, if the increases in power further exacerbate power inequalities between humans, aren’t exercised with wisdom, are directed towards risk-increasing activities…

- It seems likely that the most important work for minimising existential risk will also happen during cyborg periods, because of increased power, and greater insight into what very advanced AI systems will look like

- Key deployment decisions will also probably happen during cyborg periods

- Once we enter AI periods where AIs are clearly more powerful than humans, it may be too late to change trajectories

- This seems true at a general level

- Whether it’s true for particular domains probably depends on the ordering of AI transitions

This leads to a picture where there are overlapping but different cyborg periods in different domains. These periods will probably be:

- Weird: things that were impossible will be possible, rates of progress and change may be diverging significantly in different domains, the rules of the game will be changing

- For the world as a whole to start feeling really weird, it’s probably sufficient to enter the cyborg period in any of a small number of strategically important domains (research, coordination, persuasion, cultural evolution, probably a few other domains)

- High leverage: for the reasons above

- Fast-paced: it seems possible (though not inevitable) that cyborg periods will be short, and consequently feel like crises

Interventions

Leveraging the power of human+AI teams during cyborg periods seems like it might be critical for navigating transitions to very advanced AI.

This is likely to be non-trivial. For example, really making use of the different kinds of cognition in a system involving a single AI system and a single human requires:

- Sufficient/appropriate understanding of the AI system’s strengths and weaknesses

- Novel modes of factoring cognition, as well as means to implement a given factorisation, including e.g.

- Specialised workflows

- Good user interfaces

- Modifications of the AI system for this purpose

Doing this in a more complex set-up might involve a lot of substantive work. But we can probably prepare for this in advance, by practising working in human+AI teams in the sub-domains where automation is more advanced. (The recent post on Cyborgism is a good example of a push in that direction.)

This applies more broadly than just to AI alignment research, and it would be great to have people in other strategically important domains practising this too.

The ideas in this post are mostly from Jan, and private discussions between Jan and a few other people. Rose did most of the writing. Clem and Nora gave substantive comments. The post was written as part of the work done at the ACS research group.

9 comments


comment by Tomáš Gavenčiak (tomas-gavenciak) · 2023-02-24T12:16:01.923Z · LW(p) · GW(p)

Seeing some confusion on whether AI could be strictly stronger than AI+humans: A simple argument there may be that - at least in principle - adding more cognition (e.g. a human) to a system should not make it strictly worse overall. But that seems true only in a very idealized case.

One issue is incorporating human input without losing overall performance even in situations where the human's advice is much worse than the AI's in e.g. 99.9% of the cases (and it may be hard to tell apart the 0.1% reliably).

But more importantly, a good framing here may be the optimal labor cost allocation between AIs and Humans on a given task. E.g. given a budget of $1000 for a project:

- Human period: optimal allocation is $1000 to human labor, $0 to AI. (Examples: making physical art/sculpture, some areas of research[1])

- Cyborg period: optimal allocation is something in between, and neither AI nor human optimal component would go to $0 even if their price changed (say) 10-fold. (Though the ratios here may get very skewed at large scale, e.g. in current SotA AI research lab investments into compute.)

- AI period: optimal allocation of $1000 to AI resources. Moving the marginal dollar to humans would make the system strictly worse (whether for drop in overall capacity or for noisiness of the human input).[2]

- [1] This is still not a very well-formalized definition as even the artists and philosophers already use some weak AIs efficiently in some part of their business, and a boundary needs to be drawn artificially around the core of the project.

- [2] Although even in AI period with a well-aligned AI, the humans providing their preferences and feedback are a very valuable part of the system. It is not clear to me whether to include this in cyborg or AI period.
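The budget framing above can be sketched as a toy optimisation. Everything specific here is an assumption for illustration and not part of the comment: the functional form (linear returns to each kind of labour plus a `synergy` term that rewards mixing them), the parameter names, and the $10 grid step. Under this assumed model, the optimal split sits at a corner (all human or all AI) when one side's productivity advantage outweighs the synergy, and in the interior otherwise.

```python
def project_output(human_dollars, ai_dollars, human_rate, ai_rate, synergy):
    """Hypothetical production function: linear terms plus a mixing bonus."""
    budget = human_dollars + ai_dollars
    return (human_rate * human_dollars
            + ai_rate * ai_dollars
            + synergy * human_dollars * ai_dollars / budget)


def best_allocation(budget, human_rate, ai_rate, synergy, step=10):
    """Grid-search the human/AI split that maximises the assumed output.

    `budget` must be a positive integer; returns (human_dollars, ai_dollars).
    """
    candidates = range(0, budget + 1, step)
    best_human = max(
        candidates,
        key=lambda h: project_output(h, budget - h, human_rate, ai_rate, synergy),
    )
    return best_human, budget - best_human


def period(human_dollars, budget):
    """Map an optimal allocation onto the three periods from the comment."""
    if human_dollars == budget:
        return "human"
    if human_dollars == 0:
        return "ai"
    return "cyborg"


if __name__ == "__main__":
    # Hypothetical (human_rate, ai_rate, synergy) triples, one per period.
    for rates in [(1.0, 0.2, 0.5), (1.0, 1.0, 1.0), (0.2, 2.0, 0.5)]:
        human, ai = best_allocation(1000, *rates)
        print(rates, human, ai, period(human, 1000))
```

The comment's robustness condition for the cyborg period (neither component dropping to $0 after a 10-fold price change) could be checked in this sketch by rerunning `best_allocation` with one of the rates divided by 10.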

↑ comment by Tomáš Gavenčiak (tomas-gavenciak) · 2023-02-24T12:42:00.539Z · LW(p) · GW(p)

The transitions in more complex, real-world domains may not be as sharp as e.g. in chess, and it would be useful to model and map the resource allocation ratio between AIs and humans in different domains over time. This is likely relatively tractable and would be informative for prediction of future development of the transitions.

While the dynamic would differ between domains (not just the current stage but also the overall trajectory shape), I would expect some common dynamics that would be interesting to explore and model.

A few examples of concrete questions that could be tractable today:

- How are costs in quantitative trading split between expert analysts and AI-based tools? (incl. their development, but perhaps not including e.g. basic ML-based analytics)

- What fraction of costs is already used for AI assistants in coding? (not incl. e.g. integration and testing costs - these automated tools would point to an earlier transition to automation that is not of main interest here)

- How large a fraction of the costs of PR and advertisement agencies is spent on AI, both facing customers and influencing voters? (may incl. e.g. LLM analysis of human sentiment, generating targeted materials, and advanced AI-based behavior models, though a finer line would need to be drawn; I would possibly include experts who operate those AIs if the company would not employ them without using an AI, as they may incur a significant part of the cost)

While in many areas the fraction of resources spent on (advanced) AIs is still relatively small, it is ramping up quite quickly, and even those areas may prove informative to study (and to develop methodology and metrics for, and to create forecasts to calibrate our models).

comment by JakubK (jskatt) · 2023-02-22T22:38:41.612Z · LW(p) · GW(p)

AIs overtake humans. Humans become obsolete and their contribution is negligible to negative.

I'm confused why chess is listed as an example here. This StackExchange post suggests that cyborg teams are still better than chess engines. Overall, I'm struggling to find evidence for or against this claim (that humans are obsolete in chess), even though it's a pretty common point in discussions about AI.

↑ comment by Lone Pine (conor-sullivan) · 2023-02-23T11:38:12.510Z · LW(p) · GW(p)

Thinking about it analytically, the human+AI chess player cannot be dominated by an equivalent AI (since the human could always just play the move suggested by the engine.) In practice, people play correspondence chess for entertainment or for money, and the money is just payment for someone else's entertainment. Therefore, chess will properly enter the AI era (post-cyborg) when correspondence chess becomes so boring and rote that players stop even bothering to play.

↑ comment by Lone Pine (conor-sullivan) · 2023-02-23T11:25:20.332Z · LW(p) · GW(p)

Reading that StackExchange post, it sounds like AI/cyborgs are approaching perfect play, as indicated by the frequency of draws. Perfect play, in chess! That's absolutely mind blowing to me.

↑ comment by Jan_Kulveit · 2023-02-24T08:44:13.404Z · LW(p) · GW(p)

I'm not really convinced by the linked post

- the chart is from someone selling financial advice, and the Elo ratings of chess programs it shows differ from those given elsewhere, e.g. Wikipedia ("Stockfish estimated Elo rating is over 3500") (maybe it's just old?)

- the linked interview in the "yes" answer is from 2016

- Elo ratings are relative to other players; it is not trivial to directly compare cyborgs and AI: engine ratings are usually computed in tournaments where programs run with the same hardware limits

In summary, in my view in something like "correspondence chess" the limit clearly is "AIs ~ human+AI teams" / "human contribution is negligible" .... the human can just do what the engine says.

My guess is that the current state is: you could compensate for what the human contributes to the team with just more hardware (i.e. instead of the top human part of the cyborg, spending $1M on compute would get you better results). I'd classify this as being in the AI period, for most practical purposes.

Also... as noted by Lone Pine, it seems the game itself becomes somewhat boring with increased power of the players, mostly ending in draws.

↑ comment by JakubK (jskatt) · 2023-02-24T20:38:37.594Z · LW(p) · GW(p)

That makes sense. My main question is: where is the clear evidence of human negligibility in chess? People seem to be misleadingly confident about this proposition (in general; I'm not targeting your post).

When a friend showed me the linked post, I thought "oh wow that really exposes some flaws in my thinking surrounding humans in chess." I believe some of these flaws came from hearing assertive statements from other people on this topic. As an example, here's Sam Harris during his interview with Eliezer Yudkowsky (transcript, audio):

Obviously we’ll be getting better and better at building narrow AI. Go is now, along with Chess, ceded to the machines. Although I guess probably cyborgs—human-computer teams—may still be better for the next fifteen days or so against the best machines. But eventually, I would expect that humans of any ability will just be adding noise to the system, and it’ll be true to say that the machines are better at chess than any human-computer team.

(In retrospect, this is a very weird assertion. Fifteen days? I thought he was talking about Go, but the last sentence makes it sound like he's talking about chess.)

comment by Gesild Muka (gesild-muka) · 2023-02-23T12:57:44.545Z · LW(p) · GW(p)

Regarding regulation and ideology: In the relatively near term my prediction is that most of the concern won't be over AI sentience (that is still several transitions away) but rather what nations and large organizations do with AI. Those early scares/possible catastrophes will greatly inform regulation and ideology in the years to come.

comment by Review Bot · 2024-04-02T19:50:41.088Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?