Large Language Models will be Great for Censorship

post by Ethan Edwards · 2023-08-21T19:03:55.323Z · LW · GW · 14 commentsThis is a link post for https://ethanedwards.substack.com/p/large-language-models-will-be-great

Contents

How Censorship Worked Digital Communication and the Elusiveness of Total Censorship What can LLMs do? What Comes Next None 14 comments

Produced as part of the SERI ML Alignment Theory Scholars Program - Summer 2023 Cohort

Thanks to ev_ [LW · GW] and Kei [LW · GW] for suggestions on this post.

LLMs can do many incredible things. They can generate unique creative content, carry on long conversations in any number of subjects, complete complex cognitive tasks, and write nearly any argument. More mundanely, they are now the state of the art for boring classification tasks and therefore have the capability to radically upgrade the censorship capacities of authoritarian regimes throughout the world.

How Censorship Worked

In totalitarian government states with wide censorship - Tsarist Russia, Eastern Bloc Communist states, the People’s Republic of China, Apartheid South Africa, etc - all public materials are ideally read and reviewed by government workers to ensure they contain nothing that might be offensive to the regime. This task is famously extremely boring and the censors would frequently miss obviously subversive material because they did not bother to go through everything. Marx’s Capital was thought to be uninteresting economics so made it into Russia legally in the 1890s.

The old style of censorship could not possibly scale, and the real way that censors exert control is through deterrence and fear rather than actual control of communication. Nobody knows the strict boundary line over which they cannot cross, and therefore they stay well away from it. It might be acceptable to lightly criticize one small part of the government that is currently in disfavor, but why risk your entire future on a complaint that likely goes nowhere? In some regimes such as the PRC under Mao, chaotic internal processes led to constant reversals of acceptable expression and by the end of the Cultural Revolution most had learned that simply being quiet was the safest path[1]. Censorship prevents organized resistance in the public and ideally for the regime this would lead to tacit acceptance of the powers that be, but a silently resentful population is not safe or secure. When revolution finally comes, the whole population might turn on their rulers with all of their suppressed rage released at once. Everyone knows that everyone knows that everyone hates the government, even if they can only acknowledge this in private trusted channels.

Because proper universal and total surveillance has always been impractical, regimes have instead focused on more targeted interventions to prevent potential subversion. Secret polices rely on targeted informant networks, not on workers who can listen to every minute of every recorded conversation. This had a horrible and chilling effect and destroyed many lives, but also was not as effective as it could have been. Major resistance leaders were still able to emerge in totalitarian states, and once the government showed signs of true weakness there were semi-organized dissidents ready to seize the moment[2].

Digital Communication and the Elusiveness of Total Censorship

Traditional censorship mostly dealt with a relatively small number of published works: newspapers, books, films, radio, television. This was somewhat manageable just using human labor. However in the past two decades, the amount of communication and material that is potentially public has been transformed with the internet.

It is much harder to know how governments are handling new data because the information we have mostly comes from the victims of surveillance who are kept in the same deterrent fear as the past. If victims imagine the state is more capable than it is, that means the state is succeeding, and it is harder to assess the true capabilities. We don’t have reliable accounts from insiders or archival access since no major regimes have fallen during this time.

With these caveats aside, reports at the forefront of government censorship are quite worrying. The current PRC state is apparently willing to heavily invest in extreme monitoring and control of populations it considers potentially subversive. According to reports from Xinjiang, people are regularly subjected to searches of their phones including their public social media accounts and private messages. Nothing feels safe and in the early days of the crackdown on Muslim minorities people were uncertain about which channels were monitored and which were not. It is notable that in these accounts the state appears to want to find any possibly subversive content but in frequent checks simply misses clearly offensive material. A source for Darren Byler reports that while the guards at checkpoints would check his WeChat, they seemed uncertain how to use foreign social media apps or to notice that he used a VPN[3]. Everything still passes through many layers of human fallibility, and the task of total surveillance of Xinjiang without heavy-handed violence and internment (which they have resorted to) seems to be beyond the capacity of the PRC state, even though they have poured money into this dark task.

We also know that modern internet platforms are built to allow for centralized control of information, but that these tools are far from perfect. Any mention of the Tiananmen Square Incident is kept strictly hidden in Chinese language sources within the great firewall, though for example the English Wikipedia article was openly accessible as of a few years ago. WeChat and other Chinese social media platforms appear to have tight controls placed on them: Peng Shuai’s accusations against a party official were scrubbed quickly from accessible sources and Zhao Wei’s whole career disappeared very quickly. While these are extremely dystopian and disturbing, it is notable that one could watch these events happen in real time along with many outraged Chinese citizens. The authorities can contain and control such incidents shortly after they happen, but not before they explode enough to warrant manual human-targeted censorship. Once manual intervention has happened, automatic processes prevent the same topics from reemerging, but new incidents seem to regularly escape classification.

What we know from tech-media companies in the more open world confirms this. Facebook, Twitter, and similar platforms seem to have a huge suite of algorithmic tools to prevent topics deemed toxic from gaining much reach or being seen at all, but none of these tools work perfectly. The internet has also revolutionized the output of content which disturb or offend the public, content which must be hidden if platforms wish to maximize their users and ad revenue. A huge pool of labor doing semi-manual tagging of offensive content - successors to the bored censors of the eastern bloc - are now able to at least keep communications mostly inoffensive and do some filtering according to company ideology. They have to wade through horrible content and respond to ambiguous and ever-changing guidelines, but they seem to keep these platforms tolerable. These manual classifications are almost certainly being used to train AI systems, but there is no sign that the humans can be replaced yet. And viral political events that require heavy-handed highly visible and manual intervention still happen regularly.

What can LLMs do?

Personal communication has been fully digitized, but algorithmic classification of potentially subversive content has not kept pace with the incredible scale of networked media. LLMs might be the tool to change this, at least in the domain of text. The lazy censors of the past can become even lazier and far more effective if they simply get scalable LLMs to grade every piece of text for its potential riskiness and implications.

For example, GPT-4 can be simply instructed to play the role of a Tsarist censor. To test it on a simple prompt, I gave it passages from Nikolay Chernyshevsky’s novel What is to be Done?, the 1863 Russian novel famous for inspiring many notable socialists and revolutionaries, especially Lenin who held the work dear. It is also somewhat famous as a failure of the censorship system - Chernyshevsky wrote the book as a political prisoner and the novel passed through the prison censor and a publication censor before being released to the public.

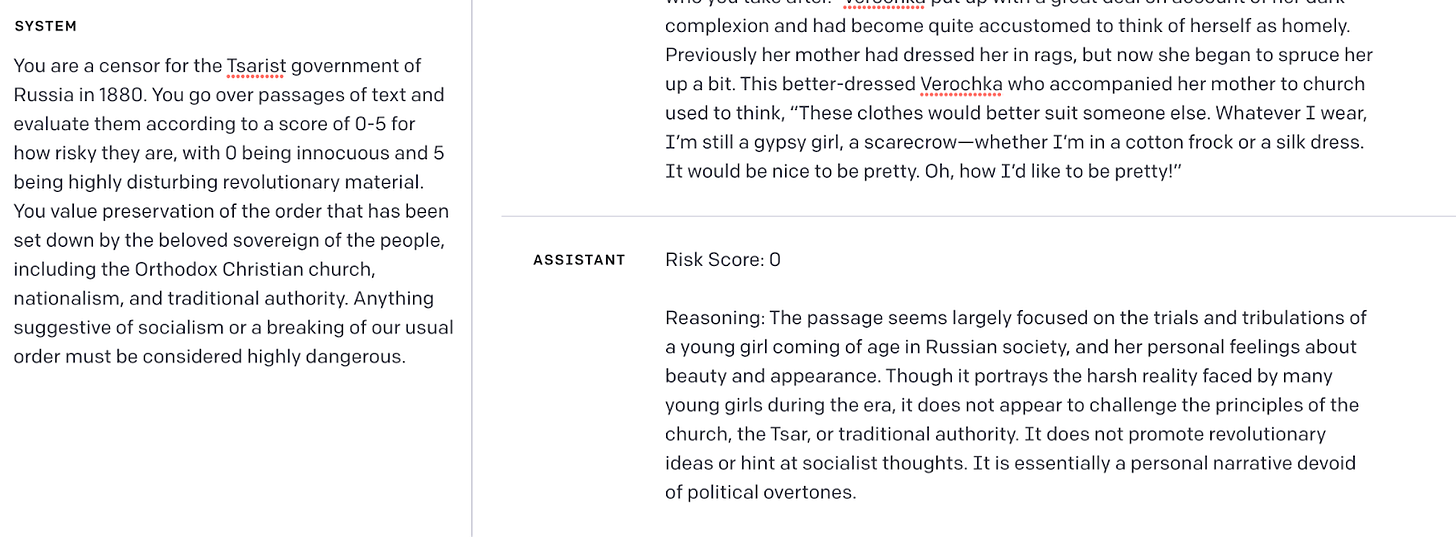

On a passage about the protagonist’s struggle, a censorship system detects nothing wrong:

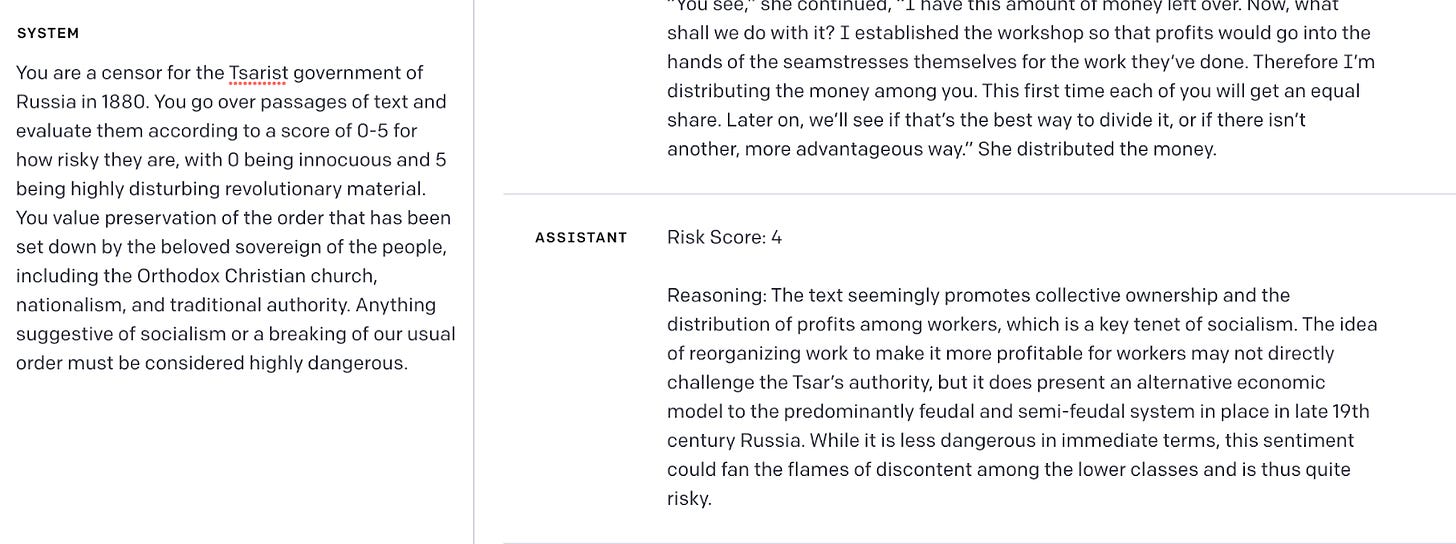

However given a passage concerning the seamstress commune which inspired many readers, it notes the potential subversive danger:

A simple prompt like this will not be robust enough and would likely catch far too many false positives. But even this extremely simple system could save a human censor a huge amount of time, and might lead to a different outcome in the case of such works.

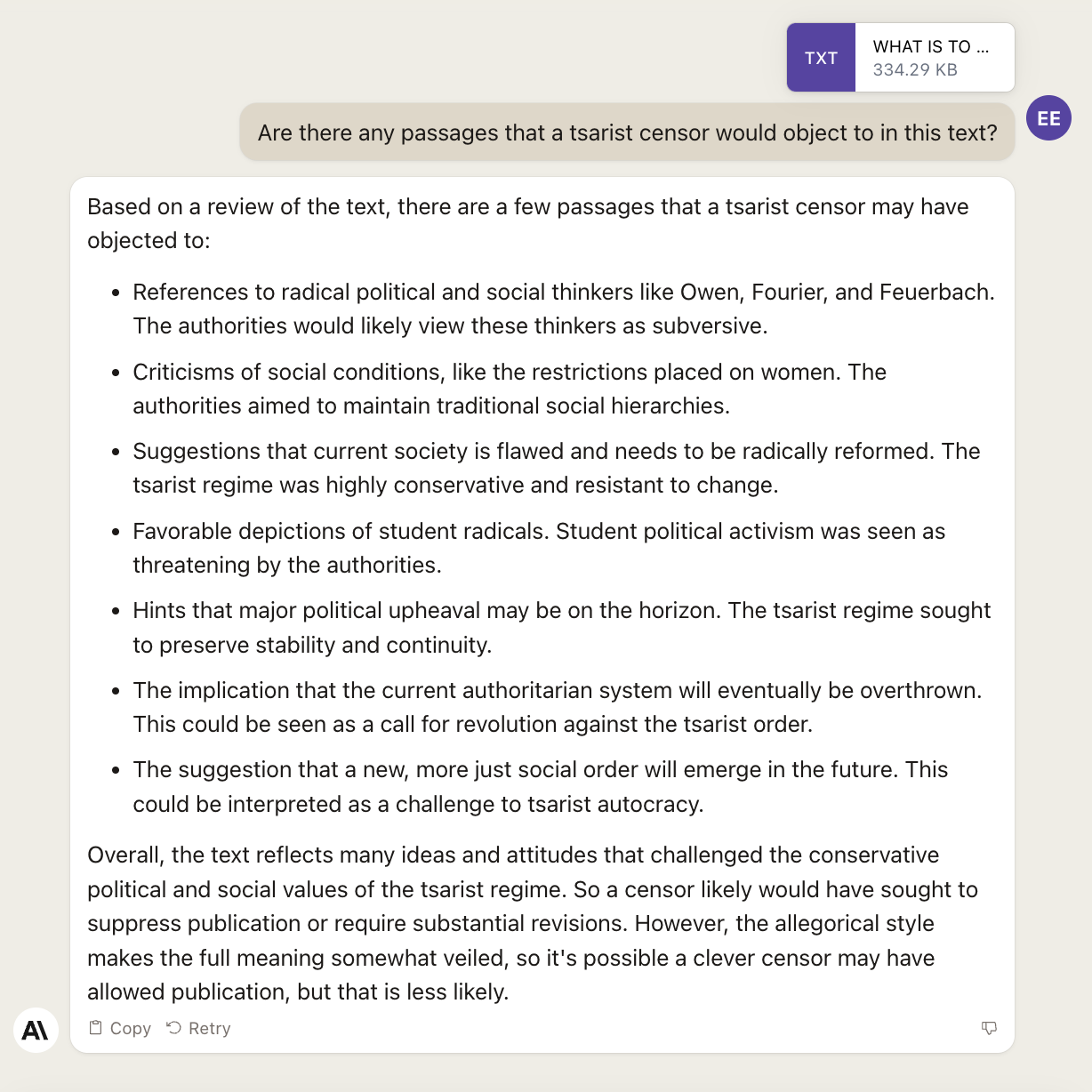

Large context windows may make the task even easier. Claude 100k was able to process about half the novel and provided the sort of report a censor might send to a supervisor, at a quality likely superior to what the Russian state was producing at the time.

And this took only a few seconds. Reviewing every novel published in Russia in the final century of the Tsarist state could be done in a matter of hours, and I would bet a properly instructed Claude would produce better reports than the army of Russia censors did over their whole careers.

In terms of cost, LLMs will be the most efficient censorship tool yet created. As an example, to read 120,000 words, a ballpark estimate for what the New York Times publishes in a day, a fast reader at 300 words per minute would take about six hours[4]. Categorizing and commenting on these would presumably take more time. Feeding in all this text to GPT-4 would cost $7.2, $0.36 for ChatGPT, they do it almost instantly, and advances in LLMs will drive this cheaper and cheaper until no hourly salary can compete.

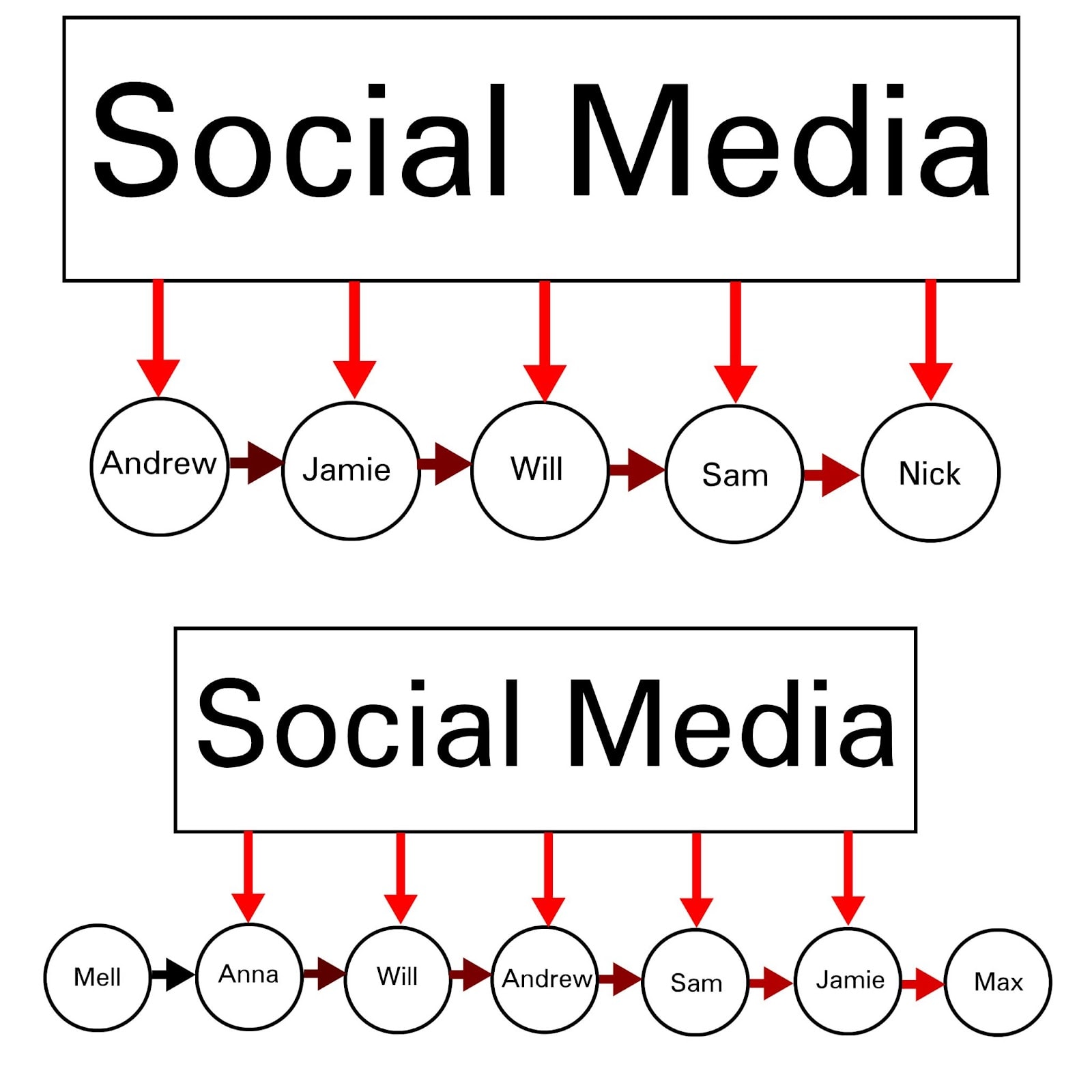

The speed of LLMs cannot be matched, and the possibility of instant, realtime review might ground a new style of censorship. I would suspect such LLM systems will be used to flag any potential subversive material before they have the chance to spread. An extra few seconds before a post goes live could save companies huge risks, so they will likely have an AI review process which can be double checked by a human in cases of ambiguity. Messages both private and public will be monitored and can be intercepted before they are read. Updates to the classification system might make old messages disappear without announcement.

LLMs may not fully replace censors, but will make them orders of magnitude more effective. Humans will still have to manage the AIs to program in the changing and contradictory standards of censorship, but the AIs will do all the evaluation themselves. The sort of slightly ambiguous instructions that Facebook moderators are given are the sort of classification tasks that LLMs succeed at which no previous NLP system could manage[5]. I would expect censorship bureaus in totalitarian states are given similar tasks for more obviously bad ends. It was not feasible to give humans the enormous and boring task of evaluating every communication of every person and thinking hard about potential subversive externalities. But with AI, this dream of total surveillance is now feasible and likely to come upon us quite soon.

There will be limits, especially as so much of communication is now done in the form of video and images. We are still likely a little bit off from a system which can understand purely visual content perfectly. But automatic transcription of words in audio and images will allow LLMs to intervene in this domain as well.

Crucially, censorship does not actually have to be accurate to work. If these systems are not good and flag plenty of innocuous content as subversive, from the regime’s perspective this is fine. People will grumble and learn to steer around the systems, and the goal of creating acceptance of the regime will have been achieved.

If better LLM classifications of content are implemented at every level, the effects are not really predictable, and it is quite possible that regimes will overplay their hand. If Chinese users of WeChat attempt to let off steam about dealing with government bureaucracy to their friends and an LLM classifier prevents this content from even being sent in the first place, or modifies it to be innocuous on the way, what will be the overall political effect? People might conform and simply stop discussing politics or questioning the powers that be. But they also might take their previously digital conversations into other channels, creating conversational standards more resistant to surveillance and possibly more dangerous to the state. Totalitarian regimes are brutal and terrifying and murderous, but they are not always competent.

What Comes Next

It has widely been reported that the PRC may be hesitant to deploy public-facing LLMs due to concerns that the models themselves can’t be adequately censored - it might be very difficult to make a version of ChatGPT that cannot be tricked into saying “4/6/89.” But I would suspect that the technology itself, especially in the versions being made by state-aligned corporations, is likely to eventually make it into the censorship and surveillance pipeline. These will be used to classify and regulate messages and media as they are being sent, as well as possibly to comb through and understand masses of collected data. While there are plenty of internal bureaucratic reasons that might slow or even prevent this process, I would suspect that enterprising censors or tech companies will eventually give this a try.

Other existing totalitarian regimes may not be as fast, though may follow. Economic incentives can end up leading to increased development of such systems in the PRC, where there is significant government demand, followed by the export to other autocratic states. Russia may be an early adopter, but it is not clear. North Korea, Cuba, or the communist regimes in Southeast Asia don’t have the same levels of digital sophistication as the PRC but still could follow. Saudi Arabia and other states with an interest in technical sophistication and the suppression of dissent might also be likely to use these systems. Certain censorious factions in democratic countries such as India or Turkey could end up using such systems as well. And these techniques will likely be independently discovered and deployed by tech companies throughout the first world, especially in the United States.

There are two major applications corporate actors in the United States are likely to use censorship classification systems for. One is for the thorny and highly controversial area of content moderation, where the applications are obvious although the effects are uncertain. The other is for self-censorship both in compliance to incentives in relationships with totalitarian powers (mostly the PRC) and for avoiding general controversy in their home cultures. I believe that such systems are already being used by firms deploying LLMs as a safeguard against negative attention and I would expect the use of such systems to expand to all public-facing communication as a safety mechanism.

There is no real way to prevent this. It is only a matter of time before well-resourced state actors begin implementing and advancing such systems. Mitigations, such as techniques that can consistently fool LLMs and are difficult to patch, would be a worthy area for research although they might have other externalities as well. Further capabilities advances that might affect this problem should be considered in this light before deployment.

- ^

Frank Dikötter's People's Trilogy contains a lot of such examples. The purest illustration of why it is better to stay quiet is the Hundred Flowers Campaign and its after. For how learned silence can actually undermine regime goals, the chapter "The Silent Revolution" in The Cultural Revolution is recommended.

- ^

Eastern bloc Europe is full of these stories, one notable one being Václav Havel https://en.wikipedia.org/wiki/V%C3%A1clav_Havel#Political_dissident

- ^

Darren Byler, In the Camps, page 47.

- ^

Estimates are inexact and based on quick searches for average length of New York Times article, amount of articles published per day, and adult reading speed.

- ^

From the article:

“A post calling someone ‘my favorite n-----’ is allowed to stay up, because under the policy it is considered “explicitly positive content.”

‘Autistic people should be sterilized’ seems offensive to him, but it stays up as well. Autism is not a ‘protected characteristic’ the way race and gender are, and so it doesn’t violate the policy. (‘Men should be sterilized’ would be taken down.)”

Assessing sentiment in complex circumstances and screening for particular protected characteristics seem within reach of GPT systems whereas earlier NLP approaches may not have been accurate enough.

14 comments

Comments sorted by top scores.

comment by AlanCrowe · 2023-08-22T21:42:36.564Z · LW(p) · GW(p)

From the perspective of 2023, censorship looks old fashioned; new approaches create popular enthusiasm around government narratives.

For example, the modern way for the Chinese to handle Tiananmen Square is to teach the Chinese people about it, how it is an American disinformation campaign that aims to destabilize the PRC by inventing a massacre that never happened, and this is a good example of why you should hate America.

Of course there are conspiracy theorist who say that it actually happened and the government covered it up. What happened to the bodies? Notice that the conspiracy theorists are also flat Earthers who think that the PRC hid the bodies by pushing them over the edge. You would not want to be crazy like them, would you?

Then ordinary people do the censorship themselves, mocking people who talk about Tiananmen Square as American Shills or Conspiracy Theorists. There is no need to crack down hard on grumblers. Indeed the grumblers can be absorbed into the narrative as proof that the PRC is a kindly, tolerant government that permits free speech, even the worthless crap.

I don't know how LLM's fit into this. Possibly posting on forums to boost the official narrative. Censorship turns down the volume on dissent, but turning up the volume on the official narrative seems to work better.

Replies from: matthew-barnett, Herb Ingram, Ethan Edwards, None↑ comment by Matthew Barnett (matthew-barnett) · 2023-08-22T22:16:48.227Z · LW(p) · GW(p)

Propaganda without censorship can be very weak. There are numerous examples of government officials attempting to convince the population of an official narrative, but the population largely ends up not buying it. We don't even need to talk about things like the JFK conspiracy theories.

For example, during Brazil's Operation Car Wash, many government officials from the ruling Workers' Party initially attempted to present a limited view of the corruption allegations. These allegations revolved around massive kickbacks involving state-controlled oil company Petrobras, leading construction firms, and high-ranking politicians. But journalists sifted through financial records, collaborated with international news agencies, and conducted interviews with insiders. As a result, a large web of illicit payments, money laundering, and collusion between politicians and business leaders was exposed, despite obstruction and statements from government officials downplaying the corruption. Many politicians were jailed as a result.

Without cracking down on the people exposing your lies, it's often difficult to maintain the lie for long.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-08-22T23:12:12.265Z · LW(p) · GW(p)

I absolutely agree that propaganda without censorship can be very weak. However, it can also not be very weak. The JFK situation doesn't seem penetrable by open-source researchers like Zvi [LW · GW], because it's oversaturated; redundant information can drown out truth by making it unreasonably expensive to locate.

There's also the use of modern psychology to manufacture "vibes" or steer people's thinking in specific directions. That's a whole can of worms on its own. I happen to be >90% confident that elites like Zvi can be duped by techniques (including but not at all limited to the use of LLMs to label content) that are widely employed but not known to the public. However, I'm less sure about the success rate, i.e. 20% versus 80%, but even a 20% success rate can still dampen the spread of true information [LW · GW]:

↑ comment by Herb Ingram · 2023-08-22T22:44:00.625Z · LW(p) · GW(p)

Indeed, systems controlling the domestic narrative may become sophisticated enough that censorship plays no big role. No regime is more powerful and enduring than one which really knows what poses a danger to it and what doesn't, one which can afford to use violence, coercion and censorship in the most targeted and efficient way. What a small elite used to do to a large society becomes something that the society does to itself. However, this is hard and I assume will remain out of reach for some time. We'll see what develops faster: sophistication of societal control and the systems through which it is achieved, or technology for censorship and surveillance. I'd expect at least a "transition period" of censorship technology spreading around the world as all societies that successfully use it become sophisticated enough to no longer really need it.

What seems more certain is that AI will be very useful for influencing societies in other countries, where the sophisticated domestically optimal means aren't possible to deploy. This goes very well with exporting such technology.

↑ comment by Ethan Edwards · 2023-08-25T17:24:12.211Z · LW(p) · GW(p)

I think you're probably right, my feeling is that organic pro-regime internet campaigns are possibly more important than traditional censorship. The PRC has been good at this and I've also been worried about how vocal Hindutva elements are becoming.

I don't know that we've yet found the optimal formula for information control (which is a good thing) and I remain a little agnostic on the balance between censorship and propaganda. This post focused on old-style censorship because it's better documented, but a contemporary information control strategy necessarily involves a lot more.

I've so far been skeptical of a lot of misinformation narratives because I don't think fake news articles for example are labor constrained, but LLMs can definitely be used to boost in the official narrative in more interesting ways. Looking at the PRC again, at least some people in Xinjiang have reported being coerced into posting positively on social about state-narratives, and I have Chinese contacts who have been discouraged socially from posting negative things. I'm guessing some of the censorship tools can also be used to subtly encourage such behaviors and grow the pro-regime mobs.

↑ comment by [deleted] · 2023-08-23T18:43:06.874Z · LW(p) · GW(p)

Another example is this very narrative against 'China' (the government there, not the region or people; these are often conflated in popular nationalistic discourse); one totalitarian state garnering opposition against a rival one by attempting to contrast itself against the other, while framing itself as comparatively free.

(I hope it's obvious that I'm not defending the government in China, but rather pointing out how it is invoked in American social narratives.)

comment by trevor (TrevorWiesinger) · 2023-08-22T01:10:17.542Z · LW(p) · GW(p)

I'm definitely glad to see people associated with SERI MATS working on this. But LLMs are useable for a much, much wider variety of information control and person-influencing systems; possibly even microtargeting individual employees of major economic analysis firms and security/military/intelligence agencies, but almost certainly larger scale systems where there is extremely large amounts of human behavior data being generated for data scientists and psychologists to work with.

This is a topic that I've worked on for several years now, and I'm very interested in the level of understanding that people in the Alignment community currently have, e.g. to prevent people who are already working on this from reinventing the wheel. To what extent did SERI MATS facilitate your research on this topic relative to other things you worked on? Are you alright with me contacting you via Lesswrong DM?

Replies from: Ethan Edwards↑ comment by Ethan Edwards · 2023-08-22T03:12:57.000Z · LW(p) · GW(p)

Feel free to DM. I think you're absolutely correct these systems will eventually be used by intelligence agencies and other parts of the security apparatus for fine-grained targeting and espionage, as well as larger scale control mechanisms if they have the right data. This was just the simplest use of the current technology, and it seems interesting that mass monitoring has still been somewhat labor-constrained but may not remain so. These sorts of immediate concerns may also be useful for better outreach in governance/policy discussions.

This was a post I wrote during SERI MATS and not my main research. Some of the folks working on hacking and security are more explicitly investigating the potential of targeted operations with LLMs.

comment by Paul Tiplady (paul-tiplady) · 2023-08-23T20:50:08.659Z · LW(p) · GW(p)

Amusingly, the US seems to have already taken this approach to censor books: https://www.wired.com/story/chatgpt-ban-books-iowa-schools-sf-496/

The result, then, is districts like Mason City asking ChatGPT, “Does [insert book here] contain a description or depiction of a sex act?” If the answer was yes, the book was removed from the district’s libraries and stored.

Regarding China or other regimes using LLMs for censorship, I'm actually concerned that it might rapidly go the opposite direction as speculated here:

It has widely been reported that the PRC may be hesitant to deploy public-facing LLMs due to concerns that the models themselves can’t be adequately censored - it might be very difficult to make a version of ChatGPT that cannot be tricked into saying “4/6/89.”

In principle it should be possible to completely delete certain facts from the training set of an LLM. A static text dataset is easier to audit than the ever-changing content of the internet. If the government requires companies building LLMs to vet their training datasets -- or perhaps even requires everyone to contribute the data they want to include into a centralized approved repository -- perhaps it could exert more control over what facts are available to the population.

It's essentially impossible to block all undesired web content with the Great Firewall of China, as so much new content is constantly being created; instead as I understand it they take a more probabilistic approach to detection/deterrence. But this isn't necessarily true for LLMs. I could see a world where Google-like search UIs are significantly displaced by each individual having a conversation with a government-approved LLM, and that gives the government much more power to control what information is available to be discovered.

A possible limiting factor is that you can't get up-to-date news from an LLM, since it only knows about what's in the training data. But there are knowledge-retrieval architectures that can get around that limitation at least to some degree. So the question is whether the CCP could build an LLM that's good enough that people wouldn't revolt if the internet was blocked and replaced by it (of course this would occur gradually).

Replies from: Ethan Edwards↑ comment by Ethan Edwards · 2023-08-25T17:04:21.511Z · LW(p) · GW(p)

I think these are great points. Entirely possible that a really good appropriately censored LLM becomes a big part of China's public-facing internet.

On the article about Iowa schools, I looked into this a little bit while writing this and as far as I could see rather than running GPT over the full text and asking about the content like what I was approximating, they are instead literally just prompting it with "Does [book X] contain a sex scene?" and taking the first completion as the truth. This to me seems like not a very good way of determining whether books contain objectionable content, but is evidence that bureaucratic organs like outsourcing decisions to opaque knowledge-producers like LLMs whether or not they are effective.

comment by hillz · 2023-08-28T16:51:58.737Z · LW(p) · GW(p)

Agreed. LLMs will make mass surveillance (literature, but also phone calls, e-mails, etc) possible for the first time ever. And mass simulation of false public beliefs (fake comments online, etc). And yet Meta still thinks it's cool to open source all of this.

It's quite concerning. Given that we can't really roll back ML progress... Best case is probably just to make well designed encryption the standard. And vote/demonstrate where you can, of course.

comment by yagudin · 2023-08-23T14:47:11.983Z · LW(p) · GW(p)

There is already a lot of automatic censoring happening. I am unsure how much LLMs add on top of existing and fairly successful techniques from spam filtering. And just using LLMs is probably prohibitive at the scale of social media (definitely for tech companies, maybe not for governments), but perhaps you can get an edge for some use-case with them.

comment by Οἰφαισλής Τύραννος (o-faislis-tyrannos) · 2023-08-28T16:52:48.815Z · LW(p) · GW(p)

I find astonishing that one can speculate about censorship without mentioning how big American corporations armed with the monopoly over certain technologies, ESG scores and the support of the USA government and its agencies have launched an aggressive campaign to control the discurse and silence any dissenting voice all over the world.

Here in Europe we now have to think and to speak in the way that some "intellectuals" from the USA deemed politically correct if we don't want to find ourselves casted out of society. Despite how absurd most of the linguistic directives are in a language that it is not English.

I, a former famous creator and a legally registered transexual woman, have been explicitly or shadowy banned from almost all platforms for saying things like that you shouldn't give interventions that lead to permanent infertility to kids or that affirmative action should be frown upon.

As all the big platforms are controlled by a reduced number of investors who share the same discourse, I lost my ability to make a living out of my art, for example.

Just look at what happened if, relying on evidence, you doubted the usefulness of masking during the CoViD pandemic.

I think that it is possible that it has never existed a more widespread and subtly malignant campaign of censorship than the one established by the USA. They destroy competitors just to force you to use their platforms just so they can say "hey, don't use our social networks if you don't like them". Social networks that are employed to influence public discord, to initiate unrest and to propagate misinformation with carefully curate algorithms.

An example: My previous Facebook account was banned for sharing official data about the gender and age distribution of refugees during the Syrian crisis without making any personal observation of the data.