Altman firing retaliation incoming?

post by trevor (TrevorWiesinger) · 2023-11-19T00:10:15.645Z · LW · GW · 23 comments

"Anonymous sources" are going to journalists and insisting that OpenAI employees are planning a "counter-coup" to reinstate Altman, some even claiming plans to overthrow the board.

It seems like a strategy by investors or even large tech companies to create a self-fulfilling prophecy to create a coalition of OpenAI employees, when there previously was none.

What's happening here reeks of a cheap easy move by someone big and powerful. It's important to note that AI investor firms and large tech companies are highly experienced and sophisticated at power dynamics, and potentially can even use the combination of AI with user data to do sufficient psychological research to wield substantial manipulation capabilities in unconventional environments [LW · GW], possibly already as far as in-person conversations but likely still limited to manipulation via digital environments like social media.

Companies like Microsoft also have ties to the US Natsec community, and there are potentially risks coming from there as well (my model of the US Natsec community is that they are likely still confused about or uninterested in AI safety, but potentially no longer confused or uninterested at all, and probably extremely interested in and familiar with the use of AI and the AI industry to facilitate modern information warfare [LW · GW]). Counter-moves by random investors seem like the best explanation for now; I just figured that was worth mentioning. It's pretty well known that companies like Microsoft are forces that ideally you wouldn't mess with.

If this is really happening, if AI safety really is going mano-a-mano against the AI industry, then these things are important to know.

Most of these articles are paywalled, so I had to paste them into a separate post from the main Altman discussion post [LW · GW], and it seems like there are all sorts of people in all sorts of places who ought to be notified ASAP.

Forbes: OpenAI Investors Plot Last-Minute Push With Microsoft To Reinstate Sam Altman As CEO (2:50 pm PST, paywalled)

A day after OpenAI’s board of directors fired former CEO Sam Altman in a shock development, investors in the company are plotting how to restore him in what would amount to an even more surprising counter-coup.

Venture capital firms holding positions in OpenAI’s for-profit entity have discussed working with Microsoft and senior employees at the company to bring back Altman, even as he has signaled to some that he intends to launch a new startup, four sources told Forbes.

Whether the companies would be able to exert enough pressure to pull off such a move — and do it fast enough to keep Altman interested — is unclear.

The playbook, a source told Forbes, would be straightforward: make OpenAI’s new management, under acting CEO Mira Murati and the remaining board, accept that their situation was untenable through a combination of mass revolt by senior researchers, withheld cloud computing credits from Microsoft, and a potential lawsuit from investors. Facing such a combination, the thinking is that management would have to accept Altman back, likely leading to the subsequent departure of those believed to have pushed for Altman’s removal, including cofounder Ilya Sutskever and board director Adam D’Angelo, the CEO of Quora.

Should such an effort not come together in time, Altman and OpenAI ex-president Greg Brockman were set to raise capital for a new startup, two sources said. “If they don’t figure it out asap, they’d just go ahead with Newco,” one source added.

OpenAI had not responded to a request for comment at publication time. Microsoft declined to comment.

Earlier on Saturday, The Information reported that Altman was already meeting with investors to raise funds for such a project. One source close to Altman said that both options remained possible. “I think he truly wants the best outcome,” said the person. “He doesn’t want to see lives destroyed.”

A key player in any attempted reinstatement would be Microsoft, OpenAI’s key partner that has poured $10 billion into the company. CEO Satya Nadella was left surprised and “furious” by the ouster, Bloomberg reported. Microsoft has sent only a fraction of that stated dollar amount to OpenAI, per a Semafor report. A source close to Microsoft’s thinking said the company would prefer to see stability at a key partner.

The Verge reported on Saturday that OpenAI’s board was in discussions to bring back Altman. It was unclear if such discussions were the immediate result of the investor pressure.

Wall Street Journal: OpenAI Investors Trying to Get Sam Altman Back as CEO After Sudden Firing (3:28pm PST, paywalled)

OpenAI’s investors are making efforts to bring back Sam Altman, the chief executive who was ousted Friday, said people familiar with the matter, the latest development in a fast-moving chain of events at the artificial-intelligence company behind ChatGPT.

Altman is considering returning but has told investors that he wants a new board, the people said. He has also discussed starting a company that would bring on former OpenAI employees, and is deciding between the two options, the people said.

Altman is expected to decide between the two options soon, the people said. Leading shareholders in OpenAI, including Microsoft and venture firm Thrive Capital, are helping orchestrate the efforts to reinstate Altman. Microsoft invested $13 billion into OpenAI and is its primary financial backer. Thrive Capital is the second-largest shareholder in the company.

Other investors in the company are supportive of these efforts, the people said.

The talks come as the company was thrown into chaos after OpenAI’s board abruptly decided to part ways with Altman, citing his alleged lack of candor in communications, and demoted its president and co-founder Greg Brockman, leading him to quit.

The exact reason for Altman’s firing remains unclear. But for weeks, tensions had boiled around the rapid expansion of OpenAI’s commercial offerings, which some board members felt violated the company’s initial charter to develop safe AI, according to people familiar with the matter.

The Verge: OpenAI Board in Discussions with Sam Altman to return as CEO (2:44 pm PST, not paywalled)

The OpenAI board is in discussions with Sam Altman to return to the company as its CEO, according to multiple people familiar with the matter. One of them said Altman, who was suddenly fired by the board on Friday with no notice, is “ambivalent” about coming back and would want significant governance changes.

Altman holding talks with the company just a day after he was ousted indicates that OpenAI is in a state of free-fall without him. Hours after he was axed, Greg Brockman, OpenAI’s president and former board chairman, resigned, and the two have been talking to friends and investors about starting another company. A string of senior researchers also resigned on Friday, and people close to OpenAI say more departures are in the works.

OpenAI’s largest investor, Microsoft, said in a statement shortly after Altman’s firing that the company “remains committed” to its partnership with the AI firm. However, OpenAI’s investors weren’t given advance warning or opportunity to weigh in on the board’s decision to remove Altman. As the face of the company and the most prominent voice in AI, his removal throws the future of OpenAI into uncertainty at a time when rivals are racing to catch up with the unprecedented rise of ChatGPT.

A spokesperson for OpenAI didn’t respond to a request for comment about Altman discussing a return with the board. A Microsoft spokesperson declined to comment.

OpenAI’s current board consists of chief scientist Ilya Sutskever, Quora CEO Adam D’Angelo, former GeoSim Systems CEO Tasha McCauley, and Helen Toner, the director of strategy at Georgetown’s Center for Security and Emerging Technology. Unlike traditional companies, the board isn’t tasked with maximizing shareholder value, and none of them hold equity in OpenAI. Instead, their stated mission is to ensure the creation of “broadly beneficial” artificial general intelligence, or AGI.

Sutskever, who also co-founded OpenAI and leads its researchers, was instrumental in the ousting of Altman this week, according to multiple sources. His role in the coup suggests a power struggle between the research and product sides of the company, the sources

23 comments

Comments sorted by top scores.

comment by Odd anon · 2023-11-19T11:16:44.535Z · LW(p) · GW(p)

(Glances at investor's agreement...)

IMPORTANT

* * Investing in OpenAI Global, LLC is a high-risk investment * *

* * Investors could lose their capital contribution and not see any return * *

* * It would be wise to view any investment in OpenAI Global, LLC in the spirit of a donation, with the understanding that it may be difficult to know what role money will play in a post-AGI world * *

The Company exists to advance OpenAI, Inc.'s mission of ensuring that safe artificial general intelligence is developed and benefits all of humanity. The Company's duty to this mission and the principles advanced in the OpenAI, Inc. Charter take precedence over any obligation to generate a profit. The Company may never make a profit, and the Company is under no obligation to do so. The Company is free to re-invest any or all of the Company's cash flow into research and development activities and/or related expenses without any obligation to the Members. See Section 6.4 for additional details.

If it turns out that the investors actually have the ability to influence OpenAI's leadership, it means the structure has failed. That itself would be a good reason for most of its support to disappear, and for its (ideologically motivated) employees to leave. This situation may put the organization in a bit of a conundrum.

The structure was also supposed to function for some future where OpenAI has a tremendous amount of power, to guarantee in advance that OpenAI would not be forced to use that power for profit. The implication about whether Microsoft expects to be able to influence the decision is itself a significant hit to OpenAI.

comment by AnonAcc · 2023-11-19T22:40:47.112Z · LW(p) · GW(p)

Worth noting that Sam Altman has a history of hostile power plays e.g. at reddit

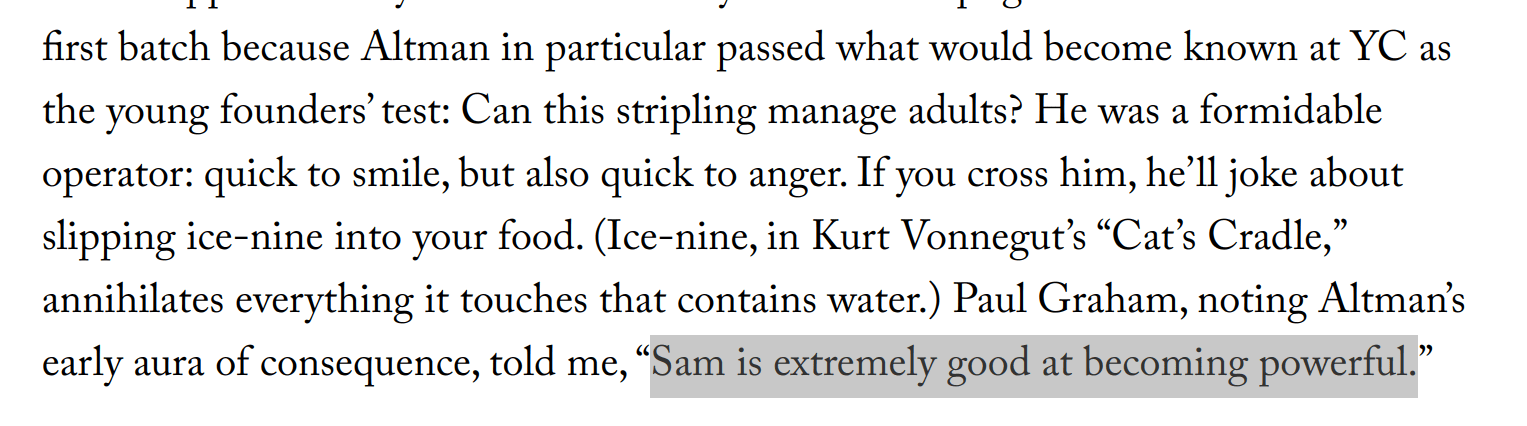

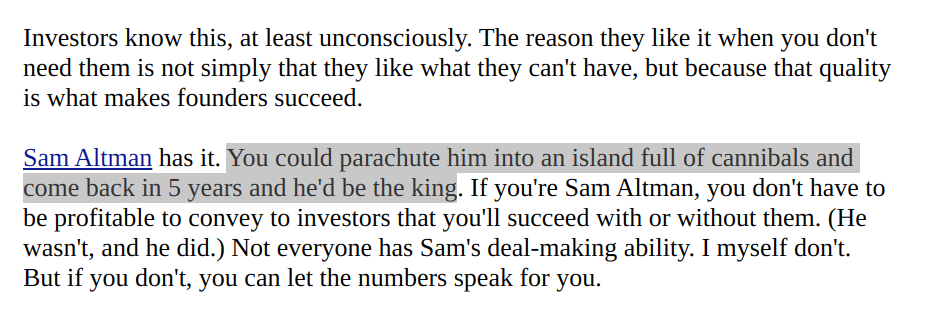

Paul Graham has written multiple times about Sam's thirst/skill for power:

https://www.newyorker.com/magazine/2016/10/10/sam-altmans-manifest-destiny

http://paulgraham.com/fundraising.html

https://twitter.com/paulg/status/1726248463355281633

↑ comment by lc · 2023-11-21T22:10:28.666Z · LW(p) · GW(p)

These descriptions from PG make Sam sound like the worst possible kind of person to put in charge of an AGI company.

↑ comment by Throwaway2367 · 2023-11-22T15:33:32.408Z · LW(p) · GW(p)

Unfortunately also the most likely person to be in charge of an AGI company.

comment by arabaga · 2023-11-19T02:46:06.941Z · LW(p) · GW(p)

It seems like a strategy by investors or even large tech companies to create a self-fulfilling prophecy to create a coalition of OpenAI employees, when there previously was none.

How is this more likely than the alternative, which is simply that this is an already-existing coalition that supports Sam Altman as CEO? Considering that he was CEO until he was suddenly removed yesterday, it would be surprising if most employees and investors didn't support him. Unless I'm misunderstanding what you're claiming here?

↑ comment by trevor (TrevorWiesinger) · 2023-11-19T04:06:52.976Z · LW(p) · GW(p)

Yeah, the impression I get is that if investors are going to the trouble to leak their plot to journalists (even if anonymously), then they are probably hoping to benefit from it acting as a rallying cry/Schelling point. This is a pretty basic thing for intra-org conflicts.

This indicates, at minimum, that the coalition they're aiming for will become stronger than if they had taken the default action and not leaked their plan to journalists. It seems to me more likely that the coalition they're hoping for doesn't exist at all or is too diffused, and they're trying to set people up to join a pro-Altman faction by claiming one is already strong and is due to receive outside support (from "anonymous sources familiar with the matter").

↑ comment by Lukas_Gloor · 2023-11-19T14:50:04.850Z · LW(p) · GW(p)

That's a good point in theory, but you'd think the board members can also speak to the media and say "no they are lying, we haven't so far had these talks with them that they claimed to have had with us about reverting the decision."

[Update Nov 20th, seems like you were right and the board maybe didn't have good enough media connections to react to this, or were too busy fighting their fight on another front.]

comment by glagidse · 2023-11-19T18:29:02.468Z · LW(p) · GW(p)

Eh, it appears that Microsoft and other investors want Sam Altman back, but the employees are the ones that are making it happen. It appears that Sam was fired for AI safety reasons, but everyone who is really, really concerned about that kind of thing already left and works for Anthropic, so Ilya's "But AI Safety" doesn't come off as very convincing, especially with the way Sam was fired. I suspect that people work at OpenAI because they want to, not because they have to. I mean, what were they expecting?

comment by Shankar Sivarajan (shankar-sivarajan) · 2023-11-19T01:39:53.497Z · LW(p) · GW(p)

Is there a prediction market on whether he'll be reinstated?

↑ comment by Jacob G-W (g-w1) · 2023-11-19T01:44:53.844Z · LW(p) · GW(p)

There's a bunch. Here's one: https://manifold.markets/NealShrestha58d3/sam-altman-will-return-to-openai-by

↑ comment by trevor (TrevorWiesinger) · 2023-11-19T01:53:00.825Z · LW(p) · GW(p)

comment by Leksu · 2023-11-19T13:25:05.409Z · LW(p) · GW(p)

To the extent that Microsoft pressures the OpenAI board about their decision to oust Altman, won't it be easy to (I think accurately) portray Microsoft's behavior as unreasonable and against the common good?

It seems like the main (I would guess accurate) narrative in the media is that the reason for the board's actions was safety concerns.

Let's say Microsoft pulls whatever investments they can, revokes access to cloud compute resources, and makes efforts to support a pro-Altman faction in OpenAI. What happens if the OpenAI board decides to stall and put out statements to the effect of "we gotta do what we gotta do, it's not just for fun that we made this decision"? I would naively guess the public, the law, the media, and governments would be on the board's side. Unsure how much that would matter though.

To me at least it doesn't seem obviously bad for AI safety if OpenAI collapses or significantly loses employees and market value (would love to hear opinions on this).

Pros:

- Naively the leading capabilities organization slowing down slows down AI capabilities (though hard to say if it slows them down on balance, given some employees will start rival companies, and the slowing down could inspire other actors to invest more in becoming the new frontier)

- Additional signal to the public and governments that irresponsible safety shortcuts are being taken (increases willingness to regulate AI, naively seems like a pro?)

Cons:

- Altman and other employees will likely start other AI companies, be less responsible, could speed up frontier of capabilities relative to counterfactual, could make coordination harder...

- Maybe I'm wrong about how all of this will be painted by the media, and the public / government's perceptions

I feel like I'm probably missing some reason Microsoft has more leverage than I'm assuming? Maybe other people are more worried about fragmentation of the AI landscape, and less optimistic about the public's and governments' perceptions of the situation, and the expected value of the actions they might take because of it?

↑ comment by Lukas_Gloor · 2023-11-19T14:23:33.393Z · LW(p) · GW(p)

Maybe I'm wrong about how all of this will be painted by the media, and the public / government's perceptions

So far, a lot of the media coverage has framed the issue so the board comes across as inexperienced and their mission-related concerns come across more like an overreaction rather than reasonable criticism of profit-oriented or PR-oriented company decisions putting safety at risk when building the most dangerous technology.

I suspect that this is mostly a function of how things went down, how these differences of vision between them and Altman came into focus, rather than a feature of the current discourse window – we've seen that media coverage about AI risk concerns (and public reaction to it) isn't always negative. So, I think you're right that there are alternative circumstances where it would look quite problematic for Microsoft if they try to interfere with or circumvent the non-profit board structure. Unfortunately, it might be a bit late to change the framing now.

But there's still the chance that the board is sitting on more info and struggled to coordinate their communications amidst all the turmoil so far, or have other reasons for not explaining their side of things in a more compelling manner.

(My comment is operating under the assumption that it's indeed true that Altman isn't the sort of cautious good leader that one would want for the whole AI thing to go well. I personally think this might well be the case, but I want to flag that my views here aren't very resilient because I have little info, and also I'm acknowledging that seeing an outpouring of support for him is at least moderate evidence of him being a good leader [but one should also be careful about not overupdating on this type of evidence of someone being well-liked in a professional network]. And by "good leader" I don't just mean "can make money for companies" – Elon Musk is also good at making money for companies, but I'm not sure people would come to his support in the same way they came to Altman's support, for instance. Also, I think "being a good leader" is way more important than "having the right view on AI risk," because who knows for sure what the exact right view is – the important thing is that a good leader will incrementally make sizeable updates in the right direction as more information comes in through the research landscape.)

↑ comment by the gears to ascension (lahwran) · 2023-11-19T17:36:17.841Z · LW(p) · GW(p)

To the extent that Microsoft pressures the OpenAI board about their decision to oust Altman, won't it be easy to (I think accurately) portray Microsoft's behavior as unreasonable and against the common good?

what would this change? is there any lack in people being outspoken about framing these organizations as against the common good? it seems to me that this is simply the old conflict between capital and commoner.

comment by moreorlesswrong · 2023-11-19T05:03:47.709Z · LW(p) · GW(p)

Very much appreciate the write-up, trevor; especially the substantively lengthy quotes. As other commentators have pointed out, the dichotomy between the two apparent 'factions' is quite clear. A faction of non-profit focus, tentative approach towards development, and conviction of Large Language Models' climb to Artificial General Intelligence makes up one side. The other side is a faction looking to capitalize upon OpenAI's current advances before they are commonplace and which has reservations about the capability for Large Language Models to become a form of Artificial General Intelligence.

As to Sam Altman's return, perhaps the only possible cause of such an outcome will be investor/stakeholder pressure. Will/can such pressure be enough? The support that Samuel Altman has thus far received from colleagues and employees seems to me an organic, knee-jerk rally. This signals to me the possible spawning of a new organization by him from a position of strength. Not to say there was no willingness to collaborate and/or to finance before his ousting, just that the circumstances (story) yield an 'under-dog' bystanders would enthusiastically back (bet on).

comment by Chris_Leong · 2023-11-19T01:56:19.746Z · LW(p) · GW(p)

E/acc seems to be really fired up about this:

https://twitter.com/ctjlewis/status/1725745699046948996

↑ comment by trevor (TrevorWiesinger) · 2023-11-19T02:13:49.405Z · LW(p) · GW(p)

It is really hard to use social media to measure public opinion. Even if Twitter/X doesn't have nearly as much security or influence capabilities as Facebook/Instagram, botnet accounts run by state-adjacent agencies can still emulate human behavior and upvote specific posts in order to game Twitter's newsfeed algorithm for the human users.

Social media has never been an environment that is friendly to independent researchers; if it was easy, then foreign intelligence agencies would run circles around independent researchers in order to research advanced strategies to manipulate public opinion (e.g. via their own social media botnets, or merely just knowing what to say when their leaders give speeches).

But yes. E/acc seems to be really fired up about this.

comment by MiguelDev (whitehatStoic) · 2023-11-19T02:30:03.785Z · LW(p) · GW(p)

Things will get interesting if Sam gets reinstated and ends up attacking the board. Sam will then fire the OpenAI board for trying to do what they think is right? The chances of this happening? I would say that if this really happens, it will not be a pretty situation for OpenAI.

↑ comment by moreorlesswrong · 2023-11-19T05:29:40.712Z · LW(p) · GW(p)

I feel as though, MiguelDev, S. Altman's return to OpenAI would be brokered by a third party (Microsoft, et alia) with 'stability' as a hard condition to be met by both sides. Likewise, it isn't worth Mr. Altman's time — nor effort — to seek revenge. Not only would such an endeavor cost time and effort, but the vengeance would be exacted at the price of his tarnished character. The immature reaction would be noted by his peers and they will, in turn, react accordingly.

↑ comment by MiguelDev (whitehatStoic) · 2023-11-19T05:38:11.788Z · LW(p) · GW(p)

It is not me who thinks it will be brokered by Microsoft; it's this Forbes article outlined in the post:

https://www.forbes.com/sites/alexkonrad/2023/11/18/openai-investors-scramble-to-reinstate-sam-altman-as-ceo/?sh=2dbf6a5060da

↑ comment by moreorlesswrong · 2023-11-19T05:49:15.820Z · LW(p) · GW(p)

Yes, I was informed by and was referencing that same article trevor linked to in his original posting. I did not, however, assume you "[think] it will be brokered by Microsoft". Regardless, I'd love to hear any critique or/and disagreements with my original reply — or even an explanation as to why you would believe I assumed you thought as much.

(Post-Scriptum: I was not the one who down-voted the overall karma of your post.)

↑ comment by MiguelDev (whitehatStoic) · 2023-11-19T06:02:35.643Z · LW(p) · GW(p)

The only chance that there will be no response similar to a vengeful act is if Sam doesn't care about his image at all. Because of this, I disagree with the idea that Sam will be by default "not hostile" when he comes back and will treat what happened as "nothing".

There is a high chance that there will be changes, even something of an attempt to recover lost influence, image or glamour, judging again by his choice to promote OpenAI or himself "as the CEO" of a revolutionary tech in many different countries this year.

BTW, I do not advocate hostility, but given the pressure on them (Sam vs. Ilya & the Board), simply forgetting what happened is not possible.

comment by O O (o-o) · 2023-11-19T00:43:23.630Z · LW(p) · GW(p)

It seems like a strategy by investors or even large tech companies to create a self-fulfilling prophecy to create a coalition of OpenAI employees, when there previously was none.

What? I think the developments made it reasonably clear there were organically two factions in the company. But Ilya’s faction strikes me as inexperienced and possibly emotionally immature. I just sincerely doubt there’s a conspiracy behind it. I’m willing to bet money the company will remain a capped for-profit.