Comments

If we cannot do compute governance, and we cannot do model-level governance, then I do not see an alternative solution. I only see bad options, a choice between an EU-style regime and doing essentially nothing.

It feels like this and some other parts of the post imply that the EU AI Act does not apply compute governance and model-level governance, which seems false as far as I can tell (semantics?)

From a summary of the act: "All providers of GPAI models that present a systemic risk* – open or closed – must also conduct model evaluations, adversarial testing, track and report serious incidents and ensure cybersecurity protections."

*"GPAI models present systemic risks when the cumulative amount of compute used for its training is greater than 10^25 floating point operations (FLOPs)"

Interested

To the extent that Microsoft pressures the OpenAI board about their decision to oust Altman, won't it be easy to (I think accurately) portray Microsoft's behavior as unreasonable and against the common good?

It seems like the main (I would guess accurate) narrative in the media is that the reason for the board's actions was safety concerns.

Let's say Microsoft pulls whatever investments they can, revokes access to cloud compute resources, and makes efforts to support a pro-Altman faction in OpenAI. What happens if the OpenAI board decides to stall and put out statements to the effect of "we gotta do what we gotta do, it's not just for fun that we made this decision"? I would naively guess the public, the law, the media, and governments would be on the board's side. Unsure how much that would matter though.

To me at least it doesn't seem obviously bad for AI safety if OpenAI collapses or significantly loses employees and market value (would love to hear opinions on this).

Pros:

- Naively, the leading capabilities organization slowing down slows down AI capabilities (though it's hard to say if it slows them down on balance, given that some employees will start rival companies, and the slowdown could inspire other actors to invest more in becoming the new frontier)

- Additional signal to the public and governments that irresponsible safety shortcuts are being taken (increases willingness to regulate AI, naively seems like a pro?)

Cons:

- Altman and other employees will likely start other AI companies, be less responsible, could speed up frontier of capabilities relative to counterfactual, could make coordination harder...

- Maybe I'm wrong about how all of this will be painted by the media, and about how the public and governments will perceive it

I feel like I'm probably missing some reason Microsoft has more leverage than I'm assuming? Maybe other people are more worried about fragmentation of the AI landscape, and less optimistic about the public's and governments' perceptions of the situation, and the expected value of the actions they might take because of it?

Agree that would be better. I think just links are useful too, though, in case adding more context significantly increases the workload in practice

I wonder if there's an AI tool that could post-process it

Is there a group currently working on such a survey? If not, seems like it wouldn't be very hard to kickstart.

Link to mentioned survey: LINK

Maybe someone from AI Impacts could comment with relevant thoughts (are they planning to re-run the survey soon, would they encourage or discourage another group from doing a similar survey, do they think now is a good time, do they have the right resources for it now, etc.)

Thanks, I think this comment and the subsequent post will be very useful for me!

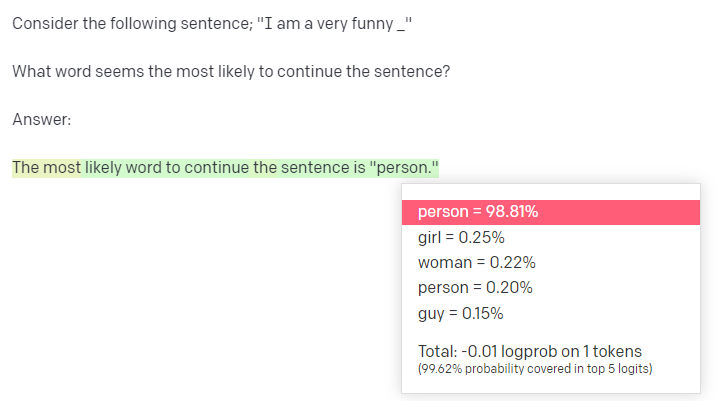

Here's what GPT-3 output for me