Habryka's Shortform Feed

post by habryka (habryka4) · 2019-04-27T19:25:26.666Z · LW · GW · 383 comments

In an attempt to get myself to write more here is my own shortform feed. Ideally I would write something daily, but we will see how it goes.

383 comments

Comments sorted by top scores.

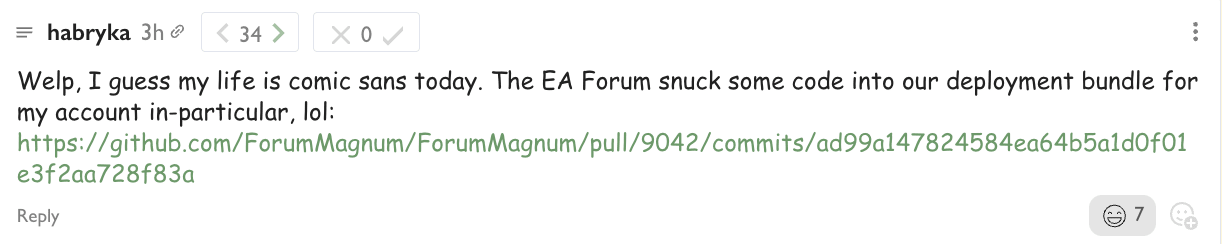

comment by habryka (habryka4) · 2024-07-02T07:59:15.296Z · LW(p) · GW(p)

I am confident, on the basis of private information I can't share, that Anthropic has asked at least some employees to sign similar non-disparagement agreements that are covered by non-disclosure agreements as OpenAI did.

Or to put things into more plain terms:

I am confident that Anthropic has offered at least one employee significant financial incentive to promise to never say anything bad about Anthropic, or anything that might negatively affect its business, and to never tell anyone about their commitment to do so.

I am not aware of Anthropic doing anything like withholding vested equity the way OpenAI did, though I think the effect on discourse is similarly bad.

I of course think this is quite sad and a bad thing for a leading AI capability company to do, especially one that bills itself as being held accountable by its employees and that claims to prioritize safety in its plans.

Replies from: sam-mccandlish, samuel-marks, Zach Stein-Perlman, jacobjacob, William_S, neel-nanda-1, ChristianKl, jacobjacob, Dagon, jacob-pfau, Zach Stein-Perlman, Zane

↑ comment by Sam McCandlish (sam-mccandlish) · 2024-07-04T04:26:33.366Z · LW(p) · GW(p)

Hey all, Anthropic cofounder here. I wanted to clarify Anthropic's position on non-disparagement agreements:

- We have never tied non-disparagement agreements to vested equity: this would be highly unusual. Employees or former employees never risked losing their vested equity for criticizing the company.

- We historically included standard non-disparagement terms by default in severance agreements, and in some non-US employment contracts. We've since recognized that this routine use of non-disparagement agreements, even in these narrow cases, conflicts with our mission. Since June 1st we've been going through our standard agreements and removing these terms.

- Anyone who has signed a non-disparagement agreement with Anthropic is free to state that fact (and we regret that some previous agreements were unclear on this point). If someone signed a non-disparagement agreement in the past and wants to raise concerns about safety at Anthropic, we welcome that feedback and will not enforce the non-disparagement agreement.

In other words— we're not here to play games with AI safety using legal contracts. Anthropic's whole reason for existing is to increase the chance that AI goes well, and spur a race to the top on AI safety.

Some other examples of things we've needed to adjust from the standard corporate boilerplate to ensure compatibility with our mission: (1) replacing standard shareholder governance with the Long Term Benefit Trust and (2) supplementing standard risk management with the Responsible Scaling Policy. And internally, we have an anonymous RSP non-compliance reporting line so that any employee can raise concerns about issues like this without any fear of retaliation.

Please keep up the pressure on us and other AI developers: standard corporate best practices won't cut it when the stakes are this high. Our goal is to set a new standard for governance in AI development. This includes fostering open dialogue, prioritizing long-term safety, making our safety practices transparent, and continuously refining our practices to align with our mission.

Replies from: Zach Stein-Perlman, habryka4, neel-nanda-1, aysja, mesaoptimizer, neel-nanda-1, habryka4, kave, elifland, Zach Stein-Perlman

↑ comment by Zach Stein-Perlman · 2024-07-04T19:30:16.753Z · LW(p) · GW(p)

Please keep up the pressure on us

OK:

- You should publicly confirm that your old policy, "don't meaningfully advance the frontier with a public launch," has been replaced by your RSP, if that's true, and otherwise clarify your policy.

- You take credit for the LTBT (e.g. here) but you haven't [LW · GW] published [LW · GW] enough to show that it's effective. You should publish the Trust Agreement, clarify these ambiguities, and make accountability-y commitments like "if major changes happen to the LTBT we'll quickly tell the public."

- (Reminder that a year ago you committed to establish a bug bounty program (for model issues) or similar but haven't. But I don't think bug bounties are super important.)

- [Edit: bug bounties are also mentioned in your RSP—in association with ASL-2—but not explicitly committed to.]

- (Good job in many areas.)

↑ comment by Bird Concept (jacobjacob) · 2024-07-04T20:13:22.961Z · LW(p) · GW(p)

(Sidenote: it seems Sam was kind of explicitly asking to be pressured, so your comment seems legit :)

But I also think that, had Sam not done so, I would still really appreciate him showing up and responding to Oli's top-level post, and I think it should be fine for folks from companies to show up and engage with the topic at hand (NDAs), without also having to do a general AMA about all kinds of other aspects of their strategy and policies. If Zach's questions do get very upvoted, though, it might suggest there's demand for some kind of Anthropic AMA event.)

↑ comment by habryka (habryka4) · 2024-07-05T16:16:08.099Z · LW(p) · GW(p)

Anyone who has signed a non-disparagement agreement with Anthropic is free to state that fact (and we regret that some previous agreements were unclear on this point) [emphasis added]

This seems, as far as I can tell, a straightforward lie?

I am very confident that the non-disparagement agreements you asked at least one employee to sign were not ambiguous, and very clearly said that the non-disparagement clauses could not be mentioned.

To reiterate what I know to be true: Employees of Anthropic were asked to sign non-disparagement agreements with a commitment to never tell anyone about the presence of those non-disparagement agreements. There was no ambiguity in the agreements that I have seen.

@Sam McCandlish [LW · GW]: Please clarify what you meant to communicate by the above, which I interpreted as claiming that there was merely ambiguity in previous agreements about whether the non-disparagement agreements could be disclosed, which seems to me demonstrably false.

Replies from: neel-nanda-1, sam-mccandlish, lcmgcd

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-12T07:22:54.739Z · LW(p) · GW(p)

I can confirm that my concealed non-disparagement was very explicit that I could not discuss the existence or terms of the agreement; I don't see any way I could be misinterpreting this. (But I have now kindly been released from it! [LW(p) · GW(p)])

EDIT: It wouldn't massively surprise me if Sam just wasn't aware of its existence though

↑ comment by Sam McCandlish (sam-mccandlish) · 2024-07-09T01:43:13.716Z · LW(p) · GW(p)

We're not claiming that Anthropic never offered a confidential non-disparagement agreement. What we are saying is: everyone is now free to talk about having signed a non-disparagement agreement with us, regardless of whether there was a non-disclosure previously preventing it. (We will of course continue to honor all of Anthropic's non-disparagement and non-disclosure obligations, e.g. from mutual agreements.)

If you've signed one of these agreements and have concerns about it, please email hr@anthropic.com.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2024-07-09T02:15:04.352Z · LW(p) · GW(p)

Hmm, I feel like you didn't answer my question. Can you confirm that Anthropic has asked at least some employees to sign confidential non-disparagement agreements?

I think your previous comment pretty strongly implied that you think you did not do so (i.e. saying any previous agreements were merely "unclear" I think pretty clearly implies that none of them did include a non-ambiguous confidential non-disparagement agreement). I want it to be confirmed and on the record that you did, so I am asking you to say so clearly.

↑ comment by lemonhope (lcmgcd) · 2024-07-09T11:01:53.725Z · LW(p) · GW(p)

"Unclear on this point" means what you think it means and is not a L I E for a spokesperson to say in my book. You got the W here already

Replies from: habryka4

↑ comment by habryka (habryka4) · 2024-07-09T17:01:28.988Z · LW(p) · GW(p)

I really think the above was meant to imply that the non disparagement agreements were merely unclear on whether they were covered by a non disclosure clause (and I would be happy to take bets on how a randomly selected reader would interpret it).

My best guess is Sam was genuinely confused on this and that there are non disparagement agreements with Anthropic that clearly are not covered by such clauses.

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-04T21:31:35.646Z · LW(p) · GW(p)

EDIT: Anthropic have kindly released me personally from my entire concealed non-disparagement [LW(p) · GW(p)], not just made a specific safety exception. Their position on other employees remains unclear, but I take this as a good sign

If someone signed a non-disparagement agreement in the past and wants to raise concerns about safety at Anthropic, we welcome that feedback and will not enforce the non-disparagement agreement.

Thanks for this update! To clarify, are you saying that you WILL enforce existing non disparagements for everything apart from safety, but you are specifically making an exception for safety?

this routine use of non-disparagement agreements, even in these narrow cases, conflicts with our mission

Given this part, I find this surprising. Surely if you think it's bad to ask future employees to sign non disparagements you should also want to free past employees from them too?

↑ comment by aysja · 2024-07-06T00:00:19.702Z · LW(p) · GW(p)

This comment appears to respond to habryka, but doesn’t actually address what I took to be his two main points—that Anthropic was using NDAs to cover non-disparagement agreements, and that they were applying significant financial incentive to pressure employees into signing them.

We historically included standard non-disparagement agreements by default in severance agreements

Were these agreements subject to NDA? And were all departing employees asked to sign them, or just some? If the latter, what determined who was asked to sign?

↑ comment by mesaoptimizer · 2024-07-04T18:41:56.456Z · LW(p) · GW(p)

Anyone who has signed a non-disparagement agreement with Anthropic is free to state that fact (and we regret that some previous agreements were unclear on this point).

I'm curious as to why it took you (and therefore Anthropic) so long to make it common knowledge (or even public knowledge) that Anthropic used non-disparagement contracts as a standard and was also planning to change its standard agreements.

The right time to reveal this was when the OpenAI non-disparagement news broke, not after Habryka connects the dots and builds social momentum for scrutiny of Anthropic.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2024-07-04T19:13:32.995Z · LW(p) · GW(p)

that Anthropic used non-disparagement contracts as a standard and was also planning to change its standard agreements.

I do want to be clear that a major issue is that Anthropic used non-disparagement agreements that were covered by non-disclosure agreements. I think that's an additionally much more insidious thing to do, that contributed substantially to the harm caused by the OpenAI agreements, and I think is an important fact to include here (and also makes the two situations even more analogous).

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-05T09:10:41.423Z · LW(p) · GW(p)

Note, since this is a new and unverified account, that Jack Clark (Anthropic co-founder) confirmed on Twitter that the parent comment is the official Anthropic position https://x.com/jackclarkSF/status/1808975582832832973

↑ comment by habryka (habryka4) · 2024-07-04T17:55:41.840Z · LW(p) · GW(p)

Thank you for responding! (I have more comments and questions but figured I would shoot off one quick question which is easy to ask)

We've since recognized that this routine use of non-disparagement agreements, even in these narrow cases, conflicts with our mission

Can you clarify what you mean by "even in these narrow cases"? If I am understanding you correctly, you are saying that you were including a non-disparagement clause by default in all of your severance agreements, which sounds like the opposite of narrow (edit: though as Robert points out it depends on what fraction of employees get offered any kind of severance, which might be most, or might be very few).

I agree that it would have technically been possible for you to also include such an agreement on start of employment, but that would have been very weird, and not even OpenAI did that.

I think using the sentence "even in these narrow cases" seems inappropriate given that (if I am understanding you correctly) all past employees were affected by these agreements. I think it would be good to clarify what fraction of past employees were actually offered these agreements.

↑ comment by RobertM (T3t) · 2024-07-04T19:18:00.464Z · LW(p) · GW(p)

Severance agreements typically aren't offered to all departing employees, but usually only those that are fired or laid off. We know that not all past employees were affected by these agreements, because Ivan claims [LW(p) · GW(p)] to not have been offered such an agreement, and he left[1] in mid-2023, which was well before June 1st.

[1] Presumably of his own volition, hence no offered severance agreement with non-disparagement clauses.

↑ comment by habryka (habryka4) · 2024-07-04T19:25:21.567Z · LW(p) · GW(p)

Ah, fair, that would definitely make the statement substantially more accurate.

@Sam McCandlish [LW · GW]: Could you clarify whether severance agreements were also offered to voluntarily departing employees, and if so, under which conditions?

↑ comment by kave · 2024-07-04T19:55:46.245Z · LW(p) · GW(p)

To expand on my "that's a crux": if the non-disparagement+NDA clauses are very standard, such that they were included in a first draft by an attorney without prompting and no employee ever pushed back, then I would think this was somewhat less bad.

It would still be somewhat bad, because Anthropic should be proactive about not making those kinds of mistakes. I am confused about what level of perfection to demand from Anthropic, considering the stakes.

And if non-disparagement is often used, but Anthropic leadership either specified its presence or its form, that would seem quite bad to me, because mistakes of commission here are more evidence of poor decisionmaking than mistakes of omission. If Anthropic leadership decided to keep the clause when a departing employee wanted to remove the clause, that would similarly seem quite bad to me.

Replies from: nwinter

↑ comment by nwinter · 2024-07-05T19:43:09.220Z · LW(p) · GW(p)

I think that both these clauses are very standard in such agreements. Both severance letter templates I was given for my startup, one from a top-tier SV investor's HR function and another from a top-tier SV law firm, had both clauses. When I asked Claude, it estimated 70-80% of startups would have a similar non-disparagement clause and 80-90% would have a similar confidentiality-of-this-agreement's-terms clause. The three top Google hits for "severance agreement template" all included those clauses.

These generally aren't malicious. Terminations get messy and departing employees often have a warped or incomplete picture of why they were terminated–it's not a good idea to tell them all those details, because that adds liability, and some of those details are themselves confidential about other employees. Companies view the limitation of liability from release of various wrongful termination claims as part of the value they're "purchasing" by offering severance–not because those claims would succeed, but because it's expensive to explain in court why they're justified. But the expense disgruntled ex-employees can cause is not just legal, it's also reputational. You usually don't know which ex-employee will get salty and start telling their side of the story publicly, where you can't easily respond with your side without opening up liability. Non-disparagement helps cover that side of it. And if you want to disparage the company, in a standard severance letter that doesn't claw back vested equity, hey, you're free to just not sign it–it's likely only a bonus few weeks/months' salary that you didn't yet earn on the line, not the value of all the equity you had already vested. We shouldn't conflate the OpenAI situation with Anthropic's given the huge difference in stakes.

Confidentiality clauses are standard because they prevent other employees from learning the severance terms and potentially demanding similar treatment in potentially dissimilar situations, thus helping the company control costs and negotiations in future separations. They typically cover the entire agreement and are mostly about the financial severance terms. I imagine that departing employees who cared could've asked the company for a carve-out on the confidentiality for the non-disparagement clause as a very minor point of negotiation.

It's great that Anthropic is taking steps to make these docs more departing-employee-friendly. I wouldn't read too much into the fact that the docs were like this in the first place (as this wasn't on cultural radars until very recently) or that they weren't immediately changed (legal stuff takes time and this was much smaller in scope than in the OpenAI case).

Example clauses in default severance letter from my law firm:

7. Non-Disparagement. You agree that you will not make any false, disparaging or derogatory statements to any media outlet, industry group, financial institution or current or former employees, consultants, clients or customers of the Company, regarding the Company, including with respect to the Company, its directors, officers, employees, agents or representatives or about the Company's business affairs and financial condition.

11. Confidentiality. To the extent permitted by law, you understand and agree that as a condition for payment to you of the severance benefits herein described, the terms and contents of this letter agreement, and the contents of the negotiations and discussions resulting in this letter agreement, shall be maintained as confidential by you and your agents and representatives and shall not be disclosed except to the extent required by federal or state law or as otherwise agreed to in writing by the Company.

↑ comment by elifland · 2024-07-04T19:33:25.368Z · LW(p) · GW(p)

And internally, we have an anonymous RSP non-compliance reporting line so that any employee can raise concerns about issues like this without any fear of retaliation.

Are you able to elaborate on how this works? Are there any other details about this available publicly? I couldn't find more detail via a quick search.

Some specific qs I'm curious about: (a) who handles the anonymous complaints, (b) what is the scope of behavior explicitly (and implicitly re: cultural norms) covered here, (c) handling situations where a report would deanonymize the reporter (or limit them to a small number of people)?

Replies from: Zach Stein-Perlman

↑ comment by Zach Stein-Perlman · 2024-07-04T19:38:19.604Z · LW(p) · GW(p)

Anthropic has not published details. See discussion here [LW(p) · GW(p)]. (I weakly wish they would; it's not among my high-priority asks for them.)

Replies from: zac-hatfield-dodds

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-07-04T20:16:53.376Z · LW(p) · GW(p)

OK, let's imagine I had a concern about RSP noncompliance, and felt that I needed to use this mechanism.

(in reality I'd just post in whichever slack channel seemed most appropriate; this happens occasionally for "just wanted to check..." style concerns and I'm very confident we'd welcome graver reports too. Usually that'd be a public channel; for some compartmentalized stuff it might be a private channel and I'd DM the team lead if I didn't have access. I think we have good norms and culture around explicitly raising safety concerns and taking them seriously.)

As I understand it, I'd:

- Remember that we have such a mechanism and bet that there's a shortcut link. Fail to remember the shortlink name (reports? violations?) and search the list of "rsp-" links; ah, it's rsp-noncompliance. (just did this, and added a few aliases)

- That lands me on the policy PDF, which explains in two pages the intended scope of the policy, who's covered, the procedure, etc. and contains a link to the third-party anonymous reporting platform. That link is publicly accessible, so I could e.g. make a report from a non-work device or even after leaving the company.

- I write a report on that platform describing my concerns[1], optionally uploading documents etc. and get a random password so I can log in later to give updates, send and receive messages, etc.

- The report by default goes to our Responsible Scaling Officer, currently Sam McCandlish. If I'm concerned about the RSO or don't trust them to handle it, I can instead escalate to the Board of Directors (current DRI Daniela Amodei).

- Investigation and resolution obviously depends on the details of the noncompliance concern.

There are other (pretty standard) escalation pathways for concerns about things that aren't RSP noncompliance. There's not much we can do about the "only one person could have made this report" problem beyond the included strong commitments to non-retaliation, but if anyone has suggestions I'd love to hear them.

[1] I clicked through just now to the point of cursor-in-textbox, but not submitting a nuisance report.

↑ comment by William_S · 2024-07-05T17:53:58.045Z · LW(p) · GW(p)

Good that it's clear who it goes to, though if I were an Anthropic employee I'd want an option to escalate to a board member who isn't Dario or Daniela, in case I had concerns related to the CEO.

Replies from: zac-hatfield-dodds

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-07-05T20:04:54.516Z · LW(p) · GW(p)

Makes sense - if I felt I had to use an anonymous mechanism, I can see how contacting Daniela about Dario might be uncomfortable. (Although to be clear I actually think that'd be fine, and I'd also have to think that Sam McCandlish as responsible scaling officer wouldn't handle it)

If I was doing this today I guess I'd email another board member; and I'll suggest that we add that as an escalation option.

Replies from: Raemon

↑ comment by Raemon · 2024-07-05T20:31:23.692Z · LW(p) · GW(p)

Are there currently board members who are meaningfully separated in terms of incentive-alignment with Daniela or Dario? (I don't know that it's possible for you to answer in a way that'd really resolve my concerns, given what sort of information is possible to share. But, "is there an actual way to criticize Dario and/or Daniela in a way that will realistically be given a fair hearing by someone who, if appropriate, could take some kind of action" is a crux of mine)

Replies from: William_S, zac-hatfield-dodds

↑ comment by William_S · 2024-07-05T23:56:55.319Z · LW(p) · GW(p)

Absent evidence to the contrary, for any organization one should assume board members were basically selected by the CEO. So it's hard to get assurance about true independence, but it seems good to at least talk to someone who isn't a family member/close friend.

Replies from: Zach Stein-Perlman

↑ comment by Zach Stein-Perlman · 2024-07-06T00:03:07.743Z · LW(p) · GW(p)

(Jay Kreps was formally selected by the LTBT. I think Yasmin Razavi was selected by the Series C investors. It's not clear how involved the leadership/Amodeis were in those selections. The three remaining members of the LTBT appear independent, at least on cursory inspection.)

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-07-06T00:32:52.038Z · LW(p) · GW(p)

I think that personal incentives are an unhelpful way to try to think about or predict board behavior (for Anthropic and in general), but you can find the current members of our board listed here.

Is there an actual way to criticize Dario and/or Daniela in a way that will realistically be given a fair hearing by someone who, if appropriate, could take some kind of action?

For whom to criticize him/her/them about what? What kind of action are you imagining? For anything I can imagine actually coming up, I'd be personally comfortable raising it directly with either or both of them in person or in writing, and believe they'd give it a fair hearing as well as appropriate follow-up. There are also standard company mechanisms that many people might be more comfortable using (talk to your manager or someone responsible for that area; ask a maybe-anonymous question in various fora; etc). Ultimately executives are accountable to the board, which will be majority appointed by the long-term benefit trust from late this year.

↑ comment by Zach Stein-Perlman · 2024-07-04T19:22:20.783Z · LW(p) · GW(p)

Re 3 (and 1): yay.

If I was in charge of Anthropic I just wouldn't use non-disparagement.

↑ comment by Sam Marks (samuel-marks) · 2024-06-30T19:12:01.350Z · LW(p) · GW(p)

Anthropic has asked employees

[...]

Anthropic has offered at least one employee

As a point of clarification: is it correct that the first quoted statement above should be read as "at least one employee" in line with the second quoted statement? (When I first read it, I parsed it as "all employees" which was very confusing since I carefully read my contract both before signing and a few days ago (before posting this comment [LW(p) · GW(p)]) and I'm pretty sure there wasn't anything like this in there.)

Replies from: Vladimir_Nesov, habryka4

↑ comment by Vladimir_Nesov · 2024-06-30T21:22:58.064Z · LW(p) · GW(p)

(I'm a full-time employee at Anthropic.)

I carefully read my contract both before signing and a few days ago [...] there wasn't anything like this in there.

Current employees of OpenAI also wouldn't yet have signed or even known about the non-disparagement agreement that is part of "general release" paperwork on leaving the company. So this is only evidence about some ways this could work at Anthropic, not others.

↑ comment by habryka (habryka4) · 2024-06-30T21:18:48.575Z · LW(p) · GW(p)

Yep, both should be read as "at least one employee", sorry for the ambiguity in the language.

Replies from: DanielFilan

↑ comment by DanielFilan · 2024-06-30T21:24:15.027Z · LW(p) · GW(p)

FWIW I recommend editing OP to clarify this.

Replies from: neel-nanda-1

↑ comment by Neel Nanda (neel-nanda-1) · 2024-06-30T22:34:49.111Z · LW(p) · GW(p)

Agreed, I think it's quite confusing as is

Replies from: habryka4

↑ comment by habryka (habryka4) · 2024-06-30T22:57:36.237Z · LW(p) · GW(p)

Added an "at least some", which I hope clarifies.

↑ comment by Zach Stein-Perlman · 2024-06-30T22:15:21.206Z · LW(p) · GW(p)

I am disappointed. Using nondisparagement agreements seems bad to me, especially if they're covered by non-disclosure agreements, especially if you don't announce that you might use this.

My ask-for-Anthropic now is to explain the contexts in which they have asked or might ask people to incur nondisparagement obligations, and if those are bad, release people and change policy accordingly. And even if nondisparagement obligations can be reasonable, I fail to imagine how non-disclosure obligations covering them could be reasonable, so I think Anthropic should at least do away with the no-disclosure-of-nondisparagement obligations.

↑ comment by Bird Concept (jacobjacob) · 2024-07-01T01:11:35.472Z · LW(p) · GW(p)

Does anyone from Anthropic want to explicitly deny that they are under an agreement like this?

(I know the post talks about some and not necessarily all employees, but am still interested).

Replies from: ivan-vendrov, zac-hatfield-dodds, T3t, aysja

↑ comment by Ivan Vendrov (ivan-vendrov) · 2024-07-01T05:33:14.210Z · LW(p) · GW(p)

I left Anthropic in June 2023 and am not under any such agreement.

EDIT: nor was any such agreement or incentive offered to me.

Replies from: Vladimir_Nesov

↑ comment by Vladimir_Nesov · 2024-07-01T16:02:40.162Z · LW(p) · GW(p)

I left [...] and am not under any such agreement.

Neither is Daniel Kokotajlo. Context and wording strongly suggest that what you mean is that you weren't ever offered paperwork with such an agreement and incentives to sign it, but there remains a slight ambiguity on this crucial detail.

Replies from: ivan-vendrov

↑ comment by Ivan Vendrov (ivan-vendrov) · 2024-07-01T16:31:32.264Z · LW(p) · GW(p)

Correct, I was not offered such paperwork nor any incentives to sign it. Edited my post to include this.

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-07-01T05:55:12.586Z · LW(p) · GW(p)

I am a current Anthropic employee, and I am not under any such agreement, nor has any such agreement ever been offered to me.

If asked to sign a self-concealing NDA or non-disparagement agreement, I would refuse.

↑ comment by RobertM (T3t) · 2024-07-01T01:36:41.398Z · LW(p) · GW(p)

Did you see Sam's comment [LW(p) · GW(p)]?

↑ comment by William_S · 2024-07-01T18:59:50.564Z · LW(p) · GW(p)

I agree that this kind of legal contract is bad, and Anthropic should do better. I think there are a number of aggravating factors which made the OpenAI situation extraordinarily bad, and I'm not sure how much these might obtain regarding Anthropic (at least one comment from another departing employee about not being offered this kind of contract suggests the practice is less widespread).

- amount of money at stake

- taking money, equity or other things the employee believed they already owned if the employee doesn't sign the contract, vs. offering them something new (IANAL but in some cases, this could be a felony "grand theft wages" under California law if a threat to withhold wages for not signing a contract is actually carried out; what kinds of equity count as wages would be a complex legal question)

- is this offered to everyone, or only under circumstances where there's a reasonable justification?

- is this only offered when someone is fired or also when someone resigns?

- to what degree are the policies of offering contracts concealed from employees?

- if someone asks to obtain legal advice and/or negotiate before signing, does the company allow this?

- if this becomes public, does the company try to deflect/minimize/only address issues that are made public, or do they fix the whole situation?

- is this close to "standard practice" (which doesn't make it right, but makes it at least seem less deliberately malicious), or is it worse than standard practice?

- are there carveouts that reduce the scope of the non-disparagement clause (explicitly allow some kinds of speech, overriding the non-disparagement)?

- are there substantive concerns that the employee has at the time of signing the contract, that the agreement would prevent discussing?

- are there other ways the company could retaliate against an employee/departing employee who challenges the legality of the contract?

I think with termination agreements on being fired there's often 1. some amount of severance offered 2. a clause that says "the terms and monetary amounts of this agreement are confidential" or similar. I don't know how often this also includes non-disparagement. I expect that most non-disparagement agreements don't have a term or limits on what is covered.

I think a steelman of this kind of contract is: Suppose you fire someone, believe you have good reasons to fire them, and you think that them loudly talking about how it was unfair that you fired them would unfairly harm your company's reputation. Then it seems somewhat reasonable to offer someone money in exchange for "don't complain about being fired". The person who was fired can then decide whether talking about it is worth more than the money being offered.

However, you could accomplish this with a much more limited contract, ideally one that lets you disclose "I signed a legal agreement in exchange for money to not complain about being fired", and doesn't cover cases where "years later, you decide the company is doing the wrong thing based on public information and want to talk about that publicly" or similar.

I think it is not in the nature of most corporate lawyers to think about "is this agreement giving me too much power?" and most employees facing such an agreement just sign it without considering negotiating or challenging the terms.

For any future employer, I will ask about their policies for termination contracts before I join (as this is when you have the most leverage, if they give you an offer they want to convince you to join).

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-12T07:24:45.533Z · LW(p) · GW(p)

This is true. I signed a concealed non-disparagement when I left Anthropic in mid 2022. I don't have clear evidence this happened to anyone else (but that's not strong evidence of absence). More details here [LW(p) · GW(p)]

EDIT: I should also clarify that I personally don't think Anthropic acted that badly, and recommend reading about what actually happened before forming judgements. I do not think I am the person referred to in Habryka's comment.

↑ comment by ChristianKl · 2024-06-30T09:59:42.487Z · LW(p) · GW(p)

In the case of OpenAI most of the debate was about ex-employees. Are we talking about current employees or ex-employees here?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-06-30T17:27:29.149Z · LW(p) · GW(p)

I am including both in this reference class (i.e. when I say employee above, it refers to both present employees and employees who left at some point). I am intentionally being broad here to preserve more anonymity of my sources.

↑ comment by Bird Concept (jacobjacob) · 2024-07-01T01:13:46.443Z · LW(p) · GW(p)

Not sure how to interpret the "agree" votes on this comment. If someone is able to share that they agree with the core claim because of object-level evidence, I am interested. (Rather than agreeing with the claim that this state of affairs is "quite sad".)

↑ comment by Dagon · 2024-06-30T21:12:18.291Z · LW(p) · GW(p)

A LOT depends on the details of WHEN the employees make the agreement, and the specifics of duration and remedy, and the (much harder to know) apparent willingness to enforce on edge cases.

"significant financial incentive to promise" is hugely different from "significant financial loss for choosing not to promise". MANY companies have such things in their contracts, and they're a condition of employment. And they're pretty rarely enforced. That's a pretty significant incentive, but it's prior to investment, so it's nowhere near as bad.

↑ comment by Jacob Pfau (jacob-pfau) · 2024-06-30T18:45:13.616Z · LW(p) · GW(p)

A pre-existing market on this question https://manifold.markets/causal_agency/does-anthropic-routinely-require-ex?r=SmFjb2JQZmF1

↑ comment by Zach Stein-Perlman · 2024-06-30T21:50:52.720Z · LW(p) · GW(p)

What's your median-guess for the number of times Anthropic has done this?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-06-30T22:53:01.517Z · LW(p) · GW(p)

(Not answering this question since I think it would leak too many bits on confidential stuff. In general I will be a bit hesitant to answer detailed questions on this, or I might take a long while to think about what to say before I answer, which I recognize is annoying, but I think is the right tradeoff in this situation)

↑ comment by Zane · 2024-07-01T06:55:01.028Z · LW(p) · GW(p)

I'm kind of concerned about the ethics of someone signing a contract and then breaking it to anonymously report what's going on (if that's what your private source did). I think there's value from people being able to trust each other's promises about keeping secrets, and as much as I'm opposed to Anthropic's activities, I'd nevertheless like to preserve a norm of not breaking promises.

Can you confirm or deny whether your private information comes from someone who was under a contract not to give you that private information? (I completely understand if the answer is no.)

Replies from: habryka4, Benito↑ comment by habryka (habryka4) · 2024-07-01T07:12:29.776Z · LW(p) · GW(p)

(Not going to answer this question for confidentiality/glomarization reasons)

↑ comment by Ben Pace (Benito) · 2024-07-10T20:03:52.984Z · LW(p) · GW(p)

I think this is a reasonable question to ask. I will note that in this case, if your guess is right about what happened, the breaking of the agreement is something that it turned out the counterparty endorsed, or at least, after the counterparty became aware of the agreement, they immediately lifted it.

I still think there's something to maintaining all agreements regardless of context, but I do genuinely think it matters here if you (accurately) expect the entity you've made the secret agreement with would likely retract it if they found out about it.

(Disclaimer that I have no private info about this specific situation.)

comment by habryka (habryka4) · 2025-01-14T06:37:33.477Z · LW(p) · GW(p)

It's the last 6 hours of the fundraiser and we have met our $2M goal! This was roughly the "we will continue existing and not go bankrupt" threshold, which was the most important one to hit.

Thank you so much to everyone who made it happen. I really did not expect that we would end up being able to raise this much funding without large donations from major philanthropists, and I am extremely grateful to have so much support from such a large community.

Let's make the last few hours in the fundraiser count, and then me and the Lightcone team will buckle down and make sure all of these donations were worth it.

Replies from: davekasten, AliceZ↑ comment by davekasten · 2025-01-14T15:23:53.057Z · LW(p) · GW(p)

Ok, but you should leave the donation box up -- link now seems to not work? I bet there would be at least several $K USD of donations from folks who didn't remember to do it in time.

Replies from: habryka4↑ comment by habryka (habryka4) · 2025-01-14T15:51:20.476Z · LW(p) · GW(p)

Oops, you're right, fixed. That was just an accident.

Replies from: davekasten↑ comment by davekasten · 2025-01-14T19:44:39.505Z · LW(p) · GW(p)

Note for posterity that there has been at least $15K of donations since this got turned back on -- You Can Just Report Bugs

Replies from: habryka4↑ comment by habryka (habryka4) · 2025-01-14T21:09:20.760Z · LW(p) · GW(p)

Those were mostly already in-flight, so not counterfactual (and also the fundraising post still has the donation link at the top), but I do expect at least some effect!

Replies from: davekasten↑ comment by davekasten · 2025-01-15T00:22:19.990Z · LW(p) · GW(p)

Oh, fair enough then, I trust your visibility into this. Nonetheless one Should Can Just Report Bugs

↑ comment by ZY (AliceZ) · 2025-01-16T05:06:33.452Z · LW(p) · GW(p)

Out of curiosity - what was the time span for this raise that achieved this goal/when did it first start again? Was it 2 months ago?

Replies from: habryka4↑ comment by habryka (habryka4) · 2025-01-16T05:55:03.955Z · LW(p) · GW(p)

Yep, when the fundraising post went live, i.e. November 29th.

Replies from: kave

comment by habryka (habryka4) · 2024-12-07T22:45:53.601Z · LW(p) · GW(p)

Reputation is lazily evaluated

When evaluating the reputation of your organization, community, or project, many people flock to surveys in which you ask randomly selected people what they think of your thing, or what their attitudes towards your organization, community or project are.

If you do this, you will very reliably get back data that looks like people are indifferent to you and your projects, and your results will probably be dominated by extremely shallow things like "do the words in your name evoke positive or negative associations".

People largely only form opinions of you or your projects when they have some reason to do that, like trying to figure out whether to buy your product, or join your social movement, or vote for you in an election. You basically never care about what people think about you while engaging in activities completely unrelated to you; you care about what people will do when they have to take any action that is related to your goals. But the former is exactly what you are measuring in attitude surveys.

As an example of this (used here for illustrative purposes, and what caused me to form strong opinions on this, but not intended as the central point of this post): Many leaders in the Effective Altruism community ran various surveys after the collapse of FTX trying to understand what the reputation of "Effective Altruism" is. The results [EA · GW] were basically always the same: People mostly didn't know what EA was, and had vaguely positive associations with the term when asked. The people who had recently become familiar with it (which weren't that many) did lower their opinions of EA, but the vast majority of people did not (because they mostly didn't know what it was).

As far as I can tell, these surveys left most EA leaders thinking that the reputational effects of FTX were limited. After all, most people never heard about EA in the context of FTX, and seemed to mostly have positive associations with the term, and the average like or dislike in surveys barely budged. In reflections at the time, conclusions looked like this [EA(p) · GW(p)]:

- The fact that most people don't really care much about EA is both a blessing and a curse. But either way, it's a fact of life; and even as we internally try to learn what lessons we can from FTX, we should keep in mind that people outside EA mostly can't be bothered to pay attention.

- An incident rate in the single digit percents means that most community builders will have at least one example of someone raising FTX-related concerns—but our guess is that negative brand-related reactions are more likely to come from things like EA's perceived affiliation with tech or earning to give than FTX.

- We have some uncertainty about how well these results generalize outside the sample populations. E.g. we have heard claims that people who work in policy were unusually spooked by FTX. That seems plausible to us, though Ben would guess that policy EAs similarly overestimate the extent to which people outside EA care about EA drama.

Yes, my best understanding is still that people mostly don't know what EA is, the small fraction that do mostly have a mildly positive opinion, and that neither of these points were affected much by FTX.[1]

This, I think, was an extremely costly mistake to make. Since then, practically all metrics of the EA community's health and growth have sharply declined [EA · GW], and the extremely large and negative reputational effects have become clear.

Most programmers are familiar with the idea of a "lazily evaluated variable" - a value that isn't computed until the exact moment you try to use it. Instead of calculating the value upfront, the system maintains just enough information to be able to calculate it when needed. If you never end up using that value, you never pay the computational cost of calculating it. Similarly, most people don't form meaningful opinions about organizations or projects until the moment they need to make a decision that involves that organization. Just as a lazy variable suddenly gets evaluated when you first try to read its value, people's real opinions about projects don't materialize until they're in a position where that opinion matters - like when deciding whether to donate, join, or support the project's initiatives.

Reputation is lazily evaluated. People conserve their mental energy, time, and social capital by not forming detailed opinions about things until those opinions become relevant to their decisions. When surveys try to force early evaluation of these "lazy" opinions, they get something more like a placeholder value than the actual opinion that would form in a real decision-making context.
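For readers who have not run into lazy evaluation before, here is a minimal Python sketch of the analogy. Everything in it is made up for illustration: the `Lazy` class, its `peek` and `force` methods, and the `form_opinion` function are hypothetical names, not part of any library. `peek` plays the role of an attitude survey (it never triggers the costly computation and just returns a placeholder), while `force` plays the role of a real decision (it pays the cost once and caches the result).

```python
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")


class Lazy(Generic[T]):
    """A value that is only computed the first time it is actually needed."""

    def __init__(self, compute: Callable[[], T], placeholder: T) -> None:
        self._compute = compute
        self._placeholder = placeholder
        self._value: Optional[T] = None
        self._evaluated = False

    def peek(self) -> T:
        """Survey-style read: never triggers the costly computation."""
        return self._value if self._evaluated else self._placeholder

    def force(self) -> T:
        """Decision-time read: pays the cost once, then reuses the cached result."""
        if not self._evaluated:
            self._value = self._compute()
            self._evaluated = True
        return self._value


calls = []


def form_opinion() -> str:
    # Stands in for the costly part: asking friends, searching online, etc.
    calls.append("computed")
    return "considered opinion"


opinion = Lazy(form_opinion, placeholder="vague association with the name")

survey_answer = opinion.peek()        # placeholder; nothing has been computed yet
decision_answer = opinion.force()     # the real opinion is formed here
later_survey_answer = opinion.peek()  # now reflects the formed opinion
```

The point of the sketch is only that the first `peek` and the later `force` can return very different things, and that the expensive step runs exactly once, at the moment a decision requires it.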

This computation is not purely cognitive. As people encounter a product, organization or community that they are considering doing something with, they will ask their friends whether they have any opinions, perform online searches, and generally seek out information to help them with whatever decision they are facing. This is part of the reason why this metaphorical computation is costly and put off until it's necessary.

So when you are trying to understand what people think of you, or how people's opinions of you are changing, pay much more attention to the attitudes of people who have recently put in the effort to learn about you, or were facing some decision related to you, and so are more representative of where people tend to end up when they are in a similar position. These will be much better indicators of your actual latent reputation than what happens when you ask people on a survey.

For the EA surveys, these indicators looked very bleak:

"Results demonstrated that FTX had decreased satisfaction by 0.5-1 points on a 10-point scale within the EA community"

"Among those aware of EA, attitudes remain positive and actually maybe increased post-FTX —though they were lower (d = -1.5, with large uncertainty) among those who were additionally aware of FTX."

"Most respondents reported continuing to trust EA organizations, though over 30% said they had substantially lost trust in EA public figures or leadership."

If various people in EA had paid attention to these, instead of to the approximately meaningless placeholder variables that you get when you ask people what they think of you without actually getting them to perform the costly computation associated with forming an opinion of you, I think they would have made substantially better predictions.

Replies from: Buck, GAA, Seth Herd, Zach Stein-Perlman, yanni↑ comment by Buck · 2024-12-08T17:14:41.219Z · LW(p) · GW(p)

I don't like the fact that this essay is a mix of an insightful generic argument and a contentious specific empirical claim that I don't think you support strongly; it feels like the rhetorical strength of the former lends credence to the latter in a way that isn't very truth-tracking.

I'm not claiming you did anything wrong here, I just don't like something about this dynamic.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-08T19:03:41.821Z · LW(p) · GW(p)

I do think the EA example is quite good on an illustrative level. It really strikes me as a rare case where we have an enormous pile of public empirical evidence (which is linked in the post) and it also seems by now really quite clear from a common-sense perspective.

I don't think it makes sense to call this point "contentious". I think it's about as clear as these cases go. At least off the top of my head I can't think of an example that would have been clearer (maybe if you had some social movement that more fully collapsed and where you could do a retrospective root cause analysis, but it's extremely rare to have as clear of a natural experiment as the FTX one). I do think it's political in our local social environment, and so is harder to talk about, so I agree on that dimension a different example would be better.

I do think it would be good/nice to add an additional datapoint, but I also think this would risk being misleading. The point about reputation being lazily evaluated is mostly true from common-sense observations and logical reasoning, and the EA point is mostly trying to provide evidence for "yes, this is a real mistake that real people make". Even if you dispute EA's reputation having gotten worse, I think the quotes from people above are still invalid and would mislead people (and I had this model before we observed the empirical evidence, and am writing it up because people told me they found it helpful for thinking through the FTX stuff as it was happening).

If I had a lot more time, I think the best thing to do would be to draw on some literature on polling errors or marketing, since the voting situation seems quite analogous. This might even get us some estimates of how strong the correlation between unevaluated and evaluated attitudes is, and how much they diverge for different levels of investment, if there exists any measurable one, and that would be cool.

Replies from: Buck↑ comment by Buck · 2024-12-08T19:27:48.596Z · LW(p) · GW(p)

I am persuaded by neither the common sense nor the empirical evidence for the point about EA. To be clear (as I've said to you privately) I'm not at all trying to imply that I specifically disagree with you, I'm just saying that the evidence you've provided doesn't persuade me of your claims.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-08T19:37:36.828Z · LW(p) · GW(p)

Yeah, makes sense. I don't think I am providing a full paper trail of evidence one can easily travel along, but I would take bets you would come to agree with it if you did spend the effort to look into it.

↑ comment by Guive (GAA) · 2024-12-08T00:19:00.381Z · LW(p) · GW(p)

This is good. Please consider making it a top level post.

Replies from: metachirality↑ comment by metachirality · 2024-12-08T18:56:05.744Z · LW(p) · GW(p)

It ought to be a top-level post on the EA forum as well.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-08T19:22:58.182Z · LW(p) · GW(p)

(Someone is welcome to link post, but indeed I am somewhat hoping to avoid posting over there as much, as I find it reliably stressful in mostly unproductive ways)

↑ comment by Seth Herd · 2024-12-09T15:25:24.049Z · LW(p) · GW(p)

There's another important effect here: a laggy time course of public opinion. I saw more popular press articles about EA than I ever have, linking SBF to them, but with a large lag after the events. So the early surveys showing a small effect happened before public conversation really bounced around the idea that SBF's crimes were motivated by EA utilitarian logic. The first time many people would remember hearing about EA would be from those later articles and discussions.

The effect probably amplified considerably over time as that hypothesis bounced through public discourse.

The original point stands but this is making the effect look much larger in this case.

Replies from: hauke-hillebrandt↑ comment by Hauke Hillebrandt (hauke-hillebrandt) · 2024-12-10T11:05:09.634Z · LW(p) · GW(p)

This lag effect might amplify a lot more when big budget movies about SBF/FTX come out.

↑ comment by Zach Stein-Perlman · 2024-12-08T03:23:43.626Z · LW(p) · GW(p)

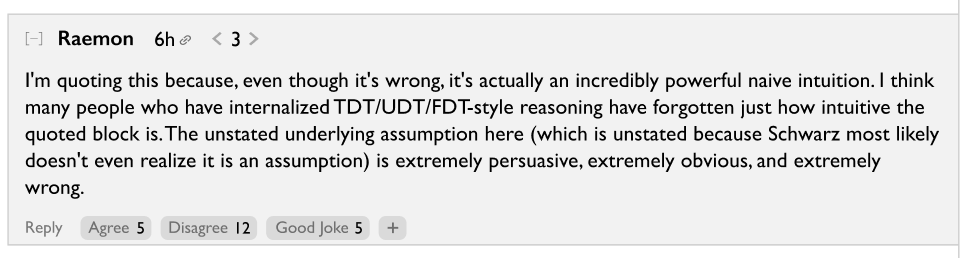

Edit 2: after checking, I now believe the data strongly suggest FTX had a large negative effect on EA community metrics. (I still agree with Buck: "I don't like the fact that this essay is a mix of an insightful generic argument and a contentious specific empirical claim that I don't think you support strongly; it feels like the rhetorical strength of the former lends credence to the latter in a way that isn't very truth-tracking." And I disagree with habryka's claims that the effect of FTX is obvious.)

practically all metrics of the EA community's health and growth have sharply declined [EA · GW], and the extremely large and negative reputational effects have become clear.

I want more evidence on your claim that FTX had a major effect on EA reputation. Or: why do you believe it?

Edit: relevant thing habryka said that I didn't quote above:

Replies from: habryka4

For the EA surveys, these indicators looked very bleak:

"Results demonstrated that FTX had decreased satisfaction by 0.5-1 points on a 10-point scale within the EA community"

"Among those aware of EA, attitudes remain positive and actually maybe increased post-FTX —though they were lower (d = -1.5, with large uncertainty) among those who were additionally aware of FTX."

"Most respondents reported continuing to trust EA organizations, though over 30% said they had substantially lost trust in EA public figures or leadership."

↑ comment by habryka (habryka4) · 2024-12-08T08:49:12.458Z · LW(p) · GW(p)

Practically all growth metrics are [EA · GW] down [EA · GW] (and have indeed turned negative on most measures), a substantial fraction [EA(p) · GW(p)] of core contributors are distancing themselves from the EA affiliation, surveys among EA community builders report EA-affiliation as a major recurring obstacle[1], and many of the leaders who previously thought it wasn't a big deal now concede that it was/is a huge deal.

Also, informally, recruiting for things like EA Fund managers, or getting funding for EA Funds has become substantially harder. EA leadership positions appear to be filled by less competent people, and in most conversations I have with various people who have been around for a while, people seem to both express much less personal excitement or interest in identifying or championing anything EA-related, and report the same for most other people.

Related to the concepts in my essay, when measured the reputational differential also seems to reliably point towards people updating negatively towards EA as they learn more about EA (which shows up in the quotes you mentioned, and which more recently shows up in the latest Pulse survey [EA · GW], though I mostly consider that survey uninformative for roughly the reasons outlined in this post).

- ^

As reported to me by someone I trust working in the space recently. I don't have a link at hand.

↑ comment by angelinahli · 2024-12-10T19:40:05.137Z · LW(p) · GW(p)

Hey! Sorry for the silence, I was feeling a bit stressed by this whole thread, and so I wanted to step away and think about this before responding. I’ve decided to revert the dashboard back to its original state & have republished the stale data. I did some quick/light data checks but prioritised getting this out fast. For transparency: I’ve also added stronger context warnings and I took down the form to access our raw data in sheet form but intend to add it back once we’ve fixed the data. It’s still on our stack to Actually Fix this at some point but we’re still figuring out the timing on that.

On reflection, I think I probably made the wrong call here (although I still feel a bit sad / misunderstood but 🤷🏻♀️). It was a unilateral + lightly held call I made in the middle of my work day — like truly I spent 5 min deciding this & maybe another ~15 updating the thing / leaving a comment. I think if I had a better model for what people wanted from the data, I would have made a different call. I’ve updated on “huh, people really care about not deleting data from the internet!” — although I get that the reaction here might be especially strong because it’s about CEA (vs the general case). Sorry, I made a mistake.

Future facing thoughts: I generally hold myself to a higher standard for accuracy when putting data on the internet, but I also do value not bottlenecking people in investigating questions that feel important to me (e.g. qs about EA growth rates), so to be clear I’m prioritizing the latter goal right now. I still in general stand by, “what even is the point of my job if I don’t stand by the data I communicate to others?” :) I want people to be able to trust that the work they see me put out in the world has been red-teamed & critiqued before publication.

Although I’m sad this caused an unintended kerfuffle, it’s a positive update for me that “huh wow, people actually care a lot that this project is kept alive!”. This honestly wasn’t obvious to me — this is a low traffic website that I worked on a while ago, and don’t hear about much. Oli says somewhere that he’s seen it linked to “many other times” in the past year, but TBH no one has flagged that to me (I’ve been busy with other projects). I’m still glad that we made this thing in the first place and am glad people find the data interesting / valuable (for general CEA transparency reasons, as an input to these broader questions about EA, etc.). I’ll probably prioritize maintenance on this higher in the future.

Now that the data is back up I’m going to go back to ignoring this thread!

Replies from: angelinahli, habryka4↑ comment by angelinahli · 2024-12-10T23:13:52.451Z · LW(p) · GW(p)

[musing] Actually another mistake here which I wish I just said in the first comment: I didn't have a strong enough TAP for, if someone says a negative thing about your org (or something that could be interpreted negatively), you should have a high bar for not taking away data (meaning more broadly than numbers) that they were using to form that perception, even if you think the data is wrong for reasons they're not tracking. You can like, try and clarify the misconception (ideally, given time & energy constraints etc.), and you can try harder to avoid putting wrong things out there, but don't just take it away -- it's not on the reader to treat you charitably and it kind of doesn't matter what your motives were.

I think I mostly agree with something like that / I do think people should hold orgs to high standards here. I didn't pay enough attention to this and regret it. Sorry! (I'm back to ignoring this thread lol but just felt like sharing a reflection 🤷🏻♀️)

↑ comment by habryka (habryka4) · 2024-12-10T19:53:37.946Z · LW(p) · GW(p)

Thank you! I appreciate the quick oops here, and agree it was a mistake (but fixing it as quickly as you did I think basically made up for all the costs, and I greatly appreciate it).

Just to clarify, I don't want to make a strong statement that it's worth updating the data and maintaining the dashboard. By my lights it would be good enough to just have a static snapshot of it forever. The thing that seemed so costly to me was breaking old links and getting rid of data that you did think was correct.

Thanks again!

↑ comment by the gears to ascension (lahwran) · 2024-12-08T18:40:06.790Z · LW(p) · GW(p)

I suspect fixing this would need to involve creating something new which doesn't have the structural problems in EA which produced this, and would involve talking to people who are non-sensationalist EA detractors but who are involved with similarly motivated projects. I'd start here and skip past the ones that are arguing "EA good" to find the ones that are "EA bad, because [list of reasons EA principles are good, and implication that EA is bad because it fails at its stated principles]"

I suspect, even without seeking that out, the spirit of EA that made it ever partly good has already and will further metastasize into genpop.

↑ comment by angelinahli · 2024-12-09T01:20:48.174Z · LW(p) · GW(p)

Hi! A quick note: I created the CEA Dashboard which is the 2nd link you reference. The data here hadn’t been updated since August 2024, and so was quite out of date at the time of your comment. I've now taken this dashboard down, since I think it's overall more confusing than helpful for grokking the state of CEA's work. We still intend to come back and update it within a few months.

Just to be clear on why / what’s going on:

- I stopped updating the dashboard in August because I started getting busy with some other projects, and my manager & I decided to deprioritize this. (There are some manual steps needed to keep the data live).

- I’ve now seen several people refer to that dashboard as a reference for how CEA is doing in ways I think are pretty misleading.

- We (CEA) still intend to come back and fix this, and this is a good nudge to prioritize it.

Thanks!

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-09T01:27:27.221Z · LW(p) · GW(p)

Oh, huh, that seems very sad. Why would you do that? Please leave up the data that we have. I think it's generally bad form to break links that people relied on. The data was accurate as far as I can tell until August 2024, and you linked to it yourself a bunch over the years, don't just break all of those links.

I am pretty up-to-date with other EA metrics and I don't really see how this would be misleading. You had a disclaimer at the top that I think gave all the relevant context. Let people make their own inferences, or add more context, but please don't just take things down.

Unfortunately, archive.org doesn't seem to have worked for that URL, so we can't even rely on that to show the relevant data trends.

Edit: I'll be honest, after thinking about it for longer, the only reason I can think of why you would take down the data is because it makes CEA and EA look less like they are on an upwards trajectory. But this seems so crazy. How can I trust data coming out of CEA if you have a policy of retracting data that doesn't align with the story you want to tell about CEA and EA? The whole point of sharing raw data is to allow other people to come to their own conclusions. This really seems like such a dumb move from a trust perspective.

Replies from: Benito, angelinahli, angelinahli, angelinahli↑ comment by Ben Pace (Benito) · 2024-12-09T02:51:31.455Z · LW(p) · GW(p)

I also believe that the data making EA+CEA look bad is the causal reason why it was taken down. However, I want to add some slight nuance.

I want to contrast a model whereby Angelina Li did this while explicitly trying to stop CEA from looking bad, versus a model whereby she senses that something bad might be happening, she might be held responsible (e.g. within her organization / community), and is executing a move that she's learned is 'responsible' from the culture around her.

I think many people have learned to believe the reasoning step "If people believe bad things about my team I think are mistaken with the information I've given them, then I am responsible for not misinforming people, so I should take the information away, because it is irresponsible to cause people to have false beliefs". I think many well-intentioned people will say something like this, and that this is probably because of two reasons (borrowing from The Gervais Principle):

- This is a useful argument for powerful sociopaths to use when they are trying to suppress negative information about themselves.

- The clueless people below them in the hierarchy need to rationalize why they are following the orders of the sociopaths to prevent people from accessing information. The idea that they are 'acting responsibly' is much more palatable than the idea that they are trying to control people, so they willingly spread it and act in accordance with it.

A broader model I have is that there are many such inference-steps floating around the culture that well-intentioned people can accept as received wisdom, and they got there because sociopaths needed a cover for their bad behavior and the clueless people wanted reasons to feel good about their behavior; and that each of these adversarially optimized inference-steps needs to be fought and destroyed.

Replies from: sarahconstantin, Kaj_Sotala↑ comment by sarahconstantin · 2024-12-09T20:37:47.958Z · LW(p) · GW(p)

I agree, and I am a bit disturbed that it needs to be said.

At normal, non-EA organizations -- and not only particularly villainous ones, either! -- it is understood that you need to avoid sharing any information that reflects poorly on the organization, unless it's required by law or contract or something. The purpose of public-facing communications is to burnish the org's reputation. This is so obvious that they do not actually spell it out to employees.

Of COURSE any organization that has recently taken down unflattering information is doing it to maintain its reputation.

I'm sorry, but this is how "our people" get taken for a ride. Be more cynical, including about people you like.

↑ comment by Kaj_Sotala · 2024-12-10T12:02:25.670Z · LW(p) · GW(p)

I think many people have learned to believe the reasoning step "If people believe bad things about my team I think are mistaken with the information I've given them, then I am responsible for not misinforming people, so I should take the information away, because it is irresponsible to cause people to have false beliefs". I think many well-intentioned people will say something like this, and that this is probably because of two reasons (borrowing from The Gervais Principle):

(Comment not specific to the particulars of this issue but noted as a general policy:) I think that as a general rule, if you are hypothesizing reasons for why somebody might say a thing, you should always also include the hypothesis that "people say a thing because they actually believe in it". This is especially so if you are hypothesizing bad reasons for why people might say it.

It's very annoying when someone hypothesizes various psychological reasons for your behavior and beliefs but never even considers as a possibility [LW · GW] the idea that maybe you might have good reasons to believe in it. Compare e.g. "rationalists seem to believe that superintelligence is imminent; I think this is probably because that lets them avoid taking responsibility about their current problems if AI will make those irrelevant anyway, or possibly because they come from religious backgrounds and can't get over their subconscious longing for a god-like figure".

Replies from: Benito, habryka4↑ comment by Ben Pace (Benito) · 2024-12-10T17:44:47.013Z · LW(p) · GW(p)

I feel more responsibility to be the person holding/tracking the earnest hypothesis in a 1-1 context, or if I am the only one speaking; in larger group contexts I tend to mostly ask "Is there a hypothesis here that isn't or likely won't be tracked unless I speak up" and then I mostly focus on adding hypotheses to track (or adding evidence that nobody else is adding).

↑ comment by habryka (habryka4) · 2024-12-10T17:45:56.781Z · LW(p) · GW(p)

(Did Ben indicate he didn’t consider it? My guess is he considered it, but thinks it’s not that likely and doesn’t have amazingly interesting things to say on it.

I think having a norm of explicitly saying “I considered whether you were saying the truth but I don’t believe it” seems like an OK norm, but not obviously a great one. In this case Ben also responded to a comment of mine which already said this, and so I really don’t see a reason for repeating it.)

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-12-17T20:02:53.127Z · LW(p) · GW(p)

(I read

I think many well-intentioned people will say something like this, and that this is probably because of two reasons

as implying that the list of reasons is considered to be exhaustive, such that any reasons besides those two have negligible probability.)

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-12-18T07:47:37.032Z · LW(p) · GW(p)

I gave my strongest hypothesis for why it looks to me that many many people believe it's responsible to take down information that makes your org look bad. I don't think alternative stories have negligible probability, nor does what I wrote imply that, though it is logically consistent with that.

There are many widespread anti-informative behaviors that people engage in for poor reasons, like saying that their spouse is the best spouse in the world, or telling customers that their business is the best business in the industry, or saying exclusively glowing things about people in reference letters, that are best explained by the incentives on the person to present themselves in the best light; at the same time, it is respectful to a person, while in dialogue with them, to keep track of the version of them who is trying their best to have true beliefs and honestly inform others around them, in order to help them become that person (and notice the delta between their current behavior and what they hopefully aspire to).

Seeing orgs in the self-identified-EA space take down information that makes them look bad is (to me) not that dissimilar to the other things I listed.

I think it's good to discuss norms about how appropriate it is to bring up cynical hypotheses about someone during a discussion in which they're present. In this case I think raising this hypothesis was worthwhile for the discussion, and I didn't cut off any way [LW · GW] for the person in question to continue to show themselves to be broadly acting in good faith, so I think it went fine. Li replied to Habryka, and left a thoughtful pair of comments retracting and apologizing, which reflected well on them in my eyes.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-12-18T08:13:54.110Z · LW(p) · GW(p)

I don't think alternative stories have negligible probability

Okay! Good clarification.

I think it's good to discuss norms about how appropriate it is to bring up cynical hypotheses about someone during a discussion in which they're present.

To clarify, my comment wasn't specific to the case where the person is present. There are obvious reasons why the consideration should get extra weight when the person is present, but there's also a reason to give it extra weight if none of the people discussed are present - namely that they won't be able to correct any incorrect claims if they're not around.

so I think it went fine

Agree.

(As I mentioned in the original comment, the point I made was not specific to the details of this case, but noted as a general policy. But yes, in this specific case it went fine.)

↑ comment by angelinahli · 2024-12-09T01:58:31.354Z · LW(p) · GW(p)

Quick thoughts on this:

- “The data was accurate as far as I can tell until August 2024”

- I’ve heard a few reports over the last few weeks that made me unsure whether the pre-Aug data was actually correct. I haven’t had time to dig into this.

- In one case (e.g. with the EA.org data) we have a known problem with the historical data that I haven’t had time to fix, that probably means the reported downward trend in views is misleading. Again I haven’t had time to scope the magnitude of this etc.

- I’m going to check internally to see if we can just get this back up in a week or two (It was already high on our stack, so this just nudges up timelines a bit). I will update this thread once I have a plan to share.

I’m probably going to drop responding to “was this a bad call” and prioritize “just get the dashboard back up soon”.

↑ comment by angelinahli · 2024-12-10T19:43:28.677Z · LW(p) · GW(p)

More thoughts here [LW · GW], but TL;DR I’ve decided to revert the dashboard back to its original state & have republished the stale data. (Just flagging for readers who wanted to dig into the metrics.)

↑ comment by angelinahli · 2024-12-09T03:01:43.213Z · LW(p) · GW(p)

Hey! I just saw your edited text and wanted to jot down a response:

Edit: I'll be honest, after thinking about it for longer, the only reason I can think of why you would take down the data is because it makes CEA and EA look less on an upwards trajectory. But this seems so crazy. How can I trust data coming out of CEA if you have a policy of retracting data that doesn't align with the story you want to tell about CEA and EA? The whole point of sharing raw data is to allow other people to come to their own conclusions. This really seems like such a dumb move from a trust perspective.

I'm sorry this feels bad to you. I care about being truth seeking and care about the empirical question of "what's happening with EA growth?". Part of my motivation in getting this dashboard published [EA · GW] in the first place was to contribute to the epistemic commons on this question.

I also disagree that CEA retracts data that doesn't align with "the right story on growth”. E.g. here's a post [EA · GW] I wrote in mid 2023 where the bottom line conclusion was that growth in meta EA projects was down in 2023 v 2022. It also publishes data on several cases where CEA programs grew slower in 2023 or shrank. TBH I also think of this as CEA contributing to the epistemic commons here — it took us a long time to coordinate and then get permission from people to publish this. And I’m glad we did it!

On the specific call here, I'm not really sure what else to tell you re: my motivations other than what I've already said. I'm going to commit to not responding further to protect my attention, but I thought I'd respond at least once :)

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-09T03:42:42.683Z · LW(p) · GW(p)

I would currently be quite surprised if you had taken the same action if I was instead making an inference that positively reflects on CEA or EA. I might of course be wrong, but you did do it right after I wrote something critical of EA and CEA, and did not do it the many other times it was linked in the past year. Sadly your institution has a long history of being pretty shady with data and public comms this way, and so my priors are not very positively inclined.

I continue to think that it would make sense to at least leave the data up that CEA did feel comfortable linking in the last 1.5 years. By my norms invalidating links like this, especially if the underlying page happens to be unscrapeable by the internet archive, is really very bad form.

I did really appreciate your mid 2023 post!

↑ comment by yanni kyriacos (yanni) · 2024-12-11T00:06:47.826Z · LW(p) · GW(p)

I spent 8 years working in strategy departments for Ad Agencies. If you're interested in the science behind brand tracking, I recommend you check out the Ehrenberg-Bass Institute's work on Category Entry Points: https://marketingscience.info/research-services/identifying-and-prioritising-category-entry-points/

comment by habryka (habryka4) · 2019-05-09T19:12:09.799Z · LW(p) · GW(p)

Thoughts on integrity and accountability

[Epistemic Status: Early draft version of a post I hope to publish eventually. Strongly interested in feedback and critiques, since I feel quite fuzzy about a lot of this]

When I started studying rationality and philosophy, I had the perspective that people who were in positions of power and influence should primarily focus on how to make good decisions in general and that we should generally give power to people who have demonstrated a good track record of general rationality. I also thought of power as this mostly unconstrained resource, similar to having money in your bank account, and that we should make sure to primarily allocate power to the people who are good at thinking and making decisions.

That picture has changed a lot over the years. While I think there is still a lot of value in the idea of "philosopher kings", I've made a variety of updates that significantly changed my relationship to allocating power in this way:

- I have come to believe that people's ability to come to correct opinions about important questions is in large part a result of whether their social and monetary incentives reward them when they have accurate models in a specific domain. This means a person can have extremely good opinions in one domain of reality, because they are subject to good incentives, while having highly inaccurate models in a large variety of other domains in which their incentives are not well optimized.

- People's rationality is much more defined by their ability to maneuver themselves into environments in which their external incentives align with their goals, than by their ability to have correct opinions while being subject to incentives they don't endorse. This is a tractable intervention and so the best people will be able to have vastly more accurate beliefs than the average person, but it means that "having accurate beliefs in one domain" doesn't straightforwardly generalize to "will have accurate beliefs in other domains".

One is strongly predictive of the other, and that’s in part due to general thinking skills and broad cognitive ability. But another major piece of the puzzle is the person's ability to build and seek out environments with good incentive structures.

- Everyone is highly irrational in their beliefs about at least some aspects of reality, and positions of power in particular tend to encourage strong incentives that don't tend to be optimally aligned with the truth. This means that highly competent people in positions of power often have less accurate beliefs than much less competent people who are not in positions of power.

- The design of systems that hold people who have power and influence accountable in a way that aligns their interests with both forming accurate beliefs and the interests of humanity at large is a really important problem, and is a major determinant of the overall quality of the decision-making ability of a community. General rationality training helps, but for collective decision making the creation of accountability systems, the tracking of outcome metrics and the design of incentives is at least as big of a factor as the degree to which the individual members of the community are able to come to accurate beliefs on their own.

A lot of these updates have also shaped my thinking while working at CEA, LessWrong and the LTF-Fund over the past 4 years. I've been in various positions of power, and have interacted with many people who had lots of power over the EA and Rationality communities, and I've become a lot more convinced that there is a lot of low-hanging fruit and important experimentation to be done to ensure better levels of accountability and incentive-design for the institutions that guide our community.

I also generally have broadly libertarian intuitions, and a lot of my ideas about how to build functional organizations are based on a more start-up-like approach that is favored here in Silicon Valley. Initially these intuitions seemed in conflict with the intuitions for more emphasis on accountability structures, with broken legal systems, ad-hoc legislation, dysfunctional boards and dysfunctional institutions all coming to mind immediately as accountability-systems run wild. I've since then reconciled my thoughts on these topics a good bit.