OpenAI Email Archives (from Musk v. Altman and OpenAI blog)

post by habryka (habryka4) · 2024-11-16T06:38:03.937Z

As part of the court case between Elon Musk and Sam Altman, a substantial number of emails between Elon, Sam Altman, Ilya Sutskever, and Greg Brockman have been released. In March 2024 and December 2024, OpenAI also released blog posts with additional emails.

I have found reading through these really valuable, and I haven't found an online source that compiles all of them in an easy-to-read format. So I made one.[1]

Subject: question

Sam Altman to Elon Musk - May 25, 2015 9:10 PM

Been thinking a lot about whether it's possible to stop humanity from developing AI.

I think the answer is almost definitely not.

If it's going to happen anyway, it seems like it would be good for someone other than Google to do it first.

Any thoughts on whether it would be good for YC to start a Manhattan Project for AI? My sense is we could get many of the top ~50 to work on it, and we could structure it so that the tech belongs to the world via some sort of nonprofit but the people working on it get startup-like compensation if it works. Obviously we'd comply with/aggressively support all regulation.

Sam

Elon Musk to Sam Altman - May 25, 2015 11:09 PM

Probably worth a conversation

Sam Altman to Elon Musk - Jun 24, 2015 10:24 AM

The mission would be to create the first general AI and use it for individual empowerment—ie, the distributed version of the future that seems the safest. More generally, safety should be a first-class requirement.

I think we’d ideally start with a group of 7-10 people, and plan to expand from there. We have a nice extra building in Mountain View they can have.

I think for a governance structure, we should start with 5 people and I’d propose you, Bill Gates, Pierre Omidyar, Dustin Moskovitz, and me. The technology would be owned by the foundation and used “for the good of the world”, and in cases where it’s not obvious how that should be applied the 5 of us would decide. The researchers would have significant financial upside but it would be uncorrelated to what they build, which should eliminate some of the conflict (we’ll pay them a competitive salary and give them YC equity for the upside). We’d have an ongoing conversation about what work should be open-sourced and what shouldn’t. At some point we’d get someone to run the team, but he/she probably shouldn’t be on the governance board.

Will you be involved somehow in addition to just governance? I think that would be really helpful for getting work pointed in the right direction and getting the best people to be part of it. Ideally you’d come by and talk to them about progress once a month or whatever. We generically call people involved in some limited way in YC “part-time partners” (we do that with Peter Thiel for example, though at this point he’s very involved) but we could call it whatever you want. Even if you can’t really spend time on it but can be publicly supportive, that would still probably be really helpful for recruiting.

I think the right plan with the regulation letter is to wait for this to get going and then I can just release it with a message like “now that we are doing this, I’ve been thinking a lot about what sort of constraints the world needs for safety.” I’m happy to leave you off as a signatory. I also suspect that after it’s out more people will be willing to get behind it.

Sam

Elon Musk to Sam Altman - Jun 24, 2015 11:05 PM

Agree on all

Subject: Re: AI docs 📎

Sam Altman to Elon Musk - Nov 20, 2015 10:48AM

Elon–

Plan is to have you, me, and Ilya on the Board of Directors for YC AI, which will be a Delaware non-profit. We will also state that we plan to elect two other outsiders by majority vote of the Board.

We will write into the bylaws that any technology that potentially compromises the safety of humanity has to get consent of the Board to be released, and we will reference this in the researchers’ employment contracts.

At a high level, does that work for you?

I’m cc’ing our GC <redacted> here–is there someone in your office he can work with on the details?

Sam

Elon Musk to Sam Altman - Nov 20, 2015 12:29PM

I think this should be independent from (but supported by) YC, not what sounds like a subsidiary.

Also, the structure doesn’t seem optimal. In particular, the YC stock along with a salary from the nonprofit muddies the alignment of incentives. Probably better to have a standard C corp with a parallel nonprofit.

Subject: follow up from call

Greg Brockman to Elon Musk, (cc: Sam Altman) - Nov 22, 2015 6:11 PM

Hey Elon,

Nice chatting earlier.

As I mentioned on the phone, here's the latest early draft of the blog post: https://quip.com/6YnqA26RJgKr. (Sam, Ilya, and I are thinking about new names; would love any input from you.)

Obviously, there's a lot of other detail to change too, but I'm curious what you think of that kind of messaging. I don't want to pull any punches, and would feel comfortable broadcasting a stronger message if it feels right. I think it's mostly important that our messaging appeals to the research community (or at least the subset we want to hire). I hope for us to enter the field as a neutral group, looking to collaborate widely and shift the dialog towards being about humanity winning rather than any particular group or company. (I think that's the best way to bootstrap ourselves into being a leading research institution.)

I've attached the offer letter template we've been using, with a salary of $175k. Here's the email template I've been sending people:

Attached is your official YCR offer letter! Please sign and date at your convenience. There will be two more documents coming:

- A separate letter offering you 0.25% of each YC batch you are present for (as compensation for being an Advisor to YC).

- The At-Will Employment, Confidential Information, Invention Assignment and Arbitration Agreement

(As this is the first batch of official offers we've done, please forgive any bumpiness along the way, and please let me know if anything looks weird!)

We plan to offer the following benefits:

- Health, dental, and vision insurance

- Unlimited vacation days with a recommendation of four weeks per year

- Paid parental leave

- Paid conference attendance when you are presenting YC AI work or asked to attend by YC AI

We're also happy to provide visa support. When you're ready to talk about visa-related questions, I'm happy to put you in touch with Kirsty from YC.

Please let me know if you have any questions — I'm available to chat any time! Looking forward to working together :).

- gdb

Elon Musk to: Greg Brockman (cc Sam Altman) - Nov 22, 2015 7:48PM

Blog sounds good, assuming adjustments for neutrality vs being YC-centric.

I'd favor positioning the blog to appeal a bit more to the general public -- there is a lot of value to having the public root for us to succeed -- and then having a longer, more detailed and inside-baseball version for recruiting, with a link to it at the end of the general public version.

We need to go with a much bigger number than $100M to avoid sounding hopeless relative to what Google or Facebook are spending. I think we should say that we are starting with a $1B funding commitment. This is real. I will cover whatever anyone else doesn't provide.

Template seems fine, apart from shifting to a vesting cash bonus as default, which can optionally be turned into YC or potentially SpaceX (need to understand how much this will be) stock.

Subject: Draft opening paragraphs

Elon Musk to Sam Altman - Dec 8, 2015 9:29 AM

It is super important to get the opening summary section right. This will be what everyone reads and what the press mostly quotes. The whole point of this release is to attract top talent. Not sure Greg totally gets that.

---- OpenAI is a non-profit artificial intelligence research company with the goal of advancing digital intelligence in the way that is most likely to benefit humanity as a whole, unencumbered by an obligation to generate financial returns.

The underlying philosophy of our company is to disseminate AI technology as broadly as possible as an extension of all individual human wills, ensuring, in the spirit of liberty, that the power of digital intelligence is not overly concentrated and evolves toward the future desired by the sum of humanity.

The outcome of this venture is uncertain and the pay is low compared to what others will offer, but we believe the goal and the structure are right. We hope this is what matters most to the best in the field.

Sam Altman to Elon Musk - Dec 8, 2015 10:34 AM

how is this?

__

OpenAI is a non-profit artificial intelligence research company with the goal of advancing digital intelligence in the way that is most likely to benefit humanity as a whole, unencumbered by an obligation to generate financial returns.

Because we don't have any financial obligations, we can focus on the maximal positive human impact and disseminating AI technology as broadly as possible. We believe AI should be an extension of individual human wills and, in the spirit of liberty, not be concentrated in the hands of the few.

The outcome of this venture is uncertain and the pay is low compared to what others will offer, but we believe the goal and the structure are right. We hope this is what matters most to the best in the field.

Subject: just got word...

Sam Altman to Elon Musk - Dec 11, 2015 11:30AM

that deepmind is going to give everyone in openAI massive counteroffers tomorrow to try to kill it.

do you have any objection to me proactively increasing everyone's comp by 100-200k per year? i think they're all motivated by the mission here but it would be a good signal to everyone we are going to take care of them over time.

sounds like deepmind is planning to go to war over this, they've been literally cornering people at NIPS.

Elon Musk to Sam Altman - Dec 11, 2015

Has Ilya come back with a solid yes?

If anyone seems at all uncertain, I’m happy to call them personally too. Have told Emma this is my absolute top priority 24/7.

Sam Altman to Elon Musk - Dec 11, 2015 12:15 PM

yes committed committed. just gave his word.

Elon Musk to Sam Altman - Dec 11, 2015 12:32 PM

awesome

Sam Altman to Elon Musk - Dec 11, 2015 12:35 PM

everyone feels great, saying stuff like "bring on the deepmind offers, they unfortunately dont have 'do the right thing' on their side"

news out at 130 pm pst

Subject: The OpenAI Company

Elon Musk to: Ilya Sutskever, Pamela Vagata, Vicki Cheung, Diederik Kingma, Andrej Karpathy, John D. Schulman, Trevor Blackwell, Greg Brockman, (cc:Sam Altman) - Dec 11, 2015 4:41 PM

Congratulations on a great beginning!

We are outmanned and outgunned by a ridiculous margin by organizations you know well, but we have right on our side and that counts for a lot. I like the odds.

Our most important consideration is recruitment of the best people. The output of any company is the vector sum of the people within it. If we are able to attract the most talented people over time and our direction is correctly aligned, then OpenAI will prevail.

To this end, please give a lot of thought to who should join. If I can be helpful with recruitment or anything else, I am at your disposal. I would recommend paying close attention to people who haven't completed their grad or even undergrad, but are obviously brilliant. Better to have them join before they achieve a breakthrough.

Looking forward to working together,

Elon

Subject: Fwd: congrats on the falcon 9

<redacted> to: Elon Musk - Jan 2, 2016 10:12 AM CST

Hi Elon Happy new year to you, ██████████!

Congratulations on landing the Falcon 9, what an amazing achievement. Time to build out the fleet now!

I've seen you (and Sam and other OpenAI people) doing a lot of interviews recently extolling the virtues of open sourcing AI, but I presume you realise that this is not some sort of panacea that will somehow magically solve the safety problem? There are many good arguments as to why the approach you are taking is actually very dangerous and in fact may increase the risk to the world. Some of the more obvious points are well articulated in this blog post, that I'm sure you've seen, but there are also other important considerations: http://slatestarcodex.com/2015/12/17/should-ai-be-open/

I'd be interested to hear your counter-arguments to these points.

Best,

████

[Elon forwards the above email to Sam Altman, Ilya Sutskever and Greg Brockman on Jan 2, 2016 8:18AM]

Ilya Sutskever to: Elon Musk, Sam Altman, Greg Brockman - Jan 2, 2016 9:06 AM

The article is concerned with a hard takeoff scenario: if a hard takeoff occurs, and a safe AI is harder to build than an unsafe one, then by open-sourcing everything, we make it easy for someone unscrupulous with access to overwhelming amount of hardware to build an unsafe AI, which will experience a hard takeoff. As we get closer to building AI, it will make sense to start being less open. The Open in openAI means that everyone should benefit from the fruits of AI after it's built, but it's totally OK to not share the science (even though sharing everything is definitely the right strategy in the short and possibly medium term for recruitment purposes).

Elon Musk to: Ilya Sutskever - Jan 2, 2016 9:11 AM

Yup

Subject: Re: Followup thoughts 📎

Elon Musk to: Ilya Sutskever, Greg Brockman, Sam Altman - Feb 19, 2016 12:05 AM

Frankly, what surprises me is that the AI community is taking this long to figure out concepts. It doesn't sound super hard. High-level linking of a large number of deep nets sounds like the right approach or at least a key part of the right approach. ███████████████████████████████

The probability of DeepMind creating a deep mind increases every year. Maybe it doesn't get past 50% in 2 to 3 years, but it likely moves past 10%. That doesn't sound crazy to me, given their resources.

In any event, I have found that it is far better to overestimate than underestimate competitors.

This doesn't mean we should rush out and hire weak talent. I agree that nothing good would be achieved by that. What we need to do is redouble our efforts to seek out the best people in the world, do whatever it takes to bring them on board and imbue the company with a high sense of urgency.

It will be important for OpenAI to achieve something significant in the next 6 to 9 months to show that we are for real. Doesn't need to be a whopper breakthrough, but it should be enough for key talent around the world to sit up and take notice.

████████████████████████████████████████████████████████████████████████████████████████████████████████████

Ilya Sutskever to: Elon Musk, (cc: Greg Brockman, Sam Altman) - Feb 19, 2016 10:28 AM

Several points:

- It is not the case that once we solve "concepts," we get AI. Other problems that will have to be solved include unsupervised learning, transfer learning, and lifetime learning. We're also doing pretty badly with language right now. It does not mean that these problems will not see significant progress in the coming years, but it is not the case that there is only one problem that stands between us and full human level AI.

- We can't build AI today because we lack key ideas (computers may be too slow, too, but we can't tell). Powerful ideas are produced by top people. Massive clusters help, and are very worth getting, but they play a less important role.

- We will be able to achieve a conventionally significant result in the next 6 to 9 months, simply because the people we already have are very good. Achieving a field-altering result will be harder, riskier, and take longer. But we have a not unreasonable plan for that as well.

Subject: compensation framework

Greg Brockman to Elon Musk, (cc: Sam Altman) - Feb 21, 2016 11:34 AM

Hi all,

We're currently doing our first round of full-time offers post-founding. It's obviously super important to get these right, as the implications are very long-term. I don't yet feel comfortable making decisions here on my own, and would love any guidance.

Here's what we're currently doing:

Founding team: $275k salary + 25bps of YC stock

- Also have option of switching permanently to $125k annual bonus or equivalent in YC or SpaceX stock. I don't know if anyone's taken us up on this.

New offers: $175k annual salary + $125k annual bonus || equivalent in YC or SpaceX stock. Bonus is subject to performance review, where you may get 0% or significantly greater than 100%.

Special cases: gdb + Ilya + Trevor

The plan is to keep a mostly flat salary, and use the bonus multiple as a way to reward strong performers.

Some notes:

- We use a 20% annualized discount for the 8 years until the stock becomes liquid, the $125k bonus equates to 12bps in YC. So the terminal value is more like $750k. This number sounds a lot more impressive, though obviously it's hard to value exactly.

- The founding team was initially offered $175k each. The day after the lab launched, we proactively increased everyone's salary by $100k, telling them that we are financially committed to them as the lab becomes successful, and asking for a personal promise to ignore all counteroffers and trust we'll take care of them.

- We're currently interviewing Ian Goodfellow from Brain, who is one of the top 2 scientists in the field we don't have (the other being Alex Graves, who is a DeepMind loyalist). He's the best person on Brain, so Google will fight for him. We're grandfathering him into the founding team offer.

Some salary datapoints:

- John was offered $250k all-in annualized at DeepMind, thought he could negotiate to $300k easily.

- Wojciech was verbally offered ~$1.25M/year at FAIR (no concrete letter though)

- Andrew Tulloch is getting $800k/year at FB. (A lot is stock which is vesting.)

- Ian Goodfellow is currently getting $165k cash + $600k stock/year at Google.

- Apple is a bit desperate and offering people $550k cash (plus stock, presumably). I don't think anyone good is saying yes.

Two concrete candidates that are on my mind:

- Andrew is very close to saying yes. However, he's concerned about taking such a large paycut.

- Ian has stated he's not primarily concerned with money, but the Bay Area is expensive / wants to make sure he can buy a house. I don't know what will happen if/when Google starts throwing around the numbers they threw at Ilya.

My immediate questions:

1. I expect Andrew will try to negotiate up. Should we stick to his offer, and tell him to only join if he's excited enough to take that kind of paycut (and that others have left more behind)?

2. Ian will be interviewing + (I'm sure) getting an offer on Wednesday. Should we consider his offer final, or be willing to slide depending on what Google offers?

3. Depending on the answers to 1 + 2, I'm wondering if this flat strategy makes sense. If we keep it, I feel we'll have to really sell people on the bonus multiplier. Maybe one option would be using a signing bonus as a lever to get people to sign?

4. Very secondary, but our intern comp is also below market: $9k/mo. (FB offers $9k + free housing, Google offers like $11k/mo all-in.) Comp is much less important to interns than to FT people, since the experience is primary. But I think we may have lost a candidate who was on the edge to this. Given the dollar/hour is so much lower than for FT, should we consider increasing the amount?

I'm happy to chat about this at any time.

- gdb

Elon Musk to Greg Brockman, (cc: Sam Altman) - Feb 22, 2016 12:09 AM

We need to do what it takes to get the top talent. Let's go higher. If, at some point, we need to revisit what existing people are getting paid, that's fine.

Either we get the best people in the world or we will get whipped by Deepmind.

Whatever it takes to bring on ace talent is fine by me.

Deepmind is causing me extreme mental stress. If they win, it will be really bad news with their one mind to rule the world philosophy. They are obviously making major progress and well they should, given the talent level over there.

Greg Brockman to Elon Musk, (cc: Sam Altman) - Feb 22, 2016 12:21 AM

Read you loud and clear. Sounds like a plan. Will plan to continue working with sama on specifics, but let me know if you'd like to be kept in the loop.

- gdb

Subject: wired article

Greg Brockman to Elon Musk, (cc: Sam Teller) - Mar 21, 2016 12:53 AM

Hi Elon,

I was interviewed for a Wired article on OpenAI, and the fact checker sent me some questions. Wanted to sync with you on two in particular to make sure they sound reasonable / aligned with what you'd say:

Would it be accurate to say that OpenAI is giving away ALL of its research?

At any given time, we will take the action that is likely to most strongly benefit the world. In the short term, we believe the best approach is giving away our research. But longer-term, this might not be the best approach: for example, it might be better not to immediately share a potentially dangerous technology. In all cases, we will be giving away all the benefits of all of our research, and want those to accrue to the world rather than any one institution.

Does OpenAI believe that getting the most sophisticated AI possible in as many hands as possible is humanity's best chance at preventing a too-smart AI in private hands that could find a way to unleash itself on the world for malicious ends?

We believe that using AI to extend individual human wills is the most promising path to ensuring AI remains beneficial. This is appealing because if there are many agents with about the same capabilities they could keep any one bad actor in check. But I wouldn't claim we have all the answers: instead, we're building an organization that can both seek those answers, and take the best possible action regardless of what the answer turns out to be.

Thanks!

- gdb

Elon Musk to Greg Brockman, (cc: Sam Teller) - Mar 21, 2016 6:53:47 AM

Sounds good

Subject: Re: Maureen Dowd

Sam Teller received this email from Alex Thompson and forwards it to Elon Musk - April 27, 2016 7:25 AM

Hi Sam,

I hope you are having a great day and I apologize for interrupting it with another question. Maureen wanted to see if Mr. Musk had any reaction to some of Mr. Zuckerberg's public comments since their interview. In particular, his labelling of Mr. Musk as "hysterical" for his A.I. fears and his lecturing of those who "fearmonger" about the dangers of A.I. I have included more details below of Mr. Zuckerberg's comments.

Asked in Germany recently about Musk’s forebodings, Zuckerberg called them “hysterical’’ and praised A.I. breakthroughs, including one system he claims can make cancer diagnoses for skin lesions on a mobile phone with the accuracy of “the best dermatologist.’’

“Unless we really mess something up,’’ he said, the machines will always be subservient, not “superhuman.”

“I think we can build A.I. so it works for us and helps us...Some people fearmonger about how A.I. is a huge danger, but that seems farfetched to me and much less likely than disasters due to widespread disease, violence, etc.’’ Or as he put his philosophy at an April Facebook developers conference: “Choose hope over fear.’’

--

Alex Thompson

The New York Times

Elon Musk to Sam Teller - Apr 27, 2016 12:24 PM

History unequivocally illustrates that a powerful technology is a double-edged sword. It would be foolish to assume that AI, arguably the most powerful of all technologies, only has a single edge.

The recent example of Microsoft's AI chatbot shows how quickly it can turn incredibly negative. The wise course of action is to approach the advent of AI with caution and ensure that its power is widely distributed and not controlled by any one company or person.

That is why we created OpenAI.

Subject: MSFT hosting deal

Sam Altman to Elon Musk, (cc: Sam Teller) - Sep 16, 2016 2:37 PM

Here are the MSFT terms. $60MM of compute for $10MM, and input from us on what they deploy in the cloud. LMK if you have any feedback.

Sam

Microsoft/OpenAI Terms[2]

Microsoft and OpenAI: Accelerate the development of deep learning on Azure and CNTK

This non-binding term sheet (“Term Sheet”) between Microsoft Corporation (“Microsoft”) and OpenAI (“OpenAI”) sets forth the terms for a potential business relationship between the parties. This Term Sheet is intended to form a basis of discussion and does not state all matters upon which agreement must be reached before executing a legally binding commercial agreement (“Commercial Agreement”). The existence and terms of this Term Sheet, and all discussions related thereto or to a Commercial Agreement, are Confidential Information as defined and governed by the Non-Disclosure Agreement between the parties dated 17 March, 2016 (“NDA”). Except for the binding nature of the foregoing confidentiality obligations, this Term Sheet is non-binding.

Deal Purpose

OpenAI is focused on deep learning in such a way as to benefit humanity. Microsoft and OpenAI desire to partner to enable the acceleration of deep learning on Microsoft Azure. Towards this goal, Microsoft will provide OpenAI with Azure compute capabilities at a favorable price that would enable OpenAI to continue their mission effectively.

Deal Business Goal

Microsoft

· Accelerate deep learning environment on Azure

· Attract a net new audience of next generation developers

· Joint PR and evangelism of deep learning on Azure

OpenAI

· Deeply discounted GPU compute offering over the deal term (3 years) for use in their nonprofit research: $60m of Compute for $10m

· Joint PR and evangelism of OpenAI on Azure

Parties (Legal entities) Microsoft OpenAI

Proposed Deal Execution Date September 19, 2016

Proposed Deal Commencement Date Same as deal execution date

Legal Authoring Microsoft holds the pen.

Deal Term 3 years

Engineering Terms

- Compute: Microsoft will provide OpenAI GPU core hours of compute at the agreed upon price for OpenAI’s workloads to run in Azure.

- Geographic Location: Geographic location decisions will be at Microsoft discretion depending on capacity and availability. Microsoft will also be responsible for sharing the deployment strategy and timelines with OpenAI.

- SLA: For all Virtual Machines that have two or more instances deployed in the same availability set, Microsoft guarantee OpenAI will have virtual machine connectivity to at least one instance at least 99.95% of the time. Microsoft will be held accountable to the SLA’s provided on https://azure.microsoft.com/en-us/support/legal/sla/virtual-machines/v1_2/

- Evaluation, Evangelization, and Usage of CNTK v2, Azure Batch and HD-Insight: OpenAI will evaluate CNTK v2, Azure Batch, and HDInsight for their research, provide feedback on how Microsoft can improve these products. OpenAI will work with Microsoft to evangelize these products to their research and developer ecosystems, and evangelize Microsoft Azure as their preferred public cloud provider. At their sole discretion, and as it makes sense for their research, OpenAI will adopt these products

- Ramp: Microsoft and OpenAI will work together for creating a ramp plan that balances capacity per clusters. The initial timeline for ramp is a minimum of 30 days that will be augmented by Microsoft’s capacity expansion plans in the coming months.

- Capacity: OpenAI will be given an allocation of capacity in the preview cluster (located in US South Central) for short term requirements and Microsoft will provide quota access to the subsequent K80 GPU clusters that go live in the 4th quarter of 2016 with the intention of more capacity in Q1 2017 (calendar year).

Financial Terms

· Financial Terms: Microsoft will offer $60m worth of List Compute (including GPU) at a deep discount which results in a price of $10m to be paid by OpenAI over the course of the deal. In the event OpenAI consumes less than $10m worth of Azure compute, OpenAI will be responsible for paying the balance between the used amount and $10m at the end of the deal term to Microsoft.

Marketing & PR Terms

Microsoft and OpenAI commit to jointly evangelizing deep learning capabilities on Azure as agreed upon by both parties.

- Ignite: Announce the partnership at Microsoft’s Ignite event with executives (Sam Altman from OpenAI and Satya Nadella from Microsoft) from both parties inaugurating the collaboration

- PR: Microsoft and OpenAI will work together to issue a joint press release about the partnership including any materials such as blog posts and videos.

Elon Musk to Sam Altman, (cc: Sam Teller) - Sep 16, 2016 3:10 PM

This actually made me feel nauseous. It sucks and is exactly what I would expect from them.

Evaluation, Evangelization, and Usage of CNTK v2, Azure Batch and HD-Insight: OpenAI will evaluate CNTK v2, Azure Batch, and HD-Insight for their research, provide feedback on how Microsoft can improve these products. OpenAI will work with Microsoft to evangelize these products to their research and developer ecosystems, and evangelize Microsoft Azure as their preferred public cloud provider. At their sole discretion, and as it makes sense for their research, OpenAI will adopt these products

Let’s just say that we are willing to have Microsoft donate spare computing time to OpenAI and have that be known, but we won't do any contract or agree to “evangelize”. They can turn us off at any time and we can leave at any time.

Sam Altman to Elon Musk, (cc: Sam Teller) - Sep 16, 2016 3:33 PM

I had the same reaction after reading that section and they've already agreed to drop.

We had originally just wanted spare cycles donated but the team wanted more certainty that capacity will be available. But I'll work with MSFT to make sure there are no strings attached

Elon Musk to Sam Altman, (cc: Sam Teller) - Sep 16, 2016

We should just do this low key. No certainty either way. No contract.

Sam Altman to Elon Musk, (cc: Sam Teller) - Sep 16, 2016 6:45 PM

ok will see how much $ I can get in that direction.

Sam Teller to Elon Musk - Sep 20, 2016 8:05 PM

Microsoft is now willing to do the agreement for a full $50m with “good faith effort at OpenAI's sole discretion” and full mutual termination rights at any time. No evangelizing. No strings attached. No looking like lame Microsoft marketing pawns. Ok to move ahead?

Elon Musk to Sam Teller - Sep 21, 2016 12:09 AM

Fine by me if they don't use this in active messaging. Would be worth way more than $50M not to seem like Microsoft's marketing bitch.

Subject: Bi-weekly updates 📎

Ilya Sutskever to: Greg Brockman, [redacted], Elon Musk - Jun 12, 2017 10:39 PM

This is the first of our bi-weekly updates. The goal is to keep you up to date, and to help us make greater use from your visits.

Compute:

- Compute is used in two ways: it is used to run a big experiment quickly, and it is used to run many experiments in parallel.

- 95% of progress comes from the ability to run big experiments quickly. The utility of running many experiments is much less useful.

- In the old days, a large cluster could help you run more experiments, but it could not help with running a single large experiment quickly.

- For this reason, an academic lab could compete with Google, because Google's only advantage was the ability to run many experiments. This is not a great advantage.

- Recently, it has become possible to combine 100s of GPUs and 100s of CPUs to run an experiment that's 100x bigger than what is possible on a single machine while requiring comparable time. This has become possible due to the work of many different groups. As a result, the minimum necessary cluster for being competitive is now 10–100x larger than it was before.

- Currently, every Dota experiment uses 1000+ cores, and it is only for the small 1v1 variant, and on extremely small neural network policies. We will need more compute to just win the 1v1 variant. To win the full 5v5 game, we will need to run fewer experiments, where each experiment is at least 1 order of magnitude larger (possibly more!).

- TLDR: What matters is the size and speed of our experiments. In the old days, a big cluster could not let anyone run a larger experiment quickly. Today, a big cluster lets us run a large experiment 100x faster.

- In order to be capable of accomplishing our projects even in theory, we need to increase the number of our GPUs by a factor of 10x in the next 1–2 months (we have enough CPUs). We will discuss the specifics in our in-person meeting.

Dota 2:

- We will solve the 1v1 version of the game in 1 month. Fans of the game care about 1v1 a fair bit.

- We are now at a point where a single experiment consumes 1000s of cores, and where adding more distributed compute increases performance.

- Here is a cool video of our bot doing something rather clever: https://www.youtube.com/watch?v=Y-vxbREX5ck&feature=youtu.be&t=99.

Rapid learning of new games:

- Infra work is underway

- We implemented several baselines

- Fundamentally, we're not where we want to be, and are taking action to correct this.

Robotics:

- Current status: The HER algorithm (https://www.youtube.com/watch?v=Dz_HuzgMzxo) can learn to solve many low-dimensional robotics tasks that were previously unsolvable very rapidly. It is non-obvious, simple, and effective.

- In 6 months, we will accomplish at least one of: single-handed Rubik's cube, pen spinning (https://www.youtube.com/watch?v=dDavyRnEPrI), Chinese balls spinning (https://www.youtube.com/watch?v=M9N1duIl4Fc) using the HER algorithm and using a sim2real method [such as https://blog.openai.com/spam-detection-in-the-physical-world/].

- The above will be deployed on the robotic hand: [Link to Google Drive] [this video is human controlled, not algorithmic controlled. Need to be logged in to the OpenAI account to see the video].

Self play as a key path to AGI:

- Self play in multiagent environments is magical: if you place agents into an environment, then no matter how smart (or not smart) they are, the environment will provide them with the exact level of challenge, which can be faced only by outsmarting the competition. So for example, if you have a group of children, they will find each other's company to be challenging; likewise for a collection of super intelligences of comparable intelligence. So the "solution" to self-play is to become more and more intelligent, without bound.

- Self-play lets us get "something out of nothing." The rules of a competitive game can be simple, but the best strategy for playing this game can be immensely complex. [motivating example: https://www.youtube.com/watch?v=u2T77mQmJYI].

- Training agents in simulation to develop very good dexterity via competitive fighting, such as wrestling. Here is a video of ant-shaped robots that we trained to struggle: <redacted>

- Current work on self-play: getting agents to learn to develop a language [gifs in https://blog.openai.com/learning-to-cooperate-compete-and-communicate/]. Agents are doing "stuff," but it's still work in progress.

We have a few more cool smaller projects. Updates to be presented as they produce significant results.

Elon Musk to: Ilya Sutskever, (cc: Greg Brockman, [redacted]) - Jun 12, 2017 10:52 PM

Thanks, this is a great update.

Elon Musk to: Ilya Sutskever, (cc: Greg Brockman, [redacted]) - Jun 13, 2017 10:24 AM

Ok. Let's figure out the least expensive way to ensure compute power is not a constraint...

Subject: The business of building AGI 📎

Ilya Sutskever to: Elon Musk, Greg Brockman - Jul 12, 2017 1:36 PM

We usually decide that problems are hard because smart people have worked on them unsuccessfully for a long time. It’s easy to think that this is true about AI. However, the past five years of progress have shown that the earliest and simplest ideas about AI — neural networks — were right all along, and we needed modern hardware to get them working.

Historically, AI breakthroughs have consistently happened with models that take between 7–10 days to train. This means that hardware defines the surface of potential AI breakthroughs. This is a statement about human psychology more than about AI. If experiments take longer than this, it’s hard to keep all the state in your head and iterate and improve. If experiments are shorter, you’ll just use a bigger model.

It’s not so much that AI progress is a hardware game, any more than physics is a particle accelerator game. But if our computers are too slow, no amount of cleverness will result in AGI, just like if a particle accelerator is too small, we have no shot at figuring out how the universe works. Fast enough computers are a necessary ingredient, and all past failures may have been caused by computers being too slow for AGI.

Until very recently, there was no way to use many GPUs together to run faster experiments, so academia had the same “effective compute” as industry. But earlier this year, Google used two orders of magnitude more compute than is typical to optimize the architecture of a classifier, something that usually requires lots of researcher time. And a few months ago, Facebook released a paper showing how to train a large ImageNet model with near-linear speedup to 256 GPUs (given a specially-configured cluster with high-bandwidth interconnects).

Over the past year, Google Brain produced impressive results because they have an order of magnitude or two more GPUs than anyone. We estimate that Brain has around 100k GPUs, FAIR has around 15–20k, and DeepMind allocates 50 per researcher on question asking, and rented 5k GPUs from Brain for AlphaGo. Apparently, when people run neural networks at Google Brain, it eats up everyone’s quotas at DeepMind.

We're still missing several key ideas necessary for building AGI. How can we use a system's understanding of “thing A” to learn “thing B” (e.g. can I teach a system to count, then to multiply, then to solve word problems)? How do we build curious systems? How do we train a system to discover the deep underlying causes of all types of phenomena — to act as a scientist? How can we build a system that adapts to new situations on which it hasn’t been trained on precisely (e.g. being asked to apply familiar concepts in an unfamiliar situation)? But given enough hardware to run the relevant experiments in 7–10 days, history indicates that the right algorithms will be found, just like physicists would quickly figure out how the universe works if only they had a big enough particle accelerator.

There is good reason to believe that deep learning hardware will speed up 10x each year for the next four to five years. The world is used to the comparatively leisurely pace of Moore’s Law, and is not prepared for the drastic changes in capability this hardware acceleration will bring. This speedup will happen not because of smaller transistors or faster clock cycles; it will happen because like the brain, neural networks are intrinsically parallelizable, and new highly parallel hardware is being built to exploit this.

Within the next three years, robotics should be completely solved, AI should solve a long-standing unproven theorem, programming competitions should be won consistently by AIs, and there should be convincing chatbots (though no one should pass the Turing test). In as little as four years, each overnight experiment will feasibly use so much compute capacity that there’s an actual chance of waking up to AGI, given the right algorithm — and figuring out the algorithm will actually happen within 2–4 further years of experimenting with this compute in a competitive multiagent simulation.

To be in the business of building safe AGI, OpenAI needs to:

- Have the best AI results each year. In particular, as hardware gets exponentially better, we’ll have dramatically better results. Our DOTA and Rubik’s cube projects will have impressive results for the current level of compute. Next year’s projects will be even more extreme, and what’s realistic depends primarily on what compute we can access.

- Increase our GPU cluster from 600 GPUs to 5000 GPUs ASAP. As an upper bound, this will require a capex of $12M and an opex of $5–6M over the next year. Each year, we’ll need to exponentially increase our hardware spend, but we have reason to believe AGI can ultimately be built with less than $10B in hardware.

- Increase our headcount: from 55 (July 2017) to 80 (January 2018) to 120 (January 2019) to 200 (January 2020). We’ve learned how to organize our current team, and we’re now bottlenecked by number of smart people trying out ideas.

- Lock down an overwhelming hardware advantage. The 4-chip card that <redacted> says he can build in 2 years is effectively TPU 3.0 and (given enough quantity) would allow us to be on an almost equal footing with Google on compute. The Cerebras design is far ahead of both of these, and if they’re real then having exclusive access to them would put us far ahead of the competition. We have a structural idea for how to do this given more due diligence, best to discuss on a call.

2/3/4 will ultimately require large amounts of capital. If we can secure the funding, we have a real chance at setting the initial conditions under which AGI is born. Increased funding needs will come lockstep with increased magnitude of results. We should discuss options to obtain the relevant funding, as that’s the biggest piece that’s outside of our direct control.

Progress this week:

- We’ve beat our top 1v1 test player (he’s top 30 in North America at 1v1, and beats the top 1v1 player about 30% of the time), but the bot can also be exploited by playing weirdly. We’re working on understanding these exploits and cracking down on them.

- Repeated from Saturday, here’s the first match where we beat our top test player: https://www.youtube.com/watch?v=FBoUHay7XBI&feature=youtu.be&t=345

- Every additional day of training makes the bot stronger and harder to exploit.

- Robot getting closer to solving Rubik’s cube.

- The improved cube simulation teleoperated by a human: <redacted>.

- Our defense against adversarial examples is starting to work on ImageNet.

- We will completely solve the problem of adversarial examples by the end of August.

████████████████████████████████████

██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████

███████████████████████████████████████████████████████████████████████████████████████████████████████████████

iMessages on OpenAI for-profit 📎

Shivon Zilis to: Greg Brockman - Jul 13, 2017 10:35 PM

How did it go?

Greg Brockman to: Shivon Zilis - Jul 13, 2017 10:35 PM

Went well!

ocean: agreed on announcing around the international; he suggested playing against the best player from the winning team which seems cool to me. I asked him to call <redacted> and he said he would. I think this is better than our default of announcing in advance we’ve beaten the best 1v1 player and then having our bot playable at a terminal at TI ████ ██████████ █████████████████ ██.

gpus: said do what we need to do

cerebras: we talked about the reverse merger idea a bit. independent of cerebras, turned into talking about structure (he said non-profit was def the right one early on, may not be the right one now — ilya and I agree with this for a number of reasons). He said he’s going to Sun Valley to ask <redacted> to donate.

Shivon Zilis to: Greg Brockman - Jul 13, 2017 10:43 PM

<redacted> and others. Will try to work it for ya.

Subject: biweekly update

Ilya Sutskever to Elon Musk, Greg Brockman - Jul 20, 2017 1:56 PM

- The robot hand can now solve a Rubik's cube in simulation:

https://drive.google.com/a/openai.com/file/d/0B60rCy4P2FOIenlLdzN2LXdiOTQ/view?usp=sharing (needs OpenAI login)

Physical robot will do same in September

- 1v1 bot is no longer exploitable

It can no longer be beaten using “unconventional” strategies

On track to beat all humans in 1 month

- Athletic competitive robots:

https://drive.google.com/a/openai.com/file/d/0B60rCy4P2FOIZE4wNVdlbkx6U2M/view?usp=sharing (needs OpenAI login)

- Released an adversarial example that fools a camera from all angles simultaneously:

- DeepMind directly used one of our algorithms to produce their parkour results:

DeepMind's results: https://deepmind.com/blog/producing-flexible-behaviours-simulated-environments/

DeepMind's technical papers explicitly state they directly used our algorithms

Our blogpost about our algorithm: https://blog.openai.com/openai-baselines-ppo/ (DeepMind used an older version).

- Coming up:

Designing the for-profit structure

Negotiate merger terms with Cerebras

More due diligence with Cerebras

Subject: Beijing Wants A.I. to Be Made in China by 2030 - NYTimes.com 📎

Elon Musk to: Greg Brockman, Ilya Sutskever - Jul 21, 2017 3:34 AM

They will do whatever it takes to obtain what we develop. Maybe another reason to change course. [Link to news article]

Greg Brockman to: Elon Musk, (cc: Ilya Sutskever) - Jul 21, 2017 1:18 PM

100% agreed. We think the path must be:

- AI research non-profit (through end of 2017)

- AI research + hardware for-profit (starting 2018)

- Government project (when: ??)

█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████

-gdb

Elon Musk to: Greg Brockman, (cc: Ilya Sutskever, [redacted]) - Jul 21, 2017 1:18 PM

Let's talk Sat or Sun. I have a tentative game plan that I'd like to run by you.

Subject: Tomorrow afternoon 📎

Elon Musk to: Greg Brockman, Ilya Sutskever, Sam Altman, (cc: [redacted], Shivon Zilis) - Aug 11, 2017 9:17 PM

████████████████████████████████ Are you guys able to meet or do a conf call tomorrow afternoon?

Time to make the next step for OpenAI. This is the triggering event.

Subject: OpenAI notes

Shivon Zilis to Elon Musk, (cc: Sam Teller) - Aug 28, 2017 12:01 AM

Elon,

As I'd mentioned, Greg had asked to talk through a few things this weekend. Ilya ended up joining, and they pretty much just shared all of what they are still trying to think through. This is the distillation of that random walk of a conversation... came down to 7 unanswered questions with their commentary below. Please note that I'm not advocating for any of this, just structuring and sharing the information I heard.

1. Short-term control structure?

-Is the requirement for absolute control? They wonder if there is a scenario where there could be some sort of creative overrule provision if literally everyone else disagreed on direction (not just the three of them, but perhaps a broader board)?

2. Duration of control and transition?

-*The* non-negotiable seems to be an ironclad agreement to not have any one person have absolute control of AGI if it's created. Satisfying this means a situation where, regardless of what happens to the three of them, it's guaranteed that power over the company is distributed after the 2-3 year initial period.

3. Time spent?

-How much time does Elon want to spend on this, and how much time can he actually afford to spend on this? In what timeframe? Is this an hour a week, ten hours a week, something in between?

4. What to do with time spent?

-They don't really know how he prefers to spend time at his other companies and how he'd want to spend his time on this. Greg and Ilya are confident they could build out SW / ML side of things pretty well. They are not confident on the hardware front. They seemed hopeful Elon could spend some time on that since that's where they are weak, but did want his help in all domains he was interested in.

5. Ratio of time spent to amount of control?

-They are cool with less time / less control, more time / more control, but not less time / more control. Their fear is that there won't be enough time to discuss relevant contextual information to make correct decisions if too little time is spent.

6. Equity split?

-Greg still instinctually anchored on equal split. I personally disagree with him on that instinct and he asked for and was receptive to hearing other things he could use to recalibrate his mental model.

-Greg noted that Ilya in some ways has contributed millions by leaving his earning potential on the table at Google.

-One concern they had was the proposed employee pool was too small.

7. Capitalization strategy?

-Their instinct is to raise much more than $100M out of the gate. They are of the opinion that the datacenter they need alone would cost that so they feel more comfortable raising more.

Takeaways:

Unsure if any of this is amenable but just from listening to all of the data points they threw out, the following would satisfy their current sticky points:

-Spending 5-10 hours a week with near full control, or spend less time and have less control.

-Having a creative short-term override just for extreme scenarios that was not just Greg / Sam / Ilya.

-An ironclad 2-3yr minority control agreement, regardless of the fates of Greg / Sam / Ilya.

-$200M-$1B initial raise.

-Greg and Ilya's stakes end up higher than 1/10 of Elon's but not significantly (this remains the most ambiguous).

-Increasing employee pool.

Elon Musk to Shivon Zilis, (cc: Sam Teller) - Aug 28, 2017 12:08 AM

This is very annoying. Please encourage them to go start a company. I've had enough.

iMessages on majority equity and board control 📎

Shivon Zilis to: Greg Brockman - Sep 4, 2017 8:19 PM

Actually I'm still slightly confused on the proposed detail around the share % and board control

Given it sounds like proposal is that Elon always gets max(3 seats, 25% of seats) and all the power rests with the board

Greg Brockman

Yes. Though I am guessing he intended your overrule provision for first bit but I'm not sure

Shivon Zilis

So what power does having a certain % of shares have?

Sounds like intention is static board members, or at least board members coming statically from certain pools

But yeah would be curious to hear the specifics. Also I guess for even board sizes a 50% means no action?

Greg Brockman

I think it would grow to at least 7 pretty quick. The question is not that but when does it transition to traditional board if in fact transitions

And he sounded fairly non-negotiable on his equity being between 50-60 so moot point of having majority

Subject: Re: Current State 📎

Elon Musk to: Ilya Sutskever, (cc: Greg Brockman) - Sep 13, 2017 12:40 AM

Sounds good. The three common stock seats (you, Greg and Sam) should be elected by common shareholders. They will de facto be yours, but not in the unlikely event that you lose the faith of a huge percentage of common stockholders over time or step away from the company by choice.

I think that the Preferred A investment round (supermajority me) should have the right to appoint four (not three) seats. I would not expect to appoint them immediately, but, like I said I would unequivocally have initial control of the company, but this will change quickly.

The rough target would be to get to a 12 person board (probably more like 16 if this board really ends up deciding the fate of the world) where each board member has a deep understanding of technology, at least a basic understanding of AI and strong & sensible morals.

Apart from the Series A four and the Common three, there would likely be a board member with each new lead investor/ally. However, the specific individual new board members can only be added if all but one existing board member agrees. Same for removing board members.

There will also be independent board members we want to add who aren't associated with an investor. Same rules apply: requires all but one of existing directors to add or remove.

I'm super tired and don't want to overcomplicate things, but this seems approx right. At the sixteen person board level, we would have 7/16 votes and I'd have a 25% influence, which is my min comfort level. That sounds about right to me. If everyone else we asked to join our board is truly against us, we should probably lose.

As mentioned, my experience with boards (assuming they consist of good, smart people) is that they are rational and reasonable. There is basically never a real hardcore battle where an individual board vote is pivotal, so this is almost certainly (sure hope so) going to be a moot point.

As a closing note, I've been really impressed with the quality of discussion with you guys on the equity and board stuff. I have a really good feeling about this.

Lmk if above seems reasonable.

Elon

Subject: Honest Thoughts

Ilya Sutskever to Elon Musk, Sam Altman, (cc: Greg Brockman, Sam Teller, Shivon Zilis) - Sep 20, 2017 2:08 PM

Elon, Sam,

This process has been the highest stakes conversation that Greg and I have ever participated in, and if the project succeeds, it'll turn out to have been the highest stakes conversation the world has seen. It's also been a deeply personal conversation for all of us.

Yesterday while we were considering making our final commitment given the non-solicit agreement, we realized we'd made a mistake. We have several important concerns that we haven't raised with either of you. We didn't raise them because we were afraid to: we were afraid of harming the relationship, having you think less of us, or losing you as partners.

There is some chance that our concerns will prove to be unresolvable. We really hope it's not the case, but we know we will fail for sure if we don't all discuss them now. And we have hope that we can work through them and all continue working together.

Elon:

We really want to work with you. We believe that if we join forces, our chance of success in the mission is the greatest. Our upside is the highest. There is no doubt about that. Our desire to work with you is so great that we are happy to give up on the equity, personal control, make ourselves easily firable — whatever it takes to work with you.

But we realized that we were careless in our thinking about the implications of control for the world. Because it seemed so hubristic, we have not been seriously considering the implications of success.

The current structure provides you with a path where you end up with unilateral absolute control over the AGI. You stated that you don't want to control the final AGI, but during this negotiation, you've shown to us that absolute control is extremely important to you.

As an example, you said that you needed to be CEO of the new company so that everyone will know that you are the one who is in charge, even though you also stated that you hate being CEO and would much rather not be CEO.

Thus, we are concerned that as the company makes genuine progress towards AGI, you will choose to retain your absolute control of the company despite current intent to the contrary. We disagree with your statement that our ability to leave is our greatest power, because once the company is actually on track to AGI, the company will be much more important than any individual.

The goal of OpenAI is to make the future good and to avoid an AGI dictatorship. You are concerned that Demis could create an AGI dictatorship. So do we. So it is a bad idea to create a structure where you could become a dictator if you chose to, especially given that we can create some other structure that avoids this possibility.

We have a few smaller concerns, but we think it's useful to mention it here:

In the event we decide to buy Cerebras, my strong sense is that it'll be done through Tesla. But why do it this way if we could also do it from within OpenAI? Specifically, the concern is that Tesla has a duty to shareholders to maximize shareholder return, which is not aligned with OpenAI's mission. So the overall result may not end up being optimal for OpenAI.

We believe that OpenAI the non-profit was successful because both you and Sam were in it. Sam acted as a genuine counterbalance to you, which has been extremely fruitful. Greg and I, at least so far, are much worse at being a counterbalance to you. We feel this is evidenced even by this negotiation, where we were ready to sweep the long-term AGI control questions under the rug while Sam stood his ground.

Sam:

When Greg and I are stuck, you've always had an answer that turned out to be deep and correct. You've been thinking about the ways forward on this problem extremely deeply and thoroughly. Greg and I understand technical execution, but we don't know how structure decisions will play out over the next month, year, or five years.

But we haven't been able to fully trust your judgements throughout this process, because we don't understand your cost function.

We don't understand why the CEO title is so important to you. Your stated reasons have changed, and it's hard to really understand what's driving it.

Is AGI truly your primary motivation? How does it connect to your political goals? How has your thought process changed over time?

Greg and Ilya:

We had a fair share of our own failings during this negotiation, and we'll list some of them here (Elon and Sam, I'm sure you'll have plenty to add...):

During this negotiation, we realized that we have allowed the idea of financial return 2-3 years down the line to drive our decisions. This is why we didn't push on the control — we thought that our equity is good enough, so why worry? But this attitude is wrong, just like the attitude of AI experts who don't think that AI safety is an issue because they don't really believe that they'll build AGI.

We did not speak our full truth during the negotiation. We have our excuses, but it was damaging to the process, and we may lose both Sam and Elon as a result.

There's enough baggage here that we think it's very important for us to meet and talk it out. Our collaboration will not succeed if we don't. Can all four of us meet today? If all of us say the truth, and resolve the issues, the company that we'll create will be much more likely to withstand the very strong forces it'll experience.

- Greg & Ilya

Elon Musk to Ilya Sutskever (cc: Sam Altman; Greg Brockman; Sam Teller; Shivon Zilis) - Sep 20, 2017 2:17PM

Guys, I've had enough. This is the final straw. Either go do something on your own or continue with OpenAI as a nonprofit. I will no longer fund OpenAI until you have made a firm commitment to stay or I'm just being a fool who is essentially providing free funding for you to create a startup. Discussions are over.

Elon Musk to Ilya Sutskever, Sam Altman (cc: Greg Brockman, Sam Teller, Shivon Zilis) - Sep 20, 2017 3:08PM

To be clear, this is not an ultimatum to accept what was discussed before. That is no longer on the table.

Sam Altman to Elon Musk, Ilya Sutskever (cc: Greg Brockman, Sam Teller, Shivon Zilis) - Sep 21, 2017 9:17 AM

i remain enthusiastic about the non-profit structure!

Subject: Non-profit

Shivon Zilis to Elon Musk, (cc: Sam Teller) - Sep 22, 2017 9:50 AM

Hi Elon,

Quick FYI that Greg and Ilya said they would like to continue with the non-profit structure. They know they would need to provide a guarantee that they won't go off doing something else to make it work.

Haven't spoken to Altman yet but he asked to talk this afternoon so will report anything I hear back.

If anything I can do to help let me know.

Elon Musk to Shivon Zilis (cc: Sam Teller) - Sep 22, 2017 10:01 AM

Ok

Shivon Zilis to Elon Musk, (cc: Sam Teller) - Sep 22, 2017 5:54 PM

From Altman:

Structure: Great with keeping non-profit and continuing to support it.

Trust: Admitted that he lost a lot of trust with Greg and Ilya through this process. Felt their messaging was inconsistent and felt childish at times.

Hiatus: Sam told Greg and Ilya he needs to step away for 10 days to think. Needs to figure out how much he can trust them and how much he wants to work with them. Said he will come back after that and figure out how much time he wants to spend.

Fundraising: Greg and Ilya have the belief that 100's of millions can be achieved with donations if there is a definitive effort. Sam thinks there is a definite path to 10's of millions but TBD on more. He did mention that Holden was irked by the move to for-profit and potentially offered more substantial amount of money if OpenAI stayed a non-profit, but hasn't firmly committed. Sam threw out a $100M figure for this if it were to happen.

Communications: Sam was bothered by how much Greg and Ilya keep the whole team in the loop with happenings as the process unfolded. Felt like it distracted the team. On the other hand, apparently in the last day almost everyone has been told that the for-profit structure is not happening and he is happy about this at least since he just wants the team to be heads down again.

Shivon

Subject: ICO

Sam Altman to Elon Musk (cc: Greg Brockman, Ilya Sutskever, Sam Teller, Shivon Zilis) - Jan 21, 2018 5:08 PM

Elon—

Heads up, spoke to some of the safety team and there were a lot of concerns about the ICO and possible unintended effects in the future.

Planning to talk to the whole team tomorrow and invite input. Going to emphasize the need to keep this confidential, but I think it's really important we get buy-in and give people the chance to weigh in early.

Sam

Elon Musk to Sam Altman (cc: Greg Brockman, Ilya Sutskever, Sam Teller, Shivon Zilis) - Jan 21, 2018 5:56 PM

Absolutely

Subject: Top AI institutions today

Andrej Karpathy to Elon Musk, (cc: Shivon Zilis) - Jan 31, 2018 1:20 PM

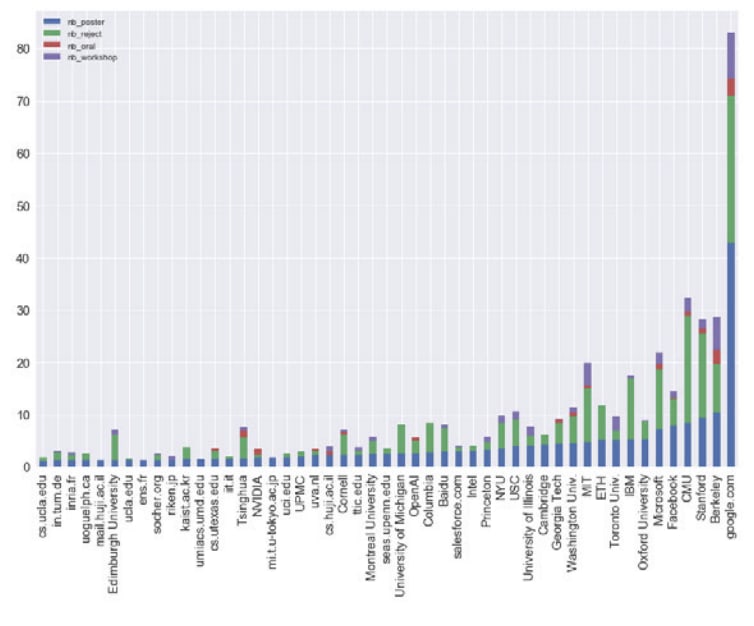

The ICLR conference (which is the top deep learning - specific conference (NIPS is larger, but more diffuse)) released their decisions for accepted/rejected papers, and someone made some nice plots that show where the current deep learning / AI research happens at. It's an imperfect measure because not every company might prioritize paper publications, but it's indicative.

Here's a plot that shows the total number of papers (broken down by oral/poster/workshop/rejected) from any institution:

Long story short, Google is dominating with 83 paper submissions. The academic institutions (Berkeley / Stanford / CMU / MIT) are next, in 20-30 ranges each.

Just thought it was an interesting snapshot of where all the action is today. The full data is here: http://webia.lip6.fr/~pajot/dataviz.html

-Andrej

Elon Musk to Greg Brockman, Ilya Sutskever, Sam Altman, (cc: Sam Teller, Shivon Zilis, fw: Andrej Karpathy) - Jan 31, 2018 2:02 PM

OpenAI is on a path of certain failure relative to Google. There obviously needs to be immediate and dramatic action or everyone except for Google will be consigned to irrelevance.

I have considered the ICO approach and will not support it. In my opinion, that would simply result in a massive loss of credibility for OpenAI and everyone associated with the ICO. If something seems too good to be true, it is. This was, in my opinion, an unwise diversion.

The only paths I can think of are a major expansion of OpenAI and a major expansion of Tesla AI. Perhaps both simultaneously. The former would require a major increase in funds donated and highly credible people joining our board. The current board situation is very weak.

I will set up a time for us to talk tomorrow. To be clear, I have a lot of respect for your abilities and accomplishments, but I am not happy with how things have been managed. That is why I have had trouble engaging with OpenAI in recent months. Either we fix things and my engagement increases a lot or we don’t and I will drop to near zero and publicly reduce my association. I will not be in a situation where the perception of my influence and time doesn’t match the reality.

Elon Musk to Andrej Karpathy - Jan 31, 2018 2:07 PM

fyi

What do you think makes sense? Happy to talk by phone if that’s better.

Greg Brockman to: Elon Musk, (cc: Ilya Sutskever, Sam Altman, [redacted], Shivon Zilis) - Jan 31, 2018 10:56 PM 📎

Hi Elon,

Thank you for the thoughtful note. I have always been impressed by your focus on the big picture, and agree completely we must change trajectory to achieve our goals. Let's speak tomorrow, any time 4p or later will work.

My view is that the best future will come from a major expansion of OpenAI. Our goal and mission are fundamentally correct, and that will increasingly be a superpower as AGI grows near.

Fundraising

Our fundraising conversations show that:

- Ilya and I are able to convince reputable people that AGI can really happen in the next ≤10 years

- There's appetite for donations from those people

- There's very large appetite for investments from those people

I respect your decision on the ICO idea, which matches the evolution of our own thinking. Sam Altman has been working on a fundraising structure that does not rely on a public offering, and we will be curious to hear your feedback.

Of the people we've been talking to, the following people are currently my top suggestions for board members. Would also love suggestions for your top picks not on this list, and we can figure out how to approach them.

- <redacted>

- <redacted>

- <redacted>

- <redacted>

- <redacted>

- <redacted> (she heads Partnership on AI, originally created by Demis to steal OpenAI's thunder – would bring a lot of outside credibility)

The next 3 years

Over the next 3 years, we must build 3 things:

- Custom AI hardware (such as <redacted> computer)

- Massive AI data center (likely multiple revs thereof)

- Best software team, mixing between algorithm development, public demonstrations, and safety

We've talked the most about the custom AI hardware and AI data center. On the software front, we have a credible path (self-play in a competitive multiagent environment) which has been validated by Dota and AlphaGo. We also have identified a small but finite number of limitations in today's deep learning which are barriers to learning from human levels of experience. And we believe we uniquely are on trajectory to solving safety (at least in broad strokes) in the next three years.

We would like to scale headcount in this way:

- Beginning of 2017: ~40

- End of 2018: 100

- End of 2019: 300

- End of 2020: 900

█████████████ █████████████████ ██████

Moral high ground

Our biggest tool is the moral high ground. To retain this, we must:

- Try our best to remain a non-profit. AI is going to shake up the fabric of society, and our fiduciary duty should be to humanity.

- Put increasing effort into the safety/control problem, rather than the fig leaf you've noted in other institutions. It doesn't matter who wins if everyone dies. Related to this, we need to communicate a "better red than dead" outlook — we're trying to build safe AGI, and we're not willing to destroy the world in a down-to-the-wire race to do so.

- Engage with government to provide trusted, unbiased policy advice — we often hear that they mistrust recommendations from companies such as ████████████.

- Be perceived as a place that provides public good to the research community, and keeps the other actors honest and open via leading by example.

The past 2 years

I would be curious to hear how you rate our execution over the past two years, relative to resources. In my view:

- Over the past five years, there have been two major demonstrations of working systems: AlphaZero [DeepMind] and Dota 1v1 [OpenAI]. (There are a larger number of breakthroughs of "capabilities" popular among practitioners, the top of which I'd say are: ProgressiveGAN [NVIDIA], unsupervised translation [Facebook], WaveNet [DeepMind], Atari/DQN [DeepMind], machine translation [Ilya at Google — now at OpenAI], generative adversarial network [Ian Goodfellow at grad school — now at Google], variational autoencoder [Durk at grad school — now at OpenAI], AlexNet [Ilya at grad school — now at OpenAI].) We benchmark well on this axis.

- We grew very rapidly in 2016, and in 2017 iterated to a working management structure. We're now ready to scale massively, given the resources. We lose people on comp currently, but pretty much only on comp. I've been resuming the style of recruiting I did in the early days, and believe I can exceed those results.

- We have the most talent dense team in the field, and we have the reputation for it as well.

- We don't encourage paper writing, and so paper acceptance isn't a measure we optimize. For the ICLR chart Andrej sent, I'd expect our (accepted papers)/(people submitting papers) to be the highest in the field.

- gdb

Andrej Karpathy to Elon Musk - Jan 31, 2018 11:54 PM

Working at the cutting edge of AI is unfortunately expensive. For example, DeepMind's operating expenses in 2016 were at around $250M USD (does not include compute). With their growing team today it might be ~0.5B/yr. But then Alphabet in 2016 reported ~20B net income so it's still fairly cheap even if DeepMind had no revenue of its own. In addition to DeepMind, Google also has Google Brain, Research, and Cloud. And TensorFlow, TPUs, and they own about a third of all research (in fact, they hold their own AI conferences).

I also strongly suspect that compute horsepower will be necessary (and possibly even sufficient) to reach AGI. If historical trends are any indication, progress in AI is primarily driven by systems - compute, data, infrastructure. The core algorithms we use today have remained largely unchanged from the ~90s. Not only that, but any algorithmic advances published in a paper somewhere can be almost immediately re-implemented and incorporated. Conversely, algorithmic advances alone are inert without the scale to also make them scary.

It seems to me that OpenAI today is burning cash and that the funding model cannot reach the scale to seriously compete with Google (an 800B company). If you can't seriously compete but continue to do research in open, you might in fact be making things worse and helping them out "for free", because any advances are fairly easy for them to copy and immediately incorporate, at scale.