Comments

The results of the listener survey were equivocal. Listener preferences varied widely, with Ryan (our existing voice) and Echo coming out tied and slightly on top, but not with statistical significance.

Given that, we plan to stick with Ryan for now. Two considerations that influence this call, independently of the survey result:

- There's a habituation effect, such that switching costs for existing hardcore listeners are significant.

- There's some evidence that more expressive voices are less comprehensible at high listening speeds. Ryan is less expressive than the other voices we tested.

We'll survey again—or perhaps just switch based on our judgement—when better models are released.

There is no meeting where an actual rational discussion of considerations and theories of change happens, everything really is people flying by the seat of their pants even at highest level. Talk of ethics usually just gets you excluded from the power talk.

This seems overstated. E.g. Musk and Altman both read Superintelligence, and they both met Bostrom at least once in 2015. Sam published reflective blog posts on AGI in 2015, and it's clear that the OpenAI founders had lengthy, reflective discussions from the YC Research days onwards.

That's probably not what Page meant. On consideration, he would probably have clarified […]

[…]

A little more careful conversation would've prevented this whole thing.

The passage you quote earlier suggests that they had multiple lengthy conversations:

Larry Page and I used to be close friends and I would stay at his house in Palo Alto and I would talk to him late into the night about AI safety […]

Quick discussions via email are not strong evidence of a lack of careful discussion and reflection in other contexts.

I made a change to my Twitter setup recently.

Initially, I discovered the "AI Twitter Recap" section of the AI News newsletter (example). It is good, but it doesn't actually include the tweet texts, and it isn't quite enough to make me feel fine about skipping my Twitter home screen.

So—I made an app that extracts all the tweet URLs that are mentioned in all of my favourite newsletters, and lists them in a feed. Then I blocked x.com/home (but not other x.com URLs, so I can still read and engage with particular threads) on weekdays.
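The extraction step described above could be sketched roughly as follows. This is a minimal illustration, not the actual app: the regular expression, the `extract_tweet_urls` function name, and the sample newsletter text are all assumptions made for the example.

```python
import re

# Matches links to individual tweets on twitter.com or x.com.
# The pattern is an illustrative assumption, not the app's real logic.
TWEET_URL = re.compile(
    r"https?://(?:www\.|mobile\.)?(?:twitter|x)\.com/(\w{1,15})/status/(\d+)"
)


def extract_tweet_urls(newsletter_texts):
    """Return de-duplicated, canonical tweet URLs in first-seen order."""
    seen_ids = set()
    urls = []
    for text in newsletter_texts:
        for user, tweet_id in TWEET_URL.findall(text):
            if tweet_id not in seen_ids:
                seen_ids.add(tweet_id)
                urls.append(f"https://x.com/{user}/status/{tweet_id}")
    return urls


# Hypothetical newsletter bodies, for illustration only.
sample = [
    "See https://twitter.com/example_user/status/1234567890?s=20 for details.",
    "Also https://x.com/example_user/status/1234567890 and https://x.com/other/status/42.",
]
feed = extract_tweet_urls(sample)
print(feed)
```

De-duplicating on the tweet ID rather than the full URL means the same tweet linked from two newsletters (or with different tracking parameters) only shows up once in the feed.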

This is just repackaging the curation work that is done by my favourite newsletters. But I'm enjoying having a single place to check, that feels more like the Twitter feed I want to have. It's helped me feel fine about blocking the normal Twitter home screen for a larger fraction of the week.

This setup has various obvious issues—in particular, it's still not sufficiently tailored to my interests. I could improve things by having an LLM classify search results from the Twitter API, but sadly the $100 / month plan only lets you read ~300 tweets / day. And then the next tier is $5000 / month...

1. My current system

I check a couple of sources most days, at random times during the afternoon or evening. I usually do this on my phone, during breaks or when I'm otherwise AFK. My phone and laptop are configured to block most of these sources during the morning (LeechBlock and AppBlock).

When I find something I want to engage with at length, I usually put it into my "Reading inbox" note in Obsidian, or into my weekly todo list if it's above the bar.

I check my reading inbox on evenings and weekends, and also during "open" blocks that I sometimes schedule as part of my work week.

I read about 1/5 of the items that get into my reading inbox, either on my laptop or iPad. I read and annotate using PDF Expert, take notes in Obsidian, and use Mochi for flashcards. My reading inbox—and all my articles, highlights and notes—are synced between my laptop and my iPad.

2. Most useful sources

(~Daily)

- AI News (usually just to the end of the "Twitter recap" section).

- Private Slack and Signal groups.

- Twitter (usually just the home screen, sometimes my lists).

- Marginal Revolution.

- LessWrong and EA Forum (via the 30+ karma podcast feeds; I rarely check the homepages).

(~Weekly)

- Newsletters: Zvi, CAIS.

- Podcasts: The Cognitive Revolution, AXRP, Machine Learning Street Talk, Dwarkesh.

3. Problems

I've not given the top of the funnel—the checking sources bit—much thought. In particular, I've never sat down for an afternoon to ask questions like "why, exactly, do I try to follow AI news?", "what are the main ways this is valuable (and disvaluable)?" and "how could I make it easy to do this better?". There's probably a bunch of low-hanging fruit here.

Twitter is... twitter. I currently check the "For you" home screen every day (via web browser, not the app). At least once a week I'm very glad that I checked Twitter—because I found something useful, that I plausibly wouldn't have found otherwise. But—I wish I had an easy way to see just the best AI stuff. In the past I tried to figure something out with Twitter lists and Tweetdeck (now "X Pro"), but I've not found something that sticks. So I spend most of my time with the "For you" screen, training the algorithm with "not interested" reports, an aggressive follow/unfollow/block policy, and liberal use of the "mute words" function. I'm sure I can do better...

My newsletter inbox is a mess. I filter newsletters into a separate folder, so that they don't distract me when I process my regular email. But I'm subscribed to way too many newsletters, many of which aren't focussed on AI, so when I do open the "Newsletters" folder, it's overwhelming. I don't reliably read the sources which I flagged above, even though I consider them fairly essential reading (and would prefer to read them to many of the things I do, in fact, read).

I addictively over-consume podcasts, at the cost of "shower time" (diffuse/daydream mode) or higher-quality rest.

I don't make the most of LLMs. I have various ideas for how LLMs could improve my information discovery and engagement, but on my current setup—especially on mobile—the affordances for using LLMs are poor.

I miss things that I'd really like to know about. I very rarely miss a "big story", but I'd guess I miss several things that I'd really like to know about each week, given my particular interests.

I find out about many things I don't need to know about.

I could go on...

Asya: is the above sufficient to allay the suspicion you described? If not, what kind of evidence are you looking for (that we might realistically expect to get)?

CNBC reports:

The memo, addressed to each former employee, said that at the time of the person’s departure from OpenAI, “you may have been informed that you were required to execute a general release agreement that included a non-disparagement provision in order to retain the Vested Units [of equity].”

“Regardless of whether you executed the Agreement, we write to notify you that OpenAI has not canceled, and will not cancel, any Vested Units,” stated the memo, which was viewed by CNBC.

The memo said OpenAI will also not enforce any other non-disparagement or non-solicitation contract items that the employee may have signed.

“As we shared with employees, we are making important updates to our departure process,” an OpenAI spokesperson told CNBC in a statement.

“We have not and never will take away vested equity, even when people didn’t sign the departure documents. We’ll remove nondisparagement clauses from our standard departure paperwork, and we’ll release former employees from existing nondisparagement obligations unless the nondisparagement provision was mutual,” said the statement, adding that former employees would be informed of this as well.

A handful of former employees have publicly confirmed that they received the email.

I'm not sure what to make of this omission.

OpenAI's March 2024 summary of the WilmerHale report included:

The firm conducted dozens of interviews with members of OpenAI’s prior Board, OpenAI executives, advisors to the prior Board, and other pertinent witnesses; reviewed more than 30,000 documents; and evaluated various corporate actions. Based on the record developed by WilmerHale and following the recommendation of the Special Committee, the Board expressed its full confidence in Mr. Sam Altman and Mr. Greg Brockman’s ongoing leadership of OpenAI.

[...]

WilmerHale found that the prior Board acted within its broad discretion to terminate Mr. Altman, but also found that his conduct did not mandate removal.

I'd guess that telling lies to the board would mandate removal. If that's right, then the summary suggests that they didn't find evidence of this.

It's also notable that Toner and McCauley have not provided public evidence of “outright lies” to the board. We also know that whatever evidence they shared in private during that critical weekend did not convince key stakeholders that Sam should go.

The WSJ reported:

Some board members swapped notes on their individual discussions with Altman. The group concluded that in one discussion with a board member, Altman left a misleading perception that another member thought Toner should leave, the people said.

I really wish they'd publish these notes.

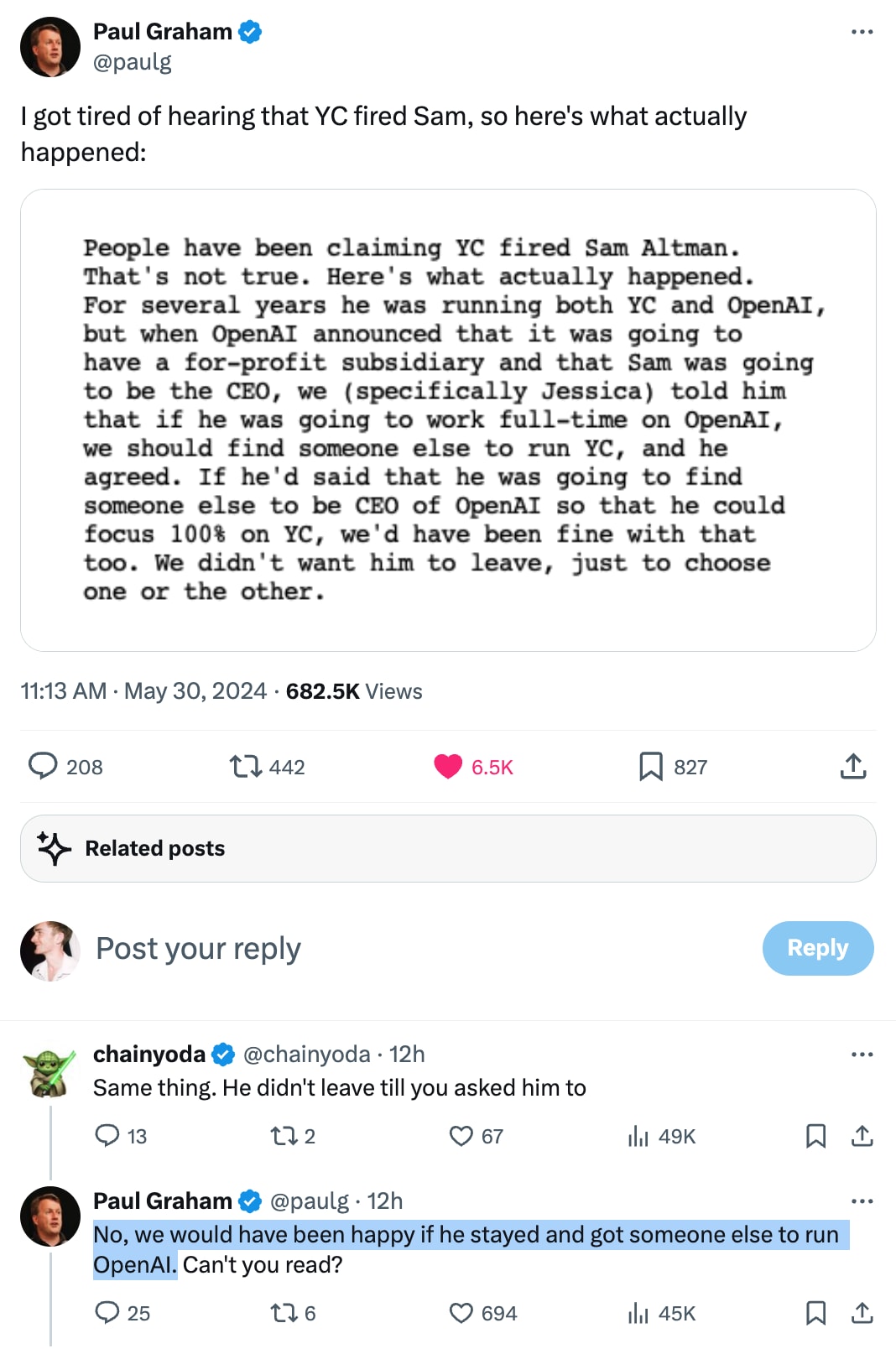

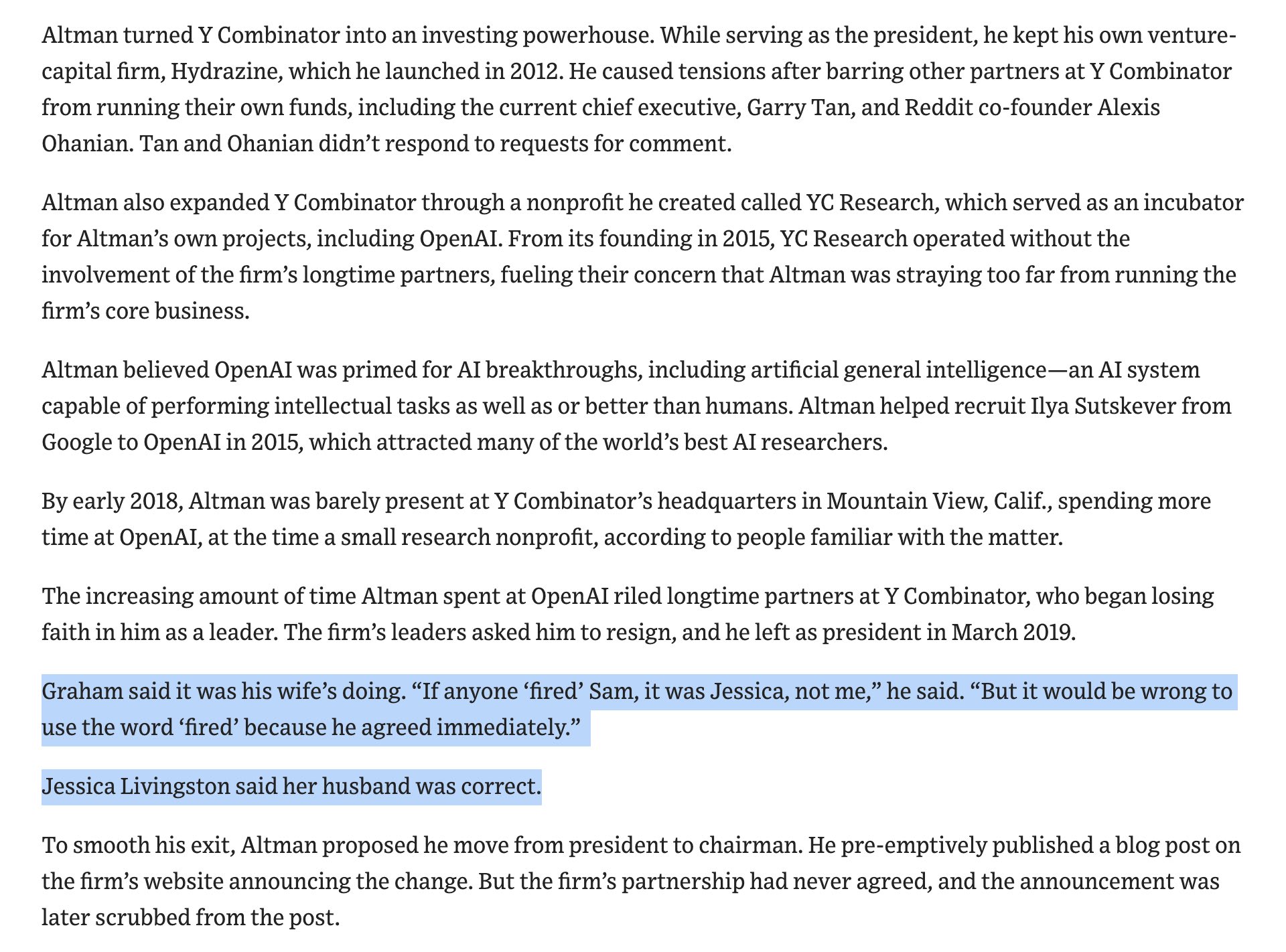

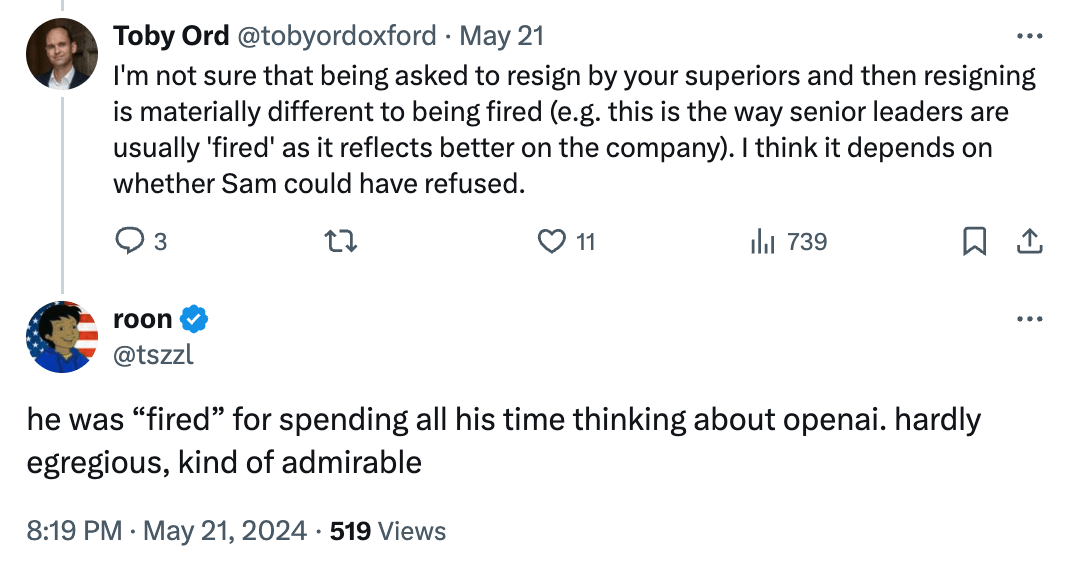

If we presume that Graham’s story is accurate, it still means that Altman took on two incompatible leadership positions, and only stepped down from one of them when asked to do so by someone who could fire him. That isn’t being fired. It also isn’t entirely not being fired.

According to the most friendly judge (e.g. GPT-4o) if it was made clear Altman would get fired from YC if he did not give up one of his CEO positions, then ‘YC fired Altman’ is a reasonable claim. I do think precision is important here, so I would prefer ‘forced to choose’ or perhaps ‘effectively fired.’ Yes, that is a double standard on precision, no I don’t care.

I think that Paul Graham’s remarks today—particularly the “we didn’t want him to leave” part—make it clear that Altman was not fired.

In December 2023, Paul Graham gave a similar account to the Wall St Journal and said “it would be wrong to use the word ‘fired’”.

Roon has a take.

Bret Taylor and Larry Summers (members of the current OpenAI board) have responded to Helen Toner and Tasha McCauley in The Economist.

The key passages:

Helen Toner and Tasha McCauley, who left the board of OpenAI after its decision to reverse course on replacing Sam Altman, the CEO, last November, have offered comments on the regulation of artificial intelligence (AI) and events at OpenAI in a By Invitation piece in The Economist.

We do not accept the claims made by Ms Toner and Ms McCauley regarding events at OpenAI. Upon being asked by the former board (including Ms Toner and Ms McCauley) to serve on the new board, the first step we took was to commission an external review of events leading up to Mr Altman’s forced resignation. We chaired a special committee set up by the board, and WilmerHale, a prestigious law firm, led the review. It conducted dozens of interviews with members of OpenAI's previous board (including Ms Toner and Ms McCauley), OpenAI executives, advisers to the previous board and other pertinent witnesses; reviewed more than 30,000 documents; and evaluated various corporate actions. Both Ms Toner and Ms McCauley provided ample input to the review, and this was carefully considered as we came to our judgments.

The review’s findings rejected the idea that any kind of AI safety concern necessitated Mr Altman’s replacement. In fact, WilmerHale found that “the prior board’s decision did not arise out of concerns regarding product safety or security, the pace of development, OpenAI's finances, or its statements to investors, customers, or business partners.”

Furthermore, in six months of nearly daily contact with the company we have found Mr Altman highly forthcoming on all relevant issues and consistently collegial with his management team. We regret that Ms Toner continues to revisit issues that were thoroughly examined by the WilmerHale-led review rather than moving forward.

Ms Toner has continued to make claims in the press. Although perhaps difficult to remember now, OpenAI released ChatGPT in November 2022 as a research project to learn more about how useful its models are in conversational settings. It was built on GPT-3.5, an existing AI model which had already been available for more than eight months at the time.

Flagging the most upvoted comment thread on EA Forum, with replies from Ozzie, which begins:

This post contains many claims that you interpret OpenAI to be making. However, unless I'm missing something, I don't see citations for any of the claims you attribute to them. Moreover, several of the claims feel like they could potentially be described as misinterpretations of what OpenAI is saying or merely poorly communicated ideas.

Nice. One thing: initially I couldn't figure out how to read this because I didn't see the key at the top. I think the key is a bit too easy to miss if you are zooming in to look at the image on mobile. Maybe make it more prominent?

Thanks for the heads up. Each of those code blocks is being treated separately, so the placeholder is repeated several times. We'll release a fix for this next week.

Usually the text inside codeblocks is not suitable for narration. This is a case where ideally we would narrate them. We'll have a think about ways to detect this.

I replaced it because it seemed like a less useful format.

- Azure TTS cost per million characters = $16

- Elevenlabs TTS cost per million characters = $180

1 million characters is roughly 200,000 words.

One hour of audio is roughly 9000 words.
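For a rough cost per hour of narration, the figures above can be combined as in the sketch below. The prices and conversion ratios are the ones quoted in this comment; the function and variable names are just for illustration.

```python
# Figures quoted above; treat them as rough estimates.
AZURE_USD_PER_MILLION_CHARS = 16
ELEVENLABS_USD_PER_MILLION_CHARS = 180
WORDS_PER_MILLION_CHARS = 200_000
WORDS_PER_HOUR = 9_000


def cost_per_hour(usd_per_million_chars):
    """Estimated TTS cost in USD for one hour of narrated audio."""
    millions_of_chars_per_hour = WORDS_PER_HOUR / WORDS_PER_MILLION_CHARS
    return usd_per_million_chars * millions_of_chars_per_hour


azure = cost_per_hour(AZURE_USD_PER_MILLION_CHARS)
elevenlabs = cost_per_hour(ELEVENLABS_USD_PER_MILLION_CHARS)

print(f"Azure:      ${azure:.2f} per hour")        # about $0.72
print(f"Elevenlabs: ${elevenlabs:.2f} per hour")   # about $8.10
print(f"Ratio:      {elevenlabs / azure:.2f}x")    # about 11.25x
```

On these numbers, one hour of audio is about 45,000 characters, so Elevenlabs comes out a little over 11x the cost of Azure per hour.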

Thanks! We're currently using Azure TTS. Our plan is to review every couple of months and update to use better voices when they become available on Azure or elsewhere. Elevenlabs is a good candidate but unfortunately they're ~10x more expensive per hour of narration than Azure ($10 vs $1).

Thanks! We do have feature (2)—we remember whatever playback speed you last set. If you're not seeing this, please let me know what browser you're using.

Yep, if the pilot goes well then I imagine we'll do all the >100 karma posts, or something like that.

We'll add narrations for all >100 karma posts on the EA Forum later this month.

Honestly this reminds me of "Death with Dignity" and other recent examples of friendly fire from Eliezer.

This post may have various effects. Two that come to mind:

(1) Positively influence a future AI.

(2) Damage the credibility of people who are concerned about AI safety; especially the community of people associated with LessWrong.

If the post attracts significant attention in the world outside of LessWrong, I expect that (2) will be the larger effect, so far as the expected value of the future goes.

It sounds like your story is similar to the one that Bernard Williams would tell.

Williams was in critical dialog with Peter Singer and Derek Parfit for much of his career.

This led to a book: Philosophy as a Humanistic Discipline.

If you're curious:

- Williams' talk on The Human Prejudice (audio)

- Adrian Moore on Williams on Ethics (audio)

- My notes on Bernard Williams.

This website is compiling links to datasets, dashboards, tools etc:

Edit 2020-03-08: I made a Google Sheet that makes it easy to view Johns Hopkins data for up to 5 locations of interest.

If you want to get raw data from the Johns Hopkins Github Repo into a Google Sheet, use these formulas:

=IMPORTDATA("https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_19-covid-Confirmed.csv")

=IMPORTDATA("https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_19-covid-Deaths.csv")

=IMPORTDATA("https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_19-covid-Recovered.csv")

With these formulas, the data in your sheet should update within a few hours of Johns Hopkins updating their data. If you want to force an update, bust the Google Sheets cache by sticking ?v=1 on the end of the URL.
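If you'd rather generate the formulas (with or without the cache-busting parameter) than edit them by hand, here's a small sketch. The `importdata_formula` helper and its `version` argument are made up for this example; the base URL and file names are the ones used in the formulas above.

```python
BASE_URL = (
    "https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/"
    "csse_covid_19_data/csse_covid_19_time_series/"
)
FILES = [
    "time_series_19-covid-Confirmed.csv",
    "time_series_19-covid-Deaths.csv",
    "time_series_19-covid-Recovered.csv",
]


def importdata_formula(filename, version=None):
    """Build a Google Sheets IMPORTDATA formula for one of the CSV files.

    Passing a new `version` appends ?v=<version> to the URL, which makes
    the URL look new to Google Sheets and so forces a fresh fetch.
    """
    url = BASE_URL + filename
    if version is not None:
        url += f"?v={version}"
    return f'=IMPORTDATA("{url}")'


for name in FILES:
    print(importdata_formula(name, version=1))
```

Change the `version` value each time you want to force another refresh.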