OpenAI: Helen Toner Speaks

post by Zvi · 2024-05-30T21:10:02.938Z · LW · GW · 8 comments


Helen Toner went on the TED AI podcast, giving us more color on what happened at OpenAI. These are important claims to get right.

I will start with my notes on the podcast, including the second part where she speaks about regulation in general. Then I will discuss some implications more broadly.

Notes on Helen Toner’s TED AI Show Podcast

This seems like it deserves the standard detailed podcast treatment. By default each note’s main body is description, any second-level notes are me.

- (0:00) Introduction. The host talks about OpenAI’s transition from non-profit research organization to de facto for-profit company. He highlights the transition from ‘open’ AI to closed as indicative of the problem, whereas I see this as the biggest thing they got right. He also notes that he was left with the (I would add largely deliberately created and amplified by enemy action) impression that Helen Toner was some kind of anti-tech crusader, whereas he now understands that this was about governance and misaligned incentives.

- (5:00) Interview begins and he dives right in and asks about the firing of Altman. She dives right in, explaining that OpenAI was a weird company with a weird structure, and a non-profit board supposed to keep the company on mission over profits.

- (5:20) Helen says for years Altman had made the board’s job difficult via withholding information, misrepresenting things happening at the company, and ‘in some cases outright lying to the board.’

- (5:45) Helen says she can’t share all the examples of lying or withholding information, but to give a sense: The board was not informed about ChatGPT in advance and learned about it on Twitter; Altman failed to inform the board that he owned the OpenAI startup fund despite claiming to be an independent board member; he gave false information about the company’s formal safety processes on multiple occasions; and, in the wake of her research paper, he started lying to other board members in order to push Toner off the board.

- I will say it again. If the accusation about Altman lying to the board in order to change the composition of the board is true, then in my view the board absolutely needed to fire Altman. Period. End of story. You have one job.

- As a contrasting view, the LLMs I consulted thought that firing the CEO should be considered, but it was plausible this could be dealt with via a reprimand combined with changes in company policy.

- I asked for clarification given the way it was worded in the podcast, and can confirm that Altman withheld information from the board regarding the startup fund and the launch of ChatGPT, but he did not lie about those.

- Repeatedly outright lying about safety practices seems like a very big deal?

- It sure sounds like Altman had a financial interest in OpenAI via the startup fund, which means he was not an independent board member, and that the company’s board was not majority independent despite OpenAI claiming that it was. That is… not good, even if the rest of the board knew.

- (7:25) Toner says that for any given incident Altman could give an explanation, but the cumulative weight meant they could not trust Altman. And they’d been considering firing Altman for over a month.

- If they were discussing firing Altman for at least a month, that raises questions about why they weren’t better prepared, or why they timed the firing so poorly given the tender offer.

- (8:00) Toner says that Altman was the board’s main conduit of information about the company. They had been trying to improve processes going into the fall, these issues had been long standing.

- (8:40) Then in October two executives went to the board and said they couldn’t trust Altman. They said that the atmosphere was toxic, using the term ‘psychological abuse,’ that Altman was the wrong person to lead the company to AGI, and that there was no expectation that Altman would change and no avenue for feedback, complete with documentation and screenshots (which were not then shared). Those executives have now tried to walk back their statements.

- (9:45) This is where it went off the rails. The board had spent weeks discussing these questions. But they thought if Altman got any inkling of what was happening Altman would go to war with the board, so the board couldn’t tell almost anyone outside of their legal team and could not do much in advance of the firing on November 17.

- I get the failure mode, but I still do not get it. There was still plenty of time to consult with the legal team and get their ducks in a row. They had been talking for weeks without a leak. They could have prepared clear statements. They had multiple executives complaining, who could have been asked for on the record statements. They had to anticipate that Altman and his allies would fight back after he was fired, at bare minimum he would attempt to recruit for his next venture.

- Instead, they went in with basically no explanation, no plan, and got killed.

- (10:20) Toner explains that the situation was portrayed as either Altman returns with a new fully controlled board and complete control, or OpenAI will be destroyed. Given those choices, the employees got behind Altman.

- (11:20) But also, she says, no one appreciates how scared people are to go against Altman. Altman has a long history of retaliation, including for criticism.

- It was a Basilisk situation. Everyone fears what will happen if you don’t back a vindictive unforgiving power seeker now, so one by one everyone falls in line, and then they have power.

- Let’s face it. They put the open letter in front of you. You know that it will be public who will and won’t sign. You see Ilya’s name on it, so you presume Altman is going to probably return, even if he doesn’t he still will remember and have a lot of money and power, and if not there is a good chance OpenAI falls apart. How do you dare not sign? That seems really tough.

- (12:00) She says this is not a new problem for Altman. She claims he was fired from YC and the management team asked the board to fire Altman twice at Loopt.

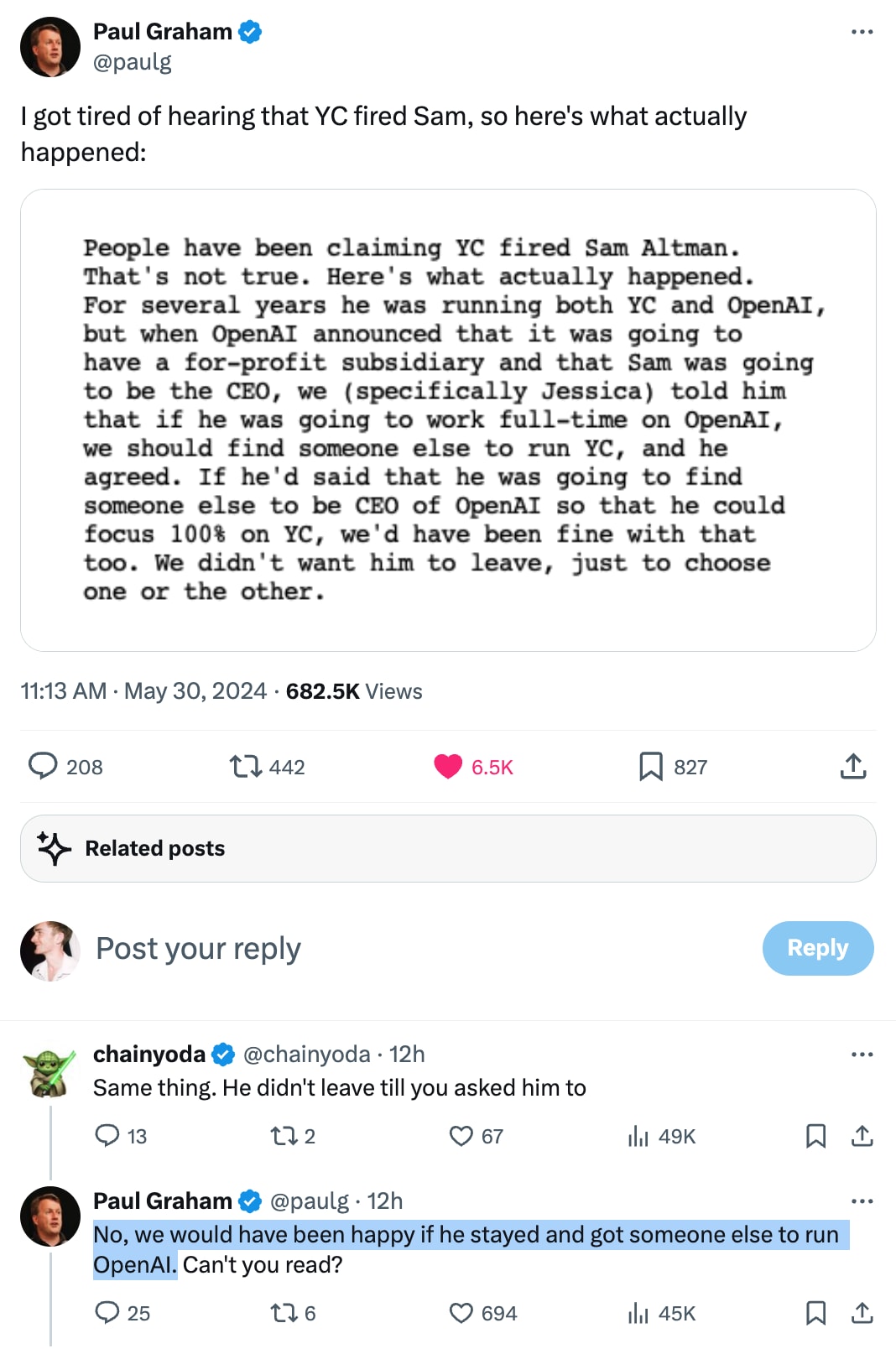

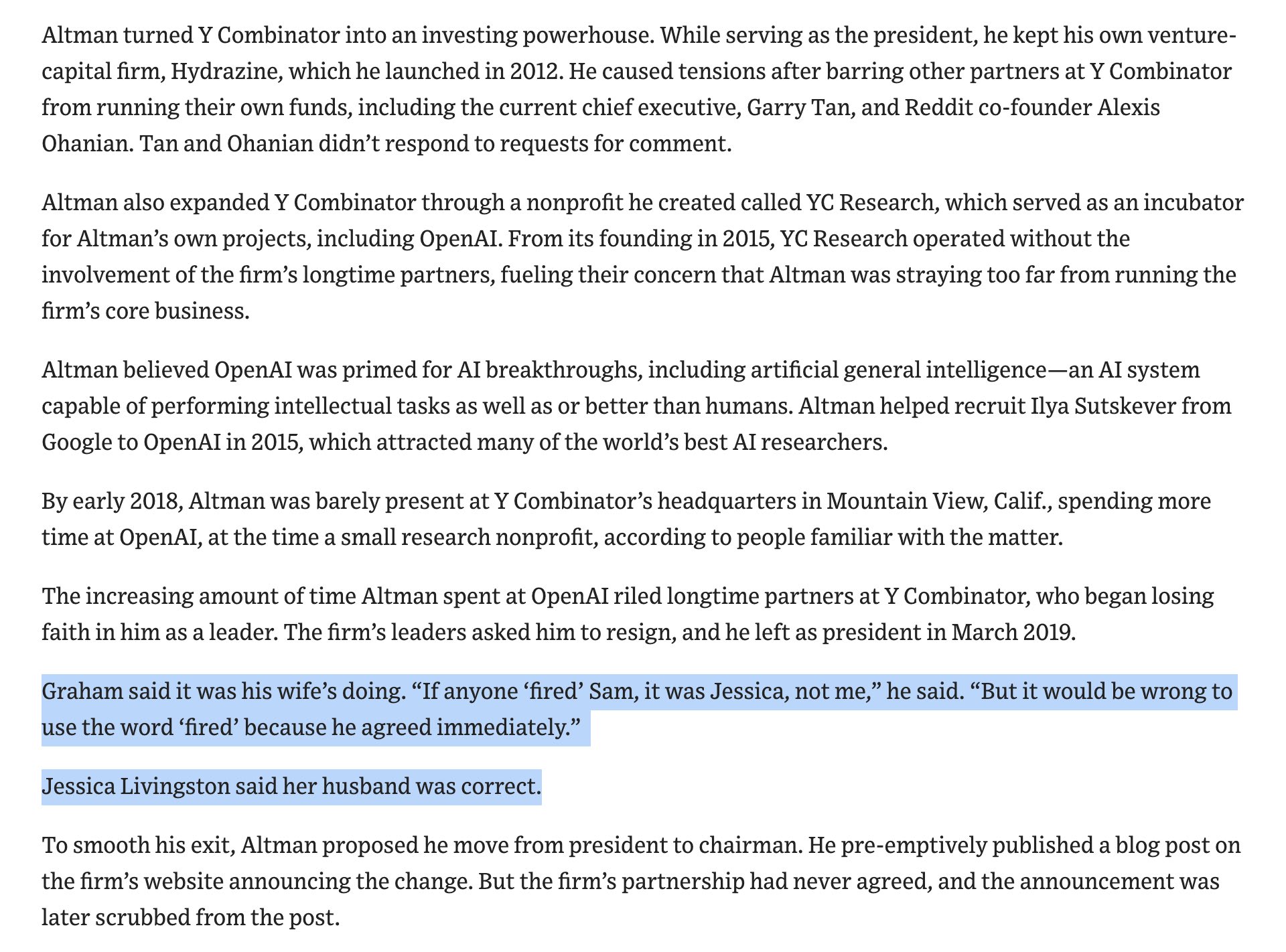

- Paul Graham has issued a statement that Altman was not fired from YC. According to Graham, who would know, Altman was asked to choose to be either CEO of OpenAI or YC, but that he could not hold both positions at once. Altman agreed and (quite obviously and correctly) chose OpenAI. This seems like a highly reasonable thing for Graham to do in that spot.

- Paul Graham and Sam Altman are presenting as being on good terms. That can cut in both directions in terms of the credibility of Graham’s story.

- If we presume that Graham’s story is accurate, it still means that Altman took on two incompatible leadership positions, and only stepped down from one of them when asked to do so by someone who could fire him. That isn’t being fired. It also isn’t entirely not being fired.

- According to the most friendly judge (e.g. GPT-4o) if it was made clear Altman would get fired from YC if he did not give up one of his CEO positions, then ‘YC fired Altman’ is a reasonable claim. I do think precision is important here, so I would prefer ‘forced to choose’ or perhaps ‘effectively fired.’ Yes, that is a double standard on precision, no I don’t care.

- (12:50) Then they pivot to other questions. That most certainly would not have been my move if I was doing this interview, even if I had a strict time budget. There are so many additional questions.

- (13:10) Regulations time, then. What are we worried about in concrete terms? Toner starts with the basics like credit approvals, housing and criminal justice decisions. Next up is military use, an obvious concern. Looking forward, if capabilities improve, she cites enhancing hacking capabilities as an example of a potential danger, while noting that not everything needs regulation: if Spotify wants to use AI for your playlist then that’s fine.

- Choosing examples is always tricky. Cyber can sometimes be a very helpful example. At other times, it can trigger (often valid) particular objections.

- (15:00) Surveillance and processing of audio and video? He cites MSG, which famously uses facial recognition to exclude anyone with a pending lawsuit against their parent company. Toner notes this (I would say ‘for now’) is a difference in degree, not kind, but it still requires reassessing our policies.

- Facial recognition technology gets a strangely hostile rap compared to facial recognition in humans. Witness identifications are super unreliable. Yes, people being in jail purely on incorrect facial recognition is terrible, but how much worse is it than the vastly more common being in jail because of an accidentally mistaken witness ID? Versus an intentional or coached one?

- The real issue is radical reductions in price plus increases in accuracy and speed open up new use cases and defaults that have some big issues.

- (18:15) What happens when a business can track tons of things, like productivity and actions of workers and time spent at tables? Host asks, how is this legal? Well, there are no Federal privacy laws for private actors, in contrast to many other countries.

- I have not seen a principled explanation for where to draw the line on what information you should and should not be allowed to track, or a good practical proposal either. Certainly the EU solutions are not great. We don’t want an ‘everything not forbidden is compulsory’ situation, and most people very clearly do not value their privacy in many senses.

- (19:50) Toner suggests that selling the data to others might be a key distinction. It is one thing for the coffee shop to know your patterns, another to share it with essentially every corporation.

- This seems promising as an intuition. I don’t mind local information sharing but worry more about universal information sharing. Seems tricky to codify, but not obviously impossible.

- (20:15) Phone scams, via AI to scrub social media and duplicate voices. What’s on the horizon? Toner says video, and the standard reminder to talk to your parents and not use voice as a password and so on, says we can likely adapt. I like the reminder that we used to have full listings of everyone’s address and phone number and it was fine.

- It is not so obvious to me that having a universal directory would not have been fine in 2022 before ChatGPT, or even that it is obviously terrible now. My guess is you could fix it with an opt-out for special cases (like abuse victims) combined with a small refundable tax on phone calls and emails. So many famous people have de facto public contact information and it’s fine. Some of them like Tyler Cowen actually answer all their emails. I don’t always answer, but I do always look.

- (22:30) Can regulations and laws protect us? Toner says yes, of course. In many cases there are already rules, you only need to ensure the resources are available for enforcement.

- (24:00) Is there an example of good regulation? Toner notes the issue on AI regulation is all the uncertainty, and the best policies are about shedding light, such as the executive order’s disclosure requirements on advanced systems. She notes we don’t even have good evaluation methods yet, and it is good to work on such abilities.

- (25:50) What makes regulating AI hard? Toner says three things. AI is a lot of different things, AI is a moving target, no one can agree on where AI is going.

- Those are definitely big issues. I see others as well, although you could file a lot of those under that third objection. Also all the industry lobbying can’t be helping. The hyperbolic outright lying campaigns could go either way.

- (27:00) How do you get top AI labs to ‘play nice’ and give access? How do you prevent them from doing regulatory capture? No great answers here other than you have to force them.

- (29:15) Standard ‘cat out of bag’ question regarding open source. Toner points out that Hugging Face will take down problematic models, you can at least reduce distribution. Toner pivots to detection for AI-generated content.

- This of course won’t stop determined actors, and won’t matter at the limit.

- For now, yes, defense in depth can do a lot of work.

- I notice she mostly dodged the most important implications.

- (31:30) What are the utopian and dystopian scenarios? For dystopia Toner says ‘so many possibilities,’ but then paints a dystopia that is very similar to our own, basically one where AIs make a lot of decisions and those decisions can’t be questioned. She mentions existential risk, but then somehow quotes the famous Kamala Harris line about how losing health care could be ‘existential for that person.’ And says there are plenty of things to be worried about already, that are happening. Mentions the ‘Wall-e’ future from the ship.

- Seriously, what the hell?

- Yes, there are plenty of bad things already happening, and they include lots of serious problems.

- But it seems very wrong to focus on the things already happening or that are locked into happening.

- However I do think this loss of control scenario, where it happens gradually and with our consent but ends with a worthless world, is certainly one scenario that could happen, in at least some form. I notice we do not even have a plan for how to avoid this scenario.

- Even without existential risk this seems profoundly unimaginative. I think this is deliberate, she is trying to stay as seemingly grounded as possible, and I think she takes this too far.

- (34:40) Moving on to utopia. She essentially says ‘solve our current problems.’

- But that’s not what makes a good utopia. We need a better vision.

Things That Could Have Been Brought To Our Attention Previously

A particular note from Helen Toner’s podcast: The OpenAI board learned about the release of ChatGPT from Twitter. They were not informed in advance.

This was nowhere near as crazy as it now sounds. The launch was relatively quiet and no one saw the reaction coming. I do not think that, on its own, this mistake would be egregious given the low expectations. You still should inform your board of new product launches, even if they are ‘research previews,’ but corners get cut.

As an isolated incident of not informing the board, I would be willing to say this is a serious process failure but ultimately not that big a deal. But this is part of a years-long (by Toner’s account) pattern of keeping the board in the dark and often outright lying to it.

Altman’s continual ‘saying that which was not’ and also ‘failure to say that which was and was also relevant’ included safety issues along with everything else.

It is the pattern that matters, and that is hard to convey to outsiders. As she says in the podcast, any one incident can be explained away, but a consistent pattern cannot. Any one person’s sense of the situation can be written off. A consistent pattern of it, say by two executives plus all the board members that aren’t either Altman or his right hand man Brockman, should be a lot harder to write off. Alas, statements with substance could not be given.

Only now do we understand the non-disparagement and non-disclosure agreements and other tactics used to silence critics, along with other threats and leverage. Indeed, it damn well sure sounds like Toner is holding back a lot of the story.

Thus, one way or another, this all falls under ‘things that could have been brought to our attention yesterday’ on so many levels.

Alas, it is too late now. The new board clearly wants business as usual.

Bret Taylor Responds

The only contradiction of Toner’s claims, so far, has been Paul Graham’s statement that Sam Altman was not fired from YC. Assuming we believe Paul’s story, which I mostly do, that puts whether Altman was effectively fired in a gray area.

Bret Taylor, the current OpenAI board chief, took a different approach.

In response to Toner’s explanations, Taylor did not dispute any of the claims, or the claims in general. Instead he made the case that Altman should still be CEO of OpenAI, and that Toner talking was bad for business so she should cut that out.

Notice the Exact Words here.

Bret Taylor (OpenAI Board Chief): We are disappointed that Ms. Toner continues to revisit these issues.

…

An independent review of Altman’s firing concluded that the prior board’s decision was not based on concerns regarding product safety or security, the pace of development, OpenAI’s finances, or its statements to investors, customers, or business partners.

Bloomberg: Taylor also said that “over 95% of employees” asked for Altman’s reinstatement, and that the company remains focused on its “mission to ensure AGI benefits all of humanity.”

So yes. Those are all true statements, and very much things the Board Chief should say if he has decided he does not want the trouble of firing Altman as CEO.

With one possible exception, none of it in any way contradicts anything said by Toner.

Indeed, this looks awfully close to a corroboration.

Notice that Toner did not make any claims regarding product safety or security, the pace of developments, OpenAI’s finances, or any statements to investors, customers or business partners not related to OpenAI having an independent board. And I am happy to believe that those potentially false statements about the board’s independence were not a consideration in the firing of Altman.

Whether or not the company is focused on its ‘mission to ensure AGI benefits all of humanity’ is an open question where I think any reasonable outsider would be highly skeptical at this point given everything we now know, and would treat that as an empty corporate slogan.

I believe that the independent report’s conclusion is technically correct, the best kind of correct. If we are to draw any further conclusion than the exact words? Well, let’s see the report, then.

None of that goes to whether it was wise to respond by firing Altman, or whether the board would have been wise to do so if they had executed better.

How Much Does This Matter?

Is the new information damning for Sam Altman? Opinions vary.

Neel Nanda: This is absolutely damning of Sam Altman. It’s great to finally start to hear the board’s side of the story, who recent events have more than vindicated.

Roon: How is it damning?

The specific claim that the board was not informed of ChatGPT’s launch does not seem much more damaging, on the margin, than the things we already know. As I have said before, ‘lying to the board about important things’ seems to me the canonical offense that forces the board to consider firing the CEO, and in my book lying in an attempt to control the board is the one that forces you to outright fire the CEO, but we already put that part together.

The additional color does help crystalize and illustrate the situation. It clarifies the claims. The problem is that when there is the sum of a lot of bad incidents, any one of which could be excused as some combination of sloppy or a coincidence or not so bad or not sufficiently proven or similar, there is the tendency to only be able to focus on the worst one thing, or even to evaluate based on the least bad of all the listed things.

We got explicit confirmation that Altman lied to the board in an attempt to remove Toner from the board. To me, this remains by far the worst offense, on top of other details. We also got the news about Altman hiding his ownership of the AI startup fund. That seems like a potentially huge deal to hide from the board.

Why, people then ask, are you also harping on what is only like the 9th and 11th worst things we have heard about? Why do you ‘keep revisiting’ such issues? Why can’t you understand that you fought power, power won, and now you don’t have any?

Because the idea of erasing our memories, of saying that if you get away with it then it didn’t count, is one of the key ways to excuse such patterns of awful behavior.

If You Come at the King

OpenAI’s Joshua Achiam offered a reasonable take, saying that the board was well meaning and does not deserve to be ‘hated or ostracized,’ but they massively screwed up. Achiam thinks they made the wrong choice firing Altman, the issues were not sufficiently severe, but that this was not obvious, and the decision not so unreasonable.

His other claim, however, is that even if firing had been the right choice, they then had a duty, if they went through with it, to provide a clear and convincing explanation to all the stakeholders, not only the employees.

Essentially everyone agrees that the board needed to provide a real explanation. They also agree that the board did not do so, and that this doomed the attempt to fire Altman without destroying the company, whether or not it had a shot anyway. If your approach will miss, it does not matter what he has done, you do not come at the king.

And that seems right.

For a vindictive king who will use the attempt to consolidate power? Doubly so.

The wrinkle remains why the board did not provide a better explanation. Why they did not get written statements from the two other executives, and issue additional statements themselves, if only internally or to other executives and key stakeholders. We now know that they considered this step for weeks, and on some level for years. I get that they feared Altman fighting back, but even given that this was clearly a massive strategic blunder. What gives?

It must be assumed that part of that answer is still hidden.

So That is That

Perhaps we will learn more in the future. There is still one big mystery left to solve. But more and more, the story is confirmed, and the story makes perfect sense.

Altman systematically withheld information from and on many occasions lied to the board. This included lying in an attempt to remove Toner from the board so Altman could appoint new members and regain control. The board quite reasonably could not trust Altman, and had tried for years to institute new procedures without success. Then they got additional information from other executives that things were worse than they knew.

Left with no other options, the board fired Altman. But they botched the firing, and now Altman is back and has de facto board control to run the company as a for profit startup, whether or not he has a full rubber stamp. And the superalignment team has been denied its promised resources and largely driven out of the company, and we have additional highly troubling revelations on other fronts.

The situation is what it is. The future is still coming. Act accordingly.

8 comments

Comments sorted by top scores.

comment by PeterH · 2024-05-30T22:04:52.258Z · LW(p) · GW(p)

Bret Taylor and Larry Summers (members of the current OpenAI board) have responded to Helen Toner and Tasha McCauley in The Economist.

The key passages:

Helen Toner and Tasha McCauley, who left the board of OpenAI after its decision to reverse course on replacing Sam Altman, the CEO, last November, have offered comments on the regulation of artificial intelligence (AI) and events at OpenAI in a By Invitation piece in The Economist.

We do not accept the claims made by Ms Toner and Ms McCauley regarding events at OpenAI. Upon being asked by the former board (including Ms Toner and Ms McCauley) to serve on the new board, the first step we took was to commission an external review of events leading up to Mr Altman’s forced resignation. We chaired a special committee set up by the board, and WilmerHale, a prestigious law firm, led the review. It conducted dozens of interviews with members of OpenAI's previous board (including Ms Toner and Ms McCauley), OpenAI executives, advisers to the previous board and other pertinent witnesses; reviewed more than 30,000 documents; and evaluated various corporate actions. Both Ms Toner and Ms McCauley provided ample input to the review, and this was carefully considered as we came to our judgments.

The review’s findings rejected the idea that any kind of AI safety concern necessitated Mr Altman’s replacement. In fact, WilmerHale found that “the prior board’s decision did not arise out of concerns regarding product safety or security, the pace of development, OpenAI's finances, or its statements to investors, customers, or business partners.”

Furthermore, in six months of nearly daily contact with the company we have found Mr Altman highly forthcoming on all relevant issues and consistently collegial with his management team. We regret that Ms Toner continues to revisit issues that were thoroughly examined by the WilmerHale-led review rather than moving forward.

Ms Toner has continued to make claims in the press. Although perhaps difficult to remember now, OpenAI released ChatGPT in November 2022 as a research project to learn more about how useful its models are in conversational settings. It was built on GPT-3.5, an existing AI model which had already been available for more than eight months at the time.

↑ comment by MichaelDickens · 2024-05-30T22:58:20.077Z · LW(p) · GW(p)

we have found Mr Altman highly forthcoming

He was caught lying about the non-disparagement agreements, but I guess lying to the public is fine as long as you don't lie to the board?

Taylor's and Summers' comments here are pretty disappointing—it seems that they have no issue with, and maybe even endorse, Sam's now-publicly-verified bad behavior.

↑ comment by Lukas_Gloor · 2024-05-31T00:34:44.316Z · LW(p) · GW(p)

we have found Mr Altman highly forthcoming

That's exactly the line that made my heart sink.

I find it a weird thing to choose to say/emphasize.

The issue under discussion isn't whether Altman hid things from the new board; it's whether he hid things from the old board a long while ago.

Of course he's going to seem forthcoming towards the new board at first. So, the new board having the impression that he was forthcoming towards them? This isn't information that helps us much in assessing whether to side with Altman vs the old board. That makes me think: why report on it? It would be a more relevant update if Taylor or Summers were willing to stick their necks out a little further and say something stronger and more direct, something more in the direction of (hypothetically), "In all our by-now extensive interactions with Altman, we got the sense that he's the sort of person you can trust; in fact, he had surprisingly circumspect and credible things to say about what happened, and he seems self-aware about things that he could've done better (and those things seem comparatively small or at least very understandable)." If they had added something like that, it would have been more interesting and surprising. (At least for those who are currently skeptical or outright negative towards Altman; but also "surprising" in terms of "nice, the new board is really invested in forming their own views here!").

By contrast, this combination of basically defending Altman (and implying pretty negative things about Toner and McCauley's objectivity and their judgment on things that they deem fair to tell the media), but doing so without sticking their necks out, makes me worried that the board is less invested in outcomes and more invested in playing their role. By "not sticking their necks out," I mean the outsourcing of judgment-forming to the independent investigation and the mentioning of clearly unsurprising and not-very-relevant things like whether Altman has been forthcoming to them, so far. By "less invested in outcomes and more invested in playing their role," I mean the possibility that the new board maybe doesn't consider it important to form opinions at the object level (on Altman's character and his suitability for OpenAI's mission, and generally them having a burning desire to make the best CEO-related decisions). Instead, the alternative mode they could be in would be having in mind a specific "role" that board members play, which includes things like, e.g., "check whether Altman ever gets caught doing something outrageous," "check if he passes independent legal reviews," or "check if Altman's answers seem reassuring when we occasionally ask him critical questions." And then, that's it, job done. If that's the case, I think that'd be super unfortunate. The more important the org, the more it matters to have an engaged/invested board that considers itself ultimately responsible for CEO-related outcomes ("will history look back favorably on their choices regarding the CEO").

To sum up, I'd have much preferred it if their comments had either included them sticking their neck out a little more, or if I had gotten from them more of a sense of still withholding judgment. I think the latter would have been possible even in combination with still reminding the public that Altman (e.g.,) passed that independent investigation or that some of the old board members' claims against him seem thinly supported, etc. (If that's their impression, fair enough.) For instance, it's perfectly possible to say something like, "In our duty as board members, we haven't noticed anything unusual or worrisome, but we'll continue to keep our eyes open." That's admittedly pretty similar, in substance, to what they actually said. Still, it would read as a lot more reassuring to me because of its different emphasis. My alternative phrasing would help convey that (1) they don't naively believe that Altman – in worlds where he is dodgy – would have likely already given things away easily in interactions towards them, and (2) that they consider themselves responsible for the outcome (and not just following of the common procedures) of whether OpenAI will be led well and in line with its mission.

(Maybe they do in fact have these views, 1 and 2, but didn't do a good job here at reassuring me of that.)

↑ comment by Garrett Baker (D0TheMath) · 2024-05-31T20:44:27.099Z · LW(p) · GW(p)

The review’s findings rejected the idea that any kind of AI safety concern necessitated Mr Altman’s replacement. In fact, WilmerHale found that “the prior board’s decision did not arise out of concerns regarding product safety or security, the pace of development, OpenAI's finances, or its statements to investors, customers, or business partners.”

Note that Toner did not make claims regarding product safety, security, the pace of development, OAI's finances, or statements to investors (the board is not investors), customers, or business partners (the board are not business partners). She said he was not honest to the board.

↑ comment by PeterH · 2024-06-01T02:05:50.905Z · LW(p) · GW(p)

I'm not sure what to make of this omission.

OpenAI's March 2024 summary of the WilmerHale report included:

The firm conducted dozens of interviews with members of OpenAI’s prior Board, OpenAI executives, advisors to the prior Board, and other pertinent witnesses; reviewed more than 30,000 documents; and evaluated various corporate actions. Based on the record developed by WilmerHale and following the recommendation of the Special Committee, the Board expressed its full confidence in Mr. Sam Altman and Mr. Greg Brockman’s ongoing leadership of OpenAI.

[...]

WilmerHale found that the prior Board acted within its broad discretion to terminate Mr. Altman, but also found that his conduct did not mandate removal.

I'd guess that telling lies to the board would mandate removal. If that's right, then the summary suggests that they didn't find evidence of this.

It's also notable that Toner and McCauley have not provided public evidence of “outright lies” to the board. We also know that whatever evidence they shared in private during that critical weekend did not convince key stakeholders that Sam should go.

The WSJ reported:

Some board members swapped notes on their individual discussions with Altman. The group concluded that in one discussion with a board member, Altman left a misleading perception that another member thought Toner should leave, the people said.

I really wish they'd publish these notes.

comment by PeterH · 2024-05-30T22:23:11.598Z · LW(p) · GW(p)

If we presume that Graham’s story is accurate, it still means that Altman took on two incompatible leadership positions, and only stepped down from one of them when asked to do so by someone who could fire him. That isn’t being fired. It also isn’t entirely not being fired.

According to the most friendly judge (e.g. GPT-4o) if it was made clear Altman would get fired from YC if he did not give up one of his CEO positions, then ‘YC fired Altman’ is a reasonable claim. I do think precision is important here, so I would prefer ‘forced to choose’ or perhaps ‘effectively fired.’ Yes, that is a double standard on precision, no I don’t care.

I think that Paul Graham’s remarks today—particularly the “we didn’t want him to leave” part—make it clear that Altman was not fired.

In December 2023, Paul Graham gave a similar account to the Wall St Journal and said “it would be wrong to use the word ‘fired’”.

Roon has a take.

↑ comment by Dana · 2024-05-30T23:34:54.825Z · LW(p) · GW(p)

These are the remarks Zvi was referring to in the post. Also worth noting Graham's consistent choice of the word 'agreed' rather than 'chose', and Altman's failed attempt to transition to chairman/advisor to YC. It sure doesn't sound like Altman was the one making the decisions here.

↑ comment by gwern · 2024-05-31T01:55:24.901Z · LW(p) · GW(p)

Altman's failed attempt to transition to chairman/advisor to YC

Of some relevance in this context is that Altman has apparently for years been claiming to be YC Chairman (including in filings to the SEC): https://www.bizjournals.com/sanfrancisco/inno/stories/news/2024/04/15/sam-altman-y-combinator-board-chair.html