Posts

Comments

I thought this was an excellent post. In particular, I'd been trying to think about taste as "a good intuition for what things will and won’t work well to try," and I thought your framing through the whole piece was quite crisp.

Thanks for writing this!

Damn good post. Pretty fucking funny, too.

I really enjoy this post, for two reasons: as a slice out of the overall aesthetic of the Bay Area Rationalists, and as an honest-to-goodness reference for a number of things related to good interior decorating.

I'd enjoy seeing other slices of anthropology on the Rationalist scene, e.g. about common verbal tics ("this seems true" vs "that seems true," or "that's right," or "it wouldn't be crazy"), or about some element of history.

"The ants and the grasshopper" is a beautifully written short fiction piece that plays around with the structure and ending of the classic Aesop fable: the ants who prepare for winter, and the grasshopper who does not.

I think there's often a gap between how one thinks through the implications that a certain decision process would have on various difficult situations in the abstract, and how one actually feels while following through (or witnessing others follow through). It's pretty easy to point at that gap's existence, but pretty hard to reason well about that gap without being able to tangibly feel it. Fiction can do exactly that, but it's hard to find a fiction piece that executes on that goal well without resorting to heavy-handed clichés. For me, "The ants and the grasshopper" succeeded.

MCE is a clear, incisive essay. Much of it clarified thoughts I already had, but framed them in a more coherent way; the rest straightforwardly added to my process of diagnosing interpersonal harm. I now go about making sense of most interpersonal issues through its framework.

Unlike Ricki/Avital, I haven't gotten much use out of its terminology with others, though I often reach internal conclusions by explicitly using its terminology, then communicate those conclusions in more typical language. I wouldn't be surprised if the specific terminology were more useful if my interpersonal issues were with people who were already strongly bought into the MCE framework; this isn't true for me, and I'd guess it also isn't true for the vast majority of readers.

Overall, MCE is a clear post that explores a grounded, useful framework in-depth.

I'd be interested to see other posts written in a similar vein exploring how MCE might be useful for intrapersonal conflicts (e.g. trade between versions of yourself over time, or between different internal motivations).

ohh, this is great — agreed on all fronts. thanks shri!

The numbers I have in my Anki deck, selected for how likely I am to find a practical use for them:

- total # hours in a year — 8760

- ${{c1::200}}k/year = ${{c2::100}}/hour

- ${{c1::100}}k/year = ${{c2::50}}/hour

- # of hours in a working year — 2,000 hours

- miles per time zone — ~1,000 miles

- california top-to-bottom — 900 miles

- US coast-to-coast — 3,000 miles

- equator circumference — (before you show the answer, i always find it fun that i can quickly get an approximation by multiplying the # of time zones by the # of miles per time zone!) :::25,000::: miles

- US GDP in 2022 — $25 trillion

- google's profit in 2022 — $60 billion

- total US political spending per election — ~$5 billion

- median US salary in 2022 — $75k

- LMIC's GDP per capita in 2022 — $2.5k

- world population in 2022 — 8 billion

- NYC population in 2022 — 8 million

- US population in 2022 — 330 million
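Several of these cards are derived from others, so a few lines of arithmetic reproduce them. A minimal Python sketch, assuming a 2,000-hour working year (roughly 40 hours/week for 50 weeks) and 24 nominal time zones of ~1,000 miles each; both are round-number assumptions, not exact figures:

```python
HOURS_PER_YEAR = 365 * 24        # ignoring leap years: 8,760
WORKING_HOURS_PER_YEAR = 2_000   # assumption: ~40 hours/week for ~50 weeks
TIME_ZONES = 24                  # assumption: 24 nominal one-hour zones around the equator
MILES_PER_TIME_ZONE = 1_000      # the deck's rough figure


def hourly_rate(annual_salary: float) -> float:
    """Annual salary -> hourly rate over a 2,000-hour working year."""
    return annual_salary / WORKING_HOURS_PER_YEAR


def equator_estimate_miles() -> int:
    """Fermi estimate of the equator's circumference: time zones x miles per zone."""
    return TIME_ZONES * MILES_PER_TIME_ZONE


print(HOURS_PER_YEAR)             # 8760
print(hourly_rate(200_000))       # 100.0
print(hourly_rate(100_000))       # 50.0
print(equator_estimate_miles())   # 24000
```

The time-zone estimate lands at 24,000 miles, within a few percent of the 25,000 on the card.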

memento — shows a person struggling to figure out the ground truth; figuring out to whom he can defer (including different versions of himself); figuring out what his real goals are; etc.

hmm, that's fair. i guess there's another, finer distinction here between "active recall" and chaining the mental motion of recalling something to some triggering mental motion. i usually think of "active recall" as the process of:

- mental-state-1

- ~stuff going on in your brain~

- mental-state-2

over time, you build up an association between mental-state-1 and mental-state-2. doing this with active recall looks like being shown something that automatically triggers mental-state-1, then being forced to actively recall mental-state-2.

with names/faces, if you were to e.g. look at their face, then try to remember their name, i'd say that probably counts as active recall (where mental-state-1 is "person's face," mental-state-2 is "person's name," and ~stuff going on in your brain~ is the mental motion of going from their face to their name).

thanks for pointing that out!

Active Recall and Spaced Repetition are Different Things

EDIT: I've slightly edited this and published it as a full post.

Epistemic status: splitting hairs.

There’s been a lot of recent work on memory. This is great, but popular communication of that progress consistently mixes up active recall and spaced repetition. That bugged me, hence this piece.

If you already have a good understanding of active recall and spaced repetition, skim sections I and II, then skip to section III.

Note: this piece doesn’t meticulously cite sources, and will probably be slightly out of date in a few years. I link some great posts that have far more technical substance at the end, if you’re interested in learning more & actually reading the literature.

I. Active Recall

When you want to learn some new topic, or review something you’ve previously learned, you have different strategies at your disposal. Some examples:

- Watch a YouTube video on the topic.

- Do practice problems.

- Review notes you’d previously taken.

- Try to explain the topic to a friend.

- etc

Some of these boil down to “stuff the information into your head” (YouTube video, reviewing notes) and others boil down to “do stuff that requires you to use/remember the information” (doing practice problems, explaining to a friend). Broadly speaking, the second category — doing stuff that requires you to actively recall the information — is way, way more effective.

That’s called “active recall.”

II. (Efficiently) Spaced Repetition

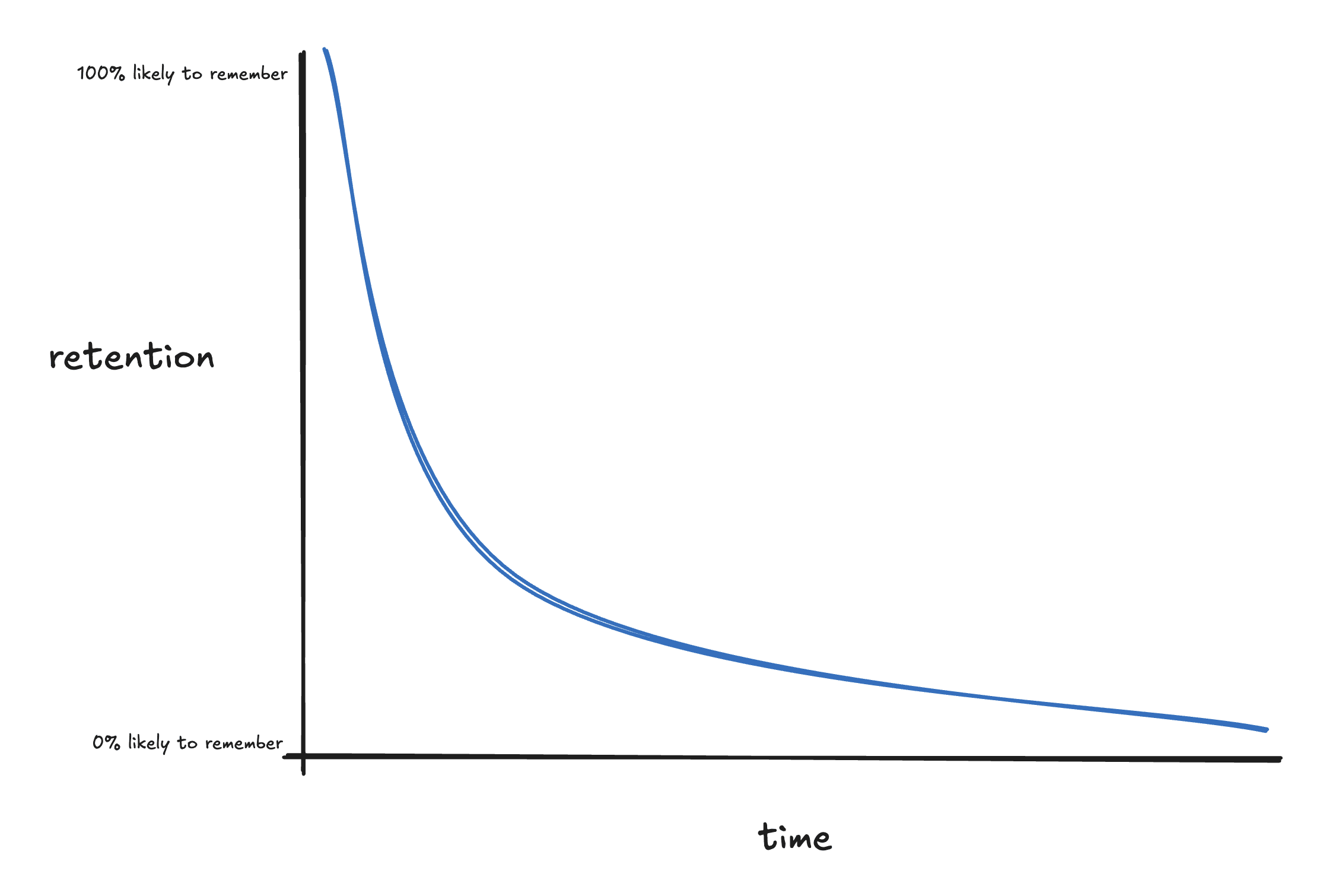

After you learn something, you’re likely to forget it pretty quickly:

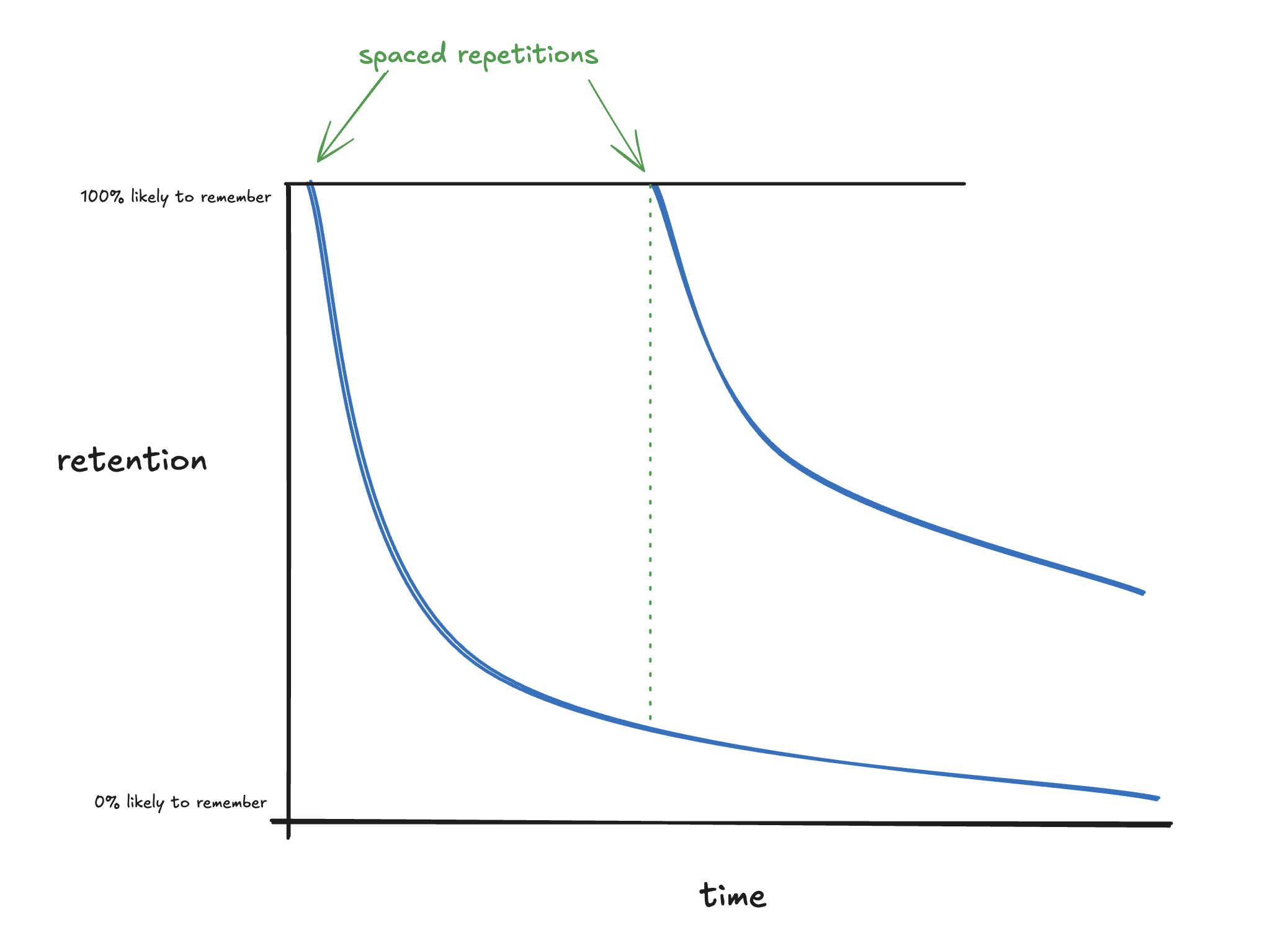

Fortunately, reviewing the thing you learned pushes you back up to 100% retention, and this happens each time you “repeat” a review:

That’s a lot better!

…but that’s also a lot of work. You have to review the thing you learned in intervals, which takes time/effort. So how can you do the fewest repetitions while keeping your retention as high as possible? In other words, what should be the size of the intervals? Should you space them out every day? Every week? Should you change the size of the spaces between repetitions? How?

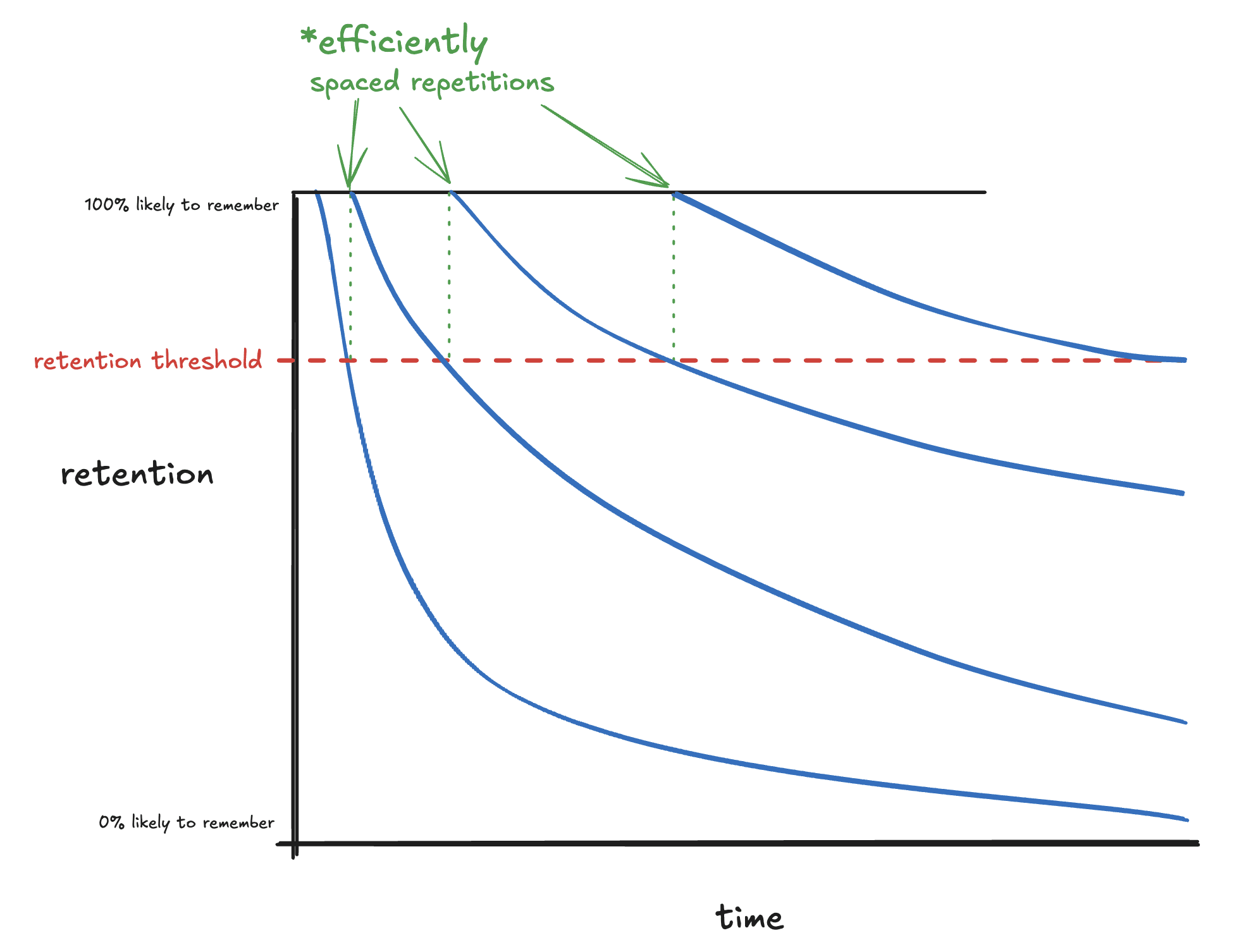

As it turns out, efficiently spacing out repetitions of reviews is a pretty well-studied problem. The answer is “riiiight before you’re about to forget it:”

Generally speaking, you should do a review right before it crosses some threshold for retention. What that threshold actually is depends on some fiddly details, but the central idea remains the same: repeating a review riiight before you hit that threshold is the most efficient spacing possible.

This is called (efficiently) spaced repetition. Systems that use spaced repetition (software, methods, etc.) are called “spaced repetition systems” or “SRS.”
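The idea of reviewing “riiight before you’re about to forget” can be made concrete with a toy model. The Python sketch below assumes an exponential forgetting curve R(t) = exp(-t/S), where S is a hypothetical “stability” parameter; it schedules each review at the moment retention decays to a chosen threshold, then multiplies stability by a hypothetical growth factor. Real SRS software (e.g. Anki) uses its own, more elaborate schedulers; this is only an illustration:

```python
import math


def retention(days_since_review: float, stability: float) -> float:
    """Assumed exponential forgetting curve: R(t) = exp(-t / S)."""
    return math.exp(-days_since_review / stability)


def days_until_threshold(stability: float, threshold: float) -> float:
    """Solve exp(-t / S) = threshold for t, i.e. the last moment before
    retention drops below the threshold."""
    return -stability * math.log(threshold)


def review_schedule(n_reviews: int, stability: float = 1.0,
                    threshold: float = 0.9, growth: float = 2.5) -> list[float]:
    """Return the day (counted from first learning) of each review.

    Each review is placed exactly when retention decays to `threshold`.
    A review resets retention to 100%, and `stability` is then multiplied
    by `growth` (a hypothetical parameter, not taken from any real SRS).
    """
    day = 0.0
    schedule = []
    for _ in range(n_reviews):
        day += days_until_threshold(stability, threshold)
        schedule.append(day)
        stability *= growth
    return schedule


if __name__ == "__main__":
    for day in review_schedule(5):
        print(f"review on day {day:.2f}")
```

With these made-up parameters, each gap between reviews is 2.5 times the previous one, so reviews spread out as the memory gets sturdier. Changing `threshold` corresponds to the “fiddly details” of where exactly the retention cutoff sits.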

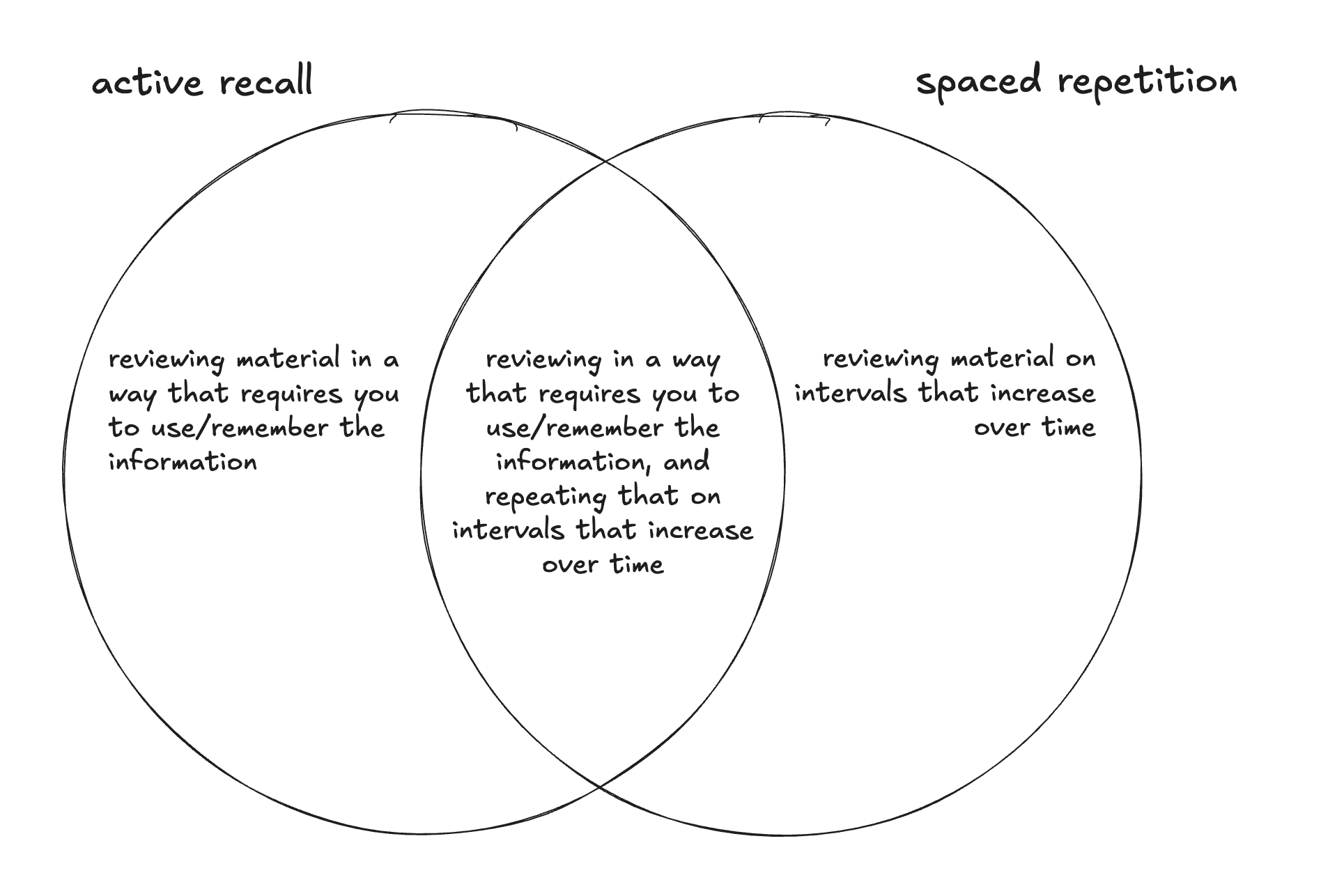

III. The difference

Active recall and spaced repetition are independent strategies. One of them (active recall) is a method for reviewing material; the other (efficiently spaced repetition) is a method for timing reviews. You can use one, the other, or both:

Examples of their independence:

- You could listen to a lecture on a topic once now, and again a year from now (not active recall, very inefficiently spaced repetition)

- You could watch YouTube videos on a topic in efficiently spaced intervals (not active recall, yes spaced repetition)

- You could quiz yourself with flashcards once, then never again (yes active recall, no spaced repetition)

- You could do flashcards on something in efficiently spaced intervals (both spaced repetition and active recall).

IV. Implications

Why does this matter?

Mostly, it doesn’t, and I’m just splitting hairs. But occasionally, it’s prohibitively difficult to use one method, but still quite possible to use the other. In these cases, the right thing to do isn’t to give up on both — it’s to use the one that works!

For example, you can do a bit of efficiently spaced repetition when learning people’s names, by saying their name aloud:

- immediately after learning it (“hi, my name’s Alice” “nice to meet you, Alice!”)

- partway through the conversation (“but i’m still not sure of the proposal. what do you think, Alice?”)

- at the end of the conversation (“thanks for chatting, Alice!”)

- that night (“who did I meet today? oh yeah, Alice!”)

…but it’s a lot more difficult to use active recall to remember people’s names. (The closest I’ve gotten is to try to first bring into my mind’s eye what their face looks like, then to try to remember their name.)

Another example in the opposite direction: learning your way around a city by car. It’s really easy to do active recall: open Google Maps on your phone and ask yourself what the next direction is before you look down each time; guess what the next street is going to be before you get there; etc. But it’s much more difficult to efficiently space your reviews out: review timing ends up mostly in the hands of your travel schedule.

For more on the topic of deliberately using memory systems to quickly learn the geography of a new place, see this post.

is there a handy label for “crux(es) on which i’m maximally uncertain”? any decision i make has infinitely many cruxes, but the ones i care about are the ones about which i’m most uncertain. it'd be nice to have a referenceable label for this concept, but i haven't seen one anywhere.

there's also an annoying issue that feels analogous to "elasticity": how much does a marginal change in my doxastic attitude toward some crux affect my conative attitude toward the decision?

if no such concepts exist for either, i'd propose: crux uncertainty, crux elasticity (respectively)
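One way to make the two proposed labels concrete, offered as a sketch under assumptions rather than as established definitions: treat crux uncertainty as the binary entropy of your credence p in the crux (maximal at p = 0.5), and crux elasticity as an economist's point elasticity of a hypothetical numeric "preference for the decision" D(p) with respect to p, i.e. (dD/dp)·(p/D). The entropy choice, the preference function, and the example cruxes are all assumptions:

```python
import math
from typing import Callable


def crux_uncertainty(p: float) -> float:
    """Binary entropy, in bits, of credence p in a crux.
    0 when you're certain either way, 1 when p = 0.5."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def crux_elasticity(preference: Callable[[float], float], p: float,
                    dp: float = 1e-4) -> float:
    """Point elasticity of preference-for-the-decision D with respect to
    credence p: (dD/dp) * (p / D), via a central finite difference.
    Assumes D(p) is nonzero and p +/- dp stays within [0, 1]."""
    slope = (preference(p + dp) - preference(p - dp)) / (2 * dp)
    return slope * p / preference(p)


# Hypothetical cruxes for one decision, with made-up credences.
cruxes = {"funding comes through": 0.55, "cofounder stays": 0.9, "market exists": 0.7}
most_uncertain = max(cruxes, key=lambda name: crux_uncertainty(cruxes[name]))
print(most_uncertain)  # funding comes through

# Hypothetical linear preference D(p) = 2p - 0.5, evaluated at p = 0.5.
print(round(crux_elasticity(lambda p: 2 * p - 0.5, 0.5), 6))  # 2.0
```

Ranking by entropy picks out the crux closest to a coin flip; elasticity then says how much moving that credence would actually move the decision.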

I wish more LW users had Patreons linked to from their profiles/posts. I would like people to have the option of financially supporting great writers and thinkers on LessWrong.

is this something you’ve considered building into LW natively?

I can give some context for that

please do!

gotcha. what would be the best way to send you feedback? i could do:

- comments here

- sent directly to you via LW DM, email, [dm through some other means] or something else if that's better

(while it's top-of-mind: the feedback that generated this question was that the chat interface pops up every single time i open a tab of LW, including every time i open a post in a new tab. this gets really annoying very quickly!)

great! how do i access it on mobile LW?

i’d love access! my guess is that i’d use it like — elicit:research papers::[this feature]:LW posts

solved: i think you mean it as this wikipedia article describes:

The word albatross is sometimes used metaphorically to mean a psychological burden (most often associated with guilt or shame) that feels like a curse.

what do you mean by "financial albatross"?

Domain: Various: Startups, Events, Project Management, etc

Link: Manifund, Manifold, and Manifest {2023, 2024: meeting notes, docs, budget}

Person: Various: generally, the Manifold, Manifund, and Manifest teams

Why: This isn't a video, but it's probably relevantly close. All Manifold-sphere things are public — all meeting notes, budgets/finances, strategy docs, etc. I think that someone could learn a lot of tacit knowledge based on how the Manifold-sphere teams work by skimming e.g. our meeting notes docs, which are fairly comprehensive/extensive.

this interview between alexey guzey and dwarkesh patel gets into it a bit!

i only spent a few minutes browsing, but i thought this was surprisingly well-made!

…when I say “integrity” I mean something like “acting in accordance with your stated beliefs.” Where honesty is the commitment to not speak direct falsehoods, integrity is the commitment to speak truths that actually ring true to yourself, not ones that are just abstractly defensible to other people. It is also a commitment to act on the truths that you do believe, and to communicate to others what your true beliefs are.

your handle of “integrity” seems to point at something quite similar to the thing at which joe carlsmith’s handle of “sincerity” (https://joecarlsmith.com/2022/12/23/on-sincerity) points. am i missing something, or are these two handles for ~the same thing?

ah, lovely! maybe add that link as an edit to the top-level shortform comment?

fourthed. oli, do you intend to post this?

if not, could i post this text as a linkpost to this shortform?

how did this go? any update?

i quite liked this post. thanks!

i'll give two answers: the Official Event Guidelines and the practical social environment.[1] i will say that i have a bit of a COI in that i'm an event organizer; it'd be good for someone who isn't organizing the event (but e.g. attended the event last year) to either second my thoughts or give their own.

- Official Event Guidelines

- Unsafe drug use of any kind is disallowed and strongly discouraged, both by the venue and by us.

- Illegal drug use is disallowed and strongly discouraged, both by the venue and by us.

- Alcohol use during the event is discouraged, but you can bring & drink your own if you drink responsibly. However, at the official afterparty (and possibly at some points during Summer Camp), we may serve drinks. Broadly, our rules with regard to alcohol are "drink responsibly; please don't drink during the day; definitely don't drink if you're going to drink irresponsibly, because that will be no fun for us and definitely no fun for you." The social environment will not be conducive to alcohol during the day, and probably not super conducive at night.

- practical social environment

- during the main event, drugs & alcohol are pretty strongly discouraged. it makes the event lower quality for everyone.

- during the official afterparty, we'll probably serve alcohol in moderate, safe amounts. we will not be serving drugs, and the social environment will probably not be very conducive to drugs.

- i could imagine that external, unofficial parties/meetups/etc have a different vibe. for these, my guess is that if you can imagine something between a regular conference meetup at a bar and a college party, you're probably somewhere on the right track. note that you are on your own if you attend external, unofficial parties.

- ^

for future reference, i do not necessarily endorse the practical social environment. more formally, my stance is the one written in the first bullet point (the Official Event Guidelines), regardless of anything written in the second bullet point.

thanks oli, and thanks for editing mine! appreciate the modding <3

thanks for writing this — also, some broad social encouragement for the practice of doing quick/informal lit reviews + posting them publicly! well done :)

that is... wild. thanks for sharing!

I think institutional market makers are basically not pricing [slow takeoff, or the expectation of one] in

why do you think they're not pricing this in?

Really love this post. Thanks for writing it!

thanks for the feedback on the website. here's the explanation we gave on the manifest announcement post:

In the week between LessOnline and Manifest, come hang out at Lighthaven with other attendees! Cowork and share meals during the day, attend casual workshops and talks in the evening, and enjoy conversations by the (again, literal) fire late into the night.

Summer Camp will be pretty lightweight: we’ll provide the space and the tools, and you & your fellow attendees will bring the discussions, workshops, tournaments, games, and whatever else you’re excited about organizing.

Here are the types of events you’ll see at Summer Camp:

- Hackathons (or “Forecastathons”)

- Organized discussions and workshops

- Jam sessions and dance parties

- Games of all kinds: social deception games, poker, MTG, jackbox, etc.

- Campy activities: sardines, s’mores, singalongs

- Multi-day intensive workshops, e.g. a CFAR-style workshop or a Quantitative Trading Bootcamp (Note: these may come at some extra cost, TBD by the organizers)

let me know if you have other questions.

I really enjoyed this — thank you for writing. I also think the updated version is a lot better than the previous version, and I appreciate the work you put in to update it. I'm really, really looking forward to the other posts in this sequence.

I'd also really enjoy a post that's on this exact topic, but one that I'd feel comfortable sending to my mom or something, cf "Broad adverse selection (for poets)."

relevant: story-based decision-making

- the name of this post was really confusing for me. i thought it would be about "how to stop defeating akrasia," not "how to defeat akrasia by stopping." consider renaming it to be a bit clearer?

- the part at the end really reminded me of this piece by dr maciver: https://notebook.drmaciver.com/posts/2022-12-20-17:21.html

+1 on Things You're Allowed To Do, it's really really great

here are some specific, random, generally small things that i do quite often:

- sit on the floor. i notice myself wanting to sit, and i notice myself lacking a chair. fortunately, the floor is always there.

- explicitly babble! i babble about thoughts that are bouncing around in my head, no matter the topic! open a new doc — docs.new works well, or whatever you use — set a 5 minute timer, and just babble. write whatever comes to mind.

- message effective/competent people to cowork with them. i'm probably not the most effective/competent person you know, but feel free to practice this with me!

- board airplanes close to last, intentionally. i do this to avoid pushing through the lines, and to give myself an extra ~15 minutes to work in the airport terminal.

- write out informal/babbled decision docs for big/important decisions. i've done this ~5 times over the last 6 months, and it both helps me during the decision (forces me to make my thoughts/worries/hopes concrete, lets me get quick thoughts/advice from friends, etc) and after the decision (i can remind myself why i originally made the decision, regardless of what ends up happening).

- actually doing a lot of the things on this list

- after or while doing something with someone else (a long conversation, a group project, a friendship, a ski trip, etc) asking them "what was the worst thing i did?"

- waking myself up by intentionally getting water up my nose, then blowing it

- pick a few things — about 3-8 — that look pretty good on a menu. ask siri to pick a random number, 1 through [number of things that looked good]. that's now my default order; if i want, i can order something else, but i should have a pretty strong reason.

- when sending someone a list of questions, send them along with a list of the answers that are likely correct, so that they can just say “yes” or "actually no, it's _____." it significantly cuts down on the amount of time they have to spend answering my questions.

here are some more general mental TAPs that i've accidentally burned into my brain:

- "gahhh, i wish [x] thing existed!" → "could i make [x]?"

- "boy, [x] is annoying/bad/disruptive/generally a problem." → "could i solve [x]?"

- "now that i think about it, [person] is actually a really rare & awesome person." → "could i text/call them right now?"

- "i don't like [x] about myself/my environment/the people i hang out with/my workspace/etc." → "could i change [x]?"

- "i hate that i always have to do [x]." → "what would happen if i didn't do [x]? would i take damage? if so, would i take more damage than the damage i'm currently taking while forcing myself to do [x]?"

- "ooh, i have to remember to [x]." → "should i set an alarm/reminder/calendar event about [x]?" (almost always: yes, you should)

- "that's a great idea." → "should i quickly write a sentence about this down in my notes app? even just a sazen for my future self?"

- "i should do [x]." → "do i have a concrete plan for doing [x] that's worked in relevantly similar situations for me in the past? if not, how am i expecting to get [x] done?"

- [in a meeting] "great, let's make sure to get [x] done." → "is there one clear person who's owning this? who's responsible if it doesn't get done, and in charge of making sure it does get done? do i trust this person to actually get [x] done?"

- "hmm, i'm realizing that i've built up a really big ugh field around [x]." → "[insert generic strategies for dealing with ugh fields; i think mine are mediocre, so would love to hear others']"

- notes:

- most of the above was stolen from this thread

- the actions above are questions that i ask myself, not concrete actions i force myself to do. they're sorta like saying "hey, here's a concrete action you could take — do you want to?" most of the time, my internal response is "nah, i'm good." but sometimes, my internal response is "actually, yeah! let's do this!" importantly, the questions are meant to reduce friction toward acting on your available options, not imply an obligation to those options.

If you and your audience have smartphones, we suggest making use of a copy of this spreadsheet and google form.

are "spreadsheet" and "google form" meant to be linked to something?

I think a lot of what I write for rationalist meetups would apply straightforwardly to EA meetups.

agreed. this sort of thing feels completely missing from the EA Groups Resources Centre, and i'd guess it would be a big/important contribution.

This may be a silly question, but- how does cross posting usually work?

iirc, when you're publishing a post on {LessWrong, the EA forum}, one of the many settings at the bottom is "Cross-Post to {the EA forum, LessWrong}," or something along those lines. there's some karma requirement for both the EA forum and for LW — if you don't meet the karma requirement for one, you might need to manually cross-post until you have enough karma.

are there norms on EA forum around say, pseudonyms and real names, or being a certain amount aligned with EA?

re: pseudonyms: though there's a general, mild preference for post authors to use their real names, using a pseudonym is perfectly fine — and many do (example).

re: alignment: you don't need to be fully on-board with EA to post on the forum (and many aren't), but the content of your post should at least relate to EA.

for other questions, here's a guide to the norms on the EA forum. (that guide has sections on "rules for pseudonymous and multiple accounts" and "privacy and pseudonymity.")

*i'll edit & delete this part later, but: i'll get back to you over email in a bit! caught up with other stuff, and getting to things one at a time :)

This is great! Have you cross-posted this to the EA Forum? If not, may I?

Thanks for the response!

Re: concerns about bad incentives, I agree that you can frame the losses associated with manipulating conditional prediction markets as paying a "cost": even though you'll probably lose a boatload of money, manipulating the markets might be worth that loss. In the words of Scott Alexander, though:

If you’re wondering why people aren’t going to get an advantage in the economy by committing horrible crimes, the answer is probably the same combination of laws, ethics, and reputational concerns that works everywhere else.

I'm concerned about this, but it feels like a solvable problem.

Re: personal stuff & the negative externalities of publicly revealing probabilities, thanks for pointing these out. I hadn't thought of them. Added them to the post!

Thanks for the response!

This applies to roughly the entire post, but I see an awful lot of magical thinking in this space.

Could you point to some specific areas of magical thinking in the post? and/or in the space?[1] (I'm not claiming that there aren't any, I definitely think there are. I'm interested to know where I & the space are being overconfident/thinking magically, so that I/it can do less magical thinking.)

What is the actual mechanism by which you think prediction markets will solve these problems?

The mechanism that Manifold Love uses. In section 2, I put it as "run a bunch of conditional prediction markets on a bunch of key benchmarks for potential pairs between two sides that are normally caught in adverse selection." I wrote this post to explain the actual mechanism by which I think (conditional) prediction markets might solve these problems, but I also want to note that I definitely do not think that (conditional) prediction markets will definitely for sure 100% totally completely solve these problems. I just think they have potential, and I'm excited to see people giving it a shot.

In order to get a good prediction from a market you need traders to put prices in the right places. This means you need to subsidise[2] the markets.

I agree! In order to get a good prediction from a market, you (probably, see the footnote) need participation to be positive-sum.[3] I think there are a few ways to get this:

- Direct subsidies

- Since prediction markets create valuable price information, it might make sense to have those who benefit from the price information directly pay. I could imagine this pretty clearly, actually: Manifold Love could charge users for (e.g.) more than 5 matches, and some part of the fee that the user pays goes toward market subsidies. As you pointed out, paying a 3rd party is currently the case for most of my examples — matchmakers, headhunters, real estate agents, etc — so it seems like this sort of thing aligns with the norms & users' expectations.

- Hedging

- Some participants bet to hedge other off-market risks. These participants are likely to lose money on prediction markets, and know that ahead of time. That's because they're not betting their beliefs; they're paying the equivalent of an insurance premium.

- For prediction markets generally, this seems like the most viable path to getting money flowing into the market. I'm not sure how well it'd work for this sort of setup, though — mainly because the markets are so personal.

- This requires finding markets on which participants would want to hedge, which seems like a difficult problem. I give an example below, but I'm pretty unsure what something like this would look like in a lot of the examples I listed in the original essay.

- Continuing the example of the labor market from section 2: I could imagine (e.g.) a Google employee buying an ETF-type-thing that bets NO on whether all potential Google employees will remain at Google a year from their hiring date. This protects that Google employee against the risk of some huge round of layoffs; they've bought "insurance" against that outcome. In doing so, they give the markets a way to become positive-sum for those participants who're betting their beliefs.

- New traders

- This provides an inflow of money, but is (obviously) tied to new traders joining the market. I don't like this at all, because it's totally unsustainable and leads to community dynamics like those in crypto. Also, it's a scheme that's pyramid-shaped, a "pyramid scheme" if you will.

- I'm mainly including this for completeness; I think relying on this is a terrible idea.
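To make the "direct subsidies" option concrete: one standard way to subsidize a market is an automated market maker whose worst-case loss is bounded and paid by a sponsor. The sketch below uses Hanson's logarithmic market scoring rule (LMSR), swapped in here purely as an illustration; it is not necessarily what Manifold or Manifold Love uses. The liquidity parameter `b` and the example numbers are hypothetical. Under LMSR, the sponsor's maximum loss on an n-outcome market is b·ln(n), so that is the most the subsidy (e.g. funded by user fees) would need to cover:

```python
import math


def lmsr_cost(quantities: list[float], b: float) -> float:
    """LMSR cost function C(q) = b * ln(sum_i exp(q_i / b)).
    quantities[i] is the number of outstanding shares on outcome i."""
    return b * math.log(sum(math.exp(q / b) for q in quantities))


def lmsr_prices(quantities: list[float], b: float) -> list[float]:
    """Instantaneous prices (implied probabilities); they always sum to 1."""
    weights = [math.exp(q / b) for q in quantities]
    total = sum(weights)
    return [w / total for w in weights]


def cost_to_buy(quantities: list[float], outcome: int, shares: float, b: float) -> float:
    """What a trader pays the market maker to buy `shares` of `outcome`."""
    after = list(quantities)
    after[outcome] += shares
    return lmsr_cost(after, b) - lmsr_cost(quantities, b)


def max_subsidy(n_outcomes: int, b: float) -> float:
    """Worst-case market-maker loss, i.e. the most the sponsor can end up paying."""
    return b * math.log(n_outcomes)


if __name__ == "__main__":
    b = 100.0       # hypothetical liquidity parameter
    q = [0.0, 0.0]  # binary market: [YES, NO], no trades yet
    print(lmsr_prices(q, b))                     # [0.5, 0.5]
    print(round(cost_to_buy(q, 0, 50.0, b), 2))  # 28.09
    print(round(max_subsidy(2, b), 2))           # 69.31
```

In this toy setup, traders who correctly push a binary market with b = 100 toward the outcome that happens can collectively extract at most about 69 from the market maker. That bounded loss is the money flowing into the market that makes participation positive-sum for informed traders; a larger `b` means deeper liquidity and a larger subsidy.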

Whether or not a subsidised prediction market is going to be cheaper for the equivalent level of forecast than paying another 3rd party (as is currently the case in most of your examples) is very unclear to me

Agreed! It's unclear to me too. This sort of question is answerable by trying the thing and seeing if it works; that's why I'm excited for people & companies to try it out.

- ^

I'm assuming you mean the "prediction market/forecasting space," so please let me know if that's not the space to which you're referring.

- ^

I'll interpret "subsidize" more broadly as "money flowing into the market to make it positive-sum after fees, inflation, etc."

- ^

I'm comfortable working under this assumption for now, but I do want to be clear that I'm not fully convinced this is the case. The stock market is clearly negative-sum for the majority of traders, and yet... traders still join. It seems at least plausible that, as long as the market is positive-sum for some key market participants, the markets can still provide valuable price information.