Generic advice caveats

post by Saul Munn (saul-munn) · 2024-10-30T21:03:07.185Z · LW · GW · 1 comment

This is a link post for https://www.brasstacks.blog/caveats/

You were (probably) linked here from some advice. Unfortunately, that advice has some caveats. See below:

- There exist some people who should not do the advice.

- Moreover, people are different.

- More moreover, situations are different. What worked there/then might not work here/now.

- Some of the advice is missing context, contradictory, inaccurate, misleading, won’t replicate, or is downright false or useless.

- Consider reversing the advice.

- The author’s incentives might be misaligned with your goals. The author might say something like “these are affiliate links” or “I’m investing in this company,” but sometimes they just get more clicks/upvotes/karma/likes/whatever from overconfidence or exaggeration. Or they might just get a kick out of giving advice.

- You might not be the target audience for the advice.

- The author of the advice might be explaining the advice poorly, even if it’s a good idea. (Corollary: the author might be explaining the advice really convincingly, but it still might not be good advice.)

- Reading the advice selects for advice that you’re liable to read; hearing the advice selects for advice that you’re liable to hear; etc. You’ll read a lot more blog posts telling you to start a blog than ones telling you not to, because the people who have good takes on why you shouldn’t write a blog aren’t writing blog posts about it.

- The advice might not help at all. It might even make your problem worse. Even if the advice helps, it might not totally solve your problem, or it might create new problems you didn’t expect.

- The advice is for entertainment value only, and not professional advice. The author is not a [lawyer, doctor, whatever], and if they are, they’re not acting in a professional capacity.

- Following the advice might relevantly change your frame/outlook/goals/etc in life. This could, for instance, cause your success criteria to change such that it’s impossible for the advice to ever “succeed” by your current self’s version of “success” — only by past versions.

- The advice might assume certain capabilities, knowledge, connections, or other resources that you don’t have.

- The advice might have second, third, or nth order effects. Even the sign of these can be extremely difficult to predict, let alone their general shape, let alone specifics.

- Stolen from dynomight's essay on advice, and bastardized to fit the context of this list:

- The advice might be incomplete without relevant lived experience. Some things make no sense when you first read them, but make a lot of sense after you've experienced something like them.

- You might not understand the advice, even if you think you do. Even slight misunderstandings can cascade into completely different things.

- You might feel like it won’t work, even if you intellectually think it will, which could affect your ability to actually do it, so that when you “try” it, you’re not actually trying it.

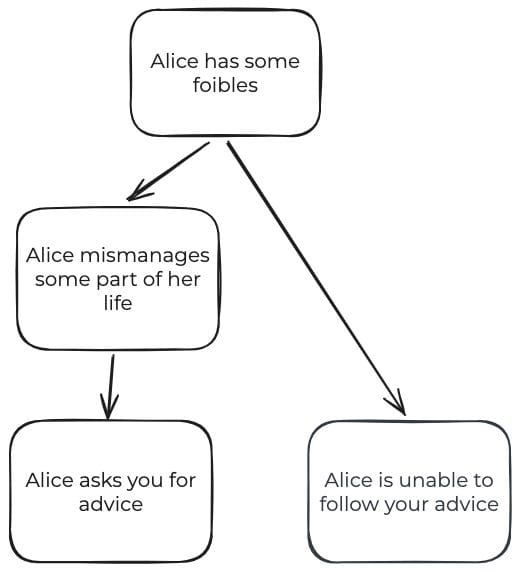

- You might not be able to act on the advice for the same reason you need the advice in the first place. (See image below.)

- Stolen from page 5 of the CFAR Handbook, lightly edited to make sense in this context:

- Remain firmly grounded in your own experiences, in your own judgment, in what you care about, in your existing ways of doing things. As you come across new concepts, hold them up against your own experiences. If something seems like a bad idea, don’t do it. If you do try something out, pay attention to how useful it seems (according to however you already judge whether something seems useful) and whether anything about it seems “off” (according to however you already judge that). If you wind up getting something useful out of the advice, it is likely to come from you tinkering around with your existing ways of doing things (while taking some inspiration from the advice).

- What happens when someone reads the advice? The guy who gave you the advice doesn’t know. Information from folks who read the advice doesn’t make it back to the advice-giver reliably. To take a guess, most of the time, not all that much happens. Reading about how to swing a tennis racket probably doesn’t have much effect on one’s tennis game.

- You might expect that tennis analogy to lead into exhortations to actually try out the techniques and practice them, but (to reiterate) the advice-giver doesn’t really know what will happen if you actually try out the techniques and practice them guided only by the advice. If someone reads the words that’ve been written about the numbered steps to the advice, and forms an interpretation about what those words mean, and tries to do the thing with their mind that matches their interpretation of those words, and practices again and again… the advice-giver might be surprised to see what they actually wind up doing. Maybe it’ll be something useful, maybe not.

- [From niplav’s Life Advice.]

- There is value-laden and value-agnostic advice. Most advice is value-laden. Before you carry out some advice, check whether it actually corresponds to your values.

- This also applies to all advice in this post (yes, even self-referentially).

Oh, and one last caveat: all of the above apply to all of the above.

1 comment

comment by Parker Conley (parker-conley) · 2024-11-07T20:20:41.683Z · LW(p) · GW(p)

Another caveat:

- I am believable and have expertise in very few major life skills, and possibly don't have expertise in the thing you're asking advice for.

Related note: I think developing the skill of identifying believability and expertise is very powerful (though I have only been applying said skill for a couple of years explicitly; caveat emptor, lol.)

Here's Cedric Chin outlining believability defined by Ray Dalio:

Technique summary:

Believable people are people who have 1) a record of at least three relevant successes and 2) have great explanations of their approach when probed. You may evaluate a person's believability on the subject matter at hand by applying this heuristic. When interacting with them:

- If you’re talking to a more believable person, suppress your instinct to debate and instead ask questions to understand their approach. This is far more effective in getting to the truth than wasting time debating.

- You’re only allowed to debate someone who has roughly equal believability compared to you.

- If you’re dealing with someone with lower believability, spend the minimum amount of time to see if they have objections that you’d not considered before. Otherwise, don’t spend that much time on them.

Here's Gary Klein, founder of Naturalistic Decision Making, outlining seven dimensions of expertise:

We want pragmatic guidelines for deciding which if any purported experts to listen to when making a difficult and important decision. How can we know who is really credible?

Bottom line: We cannot know for sure. There are no iron-clad criteria.

However, there are soft criteria, indicators we can pay attention to. I have identified seven so far, drawing on papers such as Crispen & Hoffman, 2016, and Shanteau, 2015, and on suggestions by Danny Kahneman and Robert Hoffman. Even though none of these criteria are fool-proof, all of them seem useful and relevant:

(a) Successful performance—measurable track record of making good decisions in the past. (But with a large sample, some will do very well just by luck, such as stock-pickers who have called the market direction accurately in the past 10 years.)

(b) Peer respect. (But peer ratings can be contaminated by a person’s confident bearing or fluent articulation of reasons for choices.)

(c) Career—number of years performing the task. (But some 10-year veterans have one year of experience repeated 10 times and, even worse, some vocations do not provide any opportunity for meaningful feedback.)

(d) Quality of tacit knowledge such as mental models. (But some experts may be less articulate because tacit knowledge is by definition hard to articulate.)

(e) Reliability. (Reliability is necessary but not sufficient. A watch that is consistently one hour slow will be highly reliable but completely inaccurate.)

(f) Credentials—licensing or certification of achieving professional standards. (But credentials just signify a minimal level of competence, not the achievement of expertise.)

(g) Reflection. When I ask "What was the last mistake you made?" most credible experts immediately describe a recent blunder that has been eating at them. In contrast, journeymen posing as experts typically say they can't think of any; they seem sincere but, of course, they may be faking. And some actual experts, upon being asked about recent mistakes, may for all kinds of reasons choose not to share any of these, even ones they have been ruminating about. So this criterion of reflection and candor is not any more foolproof than the others.