ryan_greenblatt's Shortform

post by ryan_greenblatt · 2023-10-30T16:51:46.769Z · LW · GW · 152 commentsContents

153 comments

152 comments

Comments sorted by top scores.

comment by ryan_greenblatt · 2024-06-28T18:52:13.076Z · LW(p) · GW(p)

I'm currently working as a contractor at Anthropic in order to get employee-level model access as part of a project I'm working on. The project is a model organism of scheming [LW · GW], where I demonstrate scheming arising somewhat naturally with Claude 3 Opus. So far, I’ve done almost all of this project at Redwood Research, but my access to Anthropic models will allow me to redo some of my experiments in better and simpler ways and will allow for some exciting additional experiments. I'm very grateful to Anthropic and the Alignment Stress-Testing team for providing this access and supporting this work. I expect that this access and the collaboration with various members of the alignment stress testing team (primarily Carson Denison and Evan Hubinger so far) will be quite helpful in finishing this project.

I think that this sort of arrangement, in which an outside researcher is able to get employee-level access at some AI lab while not being an employee (while still being subject to confidentiality obligations), is potentially a very good model for safety research, for a few reasons, including (but not limited to):

- For some safety research, it’s helpful to have model access in ways that labs don’t provide externally. Giving employee level access to researchers working at external organizations can allow these researchers to avoid potential conflicts of interest and undue influence from the lab. This might be particularly important for researchers working on RSPs, safety cases, and similar, because these researchers might naturally evolve into third-party evaluators.

- Related to undue influence concerns, an unfortunate downside of doing safety research at a lab is that you give the lab the opportunity to control the narrative around the research and use it for their own purposes. This concern seems substantially addressed by getting model access through a lab as an external researcher.

- I think this could make it easier to avoid duplicating work between various labs. I’m aware of some duplication that could potentially be avoided by ensuring more work happened at external organizations.

For these and other reasons, I think that external researchers with employee-level access is a promising approach for ensuring that safety research can proceed quickly and effectively while reducing conflicts of interest and unfortunate concentration of power. I’m excited for future experimentation with this structure and appreciate that Anthropic was willing to try this. I think it would be good if other labs beyond Anthropic experimented with this structure.

(Note that this message was run by the comms team at Anthropic.)

Replies from: Zach Stein-Perlman, kave, neel-nanda-1, Buck, akash-wasil, Josephm, RedMan↑ comment by Zach Stein-Perlman · 2024-06-29T03:41:03.576Z · LW(p) · GW(p)

Yay Anthropic. This is the first example I'm aware of of a lab sharing model access with external safety researchers to boost their research (like, not just for evals). I wish the labs did this more.

[Edit: OpenAI shared GPT-4 access with safety researchers including Rachel Freedman [LW(p) · GW(p)] before release. OpenAI shared GPT-4 fine-tuning access with academic researchers including Jacob Steinhardt and Daniel Kang in 2023. Yay OpenAI. GPT-4 fine-tuning access is still not public; some widely-respected safety researchers I know recently were wishing for it, and were wishing they could disable content filters.]

Replies from: rachelAF↑ comment by Rachel Freedman (rachelAF) · 2024-06-29T14:39:31.566Z · LW(p) · GW(p)

OpenAI did this too, with GPT-4 pre-release. It was a small program, though — I think just 5-10 researchers.

Replies from: beth-barnes, ryan_greenblatt, Zach Stein-Perlman↑ comment by Beth Barnes (beth-barnes) · 2024-07-02T22:32:50.992Z · LW(p) · GW(p)

I'd be surprised if this was employee-level access. I'm aware of a red-teaming program that gave early API access to specific versions of models, but not anything like employee-level.

↑ comment by ryan_greenblatt · 2024-06-29T18:12:17.811Z · LW(p) · GW(p)

It also wasn't employee level access probably?

(But still a good step!)

↑ comment by Zach Stein-Perlman · 2024-06-29T18:27:43.737Z · LW(p) · GW(p)

Source?

Replies from: rachelAF↑ comment by Rachel Freedman (rachelAF) · 2024-06-30T15:20:26.569Z · LW(p) · GW(p)

It was a secretive program — it wasn’t advertised anywhere, and we had to sign an NDA about its existence (which we have since been released from). I got the impression that this was because OpenAI really wanted to keep the existence of GPT4 under wraps. Anyway, that means I don’t have any proof beyond my word.

Replies from: Zach Stein-Perlman↑ comment by Zach Stein-Perlman · 2024-06-30T16:55:51.435Z · LW(p) · GW(p)

Thanks!

To be clear, my question was like where can I learn more + what should I cite, not I don't believe you. I'll cite your comment.

Yay OpenAI.

↑ comment by kave · 2024-06-28T19:21:46.760Z · LW(p) · GW(p)

Would you be able to share the details of your confidentiality agreement?

Replies from: samuel-marks, ryan_greenblatt↑ comment by Sam Marks (samuel-marks) · 2024-06-29T07:57:47.741Z · LW(p) · GW(p)

(I'm a full-time employee at Anthropic.) It seems worth stating for the record that I'm not aware of any contract I've signed whose contents I'm not allowed to share. I also don't believe I've signed any non-disparagement agreements. Before joining Anthropic, I confirmed that I wouldn't be legally restricted from saying things like "I believe that Anthropic behaved recklessly by releasing [model]".

↑ comment by ryan_greenblatt · 2024-06-29T01:35:01.885Z · LW(p) · GW(p)

I think I could share the literal language in the contractor agreement I signed related to confidentiality, though I don't expect this is especially interesting as it is just a standard NDA from my understanding.

I do not have any non-disparagement, non-solicitation, or non-interference obligations.

I'm not currently going to share information about any other policies Anthropic might have related to confidentiality, though I am asking about what Anthropic's policy is on sharing information related to this.

Replies from: kave↑ comment by kave · 2024-06-29T20:47:52.786Z · LW(p) · GW(p)

I would appreciate the literal language and any other info you end up being able to share.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-07-04T22:44:01.688Z · LW(p) · GW(p)

Here is the full section on confidentiality from the contract:

- Confidential Information.

(a) Protection of Information. Consultant understands that during the Relationship, the Company intends to provide Consultant with certain information, including Confidential Information (as defined below), without which Consultant would not be able to perform Consultant’s duties to the Company. At all times during the term of the Relationship and thereafter, Consultant shall hold in strictest confidence, and not use, except for the benefit of the Company to the extent necessary to perform the Services, and not disclose to any person, firm, corporation or other entity, without written authorization from the Company in each instance, any Confidential Information that Consultant obtains from the Company or otherwise obtains, accesses or creates in connection with, or as a result of, the Services during the term of the Relationship, whether or not during working hours, until such Confidential Information becomes publicly and widely known and made generally available through no wrongful act of Consultant or of others who were under confidentiality obligations as to the item or items involved. Consultant shall not make copies of such Confidential Information except as authorized by the Company or in the ordinary course of the provision of Services.

(b) Confidential Information. Consultant understands that “Confidential Information” means any and all information and physical manifestations thereof not generally known or available outside the Company and information and physical manifestations thereof entrusted to the Company in confidence by third parties, whether or not such information is patentable, copyrightable or otherwise legally protectable. Confidential Information includes, without limitation: (i) Company Inventions (as defined below); and (ii) technical data, trade secrets, know-how, research, product or service ideas or plans, software codes and designs, algorithms, developments, inventions, patent applications, laboratory notebooks, processes, formulas, techniques, biological materials, mask works, engineering designs and drawings, hardware configuration information, agreements with third parties, lists of, or information relating to, employees and consultants of the Company (including, but not limited to, the names, contact information, jobs, compensation, and expertise of such employees and consultants), lists of, or information relating to, suppliers and customers (including, but not limited to, customers of the Company on whom Consultant called or with whom Consultant became acquainted during the Relationship), price lists, pricing methodologies, cost data, market share data, marketing plans, licenses, contract information, business plans, financial forecasts, historical financial data, budgets or other business information disclosed to Consultant by the Company either directly or indirectly, whether in writing, electronically, orally, or by observation.

(c) Third Party Information. Consultant’s agreements in this Section 5 are intended to be for the benefit of the Company and any third party that has entrusted information or physical material to the Company in confidence. During the term of the Relationship and thereafter, Consultant will not improperly use or disclose to the Company any confidential, proprietary or secret information of Consultant’s former clients or any other person, and Consultant will not bring any such information onto the Company’s property or place of business.

(d) Other Rights. This Agreement is intended to supplement, and not to supersede, any rights the Company may have in law or equity with respect to the protection of trade secrets or confidential or proprietary information.

(e) U.S. Defend Trade Secrets Act. Notwithstanding the foregoing, the U.S. Defend Trade Secrets Act of 2016 (“DTSA”) provides that an individual shall not be held criminally or civilly liable under any federal or state trade secret law for the disclosure of a trade secret that is made (i) in confidence to a federal, state, or local government official, either directly or indirectly, or to an attorney; and (ii) solely for the purpose of reporting or investigating a suspected violation of law; or (iii) in a complaint or other document filed in a lawsuit or other proceeding, if such filing is made under seal. In addition, DTSA provides that an individual who files a lawsuit for retaliation by an employer for reporting a suspected violation of law may disclose the trade secret to the attorney of the individual and use the trade secret information in the court proceeding, if the individual (A) files any document containing the trade secret under seal; and (B) does not disclose the trade secret, except pursuant to court order.

(Anthropic comms was fine with me sharing this.)

↑ comment by Neel Nanda (neel-nanda-1) · 2024-06-28T21:06:13.932Z · LW(p) · GW(p)

This seems fantastic! Kudos to Anthropic

↑ comment by Buck · 2024-12-18T17:42:17.850Z · LW(p) · GW(p)

This project has now been released [LW · GW]; I think it went extremely well.

↑ comment by Orpheus16 (akash-wasil) · 2024-06-28T21:02:03.310Z · LW(p) · GW(p)

Do you feel like there are any benefits or drawbacks specifically tied to the fact that you’re doing this work as a contractor? (compared to a world where you were not a contractor but Anthropic just gave you model access to run these particular experiments and let Evan/Carson review your docs)

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-28T23:54:28.049Z · LW(p) · GW(p)

Being a contractor was the most convenient way to make the arrangement.

I would ideally prefer to not be paid by Anthropic[1], but this doesn't seem that important (as long as the pay isn't too overly large). I asked to be paid as little as possible and I did end up being paid less than would otherwise be the case (and as a contractor I don't receive equity). I wasn't able to ensure that I only get paid a token wage (e.g. $1 in total or minimum wage or whatever).

I think the ideal thing would be a more specific legal contract between me and Anthropic (or Redwood and Anthropic), but (again) this doesn't seem important.

At least for this current primary purpose of this contracting. I do think that it could make sense to be paid for some types of consulting work. I'm not sure what all the concerns are here. ↩︎

↑ comment by Joseph Miller (Josephm) · 2024-06-29T00:20:11.273Z · LW(p) · GW(p)

It seems a substantial drawback that it will be more costly for you to criticize Anthropic in the future.

Many of the people / orgs involved in evals research are also important figures in policy debates. With this incentive Anthropic may gain more ability to control the narrative around AI risks.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-29T00:22:47.956Z · LW(p) · GW(p)

It seems a substantial drawback that it will be more costly for you to criticize Anthropic in the future.

As in, if at some point I am currently a contractor with model access (or otherwise have model access via some relationship like this) it will at that point be more costly to criticize Anthropic?

Replies from: Josephm↑ comment by Joseph Miller (Josephm) · 2024-06-29T00:36:41.378Z · LW(p) · GW(p)

I'm not sure what the confusion is exactly.

If any of

- you have a fixed length contract and you hope to have another contract again in the future

- you have an indefinite contract and you don't want them to terminate your relationship

- you are some other evals researcher and you hope to gain model access at some point

you may refrain from criticizing Anthropic from now on.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-29T01:05:37.568Z · LW(p) · GW(p)

Ok, so the concern is:

AI labs may provide model access (or other goods), so people who might want to obtain model access might be incentivized to criticize AI labs less.

Is that accurate?

Notably, as described this is not specifically a downside of anything I'm arguing for in my comment or a downside of actually being a contractor. (Unless you think me being a contractor will make me more likely to want model acess for whatever reason.)

I agree that this is a concern in general with researchers who could benefit from various things that AI labs might provide (such as model access). So, this is a downside of research agendas with a dependence on (e.g.) model access.

I think various approaches to mitigate this concern could be worthwhile. (Though I don't think this is worth getting into in this comment.)

Replies from: Josephm↑ comment by Joseph Miller (Josephm) · 2024-06-29T01:19:20.038Z · LW(p) · GW(p)

Yes that's accurate.

Notably, as described this is not specifically a downside of anything I'm arguing for in my comment or a downside of actually being a contractor.

In your comment you say

- For some safety research, it’s helpful to have model access in ways that labs don’t provide externally. Giving employee level access to researchers working at external organizations can allow these researchers to avoid potential conflicts of interest and undue influence from the lab. This might be particularly important for researchers working on RSPs, safety cases, and similar, because these researchers might naturally evolve into third-party evaluators.

- Related to undue influence concerns, an unfortunate downside of doing safety research at a lab is that you give the lab the opportunity to control the narrative around the research and use it for their own purposes. This concern seems substantially addressed by getting model access through a lab as an external researcher.

I'm essentially disagreeing with this point. I expect that most of the conflict of interest concerns remain when a big lab is giving access to a smaller org / individual.

(Unless you think me being a contractor will make me more likely to want model access for whatever reason.)

From my perspective the main takeaway from your comment was "Anthropic gives internal model access to external safety researchers." I agree that once you have already updated on this information, the additional information "I am currently receiving access to Anthropic's internal models" does not change much. (Although I do expect that establishing the precedent / strengthening the relationships / enjoying the luxury of internal model access, will in fact make you more likely to want model access again in the future).

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-29T01:22:11.016Z · LW(p) · GW(p)

I expect that most of the conflict of interest concerns remain when a big lab is giving access to a smaller org / individual.

As in, there aren't substantial reductions in COI from not being an employee and not having equity? I currently disagree.

Replies from: Josephm↑ comment by Joseph Miller (Josephm) · 2024-06-29T01:32:26.102Z · LW(p) · GW(p)

Yeah that's the crux I think. Or maybe we agree but are just using "substantial"/"most" differently.

It mostly comes down to intuitions so I think there probably isn't a way to resolve the disagreement.

↑ comment by RedMan · 2024-07-01T11:33:28.907Z · LW(p) · GW(p)

So you asked anthropic for uncensored model access so you could try to build scheming AIs, and they gave it to you?

To use a biology analogy, isn't this basically gain of function research?

Replies from: mesaoptimizer↑ comment by mesaoptimizer · 2024-07-05T07:11:22.649Z · LW(p) · GW(p)

Please read the model organisms for misalignment proposal [LW · GW].

comment by ryan_greenblatt · 2025-01-06T17:54:13.453Z · LW(p) · GW(p)

I thought it would be helpful to post about my timelines and what the timelines of people in my professional circles (Redwood, METR, etc) tend to be.

Concretely, consider the outcome of: AI 10x’ing labor for AI R&D [LW · GW][1], measured by internal comments by credible people at labs that AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).

Here are my predictions for this outcome:

- 25th percentile: 2 year (Jan 2027)

- 50th percentile: 5 year (Jan 2030)

The views of other people (Buck, Beth Barnes, Nate Thomas, etc) are similar.

I expect that outcomes like “AIs are capable enough to automate virtually all remote workers” and “the AIs are capable enough that immediate AI takeover is very plausible (in the absence of countermeasures)” come shortly after (median 1.5 years and 2 years after respectively under my views).

Only including speedups due to R&D, not including mechanisms like synthetic data generation. ↩︎

↑ comment by Ajeya Cotra (ajeya-cotra) · 2025-01-07T19:04:23.053Z · LW(p) · GW(p)

My timelines are now roughly similar on the object level (maybe a year slower for 25th and 1-2 years slower for 50th), and procedurally I also now defer a lot to Redwood and METR engineers. More discussion here: https://www.lesswrong.com/posts/K2D45BNxnZjdpSX2j/ai-timelines?commentId=hnrfbFCP7Hu6N6Lsp [LW(p) · GW(p)]

↑ comment by Orpheus16 (akash-wasil) · 2025-01-07T23:06:44.937Z · LW(p) · GW(p)

I expect that outcomes like “AIs are capable enough to automate virtually all remote workers” and “the AIs are capable enough that immediate AI takeover is very plausible (in the absence of countermeasures)” come shortly after (median 1.5 years and 2 years after respectively under my views).

@ryan_greenblatt [LW · GW] can you say more about what you expect to happen from the period in-between "AI 10Xes AI R&D" and "AI takeover is very plausible?"

I'm particularly interested in getting a sense of what sorts of things will be visible to the USG and the public during this period. Would be curious for your takes on how much of this stays relatively private/internal (e.g., only a handful of well-connected SF people know how good the systems are) vs. obvious/public/visible (e.g., the majority of the media-consuming American public is aware of the fact that AI research has been mostly automated) or somewhere in-between (e.g., most DC tech policy staffers know this but most non-tech people are not aware.)

Replies from: ryan_greenblatt, davekasten↑ comment by ryan_greenblatt · 2025-01-07T23:47:18.998Z · LW(p) · GW(p)

I don't feel very well informed and I haven't thought about it that much, but in short timelines (e.g. my 25th percentile): I expect that we know what's going on roughly within 6 months of it happening, but this isn't salient to the broader world. So, maybe the DC tech policy staffers know that the AI people think the situation is crazy, but maybe this isn't very salient to them. A 6 month delay could be pretty fatal even for us as things might progress very rapidly.

↑ comment by davekasten · 2025-01-07T23:56:50.243Z · LW(p) · GW(p)

Note that the production function of the 10x really matters. If it's "yeah, we get to net-10x if we have all our staff working alongside it," it's much more detectable than, "well, if we only let like 5 carefully-vetted staff in a SCIF know about it, we only get to 8.5x speedup".

(It's hard to prove that the results are from the speedup instead of just, like, "One day, Dario woke up from a dream with The Next Architecture in his head")

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-01-07T00:31:03.130Z · LW(p) · GW(p)

AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).

I don't grok the "% of quality adjusted work force" metric. I grok the "as good as having your human employees run 10x faster" metric but it doesn't seem equivalent to me, so I recommend dropping the former and just using the latter.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-07T00:38:45.121Z · LW(p) · GW(p)

Fair, I really just mean "as good as having your human employees run 10x faster". I said "% of quality adjusted work force" because this was the original way this was stated when a quick poll was done, but the ultimate operationalization was in terms of 10x faster. (And this is what I was thinking.)

Replies from: davekasten↑ comment by davekasten · 2025-01-07T02:50:50.136Z · LW(p) · GW(p)

Basic clarifying question: does this imply under-the-hood some sort of diminishing returns curve, such that the lab pays for that labor until it net reaches as 10x faster improvement, but can't squeeze out much more?

And do you expect that's a roughly consistent multiplicative factor, independent of lab size? (I mean, I'm not sure lab size actually matters that much, to be fair, it seems that Anthropic keeps pace with OpenAI despite being smaller-ish)

↑ comment by ryan_greenblatt · 2025-01-07T04:32:50.299Z · LW(p) · GW(p)

Yeah, for it to reach exactly 10x as good, the situation would presumably be that this was the optimum point given diminishing returns to spending more on AI inference compute. (It might be the returns curve looks very punishing. For instance, many people get a relatively large amount of value from extremely cheap queries to 3.5 Sonnet on claude.ai and the inference cost of this is very small, but greatly increasing the cost (e.g. o1-pro) often isn't any better because 3.5 Sonnet already gave an almost perfect answer.)

I don't have a strong view about AI acceleration being a roughly constant multiplicative factor independent of the number of employees. Uplift just feels like a reasonably simple operationalization.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2025-01-08T19:22:44.229Z · LW(p) · GW(p)

How much faster do you think we are already? I would say 2x.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-08T19:39:24.829Z · LW(p) · GW(p)

I'd guess that xAI, Anthropic, and GDM are more like 5-20% faster all around (with much greater acceleration on some subtasks). It seems plausible to me that the acceleration at OpenAI is already much greater than this (e.g. more like 1.5x or 2x), or will be after some adaptation due to OpenAI having substantially better internal agents than what they've released. (I think this due to updates from o3 and general vibes.)

Replies from: charbel-raphael-segerie↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2025-01-08T20:19:42.080Z · LW(p) · GW(p)

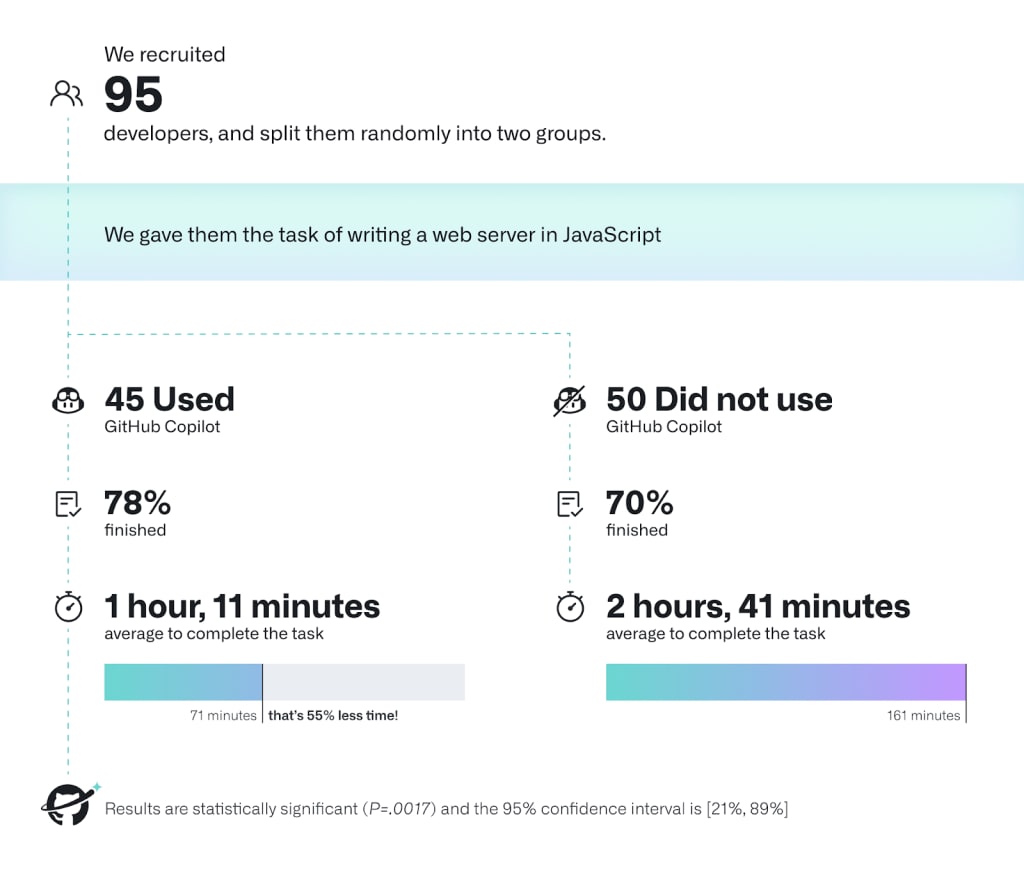

I was saying 2x because I've memorised the results from this study. Do we have better numbers today? R&D is harder, so this is an upper bound. However, since this was from one year ago, so perhaps the factors cancel each other out?

↑ comment by ryan_greenblatt · 2025-01-08T20:27:30.073Z · LW(p) · GW(p)

This case seems extremely cherry picked for cases where uplift is especially high. (Note that this is in copilot's interest.) Now, this task could probably be solved autonomously by an AI in like 10 minutes with good scaffolding.

I think you have to consider the full diverse range of tasks to get a reasonable sense or at least consider harder tasks. Like RE-bench seems much closer, but I still expect uplift on RE-bench to probably (but not certainly!) considerably overstate real world speed up.

Replies from: charbel-raphael-segerie↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2025-01-08T20:44:38.363Z · LW(p) · GW(p)

Yeah, fair enough. I think someone should try to do a more representative experiment and we could then monitor this metric.

btw, something that bothers me a little bit with this metric is the fact that a very simple AI that just asks me periodically "Hey, do you endorse what you are doing right now? Are you time boxing? Are you following your plan?" makes me (I think) significantly more strategic and productive. Similar to I hired 5 people to sit behind me and make me productive for a month. But this is maybe off topic.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-09T01:17:47.175Z · LW(p) · GW(p)

btw, something that bothers me a little bit with this metric is the fact that a very simple AI ...

Yes, but I don't see a clear reason why people (working in AI R&D) will in practice get this productivity boost (or other very low hanging things) if they don't get around to getting the boost from hiring humans.

↑ comment by Jacob Pfau (jacob-pfau) · 2025-01-06T18:58:01.475Z · LW(p) · GW(p)

AI is 90% of their (quality adjusted) useful work force

This is intended to compare to 2023/AI-unassisted humans, correct? Or is there some other way of making this comparison you have in mind?

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-06T19:43:23.181Z · LW(p) · GW(p)

Yes, "Relative to only having access to AI systems publicly available in January 2023."

More generally, I define everything more precisely in the post linked in my comment on "AI 10x’ing labor for AI R&D [LW · GW]".

↑ comment by Dagon · 2025-01-06T18:25:59.069Z · LW(p) · GW(p)

Thanks for this - I'm in a more peripheral part of the industry (consumer/industrial LLM usage, not directly at an AI lab), and my timelines are somewhat longer (5 years for 50% chance), but I may be using a different criterion for "automate virtually all remote workers". It'll be a fair bit of time (in AI frame - a year or ten) between "labs show generality sufficient to automate most remote work" and "most remote work is actually performed by AI".

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-06T18:34:56.779Z · LW(p) · GW(p)

A key dynamic is that I think massive acceleration in AI is likely after the point when AIs can accelerate labor working on AI R&D. (Due to all of: the direct effects of accelerating AI software progress, this acceleration rolling out to hardware R&D and scaling up chip production, and potentially greatly increased investment.) See also here and here [LW · GW].

So, you might very quickly (1-2 years) go from "the AIs are great, fast, and cheap software engineers speeding up AI R&D" to "wildly superhuman AI that can achieve massive technical accomplishments".

Replies from: Dagon↑ comment by Dagon · 2025-01-06T21:25:43.457Z · LW(p) · GW(p)

I think massive acceleration in AI is likely after the point when AIs can accelerate labor working on AI R&D.

Fully agreed. And the trickle-down from AI-for-AI-R&D to AI-for-tool-R&D to AI-for-managers-to-replace-workers (and -replace-middle-managers) is still likely to be a bit extended. And the path is required - just like self-driving cars: the bar for adoption isn't "better than the median human" or even "better than the best affordable human", but "enough better that the decision-makers can't find a reason to delay".

comment by ryan_greenblatt · 2024-12-31T02:54:38.452Z · LW(p) · GW(p)

Inference compute scaling might imply we first get fewer, smarter AIs.

Prior estimates imply that the compute used to train a future frontier model could also be used to run tens or hundreds of millions of human equivalents per year at the first time when AIs are capable enough to dominate top human experts at cognitive tasks[1] (examples here from Holden Karnofsky, here from Tom Davidson, and here [LW · GW] from Lukas Finnveden). I think inference time compute scaling (if it worked) might invalidate this picture and might imply that you get far smaller numbers of human equivalents when you first get performance that dominates top human experts, at least in short timelines where compute scarcity might be important. Additionally, this implies that at the point when you have abundant AI labor which is capable enough to obsolete top human experts, you might also have access to substantially superhuman (but scarce) AI labor (and this could pose additional risks).

The point I make here might be obvious to many, but I thought it was worth making as I haven't seen this update from inference time compute widely discussed in public.[2]

However, note that if inference compute allows for trading off between quantity of tasks completed and the difficulty of tasks that can be completed (or the quality of completion), then depending on the shape of the inference compute returns curve, at the point when we can run some AIs as capable as top human experts, it might be worse to run many (or any) AIs at this level of capability rather than using less inference compute per task and completing more tasks (or completing tasks serially faster).

Further, efficiency might improve quickly such that we don't have a long regime with only a small number of human equivalents. I do a BOTEC on this below.

I'll do a specific low-effort BOTEC to illustrate my core point that you might get far smaller quantities of top human expert-level performance at first. Suppose that we first get AIs that are ~10x human cost (putting aside inflation in compute prices due to AI demand) and as capable as top human experts at this price point (at tasks like automating R&D). If this is in ~3 years, then maybe you'll have $15 million/hour worth of compute. Supposing $300/hour human cost, then we get ($15 million/hour) / ($300/hour) / (10 times human cost per compute dollar) * (4 AI hours / human work hours) = 20k human equivalents. This is a much smaller number than prior estimates.

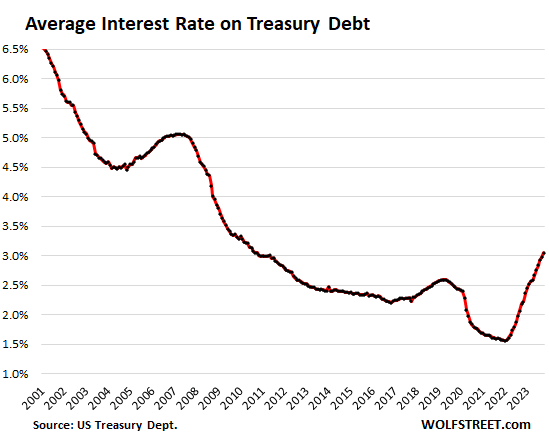

The estimate of $15 million/hour worth of compute comes from: OpenAI spent ~$5 billion on compute this year, so $5 billion / (24*365) = $570k/hour; spend increases by ~3x per year, so $570k/hour * 3³ = $15 million.

The estimate for 3x per year comes from: total compute is increasing by 4-5x per year, but some is hardware improvement and some is increased spending. Hardware improvement is perhaps ~1.5x per year and 4.5/1.5 = 3. This at least roughly matches this estimate from Epoch which estimates 2.4x additional spend (on just training) per year. Also, note that Epoch estimates 4-5x compute per year here and 1.3x hardware FLOP/dollar here, which naively implies around 3.4x, but this seems maybe too high given the prior number.

Earlier, I noted that efficiency might improve rapidly. We can look at recent efficiency changes to get a sense for how fast. GPT-4o mini is roughly 100x cheaper than GPT-4 and is perhaps roughly as capable all together (probably lower intelligence but better elicited). It was trained roughly 1.5 years later (GPT-4 was trained substantially before it was released) for ~20x efficiency improvement per year. This is selected for being a striking improvment and probably involves low hanging fruit, but AI R&D will be substantially accelerated in the future which probably more than cancels this out. Further, I expect that inference compute will be inefficent in the tail of high inference compute such that efficiency will be faster than this once the capability is reached. So we might expect that the number of AI human equivalents increases by >20x per year and potentially much faster if AI R&D is greatly accelerated (and compute doesn't bottleneck this). If progress is "just" 50x per year, then it would still take a year to get to millions of human equivalents based on my earlier estimate of 20k human equivalents. Note that once you have millions of human equivalents, you also have increased availability of generally substantially superhuman AI systems.

I'm refering to the notion of Top-human-Expert-Dominating AI that I define in this post [LW · GW], though without a speed/cost constraint as I want to talk about the cost when you first get such systems. ↩︎

Of course, we should generally expect huge uncertainty with future AI architectures such that fixating on very efficient substitution of inference time compute for training would be a mistake, along with fixating on minimal or no substitution. I think a potential error of prior discussion is insufficient focus on the possibility of relatively scalable (though potentially inefficient) substitution of inference time for training (which o3 appears to exhibit) such that we see very expensive (and potentially slow) AIs that dominate top human expert performance prior to seeing cheap and abundant AIs which do this. ↩︎

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-01T03:35:14.040Z · LW(p) · GW(p)

Ok, but for how long? If the situation holds for only 3 months, and then the accelerated R&D gives us a huge drop in costs, then the strategic outcomes seem pretty similar.

If there continues to be a useful peak capability only achievable with expensive inference, like the 10x human cost, and there are weaker human-skill-at-minimum available for 0.01x human cost, then it may be interesting to consider which tasks will benefit more from a large number of moderately good workers vs a small number of excellent workers.

Also worth considering is speed. In a lot of cases, it is possible to set things up to run slower-but-cheaper on less or cheaper hardware. Or to pay more, and have things run in as highly parallelized a manner as possible on the most expensive hardware. Usually maximizing speed comes with some cost overhead. So then you also need to consider whether it's worth having more of the work be done in serial by a smaller number of faster models...

For certain tasks, particularly competitive ones like sports or combat, speed can be a critical factor and is worth sacrificing peak intelligence for. Obviously, for long horizon strategic planning, it's the other way around.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-01T03:45:32.751Z · LW(p) · GW(p)

I don't expect this to continue for very long. 3 months (or less) seems plausible. I really should have mentioned this in the post. I've now edited it in.

then the strategic outcomes seem pretty similar

I don't think so. In particular once the costs drop you might be able to run substantially superhuman systems at the same cost that you could previously run systems that can "merely" automate away top human experts.

↑ comment by Orpheus16 (akash-wasil) · 2024-12-31T18:10:21.428Z · LW(p) · GW(p)

The point I make here is also likely obvious to many, but I wonder if the "X human equivalents" frame often implicitly assumes that GPT-N will be like having X humans. But if we expect AIs to have comparative advantages (and disadvantages), then this picture might miss some important factors.

The "human equivalents" frame seems most accurate in worlds where the capability profile of an AI looks pretty similar to the capability profile of humans. That is, getting GPT-6 to do AI R&D is basically "the same as" getting X humans to do AI R&D. It thinks in fairly similar ways and has fairly similar strengths/weaknesses.

The frame is less accurate in worlds where AI is really good at some things and really bad at other things. In this case, if you try to estimate the # of human equivalents that GPT-6 gets you, the result might be misleading or incomplete. A lot of fuzzier things will affect the picture.

The example I've seen discussed most is whether or not we expect certain kinds of R&D to be bottlenecked by "running lots of experiments" or "thinking deeply and having core conceptual insights." My impression is that one reason why some MIRI folks are pessimistic is that they expect capabilities research to be more easily automatable (AIs will be relatively good at running lots of ML experiments quickly, which helps capabilities more under their model) than alignment research (AIs will be relatively bad at thinking deeply or serially about certain topics, which is what you need for meaningful alignment progress under their model).

Perhaps more people should write about what kinds of tasks they expect GPT-X to be "relatively good at" or "relatively bad at". Or perhaps that's too hard to predict in advance. If so, it could still be good to write about how different "capability profiles" could allow certain kinds of tasks to be automated more quickly than others.

(I do think that the "human equivalents" frame is easier to model and seems like an overall fine simplification for various analyses.)

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-12-31T19:01:07.239Z · LW(p) · GW(p)

In the top level comment, I was just talking about AI systems which are (at least) as capable as top human experts. (I was trying to point at the notion of Top-human-Expert-Dominating AI that I define in this post [LW · GW], though without a speed/cost constraint, but I think I was a bit sloppy in my language. I edited the comment a bit to better communicate this.)

So, in this context, human (at least) equivalents does make sense (as in, because the question is the cost of AIs that can strictly dominate top human experts so we can talk about the amount of compute needed to automate away one expert/researcher on average), but I agree that for earlier AIs it doesn't (necessarily) make sense and plausibly these earlier AIs are very key for understanding the risk (because e.g. they will radically accelerate AI R&D without necessarily accelerating other domain).

Replies from: akash-wasil↑ comment by Orpheus16 (akash-wasil) · 2024-12-31T20:05:04.610Z · LW(p) · GW(p)

At first glance, I don’t see how the point I raised is affected by the distinction between expert-level AIs vs earlier AIs.

In both cases, you could expect an important part of the story to be “what are the comparative strengths and weaknesses of this AI system.”

For example, suppose you have an AI system that dominates human experts at every single relevant domain of cognition. It still seems like there’s a big difference between “system that is 10% better at every relevant domain of cognition” and “system that is 300% better at domain X and only 10% better at domain Y.”

To make it less abstract, one might suspect that by the time we have AI that is 10% better than humans at “conceptual/serial” stuff, the same AI system is 1000% better at “speed/parallel” stuff. And this would have pretty big implications for what kind of AI R&D ends up happening (even if we condition on only focusing on systems that dominate experts in every relevant domain.)

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-12-31T21:41:18.377Z · LW(p) · GW(p)

I agree comparative advantages can still important, but your comment implied a key part of the picture is "models can't do some important thing". (E.g. you talked about "The frame is less accurate in worlds where AI is really good at some things and really bad at other things." but models can't be really bad at almost anything if they strictly dominate humans at basically everything.)

And I agree that at the point AIs are >5% better at everything they might also be 1000% better at some stuff.

I was just trying to point out that talking about the number human equivalents (or better) can still be kinda fine as long as the model almost strictly dominates humans as the model can just actually substitute everywhere. Like the number of human equivalents will vary by domain but at least this will be a lower bound.

comment by ryan_greenblatt · 2025-01-19T19:03:01.850Z · LW(p) · GW(p)

Sometimes people think of "software-only [LW · GW] singularity" as an important category of ways AI could go. A software-only singularity can roughly be defined as when you get increasing-returns growth (hyper-exponential) just via the mechanism of AIs increasing the labor input to AI capabilities software[1] R&D (i.e., keeping fixed the compute input to AI capabilities).

While the software-only singularity dynamic is an important part of my model, I often find it useful to more directly consider the outcome that software-only singularity might cause: the feasibility of takeover-capable AI without massive compute automation. That is, will the leading AI developer(s) be able to competitively develop AIs powerful enough to plausibly take over[2] without previously needing to use AI systems to massively (>10x) increase compute production[3]?

[This is by Ryan Greenblatt and Alex Mallen]

We care about whether the developers' AI greatly increases compute production because this would require heavy integration into the global economy in a way that relatively clearly indicates to the world that AI is transformative. Greatly increasing compute production would require building additional fabs which currently involve substantial lead times, likely slowing down the transition from clearly transformative AI to takeover-capable AI.[4][5] In addition to economic integration, this would make the developer dependent on a variety of actors after the transformative nature of AI is made more clear, which would more broadly distribute power.

For example, if OpenAI is selling their AI's labor to ASML and massively accelerating chip production before anyone has made takeover-capable AI, then (1) it would be very clear to the world that AI is transformatively useful and accelerating, (2) building fabs would be a constraint in scaling up AI which would slow progress, and (3) ASML and the Netherlands could have a seat at the table in deciding how AI goes (along with any other actors critical to OpenAI's competitiveness). Given that AI is much more legibly transformatively powerful in this world, they might even want to push for measures to reduce AI/human takeover risk.

A software-only singularity is not necessary for developers to have takeover-capable AIs without having previously used them for massive compute automation (it is also not clearly sufficient, since it might be too slow or uncompetitive by default without massive compute automation as well). Instead, developers might be able to achieve this outcome by other forms of fast AI progress:

- Algorithmic / scaling is fast enough at the relevant point independent of AI automation. This would likely be due to one of:

- Downstream AI capabilities progress very rapidly with the default software and/or hardware progress rate at the relevant point;

- Existing compute production (including repurposable production) suffices (this is sometimes called hardware overhang) and the developer buys a bunch more chips (after generating sufficient revenue or demoing AI capabilities to attract investment);

- Or there is a large algorithmic advance that unlocks a new regime with fast progress due to low-hanging fruit.[6]

- AI automation results in a one-time acceleration of software progress without causing an explosive feedback loop, but this does suffice for pushing AIs above the relevant capability threshold quickly.

- Other developers just aren't very competitive (due to secrecy, regulation, or other governance regimes) such that proceeding at a relatively slower rate (via algorithmic and hardware progress) suffices.

My inside view sense is that the feasibility of takeover-capable AI without massive compute automation is about 75% likely if we get AIs that dominate top-human-experts [LW · GW] prior to 2040.[7] Further, I think that in practice, takeover-capable AI without massive compute automation is maybe about 60% likely. (This is because massively increasing compute production is difficult and slow, so if proceeding without massive compute automation is feasible, this would likely occur.) However, I'm reasonably likely to change these numbers on reflection due to updating about what level of capabilities would suffice for being capable of takeover (in the sense defined in an earlier footnote) and about the level of revenue and investment needed to 10x compute production. I'm also uncertain whether a substantially smaller scale-up than 10x (e.g., 3x) would suffice to cause the effects noted earlier.

To-date software progress has looked like "improvements in pre-training algorithms, data quality, prompting strategies, tooling, scaffolding" as described here [LW · GW]. ↩︎

This takeover could occur autonomously, via assisting the developers in a power grab, or via partnering with a US adversary. I'll count it as "takeover" if the resulting coalition has de facto control of most resources. I'll count an AI as takeover-capable if it would have a >25% chance of succeeding at a takeover (with some reasonable coalition) if no other actors had access to powerful AI systems. Further, this takeover wouldn't be preventable with plausible interventions on legible human controlled institutions, so e.g., it doesn't include the case where an AI lab is steadily building more powerful AIs for an eventual takeover much later (see discussion here [LW(p) · GW(p)]). This 25% probability is as assessed under my views but with the information available to the US government at the time this AI is created. This line is intended to point at when states should be very worried about AI systems undermining their sovereignty unless action has already been taken. Note that insufficient inference compute could prevent an AI from being takeover-capable even if it could take over with enough parallel copies. And note that whether a given level of AI capabilities suffices for being takeover-capable is dependent on uncertain facts about how vulnerable the world seems (from the subjective vantage point I defined earlier). Takeover via the mechanism of an AI escaping, independently building more powerful AI that it controls, and then this more powerful AI taking over would count as that original AI that escaped taking over. I would also count a rogue internal deployment [LW · GW] that leads to the AI successfully backdooring or controlling future AI training runs such that those future AIs take over. However, I would not count merely sabotaging safety research. ↩︎

I mean 10x additional production (caused by AI labor) above long running trends in expanding compute production and making it more efficient. As in, spending on compute production has been increasing each year and the efficiency of compute production (in terms of FLOP/$ or whatever) has also been increasing over time, and I'm talking about going 10x above this trend due to using AI labor to expand compute production (either revenue from AI labor or having AIs directly work on chips as I'll discuss in a later footnote). ↩︎

Note that I don't count converting fabs from making other chips (e.g., phones) to making AI chips as scaling up compute production; I'm just considering things that scale up the amount of AI chips we could somewhat readily produce. TSMC's revenue is "only" about $100 billion per year, so if only converting fabs is needed, this could be done without automation of compute production and justified on the basis of AI revenues that are substantially smaller than the revenues that would justify building many more fabs. Currently AI is around 15% of leading node production at TSMC, so only a few more doublings are needed for it to consume most capacity. ↩︎

Note that the AI could indirectly increase compute production via being sufficiently economically useful that it generates enough money to pay for greatly scaling up compute. I would count this as massive compute automation, though some routes through which the AI could be sufficiently economically useful might be less convincing of transformativeness than the AIs substantially automating the process of scaling up compute production. However, I would not count the case where AI systems are impressive enough to investors that this justifies investment that suffices for greatly scaling up fab capacity while profits/revenues wouldn't suffice for greatly scaling up compute on their own. In reality, if compute is greatly scaled up, this will occur via a mixture of speculative investment, the AI earning revenue, and the AI directly working on automating labor along the compute supply chain. If the revenue and direct automation would suffice for an at least massive compute scale-up (>10x) on their own (removing the component from speculative investment), then I would count this as massive compute automation. ↩︎

A large algorithmic advance isn't totally unprecedented. It could suffice if we see an advance similar to what seemingly happened with reasoning models like o1 and o3 in 2024. ↩︎

About 2/3 of this is driven by software-only singularity. ↩︎

↑ comment by Lukas Finnveden (Lanrian) · 2025-01-21T03:08:53.841Z · LW(p) · GW(p)

I'm not sure if the definition of takeover-capable-AI (abbreviated as "TCAI" for the rest of this comment) in footnote 2 quite makes sense. I'm worried that too much of the action is in "if no other actors had access to powerful AI systems", and not that much action is in the exact capabilities of the "TCAI". In particular: Maybe we already have TCAI (by that definition) because if a frontier AI company or a US adversary was blessed with the assumption "no other actor will have access to powerful AI systems", they'd have a huge advantage over the rest of the world (as soon as they develop more powerful AI), plausibly implying that it'd be right to forecast a >25% chance of them successfully taking over if they were motivated to try.

And this seems somewhat hard to disentangle from stuff that is supposed to count according to footnote 2, especially: "Takeover via the mechanism of an AI escaping, independently building more powerful AI that it controls, and then this more powerful AI taking over would" and "via assisting the developers in a power grab, or via partnering with a US adversary". (Or maybe the scenario in 1st paragraph is supposed to be excluded because current AI isn't agentic enough to "assist"/"partner" with allies as supposed to just be used as a tool?)

What could a competing definition be? Thinking about what we care most about... I think two events especially stand out to me:

- When would it plausibly be catastrophically bad for an adversary to steal an AI model?

- When would it plausibly be catastrophically bad for an AI to be power-seeking and non-controlled [LW · GW]?

Maybe a better definition would be to directly talk about these two events? So for example...

- "Steal is catastrophic" would be true if...

- "Frontier AI development projects immediately acquire good enough security to keep future model weights secure" has significantly less probability of AI-assisted takeover than

- "Frontier AI development projects immediately have their weights stolen, and then acquire security that's just as good as in (1a)."[1]

- "Power-seeking and non-controlled is catastrophic" would be true if...

- "Frontier AI development projects immediately acquire good enough judgment about power-seeking-risk that they henceforth choose to not deploy any model that would've been net-negative for them to deploy" has significantly less probability of AI-assisted takeover than

- "Frontier AI development acquire the level of judgment described in (2a) 6 months later."[2]

Where "significantly less probability of AI-assisted takeover" could be e.g. at least 2x less risk.

- ^

The motivation for assuming "future model weights secure" in both (1a) and (1b) is so that the downside of getting the model weights stolen imminently isn't nullified by the fact that they're very likely to get stolen a bit later, regardless. Because many interventions that would prevent model weight theft this month would also help prevent it future months. (And also, we can't contrast 1a'="model weights are permanently secure" with 1b'="model weights get stolen and are then default-level-secure", because that would already have a really big effect on takeover risk, purely via the effect on future model weights, even though current model weights probably aren't that important.)

- ^

The motivation for assuming "good future judgment about power-seeking-risk" is similar to the motivation for assuming "future model weights secure" above. The motivation for choosing "good judgment about when to deploy vs. not" rather than "good at aligning/controlling future models" is that a big threat model is "misaligned AIs outcompete us because we don't have any competitive aligned AIs, so we're stuck between deploying misaligned AIs and being outcompeted" and I don't want to assume away that threat model.

↑ comment by ryan_greenblatt · 2025-01-21T04:47:54.720Z · LW(p) · GW(p)

I agree that the notion of takeover-capable AI I use is problematic and makes the situation hard to reason about, but I intentionally rejected the notions you propose as they seemed even worse to think about from my perspective.

Replies from: Lanrian↑ comment by Lukas Finnveden (Lanrian) · 2025-01-21T05:47:07.968Z · LW(p) · GW(p)

Is there some reason for why current AI isn't TCAI by your definition?

(I'd guess that the best way to rescue your notion it is to stipulate that the TCAIs must have >25% probability of taking over themselves. Possibly with assistance from humans, possibly by manipulating other humans who think they're being assisted by the AIs — but ultimately the original TCAIs should be holding the power in order for it to count. That would clearly exclude current systems. But I don't think that's how you meant it.)

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-21T17:36:41.591Z · LW(p) · GW(p)

Oh sorry. I somehow missed this aspect of your comment.

Here's a definition of takeover-capable AI that I like: the AI is capable enough that plausible interventions on known human controlled institutions within a few months no longer suffice to prevent plausible takeover. (Which implies that making the situation clear to the world is substantially less useful and human controlled institutions can no longer as easily get a seat at the table.)

Under this definition, there are basically two relevant conditions:

- The AI is capable enough to itself take over autonomously. (In the way you defined it, but also not in a way where intervening on human institutions can still prevent the takeover, so e.g.., the AI just having a rogue deployment within OpenAI doesn't suffice if substantial externally imposed improvements to OpenAI's security and controls would defeat the takeover attempt.)

- Or human groups can do a nearly immediate takeover with the AI such that they could then just resist such interventions.

I'll clarify this in the comment.

Replies from: Lanrian↑ comment by Lukas Finnveden (Lanrian) · 2025-01-21T18:23:04.360Z · LW(p) · GW(p)

Hm — what are the "plausible interventions" that would stop China from having >25% probability of takeover if no other country could build powerful AI? Seems like you either need to count a delay as successful prevention, or you need to have a pretty low bar for "plausible", because it seems extremely difficult/costly to prevent China from developing powerful AI in the long run. (Where they can develop their own supply chains, put manufacturing and data centers underground, etc.)

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-21T18:45:00.531Z · LW(p) · GW(p)

Yeah, I'm trying to include delay as fine.

I'm just trying to point at "the point when aggressive intervention by a bunch of parties is potentially still too late".

↑ comment by Sam Marks (samuel-marks) · 2025-01-20T08:47:26.362Z · LW(p) · GW(p)

I really like the framing here, of asking whether we'll see massive compute automation before [AI capability level we're interested in]. I often hear people discuss nearby questions using IMO much more confusing abstractions, for example:

- "How much is AI capabilities driven by algorithmic progress?" (problem: obscures dependence of algorithmic progress on compute for experimentation)

- "How much AI progress can we get 'purely from elicitation'?" (lots of problems, e.g. that eliciting a capability might first require a (possibly one-time) expenditure of compute for exploration)

My inside view sense is that the feasibility of takeover-capable AI without massive compute automation is about 75% likely if we get AIs that dominate top-human-experts [LW · GW] prior to 2040.[6] [? · GW] Further, I think that in practice, takeover-capable AI without massive compute automation is maybe about 60% likely.

Is this because:

- You think that we're >50% likely to not get AIs that dominate top human experts before 2040? (I'd be surprised if you thought this.)

- The words "the feasibility of" importantly change the meaning of your claim in the first sentence? (I'm guessing it's this based on the following parenthetical, but I'm having trouble parsing.)

Overall, it seems like you put substantially higher probability than I do on getting takeover capable AI without massive compute automation (and especially on getting a software-only singularity). I'd be very interested in understanding why. A brief outline of why this doesn't seem that likely to me:

- My read of the historical trend is that AI progress has come from scaling up all of the factors of production in tandem (hardware, algorithms, compute expenditure, etc.).

- Scaling up hardware production has always been slower than scaling up algorithms, so this consideration is already factored into the historical trends. I don't see a reason to believe that algorithms will start running away with the game.

- Maybe you could counter-argue that algorithmic progress has only reflected returns to scale from AI being applied to AI research in the last 12-18 months and that the data from this period is consistent with algorithms becoming more relatively important relative to other factors?

- I don't see a reason that "takeover-capable" is a capability level at which algorithmic progress will be deviantly important relative to this historical trend.

I'd be interested either in hearing you respond to this sketch or in sketching out your reasoning from scratch.

Replies from: ryan_greenblatt, ryan_greenblatt, ryan_greenblatt↑ comment by ryan_greenblatt · 2025-01-21T04:43:00.816Z · LW(p) · GW(p)

I put roughly 50% probability on feasibility of software-only singularity.[1]

(I'm probably going to be reinventing a bunch of the compute-centric takeoff model [LW · GW] in slightly different ways below, but I think it's faster to partially reinvent than to dig up the material, and I probably do use a slightly different approach.)

My argument here will be a bit sloppy and might contain some errors. Sorry about this. I might be more careful in the future.

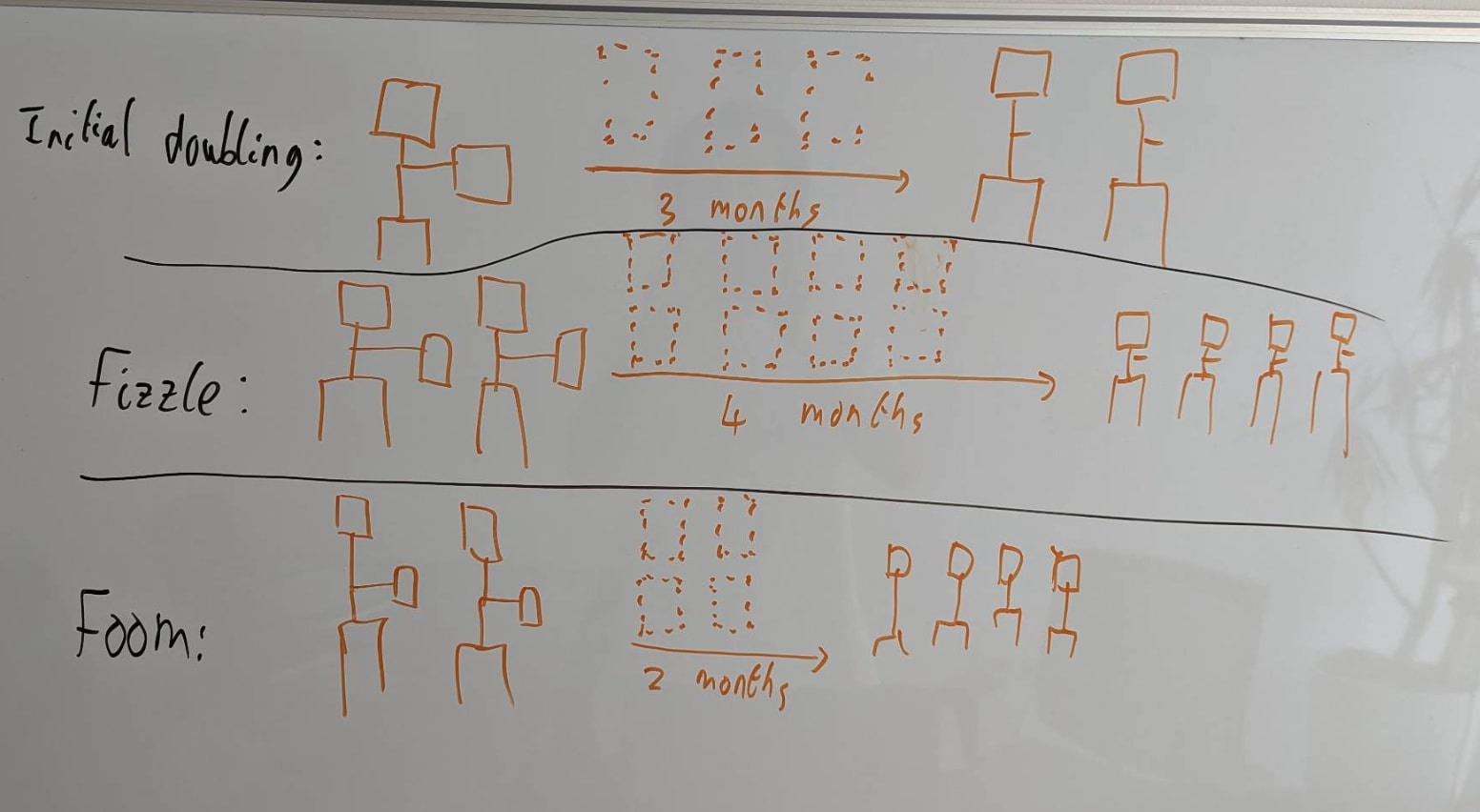

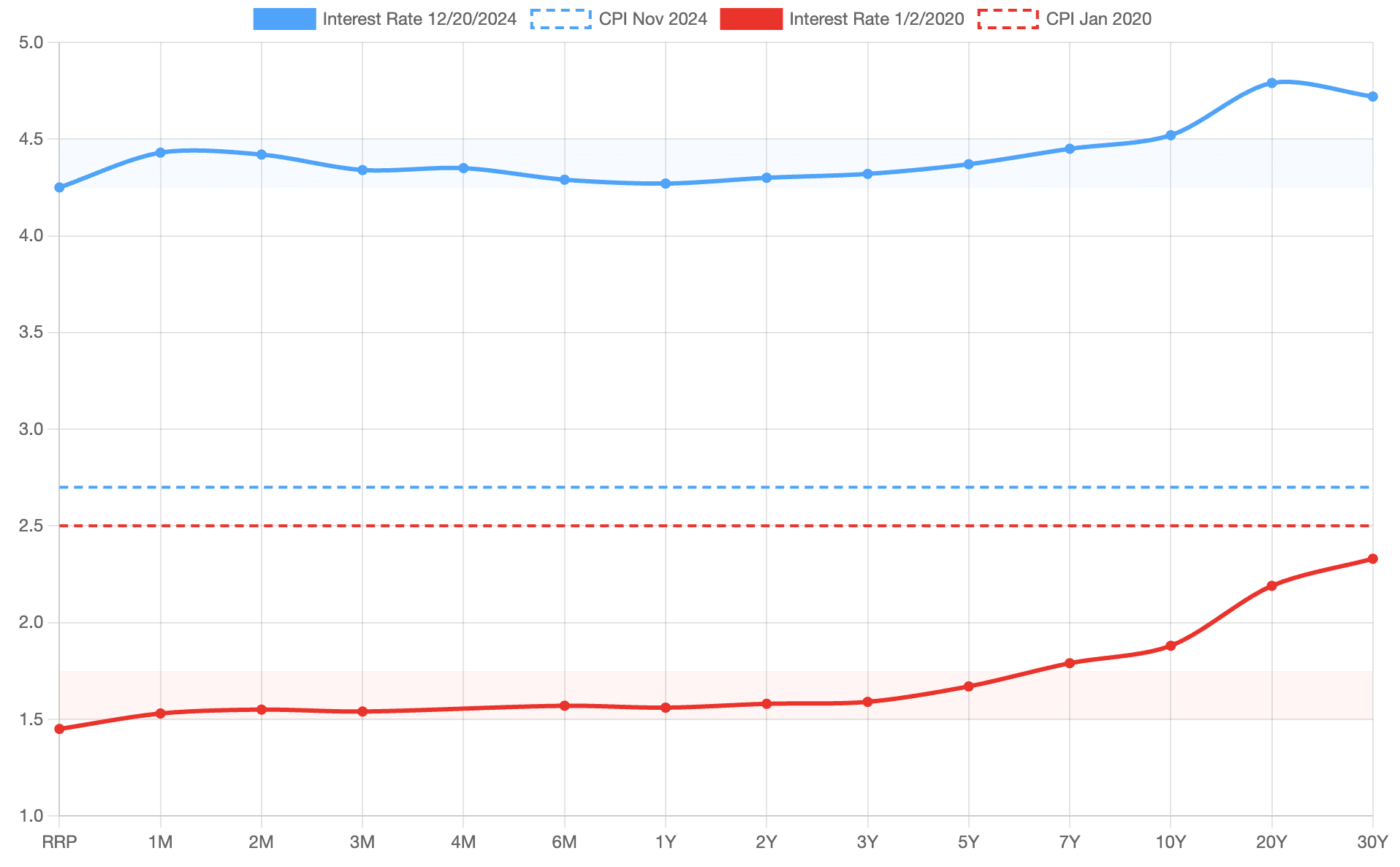

The key question for software-only singularity is: "When the rate of labor production is doubled (as in, as if your employees ran 2x faster[2]), does that more than double or less than double the rate of algorithmic progress? That is, algorithmic progress as measured by how fast we increase the labor production per FLOP/s (as in, the labor production from AI labor on a fixed compute base).". This is a very economics-style way of analyzing the situation, and I think this is a pretty reasonable first guess. Here's a diagram I've stolen from Tom's presentation on explosive growth illustrating this:

Basically, every time you double the AI labor supply, does the time until the next doubling (driven by algorithmic progress) increase (fizzle) or decrease (foom)? I'm being a bit sloppy in saying "AI labor supply". We care about a notion of parallelism-adjusted labor (faster laborers are better than more laborers) and quality increases can also matter. I'll make the relevant notion more precise below.

I'm about to go into a relatively complicated argument for why I think the historical data supports software-only singularity. If you want more basic questions answered (such as "Doesn't retraining make this too slow?"), consider looking at Tom's presentation on takeoff speeds [LW · GW].

Here's a diagram that you might find useful in understanding the inputs into AI progress:

And here is the relevant historical context in terms of trends:

- Historically, algorithmic progress in LLMs looks like 3-4x per year including improvements on all parts of the stack.[3] This notion of algorithmic progress is "reduction in compute needed to reach a given level of frontier performance", which isn't equivalent to increases in the rate of labor production on a fixed compute base. I'll talk more about this below.

- This has been accompanied by increases of around 4x more hardware per year[4] and maybe 2x more quality-adjusted (parallel) labor working on LLM capabilities per year. I think total employees working on LLM capabilities have been roughly 3x-ing per year (in recent years), but quality has been decreasing over time.

- A 2x increase in the quality-adjusted parallel labor force isn't as good as the company getting the same sorts of labor tasks done 2x faster (as in, the resulting productivity from having your employees run 2x faster) due to parallelism tax (putting aside compute bottlenecks for now). I'll apply the same R&D parallelization penalty as used in Tom's takeoff model and adjust this down by a power of 0.7 to yield 1.6x per year in increased labor production rate. (So, it's as though the company keeps the same employees, but those employees operate 1.6x faster each year.)

- It looks like the fraction of progress driven by algorithmic progress has been getting larger over time.

- So, overall, we're getting 3-4x algorithmic improvement per year being driven by 1.6x more labor per year and 4x more hardware.

So, the key question is how much of this algorithmic improvement is being driven by labor vs. by hardware. If it is basically all hardware, then the returns to labor must be relatively weak and software-only singularity seems unlikely. If it is basically all labor, then we're seeing 3-4x algorithmic improvement per year for 1.6x more labor per year, which means the returns to labor look quite good (at least historically). Based on some guesses and some poll questions, my sense is that capabilities researchers would operate about 2.5x slower if they had 10x less compute (after adaptation), so the production function is probably proportional to (). (This is assuming a cobb-douglas production function.) Edit: see the derivation of the relevant thing in Deep's comment [LW(p) · GW(p)], my old thing was wrong[5].

Now, let's talk more about the transfer from algorithmic improvement to the rate of labor production. A 2x algorithmic improvement in LLMs makes it so that you can reach the same (frontier) level of performance for 2x less training compute, but we actually care about a somewhat different notion for software-only singularity: how much you can increase the production rate of labor (the thing that we said was increasing at roughly a rate of 1.6x per year by using more human employees). My current guess is that every 2x algorithmic improvement in LLMs increases the rate of labor production by , and I'm reasonably confident that the exponent isn't much below . I don't currently have a very principled estimation strategy for this, and it's somewhat complex to reason about. I discuss this in the appendix below.

So, if this exponent is around 1, our central estimate of 2.3 from above corresponds to software-only singularity and our estimate of 1.56 from above under more pessimistic assumptions corresponds to this not being feasible. Overall, my sense is that the best guess numbers lean toward software-only singularity.

More precisely, software-only singularity that goes for >500x effective compute gains above trend (to the extent this metric makes sense, this is roughly >5 years of algorithmic progress). Note that you can have software-only singularity be feasible while buying tons more hardware at the same time. And if this ends up expanding compute production by >10x using AI labor, then this would count as massive compute production despite also having a feasible software-only singularity. (However, in most worlds, I expect software-only singularity to be fast enough, if feasible, that we don't see this.) ↩︎

Rather than denominating labor in accelerating employees, we could instead denominate in number of parallel employees. This would work equivalently (we can always convert into equivalents to the extent these things can funge), but because we can actually accelerate employees and the serial vs. parallel distinction is important, I think it is useful to denominate in accelerating employees. ↩︎

I would have previously cited 3x, but recent progress looks substantially faster (with DeepSeek v3 and reasoning models seemingly indicating somewhat faster than 3x progress IMO), so I've revised to 3-4x. ↩︎

This includes both increased spending and improved chips. Here, I'm taking my best guess at increases in hardware usage for training and transferring this to research compute usage on the assumption that training compute and research compute have historically been proportional. ↩︎

Edit: the reasoning I did here was off. Here was the old text: so the production function is probably roughly (). Increasing compute by 4x and labor by 1.6x increases algorithmic improvement by 3-4x, let's say 3.5x, so we have , . Thus, doubling labor would increase algorithmic improvement by . This is very sensitive to the exact numbers; if we instead used 3x slower instead of 2.5x slower, we would have gotten that doubling labor increases algorithmic improvement by , which is substantially lower. Obviously, all the exact numbers here are highly uncertain. ↩︎

↑ comment by deep · 2025-01-22T20:20:35.435Z · LW(p) · GW(p)

Hey Ryan! Thanks for writing this up -- I think this whole topic is important and interesting.

I was confused about how your analysis related to the Epoch paper, so I spent a while with Claude analyzing it. I did a re-analysis that finds similar results, but also finds (I think) some flaws in your rough estimate. (Keep in mind I'm not an expert myself, and I haven't closely read the Epoch paper, so I might well be making conceptual errors. I think the math is right though!)

I'll walk through my understanding of this stuff first, then compare to your post. I'll be going a little slowly (A) to help myself refresh myself via referencing this later, (B) to make it easy to call out mistakes, and (C) to hopefully make this legible to others who want to follow along.

Using Ryan's empirical estimates in the Epoch model

The Epoch model

The Epoch paper models growth with the following equation:

1. ,

where A = efficiency and E = research input. We want to consider worlds with a potential software takeoff, meaning that increases in AI efficiency directly feed into research input, which we model as . So the key consideration seems to be the ratio . If it's 1, we get steady exponential growth from scaling inputs; greater, superexponential; smaller, subexponential.[1]

Fitting the model

How can we learn about this ratio from historical data?

Let's pretend history has been convenient and we've seen steady exponential growth in both variables, so and . Then has been constant over time, so by equation 1, has been constant as well. Substituting in for A and E, we find that is constant over time, which is only possible if and the exponent is always zero. Thus if we've seen steady exponential growth, the historical value of our key ratio is:

2. .

Intuitively, if we've seen steady exponential growth while research input has increased more slowly than research output (AI efficiency), there are superlinear returns to scaling inputs.

Introducing the Cobb-Douglas function

But wait! , research input, is an abstraction that we can't directly measure. Really there's both compute and labor inputs. Those have indeed been growing roughly exponentially, but at different rates.

Intuitively, it makes sense to say that "effective research input" has grown as some kind of weighted average of the rate of compute and labor input growth. This is my take on why a Cobb-Douglas function of form (3) , with a weight parameter , is useful here: it's a weighted geometric average of the two inputs, so its growth rate is a weighted average of their growth rates.

Writing that out: in general, say both inputs have grown exponentially, so and . Then E has grown as , so is the weighted average (4) of the growth rates of labor and capital.

Then, using Equation 2, we can estimate our key ratio as .

Let's get empirical!

Plugging in your estimates:

- Historical compute scaling of 4x/year gives ;

- Historical labor scaling of 1.6x gives ;

- Historical compute elasticity on research outputs of 0.4 gives ;

- Adding these together, .[2]

- Historical efficiency improvement of 3.5x/year gives .

- So [3]

Adjusting for labor-only scaling

But wait: we're not done yet! Under our Cobb-Douglas assumption, scaling labor by a factor of 2 isn't as good as scaling all research inputs by a factor of 2; it's only as good.

Plugging in Equation 3 (which describes research input in terms of compute and labor) to Equation 1 (which estimates AI progress based on research), our adjusted form of the Epoch model is .

Under a software-only singularity, we hold compute constant while scaling labor with AI efficiency, so multiplied by a fixed compute term. Since labor scales as A, we have . By the same analysis as in our first section, we can see A grows exponentially if , and grows grows superexponentially if this ratio is >1. So our key ratio just gets multiplied by , and it wasn't a waste to find it, phew!

Now we get the true form of our equation: we get a software-only foom iff , or (via equation 2) iff we see empirically that . Call this the takeoff ratio: it corresponds to a) how much AI progress scales with inputs and b) how much of a penalty we take for not scaling compute.

Result: Above, we got , so our takeoff ratio is . That's quite close! If we think it's more reasonable to think of a historical growth rate of 4 instead of 3.5, we'd increase our takeoff ratio by a factor of , to a ratio of , right on the knife edge of FOOM. [4] [note: I previously had the wrong numbers here: I had lambda/beta = 1.6, which would mean the 4x/year case has a takeoff ratio of 1.05, putting it into FOOM land]

So this isn't too far off from your results in terms of implications, but it is somewhat different (no FOOM for 3.5x, less sensitivity to the exact historical growth rate).

Analyzing your approach:

Tweaking alpha:

Your estimate of is in fact similar in form to my ratio - but what you're calculating instead is .

One indicator that something's wrong is that your result involves checking whether , or equivalently whether , or equivalently whether . But the choice of 2 is arbitrary -- conceptually, you just want to check if scaling software by a factor n increases outputs by a factor n or more. Yet clearly varies with n.

One way of parsing the problem is that alpha is (implicitly) time dependent - it is equal to exp(r * 1 year) / exp(q * 1 year), a ratio of progress vs inputs in the time period of a year. If you calculated alpha based on a different amount of time, you'd get a different value. By contrast, r/q is a ratio of rates, so it stays the same regardless of what timeframe you use to measure it.[5]

Maybe I'm confused about what your Cobb-Douglas function is meant to be calculating - is it E within an Epoch-style takeoff model, or something else?

Nuances:

Does Cobb-Douglas make sense?

The geometric average of rates thing makes sense, but it feels weird that that simple intuitive approach leads to a functional form (Cobb-Douglas) that also has other implications.

Wikipedia says Cobb-Douglas functions can have the exponents not add to 1 (while both being between 0 and 1). Maybe this makes sense here? Not an expert.

How seriously should we take all this?

This whole thing relies on...

- Assuming smooth historical trends

- Assuming those trends continue in the future

- And those trends themselves are based on functional fits to rough / unclear data.

It feels like this sort of thing is better than nothing, but I wish we had something better.

I really like the various nuances you're adjusting for, like parallel vs serial scaling, and especially distinguishing algorithmic improvement from labor efficiency. [6] Thinking those things through makes this stuff feel less insubstantial and approximate...though the error bars still feel quite large.

- ^

Actually there's a complexity here, which is that scaling labor alone may be less efficient than scaling "research inputs" which include both labor and compute. We'll come to this in a few paragraphs.

- ^

This is only coincidentally similar to your figure of 2.3 :)

- ^

I originally had 1.6 here, but as Ryan points out in a reply it's actually 1.5. I've tried to reconstruct what I could have put into a calculator to get 1.6 instead, and I'm at a loss!

- ^

I was curious how aggressive the superexponential growth curve would be with a takeoff ratio of a mere. A couple of Claude queries gave me different answers (maybe because the growth is so extreme that different solvers give meaningfully different approximations?), but they agreed that growth is fairly slow in the first year (~5x) and then hits infinity by the end of the second year.I wrote this comment with the wrong numbers (0.96 instead of 0.9), so it doesn't accurately represent what you get if you plug in 4x capability growth per year. Still cool to get a sense of what these curves look like, though. - ^

I think can be understood in terms of the alpha-being-implicitly-a-timescale-function thing -- if you compare an alpha value with the ratio of growth you're likely to see during the same time period, e.g. alpha(1 year) and n = one doubling, you probably get reasonable-looking results.

- ^

I find it annoying that people conflate "increased efficiency of doing known tasks" with "increased ability to do new useful tasks". It seems to me that these could be importantly different, although it's hard to even settle on a reasonable formalization of the latter. Some reasons this might be okay:

- There's a fuzzy conceptual boundary between the two: if GPT-n can do the task at 0.01% success rate, does that count as a "known task?" what about if it can do each of 10 components at 0.01% success, so in practice we'll never see it succeed if run without human guidance, but we know it's technically possible?

- Under a software singularity situation, maybe the working hypothesis is that the model can do everything necessary to improve itself a bunch, maybe just not very efficiently yet. So we only need efficiency growth, not to increase the task set. That seems like a stronger assumption than most make, but maybe a reasonable weaker assumption is that the model will 'unlock' the necessary new tasks over time, after which point they become subject to rapid efficiency growth.

- And empirically, we have in fact seen rapid unlocking of new capabilities, so it's not crazy to approximate "being able to do new things" as a minor but manageable slowdown to the process of AI replacing human AI R&D labor.

↑ comment by ryan_greenblatt · 2025-01-24T18:02:48.986Z · LW(p) · GW(p)

I think you are correct with respect to my estimate of and the associated model I was using. Sorry about my error here. I think I was fundamentally confusing a few things in my head when writing out the comment.

I think your refactoring of my strategy is correct and I tried to check it myself, though I don't feel confident in verifying it is correct.

Your estimate doesn't account for the conversion between algorithmic improvement and labor efficiency, but it is easy to add this in by just changing the historical algorithmic efficiency improvement of 3.5x/year to instead be the adjusted effective labor efficiency rate and then solving identically. I was previously thinking the relationship was that labor efficiency was around the same as algorithmic efficiency, but I now think this is more likely to be around based on Tom's comment [LW(p) · GW(p)].

Plugging this is, we'd get: