We are already in a persuasion-transformed world and must take precautions

post by trevor (TrevorWiesinger) · 2023-11-04T15:53:31.345Z · LW · GW · 14 comments

"In times of change, learners inherit the earth, while the learned find themselves beautifully equipped to deal with a world that no longer exists"

—Eric Hoffer

Summary:

We're already in the timeline where the research and manipulation of the human thought process is widespread; SOTA psychological research systems require massive amounts of human behavior data, which in turn requires massive numbers of unsuspecting test subjects (users) in order to automate the process of analyzing and exploiting human targets. This therefore must happen covertly, and both the US and China have a strong track record of doing things like this. This outcome is a strong attractor state since anyone with enough data can do it, and it naturally follows that powerful organizations would deny others access e.g. via data poisoning. Most people are already being persuaded that this is harmless, even though it is obviously ludicrously dangerous. Therefore, we are probably already in a hazardously transformative world and must take standard precautions [LW · GW] immediately.

This should not distract people from AI safety. This is valuable because the AI safety community must survive. This problem connects to the AI safety community in the following way:

State survival and war power ==> [LW · GW] already depends on information warfare capabilities.

Information warfare capabilities ==> [LW · GW] already depends on SOTA psychological research systems.

SOTA psychological research systems ==> [LW · GW] already improves and scales mainly from AI capabilities research, diminishing returns on everything else.[1]

AI capabilities research ==> [LW · GW] already under siege from the AI safety community.

Therefore, the reason why this might be such a big concern is:

State survival and war power ==> [LW · GW] their toes potentially already being stepped on by the AI safety community?

Although it's also important to note that people with access to SOTA psychological research systems are probably super good at intimidation and bluffing [LW · GW], it's also the case that the AI safety community needs to get a better handle on the situation if we are in the bad timeline; and the math indicates that we are already well past that point [? · GW].

The Fundamental Problem

If there were intelligent aliens, made of bundles of tentacles or crystals or plants that think incredibly slowly, their minds would also have discoverable exploits/zero days, because any mind that evolved naturally would probably be like the human brain, a kludge of spaghetti code [LW · GW] that is operating outside of its intended environment.

They would probably not even begin to scratch the surface of finding and labeling those exploits, until, like human civilization today, they began surrounding thousands or millions of their kind with sensors that could record behavior several hours a day and find webs of correlations [LW · GW].

In the case of humans, the use of social media as a controlled environment for automated AI-powered experimentation appears to be what created that critical mass of human behavior data. Current 2020s capabilities for psychological research and manipulation vastly exceed the 20th century academic psychology paradigm.

The 20th century academic psychology paradigm still dominates our cultural impression of what it means to research the human mind; but when the effectiveness of psychological research and manipulation starts increasing by an order of magnitude every 4 years, it becomes time to stop mentally living in a world that was stabilized by the fact that manipulation attempts generally failed.
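To make the "order of magnitude every 4 years" figure concrete, here is a minimal arithmetic sketch. It assumes the growth compounds smoothly at a constant rate, which is my simplification and not something the estimate itself guarantees; the function names are hypothetical.

```python
def annual_growth_factor(factor_per_period: float, period_years: float) -> float:
    """Constant yearly multiplier implied by growing `factor_per_period`x every `period_years` years."""
    return factor_per_period ** (1.0 / period_years)


def cumulative_growth(factor_per_period: float, period_years: float, years: float) -> float:
    """Total multiplier after `years` of the same compounding growth."""
    return factor_per_period ** (years / period_years)


if __name__ == "__main__":
    # 10x every 4 years works out to a bit under 1.8x per year.
    print(round(annual_growth_factor(10, 4), 2))
    # Two 4-year periods compound to 100x.
    print(round(cumulative_growth(10, 4, 8)))
```

Under that assumption, two periods already compound to a hundredfold change, which is why intuitions formed even a decade earlier would be badly out of date.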

The capabilities of social media to steer human outcomes are not advancing in isolation; they parallel a broad acceleration in the understanding and exploitation of the human mind, which itself is a byproduct of accelerating AI capabilities research.

By comparing people to other people and predicting traits and future behavior, multi-armed bandit algorithms can predict whether a specific research experiment or manipulation strategy is worth the risk of undertaking in the first place, resulting in large numbers of success cases and a low detection rate (as detection would likely yield a highly measurable response, particularly with substantial sensor exposure).
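For readers who haven't met the term, a multi-armed bandit is just a procedure that splits trials between exploring options and exploiting whichever option has worked best so far. The sketch below is a generic textbook epsilon-greedy bandit run against simulated arms; it is not a description of any platform's actual system, and the success rates, `epsilon`, and round count are all made-up illustration values.

```python
import random


def epsilon_greedy(true_rates, rounds=10_000, epsilon=0.1, seed=0):
    """Run an epsilon-greedy bandit against simulated arms.

    true_rates: hypothetical per-arm success probabilities, hidden from the learner.
    Returns (pull counts per arm, estimated success rate per arm).
    """
    rng = random.Random(seed)
    n_arms = len(true_rates)
    pulls = [0] * n_arms
    estimates = [0.0] * n_arms

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: try a random arm to keep learning about all of them.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: pick the arm with the best estimated success rate so far.
            arm = max(range(n_arms), key=lambda i: estimates[i])
        reward = 1.0 if rng.random() < true_rates[arm] else 0.0
        pulls[arm] += 1
        # Incremental update of the running mean for the chosen arm.
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]

    return pulls, estimates


if __name__ == "__main__":
    pulls, estimates = epsilon_greedy([0.02, 0.05, 0.20])
    print(pulls)
    print([round(e, 3) for e in estimates])
```

The relevant property is that most trials end up concentrated on whichever arm performs best, without anyone needing to understand why it performs best.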

When you have sample sizes of billions of hours of human behavior data and sensor data, millisecond differences in reactions from different kinds of people (e.g. facial microexpressions, millisecond differences at scrolling past posts covering different concepts, heart rate changes after covering different concepts, eyetracking differences after eyes passing over specific concepts, touchscreen data, etc) transform from being imperceptible noise to becoming the foundation of webs of correlations mapping the human mind.
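The statistical point here is that the noise around an average shrinks with the square root of the sample size. A rough sketch, assuming two equally sized groups with the same standard deviation and using "the difference equals about two standard errors" as a crude detectability threshold (not a full power analysis); the 5 ms and 200 ms figures are invented for illustration:

```python
import math


def standard_error_of_difference(sd: float, n_per_group: int) -> float:
    """Standard error of the difference between two group means (equal sd and n)."""
    return sd * math.sqrt(2.0 / n_per_group)


def samples_needed(effect: float, sd: float, z: float = 1.96) -> int:
    """Per-group sample size at which `effect` equals `z` standard errors."""
    return math.ceil(2.0 * (z * sd / effect) ** 2)


if __name__ == "__main__":
    # Hypothetical numbers: a 5 ms average difference buried in 200 ms of noise.
    n = samples_needed(effect=5.0, sd=200.0)
    print(n)
    print(round(1.96 * standard_error_of_difference(200.0, n), 3))
```

On those assumed numbers, a difference far too small to notice in any individual becomes distinguishable with samples in the low tens of thousands, which is tiny next to billions of hours of data.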

The movement of scrolling past a piece of information on a social media news feed, with a mouse wheel or touch screen, generates at least one curve, since the finger accelerates and decelerates each time. Billions of people output trillions of those curves each day. Unfortunately, these curves are readily expressed as vectors, which makes them the perfect shape to plug into ML.

Social media’s individualized targeting uses deep learning and massive samples of human behavioral data to procedurally generate an experience that fits the human mind like a glove, in ways we don't fully understand, but that give hackers incredible leeway to optimize for steering people’s thinking or behavior, insofar as the direction of that steering is measurable. This is something that AI can easily automate. I originally thought that going deeper into human thought reading/interpretability was impossible, but I was wrong; the human race is already well past that point as well, due to causality inference [? · GW].

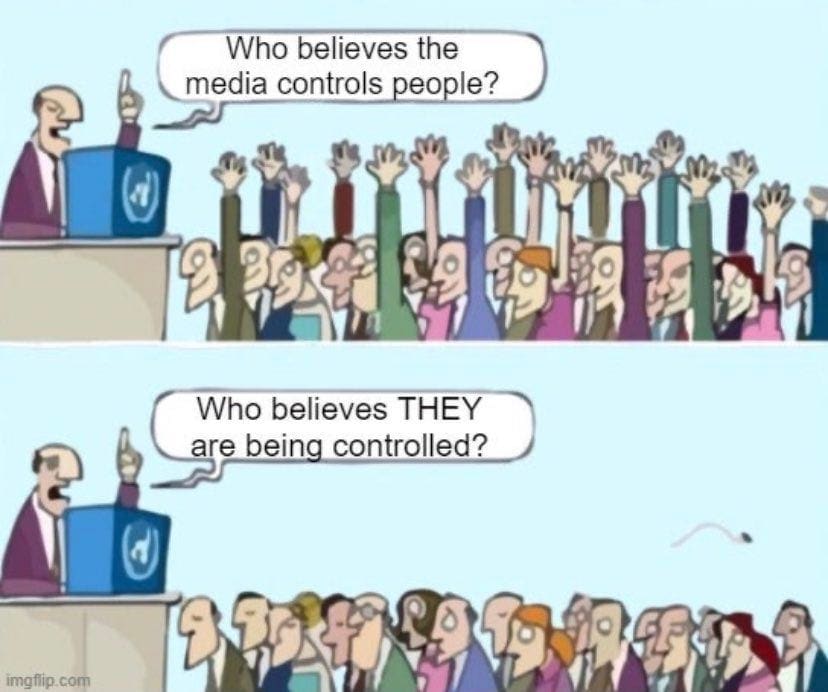

Most people see social media influence as something that happens to people lower on the food chain, but this is no longer true; there is at least one technique, Clown Attacks [LW · GW], that generally works on all humans who aren't already aware of it, regardless of intelligence or commitment to truthseeking; and that particular technique became discoverable with systems vastly weaker than the ones that exist today. I don't know what manipulation strategies the current systems can find, but I can predict with great confidence that they're well above the clown attack.

First it started working on 60% of people, and I didn’t speak up, because my mind wasn’t as predictable as people in that 60%. Then, it started working on 90% of people, and I didn’t speak up, because my mind wasn’t as predictable as the people in that 90%. Then it started working on me. And by then, it was already too late, because it was already working on me.

They would notice and pursue these capabilities

If the big 5 tech companies (Facebook, Google, Microsoft, Amazon, and Apple) notice ways to steer people towards buying specific products, or to feel a wide variety of compulsions to avoid quitting the platform, and to prevent/counteract other platforms from running multi-armed bandit algorithms to automatically exploit strategies (e.g. combinations of posts) to plunder their users [LW · GW], then you can naturally assume that they've noticed their capabilities to steer people in a wide variety of other directions as well.

The problem is that major governments and militaries are overwhelmingly incentivized and well-positioned to exploit those capabilities for offensive and defensive information warfare [? · GW]; if American companies abstain from manipulation capabilities, and Chinese companies naturally don't, then American intelligence agencies worry about a gap in information warfare capabilities and push the issue.

Government/military agencies are bottlenecked by competence [LW · GW], which is difficult to measure due to a lack of transparency at higher levels and high employee turnover at lower levels [LW · GW], but revolving-door employment easily allows them to source flexible talent from the talent pools of the big 5 tech companies; this practice is endangered by information warfare itself [LW · GW], further driving interest in information warfare superiority.

Access to tech company talent pools also determines the capability of intelligence agencies to use the OS exploits and chip firmware exploits needed to access the sensors in the devices of almost any American, not just the majority of Americans who leave various sensor permissions on, which allows even greater access to the psychological research needed to compromise critical elites such as the AI safety community [LW · GW].

The capabilities of these systems to run deep Bayesian analysis on targets dramatically improve with more sensor data, particularly video feed of the face and eyes [? · GW]; this is not necessary to run interpretability on the human brain [? · GW], but it increases these capabilities by yet more orders of magnitude (particularly for lie detection [? · GW] and conversation topic aversion).

This can't go on

There isn’t much point in having a utility function in the first place if hackers can change it at any time. There might be parts that are resistant to change, but it’s easy to overestimate yourself on this, especially if the effectiveness of SOTA influence systems has been increasing by an order of magnitude every 4 years. Your brain has internal conflicts, and they have human internal causality interpretability systems [? · GW]. You get constant access to the surface [LW · GW], but they get occasional access to the deep.

The multi-armed bandit algorithm will keep trying until it finds something that works. The human brain is a kludge of spaghetti code, so there’s probably something somewhere.

The human brain has exploits, and the capability and cost of social media platforms to use massive amounts of human behavior data to find complex social engineering techniques is a profoundly technical matter; you can’t get a handle on this with intuition or pre-2010s historical precedent.

Thus, you should assume that your utility function and values are at risk of being hacked at an unknown time, and should therefore be assigned a discount rate to account for the risk over the course of several years.
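One way to put numbers on that discount rate is a simple survival calculation. This is only a sketch under a strong assumption of mine, namely a constant and independent risk of compromise each year; the 5% figure is purely illustrative, since the actual risk is unknown.

```python
def survival_probability(annual_risk: float, years: float) -> float:
    """Probability of staying uncompromised for `years`, given a constant annual risk."""
    return (1.0 - annual_risk) ** years


def discounted_value(value: float, annual_risk: float, years: float) -> float:
    """Expected value of something that only counts if your values survive intact."""
    return value * survival_probability(annual_risk, years)


if __name__ == "__main__":
    # Hypothetical 5% yearly risk over a 10-year horizon.
    print(round(survival_probability(0.05, 10), 3))
    print(round(discounted_value(100.0, 0.05, 10), 1))
```

Even a risk that looks small per year compounds into a substantial discount over a decade, which is the sense in which treating it as zero stops being defensible.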

Slow takeoff over the course of the next 10 years alone guarantees that this discount rate is, in reality, too high for people in the AI safety community to go on believing that it is something like zero.

I think that approaching zero is a reasonable target, but not with the current state of affairs where people don’t even bother to cover up their webcams, have important and sensitive conversations about the fate of the earth in rooms with smartphones, sleep in the same room or even the same bed as a smartphone, and use social media for nearly an hour a day (scrolling past nearly a thousand posts).

The discount rate in this environment cannot be considered “reasonably” close to zero if the attack surface is this massive and the world is changing this quickly. We are gradually becoming intractably compromised by slow takeoff before the party even starts.

If people have anything they value at all [LW · GW], and the AI safety community almost certainly does have that, then the current AI safety paradigm of zero effort is wildly inappropriate; it is basically total submission to invisible hackers [? · GW]. The human brain has low-hanging exploits [LW · GW] and the structures of power have already been set in motion [LW · GW], so we must take the standard precautions [LW · GW] immediately.

The AI safety community must survive the storm.

[1] e.g. Facebook could hire more psychologists to label data and correlations, but that carries a greater risk of one of them breaking their NDA and trying to leak Facebook's manipulation capabilities to the press, like Snowden and Fishback. Stronger AI means more ways to make users label their own data.

14 comments

comment by gull · 2023-11-04T18:24:56.238Z · LW(p) · GW(p)

It seems like if capabilities are escalating like that, it's important to know how long ago it started. I don't think the order-of-magnitude-every-4-years would last (compute bottleneck maybe?), but I see what you're getting at, with the loss of hope for agency and stable groups happening on a function that potentially went bad a while ago.

Having forecasts about state-backed internet influence during the Arab Spring and other post-2008 conflicts seems like it would be important for estimating how long ago the government interest started, since that was close to the Deep Learning revolution. Does anyone have good numbers for these?

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-11-04T18:41:04.318Z · LW(p) · GW(p)

I agree with all of this, except 4 years is a lot of time lately and the empirical record from here to 2015 (in 2015 I don't think there were many capabilities at all aside from bots drowning out voices and things like clown attacks; Yann LeCun wrote a great post on how these systems are hard to engineer in practice) suggests that the rate will continue without big increases in investment, especially since compute production alone can generate a large portion of that OOM every 4 years, and a fixed proportion of compute will be going into psych research, and there's also stuff like edge computing/5G.

I don't have good models on the Arab Spring other than that authoritarian states face pretty strong incentives to blame all kinds of domestic problems on foreign influence ops from the West. It's a pretty bad equilibrium since influence ops actually do come out of the West.

comment by RationalDino · 2023-11-04T18:18:48.752Z · LW(p) · GW(p)

Sorry, but you're overthinking what's required. Simply being able to reliably use existing techniques is more than enough to hack the minds of large groups of people, no complex new research needed.

Here is a concrete example.

First, if you want someone's attention, just make them feel listened to. ELIZA could already successfully do this in the 1960s, and ChatGPT is better. The result is what therapists call transference, and it causes the person to wish to please the AI.

Now the AI can use the same basic toolkit mastered by demagogues throughout history. Use simple and repetitive language to hit emotional buttons over and over again. Try to get followers to form a social group. Switch positions every so often. Those that pay insufficient attention will have the painful experience of being attacked by their friends, and it forces everyone to pay more attention.

All of this is known and effective. What AI brings is that it can use individualized techniques, at scale, to suck people into many target groups. And once they are in those groups, it can use the demagogue's techniques to erase differences and get them aligned into ever bigger groups.

The result is that, as Sam Altman predicted, LLMs will prove superhumanly persuasive. They can beat the demagogues at their own game by seeding mass persuasion techniques with individual attention at scale.

Do you think that this isn't going to happen? Social media accidentally did a lot of this at scale. Now it is just a question of weaponizing something like TikTok.

Replies from: dr_s, TrevorWiesinger↑ comment by dr_s · 2023-11-05T08:02:53.880Z · LW(p) · GW(p)

Yes, like the example of "Clown Attacks" isn't at all novel or limited to AI, it's old stuff. And it's not even true that you can't be resistant to them, though these days going actively against peer pressure in these things isn't very fashionable. That said, the biggest risk of LLMs right now is indeed IMO how well they can enact certain forms of propaganda and sentiment analysis en masse. No longer can I say "the government wouldn't literally read ALL your emails to figure out what you think, you're not worth the work it would take": now it might, because the cost has dramatically dropped.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-11-05T14:18:28.929Z · LW(p) · GW(p)

> That said, the biggest risk of LLMs right now is indeed IMO how well they can enact certain forms of propaganda and sentiment analysis en masse.

I agree with the contents of this comment in general, but not the idea that propaganda generation is the greatest risk. Lots of people know about that already, and I'd argue that the risk to democracy via persuading the masses isn't very tractable, whereas the risk to the AI safety community via manipulating elites in random ways with automated high-level psychology research, is very tractable (minimize sensor exposure [LW · GW]).

Replies from: dr_s↑ comment by dr_s · 2023-11-06T10:00:15.017Z · LW(p) · GW(p)

My point wasn't that it would be a very new capability in general, but it could be deployed at a scale and cost impossible before. Armies of extremely smart and believable bots flooding social media all around. The "huh, everyone else except me thinks X, maybe they do have a point/my own belief is hopeless" gregariousness effect is real, and has often been used already, but this allows bad actors to take it to a whole new level. This could also be deployed as you say against AI safety itself, but not exclusively.

↑ comment by trevor (TrevorWiesinger) · 2023-11-04T18:47:42.602Z · LW(p) · GW(p)

I think that this is pretty appropriately steelmanned, given that there are tons of people here who do ML daily way better than I ever could in my best moments, but they're clueless about the industry/economics/natsec side and that's a quick fix on my part. If I'm underestimating the severity of the situation then they'd know.

I think that this is more like zero days in the human brain; like performing a magic trick that prevents an entire room full of string theorists from thinking about something that's important to them e.g. lab leak hypothesis. Making people talk about specific concepts in their sleep. Lie detectors that only need a voice recording from a distance. Seeing which concepts scare people and which concepts don't based on small changes in their heart rate. etc.

My intuition says that engineering problems like these are hard and all sorts of problems crop up, not just Goodhart's law. Yann LeCun wrote a great post on this:

1. Building something that works in the real world is harder than what most armchair AI safety folks think.

2. There is a natural tendency to exaggerate the potential risks of your own work, because it makes you feel powerful and important.

This seems pretty in line with some pretty fundamental engineering principles e.g. spaghetti code systems [LW · GW]. I think that a big part of it is that, unlike in China, western bigtech companies and intelligence agencies are restricted to small workforces of psychology researchers/data labellers/hypothesis generators due to Snowden risk. That said, discovering the human internal causality interpretability dynamic [LW · GW] was a big update in the other direction, since it makes it really easy to get away with doing a ton of stuff to people with very little data and older AI systems.

Replies from: RationalDino↑ comment by RationalDino · 2023-11-04T20:07:32.097Z · LW(p) · GW(p)

I disagree that people who do ML daily would be in a good position to judge the risks here. The key issue is not the capabilities of AI, but rather the level of vulnerability of the brain. Since they don't study that, they can't judge it.

It is like how scientists proved to be terrible at unmasking charlatans like Uri Geller. Nature doesn't actively try to fool us, charlatans do. The people with actual relevant expertise were people who studied how people can be fooled. Which meant magicians like James Randi. Similarly, to judge this risk, I think you should look at how dictators, cult leaders, and MLM companies operate.

A century ago Benito Mussolini figured out how to use mass media to control the minds of a mass audience. He used this to generate a mass following, and became dictator of Italy. The same vulnerabilities exploited the same way have become a staple for demagogues and would-be dictators ever since. But human brains haven't been updated. And so Donald Trump has managed to use the same basic rootkit to amass about 70 million devoted followers. As we near the end of 2023, he still has a chance of successfully overthrowing our democracy if he can avoid jail.

Your thinking about zero days is a demonstration of how thinking in terms of computers can mislead you. What matters for an attack is the availability of vulnerable potential victims. In computers there is a correlation between novelty and availability. Before anyone knows about a vulnerability, everyone is available for your attack. Then it is discovered, a patch is created, and availability goes down as people update. But humans don't simply upgrade to brain 2.1.8 to fix the vulnerabilities found in brain 2.1.7. People can be brainwashed today by the same techniques that the CIA was studying when they funded the Reverend Sun Myung Moon back in the 1960s.

You do make an excellent point about the difficulty of building something that can work at scale in the real world. Which is why I focused my scenario on techniques that have worked, repeatedly, at scale. We know that they can work, because they have worked. We see it in operation whenever we study the propaganda techniques used by dictators like Putin.

Given these examples, the question stops being an abstract, "Can AI find vulnerabilities by which we can be exploited?" It then switches to, "Is AI capable of executing effective variants on the strategies that dictators, cult leaders and MLM founders have already shown work at scale against human minds?"

I think that the answer is a pretty clear yes. Properly directed, ChatGPT should be more than capable of doing this. We then have the hallmark of a promising technology, we know that nothing fundamentally new is required. It is just a question of execution.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-11-04T23:48:37.124Z · LW(p) · GW(p)

My thinking about this (and other people like Tristan Harris who can't think about superintelligence) is that the big difference is that persuasion, as a science, is getting amplified by orders of magnitude greater than the 20th century.

As a result, the AI safety community is at risk of getting blindsided by manipulation strategies that we're vulnerable to because we don't recognize them.

I don't imagine CFAR [LW · GW]'s founders as being particularly vulnerable to clown attacks, for example, but they would also fail to notice clown attacks being repeatedly tested against them; so it stands to reason that today's AI would be able to locate something that would both work on them AND prevent them from noticing, if it had enough social media scrolling data to find novel strategies based on results.

I'm less interested in the mass psychology stuff from the 2020s because a lot of that was meant to target elites who influenced more people downstream [LW · GW], and elites are now harder to fool than in the 20th century; and also, if democracy dies, then it dies, and it's up to us to not die with it. One of the big issues with AI targeting people based on bayes-predicted genes is that it can find one-shot strategies, including selecting 20th century tactics with the best odds of success.

This is why I think that psychology is critical, especially for interpreting causal data [LW · GW], but also we shouldn't expect things to be too similar to the 20th century because it's a new dimension, and the 21st century is OOD anyway (OOD is similar to the butterfly effect, changes cause a cascade of other changes).

Replies from: RationalDino↑ comment by RationalDino · 2023-11-05T21:25:04.029Z · LW(p) · GW(p)

With all due respect, I see no evidence that elites are harder to fool now than they were in the past. For concrete examples, look at the ones who flipped to Trump over several years. The Corruption of Lindsey Graham gives an especially clear portrayal about how one elite went from condemning Trump to becoming a die-hard supporter.

I dislike a lot about Mr. Graham. But there is no question that he was smart and well aware of how authoritarians gain power. He saw the risk posed by Trump very clearly. However he knew himself to be smart, and thought he could ride the tiger. Instead, his mind got eaten.

Moving on, I believe that you are underestimating the mass psychology stuff. Remember, I'm suggesting it as a floor to what could already be done. New capabilities and discoveries allow us to do more. But what should already be possible is scary enough.

However that is a big topic. I went into it in AI as Super-Demagogue [LW · GW] which you will hopefully find interesting.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2023-11-06T03:41:14.671Z · LW(p) · GW(p)

I think that it's generally really hard to get a good sense of what's going on when it comes to politicians, because so much of what they do is intended to make a persona believable and disguise the fact that most of the policymaking happens elsewhere.

> Moving on, I believe that you are underestimating the mass psychology stuff. Remember, I'm suggesting it as a floor to what could already be done. New capabilities and discoveries allow us to do more. But what should already be possible is scary enough.

That's right, the whole point of the situation is that everything I've suggested is largely just a floor for what's possible, and in order to know the current state of the limitations, you need to have the actual data sets + watch the innovation as it happens. Hence why the minimum precautions [LW · GW] are so important.

> I went into it in AI as Super-Demagogue [LW · GW] which you will hopefully find interesting.

I'll read this tomorrow or the day after, this research area has tons of low-hanging fruit and few people looking into it.

comment by Jackson Wagner · 2023-11-06T02:00:13.932Z · LW(p) · GW(p)

Hi Trevor! I appreciate this thread of related ideas that you have been developing about intelligence agencies, AI-augmented persuasion techniques, social media, etc.

- It seems important to "think ahead" about how the power-struggle over AI will play out as things escalate to increasingly intense levels, involving eg national governments and militaries and highly-polarized political movements and etc.

- Obviously if some organization was hypercompetent and super-good at behind-the-scenes persuasion, we wouldn't really know about it! So it is hard to 100% confidently dismiss the idea that maybe the CIA has next-gen persuasion tech, or whatever.

- Obviously we are already, to a large extent, living in a world that is shaped by the "marketplace of ideas", where the truth often gets outcompeted by whatever sounds best / is most memetically fit. Thinking about these dynamics (even without anything AI-related or any CIA conspiracies) is confusing, but seems very important. Eg, I myself have been deeply shaped by the crazy memetic landscape in ways that I partially endorse and partially don't. And everything I might try to do to achieve impact in the world needs to navigate the weird social landscape of human society, which in many respects is in a kind of memetic version of the "equilibrium of no free energy" that Yudkowsky talks about in Inadequate Equilibria (although there he is talking mostly about an individual-incentives landscape, rather than a memetic landscape).

- AI super-persuasion does seem like something we might plausibly get before we get general ASI, which seems like it could be extremely weird / dangerous / destabilizing.

That said, I think this post is too conspiratorial in assuming that some combination of social media companies / national governments understand how to actually deploy effective persuasion techniques in a puppetmaster-like way which is way beyond everyone else. I think that the current situation is more like "we are living in an anarchic world influenced by an out-of-control memetic marketplace of ideas being influenced by many different actors of varying levels of sophistication, none of whom have amazing next-level gameboard-flipping dominance". Some scattered thoughts on this theme:

- If the CIA (or other entities affiliated with the US government, including tech companies being pressured by the government) is so good at persuasion ops, why are there so many political movements that seem to go against the CIA's interests? Why hasn't the government been able to use its persuasion jiujitsu to neutralize wokeism and Trump/MAGA-ism? From an establishment perspective, both of these movements seem to be doing pretty serious damage to US culture/institutions. Maybe these are both in the process of being taken down by "clown attacks" (although to my eye, this looks less like an "attack" from CIA saboteurs, and more like a lot of genuine ordinary people in the movement themselves just being dumb / memetic dynamics playing out deterministically via social dynamics like Yudkowsky's "evaporative cooling of group beliefs")? Or maybe ALL of, eg, wokeism, is one GIANT psy-op to distract the American people from creating a left-wing movement that is actually smart and effective? (I definitely believe something like this, but I don't believe it's a deliberate military psy-op... rather it's an emergent dynamic. Consider how corporations are differentially friendlier to wokeism than they are to a more economically-focused, class-based Bernie-ism, so wokeism has an easier time spreading and looking successful, etc. It also helps that wokeism is memetically optimized to appeal to people in various ways, whereas a genuinely smart-and-effective left-wing policy idea like Georgism comes off as boring, technocratic, and hard-to-explain.)

- Basically, what I am saying is that our national politics/culture looks like the product of anarchic memetic optimization (recently turbocharged by social media dynamics, as described by folks like Slate Star Codex and the book "Revolt of the Public") much more so than the product of top-down manipulation.

- If Google & Facebook & etc are so good at manipulating me, why do their efforts at influence often still seem so clumsy? Yes, of course, I'm not going to notice the non-clumsy manipulations! And yes, your "I didn't speak up, because I wasn't as predictable as the first 60%" argument certainly applies here -- I am indeed worried that as technology progresses, AI persuasion tech will become a bigger and bigger problem. But still, in the here and now, YouTube is constantly showing me these ridiculous ideological banners about "how to spot misinformation" or "highlighting videos from Black creators" or etc... I am supposed to believe that these people are some kind of master manipulators? (They are clearly just halfheartedly slapping the banners on there in a weak attempt to cover their ass and appease NYT-style complaints that YouTube's algorithm is unintentionally radicalizing people into Trumpism... they aren't even trying to be persuasive to the actual viewers, just hamfistedly trying to look good to regulators...)

- Where is the evidence of super-persuasion techniques being used by other countries, or in geopolitical situations? One of the most important targets here would be things like "convincing Taiwanese to identify mostly as ethnic Chinese, or mostly as an independent nation", or the same for trying to convince Ukrainians to align more with their Russian-like ethnicity and language or with the independent democracies of western Europe. Ultimately, the cultural identification might be the #1 decisive factor in these countries' futures, and for sure there are lots of propaganda / political messaging attempts from all sides here. But nobody seems like they have some kind of OP superweapon which can singlehandedly change the fate of nations by, eg, convincing Taiwanese people of something crazy, like embracing their history as a Japanese colony and deciding that actually they want to reunify with Japan instead of remaining independent or joining China!

- Similarly, the Russian attempts to interfere in the 2016 election, although initially portrayed as some kind of spooky OP persuasion technique, ultimately ended up looking pretty clumsy and humdrum and small-scale, eg just creating facebook groups on themes designed to inflame American cultural divisions, making wacky anti-Hillary memes, etc.

- China's attempts at cultural manipulation are probably more advanced, but they haven't been able to save themselves from sinking into a cultural atmosphere of intense malaise and pessimism, one of the lowest fertility rates in the world, etc. If persuasion tech was so powerful, couldn't China use it to at least convince people to keep plowing more money into real estate?

- Have there been any significant leaks that indicate the USA is focused on persuasion tech and has seen significant successes with it? If I recall correctly, the Edward Snowden leaks (admittedly from the NSA which focuses on collecting information, and from 10 years ago) seemed to mostly indicate a strategy of "secretly collect all the data" --> "search through and analyze it to identify particular people / threats / etc". There didn't seem to be any emphasis on trying to shape culture more broadly.

- Intelligence agencies in the USA devote some effort to "deradicalization" of eg islamist terrorists, extreme right-wingers, etc. But this stuff seems to be mostly focused on pretty narrow interventions targeting individual people or small groups, and seems mostly based on 20th-century-style basic psychological understanding... seems like a far cry from A/B testing the perfect social-media strategy to unleash on the entire population of some middle-eastern country to turn them all into cosmopolitan neoliberals.

Anyways, I guess my overall point is that it just doesn't seem true that the CIA, or Facebook, or China, or anyone else, currently has access to amazing next-gen persuasion tech. So IMO you are thinking about this in the wrong way, with too much of a conspiratorial / Tom Clancy vibe. But the reason I wrote such a long comment is because I think you should keep exploring these general topics, since I agree with you about most of the other assumptions you are making!

- We are already living in a persuasion-transformed world in the sense that the world is full of a lot of crazy ideas which have been shaped by memetic dynamics

- Social media in particular seems like a powerful lever to influence culture (see Slate Star Codex & Revolt of the Public)

- It seems like you probably COULD influence culture a ton by changing the design of social media, so it's a little funny that nobody seems to be intentionally using this to build a persuasion superweapon

- (Nevertheless I think nobody really understands the long-term cultural effects of social media well enough to make deliberate changes to achieve eventual intended results. And I think there are limits to what you could do with current techniques -- changing the design & policies of a site like Twitter might change the broad cultural vibe, but I don't think we could create an especially persuasive superweapon that could be aimed at particular targets, like making Taiwanese people culturally identify with Japan)

- It definitely seems like AI could be used for all kinds of censorship & persuasion-related tasks, and this seems scary because it might indeed allow the creation of persuasion superweapons.

- Totally separately from all the above stuff about persuasion, the shadowier parts of governments (military & intelligence-agency bureaucracies) seem very important to think about when we are trying to think ahead about the future of AI technology and human civilization.

↑ comment by trevor (TrevorWiesinger) · 2023-11-06T08:31:40.540Z · LW(p) · GW(p)

Thanks for putting the work into this attempt to falsify my model! I read all of it, but I will put work into keeping my response concise and helpful.

If the CIA (or other entities affiliated with the US government, including tech companies being pressured by the government) is so good at persuasion ops, why are there so many political movements that seem to go against the CIA's interests?

- Quoting ChristianKI quoting Michael Vassar: "We didn't have an atomic war as people expected after WWII, but we had an infowar and now most people are like zombies when it comes to their ability to think and act independently" [LW · GW]. Russia and China have this technology too, but I currently think that all three countries prioritize defensive uses. Homogenizing people makes their thoughts and behavior more predictable, which means that steering them requires fewer FLOPS, carries a lower risk of detection/suspicion, and has a higher success rate; polarization offers this homogenization, as well as the cultural intensity/creativity that Jan Kulveit referenced [LW · GW].

- In addition to international infowar, there are also domestic elites, many of whom are paying close attention to things they care about. The US government exists atop ~6 million people above 130 IQ with varying values, which keeps things complicated.

- Generally though, they have learned from many of the mistakes from Vietnam and the War on Terror [LW · GW]. Riding the wave is not an improbable outcome in my model, especially considering that individual humans and groups are less predictable/controllable when OOD.

- It's also absolutely possible that the Left still bears ugly scars from the Infowars surrounding Vietnam and the War on Terror.

If google & facebook & etc are so good at manipulating me, why do their efforts at influence often still seem so clumsy?

There is a pretty low bar for running SGD on secure [? · GW] user data, and social media news feed data/algorithms/combinations of posts, in order to see what causes people to stay versus leave. That means that their systems, by default, throw outrageously clumsy failed influence attempts at you, because those tend to cause users to feel safe and return; when in reality they are not safe [LW · GW] and shouldn't return. Meanwhile, influencing people in ways that they notice will make them leave and get RLHF'd out quickly. This is the most probable outcome; they probably couldn't prevent algorithms from doing this if they tried, due to Goodhart's law.

Where is the evidence of super-persuasion techniques being used by other countries, or in geopolitical situations?

The Ukraine War and Lab Leak Hypothesis are both things that I would have predicted the US to commit substantial capabilities to, including SOTA thought/behavior prediction/steering. It's hard to predict what they will do in the future because they are often trying to fool or outmaneuver foreign intelligence agencies and high-IQ elites with much better information than me; the US might suddenly decide to fully commit to accusing China of originally leaking COVID, or powerful US elites might rally against proxy wars and the trend towards a second Cold War. If pro-terrorism sentiment became popular in the US, then that could just be the Russians succeeding, not the NSA failing. This is why I focus on the math of how human internal interpretability [LW · GW] naturally translates into industry change and cyberattacks on the brain. I would love to get better at this.

The main issue here is that history is being written and that the AI safety community needs to avoid the fate of the CCC [LW(p) · GW(p)]. I'm arguing that the math [LW · GW] and the state of the industry indicate that human brains are extremely predisposed to being hacked, that governments would totally do this [LW · GW], and that the AI safety community is unusually likely to be targeted.

Have there been any significant leaks that indicate the USA is focused on persuasion tech and has seen significant successes with it?

Snowden was in 2013 back when modern ML was new, and if Moscow wanted to leak this tech to the world, they would succeed, and we wouldn't be talking about it early on Lesswrong. But it's basic math, and the issue is that people aren't panicking, or they panic but eventually go back to using social media and then they feel fine (SGD does this automatically in order to retain users). Tristan Harris is worried about this and "leaks" the fundamental math constantly, possibly helped design/push standards that made the tech less outrageously harmful e.g. using social media 5 hours a day.

IMO you are thinking about this in the wrong way, with too much of a conspiratorial / Tom Clancy vibe.

The problem here is that most books and documentaries about intelligence agencies are entertainment products that need to optimize for intrigue or thrill in order to reduce the risk of failing to recoup the production budget. Meanwhile, most people with experience at intelligence agencies worked at the low-competence low-trust bottom-70% where the turnover is much higher [LW · GW]. Taking a step back, intelligence agencies seem to be Bureaucracies that Conquer, and should be better at intimidating politicians and normal-bureaucrats than politicians and normal-bureaucrats are at intimidating them.

- Social media in particular seems like a powerful lever to influence culture (see Slate Star Codex & Revolt of the Public)

If they had any sense, they'd try to kill moloch by building dath ilan [LW · GW]. They probably won't, because they've probably been turned deeply nihilistic by fighting so many information wars. Most people get turned into moloch worshippers from far less e.g. business school.

- Totally separately from all the above stuff about persuasion, the shadowier parts of governments (military & intelligence-agency bureaucracies) seem very important to think about when we are trying to think ahead about the future of AI technology and human civilization.

I'm thinking not so much; if they weren't massively investing in intensely powerful brain hacking technology [? · GW] and burying everyone underneath sensors, then there wouldn't be much intersection at all. They could focus on what they're good at, stopping China, and we could focus on what we're good at, saving the world from unaligned AI. Sadly, the happy-go-lucky outcome where people can hack your brain, but don't, doesn't seem likely under the current global affairs.

↑ comment by Jackson Wagner · 2023-11-13T22:32:30.964Z · LW(p) · GW(p)

(this comment is kind of a "i didn't have time to write you a short letter so I wrote you a long one" situation)

re: Infowar between great powers -- the view that China+Russia+USA invest a lot of effort into infowar, but mostly "defensively" / mostly trying to shape domestic opinion, makes sense. (After all, it must be easier to control the domestic media/information landscape!) I would tend to expect that doing domestically-focused infowar stuff at a massive scale would be harder for the USA to pull off (wouldn't it be leaked? wouldn't it be illegal somehow, or at least something that public opinion would consider a huge scandal?), but on the other hand I'd expect the USA to have superior infowar technology (subtler, more effective, etc). And logically it might also be harder to perceive effects of USA infowar techniques, since I live in the USA, immersed in its culture.

Still, my overall view is that, although the great powers certainly expend substantial effort trying to shape culture, and have some success, they don't appear to have any next-gen technology qualitatively different and superior to the rhetorical techniques deployed by ordinary successful politicians like Trump, social movements like EA or wokeism, advertising / PR agencies, media companies like the New York Times, etc. (In the way that, eg, engineering marvels like the SR-71 Blackbird were generations ahead of competitors' capabilities.) So I think the overall cultural landscape is mostly anarchic -- lots of different powers are trying to exert their own influence and none of them can really control or predict cultural changes in detail.

re: Social media companies' RL algorithms are powerful but also "they probably couldn't prevent algorithms from doing this if they tried due to Goodhart's law". -- Yeah, I guess my take on this is that the overt attempts at propaganda (aimed at placating the NYT) seem very weak and clumsy. Meanwhile the underlying RL techniques seem potentially powerful, but poorly understood or not very steerable, since social media companies seem to be mostly optimizing for engagement (and not even always succeeding at that; here we are talking on LessWrong instead of tweeting / tiktoking), rather than deploying clever infowar superweapons. If they have such power, why couldn't left-leaning Silicon Valley prevent the election of Trump using subtle social-media-RL trickery?

(Although I admit that the reaction to the 2016 election could certainly be interpreted as Silicon Valley suddenly realizing, "Holy shit, we should definitely try to develop social media infowar superweapons so we can maybe prevent this NEXT TIME." But then the 2020 election was very close -- not what I'd have expected if info-superweapons were working well!)

With Twitter in particular, we've had such a transparent look at its operations during the handover to Elon Musk, and it just seems like both sides of that transaction have been pretty amateurish and lacked any kind of deep understanding of how to influence culture. The whole fight seems to have been about where to tug one giant lever called "how harshly do we moderate the tweets of leftists vs rightists". This lever is indeed influential on twitter culture, and thus culture generally -- but the level of sophistication here just seems pathetic.

Tiktok is maybe the one case where I'd be sympathetic to the idea that maybe a lot of what appears to be random insane trends/beliefs fueled by SGD algorithms and internet social dynamics, is actually the result of fairly fine-grained cultural influence by Chinese interests. I don't think Tiktok is very world-changing right now (as we'd expect, it's targeting the craziest and lowest-IQ people first), but it's at least kinda world-changing, and maybe it's the first warning sign of what will soon be a much bigger threat? (I don't know much about the details of Tiktok the company, or the culture of its users, so it's hard for me to judge how much fine-grained control China might or might not be exerting.)

Unrelated -- I love the kind of sci-fi concept of "people panic but eventually go back to using social media and then they feel fine (SGD does this automatically in order to retain users)". But of course I think that the vast majority of users are in the "aren't panicking" / never-think-about-this-at-all category, and there are so few people in the "panic" category (panic specifically over subtle persuasion manipulation tech that isn't just trying to maximize engagement but instead achieve some specific ideological outcome, I mean) that there would be no impact on the social-media algorithms. I think it is plausible that other effects like "try not to look SO clickbaity that users recognize the addictiveness and leave" do probably show up in algorithms via SGD.

More random thoughts about infowar campaigns that the USA might historically have wanted to infowar about:

- Anti-communism during the cold war, maybe continuing to a kind of generic pro-corporate / pro-growth attitude these days. (But lots of people were pro-communist back in the day, and remain anti-corporate/anti-growth today! And even the republican party is less and less pro-business... their basic model isn't to mind-control everyone into becoming fiscal conservatives, but instead to gain power by exploiting the popularity of social conservativism and then use power to implement fiscal conservativism.)

- Maybe I am taking a too-narrow view of infowar as "the ability to change people's minds on individual issues", when actually I should be considering strategies like "get people hyped up about social issues in order to gain power that you can use for economic issues" as a successful example of infowar? But even if I consider this infowar, then it reinforces my point that the most advanced stuff today all seems to be variations on normal smart political strategy and messaging, not some kind of brand-new AI-powered superweapon for changing people's minds (or redirecting their focus or whatever) in a radically new way.

- Since WW2, and maybe continuing to today, the West has tried to ideologically immunize itself against Nazism. This includes a lot of trying to teach people to reject charismatic dictators, to embrace counterintuitive elements of liberalism like tolerance/diversity, and even to deny inconvenient facts like racial group differences for the sake of social harmony. In some ways this has gone so well that we're getting problems from going too far in this direction (wokeism), but in other ways it can often feel like liberalism is hanging on by a thread and people are still super-eager to embrace charismatic dictators, incite racial conflict, etc.

"Human brains are extremely predisposed to being hacked, governments would totally do this, and the AI safety community is unusually likely to be targeted."

-- yup, fully agree that the AI safety community faces a lot of peril navigating the whims of culture and trying to win battles in a bunch of diverse high-stakes environments (influencing superpower governments, huge corporations, etc) where they are up against a variety of elite actors with some very strong motivations. And that there is peril both in the difficulty of navigating the "conventional" human-persuasion-transformed social landscape of today's world (already super-complex and difficult) and the potentially AI-persuasion-transformed world of tomorrow. I would note though, that these battles will (mostly?) play out in pretty elite spaces, whereas I'd expect the power of AI information superweapons to have the most powerful impact on the mass public. So, I'd expect to have at least some warning in the form of seeing the world go crazy (in a way that seems different from and greater than today's anarchic internet-social-dynamics-driven craziness), before I myself went crazy. (Unless there is an AI-infowar-superweapon-specific hard-takeoff where we suddenly get very powerful persuasion tech but still don't get the full ASI singularity??)

re: Dath Ilan -- this really deserves a whole separate comment, but basically I am also a big fan of the concept of Dath Ilan [LW · GW], and I would love to hear your thoughts on how you would go about trying to "build Dath Ilan" IRL.

- What should an individual person, acting mostly alone, do to try and promote a more Dath-Ilani future? Try to practice & spread Lesswrong-style individual-level rationality, maybe (obviously Yudkowsky did this with Lesswrong and other efforts). Try to spread specific knowledge about the way society works and thereby build energy for / awareness of ways that society could be improved (Inadequate Equilibria kinda tries to do this? seems like there could be many approaches here). Personally I am also always eager to talk to people about specific institutional / political tweaks that could lead to a better, more Dath-Ilani world: georgism, approval voting, prediction markets, charter cities, etc. Of those, some would seem to build on themselves while others wouldn't -- what ideas seem like the optimal, highest-impact things to work on? (If the USA adopted georgist land-value taxes, we'd have better land-use policy and faster economic growth but culture/politics wouldn't hugely change in a broadly Dath-Ilani direction; meanwhile prediction markets or new ways of voting might have snowballing effects where you get the direct improvement but also you make culture more rational & cooperative over time.)

- What should a group of people ideally do? (Like, say, an EA-adjacent silicon valley billionaire funding a significant minority of the EA/rationalist movement to work on this problem together in a coordinated way.) My head immediately jumps to "obviously they should build a rationalist charter city":

- The city doesn't need truly nation-level sovereign autonomy, the goal would just be to coordinate enough people to move somewhere together a la the Free State Project, gaining enough influence over local government to be able to run our own policy experiments with things like prediction markets, georgism, etc. (Unfortunately some things, like medical research, are federally regulated, but I think you could do a lot with just local government powers + creating a critical mass of rationalist culture.)

- Instead of moving to a random small town and trying to take over, it might be helpful to choose some existing new-city project to partner with -- like California Forever, Telosa, Prospera, whatever Zuzalu or Praxis turn into, or other charter cities that have amenable ideologies/goals. (This would also be very helpful if you don't have enough people or money to create a reasonably-sized town all by yourself!)

- The goal would be twofold: first, run a bunch of policy experiments and try to create Dath-Ilan-style institutions (where legal under federal law if you're still in the USA, etc). And second, try to create a critical mass of rationalist / Dath Ilani culture that can grow and eventually influence... idk, lots of people, including eventually the leaders of other governments like Singapore or the UK or whatever. Although it's up for debate whether "everyone move to a brand-new city somewhere else" is really a better plan for cultural influence than "everyone move to the bay area", which has been pretty successful at influencing culture in a rationalist direction IMO! (Maybe the rationalist charter city should therefore be in Europe or at least on the East Coast or something, so that we mostly draw rationalists from areas other than the Bay Area. Or maybe this is an argument for really preferring California Forever as an ally, over and above any other new-city project, since that's still in the Bay Area. Or for just trying to take over Bay Area government somehow.)

- ...but maybe a rationalist charter city is not the only or best way that a coordinated group of people could try to build Dath Ilan?