Paper Summary: The Effects of Communicating Uncertainty on Public Trust in Facts and Numbers

post by Jeffrey Heninger (jeffrey-heninger) · 2024-07-09T16:50:05.776Z · LW · GW · 2 comments

by Anne Marthe van der Bles, Sander van der Linden, Alexandra L. J. Freeman, and David J. Spiegelhalter. (2020)

https://www.pnas.org/doi/pdf/10.1073/pnas.1913678117.

Summary: Numerically expressing uncertainty when talking to the public is fine. It causes people to be less confident in the number itself (as it should), but does not cause people to lose trust in the source of that number.

> Uncertainty is inherent to our knowledge about the state of the world yet often not communicated alongside scientific facts and numbers. In the “posttruth” era where facts are increasingly contested, a common assumption is that communicating uncertainty will reduce public trust. However, a lack of systematic research makes it difficult to evaluate such claims.

Within many specialized communities, there are norms which encourage people to state numerical uncertainty when reporting a number. This is not often done when speaking to the public. The public might not understand what the uncertainty means, or they might treat it as an admission of failure. Journalistic norms typically do not call for communicating the uncertainty.

But are these concerns actually justified? This can be checked empirically. Just because a potential bias is conceivable does not imply that it is a significant problem for many people. This paper does the work of actually checking if these concerns are valid.

Van der Bles et al. ran five surveys in the UK with a total n = 5,780. A brief description of their methods can be found in the appendix below.

Respondents’ trust in the numbers varied with political ideology, but how they reacted to the uncertainty did not.

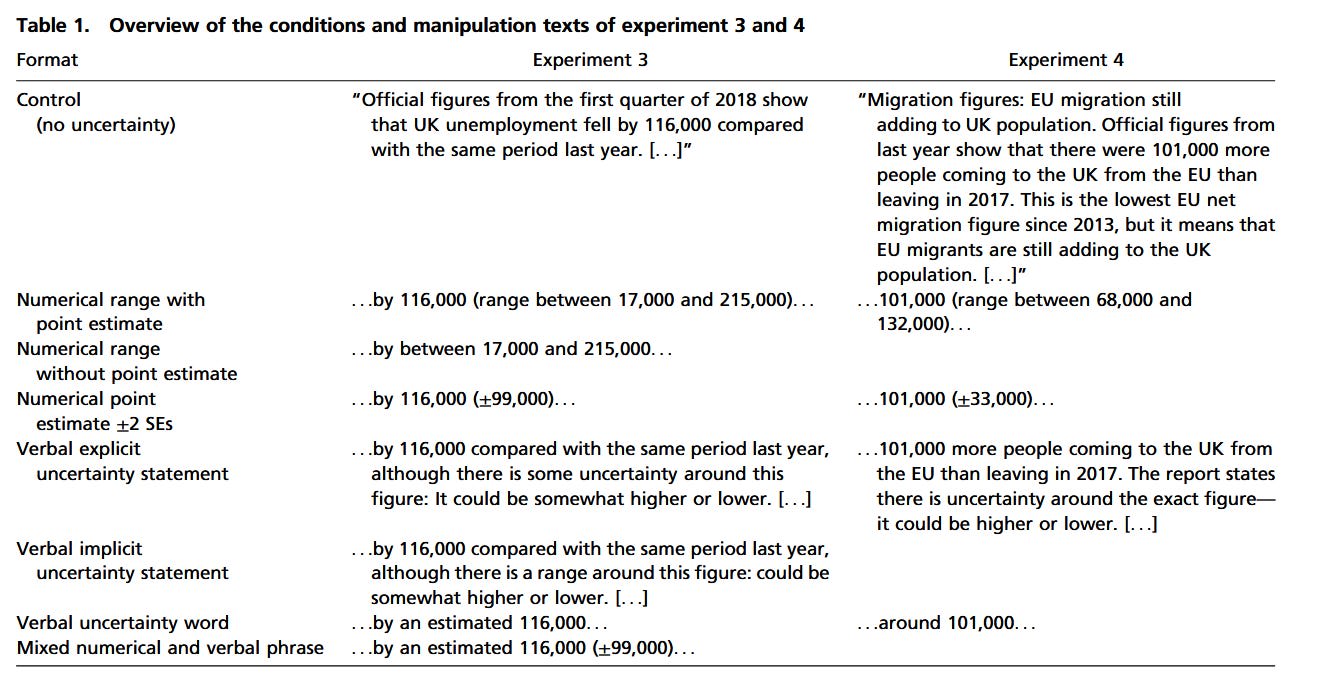

People were told the number either without mentioning uncertainty (as a control), with a numerical range, or with a verbal statement that uncertainty exists for these numbers. The study did not investigate stating p-values for beliefs. Exact statements used in the survey can be seen in Table 1, in the appendix.
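As a rough illustration of how the three conditions differ, here is a minimal Python sketch. The `Estimate` class, the `render` function, the sentence templates, and the example figures are all made up for illustration. They are not the statements used in the paper, which are the ones shown in Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """A reported statistic with a lower and upper bound (hypothetical structure)."""
    label: str
    value: float
    low: float
    high: float


def render(est: Estimate, condition: str) -> str:
    """Format one estimate under one of the three conditions.

    The templates are placeholders, not the survey's actual wording.
    """
    base = f"{est.label}: {est.value:,.0f}"
    if condition == "control":
        # No mention of uncertainty at all.
        return base + "."
    if condition == "numeric":
        # Uncertainty stated as a numerical range.
        return f"{base} (range between {est.low:,.0f} and {est.high:,.0f})."
    if condition == "verbal":
        # Uncertainty acknowledged in words only.
        return base + ". There is some uncertainty around this estimate."
    raise ValueError(f"unknown condition: {condition!r}")


if __name__ == "__main__":
    # Made-up numbers, chosen only to show the formatting.
    example = Estimate("Widgets counted", 1000, 900, 1100)
    for condition in ("control", "numeric", "verbal"):
        print(render(example, condition))
```

The point of the sketch is only that the central number is identical across conditions; what varies is whether, and how, the uncertainty around it is stated.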

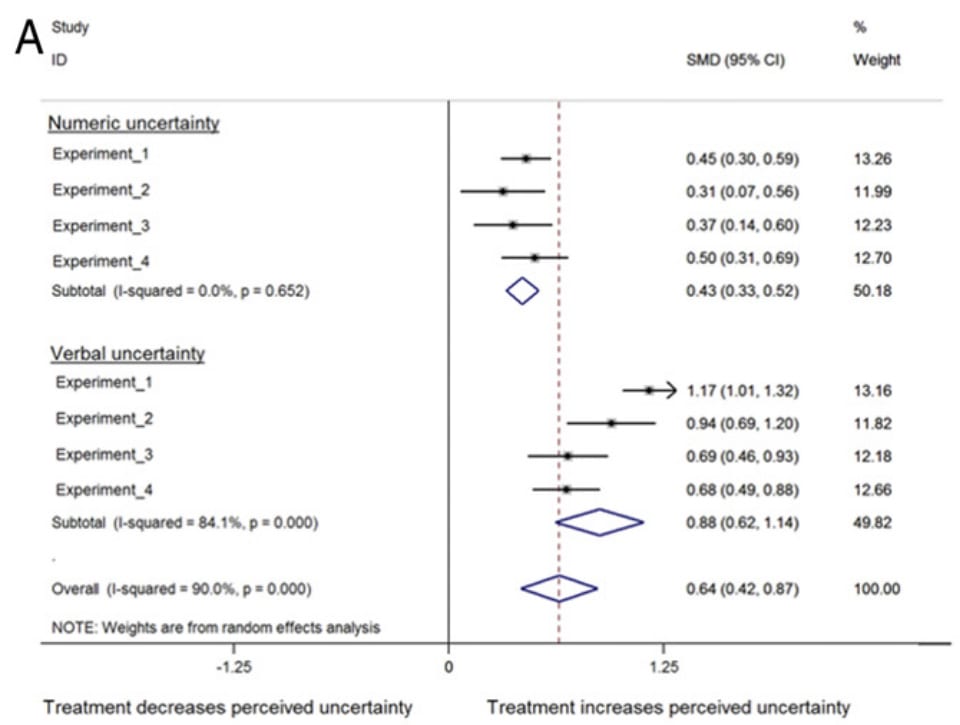

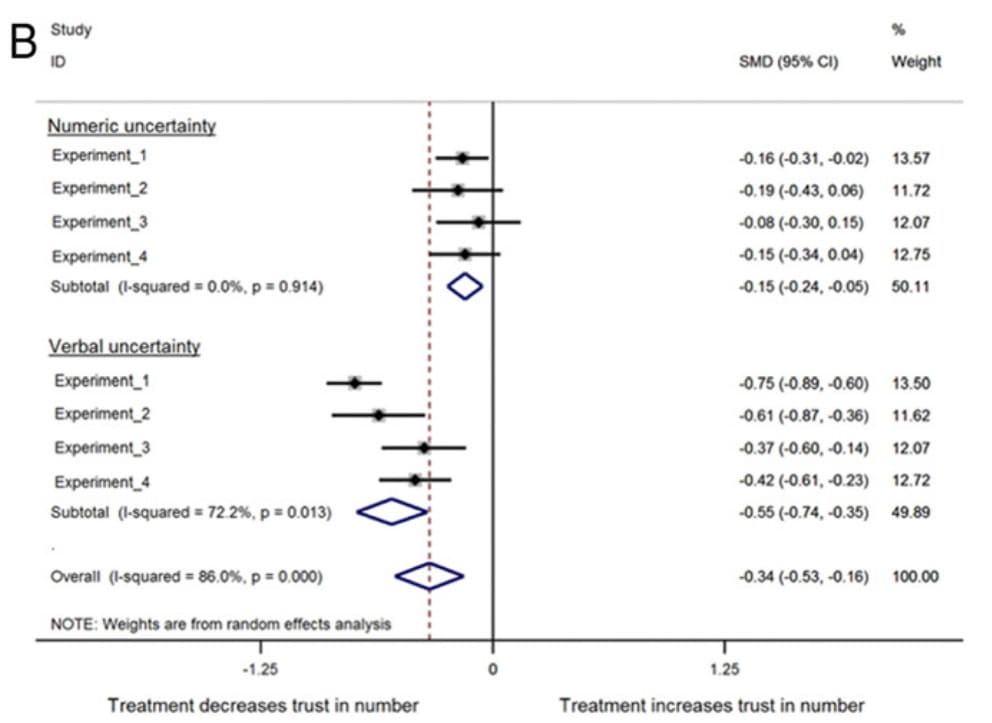

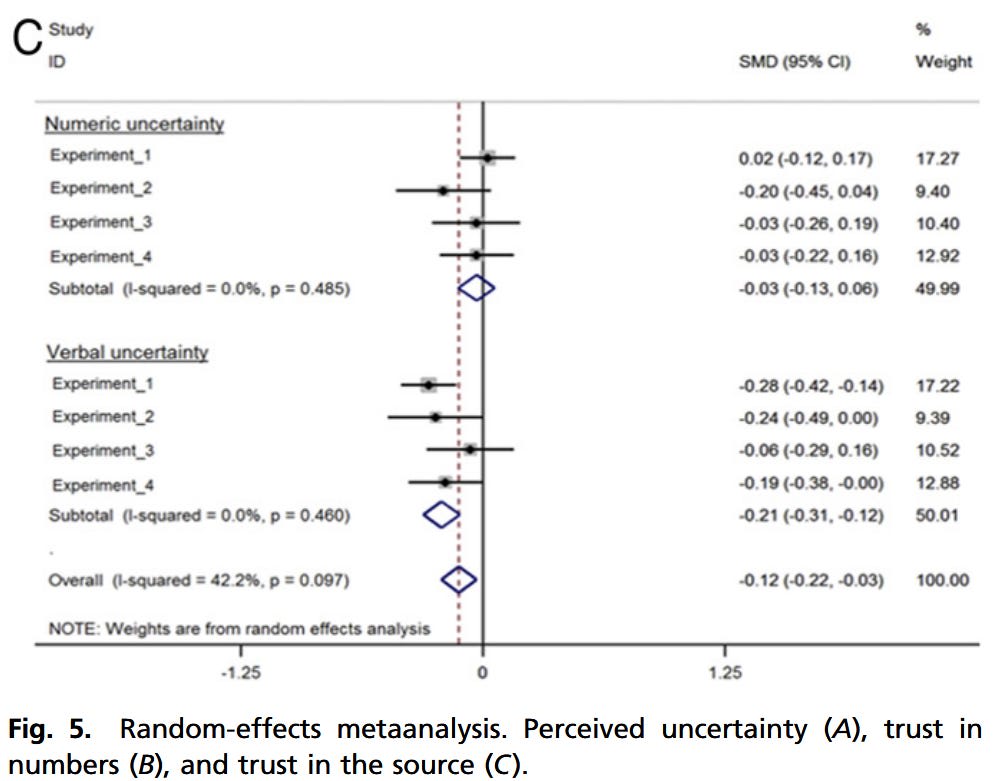

The best summary of their data is in their Figure 5, which presents results from surveys 1-4. The fifth survey had smaller effect sizes, so none of the shifts in trust were significant.

Expressing uncertainty made it more likely that people perceived uncertainty in the number (A). This is good. When the numbers are uncertain, science communicators should want people to believe that they are uncertain. Interestingly, verbally reminding people of uncertainty resulted in higher perceived uncertainty than stating the numerical range, which could mean that people are overestimating the uncertainty when verbally reminded of it.

The surveys distinguished between trust in the number itself (B) and trust in the source (C). Numerically expressing uncertainty resulted in a small decrease in trust in the number, and verbally expressing uncertainty resulted in a larger decrease. For trust in the source, numerically expressing uncertainty resulted in no significant change, while verbally expressing uncertainty resulted in a small decrease.

The consequences of expressing numerical uncertainty are what I would have hoped: people trust the number a bit less than if they hadn’t thought about uncertainty at all, but don’t think that this reflects badly on the source of the information.

> Centuries of human thinking about uncertainty among many leaders, journalists, scientists, and policymakers boil down to a simple and powerful intuition: “No one likes uncertainty.” It is therefore often assumed that communicating uncertainty transparently will decrease public trust in science. In this program of research, we set out to investigate whether such claims have any empirical basis.

The answer is mostly no. Good epistemic practice is not bad journalistic practice. When you give people a numerical estimate of the uncertainty in a number, they respond the way they should. The perceived confidence in the number itself goes down, while the trust in the source does not. Verbally reminding people of uncertainty seems like a worse practice: it causes people to distrust the source of information and seems to cause them to overestimate the uncertainty in the number. Expressing no uncertainty seems to make people overconfident in the number reported.

It is better to use good epistemics when talking to the public than it is to try to correct for their bad epistemics with compromised epistemics of your own.

> The high degree of consistency in our results, across topics, magnitudes of uncertainty, and communication formats suggest that people “can handle the truth.”

Appendix: Survey Methods

Of the five surveys involved in this paper, the first three each had about 1,000 participants in the UK, recruited using the platform Prolific and paid £1.20 to complete a 2-minute survey. The fourth survey was a preregistered replication with 1,050 adults in the UK recruited using the platform Qualtrics Panel. The fifth survey was a field experiment done with BBC News. When BBC News reported new labor market statistics on October 15, 2019, they ran three different versions of the article and included a link to the survey in each. There were 1,700 people who completed this survey.

Each survey presented readers with a measured value of some statistic: the number of unemployed people in the UK, the net number of migrants between the EU and UK, the amount the Earth’s average global temperature increased between 1880 and 2012, and the number of tigers in India. Some of these are more partisan issues than others in the UK, and the error bars are different sizes relative to the size of the number.

Table 1 shows some of the exact statements used in the 3rd and 4th surveys.

The participants were somewhat more educated and more liberal than the general public in all five of the surveys, and participants in the first three surveys were also somewhat younger and more likely to be female.

2 comments

Comments sorted by top scores.

comment by [deleted] · 2024-07-10T00:01:40.080Z · LW(p) · GW(p)

> It causes people to be less confident in the number itself (as it should), but does not cause people to lose trust in the source of that number.

This is interesting to know, if true, but I would like to explicitly note that there is a potential problem in the complete opposite direction, namely people giving too much trust to those who talk about uncertainty in (what seems to the public like) very "certain" terms. Ezra Klein talked about this a bit in his appearance on the 80k hours podcast about 3 years ago:

Ezra Klein: I’ll note there’s a related thing in politics where — and this is endlessly proven out now — people with the highest levels of political information tend to exhibit the highest levels of partisan self-deception. This is a very hard line to walk, but there is nothing more dangerous than thinking you know a lot, and nothing more dangerous, particularly, than thinking you know a lot about how you think. You really need a lot of humility. And so at the best I think the rationality community imposes humility on itself, and at the worst, there’s a performance of imposing humility that’s a way of not actually having humility, right? That is a way of, you know… I’ve known forever at Wonkblog and other things, things seem more convincing if you put them in chart form, like they just look more official.

Ezra Klein: I appreciate that Scott Alexander and others will sometimes put ‘epistemic status, 60%’ on the top of 5,000 words of super aggressive argumentation, but is the effect of that epistemic status to make people like, “Oh, I should be careful with this,” or is it like, “This person is super rational and self-critical, and actually now I believe him totally”? And I’m not picking on Scott here. A lot of people do this.

Ezra Klein: Larry Summers did this all the time. He was known in the administration for… He would constantly, when people were saying something, be like, “Well, what probability do you put on that?” And so people are just pulling like 20%, 30%, 70% probabilities out of thin air. That makes things sound more convincing, but I always think it’s at the danger of making people… Of actually it having the reverse effect that it should. Sometimes the language of probability reads to folks like well, you can really trust this person. And so instead of being skeptical, you’re less skeptical. So those are just pitfalls that I notice and it’s worth watching out for, as I have to do myself.

↑ comment by trevor (TrevorWiesinger) · 2024-07-10T00:31:36.698Z · LW(p) · GW(p)

It was helpful that Ezra noticed and pointed out this dynamic.

I think this concern is probably more a reflection of our state of culture, where people who visibly think in terms of quantified uncertainty are rare and therefore make a strong impression relative to e.g. pundits.

If you look at other hypothetical cultural states (specifically more quant-aware states e.g. extrapolating the last 100 years of math/literacy/finance/physics/military/computer progress forward another 100 years), trust would pretty quickly default to being based on track record instead of on being one of the few people in the room who's visibly using numbers properly.