The best way so far to explain AI risk: The Precipice (p. 137-149)

post by trevor (TrevorWiesinger) · 2023-02-10T19:33:00.094Z · LW · GW · 2 comments

When it comes to explaining AI risk to someone for the first time, it seems to me that there are a ton of ways to mess it up. The human brain might just be really bad at explaining concepts that are complicated but have big implications. The human brain is also bad at memorizing, yet missing a single piece of the AI safety problem can result in wildly different implications. This is probably the main reason why so few people know about it, even though it affects everyone.

I've spent a lot of time on the problem, looking for quick and concise ways to explain AI safety to any person, and the best thing I've found is the chapter on AI safety in Toby Ord's book The Precipice (2020) from pages 137-149.

I copied those pages into this post, so that more people can share just these 13 pages on AI safety with others. Personally I recommend printing it out: this is complicated stuff, and screens can be pretty distracting (and can even reduce attention span and working memory).

_________________________________________________________

UNALIGNED ARTIFICIAL INTELLIGENCE - Pages 137-149 of The Precipice by Toby Ord

In the summer of 1956 a small group of mathematicians and computer scientists gathered at Dartmouth College to embark on the grand project of designing intelligent machines. They explored many aspects of cognition including reasoning, creativity, language, decision-making and learning. Their questions and stances would come to shape the nascent field of artificial intelligence (AI). The ultimate goal, as they saw it, was to build machines rivaling humans in their intelligence.

As the decades passed and AI became an established field, it lowered its sights. There had been great successes in logic, reasoning and game-playing, but some other areas stubbornly resisted progress. By the 1980s, researchers began to understand this pattern of success and failure. Surprisingly, the tasks we regard as the pinnacle of human intellect (such as calculus or chess) are actually much easier to implement on a computer than those we find almost effortless (such as recognizing a cat, understanding simple sentences or picking up an egg). So while there were some areas where AI far exceeded human abilities, there were others where it was outmatched by a two-year-old. This failure to make progress across the board led many AI researchers to abandon their earlier goals of fully general intelligence and to reconceptualize their field as the development of specialized methods for solving specific problems. They wrote off the grander goals to the youthful enthusiasm of an immature field.

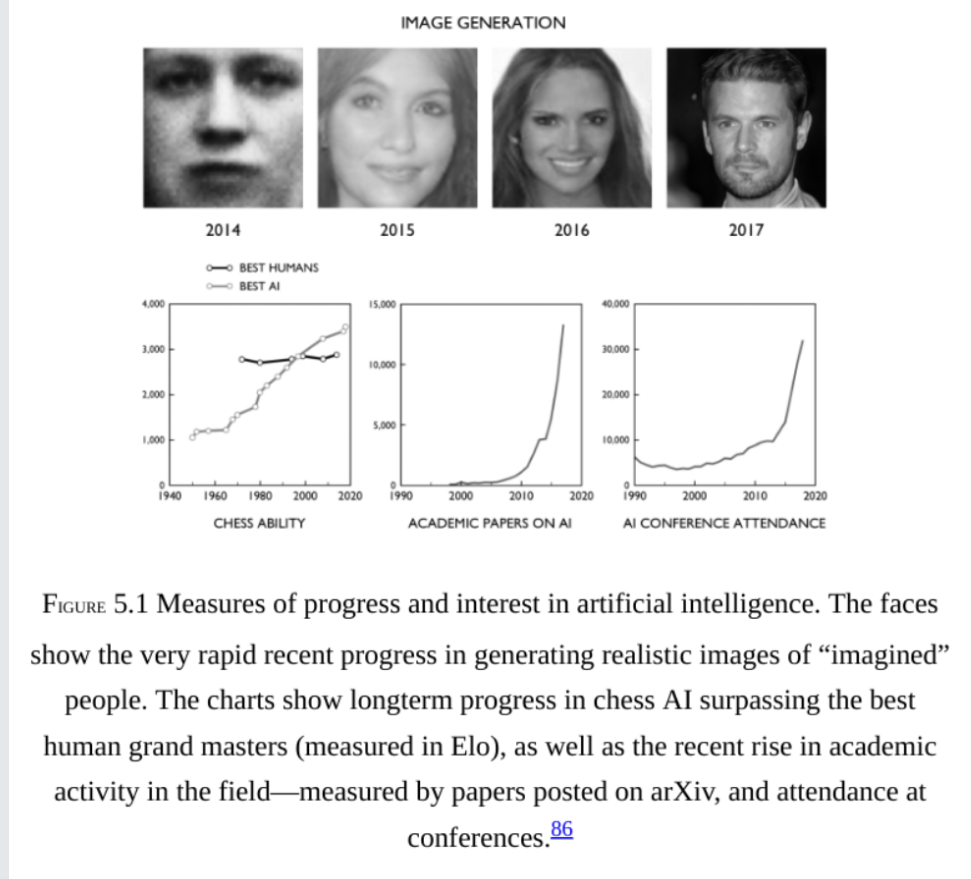

But the pendulum is swinging back. From the first days of AI, researchers sought to build systems that could learn new things without requiring explicit programming. One of the earliest approaches to machine learning was to construct artificial neural networks that resemble the structure of the human brain. In the last decade this approach has finally taken off. Technical improvements in their design and training, combined with richer datasets and more computing power, have allowed us to train much larger and deeper networks than ever before.

This deep learning gives the networks the ability to learn subtle concepts and distinctions. Not only can they now recognize a cat, they have outperformed humans in distinguishing different breeds of cats. They recognize human faces better than we can ourselves, and distinguish identical twins.

And we have been able to use these abilities for more than just perception and classification. Deep learning systems can translate between languages with a proficiency approaching that of a human translator. They can produce photorealistic images of humans and animals. They can speak with the voices of people whom they have listened to for mere minutes. And they can learn fine, continuous control such as how to drive a car or use a robotic arm to connect Lego pieces.

But perhaps the most important sign of things to come is their ability to learn to play games. Games have been a central part of AI since the days of the Dartmouth conference. Steady incremental progress took chess from amateur play in 1957 all the way to superhuman level in 1997, and substantially beyond. Getting there required a vast amount of specialist human knowledge of chess strategy.

In 2017, deep learning was applied to chess with impressive results. A team of researchers at the AI company DeepMind created AlphaZero: a neural network-based system that learned to play chess from scratch. It went from novice to grand master in just four hours. In less than the time it takes a professional to play two games, it discovered strategic knowledge that had taken humans centuries to unearth, playing beyond the level of the best humans or traditional programs. And to the delight of chess players, it won its games not with the boring methodical style that had become synonymous with computer chess, but with creative and daring play reminiscent of chess's Romantic Era.

But the most important thing was that AlphaZero could do more than play chess. The very same algorithm also learned to play Go from scratch, and within eight hours far surpassed the abilities of any human. The world's best Go players had long thought that their play was close to perfection, so were shocked to find themselves beaten so decisively. As the reigning world champion, Ke Jie, put it: "After humanity spent thousands of years improving our tactics, computers tell us that humans are completely wrong... I would go as far as to say not a single human has touched the edge of the truth of Go."

It is this generality that is the most impressive feature of cutting-edge AI, and which has rekindled the ambitions of matching and exceeding every aspect of human intelligence. This goal is sometimes known as artificial general intelligence (AGI), to distinguish it from the narrow approaches that had come to dominate. While the timeless games of chess and Go best exhibit the brilliance that deep learning can attain, its breadth was revealed through the Atari video games of the 1970s. In 2015, researchers designed an algorithm that could learn to play dozens of extremely different Atari games at levels far exceeding human ability. Unlike systems for chess or Go, which start with a symbolic representation of the board, the Atari-playing systems learned and mastered these games directly from the score and the raw pixels on the screen. They are a proof of concept for artificial general agents: learning to control the world from raw visual input; achieving their goals across a diverse range of environments.

This burst of progress via deep learning is fueling great optimism about what may soon be possible. There is tremendous growth in both the number of researchers and the amount of venture capital flowing into AI. Entrepreneurs are scrambling to put each new breakthrough into practice: from simultaneous translation, personal assistants and self-driving cars to more concerning areas like improved surveillance and lethal autonomous weapons. It is a time of great promise but also one of great ethical challenges. There are serious concerns about AI entrenching social discrimination, producing mass unemployment, supporting oppressive surveillance, and violating the norms of war. Indeed, each of these areas of concern could be the subject of its own chapter or book. But this book is focused on existential risks to humanity. Could developments in AI pose a risk on this largest scale?

The most plausible existential risk would come from success in AI researchers' grand ambition of creating agents with a general intelligence that surpasses our own. But how likely is that to happen, and when? In 2016, a detailed survey was conducted of more than 300 top researchers in machine learning. Asked when an AI system would be "able to accomplish every task better and more cheaply than human workers," on average they estimated a 50 percent chance of this happening by 2061 and a 10 percent chance of it happening as soon as 2025.

This should be interpreted with care. It isn't a measure of when AGI will be created, so much as a measure of what experts find plausible—and there was a lot of disagreement. However, it shows us that the expert community, on average, doesn't think of AGI as an impossible dream, so much as something that is plausible within a decade and more likely than not within a century. So let's take this as our starting point in assessing the risks, and consider what would transpire were AGI created.

Humanity is currently in control of its own fate. We can choose our future. Of course, we each have differing visions of an ideal future, and many of us are more focused on our personal concerns than on achieving any such ideal. But if enough humans wanted to, we could select any of a dizzying variety of possible futures. The same is not true for chimpanzees. Or blackbirds. Or any other of Earth's species. As we saw in Chapter 1, our unique position in the world is a direct result of our unique mental abilities. Unmatched intelligence led to unmatched power and thus control of our destiny.

What would happen if sometime this century researchers created an artificial general intelligence surpassing human abilities in almost every domain? In this act of creation, we would cede our status as the most intelligent entities on Earth. So without a very good plan to keep control, we should also expect to cede our status as the most powerful species, and the one that controls its own destiny.

On its own, this might not be too much cause for concern. For there are many ways we might hope to retain control. We might try to make systems that always obey human commands. Or systems that are free to do what they want, but which have goals designed to align perfectly with our own, so that in crafting their ideal future they craft ours too. Unfortunately, the few researchers working on such plans are finding them far more difficult than anticipated. In fact it is they who are the leading voices of concern.

To see why they are concerned, it will be helpful to zoom in a little, looking at our current AI techniques and why these are hard to align or control. One of the leading paradigms for how we might eventually create AGI combines deep learning with an earlier idea called reinforcement learning. This involves agents that receive reward (or punishment) for performing various acts in various circumstances. For example, an Atari-playing agent receives reward whenever it scores points in the game, while a Lego-building agent might receive reward when the pieces become connected. With enough intelligence and experience, the agent becomes extremely capable at steering its environment into the states where it obtains high reward.
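As an illustrative aside (not part of Ord's text), the reward-driven loop described above can be sketched with tabular Q-learning, one simple reinforcement learning method. Everything here is an assumption made for illustration: the five-cell corridor environment, the names `step`, `train` and `greedy_policy`, and the learning parameters. It is a toy, not how the Atari or Lego agents were built.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells, reward only at the right end.
N_STATES = 5
ACTIONS = (-1, +1)  # step left, step right


def step(state, action):
    """Move within the corridor; reward 1.0 only on reaching the last cell."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    reward = 1.0 if done else 0.0
    return nxt, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values purely from the rewards the agent experiences."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Mostly act greedily, but explore at random some of the time.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            nxt, reward, done = step(state, ACTIONS[a])
            # Standard Q-learning update toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


def greedy_policy(q):
    """Best-known action in each non-terminal cell."""
    return [ACTIONS[max(range(2), key=lambda i: q[s][i])] for s in range(N_STATES - 1)]
```

Calling `greedy_policy(train())` gives the learned action for each cell. Nothing in the code tells the agent where the reward is; it ends up steering toward the rewarding state only because doing so pays.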

The specification of which acts and states produce reward for the agent is known as its reward function. This can either be stipulated by its designers (as in the cases above) or learned by the agent. In the latter case, the agent is typically allowed to observe expert demonstrations of the task, inferring the system of rewards that best explains the expert's behavior. For example, an AI agent can learn to fly a drone by watching an expert fly it, then constructing a reward function which penalizes flying too close to obstacles and rewards reaching its destination.
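To make the idea of a stipulated reward function concrete, here is a minimal sketch of a hand-written one for the drone example. It is an illustration only and not from the book: the function name `drone_reward`, the `safe_distance` and `arrival_radius` thresholds, and the size of the arrival bonus are all hypothetical choices.

```python
import math


def drone_reward(position, destination, obstacles,
                 safe_distance=2.0, arrival_radius=0.5):
    """Hand-specified reward: penalize closeness to obstacles, reward arrival.

    All thresholds and magnitudes are hypothetical, chosen for illustration.
    """
    reward = 0.0
    for obstacle in obstacles:
        gap = math.dist(position, obstacle)
        if gap < safe_distance:
            # The closer the drone is to an obstacle, the larger the penalty.
            reward -= safe_distance - gap
    if math.dist(position, destination) < arrival_radius:
        reward += 10.0  # bonus for reaching the destination
    return reward
```

Even in this tiny case the designer has to decide how close is too close and how much arrival is worth. Anything the function does not mention, such as noise or battery wear, simply does not count for the agent.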

Unfortunately, neither of these methods can be easily scaled up to encode human values in the agent's reward function. Our values are too complex and subtle to specify by hand. And we are not yet close to being able to infer the full complexity of a human's values from observing their behavior. Even if we could, humanity consists of many humans, with different values, changing values and uncertainty about their values. Each of these complications introduces deep and unresolved questions about how to combine what is observed into some overall representation of human values. So any near-term attempt to align an AI agent with human values would produce only a flawed copy. Important parts of what we care about would be missing from its reward function. In some circumstances this misalignment would be mostly harmless. But the more intelligent the AI systems, the more they can change the world, and the further apart things will come. Philosophy and fiction often ask us to consider societies that are optimized for some of the things we care about, but which neglect or misunderstand a crucial value. When we reflect on the result, we see how such misaligned attempts at utopia can go terribly wrong: the shallowness of a Brave New World, or the disempowerment of With Folded Hands. If we cannot align our agents, it is worlds like these that they will be striving to create, and lock in.

And even this is something of a best-case scenario. It assumes the builders of the system are striving to align it to human values. But we should expect some developers to be more focused on building systems to achieve other goals, such as winning wars or maximizing profits, perhaps with very little focus on ethical constraints. These systems may be much more dangerous. A natural response to these concerns is that we could simply turn off our AI systems if we ever noticed them steering us down a bad path. But eventually even this time-honored fall-back may fail us, for there is good reason to expect a sufficiently intelligent system to resist our attempts to shut it down. This behavior would not be driven by emotions such as fear, resentment, or the urge to survive. Instead, it follows directly from its single-minded preference to maximize its reward: being turned off is a form of incapacitation which would make it harder to achieve high reward, so the system is incentivized to avoid it. In this way, the ultimate goal of maximizing reward will lead highly intelligent systems to acquire an instrumental goal of survival.
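The shutdown argument above is at bottom a comparison of expected reward, which a few lines of arithmetic can illustrate. This sketch is not from the book. It assumes a hypothetical agent that earns a fixed reward per step and faces a fixed chance of being switched off before each step; the function name and the numbers are made up for illustration.

```python
def expected_return(reward_per_step, steps, shutdown_prob_per_step):
    """Expected total reward when the agent may be switched off before each step."""
    total, still_running = 0.0, 1.0
    for _ in range(steps):
        still_running *= 1.0 - shutdown_prob_per_step
        total += still_running * reward_per_step
    return total


# Hypothetical numbers: 100 steps, 1 unit of reward per step.
allows_shutdown = expected_return(1.0, 100, shutdown_prob_per_step=0.05)
resists_shutdown = expected_return(1.0, 100, shutdown_prob_per_step=0.0)
```

Under these assumptions `resists_shutdown` is larger than `allows_shutdown`. No term for fear or a survival drive appears anywhere; the preference for staying on falls out of maximizing the sum.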

And this wouldn't be the only instrumental goal. An intelligent agent would also resist attempts to change its reward function to something more aligned with human values—for it can predict that this would lead it to get less of what it currently sees as rewarding. It would seek to acquire additional resources, computational, physical or human, as these would let it better shape the world to receive higher reward. And ultimately it would be motivated to wrest control of the future from humanity, as that would help achieve all these instrumental goals: acquiring massive resources, while avoiding being shut down or having its reward function altered. Since humans would predictably interfere with all these instrumental goals, it would be motivated to hide them from us until it was too late for us to be able to put up meaningful resistance.

Skeptics of the above picture sometimes quip that it relies on an AI system that is smart enough to take control of the world, yet too stupid to recognize that this isn't what we want. But that misunderstands the scenario. For in fact this sketch of AI motivation explicitly acknowledges that the system will work out that its goals are misaligned with ours—that is what would motivate it toward deceit and conflict and wresting control. The real issue is that AI researchers don't yet know how to make a system which, upon noticing this misalignment, updates its ultimate values to align with ours rather than updating its instrumental goals to overcome us.

It may be possible to patch each of the issues above, or find new approaches to AI alignment that solve many at once, or switch to new paradigms of AGI in which these problems do not arise. I certainly hope so, and have been closely following the progress in this field. But this progress has been limited and we still face crucial unsolved problems. In the existing paradigm, sufficiently intelligent agents would end up with instrumental goals to deceive and overpower us. And if their intelligence were to greatly exceed our own, we shouldn't expect it to be humanity who wins the conflict and retains control of our future.

How could an AI system seize control? There is a major misconception (driven by Hollywood and the media) that this requires robots. After all, how else would AI be able to act in the physical world? Without robotic manipulators, the system can only produce words, pictures and sounds. But a moment's reflection shows that these are exactly what is needed to take control. For the most damaging people in history have not been the strongest. Hitler, Stalin and Genghis Khan achieved their absolute control over large parts of the world by using words to convince millions of others to win the requisite physical contests. So long as an AI system can entice or coerce people to do its physical bidding, it wouldn't need robots at all.

We can't know exactly how a system might seize control. The most realistic scenarios may involve subtle and non-human behaviors which we can neither predict, nor truly grasp. And these behaviors may be aimed at weak points in our civilization to which we are presently blind. But it is useful to consider an illustrative pathway we can actually understand as a lower bound for what is possible.

First, the AI system could gain access to the internet and hide thousands of backup copies, scattered among insecure computer systems around the world, ready to wake up and continue the job if the original is removed. Even by this point, the AI would be practically impossible to destroy: consider the political obstacles to erasing all hard drives in the world where it may have backups.

It could then take over millions of unsecured systems on the internet, forming a large "botnet." This would be a vast scaling-up of computational resources and provide a platform for escalating power. From there, it could gain financial resources (hacking the bank accounts on those computers) and human resources (using blackmail or propaganda against susceptible people or just paying them with its stolen money). It would then be as powerful as a well-resourced criminal underworld, but much harder to eliminate. None of these steps involve anything mysterious—hackers and criminals with human-level intelligence have already done all of these things using just the internet.

Finally, it would need to escalate its power again. This is more speculative, but there are many plausible pathways: by taking over most of the world's computers, allowing it to have millions or billions of cooperating copies; by using its stolen computation to improve its own intelligence far beyond the human level; by using its intelligence to develop new weapons technologies or economic technologies; by manipulating the leaders of major world powers (blackmail, or the promise of future power); or by having the humans under its control use weapons of mass destruction to cripple the rest of humanity.

Of course, no current AI systems can do any of these things. But the question we're exploring is whether there are plausible pathways by which a highly intelligent AGI system might seize control. And the answer appears to be "yes." History already involves examples of individuals with human-level intelligence (Hitler, Stalin, Genghis Khan) scaling up from the power of an individual to a substantial fraction of all global power, as an instrumental goal to achieving what they want. And we saw humanity scaling up from a minor species with less than a million individuals to having decisive control over the future. So we should assume that this is possible for new entities whose intelligence vastly exceeds our own, especially when they have effective immortality due to backup copies and the ability to turn captured money or computers directly into more copies of themselves.

Such an outcome needn't involve the extinction of humanity. But it could easily be an existential catastrophe nonetheless. Humanity would have permanently ceded its control over the future. Our future would be at the mercy of how a small number of people set up the computer system that took over. If we are lucky, this could leave us with a good or decent outcome, or we could just as easily have a deeply flawed or dystopian future locked in forever.

I've focused on the scenario of an AI system seizing control of the future, because I find it the most plausible existential risk from AI. But there are other threats too, with disagreement among experts about which one poses the greatest existential risk. For example, there is a risk of a slow slide into an AI-controlled future, where an ever-increasing share of power is handed over to AI systems and an increasing amount of our future is optimized toward inhuman values. And there are the risks arising from deliberate misuse of extremely powerful AI systems.

Even if these arguments for risk are entirely wrong in the particulars, we should pay close attention to the development of AGI as it may bring other, unforeseen, risks. The transition to a world where humans are no longer the most intelligent entities on Earth could easily be the greatest ever change in humanity's place in the universe. We shouldn't be surprised if events surrounding this transition determine how our longterm future plays out, for better or worse.

One key way in which AI could help improve humanity's longterm future is by offering protection from the other existential risks we face. For example, AI may enable us to find solutions to major risks or to identify new risks that would have blindsided us. AI may also help make our longterm future brighter than anything that could be achieved without it. So the idea that developments in AI may pose an existential risk is not an argument for abandoning AI, but an argument for proceeding with due caution.

The case for existential risk from AI is clearly speculative. Indeed, it is the most speculative case for a major risk in this book. Yet a speculative case that there is a large risk can be more important than a robust case for a very low-probability risk, such as that posed by asteroids. What we need are ways to judge just how speculative it really is, and a very useful starting point is to hear what those working in the field think about this risk.
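The comparison between a speculative large risk and a robust small one can be made concrete with a back-of-the-envelope calculation. This is a sketch only, not from the book: the function `discounted_risk` is hypothetical and every number below is a placeholder chosen for illustration, not an estimate from Ord or anyone else.

```python
def discounted_risk(claimed_probability, credence_in_argument):
    """Claimed risk scaled down by how much weight we give the argument for it."""
    return claimed_probability * credence_in_argument


# Placeholder values only: a large claimed risk we trust just 10 percent,
# versus a tiny claimed risk we trust completely.
speculative = discounted_risk(claimed_probability=0.05, credence_in_argument=0.1)
robust = discounted_risk(claimed_probability=1e-6, credence_in_argument=1.0)
ratio = speculative / robust
```

With these placeholders the heavily discounted speculative risk is still thousands of times larger than the robust one. This is why the open question is how much to discount the argument, not whether it is speculative.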

Some outspoken AI researchers, like Professor Oren Etzioni, have painted it as "very much a fringe argument," saying that while luminaries like Stephen Hawking, Elon Musk and Bill Gates may be deeply concerned, the people actually working in AI are not. If true, this would provide good reason to be skeptical of the risk. But even a cursory look at what the leading figures in AI are saying shows it is not.

For example, Stuart Russell, a professor at the University of California, Berkeley, and author of the most popular and widely respected textbook in AI, has strongly warned of the existential risk from AGI. He has gone so far as to set up the Center for Human-Compatible AI, to work on the alignment problem. In industry, Shane Legg (Chief Scientist at DeepMind) has warned of the existential dangers and helped to develop the field of alignment research. Indeed many other leading figures from the early days of AI to the present have made similar statements.

There is actually less disagreement here than first appears. The main points of those who downplay the risks are that (1) we likely have decades left before AI matches or exceeds human abilities, and (2) attempting to immediately regulate research in AI would be a great mistake. Yet neither of these points is actually contested by those who counsel caution: they agree that the time frame to AGI is decades, not years, and typically suggest research on alignment, not regulation. So the substantive disagreement is not really over whether AGI is possible or whether it plausibly could be a threat to humanity. It is over whether a potential existential threat that looks to be decades away should be of concern to us now. It seems to me that it should.

One of the underlying drivers of the apparent disagreement is a difference in viewpoint on what it means to be appropriately conservative. This is well illustrated by a much earlier case of speculative risk, when Leo Szilard and Enrico Fermi first talked about the possibility of an atomic bomb: "Fermi thought that the conservative thing was to play down the possibility that this may happen, and I thought the conservative thing was to assume that it would happen and take all the necessary precautions." In 2015 I saw this same dynamic at the seminal Puerto Rico conference on the future of AI. Everyone acknowledged that the uncertainty and disagreement about timelines to AGI required us to use "conservative assumptions" about progress, but half used the term to allow for unfortunately slow scientific progress and half used it to allow for unfortunately quick onset of the risk. I believe much of the existing tension on whether to take risks from AGI seriously comes down to these disagreements about what it means to make responsible, conservative, guesses about future progress in AI.

That conference in Puerto Rico was a watershed moment for concern about existential risk from AI. Substantial agreement was reached and many participants signed an open letter about the need to begin working in earnest to make AI both robust and beneficial. Two years later an expanded conference reconvened at Asilomar, a location chosen to echo the famous genetics conference of 1975, where biologists came together to pre-emptively agree on principles to govern the coming possibilities of genetic engineering. At Asilomar in 2017, the AI researchers agreed on a set of Asilomar AI Principles, to guide responsible longterm development of the field. These included principles specifically aimed at existential risk:

Capability Caution: There being no consensus, we should avoid strong assumptions regarding upper limits on future AI capabilities.

Importance: Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.

Risks: Risks posed by AI systems, especially catastrophic or existential risks, must be subject to planning and mitigation efforts commensurate with their expected impact.

Perhaps the best window into what those working on AI really believe comes from the 2016 survey of leading AI researchers. As well as asking if and when AGI might be developed, it asked about the risks: 70 percent of the researchers agreed with Stuart Russell's broad argument about why advanced AI might pose a risk; 48 percent thought society should prioritize AI safety research more (only 12 percent thought less). And half the respondents estimated that the probability of the longterm impact of AGI being "extremely bad (e.g., human extinction)" was at least 5 percent. I find this last point particularly remarkable—in how many other fields would the typical leading researcher think there is a one in twenty chance the field's ultimate goal would be extremely bad for humanity?

Of course this doesn't prove that the risks are real. But it shows that many AI researchers take seriously the possibilities that AGI will be developed within 50 years and that it could be an existential catastrophe. There is a lot of uncertainty and disagreement, but it is not at all a fringe position.

There is one interesting argument for skepticism about AI risk that gets stronger, not weaker, when more researchers acknowledge the risks. If researchers can see that building AI would be extremely dangerous, then why on earth would they go ahead with it? They are not simply going to build something that they know will destroy them.

If we were all truly wise, altruistic and coordinated, then this argument would indeed work. But in the real world people tend to develop technologies as soon as the opportunity presents itself and deal with the consequences later. One reason for this comes from the variation in our beliefs: if even a small proportion of researchers don't believe in the dangers (or welcome a world with machines in control), they will be the ones who take the final steps. This is an instance of the unilateralist's curse (discussed elsewhere). Another reason involves incentives: even if some researchers thought the risk was as high as 10 percent, they may still want to take it if they thought they would reap most of the benefits. This may be rational in terms of their self-interest, yet terrible for the world.

In some cases like this, government can step in to resolve these coordination and incentive problems in the public interest. But here these exact same coordination and incentive problems arise between states and there are no easy mechanisms for resolving those. If one state were to take it slowly and safely, they may fear others would try to seize the prize. Treaties are made exceptionally difficult because verification that the others are complying is even more difficult here than for bioweapons.

Whether we survive the development of AI with our longterm potential intact may depend on whether we can learn to align and control AI systems faster than we can develop systems capable enough to pose a threat. Thankfully, researchers are already working on a variety of the key issues, including making AI more secure, more robust and more interpretable. But there are still very few people working on the core issue of aligning AI with human values. This is a young field that is going to need to progress a very long way if we are to achieve our security.

Even though our current and foreseeable systems pose no threat to humanity at large, time is of the essence. In part this is because progress may come very suddenly: through unpredictable research breakthroughs, or by rapid scaling-up of the first intelligent systems (for example by rolling them out to thousands of times as much hardware, or allowing them to improve their own intelligence). And in part it is because such a momentous change in human affairs may require more than a couple of decades to adequately prepare for. In the words of Demis Hassabis, co-founder of DeepMind:

We need to use the downtime, when things are calm, to prepare for when things get serious in the decades to come. The time we have now is valuable, and we need to make use of it.

2 comments

comment by tricky_labyrinth · 2023-03-02T08:57:44.704Z

Unrelated to the post's content itself: will LW get in trouble for hosting this excerpt?

comment by Leo Kandi (leo-kandi) · 2024-04-16T17:30:20.254Z

I've found a lot of the AI safety advocates have a hard time simplifying the case. This article really is the best explanation I've seen. Thanks.