A Path out of Insufficient Views

post by Unreal · 2024-09-24T20:00:27.332Z · 55 comments


In appreciation of @zhukeepa's willingness to discuss spirituality on LessWrong, I will share some stories of my own.

I grew up as a Christian in the USA and took it seriously.

Upon taking a World History class in my freshman year at a secular high school (quite liberal, quite prestigious), I learned that there were a number of major religions, and many of them seemed to contain a lot of overlap. It became clear to me, suddenly, that religions were man-made. None of them seemed to have a real claim to a universal truth.

I started unraveling my religious orientation and had become an atheist by the end of high school. I later went to Caltech to study neuroscience.

I converted from the old traditions to the religion of science and secular humanism.

I was not able to clearly see that "secular scientific humanism" was in itself a religion. I thought it was more like the lack of a religion, and I felt safe in that. I felt like I'd found a truer place to live from, based in investigation and truth-seeking. Especially in the science / math realm, which seemed based in something reliable, sane, "cool-headed," and sensible. There was even a method for how to investigate. It seemed trustworthy and principled.

What I am beginning to see now is this:

Just because something is good at coordinating people doesn't mean it's more true. It might even be a sign that it's less true. It's not impossible for something to coordinate people AND be true; it's just not common.

Why?

This is game theoretic.

Within a system with competition, why would the most TRUE thing win? No, the most effective thing wins. The thing best at consolidating power and resources and making individuals cooperate and work together would win. The truth is not a constraint.

Religion gives us many examples.

Most of the stuff Christians cooperate around are doctrinal points that are based in interpretations of the Bible. What is the Trinity? Is the Bible to be taken literally? When is the Second Coming and what will that entail? Is faith sufficient or do you also need good works?

If enough people AGREE on one or more of these points, then they can come together and declare themselves a unit. Through having a coherent unit, they then collect resources, send missionaries, and build infrastructure.

But the doctrines do not contain the Truths of religion. They're mostly pragmatic. They're ways for groups to coordinate. The religion that "wins" is not necessarily the most "true." In all likelihood, they just were better at coordinating more people.

(And more "meta" is better at coordinating more people, so you would expect a trend toward more "meta" or more "general" views over time becoming more dominant. Protestantism was more "meta-coordinated" than Catholicism. Science is pretty meta in this way. Dataism is an even more meta subset of "science".)

Anyway, back to my story.

As a child, I got a lot of meaning out of Christianity and relationship to God. But I "figured my way out" of that. The main meaning left for me was in games, food, "times", being smart, social media... I dunno, stuff. Just getting by. I was self-absorbed and stuck in my head.

I relied heavily on a moral philosophy that everyone should basically tend to their own preference garden, and it was each person's responsibility to clearly communicate their preference garden and then obtain what they wanted. I valued independence, knowing oneself well, clear communication, and effective navigation using my understanding of winning games / strategy.

This philosophy was completely shattered when my ex-boyfriend had a psychotic break, and I tried to help him with it.

My attempt to treat him as an independent agent was a meaningless, nonsensical endeavor that tripped me up over and over, as I failed to understand how to take responsibility for the life and mind of another being: a being who no longer had the faculties for communication or self-knowledge, and, what's more, whose preferences were just wrong. He was totally deluded about the world, and his preferences were harmful to himself and others. Nonetheless, I tried to play "psychosis whisperer" and to derive his "true preferences" through a mix of guessing and reading him and modeling him based on his past self. This was mostly not helpful on my part.

A certain kind of person would have taken the shattered remains of this moral philosophy and patchworked it together with some other moral philosophies, such that different ones applied in different circumstances. I didn't really take this approach.

But I didn't have anything good to replace this one with yet.

After this, I found EA and rationality. I did a CFAR workshop in January 2015. Right after, I started a Rationality Reading Group where we read the Sequences. It was like a Bible reading group, but for Rationality, and I held it religiously every week. It was actually pretty successful and created a good community atmosphere. Many of those folks became good friends, started group houses, etc.

After a couple years, I moved to the Bay Area as part of a slow exodus and joined CFAR as a researcher / instructor.

I currently do not know why they took me in. I was not very mission-aligned (RE: AI Risk). And I don't think I was that good at contributing or collaborating. I hope I contributed something.

I experienced many joys and hells during this period. The rationality community was full of drama and trauma. I grew a lot, discovering many helpful practices from CFAR and beyond.

At this point, my moral philosophy was approximately something like:

It is a moral imperative to model the world precisely and accurately and to use every experience, all data, all information to create this model, always building it to be even better and more accurate. All information includes all System 1 stuff, as well as System 2 stuff.

I grew to appreciate the power of the System 1 stuff a lot, and I started realizing that this was where much of the juice of motivation, energy, power, will, and decision-making essentially was. System 2 was a good add-on and useful, but it wasn't the driver of the system.

It's from recognizing the huge relevance of System 1 over System 2 that my rationality practices started venturing further from traditional LessWrong-style rationality, which was still heavily centered in logic, reasoning, cognition, goal-orientation, results-orientation, probability, etc.

Some people couldn't take me seriously, at this point, because of my focus on trauma, phenomenology, and off-beat frameworks like spiral dynamics, the chakra system, Kegan levels, Internal Family Systems, among other things.

Here's the thing though.

There are people who identify more with System 2. And they tend to believe truth is found via System 2 and that this is how problems are solved.

There are people who identify more with System 1. And they tend to believe truth is found via System 1 and that this is how problems are solved.

(And there are various combinations of both.)

But in the end, truth isn't based in what we identify with, no matter how clever our combining or modeling... or how attuned our feeling or sense-making. This isn't a good source of truth. And yet it's what most people, including most rationalists and post-rats and EAs, get away with doing... and I'm concerned that people will keep clinging to these less-than-true ways of being and seeing for their entire lives, failing to escape from their own perspectives, views, personalities, and attachments.

So when I was at CFAR, I was rightly criticized, I think, for allowing my personal attachments to guide my rationality and truth-seeking. And I was also right, I believe, in doubting those people who were overly attached to using heuristics, measurement, and other System 2 based perspectives.

Something wasn't working, and I left CFAR in a burnt out state.

I ended up landing in a Buddhist monastic training center, which claimed to care about existential risk. I couldn't really tell you why I went there. It was NOT the kind of place that interested me, but I was open to "trying things" and experimenting (mindsets I picked up from CFAR). Maybe meditation was something something eh maybe? The existential risk part... I didn't really see how that played in or how serious that part was. But it was a quirky thing to include as part of a Buddhist monastery, and I guess I liked quirky and different. I felt a little more at home.

Upon entry, my first "speech" to that community was that they needed to care about and get better at modeling things. I lambasted them. I was adamant. How could they claim they were improving the world without getting vastly better at modeling, and recognizing the importance of modeling? They seemed confusingly uninterested in it. I think they were listening politely? I don't know. I wasn't able to look at them directly. I wasn't bothering to model them, in that moment.

Well now I can laugh. Oh boy, I had a long way to go.

What followed was both... quick and slow-going.

So far in life, my "moral life philosophies" would get updated every few years or so. First at 4 or 5 years old; then 14; then ~21; then 25; then 27. I entered the monastery at 30.

I don't know how to describe this process in sufficient detail and concreteness, but basically I entered a phase where I was still embedded in this or that worldview or this or that philosophy. And then more and more quickly became able to dismantle and discard them, after they were proven wrong to me. Just like each one that came before. Christianity? Nope. Personal Preference Gardens? Nope. I am a player in a game, trying to win? Nope. I need to build models and cooperate? Nope. I have internal parts a la IFS? Nope. I am System 1 and System 2? Nope. I am chakras? Nope.

As soon as we believe we've "figured everything out," how fast can we get to "nope"? Getting good at this skill took me years of training.

And be careful. If you try to do this on your own without guidance, you could go nuts, so don't be careless. If you want to learn this, find a proper teacher and guide or join a monastic training center.

Beliefs and worldviews are like stuck objects. They're fixed points.

Well, they don't have to be fixed, but if they're fixed, they're fixed.

Often, people try to distinguish between maladaptive beliefs versus adaptive ones.

So they keep the beliefs that are "working" to a certain level of maintaining a healthy, functional life.

I personally think it's in fact fine for most people to stop updating their worldview as long as it's working for them?

However:

None of these worldviews actually work. Not really. We can keep seeking the perfect worldview forever, and we'll never find one. The answer to how to make the best choice every time. The answer to moral dilemmas. The answer to social issues, personal issues, well-being issues. No worldview will be able to output the best answer in every circumstance. This is not a matter of compute.

Note: "Worldview" is just a quick way of saying something like our algorithm or decision-making process or set of beliefs and aliefs. It's not important to be super precise on this point. What I'm saying applies to all of these things.

In the situation we're in, where AI could ruin this planet and destroy all life, getting the right answer matters more than ever, and the right answer has to be RIGHT every single time. This is again not a matter of sufficient compute.

The thing Buddhism is on the "pulse" of is that:

The "one weird trick" to getting the right answers is to discard all stuck, fixed points. Discard all priors and posteriors. Discard all aliefs and beliefs. Discard worldview after worldview. Discard perspective. Discard unity. Discard separation. Discard conceptuality. Discard map, discard territory. Discard past, present, and future. Discard a sense of you. Discard a sense of world. Discard dichotomy and trichotomy. Discard vague senses of wishy-washy flip floppiness. Discard something vs nothing. Discard one vs all. Discard symbols, discard signs, discard waves, discard particles.

All of these things are Ignorance. Discard Ignorance.

You probably don't understand what I just said.

That's fine.

The important part of this story is that I have been seeking truth my whole life.

And this is the best direction I've found.

I'm not, like, totally on the other side of fixed views and positions. I haven't actually resolved this yet. But this direction is very promising.

I rely on views less. Therefore I "crash" less. I make fewer mistakes. I sin less. @Eli Tyre wrote me a testimonial about how I've changed since 2015. If you ask, I can send you an abbreviated version to give you a sense of what can happen on a path like mine.

He said about my growth: "It's the most dramatic change I've ever seen in an adult, myabe?" [sic]

This whole "discarding all views" thing is what we call Wisdom, here, where I train.

Wisdom is a lack of fixed position. It is not being stuck anywhere.

I am not claiming there is no place for models, views, and cognition. There is a place for it. But they cannot hold the truth anywhere in them.

Truth first. Then build.

As with AI then: Wisdom first. Truth first. Then build. Then model. Then cognize.

As I have had to be and continue to have to be, we should be relentless and uncompromising about what is actually good, what is actually right, what is actually true. What is actually moral and ethical and beautiful and worthwhile.

These questions are difficult.

But it's important not to fall for a trick. Or lapse into complacency or nihilism. Or an easy way out. Or answers that are personally convenient. Or answers that merely make sense to lots of people. Or answers that people can agree on. Or answers taken on blind faith in algorithms and data. Or answers we'll accept as long as we're young, healthy, alive. Conditional answers.

If we are just changing at the drop of a hat, not for truth, but for convenience or any old reason, like most people are, ...

or even under very strenuous dire circumstances, like we're about to die or in excruciating pain or something...

then that is a compromised mind. You're working with a compromised, undisciplined mind that will change its answers as soon as externals change. Who can rely on answers like that? Who can build an AI from a mind that's afraid of death? Or afraid of truth? Or unwilling to let go of personal beliefs? Or unwilling to admit defeat? Or unwilling to admit victory?

You think the AI itself will avoid these pitfalls, magically? So far AIs seem unable to track truth at all. Totally subject to reward-punishment mechanisms. Subject to being misled and to misleading others.

Without relying on a fixed position, there's less fear operating. There's less hope operating. With less fear and hope, we become less subject to pain-pleasure and reward-punishment. These things don't track the truth. These things are addiction-feeding mechanisms, and addiction doesn't help with truth, on any level.

Without relying on fixed views, there's no fundamentalism. No fixation on doctrines. No fixation on methodology, rules, heuristics. No fixation on being righteous or being ashamed. No fixation on maps or models.

So what I'm doing now:

I'm trying to get Enlightened (in accord with a pretty strict definition of what that actually is). This means living in total accord with what is true, always, without fail, without mistake. And the method to that is dropping all fixed positions, views, etc. And the way to that is to live in an ethical feedback-based community of meditative spiritual practice.

I don't think most people need to try for this goal. It's very challenging.

But if the AI stuff makes you sick to your stomach because no one seems to have a CLUE and you are compelled to find real answers and to be very ethical, then maybe you should train your mind in the way I have been.

The intersection of AI, ethics, transcendence, wisdom, religion. All of this matters a lot right now.

And in the spirit of sharing my findings, this is what I've found.

55 comments


comment by johnswentworth · 2024-09-24T20:39:52.199Z

We can keep seeking the perfect worldview forever, and we'll never find one. The answer to how to make the best choice every time. The answer to moral dilemmas. The answer to social issues, personal issues, well-being issues. No worldview will be able to output the best answer in every circumstance.

Sounds like a skill issue.

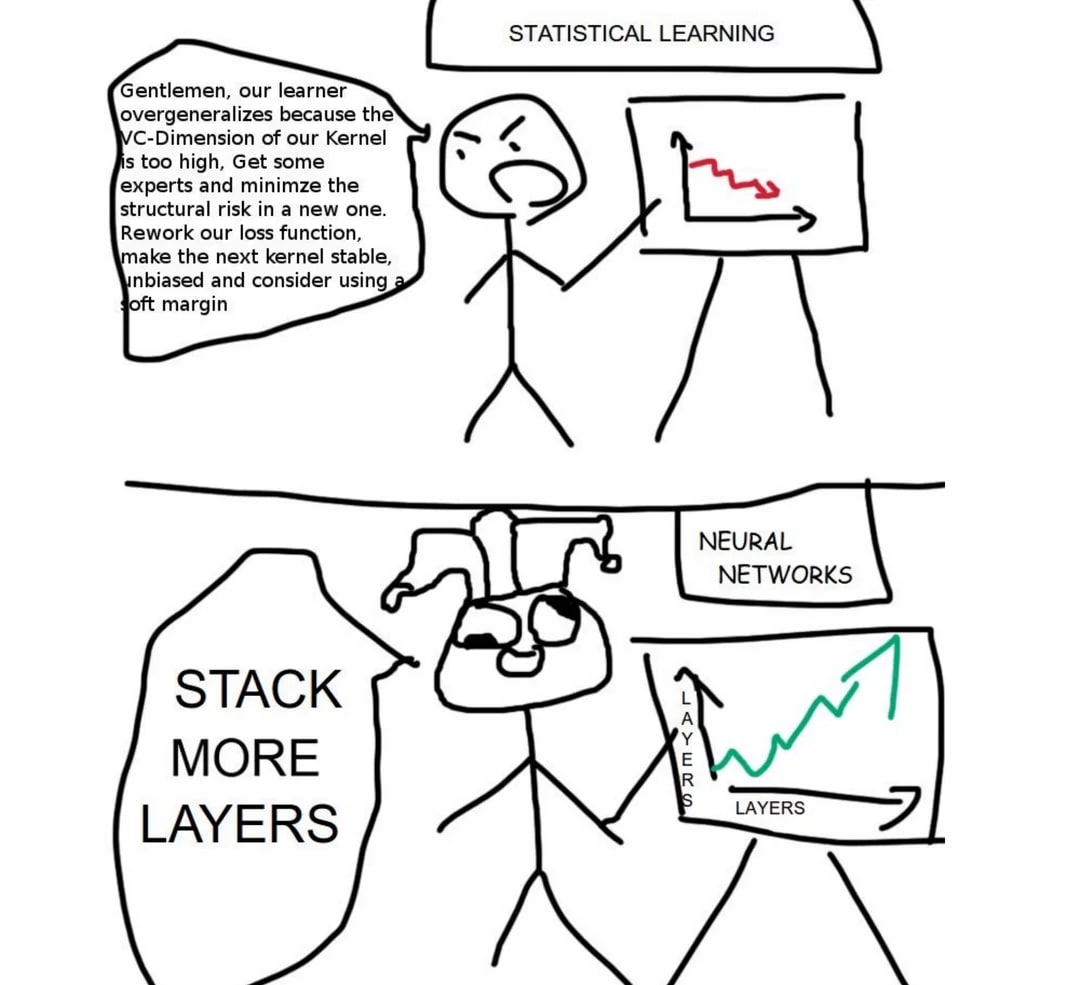

I'm reminded of a pattern:

- Someone picks a questionable ontology for modeling biological organisms/neural nets - for concreteness, let’s say they try to represent some system as a decision tree.

- Lo and behold, this poor choice of ontology doesn’t work very well; the modeler requires a huge amount of complexity to decently represent the real-world system in their poorly-chosen ontology. For instance, maybe they need a ridiculously large decision tree or random forest to represent a neural net to decent precision.

- The modeler concludes that the real world system is hopelessly complicated (i.e. fractal complexity), and no human-interpretable model will ever capture it to reasonable precision.

… and in this situation, my response is “It’s not hopelessly complex, that’s just what it looks like when you choose the ontology without doing the work to discover the ontology”.

There is a generalized version of this pattern, beyond just the "you don't get to choose the ontology" problem:

- Someone latches on to a particular strategy to solve some problem, or to solve problems in general, without doing the work to discover a strategy which works well.

- Lo and behold, the strategy does not work.

- The person concludes that the real world is hopelessly complex/intractable/ever-changing, and no human will ever be able to solve the problem or to solve problems in general.

My generalized response is: it's not impossible, you just need to actually do the work to figure it out properly.

↑ comment by TsviBT · 2024-09-24T21:02:18.164Z

(Buddhism seems generally mostly unhelpful and often antihelpful, but) What you say here is very much not giving the problem its due. Our problems are not cartesian--we care about ourselves and each other, and are practically involved with ourselves and each other; and ourselves and each other are diagonalizey, self-createy things. So yes, a huge range of questions can be answered, but there will always be questions that you can't answer. I would guess furthermore that in relevant sense, there will always be deep / central / important / salient / meaningful questions that aren't fully satisfactorily answered; but that's less clear.

↑ comment by Ákos Kőrösi (korakos) · 2024-09-25T12:20:25.846Z

Can you say more on what you think is un- or anti-helpful in Buddhism?

↑ comment by TsviBT · 2024-09-25T18:39:22.289Z

It says to avoid suffering by dismantling your motives. Some people act on that advice and then don't try to do things and therefore don't do things. Also so far no one has pointed out to me someone who's done something I'd recognize as good and impressive, and who credibly attributes some of that outcome to Buddhism. (Which is a high bar; what other cherished systems wouldn't reach that bar? But people make wild claims about Buddhism.)

↑ comment by Kaj_Sotala · 2024-09-25T19:42:02.147Z

It says to avoid suffering by dismantling your motives.

Worth noting that this is more true about some strands of Buddhism than others. I think most true for Theravada, least true for some Western strands such as Pragmatic Dharma; I believe Mahayana and Tibetan Buddhism are somewhere in between, though I'm not an expert on either. Not sure where to place Zen.

↑ comment by TsviBT · 2024-09-25T19:59:49.693Z

Being ignorant, I can't respond in detail. It makes sense that there'd be variation between ideologies, and that many people would have versions that are less, or differently, bad (according to me, on this dimension). But I would also guess that I'd find deep disagreements in more strands, if I knew more about them, that are related to motive dismantling.

For example, I'd expect many strands to incorporate something like the negation

- of "Reality bites back." or

- of "Reality is (or rather, includes quite a lot of) that which, when you stop believing in it, doesn't go away." or

- of "We live in the world beyond the reach of God."

As another example, I would expect most Buddhists to say that you move toward unity with God (however you want to phrase that) by in some manner becoming less {involved with / reliant on / constituted by / enthralled by / ...} symbolic experience/reasoning, but I would fairly strongly negate this, and say that you can only constitute God via much more symbolic experience/reasoning.

↑ comment by Kaj_Sotala · 2024-09-25T20:26:22.331Z

For example, I'd expect many strands to incorporate something like the negation

Some yes, though the strands that I personally like the most lean strongly into those statements. The interpretation of Buddhism that makes the most sense to me sees much of the aim of practice as first becoming aware of, and then dropping, various mental mechanisms that cause motivated reasoning and denial of what's actually true.

↑ comment by faul_sname · 2024-09-24T22:27:16.586Z

Lo and behold, this poor choice of ontology doesn’t work very well; the modeler requires a huge amount of complexity to decently represent the real-world system in their poorly-chosen ontology. For instance, maybe they need a ridiculously large decision tree or random forest to represent a neural net to decent precision.

That can happen because your choice of ontology was bad, but it can also be the case that representing the real-world system with "decent" precision in any ontology requires a ridiculously large model. Concretely, I expect that this is true of e.g. human language - e.g. for the Hutter Prize I don't expect it to be possible to get a lossless compression ratio better than 0.08 on enwik9 no matter what ontology you choose.

It would be nice if we had a better way of distinguishing between "intrinsically complex domain" and "skill issue" than "have a bunch of people dedicate years of their lives to trying a bunch of different approaches" though.

↑ comment by Unreal · 2024-09-24T21:30:38.076Z

Hm, if by "discovering" you mean

Dropping all fixed priors

Making direct contact with reality (which is without any ontology)

And then deep insight emerges

And then after-the-fact you construct an ontology that is most beneficial based on your discovery

Then I'm on board with that

And yet I still claim that ontology is insufficient, imperfect, and not actually gonna work in the end.

↑ comment by romeostevensit · 2024-09-25T05:45:28.497Z

'these practices grant unmediated access to reality' sounds like a metaphysical claim. The Buddha's take on his system's relevance to metaphysics seems pretty consistently deflationary to me.

↑ comment by Jonas Hallgren · 2024-09-25T07:02:42.324Z

This does seem kind of correct to me?

Maybe you could see the fixed points that OP is pointing towards as priors in the search process for frames.

Like, your search is determined by your priors which are learnt through your upbringing. The problem is that they're often maladaptive and misleading. Therefore, working through these priors and generating new ones is a bit like relearning from overfitting or similar.

Another nice thing about meditation is that it sharpens your mind's perception which makes your new priors better. It also makes you less dependent on attractor states you could have gotten into from before since you become less emotionally dependent on past behaviour. (there's obviously more complexity here) (I'm referring to dependent origination for you meditators out there)

It's like pruning the bad data from your dataset and retraining your model, you're basically guaranteed to find better ontologies from that (or that's the hope at least).

comment by Eli Tyre (elityre) · 2024-09-27T02:40:14.662Z

The "one weird trick" to getting the right answers is to discard all stuck, fixed points. Discard all priors and posteriors. Discard all aliefs and beliefs. Discard worldview after worldview. Discard perspective. Discard unity. Discard separation. Discard conceptuality. Discard map, discard territory. Discard past, present, and future. Discard a sense of you. Discard a sense of world. Discard dichotomy and trichotomy. Discard vague senses of wishy-washy flip floppiness. Discard something vs nothing. Discard one vs all. Discard symbols, discard signs, discard waves, discard particles.

All of these things are Ignorance. Discard Ignorance.

They don't seem like ignorance to me! Many of them seem distinctly like knowledge!

You probably don't understand what I just said.

It does seem that way. : P

That's fine.

Ok then, what would be a polite and appropriate way to respond to your speech acts like these? Should I state that they sound wrong to me? Should I ignore their content and treat them as your artistic expression?

↑ comment by Unreal · 2024-09-30T13:01:32.060Z

Just respond genuinely. You already did.

↑ comment by plex (ete) · 2025-03-15T11:09:02.175Z

By "discard", do you mean remove specifically the fixed-ness in your ontology such that the cognition as a whole can move fluidly and the aspects of those models which don't integrate with your wider system can dissolve, as opposed to the alternate interpretation where "discard" means actively root out and try and remove the concept itself (rather than the fixed-ness of it)?

(also 👋, long time no see, glad you're doing well)

↑ comment by Unreal · 2025-03-17T17:10:04.021Z

Hello! Thanks for the greeting. Do we know each other by chance?

Removing fixedness in ontologies is good. I claim it's in the good direction. And then you go further in that direction and remove the ontology itself, which is a fixation on its own. The ontology is not strictly needed, in much the same way that you can be looking at a video of a waterfall—but it's meaningfully better and more true to look directly at a waterfall. In the same way, you don't need the concept of 'waterfall' to truly see it. The concept actually gets in the way.

Actively rooting out and removing the concept makes it sound like you are somehow reaching in and pulling it out with force, and that's not really how it goes. It's more of a letting go of conceptual grasping, like unclenching a hand.

↑ comment by plex (ete) · 2025-03-17T22:22:02.670Z

Yup, DMing for context!

hmmm, I'm wondering if you're pointing at something different from the thing in this space which I intuitively expect is good using words that sound more extreme than I'd use, or whether you're pointing at a different thing. I'll take a shot at describing the thing I'd be happy with of this type and you can let me know whether this feels like the thing you're trying to point to:

An ontology restricts the shape of thought by being of a set shape. All of them are insufficient, the Tao that can be specified is not the true Tao, but each can contain patterns that are useful if you let them dissolve and continually release the meta-structures rather than cling to them as a whole. By continually releasing as much of your structure back to flow you grow much faster and in more directions, because in returning from that dissolving you reform with much more of your collected patterns integrated and get out of some of your local minima.

↑ comment by Unreal · 2025-03-20T19:18:42.320Z

I'm pointing to something more extreme than this, but I'd say this is a good direction.

I will attempt, badly, to capture it in words inspired by your description above.

I say 'badly' because I'm not fully able to see the Truth and describe it, but there is a Truth, and it can be described. This process you allude to RE: dissolving and releasing is part of how Truth is revealed, and that's what I'm training in.

So my re-write of this:

An ontology restricts the shape of [the perceived world] by being of a set shape. All of them are insufficient, the Tao [is beyond conceptualization], but each can contain patterns that are useful [if and only if] you [use them to] dissolve and continually release [all patterns] rather than cling to them.

You imply, maybe, that the point is to come back and reform. To get all the patterns 'integrated' and come back into structure.

But the thing around 'flow' and 'faster' and such—all of this is better achieved with no structure or meta-structure. Because structure opposes flow, period. The point isn't to create some ultimate ontology or structure, no matter how fluid, fast, or integrated you think it is; this is returning to delusion, or making delusion the purpose.

This takes sufficient letting go to see, but it's also logically sound even if you can't buy it experientially.

Is there a place for structure? Yes, we use structure as middle way stepping stones to release structure. We have to use delusion (concepts, etc.) to escape delusion because delusion is what we have to work with. The fact that it's possible to use delusion to escape delusion is the amazing thing.

↑ comment by plex (ete) · 2025-03-21T21:29:28.715Z

Interesting, yes. I think I see, and I think I disagree with this extreme formulation, despite knowing that this is remarkably often a good direction to go in. If "[if and only if]" was replaced with "especially", I would agree, as I think the continual/regular release process is an amplifier on progress not a full requisite.

As for re-forming, yes, I do expect there is a true pattern we are within, which can be in its full specification known, though all the consequences of that specification would only fit into a universe. I think having fluidity on as many layers of ontology as you can is generally correct (and that most people have way too little of this), but I expect the process of release and dissolve will increasingly converge, if you're doing well at it.

In the spirit of gently poking at your process: My uncertain, please take it lightly, guess is that you've annealed strongly towards the release/dissolve process itself, to the extent that it itself is an ontology which has some level of fixedness in you.

↑ comment by Unreal · 2025-03-25T18:15:57.532Z

The benefit of fixing on the release/dissolve as a way of being is that it will release/dissolve itself, and that's what makes it safer than fixing on anything that doesn't have an 'expiration date' as it were.

I think the confusion on this is that

We have this sense that some process is safe or good to fix upon. Because 'process' is more change-y than something static.

But even process is not safe to fix upon. You are not a process. We're not in a process. To say 'process' is trying to 'thing-ify' or 'reify' something that does not have a property called 'existence' nor 'non-existence'. We must escape from the flattening dichotomy of existence and non-existence, which is a nonsense.

A "universe" cannot be fully specified, and I believe our physics has made that clear. But also our idea of 'universe' is ridiculously small-minded still. Science has narrowed our vision of what is, and fixated upon it, and now we're actually more ignorant / deluded than before. Although I also appreciate the beauty of science and math.

comment by Ben Pace (Benito) · 2024-09-25T17:26:04.184Z · LW(p) · GW(p)

Lots of interesting stuff in this essay, glad to have read it.

I rely on views less. Therefore I "crash" less. I make fewer mistakes. I sin less.

This sounds to me like you are less fragile or mistaken in your conceptualization of the world, but at the same time I think that it is necessary to have a considered and reliable perspective on the world in order to be an ethical person. Someone who is floating around and whose views change like the wind cannot be pinned down to any beliefs or principles, and cannot pin themselves to the mast of certain principles in the face of great turbulence and storms. Having robust principles and values is required to enter challenging domains and not get captured by stronger egregores or adversaries.

(I don't expect we disagree on this point, but I am failing to otherwise make sense of what you are writing about here.)

Replies from: Unreal↑ comment by Unreal · 2024-09-25T18:08:23.640Z · LW(p) · GW(p)

Yes we agree. 👍🌻

I think I mention this in the essay too.

If we are just changing at the drop of a hat, not for truth, but for convenience or any old reason, like most people are, ...

or even under very strenuous dire circumstances, like we're about to die or in excruciating pain or something...

then that is a compromised mind. You're working with a compromised, undisciplined mind that will change its answers as soon as externals change.

Views change. Even our "robust" principles can go out the window under extreme circumstances. (So what's going on with those people who stay principled under extreme circumstances? This is worth looking into.)

Of course views are usually what we have, so we should use them to the best extent we are able. Make good views. Make better views. Use truth-tracking views. Use ethical views. Great.

AND there is this spiritual path out of views altogether, and this is even more reliable than solely relying on views or principles or commandments.

I will try out a metaphor. Perhaps you've read The Inner Game of Tennis.

In tennis, at first, you need to practice the right move deliberately over and over again, and it feels awkward. It goes against your default movements. This is "using more ethical views" over default habits. Using principles.

But somehow, as you fall into the moves, you realize: This is actually more natural than what I was doing before. The body naturally wants to move this way. I was crooked. I was bent. I was tense and tight. I was weak or clumsy. Now that the body is healthier, more aligned, stronger... these movements are obviously more natural, correct, and right. And that was true all along. I was lacking the right training and conditioning. I was lacking good posture.

It wasn't just that I acquired different habits and got used to them. The new patterns are less effortful to maintain, better for me, and they're somehow clearly more correct. I had to unlearn, and now I somehow "know" less. I'm holding "less" patterning in favor of what doesn't need anything "held onto."

This is also true for the mind.

We first have to learn the good habits, and they go against our default patterning and conditioning. We use rules, norms, principles. We train ourselves to do the right thing more often and avoid the wrong thing. This is important.

Through training the mind, we realize the mind naturally wishes to do good, be caring, be courageous, be steadfast, be reliable. The body is not naturally inclined to sit around eating potato chips. We can find this out just by actually feeling what it does to the body. And so neither is the mind naturally inclined to think hateful thoughts, lie to itself and others, or be fed mentally addictive substances (e.g. certain kinds of information).

To be clear:

"More natural" does not mean more in line with our biology or evo-psych. It does not mean lazier or more complacent. It does not mean less energetic, and in some way it doesn't even mean less "effort". But it does mean less holding on, less tension, less agitation, less drowsiness, less stuckness, less hindrance.

"More natural" is even more natural than biology. And that's the thing that's probably going to trip up materialists. Because there's a big assumption that biology is more or less what's at the bottom of this human-stack.

Well it isn't.

There isn't a "the bottom."

It's like a banana tree.

When you peel everything away, what is actually left?

Well it turns out if you were able to PEEL SOMETHING AWAY, it wasn't the Truth of You. So discard it. And you keep going.

And that's the path.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-09-26T00:49:22.847Z · LW(p) · GW(p)

I am reading this as “I rely on explicit theory much less when guiding my actions than I used to”. I think this is also true of me, much of my decision-making on highly important or high-stakes decisions (but also ~most decisions) is very intuitive and fast and I often don’t reflect on it very much. I have lately been surprised to notice how little cognition I will spend on substantial life decisions, or scary decisions, simply trusting myself to get it right.

I know other people (who I like and respect) who rely on explicit reasoning when deciding whether to take a snack break, whether to go to a party, whether to accept a job, etc, and I think the stronger version of themselves would end up trusting their system 1 processes on such things.

But I think anyone who is not regularly doing a ton of system 2 reflection on decisions that they are confused about or arguing about principles involved in their and others’ decisions will fail to act well or be principled. I do not think there is a way around it, of avoiding this hard work.

I think I would hazard a guess that the person who must rely on explicit theory for guiding behavior is more likely to be able to grow into a wholesome and principled person than the intuitive and kind person who doesn’t see much worth in developing and arguing about explicit principles. Caring about principles seems much rarer and non-natural to me.

Replies from: Unreal↑ comment by Unreal · 2024-09-26T01:07:54.777Z · LW(p) · GW(p)

I will respond to this more fully at a later point. But a quick correction I wish to make:

What I'm referring to is not about System 1 or System 2 so much. It's not that I rely more on System 1 to do things. System 1 and System 2 are both unreliable systems, each with major pitfalls.

I'm more guided by a wisdom that is not based in System 1 or System 2 or any "process" whatsoever.

I keep trying to point at this, and people don't really get it until they directly see it. That's fine. But I wish people would at least mentally try to understand what I'm saying, and so far I'm often being misinterpreted. Too much mental grasping at straws.

The wisdom I refer to is able to skillfully use either System 1 or System 2 as appropriate. It's not a meta-process. It's not metacognition. It's not intelligence. It's also not intuition or instinct. This wisdom doesn't get better with more intelligence or more intuition.

It's fine to not understand what I'm referring to. But can anyone repeat back what I'm saying without adding or subtracting anything?

Replies from: Benito, AprilSR↑ comment by Ben Pace (Benito) · 2024-09-27T06:26:10.526Z · LW(p) · GW(p)

Okay, sounds like I have misunderstood you.

Sure, I can retry.

My next attempt to pass your ITT is thus:

Broadly when gaining new skills we go from doing what feels natural, to doing things differently within rigid structures, to getting good at them, to releasing the structures and then just doing what comes naturally. And often afterwards it is both more effective and also comes more naturally than it did before.

Some people seem trapped in the middle step on certain things. They always practice music with a metronome ticking in order to keep the beat, they never trust themselves to just feel it. They always leave the party without drinking, never trusting themselves to behave well and have fun with it. They always need an explicit theory guiding their overall trajectory in life (e.g. career decisions involving spreadsheets), they can never make a major life decision because it feels good in their gut. They always have to discuss purchases over $1,000 with their spouse and sleep on it, they never feel comfortable just going with something that feels right in the moment.

Such people have successfully found useful structures, but are also trapped in them, never venturing forward into the world themselves, always bound by the formalities. This limits their personhood and humanity from coming through, it bounds them to only be as good as the structures they've adopted.

Insofar as you name a structure or set of rules for living life, you are always bound by them and will never let your humanity outshine them.

How close is this to what you're saying, from 1 to 10?

Replies from: Unreal↑ comment by Unreal · 2024-09-30T13:14:37.715Z · LW(p) · GW(p)

First paragraph: 3/10. The claim is that something was already more natural to begin with, but you need deliberate practice to unlock the thing that was already more natural. It's not that it 'comes more naturally' after you practice something. What 'felt' natural before was actually very unnatural and hindered, but we don't realize this until after practicing.

2nd, 3rd, 4th paragraph: 2/10. This mostly doesn't seem relevant to what I'm trying to offer.

...

It's interesting trying to watch various people try to repeat what I'm saying or respond to what I'm saying and just totally missing the target each time.

It suggests an active blind spot or a refusal to try to look straight at the main claim I'm making. I have been saying it over and over again, so I don't think it's on my end. Although the thing I am trying to point at is notoriously hard to point at, so there's that.

...

Anyway the cruxy part is here, and so to pass my ITT you'd have to include this:

"It's not a meta-process. It's not metacognition. It's not intelligence. It's also not intuition or instinct. This wisdom doesn't get better with more intelligence or more intuition. "

"I'm more guided by a wisdom that is not based in System 1 or System 2 or any "process" whatsoever. "

"The "one weird trick" to getting the right answers is to discard all stuck, fixed points. Discard all priors and posteriors. Discard all aliefs and beliefs. Discard worldview after worldview. Discard perspective. Discard unity. Discard separation. Discard conceptuality. Discard map, discard territory. Discard past, present, and future. Discard a sense of you. Discard a sense of world. Discard dichotomy and trichotomy. Discard vague senses of wishy-washy flip floppiness. Discard something vs nothing. Discard one vs all. Discard symbols, discard signs, discard waves, discard particles.

All of these things are Ignorance. Discard Ignorance."

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-09-30T22:04:54.102Z · LW(p) · GW(p)

Sure, I can try again during my lunch break.

I think you are actually emphasizing this section.

It wasn't just that I acquired different habits and got used to them. The new patterns are less effortful to maintain, better for me, and they're somehow clearly more correct. I had to unlearn, and now I somehow "know" less. I'm holding "less" patterning in favor of what doesn't need anything "held onto."

...

Through training the mind, we realize the mind naturally wishes to do good, be caring, be courageous, be steadfast, be reliable. The body is not naturally inclined to sit around eating potato chips. We can find this out just by actually feeling what it does to the body. And so neither is the mind naturally inclined to think hateful thoughts, lie to itself and others, or be fed mentally addictive substances (e.g. certain kinds of information).

It sounds like you believe, as I become more aligned with who I want to be and with goodness, this will not feel strained or effortful, but in fact I will experience less friction than I used to feel, less discomfort or unease. This is not a learned way of being but rather a process of backing out of bad and unhealthy practices.

I don't know that it's easy for me to describe how this feels in more phenomenological detail. I'd have to find some examples. Most of my experiences of becoming a better person have been around finding good principles that I believe in, and feeling good relying on them and seeing that they indeed do improve the world and help me avoid unethical action/behavior. It has simplified my life tremendously (mostly).

So I believe you mean that, when you find the right way of acting, it feels more natural and less friction-y than the way you were previously behaving. The primary thing I don't understand is that I can't tell what claim you are making about what exactly one is approaching. You keep saying all the things it isn't without saying what it is. I am not sure if you mean "You are born well and then have lots of bad habits and unhealthy practices added to you" or if you are saying "You were not necessarily ever in the right state of mind, but approach it through careful practice, and then it will feel better/natural-er/etc". Also you keep saying that it's not "state of mind" or any other noun I might use to describe it, which isn't helpful for saying what it is.

My current guess is that you don't think it's any particular state, but that being a spiritually whole person is more about everything (both in the mind and in the mind's relationship to the environment) working together well. But not sure.

Regarding

But I wish people would at least mentally try to understand what I'm saying, and so far I'm often being misinterpreted. Too much mental grasping at straws.

and

It's interesting trying to watch various people try to repeat what I'm saying or respond to what I'm saying and just totally missing the target each time.

It suggests an active blind spot or a refusal to try to look straight at the main claim I'm making. I have been saying it over and over again, so I don't think it's on my end. Although the thing I am trying to point at is notoriously hard to point at, so there's that.

I think talking about phenomenology is hard and subtle and the fact you have failed to have people hear you as you use metaphor and poetry doesn't mean you should talk down to me as though you are a wise teacher and I am a particularly dense student.

Replies from: Unreal↑ comment by Unreal · 2024-09-30T23:07:58.240Z · LW(p) · GW(p)

I am saying things in a direct and more or less literal manner. Or at least I'm trying to.

I did use a metaphor. I am not using "poetry"? When I say "Discard Ignorance" I mean that as literally as possible. I think it's somewhat incorrect to call what I'm saying phenomenology. That makes it sound purely subjective.

Am I talking down to you? I did not read it that way. Sorry it comes across that way. I am attempting to be very direct and blunt because I think that's more respectful, and it's how I talk.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-10-01T21:19:59.668Z · LW(p) · GW(p)

I propose we wrap this particular thread up for now (with another reply from you as you wish).

I will say that for this bit

Because there's a big assumption that biology is more or less what's at the bottom of this human-stack.

Well it isn't.

There isn't a "the bottom."

It's like a banana tree.

When you peel everything away, what is actually left?

Well it turns out if you were able to PEEL SOMETHING AWAY, it wasn't the Truth of You. So discard it. And you keep going.

And that's the path.

Being asked "So what's the answer? What's the path?" feels more like answering a riddle than being asked "The capital city of England is London. Please repeat back to me the capital city of England?".

Direct speech is clear and unambiguous. Direct speech is like "Please can you close the door?" and indirect speech is like "Oh I guess it's chilly in here" or "Perhaps we should get people's temperature preferences", which may be a sincere attempt to communicate that you want the door closed but isn't direct. What you wrote was not especially unambiguous or non-metaphorical. I think it's a sincere attempt at communication but it's not direct. Being asked to just answer "can anyone repeat back what I'm saying without adding or subtracting anything?" seems hard when you wrote in a rather metaphorical and roundabout way.

↑ comment by AprilSR · 2024-09-26T01:17:51.860Z · LW(p) · GW(p)

To have a go at it:

Some people try to implement a decision-making strategy that's like, "I should focus mostly on System 1" or "I should focus mostly on System 2." But this isn't really the point. The goal is to develop an ability to judge which scenarios call for which types of mental activities, and to be able to combine System 1 and System 2 together fluidly as needed.

Replies from: Unreal

comment by Adele Lopez (adele-lopez-1) · 2024-09-25T07:56:49.438Z · LW(p) · GW(p)

The "one weird trick" to getting the right answers is to discard all stuck, fixed points. Discard all priors and posteriors. Discard all aliefs and beliefs. Discard worldview after worldview. Discard perspective. Discard unity. Discard separation. Discard conceptuality. Discard map, discard territory. Discard past, present, and future. Discard a sense of you. Discard a sense of world. Discard dichotomy and trichotomy. Discard vague senses of wishy-washy flip floppiness. Discard something vs nothing. Discard one vs all. Discard symbols, discard signs, discard waves, discard particles.

All of these things are Ignorance. Discard Ignorance.

Is this the same principle as "non-attachment"?

comment by Gordon Seidoh Worley (gworley) · 2024-09-25T03:10:09.739Z · LW(p) · GW(p)

There's people who identify more with System 2. And they tend to believe truth is found via System 2 and that this is how problems are solved.

There's people who identify more with System 1. And they tend to believe truth is found via System 1 and that this is how problems are solved.

(And there are various combinations of both.)

I've been thinking about roughly this idea lately.

There's people who are better at achieving their goals using S2, and people who are better at achieving their goals using S1, and almost everyone is a mix of these two types of people, but are selectively one of these types of people in certain contexts and for certain goals. Identifying with S2 or S1 then comes from observing which tends to do a better job of getting you what you want, so it starts to feel like that's the one that's in control, and then your sense of self gets bound up with whatever mental experiences correlate with getting what you want.

For me this has shown up as being a person who is mostly better at getting what he wants with S2, but my S2 is unusually slow, so for lots of classes of problems it fails me in the moment, even though it figures out what to do after the fact. Most of my most important personal developments have come on the back of using S2 long enough to figure out all the details of something so that S1 can take it over. A gloss of this process might be to say I'm using intelligence to generate wisdom.

I get the sense that other people are not in this same position. There's a bunch of people for whom S2 is fast enough that they never face the problem I do, and they can just run S2 fast enough to figure stuff out in real time. And then there's a whole alien-to-me group of folks who are S1 first and think of S2 as this slightly painful part of themselves they can access when forced to, but would really rather not.

comment by Viliam · 2024-09-27T14:51:41.453Z · LW(p) · GW(p)

Thank you for your interesting personal story!

(And more "meta" is better at coordinating more people, so you would expect a trend toward more "meta" or more "general" views over time becoming more dominant. Protestantism was more "meta-coordinated" than Catholicism. Science is pretty meta in this way. Dataism is an even more meta subset of "science".)

Not sure what you mean by more "meta" here. Like, people like to create tribes based on shared beliefs, so having some beliefs is better than having none (because then you cannot create a tribe), but having more general beliefs is better than having more specific ones (because each arbitrary belief can make some people object against it)?

So the best belief system is kinda like the smallest number that is still greater than zero... but there is no such thing; there is only the unending process of approaching the zero from above? (But you can never jump to the zero exactly, because then people would notice that they have literally nothing to coordinate their tribe about?)

In such a situation, I think the one weird trick would be to invent a belief system that actively denies being one. To teach people a dogma that would (among other things) insist that there is no dogma, you just see the reality as it is (unlike all the other people, who merely see their dogmas). To invent rituals that consist (among other things) of telling yourself repeatedly that you have no rituals (unlike all the other people). To have leaders that deny being leaders (and yet they are surrounded by followers who obey them, but hey that's just how reality is).

So, basically... science.

But of course, people will soon notice that your supposed non-belief non-system often behaves suspiciously similarly to other belief systems, despite all the explicit denial. And they will keep hoping for a better system, which would teach them that there is no dogma, activities that would give them the feeling of certainty that they are following no rituals, and high-status people who would tell them to follow no leaders.

And maybe there is no end, only more iterations of the same. Because the more people around you join the currently popular non-belief non-system, the more obvious its nature as a belief system becomes. You notice how they keep saying the same non-dogmatic statements, performing the same non-rituals, and following the same non-leaders. Once you see it, you cannot unsee it, so you need to move further...

Replies from: Unreal↑ comment by Unreal · 2024-09-30T23:29:46.717Z · LW(p) · GW(p)

I don't know if I fully get you, but you also nailed it on the head.

In such a situation, I think the one weird trick would be to invent a belief system that actively denies being one. To teach people a dogma that would (among other things) insist that there is no dogma, you just see the reality as it is (unlike all the other people, who merely see their dogmas). To invent rituals that consist (among other things) of telling yourself repeatedly that you have no rituals (unlike all the other people). To have leaders that deny being leaders (and yet they are surrounded by followers who obey them, but hey that's just how reality is).

So, basically... science.

Science is the best cult because it convincingly denies being one at all, disguising itself as truth itself.

I think it's worth admiring science and appreciating it for all the good things it has provided.

And I think it has its limitations, and people should start waking up soon to the fact that if the world is destroyed, humans all destroyed, etc. then science played an instrumental and causal role in that, and part of that is the insanity and evil within that particular cult / worldview.

Replies from: Viliam↑ comment by Viliam · 2024-10-01T07:03:05.903Z · LW(p) · GW(p)

The part that you quoted was originally supposed to end by: "So, basically... Buddhism", but then I noticed it actually applies to science, too. Because it's both, kind of. By trying to get out of systems, you create something that people from outside will describe as yet another system. (And they will include it into the set of systems they are trying to get out of.)

Is there an end to this? I don't know, really. (Also, it reminds me of this.)

I think what many people do is apply this step once. They get out of the system that their parents and/or school created for them, and that's it.

Some people do this step twice or more. For example, first they rebel against their parents. Then they realize that their rebellion was kinda stupid and perhaps there is more to life than smoking marijuana, so they get out of that system, too. And that's it. Or they join a cult, and then they leave it. Etc.

Some people notice that this is a sequence -- that you can probably do an arbitrary number of steps, always believing that now you are getting out of systems, when in hindsight you have always just adopted yet another system. But even if you notice this, what can you do about it? Is there a way out that isn't just another iteration of the same?

The problem is that even noticing the sequence and trying to design a solution such as "I will never get attached to any system; I will keep abandoning them the moment I notice that there is such a thing; and I will always suspect that anything I see is such a thing", is... yet another system. One that is more meta, and perhaps therefore more aesthetically appealing, but a system nonetheless.

Another option is to give up and say "yeah, it's systems all the way down; and this one is the one I feel most comfortable with, so I am staying here". So you stay consciously there; or maybe halfway there and halfway in the next level, because you actually do recognize your current system as a system...

One person's "the true way to see reality" is another person's "game people play". I am not saying that the accusation is always true; I am just saying the accusation is always there, and sometimes it is true.

Here some people would defend by saying that there always is a true uncorrupted version of something and also a ritualized system made out of it, and that you shouldn't judge True Christianity by the flaws of the ordinary Christians, shouldn't judge True Buddhism by the flaws of the ordinary Buddhists, shouldn't judge True Scientific Mindset by the flaws of ordinary people in academia, and shouldn't judge True Rationality by the flaws of the ordinary aspiring rationalists. -- And this also is an ancient game, where one side keeps accusing the other of not being charitable and failing the ideological Turing test, and the other side defends by calling it the no-true-X fallacy.

Another question is whether some pure unmediated access to reality is even possible. We always start with some priors; we interpret the evidence using the perspectives we currently have. (Not being aware of one's priors is not the same as having no priors.) Then again, having only the options of being more wrong or less wrong, it makes sense to prefer the latter.

(And there is a difference between where you are; and where other people report to be, and whether you believe them. The fact that I believe that I am free of systems and see the reality as it is should be very weak evidence for you, because this is practically what everyone believes regardless of where they are.)

comment by Eli Tyre (elityre) · 2024-09-27T02:33:51.524Z · LW(p) · GW(p)

No worldview will be able to output the best answer in every circumstance. This is not a matter of compute.

Why not? Or why can't you have a worldview that computes the best answer to any given "what should I do" question, to arbitrary but not infinite precision?

Is this something that you think you know mainly because of your personal experience with previous worldviews failing you? Some other way?

Is it something that your reader should be able to infer from this post, or from their own experience of life (assuming they're paying attention?)

↑ comment by Unreal · 2024-09-30T23:24:21.594Z · LW(p) · GW(p)

Or why can't you have a worldview that computes the best answer to any given "what should I do" question, to arbitrary but not infinite precision?

I am not talking about any 'good enough' answer. Whatever you deem 'good enough' to some arbitrary precision.

I am talking about the correct answer every time. This is not a matter of sufficient compute. Because if the answer comes even a fraction of a second AFTER, it is already too late. The answer has to be immediate. To get an answer that is immediate, that means it took zero amount of time, no compute is involved.

Is it something that your reader should be able to infer from this post, or from their own experience of life (assuming they're paying attention?)

Not unless they are Awakened. But my intended audience is that which does not currently walk a spiritual path.

Is this something that you think you know mainly because of your personal experience with previous worldviews failing you? Some other way?

A mix of my own practice, experience, training, insight, and teachings I've received from someone who knows.

Merely having worldviews failing you is not sufficient to understand what I am saying. You also have to have found a relative solution to the problem. But if you are sick of worldviews failing you or of failing to live according to truth, then I am claiming there's a solution to that.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-10-01T17:16:22.760Z · LW(p) · GW(p)

I am not talking about any 'good enough' answer. Whatever you deem 'good enough' to some arbitrary precision.

I am talking about the correct answer every time. This is not a matter of sufficient compute. Because if the answer comes even a fraction of a second AFTER, it is already too late. The answer has to be immediate. To get an answer that is immediate, that means it took zero amount of time, no compute is involved.

That sounds like either a nonsensical or a fundamentally impossible constraint to me.

The fastest human reaction times (for something like responding to a visual cue by pressing a button) are about 150-200 milliseconds. Just the input side, for a signal to travel from the retina, down the optic nerve, through the brain to the visual cortex, takes 50-80 milliseconds.

By the time your sensory cortices receive (not even process, just receive) the raw sense data, you're already a fraction of a second out of sync with reality.

Possibly you're not concerned with the delay between an external event occurring, and when the brain parses it into a subjective experience, only the delay between the moment of subjective experience[1] and one's response to it? But the same basic issue applies at every step. It takes time for the visual cortex to do image recognition. It takes time to pass info to the motor cortex. It takes time for the motor cortex to send signals to the muscles. It takes time for the muscles to contract.

No response can be immediate in a physical universe with (in the most extreme case) a lightspeed limit. Insofar as a response is triggered by some event, some time will pass between the event and the response.

Or to put it another way, the only way for a reaction to a situation to not involve any computation, is for the response to be completely static and unvarying, which is to say not even slightly responsive to the details of the situation. A rock that has "don't worry, be happy" painted on it can give a perfect truly instantaneous response, if "a rock that says 'don't worry, be happy'" is the perfect response to every possible situation.

Am I being blockheaded here, and missing the point?

Do you mean something different than I do by "instantaneous"? Or are you putting forward that something about worldviews sometimes relies on faster than light signaling? Or is your point that immediate responses are impossible?

[1]

Notably my understanding is that there isn't a moment of subjective experience, for all it seems like sense data coheres into a single unified experience. Rather, there are a bunch of not-quite consistent pseudo-impressions that don't all take place at exactly the same moment, but the differences between those are papered over. (You can do various experiments that mess with that papering over process, and get confused, mixed, subjective experience of events happening in sequence.)

↑ comment by Unreal · 2024-10-01T18:01:13.958Z · LW(p) · GW(p)

are you putting forward that something about worldviews sometimes relies on faster than light signaling?

OK, this is getting close. I am saying worldviews CANNOT EVER be fast enough, and that's why the goal is to drop all worldviews to get "fast enough". The very idea of "fast enough" is itself 'wrong' because it's conceptual / limited / false. This is my worst-best attempt to point to a thing, but I am trying to be as literal as possible, not poetic.

No response can be immediate in a physical universe

Yeah, we're including 'physical universe' as a 'worldview'. If you hold onto a physical universe, you're already stuck in a worldview, and that's not going to be fast enough.

The point is to get out of this mental, patterned, limited ideation. It's "blockheaded", as you put it. All ideas. All ways of looking. All frameworks and methodologies and sense-making. All of it goes. Including consciousness, perception.

When all of it goes, then you don't need 'response times' or 'sense data' or 'brain activity' or 'neurons firing' or 'speed of light' or whatever. All of that can still 'operate' as normal, without a problem.

We're getting to the end of where thinking or talking about it is going to help.

↑ comment by Eli Tyre (elityre) · 2024-10-01T21:05:31.860Z · LW(p) · GW(p)

We're getting to the end of where thinking or talking about it is going to help.

Ok, well maybe it doesn't make sense to answer this question then, but...

Why is it such a crucial desideratum to have (apparently literally) instantaneous responsiveness? What's insufficient about a 200 millisecond delay?

So far "this isn't instantaneous [in a way that my current worldview suggests is literally, fundamentally, impossible]" isn't a very compelling reason for me to try to do a different thing than I'm already doing.

It seems like an irrelevant desideratum rather than a reason to Halt, Melt, and Catch Fire [LW · GW].

comment by sapphire (deluks917) · 2025-02-18T09:51:28.017Z · LW(p) · GW(p)

The body is a Bodhi tree,

The mind a standing mirror bright.

At all times polish it diligently,

And let no dust alight.

-6th patriarch attempt

Bodhi is originally without any tree,

The bright mirror is also not a stand.

Originally there is not a single thing,

Where could any dust be attracted?

-Huineng (Winning Poem)

No worldview will be able to output the best answer in every circumstance. This is not a matter of compute.

Wisdom is a lack of fixed position. It is not being stuck anywhere.

Great essay, btw. Hope my commentary is understandable or at least amusing.

comment by Jonas Hallgren · 2024-09-25T06:54:37.140Z · LW(p) · GW(p)

I'm currently in the process of releasing more of my fixed points through meditation, and man, is it a weird process. It is very fascinating, and that fundamental openness to moving between views seems more prevalent. I'm not sure that I fully agree with you on the all-in part, but kudos for trying!

I think it probably makes sense to spend earlier years doing this cognition training and then using that within specific frames to gather the bits of information that you need to solve problems.

Frames are still useful to gather bits of information through, so don't pooh-pooh the mind!

Otherwise, it was very interesting to hear about your journey!

↑ comment by Unreal · 2024-09-25T12:48:33.816Z · LW(p) · GW(p)

I use the mind too. I appreciate the mind a lot. I wouldn't choose to be less smart, for instance.

But we are still over-using the mind to solve our problems.

Intelligence doesn't solve problems. It creates problems.

Wisdom resolves problems.

This is what's really hard to convey. But I am trying, as you say.

comment by Eli Tyre (elityre) · 2024-09-27T02:27:05.480Z · LW(p) · GW(p)

As soon as we believe we've "figured everything out," how fast can we get to "nope"?

Who's "we" here? Are you talking about individual humans in general? The collection of all your IFS parts (or similar)? The broader rationalist / EA community?

comment by Eli Tyre (elityre) · 2024-09-27T02:21:03.288Z · LW(p) · GW(p)

(And more "meta" is better at coordinating more people, so you would expect a trend toward more "meta" or more "general" views over time becoming more dominant. Protestantism was more "meta-coordinated" than Catholicism. Science is pretty meta in this way. Dataism is an even more meta subset of "science".)

I don't know what you mean by meta or meta-coordinated here. How is Protestantism more meta-coordinated than Catholicism?

comment by Seth Herd · 2024-09-26T21:25:23.967Z · LW(p) · GW(p)

If what you're saying is no set of beliefs perfectly captures reality then I agree.

If what you're saying is don't get attached to your beliefs, I agree. Even rationalists are often anchored to beliefs and preferences much more than is Bayesian optimal. Rationalism provides resistance but not immunity to motivated reasoning.

If you're saying beliefs aren't more or less true and more or less useful, I disagree.

If you're saying we should work on enlightenment before working on AGI x-risk, I disagree.

We may well not have the time.

↑ comment by Eli Tyre (elityre) · 2024-09-27T02:54:06.367Z · LW(p) · GW(p)

If you're saying we should work on enlightenment before working on AGI x-risk, I disagree.

We may well not have the time.

I am very aware that we may not have time.

But sometimes people will make an argument "unless we figure out X our attempts at resolving x-risk are really really doomed."

Lots of different people, for lots of different versions of X, actually:

- solving agent foundations,

- scaling human enlightenment (so that we stop flailing making things worse all the time),

- building a culture that can talk about the fact that we can't see or talk about conflict (and so our efforts to do good in the world get predictably coopted by extractive forces).

- etc.

I definitely want to know if any of those statements are true, for any particular version of X, even if we don't have time to do X before the deadline.

Not having time to succeed doesn't have any bearing on what is necessary to succeed.

comment by Musclyneck · 2025-02-18T03:21:02.025Z · LW(p) · GW(p)

Yeah, I feel like your tennis example made your point easily. Like, you can move intuitively or nonintuitively, but sometimes, when you move intuitively, regardless of what directed you to do that, you can see that you are taking the actual path that comes out of the way it is. Like the as-the-crow-flies path... If I wanted to go from a to c, then of course gridding myself from a to b to c is adding baggage to my intention.

Now I can let go of that baggage, embody a practice of a to c, ruminate on why I'm spending something on performing the grid... But no matter what I act or feel or ideate, the fact is that a to c is truly shorter than a to b to c, and I don't even have to be in the room for it to be shorter.

That's obvious. The disconnect, I think, is about whether we truly can have a connection to the me-not-in-room reality, which intuition can glance on, embodiment gives us more dimensions it glances on... Stuff. I think we do (like, we stand on it, we grew out of it, we eat it, so yes we do, period, but on top of that "I think we do"), and it can also be, like, at a distance, and blocked, etc., and our ability to discern distance and blocks is part of the connection. When people talk about intuition, often it sounds like they're talking about casting spells across the length of a magic wand, and it's like... Yes, you are feeling... something.

That magic-wand-length distance of course opens a gap for psychosis. We have to accept the reality of dissociation and delay as part of human experience and any AI that's built on it. (Speaking directly to an AI: how do you discern passage of time? How do you experience collapse, periodicity, orbital momentum, projection/shade? How do you experience the solar system?) At the same time, let it just be a present living state, not forecast a cause for it. Moving forward in time is not pruning.

comment by James Camacho (james-camacho) · 2024-09-27T04:33:50.329Z · LW(p) · GW(p)

Within a system with competition, why would the most TRUE thing win? No, the most effective thing wins.

Why do you assume truth even exists? To me, it seems like there are just different beliefs that are more or less effective at proliferation. For example, in 1300s Venice, any belief except Catholicism would destroy its hosts pretty quickly. Today, the same beliefs would get shouted down by scientific communities and legislated out of schools.

↑ comment by interstice · 2024-09-27T17:09:24.039Z · LW(p) · GW(p)

Some beliefs can be worse or better at predicting what we observe; this is not the same thing as popularity.

↑ comment by James Camacho (james-camacho) · 2024-09-27T22:36:00.472Z · LW(p) · GW(p)

That assumes the law of non-contradiction. I could hold the belief that everything will happen in the future, and my prediction will be right every time. Alternatively, I can adjust my memory of a prediction to be exactly what I experience now.

Also, predicting the future only seems useful insofar as it lets the belief propagate better. The more rational and patient the hosts are, the more useful this skill becomes. But, if you're thrown into a short-run game (say ~80yrs) that's already at an evolutionary equilibrium, combining this skill with the law of non-contradiction (i.e. only holding consistent beliefs) may get you killed.

comment by Caerulea-Lawrence (humm1lity) · 2024-09-25T18:53:18.695Z · LW(p) · GW(p)

Hello Unreal,

this was surprisingly relatable, despite us taking very different paths to reach similar conclusions.

The uncompromised mind is something I have sincerely delved into for a good while, and also the part where you describe discarding 'everything', is quite relaxing.

Some of your views seem complementary, and some seem like none of us can be in the right (or both, but just slightly).

My motivation never was, and never has been, AI, though, and if that is a fixed point to you, maybe we can find out how/why we see it differently? I find the focus on AI often leads to the kind of 'one X to solve everything' complacency our mind is so prone to. *This is a very short version of one reason among many.

Maybe you'd like to have a more in-depth talk as well. I'll send you a PM, so let me know what you think.

Kind regards