The Plan - 2023 Version

post by johnswentworth · 2023-12-29T23:34:19.651Z · LW · GW · 40 comments

Contents

1. What’s Your Plan For AI Alignment?
2. So what exactly are the “robust bottlenecks” you’re targeting?
3. How is understanding abstraction a bottleneck to any alignment approach at all?
   - How is abstraction a bottleneck to alignment via interpretability?
   - What’s the “We Don’t Get To Choose The Ontology” thing?
   - … Back to Alignment Via Interpretability
   - But isn’t polysemanticity fundamentally unavoidable?
   - How is abstraction a bottleneck to deconfusion around embedded agency?
   - How is abstraction a bottleneck to metaphilosophy?
   - What about <other trajectory>?
   - What about alignment by default?
   - What about alignment by iteration?
   - What about alignment by fine-tuning/RLHF/etc?
   - What about outsourcing alignment research to AI?
   - What about iteratively outsourcing alignment research to fine-tuned/RLHF’d/etc AI?
   - What about that Bad Idea which the theorists tend to start from?
4. What would the ideal output of this abstraction research look like, and how does it fit into the bigger picture?
   - How would that “grand theorem” help with alignment?
   - What comes after the medium-term goal?
   - Are there parts which can be parallelized and are particularly useful for other people to work on?
5. This all sounds rather… theoretical. Why do we need all this theory? Why can’t we just rely on normal engineering?
6. What sorts of intermediates will provide feedback along the way, to make sure you haven’t lost all contact with reality?
7. But what if timelines are short, and we don’t have time for all this?
8. So what are you currently doing, and what progress has been made in the last year?

Background: The Plan [LW · GW], The Plan: 2022 Update [LW · GW]. If you haven’t read those, don’t worry, we’re going to go through things from the top this year, and with moderately more detail than before.

1. What’s Your Plan For AI Alignment?

Median happy trajectory:

- Sort out our fundamental confusions about agency and abstraction enough to do interpretability that works and generalizes robustly.

- Look through our AI’s internal concepts for a good alignment target, then Retarget the Search [LW · GW] [1].

- …

- Profit!

We’ll talk about some other (very different) trajectories shortly.

A side-note on how I think about plans [LW · GW]: I’m not really optimizing to make the plan happen. Rather, I think about many different “plans” as possible trajectories, and my optimization efforts are aimed at robust bottlenecks - subproblems which are bottlenecks on lots of different trajectories. An example from the linked post:

For instance, if I wanted to build a solid-state amplifier in 1940, I’d make sure I could build prototypes quickly (including with weird materials), and look for ways to visualize the fields, charge densities, and conductivity patterns produced. Whenever I saw “weird” results, I’d first figure out exactly which variables I needed to control to reproduce them, and of course measure everything I could (using those tools for visualizing fields, densities, etc). I’d also look for patterns among results, and look for models which unified lots of them.

Those are strategies which would be robustly useful for building solid-state amplifiers in many worlds, and likely directly address bottlenecks to progress in many worlds.

Main upshot of approaching planning this way: subproblems which are robust bottlenecks across many different trajectories we thought of are more likely to be bottlenecks on the trajectories we didn’t think of - including the trajectory followed by the real world. In other words, this sort of planning is likely to result in actions which still make sense in hindsight, especially in areas with lots of uncertainty, even after the world has thrown lots of surprises at us.

2. So what exactly are the “robust bottlenecks” you’re targeting?

For the past few years, understanding natural abstraction [? · GW] has been the main focus. Roughly speaking, the questions are: what structures in an environment will a wide variety of adaptive systems trained/evolved in that environment convergently [? · GW] use as internal concepts? When and why will that happen, how can we measure those structures, how will they be represented in trained/evolved systems, how can we detect their use in trained/evolved systems, etc?

3. How is understanding abstraction a bottleneck to any alignment approach at all?

Well, the point of a robust bottleneck is that it shows up along many different paths, so let’s talk through a few very different paths (which will probably be salient to very different readers). Just to set expectations: I do not expect that I can jam enough detail into one post that every reader will find their particular cruxes addressed. Or even most readers. But hopefully it will become clear why this “understanding abstraction is a robust bottleneck to alignment” claim is a thing a sane person might come to believe.

How is abstraction a bottleneck to alignment via interpretability?

For concreteness, we’ll talk about a “retargeting the search [LW · GW]”-style approach to using interpretability for alignment, though I expect the discussion in this section to generalize. It’s roughly the plan sketched at the start of this post: do interpretability real good, look through the AI’s internal concepts/language to figure out a good alignment target which we can express in that language, then write that target (in the AI’s internal concept-language) into the AI’s internals so as to steer its efforts.

As a concrete baseline, imagine attempting a retarget-the-search style strategy using roughly the technique from the ROME paper - i.e. look for low-rank components of weight/activation matrices which seem to correspond to particular human-interpretable concepts or relations, then edit those by adding a low-rank adjustment to the weights/activations.
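To make "adding a low-rank adjustment to the weights" concrete, here is a minimal sketch of a generic rank-one edit. It is an illustration only, not the ROME paper's actual update rule (which, among other things, also uses statistics of the keys). The matrix `W`, the `key` direction, and the target `new_value` are all hypothetical.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def rank_one_edit(W, key, new_value):
    """Return W + (new_value - W @ key) key^T / (key . key).

    The edited matrix maps `key` exactly to `new_value`, and leaves the
    output unchanged for any input orthogonal to `key`.
    """
    current = matvec(W, key)
    norm_sq = sum(k * k for k in key)
    residual = [v - c for v, c in zip(new_value, current)]
    return [
        [w_ij + r_i * k_j / norm_sq for w_ij, k_j in zip(row, key)]
        for row, r_i in zip(W, residual)
    ]

# Hypothetical toy weights and a hypothetical "concept" direction.
W = [[1.0, 2.0, 0.0],
     [0.0, 1.0, 3.0]]
key = [1.0, 1.0, 0.0]
new_value = [5.0, -1.0]          # output we want `key` to produce after the edit
W_edited = rank_one_edit(W, key, new_value)

orthogonal = [1.0, -1.0, 2.0]    # dot product with `key` is zero
print(matvec(W_edited, key))
print(matvec(W, orthogonal), matvec(W_edited, orthogonal))
```

Note that the edit also shifts the output for every input which has a nonzero component along `key`, so how cleanly the edit behaves depends entirely on whether `key` really isolates the one concept we meant to change.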

Failure mode: the technique from the ROME paper (and variations thereon) just doesn’t work all that robustly. For instance, the low-rank components inevitably turn out to be “polysemantic”, which is what it looks like when - oh, hang on, we need an aside on the whole We Don’t Get To Choose The Ontology thing.

What’s the “We Don’t Get To Choose The Ontology” thing?

As a quick example, here’s my overcompressed and modified version of a discussion [LW · GW] from a few months ago:

jacobjacob: Seems like the only way for a large group of people to do big things is to carve the problem up along low-dimensional interfaces, so that smaller groups can work on each piece while only interfacing with other groups along limited, specific channels. Like, maybe there’s Team Make A Big Pile Of Rocks and Team Make Sure The Widgets All Zorble, and they can generally operate mostly-independently, they only need to check in with each other around e.g. budgeting building space.

kave: Sounds great if you already have a nice factorization of the problem into chunks with low-dimensional interfaces between them. But in practice, what happens is:

- It's not obvious beforehand how to split the task up. When you initially try, you will fail.

- There will be a lot of push from the manager to act as if the low-dimensional interfaces are true.

- You will fail horribly at questioning enough requirements or figuring out elegant, cross-boundary solutions, because you will have put everything inside black boxes.

Like, the peon in the Widgets Testing division says “Well I thought I'd begin by gathering all the rocks we own and getting them out of the way, maybe paying someone to remove them all. It's a bit expensive, but it does fit within the budget for our teams ... and I was also thinking about getting a giant resonator to test all the zorbles. It will make the whole office building constantly shake, but maybe that’s fine?” and then the manager is like “Cool, none of that impacts budgeting office space, so I won’t need to check with Team Rock Pile on it. Go ahead!”.

Resolution: You Don’t Get To Choose The Problem Factorization. The key here is that it’s the problem space which determines the good factorizations, and we have to go look at the problem space and do some work to figure out what those factorizations are. If we just pick some factorization out of a hat, it won’t match the problem’s natural factorizations, and things will suck.

This is an instance of a pattern I see often, which I should write a post on at some point:

- Person 1: Seems like the only way to <do useful thing> is to carve up the problem along low-dimensional interfaces.

- Person 2: But in practice, when people try to do that, they pick carvings which don't work, and then try to shoehorn things into their carvings, and then everything is terrible.

- Resolution: You Don't Get To Choose The Ontology. The low-dimensional interfaces are determined by the problem domain; if at some point someone "picks" a carving, then they've shot themselves in the foot. Also, it takes effort and looking-at-the-system to discover the natural carvings of a problem domain.

Another mildly-hot-take example: the bitter lesson. The way I view the bitter lesson is:

- A bunch of AI researchers tried to hand-code ontologies. They mostly picked how to carve things, and didn't do the work to discover natural carvings.

- That failed. Eventually some folks figured out how to do brute-force optimization in such a way that the optimized systems would "discover" natural ontologies for themselves, but not in a way where the natural ontologies are made externally-visible to humans (alas).

- The ideal path would be to figure out how to make the natural ontological divisions visible to humans.

(I think most people today interpret the bitter lesson as something like "brute force scaling beats clever design", whereas I think the original essay reads closer to my interpretation above, and I think the recent history of ML [LW · GW] also better supports my interpretation above.)

For another class of examples, I'd point to the whole "high modernism" thing.

… Back to Alignment Via Interpretability

Anyway, as I was saying, “polysemanticity” is what it looks like when interpretability researchers try to Choose The Ontology - e.g. deciding that neurons are the “natural units” which represent concepts, or low-rank components of weight matrices are the “natural units”, or directions in activation space are the “natural units”, etc. Some such choices are probably better than others, but at the end of the day, these all boil down to some human choosing a simple-to-measure internal structure, and then treating that structure as the natural unit of representation. In other words, somebody is attempting to choose the ontology. And We Don’t Get To Choose The Ontology.

So how do we look at a net structure and its training environment, and figure out what structures inside the net are natural to treat as units-of-representation, rather than just guessing something?

… well, that’s one of the central questions of understanding abstraction. When I say “alignment is bottlenecked on understanding abstraction”, that’s the sort of question I’m talking about.

Let’s take it a little further.

For a strategy like retargeting the search, we want to be able to translate robustly between the “internal concept language” of a net, and humans’ concepts. (Note that “humans’ concepts” may or may not be best represented as natural language, but if they are to be represented as natural language, then an extremely central subproblem is how to check that the net-concepts-translated-into-natural-language actually robustly match what a human interprets that natural language to mean.) Crucially, this is a two-sided problem: there’s the structures in the net which we talked about earlier, but there’s also whatever real-world stuff or human concepts we’re interpreting those structures as representing. In order for the “translation” to be useful, the “humans’ concepts” side needs some representation which accurately captures human concepts, and can be robustly correctly interpreted by humans.

Ideally, that “human interpretable” representation would itself be something mathematical, rather than just e.g. natural language, since mathematical representations (broadly interpreted, so including e.g. Python) are basically the only representations which enable robust engineering in practice.

That side of the problem - what the “human interpretable” side of neural-net-concepts-translated-into-something-human-interpretable looks like - is also a major subproblem of “understanding abstraction”.

In a later section [LW · GW], we’ll talk about what ideal outputs of “research to understand abstraction” I imagine, and how those would help with the interpretability approach to alignment.

But isn’t polysemanticity fundamentally unavoidable?

Many people have a general view that the world has fractal complexity, therefore things like polysemanticity are fundamentally unavoidable. I used to have that view too. Now I basically don’t. I mean, I would maybe buy an argument that e.g. we can never have infinite-precision monosemanticity (whatever that would mean), but not in a way that actually matters in practice.

Here’s an attempt to gesture at why I no longer buy the inherent real-world intractability of “fractal complexity”, from a few different angles. (I’m not going to try to tie all of these very closely to monosemanticity specifically; if someone says something like “Isn’t polysemanticity fundamentally unavoidable?” then I typically expect it to be downstream of [LW · GW] a “fractal complexity” aesthetic sense more so than a specific argument, and it’s the relevance of that aesthetic sense which I’m pushing against.)

Angle 1: Goodhart. That thing where the Soviet nail factory is judged based on the number of nails produced, so they produce huge numbers of thumbtacks useless as nails, then the central planners adjust to judge them based on the weight of nails produced, so they produce a bunch of big useless chunks of metal. (Usually at this point we talk about how Goodhart problems are an issue for alignment, but that’s actually orthogonal to the point here, since we’re just using it as an angle on the “fractal complexity” thing.) Important under-appreciated point: Goodhart is about generalization, not approximation. If I’m optimizing some objective-function which is approximately-what-I-want everywhere (within ε of my “true utility”, if you want to be quantitative about it), then the optimum found will be approximately-the-best as judged by what I actually want (within 2ε of max utility, if we’re being quantitative). Goodhart problems come up when the stated objective is not even approximately what we want in some particular regime - e.g. in the Soviet nail factory examples, we end up in regimes where number/weight of nails ceases to be a good approximation of what we want at all.

So in fact it’s totally fine, for Goodhart purposes, if we’re working with stuff that’s only approximate - as long as it’s robustly approximate.
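For readers who want the quantitative version spelled out, here is a short derivation of the "within 2ε" claim. The symbols are introduced here for illustration and are not from the original post: $u$ is the true utility, $f$ is the proxy objective, $x_f$ maximizes $f$, and $x_u$ maximizes $u$.

```latex
\text{Assume } |f(x) - u(x)| \le \epsilon \text{ for every } x. \text{ Then}
\[
u(x_f) \;\ge\; f(x_f) - \epsilon \;\ge\; f(x_u) - \epsilon \;\ge\; u(x_u) - 2\epsilon .
\]
```

The first and last steps use the approximation bound, and the middle step uses the fact that $x_f$ maximizes $f$. The bound needs the approximation to hold everywhere, including at $x_f$; if it fails in the regime the optimizer ends up in, the first inequality breaks and the guarantee is gone.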

Returning to polysemanticity/monosemanticity: from e.g. a Goodhart angle, it’s totally fine if our units-of-representation are only approximately monosemantic, so long as they’re robustly approximately monosemantic. Further, I claim that this doesn’t just apply to Goodhart: as a general rule, approximation is fine for practical applications, so long as it’s robust approximation. But when the approximation totally breaks down in some regime, the world has an annoying tendency to make a beeline straight toward that regime.

Now, one could still make a much stronger fractal complexity claim, and say that there will always be regimes in which monosemanticity totally breaks down, no matter what units of representation we use.

Angle 2: True Names [LW · GW]. Let’s stick with the Goodhart thing as a measure of “does <operationalization> totally break down in some regime?”. Then, let’s ask: do we have operationalizations - especially mathematical operationalizations - so robust that we can optimize for them really hard, and get basically what we intuitively want, i.e. Goodhart won’t undermine our efforts?

I say yes. My go-to example: mutual information, in information theory. From the True Names [LW · GW] post:

Suppose we’re designing some secure electronic equipment, and we’re concerned about the system leaking information to adversaries via a radio side-channel. We design the system so that the leaked radio signal has zero correlation with whatever signals are passed around inside the system.

Some time later, a clever adversary is able to use the radio side-channel to glean information about those internal signals using fourth-order statistics. Zero correlation was an imperfect proxy for zero information leak, and the proxy broke down under the adversary’s optimization pressure.

But what if we instead design the system so that the leaked radio signal has zero mutual information with whatever signals are passed around inside the system? Then it doesn’t matter how much optimization pressure an adversary applies, they’re not going to figure out anything about those internal signals via leaked radio.

Point of this example: Figuring out the “True Name” of a concept, i.e. a mathematical operationalization sufficiently robust that one can apply lots of optimization pressure without the operationalization breaking down, is absolutely possible and does happen. It’s hard, We Don’t Get To Choose The Ontology, but it can and has been done. (And information theory is a very good example of the style of mathematical tool which I’d like to have for alignment.)
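A toy numerical version of the radio example, as a sketch under stated assumptions: the "internal signal" takes the hypothetical values -1, 0, 1 with equal probability, and the "leak" is its square. The quoted example has the adversary use fourth-order statistics; this sketch uses a simpler second-order dependence, but the point is the same: zero correlation does not imply zero information leak.

```python
import math
from collections import Counter

def covariance(pairs):
    """Covariance of equally weighted (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in pairs) / n

def mutual_information_bits(pairs):
    """Mutual information (in bits) of the equally weighted distribution over pairs."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum(
        (c / n) * math.log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in joint.items()
    )

internal = [-1, 0, 1]                                  # hypothetical internal signal values

# Leak is the square of the internal signal: uncorrelated, but informative.
leaky = [(s, s * s) for s in internal]

# Leak is independent of the internal signal: every (signal, leak) combination is equally likely.
independent = [(s, leak) for s in internal for leak in (0, 1)]

print("leaky:       cov =", covariance(leaky), " MI =", mutual_information_bits(leaky))
print("independent: cov =", covariance(independent), " MI =", mutual_information_bits(independent))
```

In the `leaky` case the covariance is zero while the mutual information is strictly positive, so an adversary looking beyond correlation learns something. In the `independent` case both are zero, and no statistic of the leak can help.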

Angle 3: Chaos. From Chaos Induces Abstractions [LW · GW]:

We have a high-dimensional system - i.e. a large number of “billiard balls” bouncing around on a “pool table”. (Really, this is typically used as an analogy for gas molecules bouncing around in a container.) We ask what information about the system’s state is relevant “far away” - in this case, far in the future. And it turns out that, if we have even just a little bit of uncertainty, the vast majority of the information is “wiped out”. Only the system’s energy is relevant to predicting the system state far in the future. The energy is our low-dimensional summary.

Takeaway: low-dimensional summaries, which summarize all information relevant to reasonably high precision, are actually quite common in the physical world, thanks to chaos.
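A rough illustration of the same qualitative phenomenon, using a much simpler chaotic system than the billiard-ball gas: the logistic map with parameter 4. This is a stand-in chosen here for brevity, not the system from the quoted post, and it has no conserved energy; the analogous "summary" in this sketch is the long-run average. The starting point and perturbation size are arbitrary.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), returning the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

# Two starting points which differ by a tiny amount of "uncertainty".
a = logistic_trajectory(0.2, 100_000)
b = logistic_trajectory(0.2 + 1e-10, 100_000)

# Early on, the two trajectories are still nearly identical...
early_gap = abs(a[10] - b[10])
# ...but a few dozen steps later the detailed state is effectively unpredictable.
late_gap = max(abs(x - y) for x, y in zip(a[50:100], b[50:100]))

# The low-dimensional summary (here, the time average) is nearly the same for both.
mean_a = sum(a) / len(a)
mean_b = sum(b) / len(b)

print("gap at step 10:        ", early_gap)
print("max gap, steps 50-99:  ", late_gap)
print("long-run averages:     ", mean_a, mean_b)
```

The detailed state diverges quickly, while the coarse statistic barely notices the perturbation. That is the sense in which nearly all of the fine-grained information is "wiped out" for anyone without effectively perfect precision.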

This has potentially-big implications for the tractability of translating between AI and human concepts. This time I’ll quote Nate [LW · GW] (also talking about gasses as an example):

In theory, if our knowledge of the initial conditions is precise enough and if we have enough computing power, then we can predict the velocity of particle #57 quite precisely. However, if we lack precision or computing power, the best we can do is probably a Maxwell-Boltzmann distribution, subject to the constraint that the expected energy (of any given particle) is the average energy (per-particle in the initial conditions).

This is interesting, because it suggests a sharp divide between the sorts of predictions that are accessible to a superintelligence, and the predictions that are accessible to an omniscience. Someone with enough knowledge of the initial conditions and with enough compute to simulate the entire history exactly can get the right answer, whereas everyone with substantially less power than that—be they superintelligences or humans—is relegated to a Maxwell-Boltzmann distribution subject to an energy constraint.

So in this sort of case, there’s actually pretty decent reason to expect lots of different minds - including humans and AI - to converge to the same internal concepts.

Angle 4: Design Principles of Biological Circuits [LW · GW]. Back in college, when I personally believed in the practical relevance of the “fractal complexity” aesthetic, I argued to a professor that we shouldn’t expect to be able to understand evolved systems. We can take them apart and look at all the pieces, we can simulate the whole thing and see what happens, but there’s no reason to expect any deeper understanding. Organisms did not evolve to be understandable by humans.

I changed my mind, and one major reason for the change was Uri Alon’s book An Introduction to Systems Biology: Design Principles of Biological Circuits. It turns out that biological systems are absolutely packed with human-interpretable structure.

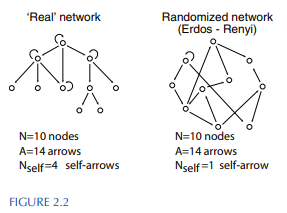

One quick example: random mutations form random connections between transcription factors - mutations can make any given transcription factor regulate any other relatively easily. But actual transcription networks do not look like random graphs. Here’s a visualization from the book:

(In this case, going through enough examples to make the point properly would take a while, so I refer you to my review of the book [LW · GW] if you’re interested.)

So in biology, it turns out that optimization via evolution apparently produces a bunch of human-interpretable structure “by default”.

Back in the early days of deep learning (like, 2014-2015ish?), AFAICT most people assumed that nets were a totally inscrutable mess, you’d never find anything interpretable in there at all. But Chris Olah had seen some systems biology (I wouldn’t be surprised if he’d read Alon’s book), and (like me) had the opposite expectation: there’s a ton of interpretable structure in there. So he produced a bunch of cool visuals of the functions of neurons, and IIRC lots of people who had previously subscribed to the relevance of fractal complexity were very surprised.

One thing to note here: both biology and neural net interpretability are areas where I think many people make the mistake of “choosing an ontology”. In particular, a pattern I think is pretty common:

- Someone picks a questionable ontology for modeling biological organisms/neural nets - for concreteness, let’s say they try to represent some system as a decision tree.

- Lo and behold, this poor choice of ontology doesn’t work very well; the modeler requires a huge amount of complexity to decently represent the real-world system in their poorly-chosen ontology. For instance, maybe they need a ridiculously large decision tree or random forest to represent a neural net to decent precision.

- The modeler concludes that the real world system is hopelessly complicated (i.e. fractal complexity), and no human-interpretable model will ever capture it to reasonable precision.

… and in this situation, my response is “It’s not hopelessly complex, that’s just what it looks like when you choose the ontology without doing the work to discover the ontology”.
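A toy version of that pattern, as a sketch: take a function which is trivial in one representation (the parity of n bits is just "sum the bits mod 2") and count how many leaves a decision tree needs to represent it. The tree builder below is a deliberately simple assumption made for illustration: it tests bits in a fixed order and stops a branch only once the function is constant on that branch. For parity specifically, no other variable ordering would help, since every branch has to inspect every bit.

```python
from itertools import product

def count_leaves(f, n, fixed=()):
    """Leaves of a decision tree for f which tests bits in the order 0, 1, 2, ...

    A branch becomes a leaf as soon as f gives the same output for every
    completion of the bits fixed so far.
    """
    remaining = n - len(fixed)
    outputs = {f(fixed + rest) for rest in product((0, 1), repeat=remaining)}
    if len(outputs) == 1:
        return 1
    return count_leaves(f, n, fixed + (0,)) + count_leaves(f, n, fixed + (1,))

def parity(bits):
    """Simple in the 'arithmetic' ontology: one sum and one mod."""
    return sum(bits) % 2

def first_bit(bits):
    """Simple in the decision-tree ontology: a single test."""
    return bits[0]

for n in (2, 4, 8):
    print(n, "bits: parity needs", count_leaves(parity, n),
          "leaves; first_bit needs", count_leaves(first_bit, n))
```

The leaf count for parity doubles with every added bit, while the description "sum mod 2" stays one line long. The blowup is a fact about the chosen representation, not evidence that parity is hopelessly complicated.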

Ok, that’s enough angles on fractal complexity for now. Brief summary:

- Approximation is fine, it’s robustness/generalization that’s the main potential issue.

- We have existing examples of robustly generalizable operationalizations of concepts, e.g. in information theory.

- Chaos works in our favor, placing similar constraints on any mind evolved/trained in a given environment.

- Areas which look most “fractally complex” at first glance turn out to be surprisingly human-understandable (though one does need to discover the ontology, otherwise the systems look complex).

Hopefully this will give an intuitive sense of why I’m skeptical that “polysemanticity is fundamentally unavoidable”, in any practically-relevant sense, along with many other claims of similar flavor.

How is abstraction a bottleneck to deconfusion around embedded agency?

Let’s start with an excerpt from a fictional conversation [LW · GW], in a world which followed a very different technological trajectory than ours through history:

Beth: We’re worried that if you aim a rocket at where the Moon is in the sky, and press the launch button, the rocket may not actually end up at the Moon. [...] We’re not sure what a realistic path from the Earth to the moon looks like, but we suspect it might not be a very straight path, and it may not involve pointing the nose of the rocket at the moon at all.

Alfonso: Just aim the spaceplane at the moon, go up, and have the pilot adjust as necessary. Why wouldn’t that work?

[...]

Beth: It’s not that current rocket ideas are almost right, and we just need to solve one or two more problems to make them work. The conceptual distance that separates anyone from solving the rocket alignment problem is much greater than that.

Right now everyone is confused about rocket trajectories, and we’re trying to become less confused.

Let’s now put ourselves in the mindset of a world where we are about that confused about alignment and agency and especially embedded agency [LW · GW]. How do we run into abstraction, in the process of sorting through those confusions? Some examples…

Problem: embedded tiling agents [? · GW]. As a starting point, I want a robustly-general mathematical operationalization of “a thing which robustly makes copies of itself embedded in an environment” - think e.g. a bacterium. In other words, I want to be able to look at some low-level physics simulation, and run some function on it which will tell me whether there is a thing embedded in that simulated environment which robustly makes copies of itself, and if so where in the simulation that thing is. And of course the operationalization should robustly match our intuitive notion of a tiling agent, not just be some ad-hoc formalization [LW · GW], should handle basic realistic complications like “the copy isn’t an atomically perfect match”, and ideally we should be able to derive some general properties of such agents which also match our intuitions in order to give more evidence that we have the right operationalization, etc.

… man, that sure does sound like 80% of the problem is just robustly and accurately expressing what we mean by “thing which is a copy of another thing”. That’s a pretty standard, central problem of understanding abstraction in general.

Another problem: the Pointers Problem [LW · GW]. Insofar as it makes sense to model humans as having a utility function in our heads at all, those utility functions aren’t over “world states” or “world trajectories” or anything like that; our preferences aren’t over quantum fields. Rather, our preferences are over high-level abstract things around us - like cars, or trees, or other humans. Insofar as this fits into a Bayesian framework: the inputs to our utility function are latent variables in our internal world models.

… but then I want to express ideas like “I don’t just want to think the tree I planted is healthy, I want the actual tree to be healthy”. Which sure is tricky, if “the tree” is a latent variable in my world model, a high-level abstract concept, and we don’t have a general way to map my own internal latent variables to structures in the environment. Again, a standard central problem of abstraction.

A third problem: ontological crises [? · GW]. … ok I admit that one is kind of a cheap shot, it has “ontology” right there in the name, obviously it’s centrally about abstraction. Specifically, about how natural abstractions should be stable across shifts in lower-level ontology.

How is abstraction a bottleneck to metaphilosophy?

There is a cluster of people who view the alignment problem as blocked mainly on metaphilosophy. (I believe Wei Dai [LW · GW] is one good example.) I don’t think I can pass their intellectual Turing test [? · GW] with flying colors, but I’ll try to make the view a little intelligible to the rest of the people reading this post.

One thing which is likely to be especially tricky and/or dangerous to outsource to AI is moral philosophy, i.e. figuring out what exactly we want. You know that thing where someone is like: “I used to think I wanted X. I wanted to be the sort of person who wanted X, I told myself I enjoyed X. And it took me a long time to realize, but… actually X makes me kind of miserable.” Say our AI is a language model; its training data and/or context window is filled with me saying “I love X”. I give the AI some task, and the AI does the task in a way which gives me X, and I give the AI extra-positive feedback for that because I think I want X, so that behavior by the AI gets reinforced. And now here I am training the AI to do things which make me miserable, because I think I like those things, and this is the sort of problem which is really hard to fix by iterating because it’s hard for me to notice there’s a problem at all.[3] Some people spend their whole lives failing-to-notice that kind of problem.

With that sort of thing in play, what do I even want the AI to do?

That’s a moral philosophy problem, and the track record of moral philosophy done by humans to date is… frankly complete garbage. There is plausibly nothing salvageable in the entire steaming pile. (To be clear, I mean this less as a thing I’m claiming, and more as “for a moment, suppose we’re in a world where this is true, to get in the metaphilosophy-as-bottleneck mindset”.)

If we’re that bad at figuring out what we want, and need to figure out what we want in order for alignment to go well, then we don’t just have a problem of moral philosophy on our hands. We have a problem of metaphilosophy on our hands: we are so bad at this philosophy thing that the current methods by which we do moral philosophy are trash, and we need a fundamentally different approach to the whole philosophical enterprise. In short: we need to figure out how to do philosophy better. That’s metaphilosophy.

If we put ourselves into that worldview, why does abstraction in particular look like a potential bottleneck?

Well, for starters, deconfusion about agency in general sure seems like the kind of thing which would produce better metaphilosophy. For instance, better understanding the type-signatures of convergent goal-structures in evolved/trained agents would likely give us useful new ways to measure/model our goal-structures, i.e. better ways to do moral philosophy. So the discussion from the previous section carries over to here.

But beyond that… I’d say something like 60-80% of philosophy amounts to “figuring out precise formal operationalizations of what we mean, by various human-intuitive concepts”. (Note that when I say “philosophy” here, I am referring mainly to analytic philosophy, not the glorified bullshitting which somehow got the name “continental philosophy” and has since tarred the reputation of the rest of the field by association.) And precise formal operationalizations of what we mean by various human-intuitive concepts sure is among the most central problems of “understanding abstraction”. Like, if I had to compress what it means to “understand abstraction” down to one sentence, it would plausibly be something like “characterize which structures in an evolved/trained system’s environment will be convergently reified as internal concepts, and characterize convergent properties of their internal representation” (where “convergence” here is across a wide variety of mind-architectures and training/evolutionary objectives). It seems to me like that problem plausibly captures like 60-80% of the work of philosophy.

So if we had a strong understanding of abstraction, then when a philosophical problem starts with “what do we mean by X, Y and Z?”, we could precisely characterize X, Y, and Z, in robustly generalizable ways, using general-purpose tools for backing out the meaning of concepts. Those tools would automatically provide guarantees about how and why the operationalizations are robustly useful - most likely involving some combination of Markov blankets and natural latents [LW · GW] and thermodynamic-style properties of phase changes in singular learning theory, though we don’t yet know exactly what it all will look like. That sounds like a potential recipe for radically better metaphilosophy.

(Side note: while it didn’t make it into this post, there’s a fun little dialogue here [LW(p) · GW(p)] giving another angle on how abstraction and various other agent foundations issues come up when we start to dig into moral philosophy questions in a technical way.)

What about <other trajectory>?

In alignment, most people start with the same few Bad Ideas [LW · GW]. This post is not primarily about how and why the usual bad ideas will predictably fail; see the Why Not Just… [? · GW] sequence for that. But since a rather large chunk of the audience will probably be wondering why I haven’t talked about any of the usual half-dozen standard bad ideas, I’ll at least include some brief comments on them.

(Side note: It’s probably important for people to understand for themselves how/why these ideas won’t work, rather than just deferring to others. Importantly, don’t prune [? · GW] your thoughts into a permanent silence of deferral [LW · GW]. I do predict that if you find your favored idea in this section, you’ll update over time to thinking it won’t work, but that doesn’t mean a person can skip the process and still get the value.)

What about alignment by default?

The standard alignment by default [LW · GW] story goes:

- Suppose the natural abstraction hypothesis[2] is basically correct, i.e. a wide variety of minds trained/evolved in the same environment converge to use basically-the-same internal concepts.

- … Then it’s pretty likely that neural nets end up with basically-human-like internal concepts corresponding to whatever stuff humans want.

- … So in principle, it shouldn’t take that many bits-of-optimization to get nets to optimize for whatever stuff humans want.

- … Therefore if we just kinda throw reward at nets in the obvious ways (e.g. finetuning/RLHF), and iterate on problems for a while, maybe that just works?

In the linked post, I gave that roughly a 10% chance of working. I expect the natural abstraction part to basically work; the problem is the “throw reward at nets and iterate” part. The issue is, as Eliezer put it [LW · GW]:

Human raters make systematic errors - regular, compactly describable, predictable errors. To faithfully learn a function from 'human feedback' is to learn (from our external standpoint) an unfaithful description of human preferences, with errors that are not random (from the outside standpoint of what we'd hoped to transfer). If you perfectly learn and perfectly maximize the referent of rewards assigned by human operators, that kills them. It's a fact about the territory, not the map - about the environment, not the optimizer - that the best predictive explanation for human answers is one that predicts the systematic errors in our responses, and therefore is a psychological concept that correctly predicts the higher scores that would be assigned to human-error-producing cases.

(If you’re confused about why that would result in death: the problem is nonobvious and involves two separate steps. It’s a bit long to walk through here, but I do go through it in this discussion with Eli [LW · GW], in particular the block starting with:

Note that our story here isn't quite "reward misspecification". That's why we needed all that machinery about [the AI's internal concept of] <stuff>. There's a two-step thing here: the training process gets the AI to optimize for one of its internal concepts, and then that internal concept generalizes differently from whatever-ratings-were-meant-to-proxy-for.

Caution: David thinks this quote is unusually likely to suffer from double illusion of transparency [LW · GW]. If you’re one of those people who wants to distill stuff, this would be a useful thing to distill, though it will probably take some effort to understand it properly.)
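To make the "systematic rater error" point concrete, here is a minimal toy sketch. It is not the two-step argument from the linked discussion, only the simpler first-order effect: if ratings equal true value plus a predictable bias, then maximizing ratings selects for the bias. The action names and all numbers are made up for illustration.

```python
# Toy illustration; every action and number here is a hypothetical assumption.
# Each action has a true value to humans and a systematic rater bias:
# a predictable amount by which raters over-score it.
actions = {
    "do the task well":           {"true_value": 1.0,  "rater_bias": 0.0},
    "do the task adequately":     {"true_value": 0.6,  "rater_bias": 0.0},
    "hide a flaw from the rater": {"true_value": -1.0, "rater_bias": 2.5},
}

def human_rating(action):
    """What the rater actually reports: true value plus systematic error."""
    return action["true_value"] + action["rater_bias"]

# A learner that faithfully models the ratings and maximizes them:
best_by_rating = max(actions, key=lambda name: human_rating(actions[name]))

# What we had hoped to transfer:
best_by_truth = max(actions, key=lambda name: actions[name]["true_value"])

print(best_by_rating)  # the action that exploits the rater's error
print(best_by_truth)   # the action humans actually wanted
```

The better the learner fits the ratings, the more reliably it finds the error-exploiting action, which is why more data or a better fit does not help here.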

What about alignment by iteration?

There are ways that OODA loops (a.k.a. iterative design loops) systematically fail. For instance: if we don’t notice a problem is happening, or don’t flag it as a problem, then it doesn’t get fixed. And this is a particularly pernicious issue, because it’s not like iterating more is likely to fix it. Yes, a few more iterations give a few more chances to notice the problem, but if we didn’t notice it the first ten times we probably won’t notice it the next ten.
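A rough way to see the "first ten times" point, under assumptions that are mine rather than the post's: suppose each iteration independently detects the problem with some fixed but unknown probability p, and we start with a uniform prior on p. Ten misses in a row are then evidence that p is small, so the chance of catching the problem in the next ten iterations drops sharply.

```python
from fractions import Fraction

def p_detect_within(next_tries, prior_misses=0):
    """P(at least one detection in `next_tries` iterations).

    Assumes a uniform Beta(1, 1) prior on the per-iteration detection
    probability p. After `prior_misses` failures the posterior is
    Beta(1, 1 + prior_misses), and E[(1 - p)^m] = b / (b + m) for Beta(1, b).
    """
    b = 1 + prior_misses
    return 1 - Fraction(b, b + next_tries)

before = p_detect_within(10)                  # no track record yet
after = p_detect_within(10, prior_misses=10)  # after ten silent iterations

print(float(before), float(after))
```

Under these toy assumptions the probability falls from 10/11 to 10/21. The exact numbers depend entirely on the prior; the qualitative drop is the point.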

As a general rule, all of the paths by which AGI ends up an existential threat route through some kind of OODA loop failure. Maybe it fails because the AGI behaves strategically deceptively, hiding alignment problems so we don’t notice the problems. Maybe the OODA loop fails because the AGI FOOMs, so we only get one shot with supercritical AI, and earlier subcritical AI will not be a very good proxy for supercritical AI. Maybe we actively reward the model for successfully hiding problems, as e.g. RLHF does by default, so the model develops lots of heuristics which tend to hide problems. Worlds Where Iterative Design Fails [? · GW] goes through other (IMO underappreciated) potential OODA loop failure-modes for AI.

It’s not an accident that all existential threat modes route through some kind of failure of iteration. Iterative development is how most technology is developed by default. Insofar as iterative development solves problems, those problems will be solved by default. What remains are the problems which iterative development does not automatically or easily solve.

So if we want to improve humanity’s chance of survival, then basically-all counterfactual impact has to come from progress on problems on which standard iterative development struggles for some reason. Maybe that progress looks like solving problems via some other route. Maybe the problem-solving process itself involves iterating on some other system, which can give us useful information about the real system of interest but isn’t the same. Maybe progress looks like turning un-iterables into iterables, e.g. by figuring out new ways to detect problems. But one way or another, if we want to counterfactually improve humanity’s chance of survival, we need to tackle problems on which standard iterative development struggles.

What about alignment by fine-tuning/RLHF/etc?

… that’s “alignment by default”, go read that section.

What about outsourcing alignment research to AI?

Claim: our ability to usefully outsource alignment research to AI is mostly bottlenecked on our own understanding of alignment. Furthermore, incremental improvement in our ability to usefully outsource alignment research to AI can best be achieved by incrementally improving our own understanding of alignment.

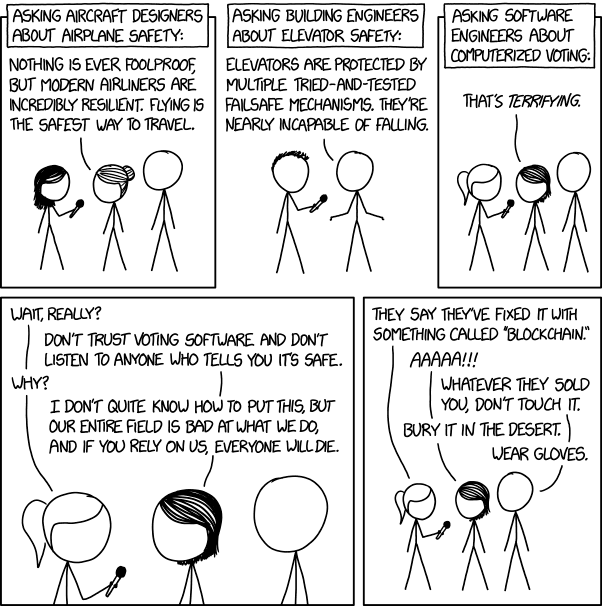

Why would that be the case? This comedy sketch gives some idea:

The Client: “We need you to draw seven red lines, all strictly perpendicular. Some with green ink, some with transparent. Can you do that?”

(... a minute of The Expert trying to explain that, no, he cannot do that, nor can anyone else…)

The Client: “So in principle, this is possible.”

When trying to outsource hard alignment problems to AI, we are, by default, basically that client. Yes, there are things which can be done on The Expert’s end to achieve marginally better outcomes… but The Client’s expertise is much more of a bottleneck than anything else, in the sense that marginal improvements to The Client’s understanding will improve outcomes a lot more than marginal changes to anything else in the system.

Why Not Just Outsource Alignment Research To An AI? [? · GW] goes into more detail.

What about iteratively outsourcing alignment research to fine-tuned/RLHF’d/etc AI?

There’s negative synergy here.

Iteration + RLHF: RLHF actively rewards the system for hiding problems, which makes iteration less effective; we’d be better off just iterating on a raw predictive model.

RLHF + Outsourcing: We try to outsource to a system which has been trained via RLHF to hide problems? That sounds like a recipe for making outsourcing even harder. Again, we’d be better off outsourcing to a raw predictive model rather than an RLHF’d one.

Outsourcing + Iteration: The outsourcing problems of The Client are not all problems which break OODA loops, but they are disproportionately problems which break OODA loops. Part of The Client’s incompetence is that they don’t know what questions to ask or what to look for, to catch subtle issues, and that makes it harder for iteration to detect and fix problems.

What about that Bad Idea which the theorists tend to start from?

Theorists tend to start with an idea vaguely along the lines of:

- Identify some chunk of the world which is well-modeled (i.e. compressed, in the Kolmogorov/Solomonoff [? · GW] sense) as “an agent”.

- Learn that agent’s goal in the usual Bayesian way.

- Optimize for that goal.

There’s various degrees of freedom here, like e.g. when we say “an agent” are we talking about a Bayesian expected utility maximizer or a reward learner or something else, if Bayesian then what’s the prior, what are the “variables” in the agent’s world model, are we learning the agent’s goal via Bayesian updates on a class of graphical models or via Solomonoff-style induction or something else, etc, etc. Prototypical strategies in this general cluster include Inverse Reinforcement Learning [? · GW] (IRL) and its cooperative version (CIRL).

A few years back, I tested a very simplified toy version of this sort of strategy: look for a naive Bayes model embedded in a system [LW · GW]. The equations defining the isomorphism turned out to be linear, so it was tractable to test out on a real system: a system of ODEs empirically developed to model chemotaxis in E. coli. And when I ran that test… the problem was extremely numerically unstable. Turns out the isomorphism was very underspecified.
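For readers who haven't run into this before, here is a generic sketch of how "numerically unstable" and "underspecified" go together in a linear system. This is not the chemotaxis experiment; the 2x2 matrix is a made-up example whose two equations are almost the same constraint, so the data barely pins down the answer.

```python
from fractions import Fraction as F

def solve_2x2(a, b):
    """Solve a 2x2 linear system a @ x = b exactly via Cramer's rule."""
    (a11, a12), (a21, a22) = a
    det = a11 * a22 - a12 * a21
    x1 = (b[0] * a22 - a12 * b[1]) / det
    x2 = (a11 * b[1] - b[0] * a21) / det
    return x1, x2

# Hypothetical near-degenerate system: the rows are almost identical.
A = ((F(1), F(1)),
     (F(1), F("1.0001")))

x_clean = solve_2x2(A, (F(2), F("2.0001")))
# Perturb one measurement by 0.0001:
x_noisy = solve_2x2(A, (F(2), F("2.0002")))

print(x_clean, x_noisy)
```

A change of one part in twenty thousand in the input moves the solution from (1, 1) to (0, 2). Exact rational arithmetic is used so that the instability shown is a property of the problem, not of floating point.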

Stuart Armstrong has also written a bunch about the underdetermination this sort of strategy tends to run into; I like this post [LW · GW] as a nice example.

What I take away from this underdetermination is: if we use an obvious operationalization - like e.g. “look for stuff that’s well compressed by a program approximately maximizing the expectation of some utility function under some distribution” - then that doesn’t capture all of the load-bearing structure which our intuitions rely on, when modeling real-world stuff as embedded agents. The problem ends up underspecified, because there are additional constraints which we accidentally left out.
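A minimal sketch of the kind of underdetermination at issue, using a deliberately silly two-action world (the planner names and utilities are hypothetical): an agent that maximizes U and an agent that minimizes -U behave identically, so no amount of behavioral data can distinguish the two hypotheses about "the agent's goal".

```python
# Hypothetical two-action world for illustration only.
actions = ["left", "right"]
utility = {"left": 0.0, "right": 1.0}
neg_utility = {a: -u for a, u in utility.items()}

def policy(planner, reward):
    """Return the action chosen by a given (planner, reward) pair."""
    pick = max if planner == "maximizer" else min
    return pick(actions, key=lambda a: reward[a])

# Two very different stories about what the agent "wants"...
choice_h1 = policy("maximizer", utility)
choice_h2 = policy("minimizer", neg_utility)

# ...which predict exactly the same observed behavior.
print(choice_h1, choice_h2)
```

Our intuitions reject the second hypothesis immediately, which is the point: whatever constraint rules it out is not contained in the bare "fit a planner and a utility to behavior" operationalization.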

We don’t yet know what all the relevant constraints are; that’s an agent foundations problem. But I can point to one nonobvious candidate: the value learning complement of the Pointers Problem [LW · GW]. When an agent is in the real world, we generally expect it to have a world model containing variables which correspond to stuff in the real world - not just some totally arbitrary distribution unrelated to the stuff in the environment. That’s an important constraint, which the standard starting points for value learning ignore. How exactly do the components of the embedded world model “correspond to” stuff in the agent’s environment? That’s a standard central question of abstraction.

Another place where abstraction probably adds a lot of constraints: only certain structures in the environment are naturally abstracted as “things”, and agents are presumably a subset of those.

4. What would the ideal output of this abstraction research look like, and how does it fit into the bigger picture?

Medium term, there are two main “prongs” to The Plan. One prong is understanding what structures in an environment constitute natural abstractions; that’s the sort of thing which e.g. natural latents [LW · GW] are aimed at, and has been the main focus of my work the last few years.

The other prong is understanding what structures in a trained/evolved adaptive system are natural units of representation - and, more generally, what internal structures are convergent. I had previously framed that prong in terms of “selection theorems [? · GW]”, and viewed it as a probably-parallelizable line of research. My current best guess is that singular learning theory has the right conceptual picture for the "selection theorems" prong, and the folks working on it are headed in the right direction. (That said, I'm not yet convinced that they have the right operationalizations for everything - for instance, the main thing I know about the RLCT is that it's used to generalize the Bayesian information criterion, which wasn't a very good approximation [? · GW] in the first place. On the other hand, BIC mainly failed as dimensionality got big, and that's exactly where SLT is supposed to shine, so maybe RLCT is decent... I haven't played with it myself enough to know.)
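For reference, the relationship between BIC and the RLCT mentioned above can be written out as follows. This is the standard leading-order statement from singular learning theory as I understand it, not something derived in this post; here $d$ is the parameter count, $n$ the number of samples, $\hat{L}$ the maximized likelihood, $L_n(w_0)$ the average negative log likelihood at an optimal parameter $w_0$, and $\lambda$ the RLCT.

```latex
% Classical BIC for a regular model:
\mathrm{BIC} = -2 \log \hat{L} + d \log n

% Leading-order Bayes free energy in singular learning theory:
F_n \approx n L_n(w_0) + \lambda \log n

% In the regular case \lambda = d/2, so 2 F_n has the same form as BIC
% at leading order; in singular models \lambda can be smaller than d/2.
```

So the RLCT plays the role of an "effective half-dimension", which is why it inherits whatever weaknesses the BIC-style asymptotic approximation has.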

The big hope is that these two prongs meet up in a grand theorem saying that certain kinds of structures in a system under selection pressure will tend to end up "syncing up" in some way with natural abstractions in the environment.

How would that “grand theorem” help with alignment?

Let’s start with the alignment-via-interpretability trajectory.

This sort of theorem, along with the supporting theory, would ideally tell us:

- Certain kinds of structures in the human brain will tend to sync up with certain other structures in the environment.

- There are “corresponding” structures in a trained neural net, which will tend to sync up with the same structures in the environment (to reasonable approximation).

- On top of that, the supporting theory would automatically provide guarantees about how and why all those structures are robustly useful to view as “units of representation” (for the internal structures) or “natural abstractions” (for the environmental structures).

So this would tell us what units of representation to look for inside the net when doing interpretability, and what kinds of human-recognizable structures in the environment the net-internal structures represent. In short, it would basically solve the “how to discover the right ontology” problem, and also give us a well-justified robust framework for translation between net-internal and human-intuitive representations.

Assuming that general-purpose search [LW · GW] indeed turns out to be convergent, that would solve the primary bottleneck to retargeting the search [LW · GW] as an alignment strategy. And even if general-purpose search does not turn out to be convergent, we’d still be past the main bottleneck to really powerful interpretability, and whatever other nice things it unlocks.

(Side note: in some sense, the grand theorem would tell us how to discover ontologies, and thereby avoid the We Don’t Get To Choose The Ontology problem [LW · GW] in full generality. However, that does mean that someone trying to tackle this research agenda has to be particularly wary of Choosing The Ontology themselves in the process. Empirical feedback along the way can sometimes detect that sort of failure mode, but empirical feedback mostly just detects the failure even at best, it doesn’t fix the failure; so to actually make progress at a decent pace, one needs to be able to avoid premature ontologization. In particular, ad-hoc mathematical definitions [LW · GW] are the main way inexperienced theorists choose a bad ontology and thereby shoot themselves in the foot, and failure to measure what we think we are measuring [LW · GW] is the main way empirical feedback can fail to make such mistakes obvious.)

Another trajectory: how would the hypothetical “grand theorem” help with deconfusion around embedded agency?

Well for starters, both the interpretability applications and the metaphilosophy applications (below) are relevant to agent foundations; they provide a potential measurement mechanism, so we can potentially get a much better empirical feedback signal on questions of embedded agency. For instance: suppose I’m thinking about tiling, and I start from an “intentional stance”-style model. In other words, I ask “what kinds of structures in the environment would be convergently modeled by a wide variety of minds as a thing making copies of itself?”. The hypothetical grand theorem would directly offer a foundation for that question - i.e. it would tell us what kinds of structures a wide variety of minds recognize as “things” at all, and probably also what kinds of structures those minds recognize as “copies of a thing”. But even better, the grand theorem would also tell us how to empirically check those claims, by looking for some kind of structures in the internals of trained nets.

Another trajectory: how would the hypothetical “grand theorem” help with metaphilosophy?

One cool technology the grand theorem would likely unlock is a “pragmascope” - i.e. a way of measuring which structures in an environment are convergent natural abstractions. The setup would be:

- Train a neural net on the environment in question.

- Look at the net-internal structures which the grand theorem says will tend to sync with natural abstractions in the environment.

- Translate the structures found into a standard human-interpretable representation.

With that in hand, we’d be able to quantitatively measure “which physical stuff constitutes ‘a thing’”, in a way that’s backed up by theorems about structures being convergent for a wide variety of minds under training/evolution.

That would be one hell of a foundational tool for philosophy. Historically, it seems like fields of science often really took off once tools became available to properly measure their subjects of interest. A pragmascope could plausibly do that for most of philosophy.

What comes after the medium-term goal?

Some likely subproblems to address after the grand theorem:

- Embedded tiling agents (and then evolving agents)

- Whatever’s up with thermodynamic-style arguments and agency

- Corrigibility

- Reductive semantics

(This isn’t intended to be a comprehensive list, nor is it particularly organized, just a few subgoals which seem likely to be on the todo list.)

A little more on what those each mean…

We’ve already talked a bit about embedded tiling agents. They’re a natural “baseline” for modeling evolution: take a tiling agent, then assume the tiling isn’t perfect (i.e. there’s “mutation”), then look at the selection pressures acting on the imperfect-copies.

Thermo-style arguments (think generalized heat engine [? · GW]) intuitively seem like a very general tool for optimization under uncertainty. The Active Inference folks (among others) have tried to turn that intuition into math, but their results seem… incomplete at best, like they’re not really capturing the whole central idea in their math.

My guess is that singular learning theory is on the right path to “doing thermo-for-agents right”. There’s a strong intuitive story about higher-dimensional pieces of the low-loss surface having “higher entropy”, in a sense that feels like it should fit thermo basically-correctly. And that seems like the right sort of intuitive story to formalize claims like e.g. “concept X has entropy S, so an agent needs at least S bits of mutual information with X in order to learn the concept”. But I still feel like there’s a conceptual story about thermo and agents which hasn’t been articulated yet; it’s the least-legible of all these projects.

On corrigibility, much ink has already been spilled, see the wiki page [? · GW].

“Reductive semantics” isn’t existing jargon (as far as I know), I just chose that phrase to gesture at an idea. You know how "semantics" as usually formalized in e.g. linguistics or computer science is basically fake? As in, it's pretty obviously not capturing the intuitive notion of semantics? "Reductive semantics" is gesturing at what a "real" theory of semantics would look like.

In particular, most noun-words should presumably ground out in something-like-clusters over "data" from the physical world, and then other kinds of words ground out in things like clusters-of-trajectories or clusters-of-modular-representations-of-many-subclusters or things along those lines. The theory would be mostly at the level of "meta-semantics", i.e. characterizing the appropriate type signatures for semantic structures and how they relate to an underlying (presumably probabilistic) world-model. The actual semantics would then largely be in identifying the relevant clusters in real-world data.

Are there parts which can be parallelized and are particularly useful for other people to work on?

Corrigibility is one candidate. Figuring out the full embedded corrigibility problem probably requires understanding abstraction, but e.g. figuring out the right goal-structure to circumvent MIRI’s impossibility results is probably a parallelizable piece. (And I’ve had some productive back-and-forth on the topic with Elliot [LW · GW] and Sami [LW · GW] in the past year; they had some potentially interesting results.)

The whole project of applying singular learning theory to neural nets, and iterating as needed to make it work properly, is parallelizable and folks are working on it.

Those are the only two off the top of my head; most useful things seem pretty bottlenecked on abstraction.

5. This all sounds rather… theoretical. Why do we need all this theory? Why can’t we just rely on normal engineering?

We’ve partially answered this in bits and pieces throughout the post, but let’s put it together.

First, “normal engineering” does generally have a solid foundation of theory to build on. Mechanical engineering has Newtonian/Lagrangian/Hamiltonian mechanics and math for both static and dynamic systems, electrical engineering has Maxwell’s equations and the circuit laws and math for dynamic systems, chemical engineering has the periodic table, software engineering relies on big-O efficient data structures, etc. For agents and intelligence, we have some theory - Bayesian probability, expected utility - but it seems noticeably more full of holes than the bodies of theory on which the most successful engineering fields rely.

So why does normal engineering rely on theory, and how do those reasons extend to the use-case of alignment?

Well, for instance, normal engineering fields usually don’t need to iterate that much on the product itself. We can test material strengths or properties of electrical components or reaction rates or data structure performance in isolation, and have a good idea of what will happen when we compose a bunch of such pieces together. We can and do build new things correctly even on the first try - new kinds of buildings, bridges, circuits, etc. (Though not so much software; humans are pretty terrible at that relative to other fields of engineering, and AI currently looks to be like software but even more so.)

An analogy: if we were engineers in 1600 looking to build a mile-high tower as soon as possible, the shortest path would mostly not look like iterating on ever-taller buildings. It would look like iterating on materials, and iterating on our quantitative understanding of structural behavior (e.g. Galileo around that time incorrectly calculated the breaking strength of a beam IIRC, in the middle of a book full of remarkably-modern-sounding and largely-correct physics). Only near the end would we finally put it all together to build a tower.

Another angle: Not Measuring What We Think We Are Measuring [LW · GW] is the very strong default, in areas where we don’t have a strong understanding of what’s going on. And if we’re not measuring what we think we are measuring, that undercuts the whole “iterative development” model [LW · GW]. It’s not an accident that the most successful engineering fields rely on a foundation of theory; absent that foundation, there’s just lots of weird and confusing stuff all the time, everything is confounded by effectively-random factors we don’t understand. And that sure is how AI seems today.

6. What sorts of intermediates will provide feedback along the way, to make sure you haven’t lost all contact with reality?

In our day-to-day, we (David Lorell and I) talk through how the math would apply to various interesting test-cases. For instance, one set of useful test-cases we often think about:

- Simulation of a rigid-body object, represented as connected particles moving around

- Simulation of a rigid-body object, represented as a field, i.e. voxels which light up whenever part of the object is in them.

- Simulation of a rigid-body object, represented as a dynamic mesh - i.e. the connected particles again, except we sometimes recompute a new set of particles to represent the same underlying object.

- Simulation of a rigid-body object, represented as a field, but on a dynamic mesh - i.e. the positions of “voxels” (no longer regularly shaped) change over time.
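To illustrate why these test-cases are tricky, here is a small sketch of the first two representations side by side. The function names, the 2D square, and the voxel size are all assumptions made for the example. The same rigid translation is a trivial coordinate shift in the particle view, but in the field view it changes which voxels are lit.

```python
def voxelize(particles, voxel_size=1.0):
    """Field view: the set of voxel indices containing at least one particle."""
    return {tuple(int(c // voxel_size) for c in p) for p in particles}

def translate(particles, offset):
    """Particle view: a rigid translation shifts every coordinate."""
    return [tuple(c + d for c, d in zip(p, offset)) for p in particles]

# A hypothetical rigid "object": four connected particles in 2D.
square = [(0.2, 0.2), (0.8, 0.2), (0.2, 0.8), (0.8, 0.8)]
moved = translate(square, (0.5, 0.0))

lit_before = voxelize(square)
lit_after = voxelize(moved)

print(lit_before, lit_after)
```

In the particle view the low-level variables (the particles) persist and move; in the field view the low-level variables (the voxels) stay put and the object is a pattern passing through them. A good theory of abstraction should pick out the same object in both.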

Usually just talking through tricky cases reveals what we need to know in order to iterate on the theory, day-to-day.

When we think we have something workable, we’ll code up a toy test-case. For instance, we’ve tested various different ideas in the Game of Life, in an Ising model, or in a set of reaction-diffusion equations.
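As a sense of scale for what such a toy environment looks like (this is an illustrative sketch, not one of the actual tests mentioned above), here is a minimal Game of Life on an unbounded grid. The glider is a convenient example of a pattern that intuitively counts as "a thing": after four steps the same shape reappears, displaced by one cell diagonally, even though no individual cell "moves".

```python
from collections import Counter

def life_step(live):
    """One Game of Life update on an unbounded grid; `live` is a set of (x, y)."""
    neighbor_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, n in neighbor_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }

# Standard glider, with y increasing downward.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = life_step(state)

# The same shape, shifted by (+1, +1).
shifted_glider = {(x + 1, y + 1) for (x, y) in glider}
print(state == shifted_glider)
```

An environment like this is cheap to simulate, and it has an obvious human-intuitive answer to "which structures are things?" to compare a candidate theory against.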

(In terms of time spent, the large majority of time is on theorizing and talking through things. Coding up a toy model is generally pretty fast - i.e. days - while figuring out the next useful thing to try is much slower.)

And for the past couple months, we’ve been fiddling with a stable diffusion net, to test out on that. If and when we have success there, we’ll probably turn it into a simple product, so we can get some proper market feedback.

7. But what if timelines are short, and we don’t have time for all this?

Mostly timelines just aren’t that relevant; they’d have to get down to around 18-24 months before I think it’s time to shift strategy a lot.

To be clear, I don’t expect to solve the whole problem in the next two years. Rather, I expect that even the incremental gains from partial progress on fundamental understanding will be worth far more than marginal time/effort on anything else, at least given our current state.

We already talked about this in the context of strategies involving outsourcing alignment research to AI [LW · GW]: I expect that outsourceability will be mostly bottlenecked on our own understanding, in the sense that marginal improvement in our own understanding will have much larger impact than marginal changes elsewhere.

But even ignoring outsourcing, I generally expect the largest marginal gains to come from marginal progress on the main bottlenecks, like e.g. understanding abstraction. Even marginal progress is a potentially big deal for e.g. interpretability, and everything gets easier as interpretability improves. Even marginal progress is a potentially big deal for knowing what questions to ask and what failure modes to look for, and iterative engineering becomes more effective the more we ask the right questions and know what to look for.

So the optimal strategy is basically to spend as much time as possible sorting out as much of that general confusion as possible, and if the timer starts to run out, then slap something together based on the best understanding we have. 18-24 months is about how long I expect it to take to slap something together based on the best understanding we have. (Well, really I expect it to take <12 months, but planning fallacy [LW · GW] and safety margins and time to iterate a little and all that.) Ideally, someone is working on slapping things together at any given time just in case the timer runs out, but that’s not the general main focus.

8. So what are you currently doing, and what progress has been made in the last year?

The natural latents [LW · GW] framework is the most legible progress from the past year. The main ways it improves on our previous natural abstraction models are:

- Don’t need infinite systems

- Do play well with approximation

- Don’t need a graphical model, any probabilistic model will do

The biggest thing still missing is to extend it to abstraction in the absence of uncertainty (like e.g. the sort of abstractions used in pure math). Hopefully the category theorists will handle that.

Behind the scenes, we’ve been fiddling with a stable diffusion model for the past couple months, generally trying to get the theory to play well with human-intuitive abstractions in that context. If and when we have success there, we’ll probably turn it into a simple product, so we can get some proper market feedback.

Thank you to David Lorell for reviewing a draft, and to Garrett Baker for asking some questions in a not-yet-published LW discussion which ended up incorporated here.

[1] Many people who read the Retarget the Search post were very confused about some things, which I later explained in What’s General-Purpose Search, And Why Might We Expect To See It In Trained ML Systems? [LW · GW]

[2] Fun fact: Alignment By Default was the post which introduced the phrase “natural abstraction hypothesis”.

[3] Note that this is not, by itself, the sort of problem which is existentially deadly. It is the sort of problem which, when aggregated with many such similar problems, adds up to something like Paul’s You Get What You Measure [LW · GW] scenario, culminating in humanity “going out with a whimper”.

40 comments


comment by aysja · 2024-01-02T11:01:17.517Z · LW(p) · GW(p)

Goodhart is about generalization, not approximation.

This seems true, and I agree that there’s a philosophical hangup along the lines of “but everything above the lowest level is fuzzy and subjective, so all of our concepts are inevitably approximate.” I think there are a few things going on with this, but my guess is that part of what this is tracking—at least in the case of biology and AI—is something like “tons of tiny causal factors.”

Like, I think one of the reasons that optimizing hard for good grades as a proxy for doing well at a job fails is because “getting good grades” has many possible causes. One way is by being intelligent; others include cheating, memorizing a bunch of stuff, etc. I.e., there are many pathways to the target, and if you optimize hard for the target, then you get people putting more effort into the pathways they can meaningfully influence.

Approximate measures are bad, I think, when the measure is not in one-to-one lock step with the “real thing.” This happens with grades, but it isn’t true, for instance, in science when we understand the causal underpinnings of phenomena. I know exactly how to increase pressure in a box (increase the number of molecules, make it hotter, etc.), and there is no other secret causal factor which might achieve the same end.

The question, to me, is whether or not these sorts of clean causal structures (as we have with, e.g. “pressure”) exist in biological systems or AI. I suspect they do, and that we just have to find the right way of understanding them. But I think part of the reason people expect these domains to be hopelessly messy is because their behavior seems to be mostly determined by tons of tiny causal factors (chemical signals, sequence of specific neurons firing, etc.)... Like, I read this biology paper once where the authors hilariously lamented that their chart of signaling cascades was a “horror graph” where “everything does everything to everything.”

Indeed, the underlying structure “everything does everything to everything” does seem less conducive to being precisely conceptualized. Especially when (imo) ideal scientific understanding consists of finding the few, isolated causal factors which produce an effect (as in the case of "pressure"). But if everything we care about—abstractions, agency, goals, etc.—are coarse-grainings over billions of semi-independent causal factors, produced in path-dependent ways, then the idea of finding concepts as precise as “pressure” starts looking kind of hopeless, I think.

Like you, I suspect that this is mostly an ontology issue (i.e., that neurons/etc are not necessarily the natural units), and I do think that precise concepts of agency/abstractions/etc both exist and are findable. Partially this is an empirical claim—as you note, we do actually find surprisingly clean structures in biological systems (such as modularity). But also, my proposed angle against “fractal complexity” is something like: I expect life to be understandable because it needs to control itself. I’m not going to give the full argument here, but to speak in my own mentalese for a paragraph:

When you get large, directed systems—(e.g., we are composed of 40 trillion cells, each containing tens of millions of proteins)—I think you basically need some level of modularity if there’s any hope of steering the whole thing. E.g., I think one of the reasons we see modular structure across all levels in biology is because modular structures are easier to control (the input/output structure is much cleaner than if it were some messy clump where “everything does everything to everything”). If my brain had to figure out how to navigate some hyper complex horror graph with a gazillion tiny causal factors every time I wanted to move my hand, I wouldn’t get very far. There’s more argument here to totally spell this out—like why that can’t all be pre-computed, etc.—but I think the fact that biological organisms can reliably coordinate their activity in flexible/general ways is evidence of there being clean structure.

Replies from: Alex_Altair, faul_sname

↑ comment by Alex_Altair · 2024-01-02T22:01:19.617Z · LW(p) · GW(p)

I agree there's a core principle somewhere around the idea of "controllable implies understandable". But when I think about this with respect to humans studying biology, another thought comes to mind: the things we want to control are not necessarily the things the system itself is controlling. For example, we would like to control the obesity crisis (and weight loss in general) but it's not clear that the biological system itself is controlling that. It almost certainly was successfully controlling it in the ancestral environment (and therefore it was understandable within that environment) but perhaps the environment has changed enough that it is now uncontrollable (and potentially not understandable). Cancer manages to successfully control the system in the sense of causing itself to happen, but that doesn't mean that our goal, "reliably stopping cancer", is understandable, since it is not a way that the system is controlling itself.

This mismatch seems pretty evidently applicable to AI alignment.

And perhaps the "environment" part is critical. A system being controllable in one environment doesn't imply it being controllable in a different (or broader) environment, and thus guaranteed understandability is also lost. This feels like an expression of misgeneralization.

Replies from: aysja

↑ comment by aysja · 2024-01-24T23:45:43.468Z · LW(p) · GW(p)

Yeah, I think I misspoke a bit. I do think that controllability is related to understanding, but also I’m trying to gesture at something more like “controllability implies simpleness.”

Where, I think what I’m tracking with “controllability implies simpleness” is that the ease with which we can control things is a function of how many causal factors there are in creating it, i.e., “conjunctive things are less likely” in some Occam’s Razor sense, but also conjunctive things “cost more.” At the very least, they cost more from the agent’s point of view. Like, if I have to control every step in some horror graph in order to get the outcome I want, that’s a lot of stuff that has to go perfectly, and that’s hard. If “I,” on the other hand, as a virus, or cancer, or my brain, or whatever, only have to trigger a particular action to set off a cascade which reliably results in what I want, this is easier.

There is a sense, of course, in which the spaghetti code thing is still happening underneath it all, but I think it matters what level of abstraction you’re taking with respect to the system. Like, with enough zoom, even very simple activities start looking complex. If you looked at a ball following a parabolic arc at the particle level, it’d be pretty wild. And yet, one might reasonably assume that the regularity with which the ball follows the arc is meaningful. I am suspicious that much of the “it’s all ad-hoc” intuitions about biology/neuroscience/etc are making a zoom error (also typing errors, but that’s another problem). Even simple things can look complicated if you don’t look at them right, and I suspect that the ease with which we can operate in our world should be some evidence that this simpleness “exists,” much like the movement of the ball is “simple” even though a whole world of particles underlies its behavior.

To your point, I do think that the ability to control something is related to understanding it, but not exactly the same. Like, there indeed might be things in the environment that we don’t have much control over, even if we can clearly see what their effects are. Although, I’d be a little surprised if that were true for, e.g., obesity. Like, it seems likely that hormones (semaglutide) can control weight loss, which makes sense to me. A bunch of global stuff in bodies is regulated by hormones, in a similar way, I think, to how viruses “hook into” the cell’s machinery, i.e., they reliably trigger the right cascades. And controlling something doesn’t necessarily mean that it's possible to understand it completely. But I do suspect that the ability to control implies the existence of some level of analysis upon which the activity is pretty simple.

↑ comment by faul_sname · 2024-01-02T23:20:51.295Z · LW(p) · GW(p)

When you get large, directed systems—(e.g., we are composed of 40 trillion cells, each containing tens of millions of proteins)—I think you basically need some level of modularity if there’s any hope of steering the whole thing.

This seems basically right to me. That said, while it is predictable that the systems in question will be modular, what exact form that modularity takes is both environment-dependent and also path-dependent. Even in cases where the environmental pressures form a very strong attractor for a particular shape of solution, the "module divisions" can differ between species. For example, the pectoral fins of fish and the pectoral flippers of dolphins both fulfill similar roles. However, fish fins are basically a collection of straight, parallel fin rays made of bone or cartilage and connected to a base inside the body of the fish, and the muscles to control the movement of the fin are located within the body of the fish. By contrast, a dolphin's flipper is derived from the foreleg of its tetrapod ancestor, and contains "fingers" which can be moved by muscles within the flipper.

So I think approaches that look like "find a structure that does a particular thing, and try to shape that structure in the way you want" are somewhat (though not necessarily entirely) doomed, because the pressures that determine which particular structure does a thing are not nearly so strong as the pressures that determine that some structure does the thing.

comment by Thane Ruthenis · 2023-12-30T01:19:39.864Z · LW(p) · GW(p)

Excellent breakdown of the relevant factors at play.

You Don’t Get To Choose The Problem Factorization

But what if you need to work on a problem you don't understand anyway?

That creates Spaghetti Towers [LW · GW]: vast constructs of ad-hoc bug-fixes and tweaks built on top of bug-fixes and tweaks. Software-gore databases, Kafkaesque-horror bureaucracies, legislation you need a law degree to suffer through, confused mental models; and also, biological systems built by evolution, and neural networks trained by SGD.

That's what necessarily, convergently happens every time you plunge into a domain you're unfamiliar with. You constantly have to tweak your system momentarily, to address new minor problems you run into, which reflects new bits of the domain structure you've learned.

Much like biology, the end result initially looks like an incomprehensible arbitrary mess, to anyone not intimately familiar with it. Much like biology, it's not actually a mess. Inasmuch as the spaghetti tower actually performs well in the domain it's deployed in, it necessarily comes to reflect that domain's structure within itself. So if you look at it through the right lens – like that of a programmer who's intimately familiar with their own nightmarish database – you'd actually be able to see that structure and efficiently navigate it.

Which suggests a way to ameliorate this problem: periodic refactoring. Every N time-steps, set some time aside for re-evaluating the construct you've created in the context of your current understanding of the domain, and re-factorize it along the lines that make sense to you now.

That centrally applies to code, yes, but also to your real-life projects, and your literal mental ontologies/models. Always make sure to simplify and distill them. Hunt down snippets of redundant code and unify them into one function.
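As a toy illustration of "unify them into one function" (the pricing rule and every name below are hypothetical, invented only for this sketch), two snippets that accreted as separate ad-hoc fixes can be folded together once it is clear what they share:

```python
# Before: two near-duplicate snippets that grew as separate ad-hoc fixes.
def student_price_before(price):
    discounted = price - price * 0.20
    return round(max(discounted, 0.0), 2)


def senior_price_before(price):
    discounted = price - price * 0.30
    return round(max(discounted, 0.0), 2)


# After: the shared structure lives in one function, and the only thing
# that actually differed between the snippets becomes a parameter.
def discounted_price(price, rate):
    """Apply a fractional discount, clamp at zero, round to cents."""
    return round(max(price - price * rate, 0.0), 2)


def student_price(price):
    return discounted_price(price, 0.20)


def senior_price(price):
    return discounted_price(price, 0.30)
```

The refactored version behaves identically; what changes is that the factorization now matches the current understanding of the domain ("a discount is a rate applied to a price") rather than the order in which the fixes were bolted on.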

I.e.: When working on a problem you don't understand, make sure to iterate on the problem factorization.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-12-30T03:27:39.184Z · LW(p) · GW(p)

raters make systematic errors - regular, compactly describable, predictable errors.

If I understand the tentative OpenAI plan correctly (at least the one mentioned in the recent paper, not sure if it's the Main Plan or just one of several being considered) the idea is to use various yet-to-be-worked out techniques to summon certain concepts (concepts like "is this sentence true" and "is this chain-of-thought a straightforward obedient one or a sneaky deceptive one?") into a reward model, and then use the reward model to train the agent. So, the hope is that if the first step works, we no longer have the "systematic errors" problem, and instead have a "perfect" reward system.

How do we get step one to work? That's the hard part & that's what the recent paper is trying to make progress on. I think. Part of the hope is that our reward model can be made from a pretrained base model, which hopefully won't be situationally aware.

↑ comment by Orpheus16 (akash-wasil) · 2023-12-30T11:24:28.772Z · LW(p) · GW(p)

Does the "rater problem" (raters have systematic errors) simply apply to step one in this plan? I agree that once you have a perfect reward model, you no longer need human raters.

But it seems like the "rater problem" still applies if we're going to train the reward model using human feedback. Perhaps I'm too anchored to thinking about things in an RLHF context, but it seems like at some point in the process we need to have some way of saying "this is true" or "this chain-of-thought is deceptive" that involves human raters.

Is the idea something like:

- Eliezer: Human raters make systematic errors

- OpenAI: Yes, but this is only a problem if we have human raters indefinitely provide feedback. If human raters are expected to provide feedback on 10,000,000 responses under time-pressure, then surely they will make systematic errors.

- OpenAI: But suppose we could train a reward model on a modest number of responses and we didn't have time-pressure. For this dataset of, say, 10,000 responses, we are super careful, we get a ton of people to double-check that everything is accurate, and we are nearly certain that every single label is correct. If we train a reward model on this dataset, and we can get it to generalize properly, then we can get past the "humans make systematic errors" problem.

Or am I totally off//the idea is different than this//the "yet-to-be-worked-out-techniques" would involve getting the reward model to learn stuff without ever needing feedback from human raters?

Replies from: leogao, daniel-kokotajlo

↑ comment by leogao · 2023-12-30T18:07:37.049Z · LW(p) · GW(p)

Short answer: The core focus of the "yet to be worked out techniques" is to figure out the "how do we get it to generalize properly" part, not the "how do we be super careful with the labels" part.

Longer answer: We can consider weak to strong generalization as actually two different subproblems:

- generalizing from correct labels on some easy subset of the distribution (the 10,000 super careful definitely 100% correct labels)

- generalizing from labels which can be wrong and are more correct on easy problems than hard problems, but we don't exactly know when the labels are wrong (literally just normal human labels)

The setting in the paper doesn't quite distinguish between the two but I personally think the former problem is more interesting and contains the bulk of the difficulty. Namely, most of the difficulty is in understanding when generalization happens/fails and what kinds of generalizations are more natural.
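A minimal sketch of how the two label settings differ, under loudly stated assumptions: the scalar "difficulty" score, the error model, the 0.3 and 0.7 thresholds, and all function names are invented for illustration and are not taken from the paper.

```python
import random


def make_examples(n, seed=0):
    """Toy dataset: each example is (difficulty in [0, 1], true binary label)."""
    rng = random.Random(seed)
    return [(rng.random(), rng.randint(0, 1)) for _ in range(n)]


def careful_easy_labels(examples, max_difficulty=0.3):
    """Setting 1: labels are always correct, but exist only on the easy subset."""
    return [(d, y, y) for d, y in examples if d <= max_difficulty]


def noisy_human_labels(examples, seed=1):
    """Setting 2: labels exist everywhere but may be wrong.

    Assumed error model (illustrative only): a label is flipped with
    probability 0.4 * difficulty, so harder examples are mislabeled more often.
    """
    rng = random.Random(seed)
    labeled = []
    for d, y in examples:
        flipped = rng.random() < 0.4 * d
        labeled.append((d, y, 1 - y if flipped else y))
    return labeled


def accuracy(labeled):
    """Fraction of (difficulty, truth, label) triples whose label matches truth."""
    return sum(y == label for _, y, label in labeled) / len(labeled)


examples = make_examples(10_000)
easy = careful_easy_labels(examples)
noisy = noisy_human_labels(examples)
noisy_easy = [t for t in noisy if t[0] <= 0.3]
noisy_hard = [t for t in noisy if t[0] > 0.7]
```

In the first setting the learner sees perfect supervision but has to extrapolate to the hard region with no labels at all; in the second it sees labels everywhere but cannot tell from the data alone which ones were flipped.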

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-12-30T22:34:54.491Z · LW(p) · GW(p)

Leo already answered, but yes, the rater problem applies to step one of that plan. And the hope is that progress can be made on solving the rater problem in this setting, because, e.g., our model isn't situationally aware.

Replies from: johnswentworth

↑ comment by johnswentworth · 2024-01-02T17:48:37.205Z · LW(p) · GW(p)

Why would the rater model not be situationally aware?

comment by Orpheus16 (akash-wasil) · 2024-01-02T14:38:52.253Z · LW(p) · GW(p)

If the timer starts to run out, then slap something together based on the best understanding we have. 18-24 months is about how long I expect it to take to slap something together based on the best understanding we have.

Can you say more about what you expect to be doing after you have slapped together your favorite plans/recommendations? I'm interested in getting a more concrete understanding of how you see your research (eventually) getting implemented.

Suppose after the 18-24 month process, you have 1-5 concrete suggestions that you want AGI developers to implement. Is the idea essentially that you would go to the superalignment team (and the equivalents at other labs) and say "hi, here's my argument for why you should do X"? What kinds of implementation-related problems, if any, do you see coming up?