Embedded Agents

post by abramdemski, Scott Garrabrant · 2018-10-29T19:53:02.064Z · LW · GW · 42 comments

(A longer text-based version of this post is also available on MIRI's blog here, and the bibliography for the whole sequence can be found here)

comment by Wei Dai (Wei_Dai) · 2018-11-05T06:12:36.905Z · LW(p) · GW(p)

I think it would be useful to give your sense of how Embedded Agency fits into the more general problem of AI Safety/Alignment. For example, what percentage of the AI Safety/Alignment problem you think Embedded Agency represents, and what are the other major chunks of the larger problem?

Replies from: Scott Garrabrant↑ comment by Scott Garrabrant · 2018-11-07T19:45:34.084Z · LW(p) · GW(p)

This is not a complete answer, but it is part of my picture:

(It is the part of the picture that I can give while being only descriptive, and not prescriptive. For epistemic hygiene reasons, I want to avoid discussions of how much of different approaches we need in contexts (like this one) that would make me feel like I was justifying my research in a way that people might interpret as an official statement from the agent foundations team lead.)

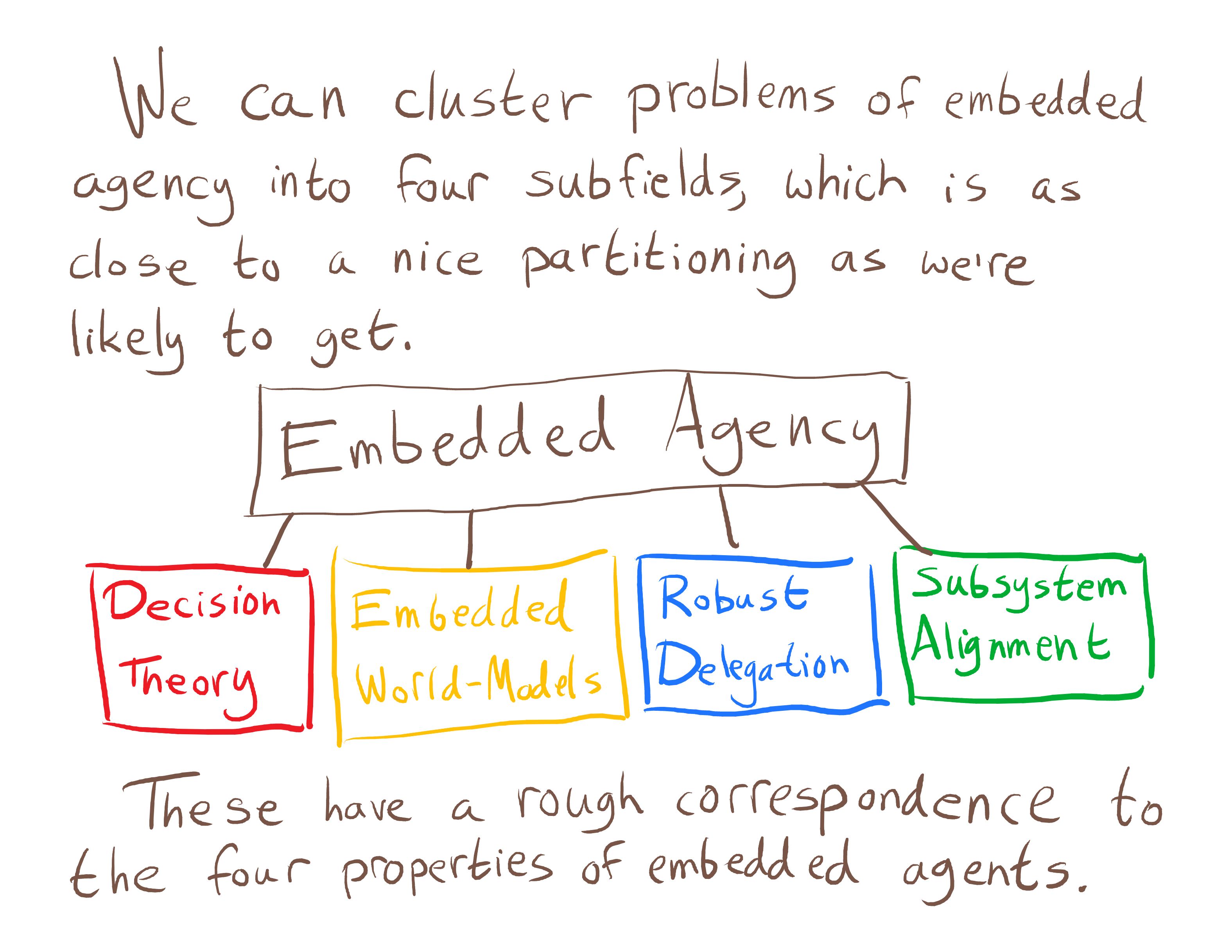

I think that Embedded Agency is basically a refactoring of Agent Foundations in a way that gives one central curiosity-based goalpost, rather than making it look like a bunch of independent problems. It is mostly all the same problems, but it was previously packaged as "Here are a bunch of things we wish we understood about aligning AI," and is now repackaged as "Here is a central mystery of the universe, and here are a bunch of things we don't understand about it." It is not a coincidence that they are the same problems, since they were generated in the first place by people paying close attention to what mysteries of the universe related to AI we haven't solved yet.

I think of Agent Foundations research as having a different type signature than most other AI Alignment research, in a way that looks kind of like Agent Foundations:other AI alignment::science:engineering. I think of AF as more forward-chaining and other stuff as more backward-chaining. This may seem backwards if you think about AF as reasoning about superintelligent agents, and other research programs as thinking about modern ML systems, but I think it is true. We are trying to build up a mountain of understanding, until we collect enough that the problem seems easier. Others are trying to make direct plans on what we need to do, see what is wrong with those plans, and try to fix the problems. One consequence of this is that AF work is more likely to be helpful given long timelines, partially because AF is trying to be the start of a long journey of figuring things out, but also because AF is more likely to be robust to huge shifts in the field.

I actually like to draw an analogy with this: (taken from this [AF · GW] post by Evan Hubinger)

I was talking with Scott Garrabrant late one night recently and he gave me the following problem: how do you get a fixed number of DFA-based robots to traverse an arbitrary maze (if the robots can locally communicate with each other)? My approach to this problem was to come up with and then try to falsify various possible solutions. I started with a hypothesis, threw it against counterexamples, fixed it to resolve the counterexamples, and iterated. If I could find a hypothesis which I could prove was unfalsifiable, then I'd be done.

When Scott noticed I was using this approach, he remarked on how different it was than what he was used to when doing math. Scott's approach, instead, was to just start proving all of the things he could about the system until he managed to prove that he had a solution. Thus, while I was working backwards by coming up with possible solutions, Scott was working forwards by expanding the scope of what he knew until he found the solution.

(I don't think it quite communicates my approach correctly, but I don't know how to do better.)

A consequence of the type signature of Agent Foundations is that my answer to "What are the other major chunks of the larger problem?" is "That is what I am trying to figure out."

Replies from: Amyr↑ comment by Cole Wyeth (Amyr) · 2025-03-21T17:58:33.781Z · LW(p) · GW(p)

I also take this approach to agent foundations, which is why I like to tie different agendas together. Studying AIXI is part of that because many other approaches can be described as "depart from AIXI in this way to solve this informally stated problem with AIXI."

comment by Tetraspace (tetraspace-grouping) · 2018-10-30T00:12:09.569Z · LW(p) · GW(p)

Very nice! I like the colour-coding scheme, and the way it ties together those bullet points in MIRI's research agenda.

Looks like these sequences are going to be a great (content-wise and aesthetically) introduction to a lot of the ideas behind agent foundations; I'm excited.

comment by Rohin Shah (rohinmshah) · 2019-11-21T04:08:00.364Z · LW(p) · GW(p)

I actually have some understanding of what MIRI's Agent Foundations work is about

comment by habryka (habryka4) · 2018-11-06T00:44:36.376Z · LW(p) · GW(p)

Promoted to curated: I think this post (and the following posts in the sequence) might be my favorite posts that have been written in the last year. I think this has a lot to do with how this post feels like it is successfully doing one of the things that Eliezer sequences did, which is to combine a wide range of solid theoretical results, with practical implications for rationality as well as broad explanations that improve my understanding not just of the specific domain at hand, but of a range of related domains as well.

I also really like the change of pace with the format, and quite like how easy certain things become to explain when you use a more visual format. I think it's an exceptionally accessible post, even given its highly technical nature. I am currently rereading GEB, and this post reminds me of that book in a large variety of ways, in a very good way.

comment by chaosmage · 2018-11-01T08:47:51.237Z · LW(p) · GW(p)

The text is beautifully condensed. And the handwritten style does help it look casual/inviting.

But the whole thing loaded significantly slower than I read. How many megabytes is this post? I haven't waited this long for a website to load for years.

Replies from: habryka4, habryka4↑ comment by habryka (habryka4) · 2018-11-01T18:25:34.911Z · LW(p) · GW(p)

Good point. We just uploaded the images that Abram gave us, but I just realized that they are quite large and have minimal compression applied to them.

I just experimented with some compression and it looks like we can get a 5x size reduction without any significant loss in quality, so we will go and replace all the images with the compressed ones. Thanks for pointing that out!

↑ comment by habryka (habryka4) · 2018-11-03T17:19:37.568Z · LW(p) · GW(p)

This should now be fixed! We added compression to all the images, and things should now be pretty fast (total size of the images is <1 MB).

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2018-11-03T17:57:46.194Z · LW(p) · GW(p)

It’s actually 3.4 MB total.

Edit: You can get some further savings by saving as GIF instead of JPEG. For example, this image goes from ~360 KB to ~250 KB that way.

Replies from: habryka4↑ comment by habryka (habryka4) · 2018-11-03T22:03:32.613Z · LW(p) · GW(p)

Ah, yep. Sorry, that was the size after I experimented with cropping them some (until I realized that pixels don't mean pixels anymore, and we need to serve higher resolution images to retina screens).

Yeah, we could try changing the image format as well. Though I think it's mostly fine now.

Replies from: wizzwizz4

comment by orthonormal · 2019-12-07T22:19:29.146Z · LW(p) · GW(p)

Insofar as the AI Alignment Forum is part of the Best-of-2018 Review, this post deserves to be included. It's the friendliest explanation of MIRI's research agenda (as of 2018) that currently exists.

comment by johnswentworth · 2019-11-21T18:58:09.791Z · LW(p) · GW(p)

This post (and the rest of the sequence) was the first time I had ever read something about AI alignment and thought that it was actually asking the right questions. It is not about a sub-problem, it is not about marginal improvements. Its goal is a gears-level understanding of agents, and it directly explains why that's hard. It's a list of everything which needs to be figured out in order to remove all the black boxes and Cartesian boundaries, and understand agents as well as we understand refrigerators.

comment by Shmi (shminux) · 2018-10-30T01:36:48.352Z · LW(p) · GW(p)

Embedded Agency sounds like an essential property of the world to acknowledge, as we humans naturally think in terms of Cartesian dualism.

Replies from: Benquo↑ comment by Benquo · 2018-11-02T09:00:43.311Z · LW(p) · GW(p)

I think that’s a specifically modern tendency, not a universal human one. Much of premodern thought assumes embeddedness in ways that read to Moderns as confusing or shoddy.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2018-11-02T13:58:48.355Z · LW(p) · GW(p)

Can you say a few more words on what sorts of ways of speaking you are thinking of? I'm curious about what assumptions have changed.

comment by ryan_b · 2019-11-28T21:46:05.457Z · LW(p) · GW(p)

I nominate this post for two reasons.

One, it is an excellent example of providing supplemental writing about basic intuitions and thought processes, which is extremely helpful to me because I do not have a good enough command of the formal work to intuit them.

Two, it is one of the few examples of experimenting with different kinds of presentation. I feel like this is underappreciated and under-utilized; better ways of communicating seem like a strong baseline requirement of the rationality project, and this post pushes in that direction.

comment by Davidmanheim · 2019-11-28T10:40:40.380Z · LW(p) · GW(p)

This post has significantly changed my mental model of how to understand key challenges in AI safety, and also given me a clearer understanding of and language for describing why complex game-theoretic challenges are poorly specified or understood. The terms and concepts in this series of posts have become a key part of my basic intellectual toolkit.

comment by John_Maxwell (John_Maxwell_IV) · 2018-11-05T02:46:47.290Z · LW(p) · GW(p)

From the version of this post on the MIRI blog:

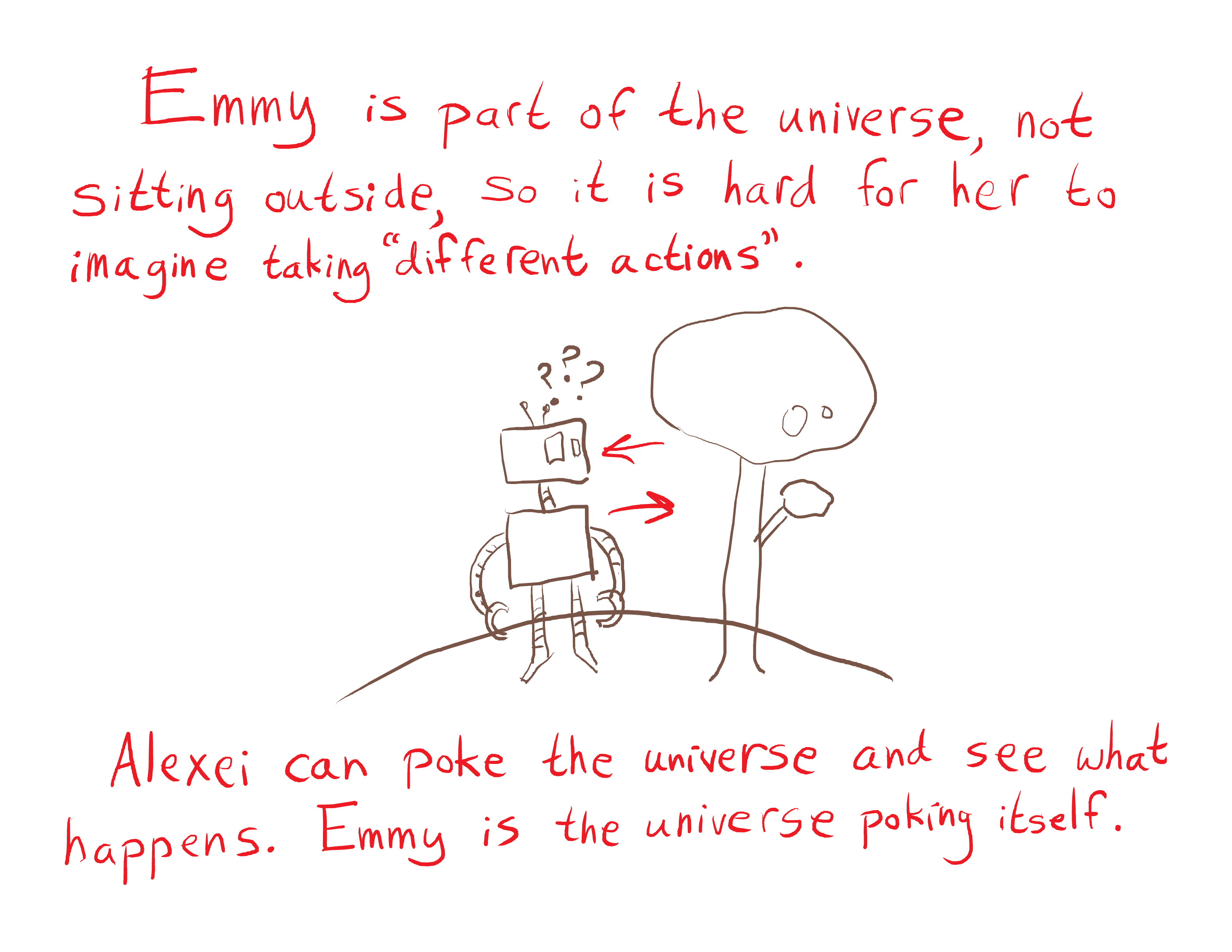

Bayesian reasoning works by starting with a large collection of possible environments, and as you observe facts that are inconsistent with some of those environments, you rule them out. What does reasoning look like when you’re not even capable of storing a single valid hypothesis for the way the world works? Emmy is going to have to use a different type of reasoning, and make updates that don’t fit into the standard Bayesian framework.

I think maybe this paragraph should say "Solomonoff induction" instead of Bayesian reasoning. If I'm reasoning about a coin, and I have a model with a single parameter representing the coin's bias, there's a sense in which I'm doing Bayesian reasoning and there is some valid hypothesis for the coin's bias. Most applied Bayesian ML work looks more like discovering a coin's bias than thinking about the world at a sufficiently high resolution for the algorithm to be modeling itself, so this seems like an important distinction.
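To make the coin case concrete, here is a minimal sketch of the kind of Bayesian reasoning described above, assuming a small discrete grid of candidate biases. The function name `update`, the five-point grid, and the flip sequence are illustrative assumptions, not anything taken from the post.

```python
from fractions import Fraction


def update(prior, heads):
    """One Bayesian update of a discrete prior over coin biases.

    prior maps each candidate bias (probability of heads) to its probability.
    Hypothetical helper for illustration only.
    """
    # Weight each hypothesis by the likelihood it assigns to the observed flip.
    unnorm = {b: p * (b if heads else 1 - b) for b, p in prior.items()}
    total = sum(unnorm.values())  # zero only if every hypothesis is ruled out
    return {b: w / total for b, w in unnorm.items()}


# Assumed hypothesis space: biases 0, 1/4, 1/2, 3/4, 1 with a uniform prior.
biases = [Fraction(i, 4) for i in range(5)]
posterior = {b: Fraction(1, 5) for b in biases}

# Assumed observations: heads, heads, tails.
for flip in [True, True, False]:
    posterior = update(posterior, flip)

# Bias 0 is ruled out by the first heads and bias 1 by the tails;
# the remaining mass is 3/20 on 1/4, 2/5 on 1/2, and 9/20 on 3/4.
```

In this toy setup the true bias is assumed to lie on the grid, so some valid hypothesis is always available. If no candidate were consistent with the data, the normalizer would be zero and the update would be undefined, which is roughly the situation the quoted paragraph is pointing at.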

comment by Rana Dexsin · 2018-10-30T06:02:01.975Z · LW(p) · GW(p)

This sounds similar in effect to what philosophy of mind calls “embodied cognition”, but it takes a more abstract tack. Is there a recognized background link between the two ideas already? Is that a useful idea, regardless of whether it already exists, or am I off track?

Replies from: RobbBB, TheWakalix↑ comment by Rob Bensinger (RobbBB) · 2018-10-30T17:12:40.440Z · LW(p) · GW(p)

I'd draw more of a connection between embedded agency and bounded optimality or the philosophical superproject of "naturalizing" various concepts (e.g., naturalized epistemology).

Our old name for embedded agency was "naturalized agency"; we switched because we kept finding that CS people wanted to know what we meant by "naturalized", and we'd always say "embedded", so...

"Embodiment" is less relevant because it's about, well, bodies. Embedded agency just says that the agent is embedded in its environment in some fashion; it doesn't say that the agent has a robot body, in spite of the cute pictures of robots Abram drew above. An AI system with no "body" it can directly manipulate or sense will still be physically implemented on computing hardware, and that on its own can raise all the issues above.

↑ comment by TheWakalix · 2018-11-26T22:37:12.805Z · LW(p) · GW(p)

In my view, embodied cognition says that the way in which an agent is embodied is important to its cognition, whereas embedded agency says that the fact that an agent is embodied is important to its cognition.

(This is probably a repetition, but it's shorter and more explicit, which could be useful.)

comment by FactorialCode · 2020-01-18T01:04:49.470Z · LW(p) · GW(p)

In the comments of this post, Scott Garrabrant says:

I think that Embedded Agency is basically a refactoring of Agent Foundations in a way that gives one central curiosity-based goalpost, rather than making it look like a bunch of independent problems. It is mostly all the same problems, but it was previously packaged as "Here are a bunch of things we wish we understood about aligning AI," and is now repackaged as "Here is a central mystery of the universe, and here are a bunch of things we don't understand about it." It is not a coincidence that they are the same problems, since they were generated in the first place by people paying close attention to what mysteries of the universe related to AI we haven't solved yet.

This entire sequence has made that clear for me. Most notably it has helped me understand the relationship between the various problems in decision theory that have been discussed on this site for years, along with their proposed solutions such as TDT, UDT, and FDT. These problems are a direct consequence of agents being embedded in their environments.

Furthermore, it's made me think more clearly about some of my high-level models of ideal AI and RL systems. For instance, the limitations of the AIXI framework and some of its derivatives have become clearer to me.

comment by Rafael Harth (sil-ver) · 2019-12-23T08:44:13.702Z · LW(p) · GW(p)

Just saw this linked on SlateStarCodex. I love this style. I also think it's valuable to demonstrate that it's possible to have fun without dumbing down the content at all.

comment by Ben Pace (Benito) · 2019-11-27T22:08:43.051Z · LW(p) · GW(p)

This sequence was the first time I felt I understood MIRI's research.

(Though I might prefer to nominate the text-version that has the whole sequence in one post [? · GW].)

comment by Gordon Seidoh Worley (gworley) · 2018-10-29T21:05:03.136Z · LW(p) · GW(p)

Thanks for also sharing this as text (on the MIRI blog); makes it much easier to read.

comment by Swimmer963 (Miranda Dixon-Luinenburg) (Swimmer963) · 2019-11-29T20:17:43.334Z · LW(p) · GW(p)

Read sequence as research for my EA/rationality novel, this was really good and also pretty easy to follow despite not having any technical background

comment by philip_b (crabman) · 2018-11-01T00:16:09.791Z · LW(p) · GW(p)

Is this the first post in the sequence? It's not clear.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2018-11-01T01:02:22.297Z · LW(p) · GW(p)

It's the first post! The posts are indexed on https://www.alignmentforum.org/s/Rm6oQRJJmhGCcLvxh and https://intelligence.org/embedded-agency/, but it looks like they're not on LW?

Replies from: habryka4↑ comment by habryka (habryka4) · 2018-11-01T01:53:38.602Z · LW(p) · GW(p)

Also indexed here, you should see the sequences navigation right below the post (https://imgur.com/a/HTlNGjC), and here is a link to the full sequence: https://www.lesswrong.com/s/Rm6oQRJJmhGCcLvxh [? · GW]

comment by DragonGod · 2018-11-02T09:29:00.953Z · LW(p) · GW(p)

I don't understand why being an embedded agent makes Bayesian reasoning impossible. My intuition is that a hypothesis doesn't have to be perfectly correlated with reality to be useful. Furthermore, if you conceive of hypotheses as conjunctions of elementary hypotheses, then I see no reason why you cannot perform Bayesian reasoning of the form "hypothesis X is one of the constituents of the true hypothesis", even if the agent can't perfectly describe the true hypothesis.

Also, "the agent is larger/smaller than the environment" is not very clear, so I think it would help if you would clarify what those terms mean.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2018-11-02T16:07:20.627Z · LW(p) · GW(p)

The next part just went live, and is about exactly that!: http://intelligence.org/embedded-models

comment by mathfreedom · 2018-10-31T12:03:13.857Z · LW(p) · GW(p)

At least some of these ideas have been pondered by anthropologists over the years.

comment by Abe Dillon (abe-dillon) · 2019-06-28T21:02:09.954Z · LW(p) · GW(p)

I don't see the point in adding so much complexity to such a simple matter. AIXI is an incomputable agent whose proofs of optimality require a computable environment. It requires a specific configuration of the classic agent-environment loop where the agent and the environment are independent machines. That specific configuration is only applicable to a subset of real-world problems in which the environment can be assumed to be much "smaller" than the agent operating upon it: problems that don't involve other agents and have very few degrees of freedom relative to the agent operating upon them.

Marcus Hutter already proposed computable versions of AIXI like AIXI_lt. In the context of agent-environment loops, AIXI_lt is actually more general than AIXI because AIXI_lt can be applied to all configurations of the agent-environment loop including the embedded agent configuration. AIXI is a special case of AIXI_lt where the limits of "l" and "t" go to infinity.

Some of the problems you bring up seem to be concerned with the problem of reconciling logic with probability while others seem to be concerned with real-world implementation. If your goal is to define concepts like "intelligence" with mathematical formalizations (which I believe is necessary), then you need to delineate that from real-world implementation. Discussing both simultaneously is extremely confusing. In the real world, an agent only has its empirical observations. It has no "seeds" to build logical proofs upon. That's why scientists talk about theories and evidence supporting them rather than proofs and axioms.

You can't prove that the sun will rise tomorrow, you can only show that it's reasonable to expect the sun to rise tomorrow based on your observations. Mathematics is the study of patterns, mathematical notation is a language we invented to describe patterns. We can prove theorems in mathematics because we are the ones who decide the fundamental axioms. When we find patterns that don't lend themselves easily to mathematical description, we rework the tool (add concepts like zero, negative numbers, complex numbers, etc.). It happens that we live in a universe that seems to follow patterns, so we try to use mathematics to describe the patterns we see and we design experiments to investigate the extent to which those patterns actually hold.

The branch of mathematics for characterizing systems with incomplete information is probability. If you want to talk about real-world implementations, most non-trivial problems fall under this domain.

comment by rmoehn · 2019-06-04T21:36:27.653Z · LW(p) · GW(p)

I had a thought about this that was too long for a comment, so I've posted it separately [LW · GW]. Bottom line:

When thinking about embedded agency it might be helpful to drop the notion of ‘agency’ and ‘agents’ sometimes, because it might be confusing or underdefined. Instead one could think of [sub-]processes running according to the laws of physics. Or of algorithms running on a stack of interpreters running on the hardware of the universe.

comment by MDMA · 2018-11-06T09:52:01.716Z · LW(p) · GW(p)

I have been thinking about this for a while and I'd like to propose a different viewpoint on this same idea. It's equivalent, but personally I prefer this perspective. Instead of saying 'embedded agency', which implies an embedding of the agent in the environment, perhaps we could say 'embedded environment', which implies that the environment is within the agent. To illustrate: the same way 'I' can 'observe' 'my' 'thoughts', I can observe my laptop. Are my thoughts me, or is my laptop me? I think the correct way to look at it is that my computer is just as much a part of me as my thoughts are. Even though surely everyone is convinced that this dualistic notion of agent and environment does not make sense, there seems to be a difference between what one believes on the level of 'knowledge' and what one can phenomenologically observe. I believe this phrasing allows people to more easily experience for themselves the truth of the embedded environment/agent idea, which is important for further idea generation! :)

Replies from: TheWakalix↑ comment by TheWakalix · 2018-11-26T22:39:51.136Z · LW(p) · GW(p)

"Within" is not commutative, and it seems to me that what you have said about Emmy is equally applicable to Alexei (thus it cannot be identical to a concept that splits the two).