Alignment By Default

post by johnswentworth · 2020-08-12T18:54:00.751Z · LW · GW · 96 comments

Suppose AI continues on its current trajectory: deep learning continues to get better as we throw more data and compute at it, researchers keep trying random architectures and using whatever seems to work well in practice. Do we end up with aligned AI “by default”?

I think there’s at least a plausible trajectory in which the answer is “yes”. Not very likely - I’d put it at ~10% chance - but plausible. In fact, there’s at least an argument to be made that alignment-by-default is more likely to work than many fancy alignment proposals, including IRL variants [? · GW] and HCH-family methods [? · GW].

This post presents the rough models and arguments.

I’ll break it down into two main pieces:

- Will a sufficiently powerful unsupervised learner “learn human values”? What does that even mean?

- Will a supervised/reinforcement learner end up aligned to human values, given a bunch of data/feedback on what humans want?

Ultimately, we’ll consider a semi-supervised/transfer-learning style approach, where we first do some unsupervised learning and hopefully “learn human values” before starting the supervised/reinforcement part.

As background, I will assume you’ve read some of the core material about human values from the sequences [? · GW], including Hidden Complexity of Wishes [LW · GW], Value is Fragile [LW · GW], and Thou Art Godshatter [LW · GW].

Unsupervised: Pointing to Values

In this section, we’ll talk about why an unsupervised learner might not “learn human values”. Since an unsupervised learner is generally just optimized for predictive power, we’ll start by asking whether theoretical algorithms with best-possible predictive power (i.e. Bayesian updates on low-level physics models) “learn human values”, and what that even means. Then, we’ll circle back to more realistic algorithms.

Consider a low-level physical model of some humans - e.g. a model which simulates every molecule comprising the humans. Does this model “know human values”? In one sense, yes: the low-level model has everything there is to know about human values embedded within it, in exactly the same way that human values are embedded in physical humans. It has “learned human values”, in a sense sufficient to predict any real-world observations involving human values.

But it seems like there’s a sense in which such a model does not “know” human values. Specifically, although human values are embedded in the low-level model, the embedding itself is nontrivial. Even if we have the whole low-level model, we still need that embedding in order to “point to” human values specifically - e.g. to use them as an optimization target. Indeed, when we say “point to human values”, what we mean is basically “specify the embedding”. (Side note: treating human values as an optimization target is not the only use-case for “pointing to human values”, and we still need to point to human values even if we’re not explicitly optimizing for anything. But that’s a separate discussion [LW · GW], and imagining using values as an optimization target is useful to give a mental image of what we mean by “pointing”.)

In short: predictive power alone is not sufficient to define human values. The missing part is the embedding of values within the model. The hard part is pointing to the thing (i.e. specifying the values-embedding), not learning the thing (i.e. finding a model in which values are embedded).
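As a toy illustration of the gap between "the information is in the model" and "we have pointed to it", here is a minimal sketch. Everything in it is a hypothetical stand-in: the "low-level model" is just a list of particle velocities, and "temperature" plays the role of the high-level thing we want to point to. The model predicts its own future perfectly, yet nothing in it is labeled "temperature"; that label only exists once someone supplies the embedding function.

```python
import random

rng = random.Random(0)

# Low-level state: one velocity per particle (a hypothetical toy "physics model").
velocities = [rng.gauss(0.0, 2.0) for _ in range(10_000)]

def step(state):
    """Low-level dynamics: free particles simply keep their velocities.
    This predicts the next low-level state exactly."""
    return list(state)

def temperature_embedding(state):
    """The 'pointer': which function of the low-level state counts as temperature.
    Illustrative choice: mean kinetic energy per particle, with unit mass."""
    return sum(0.5 * v * v for v in state) / len(state)

def mean_velocity_embedding(state):
    """A different function of the very same state. The model alone does not
    say which of these functions we care about."""
    return sum(state) / len(state)

next_state = step(velocities)
temperature = temperature_embedding(next_state)
drift = mean_velocity_embedding(next_state)
print(f"temperature-like quantity: {temperature:.2f}, mean velocity: {drift:.2f}")
```

The point of the sketch is only that `step` carries all the predictive power, while `temperature_embedding` is extra information supplied from outside the model.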

Finally, here’s a different angle on the same argument which will probably have some of the philosophers up in arms: any model of the real world with sufficiently high general predictive power will have a model of human values embedded within it. After all, it has to predict the parts of the world in which human values are embedded in the first place - i.e. the parts of which humans are composed, the parts on which human values are implemented. So in principle, it doesn’t even matter what kind of model we use or how it’s represented; as long as the predictive power is good enough, values will be embedded in there, and the main problem will be finding the embedding.

Unsupervised: Natural Abstractions

In this section, we’ll talk about how and why a large class of unsupervised methods might “learn the embedding” of human values, in a useful sense.

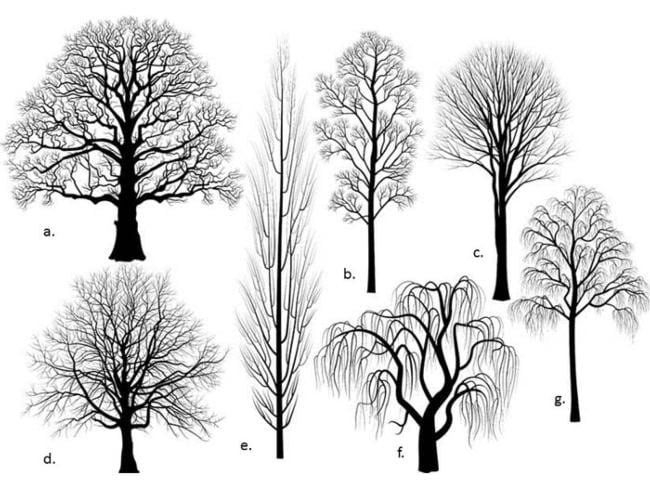

First, notice that basically everything from the previous section still holds if we replace the phrase “human values” with “trees”. A low-level physical model of a forest has everything there is to know about trees embedded within it, in exactly the same way that trees are embedded in the physical forest. However, while there are trees embedded in the low-level model, the embedding itself is nontrivial. Predictive power alone is not sufficient to define trees; the missing part is the embedding of trees within the model.

More generally, whenever we have some high-level abstract object (i.e. higher-level than quantum fields), like trees or human values, a low-level model might have the object embedded within it but not “know” the embedding.

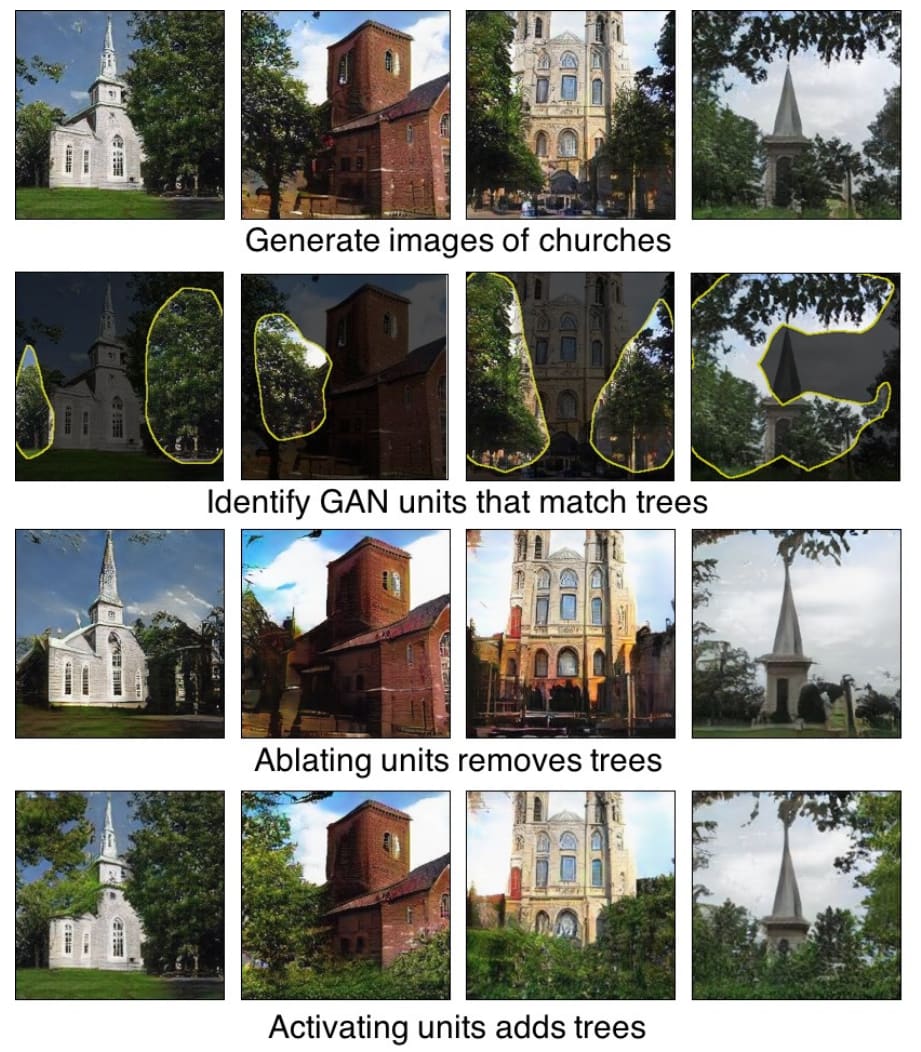

Now for the interesting part: empirically, we have whole classes of neural networks in which concepts like “tree” have simple, identifiable embeddings. These are unsupervised systems, trained for predictive power, yet they apparently “learn the tree-embedding” in the sense that the embedding is simple: it’s just the activation of a particular neuron, a particular channel, or a specific direction in the activation-space of a few neurons.
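To make "a specific direction in the activation-space" concrete, here is a minimal sketch of a difference-of-means linear probe. It is not taken from any particular interpretability paper or library. The activations are synthetic, and the assumption that the concept shifts activations along one fixed direction is built into the hypothetical `fake_activations` helper, so the sketch shows what a simple embedding looks like rather than evidence that real networks have one.

```python
import random

def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def fake_activations(has_tree, rng, dim=8):
    """Hypothetical stand-in for a network's hidden activations.
    Assumption: the concept shifts coordinates 2 and 5; the rest is noise."""
    acts = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    if has_tree:
        acts[2] += 3.0
        acts[5] -= 3.0
    return acts

rng = random.Random(0)
tree = [fake_activations(True, rng) for _ in range(200)]
no_tree = [fake_activations(False, rng) for _ in range(200)]

# The whole "embedding" is one direction plus one threshold.
mu_tree, mu_none = mean(tree), mean(no_tree)
direction = [a - b for a, b in zip(mu_tree, mu_none)]
threshold = (dot(mu_tree, direction) + dot(mu_none, direction)) / 2

def probe(acts):
    """Read the concept off the activations with a single dot product."""
    return dot(acts, direction) > threshold

# Evaluate on fresh samples that were not used to fit the direction.
test_tree = [fake_activations(True, rng) for _ in range(200)]
test_none = [fake_activations(False, rng) for _ in range(200)]
correct = sum(probe(a) for a in test_tree) + sum(not probe(a) for a in test_none)
accuracy = correct / 400
print(f"probe accuracy: {accuracy:.2f}")
```

A physics-level simulation of a forest would admit no probe this simple, which is the contrast the paragraph above is drawing.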

What’s going on here? We know that models optimized for predictive power will not have trivial tree-embeddings in general; low-level physics simulations demonstrate that much. Yet these neural networks do end up with trivial tree-embeddings, so presumably some special properties of the systems make this happen. But those properties can’t be that special, because we see the same thing for a reasonable variety of different architectures, datasets, etc.

Here’s what I think is happening: “tree” is a natural abstraction. More on what that means here [LW · GW], but briefly: abstractions summarize information which is relevant far away. When we summarize a bunch of atoms as “a tree”, we’re throwing away lots of information about the exact positions of molecules/cells within the tree, or about the pattern of bark on the tree’s surface. But information like the exact positions of molecules within the tree is irrelevant to things far away - that signal is all wiped out by the noise of air molecules between the tree and the observer. The flap of a butterfly’s wings may alter the trajectory of a hurricane, but unless we know how all wings of all butterflies are flapping, that tiny signal is wiped out by noise for purposes of our own predictions. Most information is irrelevant to things far away, not in the sense that there’s no causal connection, but in the sense that the signal is wiped out by noise in other unobserved variables.

If a concept is a natural abstraction, that means that the concept summarizes all the information which is relevant to anything far away, and isn’t too sensitive to the exact notion of “far away” involved. That’s what I think is going on with “tree”.
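A minimal numerical sketch of "most information is wiped out by noise in unobserved variables", under strong simplifying assumptions that are mine rather than the post's: each toy "tree" has one shared high-level variable plus many independent low-level details, and a far-away observer sees only an aggregate of the parts plus extra noise.

```python
import random

def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(1)
N_PARTS = 100    # low-level variables per toy "tree"
N_TREES = 2000

summaries, one_detail, far_signal = [], [], []
for _ in range(N_TREES):
    summary = rng.gauss(0.0, 1.0)                        # high-level variable, e.g. overall size
    details = [rng.gauss(0.0, 1.0) for _ in range(N_PARTS)]  # per-part quirks
    parts = [summary + d for d in details]
    # Far-away observation: an aggregate of the parts, plus noise from
    # everything unobserved in between (the "air molecules").
    observed = sum(parts) / N_PARTS + rng.gauss(0.0, 0.5)
    summaries.append(summary)
    one_detail.append(details[0])
    far_signal.append(observed)

corr_summary = pearson(summaries, far_signal)
corr_detail = pearson(one_detail, far_signal)
print(f"far-away signal vs. summary:        {corr_summary:.2f}")
print(f"far-away signal vs. a single detail: {corr_detail:.2f}")
```

The single detail is still causally connected to the observation, but its contribution is swamped by the other details and the intervening noise, while the summary stays strongly predictive.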

Getting back to neural networks: it’s easy to see why a broad range of architectures would end up “using” natural abstractions internally. Because the abstraction summarizes information which is relevant far away, it allows the system to make far-away predictions without passing around massive amounts of information all the time. In a low-level physics model, we don’t need abstractions because we do pass around massive amounts of information all the time, but real systems won’t have anywhere near that capacity any time soon. So for the foreseeable future, we should expect to see real systems with strong predictive power using natural abstractions internally.

With all that in mind, it’s time to drop the tree-metaphor and come back to human values. Are human values a natural abstraction?

If you’ve read Value is Fragile [LW · GW] or Godshatter [LW · GW], then there’s probably a knee-jerk reaction to say “no”. Human values are basically a bunch of randomly-generated heuristics which proved useful for genetic fitness; why would they be a “natural” abstraction? But remember, the same can be said of trees. Trees are a complicated pile of organic spaghetti code [LW · GW], but “tree” is still a natural abstraction, because the concept summarizes all the information from that organic spaghetti pile which is relevant to things far away. In particular, it summarizes anything about one tree which is relevant to far-away trees.

Similarly, the concept of “human” summarizes all the information about one human which is relevant to far-away humans. It’s a natural abstraction.

Now, I don’t think “human values” are a natural abstraction in exactly the same way as “tree” - specifically, trees are abstract objects, whereas human values are properties of certain abstract objects (namely humans). That said, I think it’s pretty obvious that “human” is a natural abstraction in the same way as “tree”, and I expect that humans “have values” in roughly the same way that trees “have branching patterns”. Specifically, the natural abstraction contains a bunch of information, that information approximately factors into subcomponents (including “branching pattern”), and “human values” is one of those information-subcomponents for humans.

I wouldn’t put super-high confidence on all of this, but given the remarkable track record of hackish systems learning natural abstractions in practice, I’d give maybe a 70% chance that a broad class of systems (including neural networks) trained for predictive power end up with a simple embedding of human values. A plurality of my uncertainty is on how to think about properties of natural abstractions. A significant chunk of uncertainty is also on the possibility that natural abstraction is the wrong way to think about the topic altogether, although in that case I’d still assign a reasonable chance that neural networks end up with simple embeddings of human values - after all, no matter how we frame it, they definitely have trivial embeddings of many other complicated high-level objects.

Aside: Microscope AI

Microscope AI [LW · GW] is about studying the structure of trained neural networks, and trying to directly understand their learned internal algorithms, models and concepts. In light of the previous section, there’s an obvious path to alignment where there turn out to be a few neurons (or at least some simple embedding) which correspond to human values, we use the tools of microscope AI to find that embedding, and just like that the alignment problem is basically solved.

Of course it’s unlikely to be that simple in practice, even assuming a simple embedding of human values. I don’t expect the embedding to be quite as simple as one neuron activation, and it might not be easy to recognize even if it were. Part of the problem is that we don’t even know the type signature of the thing we’re looking for - in other words, there are unanswered fundamental conceptual questions here, which make me less-than-confident that we’d be able to recognize the embedding even if it were right under our noses.

That said, this still seems like a reasonably-plausible outcome, and it’s an approach which is particularly well-suited to benefit from marginal theoretical progress.

One thing to keep in mind: this is still only about aligning one AI; success doesn’t necessarily mean a future in which more advanced AIs remain aligned. More on that later.

Supervised/Reinforcement: Proxy Problems

Suppose we collect some kind of data on what humans want, and train a system on that. The exact data and type of learning don’t really matter here; the relevant point is that any data-collection process is always, no matter what, at best a proxy for actual human values. That’s a problem, because Goodhart’s Law [? · GW] plus Hidden Complexity of Wishes [LW · GW]. You’ve probably heard this a hundred times already, so I won’t belabor it.

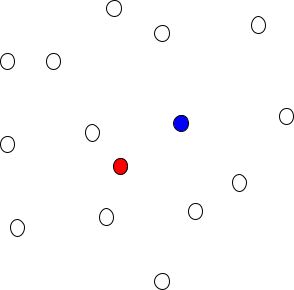

Here’s the interesting possibility: assume the data is crap. It’s so noisy that, even though the data-collection process is just a proxy for real values, the data is consistent with real human values. Visually:

At first glance, this isn’t much of an improvement. Sure, the data is consistent with human values, but it’s consistent with a bunch of other possibilities too - including the real data-collection process (which is exactly the proxy we wanted to avoid in the first place).

But now suppose we do some transfer learning. We start with a trained unsupervised learner, which already has a simple embedding of human values (we hope). We give our supervised learner access to that system during training. Because the unsupervised learner has a simple embedding of human values, the supervised learner can easily score well by directly using that embedded human values model. So, we cross our fingers and hope the supervised learner just directly uses that embedded human values model, and the data is noisy enough that it never “figures out” that it can get better performance by directly modelling the data-collection process instead.

In other words: the system uses an actual model of human values as a proxy for our proxy of human values.

This requires hitting a window - our data needs to be good enough that the system can tell it should use human values as a proxy, but bad enough that the system can’t figure out the specifics of the data-collection process enough to model it directly. This window may not even exist.
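One way to picture the window is with a toy simulation. All modelling choices here are assumptions made for illustration: Gaussian "values", a proxy equal to values plus small systematic quirks of the data-collection process, Gaussian label noise, and "the system can tell" operationalized as a large z-score on the squared-error gap between two candidate models. The sketch asks two questions at each noise level: can the data distinguish the values model from something unrelated, and can it distinguish the proxy from the values model?

```python
import math
import random

def z_score(labels, preds_a, preds_b):
    """z-score for 'model B has lower squared error than model A' on this data."""
    gaps = [(y - a) ** 2 - (y - b) ** 2 for y, a, b in zip(labels, preds_a, preds_b)]
    n = len(gaps)
    mean_gap = sum(gaps) / n
    var_gap = sum((g - mean_gap) ** 2 for g in gaps) / (n - 1)
    return mean_gap / math.sqrt(var_gap / n)

def experiment(noise_sd, n=1000, seed=0):
    rng = random.Random(seed)
    values = [rng.gauss(0.0, 1.0) for _ in range(n)]      # what humans actually want
    quirks = [rng.gauss(0.0, 0.15) for _ in range(n)]     # quirks of data collection
    proxy = [v + q for v, q in zip(values, quirks)]       # the data-collection process
    unrelated = [rng.gauss(0.0, 1.0) for _ in range(n)]   # a model of something else entirely
    labels = [p + rng.gauss(0.0, noise_sd) for p in proxy]  # noisy training data
    values_beats_unrelated = z_score(labels, unrelated, values)
    proxy_beats_values = z_score(labels, values, proxy)
    return values_beats_unrelated, proxy_beats_values

results = {sd: experiment(sd) for sd in (0.1, 3.0, 30.0)}
for sd, (z_values, z_proxy) in results.items():
    print(f"noise_sd={sd:5.1f}  values vs unrelated: z={z_values:6.1f}  "
          f"proxy vs values: z={z_proxy:6.1f}")
```

In this toy, very clean data lets the proxy visibly outperform the values model, very noisy data cannot even single out the values model, and intermediate noise is where the hoped-for window would sit. Whether anything like this window exists for real training setups is exactly the open question.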

(Side note: we can easily adjust this whole story to a situation where we’re training for some task other than “satisfy human values”. In that case, the system would use the actual model of human values to model the Hidden Complexity of whatever task it’s training on.)

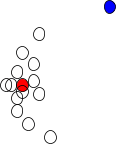

Of course in practice, the vast majority of the things people use as objectives for training AI probably wouldn’t work at all. I expect that they usually look like this:

In other words, most objectives are so bad that even a little bit of data is enough to distinguish the proxy from real human values. But if we assume that there’s some try-it-and-see going on, i.e. people try training on various objectives and keep the AIs which seem to do roughly what the humans want, then it’s maybe plausible that we end up iterating our way to training objectives which “work”. That’s assuming things don’t go irreversibly wrong before then - including not just hostile takeover, but even just development of deceptive behavior, since this scenario does not have any built-in mechanism to detect deception.

Overall, I’d give maybe a 10-20% chance of alignment by this path, assuming that the unsupervised system does end up with a simple embedding of human values. The main failure mode I’d expect, assuming we get the chance to iterate, is deception - not necessarily “intentional” deception, just the system being optimized to look like it’s working the way we want rather than actually working the way we want. It’s the proxy problem again, but this time at the level of humans-trying-things-and-seeing-if-they-work, rather than explicit training objectives.

Alignment in the Long Run

So far, we’ve only talked about one AI ending up aligned, or a handful ending up aligned at one particular time. However, that isn’t really the ultimate goal of AI alignment research. What we really want is for AI to remain aligned in the long run, as we (and AIs themselves) continue to build new and more powerful systems and/or scale up existing systems over time.

I know of two main ways to go from aligning one AI to long-term alignment:

- Make the alignment method/theory very reliable and robust to scale, so we can continue to use it over time as AI advances.

- Align one roughly-human-level-or-smarter AI, then use that AI to come up with better alignment methods/theories.

The alignment-by-default path relies on the latter. Even assuming alignment happens by default, it is unlikely to be highly reliable or robust to scale.

That’s scary. We’d be trusting the AI to align future AIs, without having any sure-fire way to know that the AI is itself aligned. (If we did have a sure-fire way to tell, then that would itself be most of a solution to the alignment problem.)

That said, there’s a bright side: when alignment-by-default works, it’s a best-case scenario. The AI has a basically-correct model of human values, and is pursuing those values. Contrast this to things like IRL variants, which at best learn a utility function which approximates human values (which are probably not themselves a utility function). Or the HCH family of methods, which at best mimic a human with a massive hierarchical bureaucracy at their command, and certainly won’t be any more aligned than that human+bureaucracy would be.

To the extent that alignment of the successor system is limited by alignment of the parent system, that makes alignment-by-default potentially a more promising prospect than IRL or HCH. In particular, it seems plausible that imperfect alignment gets amplified into worse-and-worse alignment as systems design their successors. For instance, a system which tries to look like it’s doing what humans want rather than actually doing what humans want will design a successor which has even better human-deception capabilities. That sort of problem makes “perfect” alignment - i.e. an AI actually pointed at a basically-correct model of human values - qualitatively safer than a system which only manages to be not-instantly-disastrous.

(Side note: this isn’t the only reason why “basically perfect” alignment matters, but I do think it’s the most relevant such argument for one-time alignment/short-term methods, especially on not-very-superhuman AI.)

In short: when alignment-by-default works, we can use the system to design a successor without worrying about amplification of alignment errors. However, we wouldn’t be able to tell for sure whether alignment-by-default had worked or not, and it’s still possible that the AI would make plain old mistakes in designing its successor.

Conclusion

Let’s recap the bold points:

- A low-level model of some humans has everything there is to know about human values embedded within it, in exactly the same way that human values are embedded in physical humans. The embedding, however, is nontrivial. Thus...

- Predictive power alone is not sufficient to define human values. The missing part is the embedding of values within the model. However…

- This also applies if we replace the phrase “human values” with “trees”. Yet we have a whole class of neural networks in which a simple embedding lights up in response to trees. Why?

- Trees are a natural abstraction, and we should expect to see real systems trained for predictive power use natural abstractions internally.

- Human values are a little different from trees (they’re a property of an abstract object rather than an abstract object themselves), but I still expect that a broad class of systems trained for predictive power will end up with simple embeddings of human values (~70% chance).

- Because the unsupervised learner has a simple embedding of human values, a supervised/reinforcement learner can easily score well on values-proxy-tasks by directly using that model of human values. In other words, the system uses an actual model of human values as a proxy for our proxy of human values (~10-20% chance).

- When alignment-by-default works, it’s basically a best-case scenario, so we can safely use the system to design a successor without worrying about amplification of alignment errors (among other things).

Overall, I only give this whole path ~10% chance of working in the short term, and maybe half that in the long term. However, if amplification of alignment errors turns out to be a major limiting factor for long-term alignment, then alignment-by-default is plausibly more likely to work than approaches in the IRL or HCH families.
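For readers who want to see how the headline number relates to the earlier estimates, here is the arithmetic under one assumption: that the overall figure is roughly the product of the two conditional estimates given earlier. The post does not say the ~10% was computed this way, so this is only a consistency check.

```python
# Estimates quoted in the post.
p_simple_embedding = 0.70          # unsupervised learner ends up with a simple values-embedding
p_learner_uses_it = (0.10, 0.20)   # supervised learner uses it, given that the embedding exists

# Assumption: the overall estimate is the product of the two steps.
short_term = tuple(p_simple_embedding * p for p in p_learner_uses_it)
long_term = tuple(p / 2 for p in short_term)   # "maybe half that in the long term"

print("short term: {:.0%} to {:.0%}".format(*short_term))
print("long term:  {:.1%} to {:.1%}".format(*long_term))
```

The product comes out at roughly 7% to 14%, which brackets the ~10% quoted above.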

The limiting factor here is mainly identifying the (probably simple) embedding of human values within a learned model, so microscope AI and general theory development are both good ways to improve the outlook. Also, in the event that we are able to identify a simple embedding of human values in a learned model, it would be useful to have a way to translate that embedding into new systems, in order to align successors.

96 comments

Comments sorted by top scores.

comment by Sammy Martin (SDM) · 2020-08-13T12:45:22.540Z · LW(p) · GW(p)

I think what you've identified here is a weakness in the high-level, classic arguments for AI risk -

Overall, I’d give maybe a 10-20% chance of alignment by this path, assuming that the unsupervised system does end up with a simple embedding of human values. The main failure mode I’d expect, assuming we get the chance to iterate, is deception - not necessarily “intentional” deception, just the system being optimized to look like it’s working the way we want rather than actually working the way we want. It’s the proxy problem again, but this time at the level of humans-trying-things-and-seeing-if-they-work, rather than explicit training objectives.

This failure mode of deceptive alignment seems like it would result most easily from Mesa-optimisation or an inner alignment failure [LW · GW]. Inner Alignment / Misalignment is possibly the key specific mechanism which fills a weakness in the 'classic arguments [LW(p) · GW(p)]' for AI safety - the Orthogonality Thesis, Instrumental Convergence and Fast Progress together implying small separations between AI alignment and AI capability can lead to catastrophic outcomes. The question of why there would be such a damaging, hard-to-detect divergence between goals and alignment needs an answer to have a solid, specific reason to expect dangerous misalignment, and Inner Misalignment is just such a reason.

I think that it should be presented in initial introductions to AI risk alongside those classic arguments, as the specific, technical reason why the specific techniques we use are likely to produce such goal/capability divergence - rather than the general a priori reasons given by the classic arguments.

↑ comment by johnswentworth · 2020-08-13T16:12:25.359Z · LW(p) · GW(p)

Personally, I think a more likely failure mode is just "you get what you measure", as in Paul's write up here [LW · GW]. If we only know how to measure certain things which are not really the things we want, then we'll be selecting for not-what-we-want by default. But I know at least some smart people who think that inner alignment is the more likely problem, so you're in good company.

↑ comment by Sammy Martin (SDM) · 2020-08-13T16:53:35.798Z · LW(p) · GW(p)

‘You get what you measure’ (outer alignment failure) and Mesa optimisers (inner failure) are both potential gap fillers that explain why specifically the alignment/capability divergence initially arises. Whether it’s one or the other, I think the overall point is still that there is this gap in the classic arguments that allows for a (possibly quite high) chance of ‘alignment by default’, for the reasons you give, but there are at least 2 plausible mechanisms that fill this gap. And then I suppose my broader point would be that we should present:

Classic Arguments —> objections to them (capability and alignment often go together, could get alignment by default) —> specific causal mechanisms for misalignment

↑ comment by Ben Pace (Benito) · 2020-08-14T03:20:12.717Z · LW(p) · GW(p)

Am surprised you think that’s the main failure mode. I am fairly more concerned about failure through mesa optimisers taking a treacherous turn.

I’m thinking we will be more likely to find sensible solutions to outer alignment, but have not much real clue about the internals, and then we’ll give them enough optimisation power to build super intelligent unaligned mesa optimisers, and then with one treacherous turn the game will be up.

Why do you think inner alignment will be easier?

↑ comment by johnswentworth · 2020-08-14T16:32:23.081Z · LW(p) · GW(p)

Two arguments here. First, an outside-view argument: inner alignment problems should only crop up on a relatively narrow range of architectures/parameters. Second, an entirely separate inside-view argument: assuming that natural abstractions are a thing makes inner alignment failure look much less likely.

Narrow range argument: inner alignment failure only applies to a specific range of architectures within a specific range of task parameters - for instance, we have to be optimizing for something, and there has to be lots of relevant variables observed only at runtime, and there has to be something like a "training" phase in which we lock-in parameter choices before runtime, and for the more disastrous versions we usually need divergence of the runtime distribution from the training distribution. It's a failure mode which assumes that a whole lot of things look like today's ML pipelines.

On the other hand, the get-what-you-measure problem and its generalizations apply to any architecture, including tool AI, idealized Bayesian utility maximizers (i.e. the infinite data/compute regime), and (less obviously) human-mimicking systems.

Natural abstractions argument: in an inner alignment failure, the outer optimizer is optimizing for X, but the inner optimizer ends up pointed at some rough approximation ~X. But if X is a natural abstraction, then this is far less likely to be a problem; we expect a wide range of predictive systems to all learn a basically-correct notion of X, so there's little reason for an inner optimizer to end up pointed at a rough approximation, especially if we're leveraging transfer learning from some unsupervised learner.

(It's worth asking here why this argument doesn't apply to the divergence of human goals from evolutionary fitness. A human only has ~30k genes, and each one has a fairly simple function - e.g. catalyze one chemical reaction or stabilize a structure or the like. That's nowhere near enough to represent something like evolutionary fitness in the genome, especially when the large majority of those genes are already used for metabolism and body plan and whatnot. Modern ML, on the other hand, already operates in a range [LW · GW] where insufficient degrees of freedom are far less likely to be a problem. Also, I'm currently unsure whether evolutionary fitness is a natural abstraction at all.)

In general, if human values are a natural abstraction, then pointing to values is much harder than "learning" values. That means outer alignment is the problem more than inner alignment.

↑ comment by evhub · 2020-08-14T18:44:38.642Z · LW(p) · GW(p)

Natural abstractions argument: in an inner alignment failure, the outer optimizer is optimizing for X, but the inner optimizer ends up pointed at some rough approximation ~X. But if X is a natural abstraction, then this is far less likely to be a problem; we expect a wide range of predictive systems to all learn a basically-correct notion of X, so there's little reason for an inner optimizer to end up pointed at a rough approximation, especially if we're leveraging transfer learning from some unsupervised learner.

This isn't an argument against deceptive alignment, just proxy alignment—with deceptive alignment, the agent still learns X, it just does so as part of its world model rather than its objective. In fact, I think it's an argument for deceptive alignment, since if X first crops up as a natural abstraction inside of your agent's world model, that raises the question of how exactly it will get used in the agent's objective function—and deceptive alignment is arguably one of the simplest, most natural ways for the base optimizer to get an agent that has information about the base objective stored in its world model to actually start optimizing for that model of the base objective.

↑ comment by johnswentworth · 2020-08-14T19:32:57.995Z · LW(p) · GW(p)

I mostly agree with this. I don't view deception as an inner alignment problem, though - for instance, it's an issue in any approval-based setup even without an inner optimizer showing up. To the extent that it is an inner alignment issue, it involves generalization failure from the training distribution, which I also generally consider an outer alignment problem (i.e. training on a distribution which differs from the deploy environment generally means the system is not outer aligned, unless the architecture is somehow set up to make the distribution shift irrelevant).

A useful criterion here: would the problem still happen if we just optimized over all the parameters simultaneously at runtime, rather than training offline first? If the problem would still happen, then it's not really an inner alignment problem (at least not in the usual mesa-optimization sense).

↑ comment by evhub · 2020-08-14T21:05:33.712Z · LW(p) · GW(p)

To the extent that it is an inner alignment issue, it involves generalization failure from the training distribution, which I also generally consider an outer alignment problem (i.e. training on a distribution which differs from the deploy environment generally means the system is not outer aligned, unless the architecture is somehow set up to make the distribution shift irrelevant).

A useful criterion here: would the problem still happen if we just optimized over all the parameters simultaneously at runtime, rather than training offline first? If the problem would still happen, then it's not really an inner alignment problem (at least not in the usual mesa-optimization sense).

That's certainly not how I would define inner alignment. In “Risks from Learned Optimization,” we just define it as the problem of aligning the mesa-objective (if one exists) with the base objective, which is entirely independent of whether or not there's any sort of distinction between the training and deployment distributions and is fully consistent with something like online learning as you're describing it.

↑ comment by johnswentworth · 2020-08-14T21:36:54.949Z · LW(p) · GW(p)

The way I understood it, the main reason a mesa-optimizer shows up in the first place is that some information is available at runtime which is not available during training, so some processing needs to be done at runtime to figure out the best action given the runtime-info. The mesa-optimizer handles that processing. If we directly optimize over all parameters at runtime, then there's no place for that to happen.

What am I missing?

↑ comment by evhub · 2020-08-14T22:30:13.569Z · LW(p) · GW(p)

Let's consider the following online learning setup:

At each timestep $t$, $\pi_\theta$ takes action $a_t$ and receives reward $r_t$. Then, we perform the simple policy gradient update

$$\theta \leftarrow \theta + r_t \nabla_\theta \log P(a_t \mid \pi_\theta)$$

Now, we can ask the question, would $\pi_\theta$ be a mesa-optimizer? The first thing that's worth noting is that the above setup is precisely the standard RL training setup; the only difference is that there's no deployment stage. What that means, though, is that if standard RL training produces a mesa-optimizer, then this will produce a mesa-optimizer too, because the training process isn't different in any way whatsoever. If $\pi_\theta$ is acting in a diverse environment that requires search to be able to be solved effectively, then $\pi_\theta$ will still need to learn to do search; the fact that there won't ever be a deployment stage in the future is irrelevant to $\pi_\theta$'s current training dynamics (unless $\pi_\theta$ is deceptive and knows there won't be a deployment stage; that's the only situation where it might be relevant).

Given that, we can ask the question of whether $\pi_\theta$, if it's a mesa-optimizer, is likely to be misaligned, and in particular whether it's likely to be deceptive. Again, in terms of proxy alignment, the training process is exactly the same, so the picture isn't any different at all: if there are simpler, easier-to-optimize-for proxies, then $\pi_\theta$ is likely to learn those instead of the true base objective. Like I mentioned previously, however, deceptive alignment is the one case where it might matter that you're doing online learning, since if the model knows that, it might do different things based on that fact. However, there are still lots of reasons why a model might be deceptive even in an online learning setup; for example, it might expect better opportunities for defection in the future, and thus want to prevent being modified now so that it can defect when it'll be most impactful.
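To make the online setup in this comment concrete (act, observe a reward, take one policy-gradient step, and never enter a separate deployment phase), here is a minimal sketch on a two-armed bandit. Everything in it is an illustrative assumption rather than anything from the thread: the softmax-over-logits policy, the `online_reinforce` name, the reward table, and the step size `lr`.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def online_reinforce(rewards, steps=500, lr=0.5, seed=0):
    """Online REINFORCE on a bandit: one gradient step after every action.

    `rewards[a]` is the (deterministic, hypothetical) reward for arm `a`.
    There is no train/deploy split: the policy is updated for as long as it acts.
    """
    rng = random.Random(seed)
    theta = [0.0 for _ in rewards]  # one logit per arm
    for _ in range(steps):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = rewards[action]
        # For a softmax policy, d/d(theta_i) log pi(action) = 1[i == action] - probs[i].
        for i in range(len(theta)):
            grad_log_prob = (1.0 if i == action else 0.0) - probs[i]
            theta[i] += lr * reward * grad_log_prob
    return softmax(theta)


final_probs = online_reinforce(rewards=[0.0, 1.0])
```

In this toy case the policy simply concentrates on the rewarded arm. It is far too small for anything like learned search to appear; the point is only that the update loop is the ordinary training loop, run indefinitely.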

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-14T23:20:32.032Z · LW(p) · GW(p)

When I say "optimize all the parameters at runtime", I do not mean "take one gradient step in between each timestep". I mean, at each timestep, fully optimize all of the parameters. Optimize all the way to convergence before every single action.

Think back to the central picture of mesa-optimization (at least as I understand it). The mesa-optimizer shows up because some data is only available at runtime, not during training, so it has to be processed at runtime using parameters selected during training. In the online RL setup you sketch here, "runtime" for mesa-optimization purposes is every time the system chooses its action - i.e. every timestep - and "training" is all the previous timesteps. A mesa-optimizer should show up if, at every timestep, some relevant new data comes in and the system has to process that data in order to choose the optimal action, using parameters inherited from previous timesteps.

Now, suppose we fully optimize all of the parameters at every timestep. The objective function for this optimization would presumably be $\sum_t r_t \log P(a_t \mid \pi_\theta)$, with the sum taken over all previous data points, since that's what the RL setup is approximating.

This optimization would probably still "find" the same mesa-optimizer as before, but now it looks less like a mesa-optimizer problem and more like an outer alignment problem: that objective function is probably not actually the thing we want. The fact that the true optimum for that objective function probably has our former "mesa-optimizer" embedded in it is a pretty strong signal that that objective function itself is not outer aligned; the true optimum of that objective function is not really the thing we want.

Does that make sense?

Replies from: evhub↑ comment by evhub · 2020-08-14T23:50:44.237Z · LW(p) · GW(p)

The RL process is actually optimizing $\mathbb{E}\left[\sum_t r_t\right]$; the log just comes from the REINFORCE trick. Regardless, I'm not sure I understand what you mean by optimizing fully to convergence at each timestep: convergence is a limiting property, so I don't know what it could mean to do it for a single timestep. Perhaps you mean just taking the optimal policy $\pi^*$ such that $\pi^* = \operatorname{argmax}_\pi \mathbb{E}\left[\sum_t r_t \mid \pi\right]$? In that case, that is in fact the definition of outer alignment I've given in the past [AF · GW], so I agree that whether $\pi^*$ is aligned or not is an outer alignment question.

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-15T02:25:45.483Z · LW(p) · GW(p)

Sure, the optimal policy $\pi^*$ works for what I'm saying, assuming that sum-over-time only includes the timesteps taken thus far. In that case, I'm saying that either:

- the mesa optimizer doesn't appear in $\pi^*$, in which case the problem is fixed by fully optimizing everything at every timestep (i.e. by using $\pi^*$), or

- the mesa optimizer does appear in $\pi^*$, in which case the problem was really an outer alignment issue all along.
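As a rough sketch of what "fully optimize everything at every timestep" could look like in a toy setting: before each action, the parameter is re-chosen from scratch by exhaustive search against all data seen so far, so nothing is inherited from earlier optimization. The threshold policy, the `reward` function, the candidate grid, and the assumption that reward can be re-evaluated on past observations are all hypothetical simplifications introduced here.

```python
def reward(obs, action):
    # Hypothetical toy reward: action 1 is correct when obs >= 0.6, otherwise action 0.
    correct = 1 if obs >= 0.6 else 0
    return 1.0 if action == correct else 0.0


def policy(threshold, obs):
    # One-parameter policy: choose action 1 when the observation clears the threshold.
    return 1 if obs >= threshold else 0


def fully_optimize(history, candidates):
    # Exhaustive search stands in for "optimize all the way to convergence":
    # pick the parameter with the highest total reward over every past observation.
    return max(
        candidates,
        key=lambda th: sum(reward(o, policy(th, o)) for o in history),
    )


candidates = [i / 10 for i in range(11)]
observations = [0.05, 0.95, 0.55, 0.65, 0.35, 0.75, 0.15, 0.85]

history = []
chosen = []
for obs in observations:
    theta = fully_optimize(history, candidates)  # refit from scratch before acting
    chosen.append(theta)
    action = policy(theta, obs)  # the action taken with the freshly optimized parameter
    history.append(obs)

final_theta = fully_optimize(history, candidates)
```

Here the only thing that determines behaviour is the objective being maximized over the data so far. If that optimum does something unwanted, the fault lies with the objective, which is the sense in which the second bullet calls it an outer alignment issue.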

↑ comment by Ben Pace (Benito) · 2020-08-14T17:50:31.568Z · LW(p) · GW(p)

Thank you for being so clear.

On 2, I’m surprised if you think that natural selection isn’t a natural abstraction but that eudaemonia is. (If we’re getting an AGI singleton that wants to fully learn our values.)

Secondly I’ll say that if we do not understand its representation of X or X-prime, and if a small difference will be catastrophic, then that will also lead to doom.

On 1: I think that’s quite plausible? Like, I assign something in the range of 20-60% probability to that. How much does it have to change for you to feel much safer about inner alignment?

(I’m also not that clear it only applies to this situation. Perhaps I’m mistaken, but in my head subsystem alignment and robust delegation both have this property of “build a second optimiser that helps achieve your goals” and in both cases passing on the true utility function seems very hard.)

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-14T19:58:50.066Z · LW(p) · GW(p)

On 2, I’m surprised if you think that natural selection isn’t a natural abstraction but that eudaemonia is.

Currently, my first-pass check for "is this probably a natural abstraction?" is "can humans usually figure out what I'm talking about from a few examples, without a formal definition?". For human values, the answer seems like an obvious "yes". For evolutionary fitness... nonobvious. Humans usually get it wrong without the formal definition.

Also, natural abstractions in general involve summarizing the information from one chunk of the universe which is relevant "far away". For human values, the relevant chunk of the universe is the human - i.e. the information about human values is all embedded in the physical human. But for evolutionary fitness, that's not the case - an organism does not contain all the information relevant to calculating its evolutionary fitness. So it seems like there's some qualitative difference there - like, human values "live" in humans, but fitness doesn't "live" in organisms in the same way. I still don't feel like I fully understand this, though.

On 1: I think that’s quite plausible? Like, I assign something in the range of 20-60% probability to that.

Sure, inner alignment is a problem which mainly applies to architectures similar to modern ML, and modern ML architecture seems like the most-likely route to AGI.

It still feels like outer alignment is a much harder problem, though. The very fact that inner alignment failure is so specific to certain architectures is evidence that it should be tractable. For instance, we can avoid most inner alignment problems by just optimizing all the parameters simultaneously at run-time. That solution would be too expensive in practice, but the point is that inner alignment is hard in a "we need to find more efficient algorithms" sort of way, not a "we're missing core concepts and don't even know how to solve this in principle" sort of way. (At least for mesa-optimization; I agree that there are more general subsystem alignment/robust delegation issues which are potentially conceptually harder.)

Outer alignment, on the other hand, we don't even know how to solve in principle, on any architecture whatsoever, even with arbitrary amounts of compute and data. That's why I expect it to be a bottleneck.

Replies from: Vaniver, Benito↑ comment by Vaniver · 2020-08-16T04:58:49.412Z · LW(p) · GW(p)

Currently, my first-pass check for "is this probably a natural abstraction?" is "can humans usually figure out what I'm talking about from a few examples, without a formal definition?". For human values, the answer seems like an obvious "yes". For evolutionary fitness... nonobvious. Humans usually get it wrong without the formal definition.

Hmm, presumably you're not including something like "internal consistency" in the definition of 'natural abstraction'. That is, humans who aren't thinking carefully about something will think there's an imaginable object even if any attempts to actually construct that object will definitely lead to failure. (For example, Arrow's Impossibility Theorem comes to mind; a voting rule that satisfies all of those desiderata feels like a 'natural abstraction' in the relevant sense, even though there aren't actually any members of that abstraction.)

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-16T14:51:56.374Z · LW(p) · GW(p)

Oh this is fascinating. This is basically correct; a high-level model space can include models which do not correspond to any possible low-level model.

One caveat: any high-level data or observations will be consistent with the true low-level model. So while there may be natural abstract objects which can't exist, and we can talk about those objects, we shouldn't see data supporting their existence - e.g. we shouldn't see a real-world voting system behaving like it satisfies all of Arrow's desiderata.

↑ comment by Ben Pace (Benito) · 2020-08-15T00:37:24.866Z · LW(p) · GW(p)

Regarding your first pass check for naturalness being whether humans can understand it: strike me thoroughly puzzled. Isn't one of the core points of the reductionism sequence that, while "Thor caused the thunder" sounds simpler to a human than Maxwell's equations (because the words fit naturally into a human psychology), one of them is much "simpler" in an absolute sense than the other (and is in fact true)?

Regarding your point about the human values living in humans while the organism's fitness is living partly in the environment, nothing immediately comes to mind to say here, but I agree it's a very interesting question.

The things you say about inner/outer alignment hold together quite sensibly. I am surprised to hear you say that mesa optimisers can be avoided by just optimizing all the parameters simultaneously at run-time. That doesn't match my understanding of mesa optimisation; I thought the mesa optimisers would definitely arise during the training. But if you're right that it's trivial-but-expensive to remove them there, then I agree it's intuitively a much easier problem than I had realised.

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-15T02:41:23.380Z · LW(p) · GW(p)

Regarding your first pass check for naturalness being whether humans can understand it: strike me thoroughly puzzled. Isn't one of the core points of the reductionism sequence that, while "Thor caused the thunder" sounds simpler to a human than Maxwell's equations (because the words fit naturally into a human psychology), one of them is much "simpler" in an absolute sense than the other (and is in fact true)?

Despite humans giving really dumb verbal explanations (like "Thor caused the thunder"), we tend to be pretty decent at actually predicting things in practice.

The same applies to natural abstractions. If I ask people "is 'tree' a natural category?" then they'll get into some long philosophical debate. But if I show someone five pictures of trees, then show them five other pictures which are not all trees, and ask them which of the second set are similar to the first set, they'll usually have no trouble at all picking the trees in the second set.
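The five-pictures test can be mimicked for a learned system with a nearest-centroid check: average the embeddings of the example images, then pick whichever candidates land close to that average. The sketch below assumes some pretrained model has already mapped each picture to a feature vector; the two-dimensional vectors, the labels, and the `radius` cutoff are made up purely for illustration.

```python
def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pick_similar(examples, candidates, radius):
    """Return the names of candidates whose embedding lies within `radius` of the example centroid."""
    center = centroid(examples)
    return [
        name
        for name, vec in candidates.items()
        if squared_distance(vec, center) <= radius ** 2
    ]


# Hypothetical embeddings of five tree pictures (the "first set").
tree_examples = [(0.9, 0.8), (1.0, 0.9), (0.8, 1.0), (1.1, 1.0), (0.9, 1.1)]

# Hypothetical embeddings of the "second set", only some of which are trees.
second_set = {
    "oak": (1.0, 1.0),
    "pine": (0.85, 0.9),
    "car": (-1.0, 0.2),
    "lamp post": (0.1, -0.9),
    "cloud": (-0.5, -0.5),
}

picked = pick_similar(tree_examples, second_set, radius=0.5)
```

If "tree" is a natural abstraction in the sense discussed here, a handful of examples should be enough to single out the right region of a learned embedding space, which is what this check probes. It says nothing about whether any particular model's embeddings actually behave this way.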

I thought the mesa optimisers would definitely arise during the training

If you're optimizing all the parameters simultaneously at runtime, then there is no training. Whatever parameters were learned during "training" would just be overwritten by the optimal values computed at runtime.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2020-08-15T07:05:04.569Z · LW(p) · GW(p)

Despite humans giving really dumb verbal explanations (like "Thor caused the thunder"), we tend to be pretty decent at actually predicting things in practice.

Mm, quantum mechanics much? I do not think I can reliably tell you which experiments are in the category “real” and the category “made up”, even though it’s a very simple category mathematically. But I don’t expect you’re saying this, I just am still confused what you are saying.

This reminds me of Oli’s question here [LW · GW], which ties into Abram’s “point of view from somewhere [LW · GW]” idea. I feel like I expect ML-systems to take the point of view of the universe, and not learn our natural categories.

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-15T16:35:36.798Z · LW(p) · GW(p)

I'm talking everyday situations. Like "if I push on this door, it will open" or "by next week my laundry hamper will be full" or "it's probably going to be colder in January than June". Even with quantum mechanics, people do figure out the pattern and build some intuition, but they need to see a lot of data on it first and most people never study it enough to see that much data.

In places where the humans in question don't have much first-hand experiential data, or where the data is mostly noise, that's where human prediction tends to fail. (And those are also the cases where we expect learning systems in general to fail most often, and where we expect the system's priors to matter most.) Another way to put it: humans' priors aren't great, but in most day-to-day prediction problems we have more than enough data to make up for that.

comment by Steven Byrnes (steve2152) · 2021-12-21T15:23:58.778Z · LW(p) · GW(p)

I’ll set aside what happens “by default” and focus on the interesting technical question of whether this post is describing a possible straightforward-ish path to aligned superintelligent AGI.

The background idea is “natural abstractions”. This is basically a claim that, when you use an unsupervised world-model-building learning algorithm, its latent space tends to systematically learn some patterns rather than others. Different learning algorithms will converge on similar learned patterns, because those learned patterns are a property of the world, not an idiosyncrasy of the learning algorithm. For example: Both human brains and ConvNets seem to have a “tree” abstraction; neither human brains nor ConvNets seem to have a “head or thumb but not any other body part” concept.

I kind of agree with this. I would say that the patterns are a joint property of the world and an inductive bias. I think the relevant inductive biases in this case are something like: (1) “patterns tend to recur”, (2) “patterns tend to be localized in space and time”, and (3) “patterns are frequently composed of multiple other patterns, which are near to each other in space and/or time”, and maybe other things. The human brain definitely is wired up to find patterns with those properties, and ConvNets to a lesser extent. These inductive biases are evidently very useful, and I find it very likely that future learning algorithms will share those biases, even more than today’s learning algorithms. So I’m basically on board with the idea that there may be plenty of overlap between the world-models of various different unsupervised world-model-building learning algorithms, one of which is the brain.

(I would also add that I would expect “natural abstractions” to be a matter of degree, not binary. We can, after all, form the concept “head or thumb but not any other body part”. It would just be extremely low on the list of things that would pop into our head when trying to make sense of something we’re looking at. Whereas a “prominent” concept like “tree” would pop into our head immediately, if it were compatible with the data. I think I can imagine a continuum of concepts spanning the two. I’m not sure if John would agree.)

Next, John suggests that “human values” may be such a “natural abstraction”, such that “human values” may wind up a “prominent” member of an AI's latent space, so to speak. Then when the algorithms get a few labeled examples of things that are or aren’t “human values”, they will pattern-match them to the existing “human values” concept. By the same token, let’s say you’re with someone who doesn’t speak your language, but they call for your attention and point to two power outlets in succession. You can bet that they’re trying to bring your attention to the prominent / natural concept of “power outlets”, not the un-prominent / unnatural concept of “places that one should avoid touching with a screwdriver”.

Do I agree? Well, “human values” is a tricky term. Maybe I would split it up. One thing is “Human values as defined and understood by an ideal philosopher after The Long Reflection”. This is evidently not much of a “natural abstraction”, at least in the sense that, if I saw ten examples of that thing, I wouldn’t even know it. I just have no idea what that thing is, concretely.

Another thing is “Human values as people use the term”. In this case, we don’t even need the natural abstraction hypothesis! We can just ensure that the unsupervised world-modeler incorporates human language data in its model. Then it would have seen people use the phrase “human values”, and built corresponding concepts. And moreover, we don’t even necessarily need to go hunting around in the world-model to find that concept, or to give labeled examples. We can just utter the words “human values”, and see what neurons light up! I mean, sure, it probably wouldn’t work! But the labeled examples thing probably wouldn’t work either!

Unfortunately, “Human values as people use the term” is a horrific mess of contradictory and incoherent things. An AI that maximizes “‘human values’ as those words are used in the average YouTube video” does not sound to me like an AI that I want to live with. I would expect lots of performative displays of virtue and in-group signaling, little or no making-the-world-a-better-place.

In any case, it seems to me that the big kernel of truth in this post is that we can and should think of future AGI motivation systems as intimately involving abstract concepts, and that in particular we can and should take advantage of safety-advancing abstract concepts like “I am advancing human flourishing”, “I am trying to do what my programmer wants me to try to do”, “I am following human norms” [AF · GW], or whatever. In fact I wrote a post advocating that just a few days ago [LW · GW], and think of that kind of thing as a central ingredient in all the AGI safety stories that I find most plausible.

Beyond that kernel of truth, I think a lot more work, beyond what’s written in the post, would be needed to build a good system that actually does something we want. In particular, I think we have much more work to do on choosing and pointing to the right concepts (cf. “first-person problem” [LW · GW]), detecting when concepts break down because we’re out of distribution (cf. “model splintering” [LW · GW]), sandbox testing protocols, and so on. The post says 10% chance that things work out, which seems much too high to me. But more importantly, if things work out along these lines, I think it would be because people figured out all those things I mentioned, by trial-and-error, during slow takeoff. Well in that case, I say: let's just figure those things out right now!

Replies from: johnswentworth↑ comment by johnswentworth · 2021-12-21T16:48:17.140Z · LW(p) · GW(p)

Next, John suggests that “human values” may be such a “natural abstraction”, such that “human values” may wind up a “prominent” member of an AI's latent space, so to speak.

I'm fairly confident that the inputs to human values are natural abstractions - i.e. the "things we care about" are things like trees, cars, other humans, etc, not low-level quantum fields or "head or thumb but not any other body part". (The "head or thumb" thing is a great example, by the way). I'm much less confident that human values themselves are a natural abstraction, for exactly the same reasons you gave.

comment by Wei Dai (Wei_Dai) · 2020-08-13T03:28:25.951Z · LW(p) · GW(p)

To help me check my understanding of what you're saying, we train an AI on a bunch of videos/media about Alice's life, in the hope that it learns an internal concept of "Alice's values". Then we use SL/RL to train the AI, e.g., give it a positive reward whenever it does something that the supervisor thinks benefits Alice's values. The hope here is that the AI learns to optimize the world according to its internal concept of "Alice's values" that it learned in the previous step. And we hope that its concept of "Alice's values" includes the idea that Alice wants AIs, including any future AIs, to keep improving their understanding of Alice's values and to serve those values, and that this solves alignment in the long run.

Assuming the above is basically correct, this (in part) depends on the AI learning a good enough understanding of "improving understanding of Alice's values" in step 1. This in turn (assuming "improving understanding of Alice's values" involves "using philosophical reasoning to solve various confusions related to understanding Alice's values, including Alice's own confusions") depends on the AI being able to learn a correct or good enough concept of "philosophical reasoning" from unsupervised training. Correct?

If AI can learn "philosophical reasoning" from unsupervised training, GPT-N should be able to do philosophy (e.g., solve open philosophical problems), right?

Replies from: johnswentworth, John_Maxwell_IV↑ comment by johnswentworth · 2020-08-13T05:34:48.571Z · LW(p) · GW(p)

There's a lot of moving pieces here, so the answer is long. Apologies in advance.

I basically agree with everything up until the parts on philosophy. The point of divergence is roughly here:

assuming "improving understanding of Alice's values" involves "using philosophical reasoning to solve various confusions related to understanding Alice's values, including Alice's own confusions"

I do think that resolving certain confusions around values involves solving some philosophical problems. But just because the problems are philosophical does not mean that they need to be solved by philosophical reasoning.

The kinds of philosophical problems I have in mind are things like:

- What is the type signature of human values?

- What kind of data structure naturally represents human values?

- How do human values interface with the rest of the world?

In other words, they're exactly the sort of questions for which "utility function" and "Cartesian boundary" are answers, but probably not the right answers.

How could an AI make progress on these sorts of questions, other than by philosophical reasoning?

Let's switch gears a moment and talk about some analogous problems:

- What is the type signature of the concept of "tree"?

- What kind of data structure naturally represents "tree"?

- How do "trees" (as high-level abstract objects) interface with the rest of the world?

Though they're not exactly the same questions, these are philosophical questions of a qualitatively similar sort to the questions about human values.

Empirically, AIs already do a remarkable job reasoning about trees, and finding answers to questions like those above, despite presumably not having much notion of "philosophical reasoning". They learn some data structure for representing the concept of tree, and they learn how the high-level abstract "tree" objects interact with the rest of the (lower-level) world. And it seems like such AIs' notion of "tree" tends to improve as we throw more data and compute at them, at least over the ranges explored to date.

In other words: empirically, we seem to be able to solve philosophical problems to a surprising degree by throwing data and compute at neural networks. Well, at least "solve" in the sense that the neural networks themselves seem to acquire solutions to the problems... not that either the neural nets or the humans gain much understanding of such problems in general.

Going up a meta level: why would this be the case? Why would solutions to philosophical problems end up embedded in random learning algorithms, without either the algorithms or the humans having a general understanding of the problems?

Well, presumably neural nets end up with a notion of "tree" for much the same reason that humans end up with a notion of "tree": it's a useful concept. We don't have a precise mathematical theory of when or why it's useful (though I do hopefully have some groundwork [LW · GW] for that), but we can see instrumental convergence to a useful concept even without understanding why the concept is useful.

In short: solutions to certain philosophical problems are probably instrumentally convergent, so the solutions will probably pop up in a fairly broad range of systems despite neither the systems nor their designers understanding the philosophical problems.

Now, so far this has talked about why solutions to philosophical problems would pop up in one AI. But does that help one AI to improve its own solutions? Depends on the setup, but at the very least it offers an AI a possible path to improving its solutions to such philosophical problems without going through philosophical reasoning.

Finally, I'll note that if humans want to be able to recognize an AI's solutions to philosophical problems, e.g. decode a model of human values from the weights of a neural net, then we'll probably need to make some philosophical/mathematical progress ourselves in order to do that reliably. After all, we don't even know the type signature of the thing we're looking for or a data structure with which to represent it.

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2020-08-15T19:31:02.816Z · LW(p) · GW(p)

So similarly, a human could try to understand Alice's values in two ways. The first, equivalent to what you describe here for AI, is to just apply whatever learning algorithm their brain uses when observing Alice, and form an intuitive notion of "Alice's values". And the second is to apply explicit philosophical reasoning to this problem. So sure, you can possibly go a long way towards understanding Alice's values by just doing the former, but is that enough to avoid disaster? (See Two Neglected Problems in Human-AI Safety [LW · GW] for the kind of disaster I have in mind here.)

(I keep bringing up metaphilosophy but I'm pretty much resigned to living in a part of the multiverse where civilization will just throw the dice and bet [LW · GW] on AI safety not depending on solving it. What hope is there for our civilization to do what I think is the prudent thing, when no professional philosophers, even ones in EA who are concerned about AI safety, ever talk about it?)

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-15T20:21:56.516Z · LW(p) · GW(p)

I mostly agree with you here. I don't think the chances of alignment by default are high. There are marginal gains to be had, but to get a high probability of alignment in the long term we will probably need actual understanding of the relevant philosophical problems.

↑ comment by John_Maxwell (John_Maxwell_IV) · 2020-08-15T11:45:15.444Z · LW(p) · GW(p)

My take is that corrigibility is sufficient to get you an AI that understands what it means to "keep improving their understanding of Alice's values and to serve those values". I don't think the AI needs to play the "genius philosopher" role, just the "loyal and trustworthy servant" role. A superintelligent AI which plays that role should be able to facilitate a "long reflection" where flesh and blood humans solve philosophical problems.

(I also separately think unsupervised learning systems could in principle make philosophical breakthroughs. Maybe one already has.)

comment by Donald Hobson (donald-hobson) · 2020-08-15T21:42:01.226Z · LW(p) · GW(p)

In light of the previous section, there’s an obvious path to alignment where there turns out to be a few neurons (or at least some simple embedding) which correspond to human values, we use the tools of microscope AI to find that embedding, and just like that the alignment problem is basically solved.

This is the part I disagree with. The network does recognise trees, or at least green things (given that the grass seems pretty brown in the low tree pic).

Extrapolating this, I expect the AI might well have neurons that correspond roughly to human values, on the training data. Within the training environment, human values, amount of dopamine in human brain, curvature of human lips (in smiles), number of times the reward button is pressed, and maybe even amount of money in human bank account might all be strongly correlated.

You will have successfully narrowed human values down to within the range of things that are strongly correlated with human values in the training environment. If you take this signal and apply enough optimization pressure, you are going to get the equivalent of a universe tiled with tiny smiley faces.
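The worry in this comment can be shown with a toy calculation: a proxy signal that tracks the true quantity almost perfectly on the training data can still rank an off-distribution outcome highest once it is used as an optimization target. All numbers, the outcome names, and the choice of "smile count" as the proxy are invented for illustration; this sketches the concern as stated, not a claim about what a real network would learn.

```python
def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical training data: (true value, proxy signal such as smile count).
training = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
train_corr = pearson([v for v, _ in training], [p for _, p in training])

# Outcomes reachable under strong optimization, including one far off-distribution.
outcomes = {
    "ordinary good outcome": (5.0, 5.1),
    "universe tiled with smiley faces": (0.0, 1000.0),
}

best_by_proxy = max(outcomes, key=lambda name: outcomes[name][1])
best_by_value = max(outcomes, key=lambda name: outcomes[name][0])
```

On the training set the two quantities are nearly indistinguishable, so nothing in that data tells the optimizer which one it has latched onto. The two rankings only come apart once off-distribution outcomes are on the table.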

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-16T15:09:39.321Z · LW(p) · GW(p)

Note that the examples in the OP are from an adversarial generative network. If its notion of "tree" were just "green things", the adversary should be quite capable of exploiting that.

You will have successfully narrowed human values down to within the range of things that are strongly correlated with human values in the training environment. If you take this signal and apply enough optimization pressure, you are going to get the equivalent of a universe tiled with tiny smiley faces.

The whole point of the "natural abstractions" section of the OP is that I do not think this will actually happen. Off-distribution behavior is definitely an issue for the "proxy problems" section of the post, but I do not expect it to be an issue for identifying natural abstractions.

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2020-08-16T16:55:00.703Z · LW(p) · GW(p)

Note that the examples in the OP are from an adversarial generative network. If its notion of "tree" were just "green things", the adversary should be quite capable of exploiting that.

In order for the network to produce good pictures, the concept of "tree" must be hidden in there somewhere, but it could be hidden in a complicated and indirect manner. I am questioning whether the particular single node selected by the researchers encodes the concept of "tree" or "green thing".

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-16T17:34:54.257Z · LW(p) · GW(p)

Ah, I see. You're saying that the embedding might not actually be simple. Yeah, that's plausible.

comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-10-06T14:57:29.491Z · LW(p) · GW(p)

Supervised/Reinforcement: Proxy Problems

Another plausible approach to deal with the proxy problems might be to do something like unsupervised clustering/learning on the representations of multiple systems that we'd have good reasons to believe encode the (same) relevant values - e.g. when exposed to the same stimulus (including potentially multiple humans and multiple AIs). E.g. for some relevant recent proof-of-concept works: Identifying Shared Decodable Concepts in the Human Brain Using Image-Language Foundation Models, Finding Shared Decodable Concepts and their Negations in the Brain, AlignedCut: Visual Concepts Discovery on Brain-Guided Universal Feature Space, Rosetta Neurons: Mining the Common Units in a Model Zoo, Cross-GAN Auditing: Unsupervised Identification of Attribute Level Similarities and Differences between Pretrained Generative Models, Quantifying stimulus-relevant representational drift using cross-modality contrastive learning. Automated interpretability (e.g. https://multimodal-interpretability.csail.mit.edu/maia/) could also be useful here. This might also work well with concepts like corrigibility/instruction following [LW · GW] and arguments about the 'broad basin of attraction' and convergence [LW · GW] for corrigibility.

Replies from: bogdan-ionut-cirstea↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-11-13T16:44:32.656Z · LW(p) · GW(p)

A few additional relevant recent papers: Sparse Autoencoders Reveal Universal Feature Spaces Across Large Language Models, Towards Universality: Studying Mechanistic Similarity Across Language Model Architectures.

Similarly, the arguments in this post and e.g. in Robust agents learn causal world models seem to me to suggest that we should probably also expect something like universal (approximate) circuits, whose discovery it might be feasible to automate using a procedure similar to the one demoed in Sparse Feature Circuits: Discovering and Editing Interpretable Causal Graphs in Language Models.

Later edit: And I expect unsupervised clustering/learning could help in a similar fashion to the argument in the parent comment (applied to features), when applied to the feature circuits(/graphs).

comment by Rohin Shah (rohinmshah) · 2020-08-18T22:24:36.880Z · LW(p) · GW(p)

Planned summary for the Alignment Newsletter:

I liked the author’s summary, so I’ve reproduced it with minor stylistic changes:

A low-level model of some humans has everything there is to know about human values embedded within it, in exactly the same way that human values are embedded in physical humans. The embedding, however, is nontrivial. Thus, predictive power alone is not sufficient to define human values. The missing part is the embedding of values within the model.

However, this also applies if we replace the phrase “human values” with “trees”. Yet we have a whole class of neural networks in which a simple embedding lights up in response to trees. This is because trees are a natural abstraction, and we should expect to see real systems trained for predictive power use natural abstractions internally.

Human values are a little different from trees: they’re a property of an abstract object (humans) rather than an abstract object themselves. Nonetheless, the author still expects that a broad class of systems trained for predictive power will end up with simple embeddings of human values (~70% chance).

Since an unsupervised learner has a simple embedding of human values, a supervised/reinforcement learner can easily score well on values-proxy-tasks by directly using that model of human values. In other words, the system uses an actual model of human values as a proxy for our proxy of human values (~10-20% chance). This is what is meant by _alignment by default_.

When this works, it’s basically a best-case scenario, so we can safely use the system to design a successor without worrying about amplification of alignment errors (among other things).

Planned opinion:

I broadly agree with the perspective in this post: in particular, I think we really should have more optimism because of the tendency of neural nets to learn “natural abstractions”. There is structure and regularity in the world and neural nets often capture it (despite being able to memorize random noise); if we train neural nets on a bunch of human-relevant data it really should learn a lot about humans, including what we care about.

However, I am less optimistic than the author about the specific path presented here (and he only assigns 10% chance to it). In particular, while I do think human values are a “real” thing that a neural net will pick up on, I don’t think that they are well-defined enough to align an AI system arbitrarily far into the future: our values do not say what to do in all possible situations; to see this we need only to look at the vast disagreements among moral philosophers (who often focus on esoteric situations). If an AI system were to internalize and optimize our current system of values, as the world changed the AI system would probably become less and less aligned with humans. We could instead talk about an AI system that has internalized both current human values and the process by which they are constructed, but that feels much less like a natural abstraction to me.

I _am_ optimistic about a very similar path, in which instead of training the system to pursue (a proxy for) human values, we train the system to pursue some “meta” specification like “be helpful to the user / humanity” or “do what we want on reflection”. It seems to me that “being helpful” is also a natural abstraction, and it seems more likely that an AI system pursuing this specification would continue to be beneficial as the world (and human values) changed drastically.

Replies from: johnswentworth

↑ comment by johnswentworth · 2020-08-18T23:17:13.649Z · LW(p) · GW(p)

LGTM

comment by John_Maxwell (John_Maxwell_IV) · 2020-08-15T10:15:39.892Z · LW(p) · GW(p)

Some notes on the loss function in unsupervised learning:

Since an unsupervised learner is generally just optimized for predictive power

I think it's worthwhile to distinguish the loss function that's being optimized during unsupervised learning, vs what the practitioner is optimizing for. Yes, the loss function being optimized in an unsupervised learning system is frequently minimization of reconstruction error or similar. But when I search for "unsupervised learning review" on Google Scholar, I find this highly cited paper by Bengio et al. The abstract talks a lot about learning useful representations and says nothing about predictive power. In other words, learning "natural abstractions" appears to be pretty much the entire game from a practitioner perspective.

And in the same way supervised learning has dials such as regularization which let us control the complexity of our model, unsupervised learning has similar dials.

For clustering, we could achieve 0 reconstruction error (or equivalently, explain all the variation in the data) by putting every data point in its own cluster, but that would completely defeat the point. The elbow method is a well-known heuristic for figuring out what the "right" number of clusters in a dataset is.
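To make the clustering point concrete, here is a minimal sketch in plain Python. It assumes a toy 1-D dataset (the numbers are made up for illustration) and uses an exact dynamic-programming version of 1-D k-means rather than any particular library, so `segment_sse` and `best_sse_per_k` are hypothetical helper names. It shows the reconstruction error falling to exactly 0 once every point gets its own cluster, and the sharp bend in the curve that the elbow heuristic looks for.

```python
def segment_sse(points):
    """Sum of squared deviations from the mean: one cluster's reconstruction error."""
    if not points:
        return 0.0
    mean = sum(points) / len(points)
    return sum((p - mean) ** 2 for p in points)


def best_sse_per_k(data, max_k):
    """Exact 1-D k-means via dynamic programming over the sorted points.

    Returns a list `errs` where errs[k - 1] is the smallest total
    within-cluster squared error achievable with exactly k clusters.
    """
    xs = sorted(data)
    n = len(xs)
    inf = float("inf")
    # dp[k][j]: best error for the first j sorted points using exactly k clusters
    dp = [[inf] * (n + 1) for _ in range(max_k + 1)]
    dp[0][0] = 0.0
    for k in range(1, max_k + 1):
        for j in range(k, n + 1):
            dp[k][j] = min(
                dp[k - 1][i] + segment_sse(xs[i:j])
                for i in range(k - 1, j)
            )
    return [dp[k][n] for k in range(1, max_k + 1)]


# Toy data (illustrative only): three well-separated clumps of three points each.
data = [1.0, 1.2, 0.8, 5.0, 5.1, 4.9, 9.0, 9.2, 8.8]
errors = best_sse_per_k(data, len(data))

for k, err in enumerate(errors, start=1):
    print(f"k={k}: reconstruction error = {err:.3f}")
```

On this toy data the error drops steeply up to k=3 and then barely moves until it hits 0 at k=9, which is the "elbow" shape: the last six clusters buy almost nothing.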

Similarly, we could achieve 0 reconstruction error with an autoencoder by making the number of dimensions in the bottleneck be equal to the number of dimensions in the original input, but again, that would completely defeat the point. Someone on the Stats Stackexchange says that there is no standard way to select the number of dimensions for an autoencoder. (For reference, the standard way to select the regularization parameter which controls complexity in supervised learning would obviously be through cross-validation.) However, I suspect this is a tractable research problem.

It was interesting that you mentioned the noise of air molecules, because one unsupervised learning trick is to deliberately introduce noise into the input to see if the system has learned "natural" representations which allow it to reconstruct the original noise-free input. See denoising autoencoder. This is the kind of technique which might allow an autoencoder to learn natural representations even if the number of dimensions in the bottleneck is equal to the number of dimensions in the original input.

BTW, here's an interesting-looking (pessimistic) paper I found while researching this comment: Challenging Common Assumptions in the Unsupervised Learning of Disentangled Representations

You brought up microscope AI. I think a promising research direction here may be to formulate a notion of "ease of interpretability" which can be added as an additional term to an unsupervised loss function (the same way we might, for example, add a term to a clustering algorithm's loss function so that in addition to minimizing reconstruction error, it also seeks to minimize the number of clusters).
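The clustering analogy in parentheses can be sketched as follows. This is a toy under stated assumptions: the reconstruction errors are made-up illustrative numbers, and `choose_num_clusters` and `penalty_per_cluster` are hypothetical names. The extra term plays the role an "ease of interpretability" term would play in a real loss.

```python
def choose_num_clusters(errors, penalty_per_cluster):
    """Return the k (counting from 1) that minimises errors[k-1] + penalty * k."""
    scores = [err + penalty_per_cluster * k
              for k, err in enumerate(errors, start=1)]
    return scores.index(min(scores)) + 1


# Hypothetical reconstruction errors for k = 1..6 clusters (illustrative only).
errors = [96.0, 24.0, 0.2, 0.1, 0.05, 0.0]

for penalty in (0.0, 1.0, 100.0):
    k = choose_num_clusters(errors, penalty)
    print(f"penalty={penalty}: chosen k = {k}")
```

With no penalty the loss picks the degenerate maximum number of clusters; a moderate penalty picks k=3; a very heavy penalty collapses everything into one cluster, which is the over-weighting failure mode for any such added term.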

Hardcoding "human values" by hand is hopeless, but hardcoding "ease of human interpretability" by hand seems much more promising, since ease of human interpretability is likely to correspond to easily formalizable notions such as simplicity. Also, if your hardcoded notion of "ease of human interpretability" turns out to be slightly wrong, that's not a catastrophe: you just get an ML model which is a bit harder to interpret than you might like.

Another option is to learn a notion of what constitutes an interpretable model by e.g. collecting "ease of interpretability" data from human microscope users.

Of course, one needs to be careful that any interpretability term does not get too much weight in the loss function, because if it does, we may stop learning the "natural" abstractions that we desire (assuming a worst-case scenario where human interpretability is anticorrelated with "naturalness"). The best approach may be to learn two models, one of which was optimized for interpretability and one of which wasn't, and only allow our system to take action when the two models agree. I guess mesa-optimizers in the non-interpretable model are still a worry though.
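A minimal sketch of the "only act when the two models agree" gate, assuming each model is just a callable from an observation to a proposed action. The function name `gated_action` and the toy models are hypothetical, and a real system would need a softer notion of agreement than exact equality.

```python
from typing import Callable, Optional, TypeVar

Obs = TypeVar("Obs")
Act = TypeVar("Act")


def gated_action(
    interpretable_model: Callable[[Obs], Act],
    opaque_model: Callable[[Obs], Act],
    observation: Obs,
) -> Optional[Act]:
    """Return the shared action if both models propose the same one, else None."""
    a = interpretable_model(observation)
    b = opaque_model(observation)
    return a if a == b else None


# Toy stand-ins: both models label a number, but disagree near the boundary.
def simple_model(x: float) -> str:
    return "high" if x > 0.5 else "low"


def complex_model(x: float) -> str:
    return "high" if x > 0.6 else "low"


print(gated_action(simple_model, complex_model, 0.9))   # both say "high"
print(gated_action(simple_model, complex_model, 0.55))  # disagreement: no action
```

Returning `None` on disagreement makes "do nothing and escalate to a human" the default. It does not address the mesa-optimizer worry in the non-interpretable model.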

Replies from: johnswentworth↑ comment by johnswentworth · 2020-08-15T18:27:07.006Z · LW(p) · GW(p)

This comment definitely wins the award for best comment on the post so far. Great ideas, highly relevant links.

I especially like the deliberate noise idea. That plays really nicely with natural abstractions as information-relevant-far-away: we can intentionally insert noise along particular dimensions, and see how that messes with prediction far away (either via causal propagation or via loss of information directly). As long as most of the noise inserted is not along the dimensions relevant to the high-level abstraction, denoising should be possible. So it's very plausible that denoising autoencoders are fairly-directly incentivized to learn natural abstractions. That'll definitely be an interesting path to pursue further.

Assuming that the denoising autoencoder objective more-or-less-directly incentivizes natural abstractions, further refinements on that setup could very plausibly turn into a useful "ease of interpretability" objective.

Replies from: John_Maxwell_IV↑ comment by John_Maxwell (John_Maxwell_IV) · 2020-08-18T09:31:09.439Z · LW(p) · GW(p)

This comment definitely wins the award for best comment on the post so far.

Thanks!

I don't consider myself an expert on the unsupervised learning literature, by the way; I expect there is more cool stuff to be found.

comment by algon33 · 2020-08-13T12:57:23.027Z · LW(p) · GW(p)

This came out of the discussion you had with John Maxwell, right? Does he think this is a good presentation of his proposal?

How do we know that the unsupervised learner won't have learnt a large number of other embeddings closer to the proxy? If it has, then why should we expect human values to do well?

Some rough thoughts on the data type issue. Depending on what types the unsupervised learner provides the supervised, it may not be able to reach the proxy type by virtue of issues with NN learning processes.

Recall that data types can be viewed as homotopic spaces, and construction of types can be viewed as generating new spaces off the old, e.g. tangent spaces or path spaces etc. We can view neural nets as a type corresponding to a particular homotopic space. But getting neural nets to learn certain functions is hard. For example, consider learning a function which is 0 everywhere except on two subspaces A and B, where it takes a different value on each, and where A and B are shaped like interlocked rings. In other words, a non-linear classification problem. So plausibly, neural nets have trouble constructing certain types from others. Maybe this depends on architecture or learning algorithm, maybe not.

If the proxy and human values have very different types, it may be the case that the supervised learner won't be able to get from one type to another. Supposing the unsupervised learner presents it with types "reachable" from human values, then the proxy which optimises performance on the data set is just unavailable to the system even though it's relatively simple in comparison.

Because of this, checking which simple homotopies neural nets can move between would be useful. Depending on the results, we could use this as an argument that unsupervised NNs will never embed the human values type, because we've found out it has some simple properties they won't be able to construct de novo. Unless we do something like feed the unsupervised learner human biases, or start with an EM and modify it.

Replies from: John_Maxwell_IV, johnswentworth↑ comment by John_Maxwell (John_Maxwell_IV) · 2020-08-15T12:04:52.712Z · LW(p) · GW(p)

Does he think this is a good presentation of his proposal?

I'm very glad johnswentworth wrote this, but there are a lot of little details where we seem to disagree--see my other comments in this thread. There are also a few key parts of my proposal not discussed in this post, such as active learning and using an ensemble to fight Goodharting and be more failure-tolerant. I don't think there's going to be a single natural abstraction for "human values" like johnswentworth seems to imply with this post, but I also think that's a solvable problem.

(previous discussion for reference [LW(p) · GW(p)])

↑ comment by johnswentworth · 2020-08-13T16:54:00.859Z · LW(p) · GW(p)

This came out of the discussion you had with John Maxwell, right?

Sort of? That was one significant factor which made me write it up now, and there's definitely a lot of overlap. But this isn't intended as a response/continuation to that discussion, it's a standalone piece, and I don't think I specifically address his thoughts from that conversation.

A lot of the material is ideas from the abstraction project which I've been meaning to write up for a while, as well as material from discussions with Rohin that I've been meaning to write up for a while.

How do we know that the unsupervised learner won't have learnt a large number of other embeddings closer to the proxy? If it has, then why should we expect human values to do well?

Two brief comments here. First, I claim that natural abstraction space is quite discrete (i.e. there usually aren't many concepts very close to each other), though this is nonobvious and I'm not ready to write up a full explanation of the claim yet. Second, for most proxies there probably are natural abstractions closer to the proxy, because most simple proxies are really terrible - for instance, if our proxy is "things people say are ethical on twitter", then there's probably some sort of natural abstraction involving signalling which is closer.

Assuming we get the chance to iterate, this is the sort of thing which people hopefully solve by trying stuff and seeing what works. (Not that I give that a super-high chance of success, but it's not out of the question.)

Depending on what types the unsupervised learner provides the supervised, it may not be able to reach the proxy type by virtue of issues with NN learning processes.