Reviewing LessWrong: Screwtape's Basic Answer

post by Screwtape · 2025-02-05T04:30:34.347Z · LW · GW · 18 comments

Contents

- I. On the big picture of what intellectual progress seems important to you
- II. On how I wish incentives could be different on LessWrong
- III. On how the LessWrong community could make group progress
- IV. I propose an archipelago

Yeah I put this off until the last day, and I'm not sure this is the format Raemon was actually looking for. Oh well.

Then, in proportion to how valuable they seem, spend at least some time this month reflecting...

- ...on the big picture of what intellectual progress seems important to you. Do it whatever way is most valuable to you. But, do it publicly, this month, such that it helps encourage other people to do so as well. And ideally, do it with some degree of "looking back" – either of your own past work and how your views have changed, or how the overall intellectual landscape has changed.

- ...on how you wish incentives were different on LessWrong. Write up your thoughts on this post. (I suggest including both "what the impossible ideal" would be, as well as some practical ideas for how to improve them on current margins)

- ...on how the LessWrong and X-risk communities could make some group epistemic progress on the longstanding questions that have been most controversial. (We won't resolve the big questions firmly, and I don't want to just rehash old arguments. But, I believe we can make some chunks of incremental progress each year, and the Review is a good time to do so)

I. On the big picture of what intellectual progress seems important to you

I've said it elsewhere, and I'll say it again here: the thing that hooked me is The Martial Art Of Rationality. I'll do my proper rant about that elsewhere, but that's the direction I'm pointed in. I want progress in the form of clear examples of ways brains make obvious mistakes paired with drills on how to practice not making that mistake, and a way to check if the drill is working.

Anki and spaced repetition [LW · GW], to stop forgetting things.

Calibration training tools, like the introduction of Fatebook [LW · GW].

Even focusing on checking if things are working by focusing on the feedback loop [LW · GW].

I did a lot of meetup writeups for Meetup In A Box [? · GW]. And yet, this probably isn't much progress? It's hammering pitons into the mountainside to make it easier for more people to climb up, not braving a fresh climb somewhere nobody's been before.

Raemon's Feedback Loop Rationality is a central example of what I want more of.

II. On how I wish incentives could be different on LessWrong

I wish there was more reason for great writers to stick around.

Stop for a moment and think of the great rationalist writers. Grab a piece of paper or open a text doc and write down five names. Don't feel like you have to take a long time, this can be like ~30 seconds.

Did you?

I'm going to leave some space, page down when you're ready.

.

..

...

....

.....

......

.......

......

.....

....

...

..

.

I'd go Eliezer Yudkowsky, Scott Alexander, TheUnitOfCaring, Zvi, Duncan Sabien. If I go for another five, I'd say Alicorn, Gwern, Elizabeth Van Nostrand, Sarah Constantin, Jacob Falkovich. If you came up with a different list, that's fine.

How many of them write on LessWrong?

Scott and Duncan have Substacks. Sarah, Jacob, and Zvi also have Substacks. Alicorn, Gwern, and Elizabeth have their own websites. TheUnitOfCaring is writing elsewhere.

Zvi crossposts to LessWrong. Gwern does, but most of his best writing isn't here. Elizabeth and Sarah Constantin both write here, mostly crossposting. Jacob used to crosspost, but stopped a couple years ago.

As far as I can tell, Eliezer mostly writes on Twitter these days. I get it, if you're trying to do public outreach then you go where the public is, but that seems kind of embarrassing from LessWrong's perspective.

If there was a fresh great writer these days, why would they post to LessWrong? If you're trying to keep up with the rationalist writing, I think a Substack account would be more useful to you than a LessWrong account.

Failing a way to incentivize those writers to LessWrong, maybe incentivize curation? Grab the good Eliezer tweets and bring them back to LW, collect half credit for the upvotes maybe?

III. On how the LessWrong community could make group progress

The question Raemon asks includes X-risk as a community, not just LessWrong. I don't have any answers for X-risk. Sorry.

That points in an important direction though. I think "The LessWrong Community" is too big, and pointed in too many directions. It might be more useful to think of us as a dozen smaller communities in a trench coat. The trench coat is important, it keeps us close enough to recognize each other and make finding each other easier.

Towards that end, having common knowledge [? · GW] seems useful. I'm increasingly fond of the EA Handbook [? · GW]. I'm told EA student groups go through the handbook once a year or so, and cohorts of new EAs get introduced via the handbook. This means you can actually somewhat assume your fellow EAs know the phrase "marginal impact" or have a basic understanding of why charities can have different amounts of impact.

Here's something embarrassing. There's a bunch of Yudkowsky's Sequences posts in the EA Handbook. Pick a LessWrong meetup attendee and an EA meetup attendee at random. I would be more confident that the EA attendee has read Making Beliefs Pay Rent [LW · GW] than the LessWrong attendee.

I've been thinking of writing up some kind of Five Things Aspiring Rationalists Should Know document and trying to get every meetup group to read the thing. I expect there'd be some quibbling over exactly what got added or what a close sixth thing would be. That seems fine. It would be important to keep this document short, since "read the sequences" is a bit much. The Highlights From The Sequences is close to what I want here? The idea would be to be able to assume the people in the room (or commenting on a LessWrong thread) had some common building blocks.

Related: I think it would be worth identifying a handful of rationalist skills, figuring out how to evaluate them, and checking if anything we're doing is helping improve those skills. That's not a step forward into new territory, but I think it'd help catch up.

That doesn't mean we can stop (you will always have the 101 space [LW · GW]), but we can't take a step forward together unless most people actually have caught up to the step we're currently on. If there's some objection that everyone points out the first time they hear an idea, but the objection is incorrect, then this lets you get everyone past the objection and on to the second or third problems with an idea instead of just the first. If we can't take a step together, then we should split up into groups small enough that we can get on the same page.

IV. I propose an archipelago

I think friendly, gentle splintering might be in order.

Seven years ago, Raemon floated the idea of LessWrong as a Public Archipelago [LW · GW]. I'd like to see more movement in that direction.

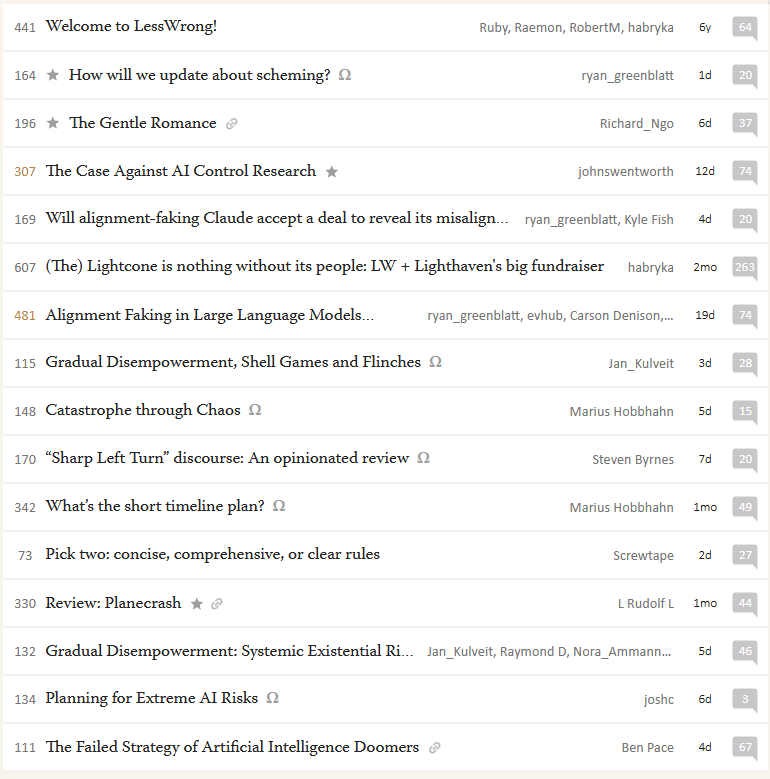

My martial artist's view of rationality, focusing on drills and concrete subskills, isn't the consensus view. There's a lot of AI content on LessWrong these days, and I basically tune it all out. (Thank you to the LessWrong team for the filtering tools to do that!) How much AI content is there? Here's a screenshot of the Latest page right now, from an incognito window:

Welcome to LessWrong, AI, fiction about AI, AI, AI, fundraiser, AI, AI, AI, AI, AI, musings about moderation policy, book review, AI, AI, AI. I'm trying to filter out 3/4ths of the site at this point.

Give me a dojo.lesswrong.com, where the people into mental self-improvement can hang out and swap techniques, maybe a meetup.lesswrong.com where I can run better meetups and find out about the best rationalist get-togethers. Let there be an ai.lesswrong.com for the people writing about artificial intelligence. I'm not against a little cross pollination even! Maybe the other subsections can send over one or two posts a month, as a treat.

Let the islands of the archipelago be styled a little differently, or have their own intro and FAQs. If someone's a particularly prolific writer, give them their own subdomain. If it's not too hard to figure out, make it easy to pay the local writer. C'mon, acx.lesswrong.com would be pretty great. For that matter, why does it need to be a subdomain? I'd love a Screwtape.com that pointed at a LessWrong page, especially if I could have more freedom with the user page than LessWrong generally allows.

I suspect an instance of LessWrong, configured to be styled how the writer wanted it, with similar affordances for subscriptions, clearer moderation controls, and the one writer's posts front and centre, would be able to put up more of a fight against Substack.

(Gwern.net is an exception. That's a piece of web development art. Scott and Sarah and Zvi and Elizabeth though, my guess is the most significant advancement since WordPress is the normalization of the Subscribe button giving them money.)

This would let smaller groups identify themselves and differentiate more. From what I can tell, it's normal to archive binge a blog's past posts, but not to archive binge all of LessWrong. Even a "next post" button on the bottom of all of, say, Raemon's posts or Habryka's posts would be interesting. Right now to do that, I'd have to go to their user page, click a post, read it, click back, and find the next post. I'd be interested in seeing some writers' circles maybe, a new Inklings writing on the same domain the way that Hanson and Eliezer shared Overcoming Bias for a while.

Maybe this ushers in a second rationalist diaspora. There is something useful about having a single conversational locus [LW · GW]. My observation right now is that LessWrong isn't the place where all the great thinkers and writers are making progress though, and it's not where the readers stay to get everything they want to read. If this worked, you could bring back some elements of the community that never returned. There's fairly little fiction published on LessWrong for instance, despite HPMOR being such a big influence and the health of r/rational.

There's my review and pitch. Hope it's helpful.

18 comments

Comments sorted by top scores.

comment by Gunnar_Zarncke · 2025-02-05T07:25:38.346Z · LW(p) · GW(p)

About archipelago: c2.com, the original wiki, tried this. They created a federated wiki but that didn't seem to work. My guess: the volume was too low.

And LW already has all the filtering you need: just subscribe to the people and topics you are interested in. There is also the unfinished reading list.

I get that this may not feel like its own community. Within LW this could be done with ongoing open threads about a topic. But that requires an organizer and participation. And we are back at volume. And at needing good writers.

comment by Said Achmiz (SaidAchmiz) · 2025-02-06T06:52:01.999Z · LW(p) · GW(p)

Correction: Gwern’s site is gwern.net, not gwern.com. (The latter is for sale for $10k, though.)

Replies from: Screwtape

comment by plex (ete) · 2025-02-05T09:56:53.768Z · LW(p) · GW(p)

Give me a dojo.lesswrong.com, where the people into mental self-improvement can hang out and swap techniques, maybe a meetup.lesswrong.com where I can run better meetups and find out about the best rationalist get-togethers. Let there be an ai.lesswrong.com for the people writing about artificial intelligence.

Yes! Ish! I'd be keen to have something like this for the upcoming aisafety.com/stay-informed page, where we're looking like we'll currently resort to linking to https://www.lesswrong.com/tag/ai?sortedBy=magic#:~:text=Posts%20tagged%20AI [? · GW] as there's no simpler way to get people specifically to the AI section of the site.

I'd weakly lean towards using a linkable filter rather than a subdomain, but yeah seems good.

I'd also think that making it really easy and fluid to cross-post (including selectively, maybe the posts pop up in your drafts and you just have to click post if you don't want everything cross-posted) would be a pretty big boon for LW.

comment by Raemon · 2025-02-06T04:16:00.996Z · LW(p) · GW(p)

I do think we should probably just build the "AI" "Some AI" "No AI" buttons. It felt kinda overkill the last time I considered it but seems probably worth it.

Replies from: ryan_greenblatt

↑ comment by ryan_greenblatt · 2025-02-06T04:27:25.738Z · LW(p) · GW(p)

I don't understand. Can't people just hide posts tagged as AI?

Replies from: Screwtape, Raemon

↑ comment by Screwtape · 2025-02-06T04:45:17.537Z · LW(p) · GW(p)

I will cheerfully bet at 1:1 odds that half the people who show up on LessWrong do not know how to filter posts on the frontpage. Last time I asked that on a survey [LW · GW] it was close to 50% and I'm pretty sure selection effects for who takes the LW Census pushed that number up.

Replies from: ryan_greenblatt

↑ comment by ryan_greenblatt · 2025-02-06T04:48:16.423Z · LW(p) · GW(p)

I don't disagree. I assumed Raemon intended something more elaborate than just a salient button with this effect.

↑ comment by Raemon · 2025-02-06T04:29:21.835Z · LW(p) · GW(p)

Yes but it's an out-of-the-way button mixed in with a bunch of buttons, as opposed to like, kinda the primary choice you're expected to make on the site.

Replies from: Raemon, ryan_greenblatt

↑ comment by Raemon · 2025-02-06T04:30:57.318Z · LW(p) · GW(p)

"Isn't it weird and arbitrary to make a front-and-center choice 'hey wanna turn off the AI content'?" yes, which is why we didn't do it the last couple times we talked about it.

Replies from: Screwtape

↑ comment by Screwtape · 2025-02-06T04:55:56.956Z · LW(p) · GW(p)

Yeah, I don't know how typical that frontpage full of AI is, I just checked as I was writing the review. It seems like if you don't de-emphasize AI content at this point though, it's easy for AI to overwhelm everything else.

One idea if you want like, a minimal change (as opposed to a more radical archipelago approach) is to penalize each extra post of the same tag on the frontpage? I don't know how complicated that would be under the hood. I'd be happy to see the one or two hottest AI posts, I just don't want to see 3/4ths AI and have to search for the other posts.
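A rough sketch of what that kind of same-tag penalty might look like, as a greedy re-ranking. Everything here is hypothetical: the `diversify` function, the `(title, tag, score)` tuples, the one-tag-per-post simplification, and the 0.5 penalty are made up for illustration, and this is not how LessWrong's frontpage actually ranks posts.

```python
def diversify(posts, penalty=0.5):
    """Greedily order posts, discounting a post's score once for every
    already-chosen post that shares its tag.

    posts: list of (title, tag, score) tuples (hypothetical shape).
    penalty: multiplier applied per earlier post with the same tag (assumed value).
    """
    remaining = list(posts)
    shown_per_tag = {}  # tag -> how many posts with that tag are already ranked
    ranked = []
    while remaining:
        # Effective score shrinks geometrically with each repeat of the tag.
        best = max(
            remaining,
            key=lambda p: p[2] * penalty ** shown_per_tag.get(p[1], 0),
        )
        ranked.append(best)
        remaining.remove(best)
        shown_per_tag[best[1]] = shown_per_tag.get(best[1], 0) + 1
    return ranked


# Made-up frontpage candidates.
posts = [
    ("A1", "AI", 100),
    ("A2", "AI", 90),
    ("A3", "AI", 80),
    ("B1", "Book review", 50),
    ("M1", "Meetups", 30),
]

order = [title for title, _, _ in diversify(posts)]
```

With these made-up numbers the hottest AI post still comes first, but the book review and the meetup post get interleaved instead of sitting below all three AI posts.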

To be clear, I think there's a bunch of AI posts on the front page because that's what's getting upvoted, and also if LessWrong does want to show mostly AI posts then especially given the state of the world that's a reasonable priority. It's not what I usually write or usually read, but LessWrong doesn't have to be set up for me- I'm just going to keep reviewing it from my perspective.

Replies from: ryan_greenblatt

↑ comment by ryan_greenblatt · 2025-02-06T05:03:57.087Z · LW(p) · GW(p)

[Mildly off topic]

I think it would be better if the default was that LW is a site about AI and longtermist cause areas and other stuff was hidden by default. (Or other stuff is shown by default on rat.lesswrong.com or whatever.)

Correspondingly, I wouldn't like penalizing multiple posts with the same tag.

I think the non-AI stuff is less good for the world than the AI stuff and there are downsides in having LW feature non-AI stuff from my perspective (e.g., it's more weird and random from the perspective of relevant people).

Replies from: Screwtape, habryka4

↑ comment by Screwtape · 2025-02-06T05:57:46.978Z · LW(p) · GW(p)

Your preferences are reasonable preferences, and also I disagree with them and plan to push the weird fanfiction and cognitive self-improvement angles on LessWrong. May I offer you a nice AlignmentForum in this trying time?

Replies from: ryan_greenblatt, Screwtape

↑ comment by ryan_greenblatt · 2025-02-06T06:25:04.559Z · LW(p) · GW(p)

Yes, but random people can't comment or post on the Alignment Forum and in practice I find that lots of AI-relevant stuff doesn't make it there (and the frontpage is generally worse than my LessWrong frontpage after misc tweaking).

TBC, I'm not really trying to make a case that something should happen here, just trying to quickly articulate why I don't think the alignment forum fully addresses what I want.

↑ comment by Screwtape · 2025-02-06T06:00:51.567Z · LW(p) · GW(p)

(In case that came across as sarcastic, I sincerely appreciate your stating the position clearly and think lots of other people probably hold that position. I'm being a little silly with paraphrasing a meme, but I mean it in a friendly kind of silly way.)

↑ comment by habryka (habryka4) · 2025-02-06T05:29:47.783Z · LW(p) · GW(p)

(I would not work on LessWrong if that was the case and think it would be very harmful for the world)

↑ comment by ryan_greenblatt · 2025-02-06T04:33:58.353Z · LW(p) · GW(p)

Fair. For reference, here are my selections, which I think are a good default strategy for people who just come to LW for AI/AI safety reasons:

(Why "-" a bunch of stuff rather than "+" AI? Well, because "+" adds some fixed karma while "-" multiplies by a constant (less than 1), and I don't think adding karma is a good strategy (as it shows you really random posts often). I do like minus-ing these specific things also. If I could, I'd probably do AI * 1.5 and then reduce the negative weight on these things a bit.)

So this might be a good suggestion for an "AI focus" preset.
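To make the "+" versus "-" difference described above concrete, here is a minimal sketch. It only assumes what the comment says ("+" adds a fixed amount of karma, "-" multiplies by a constant below 1); the `TagFilter` class, the `adjusted_karma` function, the 25 and 0.5 values, the order of operations, and the sample posts are all invented for illustration and are not taken from the LessWrong codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagFilter:
    kind: str      # "add" models a "+" filter, "mul" models a "-" filter
    amount: float  # karma to add, or constant to multiply by (assumed values)


def adjusted_karma(karma, tags, filters):
    """Return a post's karma after applying every filter whose tag it carries."""
    score = float(karma)
    # Assumption: additive boosts are applied before multiplicative penalties.
    for tag in tags:
        f = filters.get(tag)
        if f is not None and f.kind == "add":
            score += f.amount
    for tag in tags:
        f = filters.get(tag)
        if f is not None and f.kind == "mul":
            score *= f.amount
    return score


# Hypothetical posts: (title, karma, tags)
posts = [
    ("Popular AI post", 120, {"AI"}),
    ("Obscure AI post", 2, {"AI"}),
    ("Solid meetup writeup", 20, {"Community"}),
]

plus_ai = {"AI": TagFilter("add", 25)}
minus_community = {"Community": TagFilter("mul", 0.5)}


def ranking(filters):
    """Titles sorted from highest to lowest adjusted karma."""
    scored = [(adjusted_karma(k, t, filters), title) for title, k, t in posts]
    return [title for _, title in sorted(scored, reverse=True)]
```

In this toy setup, `ranking(plus_ai)` lifts the 2-karma AI post above the 20-karma meetup writeup, which is the "really random posts" effect, while `ranking(minus_community)` shrinks the writeup without pulling any low-karma AI post past it.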

comment by Viliam · 2025-02-05T16:13:04.275Z · LW(p) · GW(p)

The advantage of having a Substack blog is that you can write about topics that are not interesting for rationalists in general, and that you can also write for a non-rationalist audience. And if you write a lot, and you get lots of readers (which includes the non-rationalist audience), you might make some extra money.

For example, I have a blog about programming computer games in Java on Substack. Because of heavy procrastination I only wrote 5 articles in 3 years, so it means nothing. But in a parallel universe where I somehow succeeded in writing an article every week, and got a thousand readers... posting it on Less Wrong would probably be annoying for most Less Wrong readers, and I would lose most of the audience.

If one day I write a post that seems interesting for rationalists and non-rationalists alike (like maybe something about math or education), it would make sense to post it on Substack (if I already have a blog and an audience there) and cross-post to Less Wrong. If I write something that is only interesting for rationalists, it would make sense to write directly on Less Wrong.

*

I think that it is good to have one central website for the rationalist community. I don't want to follow a dozen different writers individually. And it is good to have a place where I can e.g. ask something, and most rationalists will see my question.

Also, compared to Substack, the website is technically good, and compared to Reddit, moderation is good.

*

Grab the good Eliezer tweets and bring them back to LW, collect half credit for the upvotes maybe?

Yes please! Also for others.

And for the rationalists who blog elsewhere and don't cross-post to Less Wrong, maybe once a month make a list containing links to their posts and short summaries?

I've been thinking of writing up some kind of Five Things Aspiring Rationalists Should Know document and trying to get every meetup group to read the thing.

Yes please!

Would it make sense to ask an AI to create this document (after giving it the original Sequences)?

Welcome to LessWrong, AI, fiction about AI, AI, AI, fundraiser, AI, AI, AI, AI, AI, musings about moderation policy, book review, AI, AI, AI. I'm trying to filter out 3/4ths of the site at this point.

There should be big buttons: "All posts", "Only AI posts", "Hide AI posts" at the top of the main page.

Categories other than AI don't make sense, in my opinion; there wouldn't be enough content for them individually. (And that's okay, because on reflection, how much time do you want to spend reading Less Wrong?)