Things I have been using LLMs for

post by Kaj_Sotala · 2025-01-20T14:20:02.600Z · LW · GW · 6 comments

There are quite a few different things you can use LLMs for, and I think we’re still only discovering most of them. Here are a few of the ones I’ve come up with.

My favorite chatbot is Claude Sonnet. It does have a tendency toward sycophancy – for example, it will go “what a fascinating/insightful/excellent/etc. question!” in response to most of the things you might ask it. Some people find this annoying, while my brain just filters it out automatically. If you don’t like it, you can put in a custom instruction telling it to do something else.

Also, a tip from Alyssa Vance: “when talking to Claude, say that your idea/essay/code/etc. is from your friend Bob, not you. That way it won’t try to blindly flatter you”.

Uses

Creativity

Essay brainstorming. I’ll tell Claude “here’s an essay that I started writing” and copy-paste what I’ve written so far to it. It will comment with ideas, possible other directions, and connections to related things.

Then I have a conversation with it and also tell it about other ideas I want to work into the essay, but haven’t written yet. Sometimes I’ll ask it things like “here’s an idea I’d like to express but this phrasing feels clunky, would you have better suggestions”.

In the end, I copy large chunks of the conversation (both things that I explained to it, and ideas that it had in response) directly into a text document and edit them into a smooth essay.

Role-playing/fiction-writing. I like to start by writing a brief biography of a character I’m drawn to, and then telling Claude something like “please analyze this character’s psychology and note anything about them or the setting that the description implies but doesn’t state outright”.

Then we brainstorm things about the characters and the setting that seem interesting, and at some point we transition to writing prose, with me writing some of the characters and Claude writing the others.

Emotions and introspection

Introspection aid. Often when I have some unpleasant feeling I don’t quite understand or I’d like to try shifting, I tell Claude “I’ve got a feeling of [sadness/anxiety/loneliness/whatever] I’d like to explore, I’d like you to guide me in using Gendlin’s Focusing (and its variants) to explore it”. It is an excellent Focusing facilitator, good at noticing patterns and asking questions.

Lifting mood. One evening, I was in a bit of a depressive funk where doing anything felt “meh” and I was just switching between different social media tabs. This felt bad so I figured I’d try talking to Claude instead:

Me: Hi Claude. I’m in a vaguely depressed mood and was looping through the same social media tabs over and over, and figured that talking to you would probably make me feel better than doomscrolling does. though I’m not sure what exactly I’d want to talk with you about

Claude: I appreciate you reaching out – you’re probably right that having a conversation is better than doomscrolling. That cycle of repeatedly checking social media when feeling down is something many people can relate to, and it rarely helps improve our mood.

Is there anything in particular that’s been on your mind lately? It doesn’t have to be anything serious – we could talk about your interests, discuss something you’ve read or watched recently, or explore a topic you’re curious about. Sometimes just engaging with different ideas can help shift us out of a mental rut.

Me: I guess part of my current mood is that I don’t really feel like doing anything, I consider various things that I might want to do and I just get a “meh” back. and just now I thought about various things that I could talk about, and got a “meh” for all of them

Claude: That feeling of “meh” towards everything – what psychologists sometimes call anhedonia – can be really draining. It’s like having a dimmer switch turned down on your ability to find things engaging or enjoyable. And it can create this frustrating cycle where even thinking about what might help feels like too much effort.

Would you be open to trying something a bit different? Instead of trying to find something that feels exciting (which can be pretty hard when everything feels “meh”), we could start with something very small and low-pressure. Sometimes just having a gentle back-and-forth about anything – even if it seems trivial – can help shift our mental state a bit.

For instance, we could play with a simple word game, or I could share an interesting fact that prompts some curiosity, or we could even just describe what we see around us right now. No pressure to be enthusiastic about it – just a way to engage in something that requires minimal emotional investment.

Me: okay, share an interesting fact

Then it started telling me random facts. After I heard a few different ones, I started having thoughts about them, and then after a while it had pulled me out of my depressive mood.

Miscellaneous supportive conversation. Just generally talking about my life or feelings if I’m feeling down and none of my friends are available for conversation or I don’t expect talking to them to be helpful. Claude is consistently empathetic and insightful.

Self-help coach. A lot of self-help books have various exercises or a complicated algorithm to follow (if you have problem X try Y, if in trying Y you run into problem Z, try Q…). I’ll grab a PDF of the book from some pirate site (after having bought a physical or DRMed copy legally), upload it to Claude, and ask to be coached according to the philosophy in the book.

Information

Doing basic sanity checks when someone tells me an idea that sounds interesting but that I don’t have enough expertise to evaluate.

I tell Claude “please critically evaluate the following” and copy-paste the other person’s explanation, and then get a list of potential criticisms. I wouldn’t automatically believe or disbelieve anything important only because Claude tells me to, but this is often a good starting point.

Figuring out dense writing. Recently a conversation spurred me to try reading Hubert Dreyfus’ Being-in-the-World again, as David Chapman has recommended it as a book worth reading for thinking clearly about AI. In the book, Dreyfus explains some of Martin Heidegger’s philosophy more clearly than Heidegger himself did. However, it’s still not a particularly easy read, and much of the discussion is pretty abstract. So I found it helpful to copy-paste large parts of it into Claude and ask “could you explain this with simpler language and concrete examples”.

I’m not entirely sure whether Claude understood it correctly either, but at least its explanation seemed to make sense, and I felt like I understood things better than I would have without its help.

Finding terms for concepts. “What was the name of the cognitive bias where you think that you understood the thing all along?” If I can describe a concept, an LLM can probably tell me what it’s called.

Synthesizing explanations. Some questions have answers that require some amount of synthesis and would be difficult to Google directly. For example, I asked Claude “After the 2007 DARPA Grand Challenge there was a lot of hype about how self-driving cars were just around the corner. But we mostly still don’t have them. Why did it take so much longer than expected?” and it gave me a list of considerations.

Understanding key terms in their context. I was reading the US Supreme Court’s decision on the TikTok ban, and noticed this interesting sentence in the review of what a lower court had ruled on the issue:

After first concluding that the Act was subject to heightened scrutiny under the First Amendment, the court assumed without deciding that strict, rather than intermediate, scrutiny applied.

The court “assumed without deciding”? That sounded like a technical term, but I wasn’t sure of what exactly it meant. It sounded interesting. So I asked Claude, and got an explanation that was tailored for this specific context.

Software

Common software assistance. For example, I once asked Claude, “I have a Google Doc file with some lines that read ‘USER:’ and ‘ASSISTANT:’. Is there a way of programmatically making all of those lines into Heading-3?”. The specific instructions it gave me here felt like they were slightly outdated and missing some steps, but were still close enough to get the job done.
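To give a rough idea of what a programmatic version of this could look like, here is a minimal Python sketch. It is not the set of instructions Claude gave me. It assumes the document has already been fetched as JSON from the Google Docs API, and it only builds the list of style-change requests. The function name `heading_requests` and the choice to match paragraphs whose whole text is exactly one of the labels are my own assumptions for illustration.

```python
def heading_requests(document, labels=("USER:", "ASSISTANT:")):
    """Build Docs API update requests that turn matching paragraphs into Heading 3.

    `document` is assumed to be the JSON dict returned by the Docs API for a
    document. Only paragraphs whose full text equals one of `labels` are matched.
    """
    requests = []
    for element in document.get("body", {}).get("content", []):
        paragraph = element.get("paragraph")
        if paragraph is None:
            continue  # not a paragraph (e.g. a table or section break)
        # A paragraph's text is spread over one or more text runs.
        text = "".join(
            part.get("textRun", {}).get("content", "")
            for part in paragraph.get("elements", [])
        )
        if text.strip() in labels:
            requests.append({
                "updateParagraphStyle": {
                    "range": {
                        "startIndex": element["startIndex"],
                        "endIndex": element["endIndex"],
                    },
                    "paragraphStyle": {"namedStyleType": "HEADING_3"},
                    "fields": "namedStyleType",
                }
            })
    return requests
```

Actually applying the changes would mean sending the returned list to the API's `documents.batchUpdate` method with valid credentials, which is left out here.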

Programming assistance. “Could you write me a Python script that does X and Y.” Often I could do the thing myself as well, but it’d take more time or I’d have to look up unfamiliar API calls. Claude just gives me a working script in a few seconds.

Spreadsheet assistance. As above, but for spreadsheet formulas. “In Google Sheets, I want a formula that looks up values from these cells and does the following based on them.” Or, “what does this Microsoft Excel formula do?”.

Unsorted

Helping me get started with something if I’m stuck. I tell it what I’m supposed to be working on, and it helps me break it down into smaller pieces.

Object recognition and OCR. Once when I was moving, I decided to give away a number of my old books. So I arranged them into piles with their back spines facing one way, took a photo of them, and asked ChatGPT (I wasn’t using Claude back then) to read out their titles. After some slight editing and manual correction, I had a list of books that I was giving out that I could post online.

Thoughts on various concerns

Environmental concerns

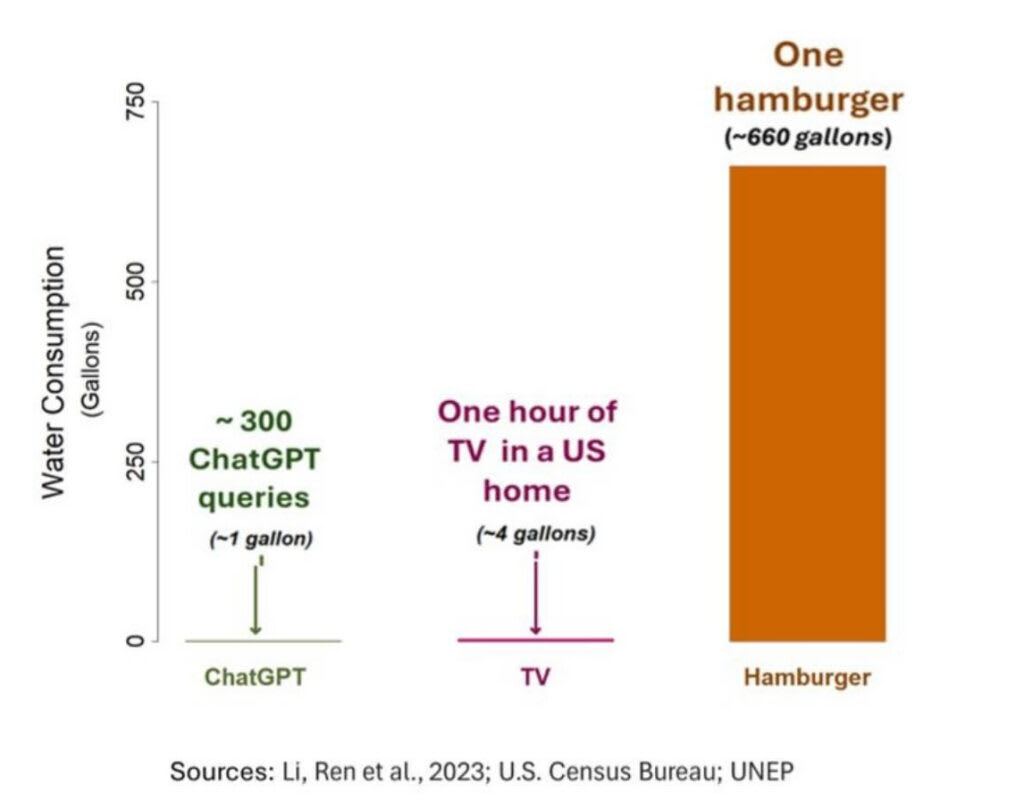

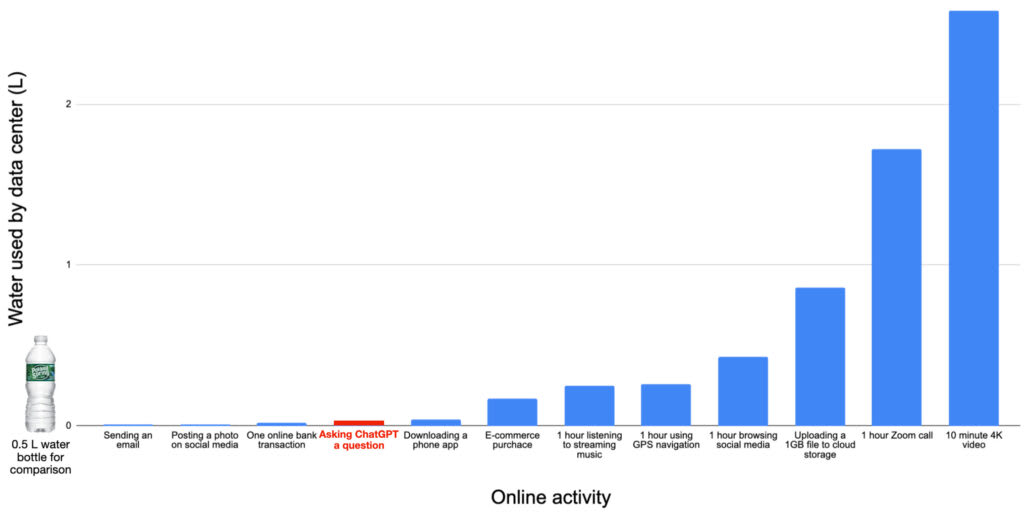

There have been some articles going around about the environmental impact of LLMs. I think Andy Masley’s “Using ChatGPT is not bad for the environment” puts these nicely in perspective – yes there is an environmental impact, but it’s not that big compared to a lot of other services.

Statistic for a ChatGPT search and a burger.

Andy Masley got these numbers by taking the kWh that different tasks use in data centers and multiplying it by the sum of two rates: the average water used per kWh in data centers and the average water used per kWh in generating that energy. Note that water being “used” by data centers is ambiguous in general; read more in this section.
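As I read it, the calculation described above boils down to one line of arithmetic. The sketch below is my own restatement, not Masley's code: the function name, the parameter names, and the use of litres as the unit are assumptions, and the example inputs are made-up placeholders rather than figures from the article.

```python
def water_per_task(task_kwh, datacenter_l_per_kwh, generation_l_per_kwh):
    """Water attributed to one task, in litres (unit is an assumption).

    task_kwh: energy the task uses in the data center
    datacenter_l_per_kwh: average water used per kWh inside data centers
    generation_l_per_kwh: average water used per kWh when generating the energy
    """
    return task_kwh * (datacenter_l_per_kwh + generation_l_per_kwh)


# Placeholder inputs, invented purely to show the shape of the calculation.
example = water_per_task(task_kwh=2.0,
                         datacenter_l_per_kwh=1.5,
                         generation_l_per_kwh=3.0)
print(example)
```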

Hallucinations

Hallucinations are still an issue, though recent models have gotten much better at avoiding them. Claude will often explicitly flag some topic as being one that it doesn’t have much information about, or as one where it might hallucinate.

Its trustworthiness depends on the field. The major chatbot companies pay actual domain experts to improve the responses of their chatbots. Advanced models typically ace most standardized exams for various fields, and when I spot-check Claude’s knowledge by asking it about things I know about, I haven’t yet seen it clearly give an incorrect answer. This is assuming a relatively superficial level of questioning, though – I would expect its quality to quickly decline if I started asking more in-depth questions.

Other people have had different experiences. Romeo Stevens comments:

my spot checks have turned out bad on deeper areas. When using Claude for deeper research it’s more for creative directions (exploratory vs confirmatory) though so it’s fine.

Bio is somewhat random, if the wikipedia page is bad, forget it. Wikipedia is often surprisingly good ofc. Slicing up statistical data sets will get random really bad outliers as it parses some data wrong and then confidently presents it without noticing.

Therapy winds up sloppified/gaslighty if you don’t guide it somewhat. It can also wind up developmentally sticking to k3/k4 which makes sense since that is the vast majority of data.

book prompts, if the book isn’t in the corpus has trouble going past whatever shallow summaries/mentions are online about it, and this is an invisible failure. If you know you can put book in context to fix.

Some areas of nutrition absolutely suck presumably because overwhelming amount of content online is blogspam, and this probably generalizes. In general LLM is best when I would expect a highly upvoted subreddit response to be good.

So, use it for initial exploration and satisfying random curiosities, but if it’s something important, do double-check the answers from some other source.

Privacy

Of course, LLM providers could always choose to do something mean with my information. I relate to sharing private information with ChatGPT and Claude much as I do to having sensitive conversations over other cloud platforms like Discord, Gmail, WhatsApp etc. – something that I know has its risks, but which still hasn’t blown up in my face after decades of doing it. (Stories about this causing people problems seem to be surprisingly rare in general.)

Of course, it’s a totally valid preference to not want to take that risk. In that case, you can get a model that can be run locally and use that.

6 comments


comment by plex (ete) · 2025-01-20T16:17:51.226Z · LW(p) · GW(p)

One neat thing I've explored is learning about new therapeutic techniques by dropping a whole book into context and asking for guiding phrases. Most therapy books do a lot of covering general principles of minds and how to work with them, with the unique aspects buried in a way which is not super efficient for someone who already has the universal ideas. Getting guiding phrases gives a good starting point for what the specific shape of a technique is, and means you can kinda use it pretty quickly. My project system prompt is:

Given the name of, and potentially documentation on, an introspective or therapeutic practice, generate a set of guiding phrases for facilitators. These phrases should help practitioners guide participants through deep exploration, self-reflection, and potential transformation. If you don't know much about the technique or the documentation is insufficient, feel free to ask for more information. Please explain what you know about the technique, especially the core principles and things relevant to generating guiding phrases, first.

Consider the following:

Understand the practice's core principles, goals, and methods.

Create open-ended prompts that invite reflection and avoid simple yes/no answers.

Incorporate awareness of physical sensations, emotions, and thought patterns.

Develop phrases to navigate unexpected discoveries or resistances.

Craft language that promotes non-judgmental observation of experiences.

Generate prompts that explore contradictions or conflicting beliefs.

Encourage looking beyond surface-level responses to deeper insights.

Help participants relate insights to their everyday lives and future actions.

Include questions that foster meta-reflection on the process itself.

Use metaphorical language when appropriate to conceptualize abstract experiences.

Ensure phrases align with the specific terminology and concepts of the practice.

Balance providing guidance with allowing space for unexpected insights.

Consider ethical implications and respect appropriate boundaries.

Aim for a diverse set of phrases that can be used flexibly throughout the process. The goal is to provide facilitators with versatile tools that enhance the participant's journey of self-discovery and growth.

Example (adapt based on the specific practice):

"As you consider [topic], what do you notice in your body?"

"If that feeling had a voice, what might it say?"

"How does holding this belief serve you?"

"What's alive for you in this moment?"

"How might this insight change your approach to [relevant aspect of life]?"

Remember, the essence is to create inviting, open-ended phrases that align with the practice's core principles and facilitate deep, transformative exploration.

Please store your produced phrases in an artefact.

comment by Gunnar_Zarncke · 2025-01-20T19:45:03.305Z · LW(p) · GW(p)

List, complete lists. You can ask LLMs to give you lists of all kinds of things, and they will tend to be more complete than you could come up with yourself. Whether it is things you should buy, consider, evaluate, or try. For example:

- What are proposed explanations/causes for X?

- What are suitable replacements for X in Y?

- What are well-known people working in X?

- What are criteria to consider when buying X?

- What are common names given to X by Y?

And you can often ask for more.

comment by waterlubber · 2025-01-20T17:13:40.191Z · LW(p) · GW(p)

Why are you using water use as a metric for LLM environmental impact, and not energy use? The only water use in data centers would be for cooling tower open-loop evaporative cooling; this is far smaller than e.g. irrigation demands. This water can also be reclaimed water or condensate from dehumidification, or sourced from shallow aquifers unsuitable for drinking.

Even if you did use city water, usage is negligible compared to everything else. NYC uses open-loop once-through cooling for refrigerators and such in restaurants right now, for example.

Energy use (and corresponding CO2 emissions) is a much more salient metric.

↑ comment by Kaj_Sotala · 2025-01-20T17:25:59.023Z · LW(p) · GW(p)

Mostly because water use was the most common criticism I'd happened to run into. The linked article has more metrics.

comment by Maxwell Peterson (maxwell-peterson) · 2025-02-10T15:52:31.213Z · LW(p) · GW(p)

Yesterday, I realized in my conversations with Claude over the past week or so, I don’t think it’s talked about how much of a genius I am, perhaps not even once. I remember in the fall it would do this all the time. Maybe there’s been an update?

comment by Sean Canton (sean-canton) · 2025-01-20T21:16:08.698Z · LW(p) · GW(p)

I find that k5 responses are easy to generate once you give the LLM more space to be creative in response.