
Are there any existing articles, deep dives, or research on PCBTF? It is a supposedly "green" solvent used as a replacement for xylene because it is VOC-exempt, despite being similarly volatile.

It has all the hallmarks of one of the wretched forever chemicals: fat-soluble, denser than water (so it accumulates in groundwater supplies instead of evaporating), and heavily halogenated. There's very little cancer and toxicity data, and what does exist seems pretty bad. The EPA has prevented employees from acknowledging the issue (see also this article by the Intercept). To my understanding, this is because it is grandfathered as an existing chemical that has been in production for a long time, although its usage has only increased in recent years as a replacement for "high-VOC" solvents such as ethanol.

This seems like a clear-cut case of replacing relatively mundane solvents (primarily xylene, but also ethanol and others) with a far more toxic and persistent compound, for very misguided reasons. Am I missing something here (perhaps it breaks down rather quickly in the environment?), or is this a neglected and significant issue?

That's why I brought it up; I thought it was an interesting contrast.

I am skeptical of it, though not strongly so. If language is "software," one could draw an analogy to, e.g., symbolic AI or old-fashioned algorithms vs. modern transformer architectures: they perform differently at different tasks.

There's a novel, Blindsight, by Peter Watts, that explores what intelligent life would look like without consciousness. You may be interested in reading it, even if only recreationally; it covers a lot of ground around the idea you're talking about here.

I would also not discount the ability of the emotive brain. Just like anything else, it can be trained; I think a lot of engineers, developers, and technical professionals can relate to their subconscious developing rapid, intuitive solutions to problems that conscious thought does not.

Hard agree on "post rationalism" being the alignment of the intuitive brain with accurate, rational thought. To the extent I've been able to do it, it's extremely helpful, at least in the areas I frequently practice.

Counterpoints: leaded gasoline, the Montreal Protocol, and human genome editing. I don't think it's possible to completely eliminate development, but an order-of-magnitude reduction in the rate of deployment or spread is easily achievable.

Why are you using water use as a metric for LLM environmental impact, and not energy use? The only significant water use in data centers is evaporative cooling in open-loop cooling towers, and this is far smaller than, e.g., irrigation demands. This water can also be reclaimed water, condensate from dehumidification, or water from shallow aquifers unsuitable for drinking.

Even if you did use city water, usage is negligible compared to everything else. NYC restaurants, for example, currently use open-loop, once-through cooling for refrigerators and similar equipment.

Energy use (and corresponding CO2 emissions) is a much more salient metric.
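As a rough sanity check on the water-versus-energy point, here is a back-of-the-envelope sketch. It assumes (my assumption, not a measured figure) that all of the heat is rejected by evaporation, uses an approximate latent heat of about 2.43 MJ/kg for water near 30 °C, and ignores cooling-tower blowdown and drift losses, so treat it as an order-of-magnitude estimate only.

```python
# Back-of-the-envelope: water evaporated per kWh of heat rejected by a cooling tower.
# Assumptions (not measured data): latent heat ~2.43 MJ/kg near 30 C,
# 1 kg of water ~ 1 litre, blowdown and drift losses ignored.

JOULES_PER_KWH = 3.6e6          # exact unit conversion
LATENT_HEAT_J_PER_KG = 2.43e6   # approximate latent heat of vaporization of water


def evaporated_litres_per_kwh(evaporative_fraction: float = 1.0) -> float:
    """Litres of water evaporated per kWh of heat removed.

    evaporative_fraction is the share of the heat rejected by evaporation
    (1.0 = all of it, an upper-bound assumption).
    """
    heat_j = JOULES_PER_KWH * evaporative_fraction
    return heat_j / LATENT_HEAT_J_PER_KG  # kg evaporated, roughly equal to litres


print(f"{evaporated_litres_per_kwh():.2f} L of water per kWh of heat rejected")
```

Under these assumptions the figure is roughly 1.5 L per kWh, and it scales linearly with the heat load, i.e. with the energy the hardware consumed. That is one way of seeing why energy is the more fundamental metric.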

I think the support/belief of "AI bad" is widespread, but people don't have a clear goal to rally behind. People want to support something, but give a resigned "what am I to do?"

If there's a strong cause with a clear chance of helping (i.e. a "don't build AI or advance computer semiconductors for the next 50 years" guild), people will rally behind it.

Fair.

I think AI-concerned people mostly take their concerns about AI to somewhat insular communities: Twitter, et al. Wealthy interest groups are going to dominate internet communication, and I don't think that's an effective way to drum up concern or coordination. The vast majority of the working-class, average Joe, everyday population is going to be opposed to AI for one reason or another; I think AI interest groups developing a united front specifically for these people would be the most effective technique.

(One way to accomplish this might be to spread these "quietly" among online sympathizers before "deploying" them to the public? I'm sure this sort of grassroots PR campaign has been solved and analyzed to death already.)

The intent was to make that taboo permanent, not a "pause", and it more or less succeeded in that.

I would not be opposed to a society stalled at 2016-level AI/computing that held that level indefinitely. Progress can certainly continue without AGI via, e.g., human intelligence enhancement, or just by sending our best and brightest to work directly on our problems instead of on zero-sum marketing or AI efforts.

Um, humans in the Culture have no significant influence on the lightcone (other than maybe as non-agentic "butterfly wings"). The Minds decide what's going to happen.

Humans were still free to leave the Culture, however; not all of the lightcone was given to the AI. Were we to develop aligned ASI, it would be wise to slice off a chunk of the lightcone for humans to work on "on their own."

I don't think the Culture is an ideal outcome, either, merely a "good" one that many people would be familiar with. "Uplifting" humans rather than developing replacements for them will likely lead us down a better path, although whatever shift in moral alignment the uplifting process causes might limit its utility.

This entire article was written solely by me without the "assistance" of any language models.

Anecdotal data point: an (online) friend of mine with EDS successfully used BPC-157 to treat shoulder ligament injury, although apparently it promoted scar tissue formation as well. He claims that it produced a significant improvement in his symptoms.

Once again, a post here has put into well-researched, organized words what I have tried to express verbally with friends and family. You have my most sincere gratitude for that.

I've been sending this around to the aforementioned friends and family, and I am again surprised by how receptive people are. I really do think that our best course of action is to talk to and prime people for the roller coaster to come: massive public sentiment against AI (e.g. a Butlerian Jihad) is our best bet.

While I very much would love a new frontier era (I work at a rocket launch startup), and would absolutely be on board with Culture utopia, I see no practical means to ensure that any of these worlds come about without:

- Developing properly aligned AGI and making a pivotal turn, i.e. creating a Good™ Culture Mind that takes over the world (fat chance!)

- Preventing the development of AI entirely

I do not see a world where AGI exists and follows human orders that does not result in a boot stomping on a face, forever. Societal change in dystopian or totalitarian environments is largely produced via revolution, which becomes nonviable when the means of coordination can be effectively controlled and suppressed at scale.

First world countries only enjoy the standard of living they do because, to some degree, the ways to make tons of money are aligned with the well-being of society (large diversified investment funds optimize for overall economic well-being). Break this connection and things will slide quickly.

This post collects my views on, and primary opposition to, AI and presents them in a very clear way. Thank you very much on that front. I think that this particular topic is well known in many circles, although perhaps not spoken of, and is the primary driver of heavy investment in AI.

I will add that capital-dominated societies, e.g. resource extraction economies, typically suffer a poor quality of life and few human rights. This is a well-known phenomenon (the "resource curse") and might offer a good jumping-off point for presenting this argument to others.

I agree with you on your assessment of GPQA. The questions themselves appear to be low quality as well. Take this one example, although it's not from GPQA Diamond:

In UV/Vis spectroscopy, a chromophore which absorbs red colour light, emits _____ colour light.

The correct answer is stated as yellow and blue. However, the question should read transmits, not emits; molecules cannot trivially absorb and re-emit light of a shorter wavelength without resorting to trickery (nonlinear effects, two-photon absorption).

This is, of course, a cherry-picked example, but it is exactly characteristic of the sort of low-quality science questions I saw in school (e.g. from a teacher or professor who didn't understand the material very well). Scrolling through the rest of the GPQA questions, they did not seem like questions that would require deep reflection or thinking, but rather the sort of trivia that I would expect LLMs to perform extremely well on.

I'd also expect "popular" benchmarks to be easier/worse, optimized for looking good while actually being relatively easy. OAI et al. probably have the mother of all publication biases with respect to benchmarks, and are selecting very heavily for items within this collection.

I believe the intention in Georgism is to levy a tax that eliminates appreciation in the value of the land. This is effectively the same as renting the land from the government. You are correct that it would prevent people from investing in land; investment in land is purely rent-seeking behavior and benefits no one, whereas building improvements on land (e.g. mines, factories, apartment complexes) that generate value does.

It could be using nonlinear optical shenanigans for CO2 measurement. I met someone at NASA who used optical mixing, essentially extracting a beat frequency, to measure atmospheric CO2 (based on absorption of solar radiation) with all solid-state COTS components. The technique was called optical heterodyne detection.

I've also seen some mid-IR LEDs for sale, although none near the 10 µm CO2 wavelength.

COTS CO2 monitors exist for ~$100 and could probably be modified to measure breathing gases, although they'll likely be extremely slow.

The cheapest way to measure CO2 concentration, although likely the least accurate and slowest, would be to use the carbonic acid equilibrium in water and a pH meter.

Ultimately, the reason it's not popular is probably that it doesn't seem that useful. Breathing is automatic and regulated by blood CO2 concentration; I find it hard to believe that the majority of the population, with otherwise normal respiratory function, would be so off the mark. Is there strong evidence to suggest this is the case?
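To illustrate the beat-frequency idea behind heterodyne detection, here is a toy numpy sketch. The frequencies are scaled down to the audio range (real optical fields oscillate far too fast to sample), the detector is modeled as an ideal square-law device whose output follows the intensity of the summed fields, and the low-pass filter is a crude frequency cutoff. None of this reflects the specific NASA instrument.

```python
import numpy as np


def beat_frequency(f_signal, f_local, fs=10_000.0, duration=1.0, cutoff=500.0):
    """Toy heterodyne detector: return the dominant low-frequency tone (Hz)
    in the output of a square-law detector illuminated by two fields."""
    t = np.arange(int(fs * duration)) / fs
    e_signal = 0.1 * np.cos(2 * np.pi * f_signal * t)   # weak "signal" field
    e_local = 1.0 * np.cos(2 * np.pi * f_local * t)     # strong local oscillator
    intensity = (e_signal + e_local) ** 2               # detector responds to E^2
    spectrum = np.abs(np.fft.rfft(intensity - intensity.mean()))  # drop the DC term
    freqs = np.fft.rfftfreq(t.size, d=1.0 / fs)
    band = (freqs > 0) & (freqs < cutoff)               # crude low-pass filter
    return float(freqs[band][np.argmax(spectrum[band])])


print(beat_frequency(1000.0, 1030.0))
```

Squaring the summed fields produces a cross term at the difference frequency (here 30 Hz) whose amplitude scales with the product of the two field amplitudes, which is why a strong local oscillator can lift a weak signal above detector noise.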
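A minimal sketch of the pH-meter approach, assuming pure water fully equilibrated with the gas at 25 °C, approximate literature constants (Henry's law constant for CO2 of about 0.034 mol/(L·atm), first apparent pKa of carbonic acid about 6.35), and neglecting the second dissociation to carbonate:

```python
import math

# Approximate 25 C constants (assumed literature values, not measured here)
K_H = 3.4e-2        # Henry's law constant for CO2, mol/(L*atm)
KA1 = 10 ** -6.35   # first (apparent) dissociation constant of carbonic acid
KW = 1.0e-14        # autoionization constant of water


def ph_from_pco2(p_co2_atm):
    """pH of pure water equilibrated with a gas at the given CO2 partial pressure.

    Charge balance [H+] = [HCO3-] + [OH-] gives [H+]^2 = KA1*K_H*pCO2 + KW
    (second dissociation to carbonate neglected).
    """
    h = math.sqrt(KA1 * K_H * p_co2_atm + KW)
    return -math.log10(h)


def pco2_from_ph(ph):
    """Invert ph_from_pco2: CO2 partial pressure (atm) implied by a pH reading."""
    h = 10 ** -ph
    return (h * h - KW) / (KA1 * K_H)


for label, p in [("outdoor air, ~420 ppm", 4.2e-4), ("exhaled breath, ~4% CO2", 0.04)]:
    print(f"{label}: pH {ph_from_pco2(p):.2f}")
```

With these constants, outdoor air gives a pH of roughly 5.6 and exhaled breath roughly 4.6. Because pCO2 scales as 10^(-2·pH), a 100-fold change in CO2 moves the pH by only about one unit, and a 0.01 pH reading error already corresponds to roughly a 5% error in the inferred CO2.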

Strongly agree. I see many, many others use "intelligence" as their source of value for life (i.e. humans are sentient creatures and therefore worth something) without seriously considering the consequences and edge cases of that position. Perhaps this view was popularized by science fiction that used interspecies xenophobia as an allegory for racism; nonetheless, it's a somewhat extreme position to stick to if you genuinely believe in it. I held a similar opinion a couple of years ago, but decided to shift to a human-focused terminal value months back because I did not like where it led when taken to its logical conclusion with present and future society.

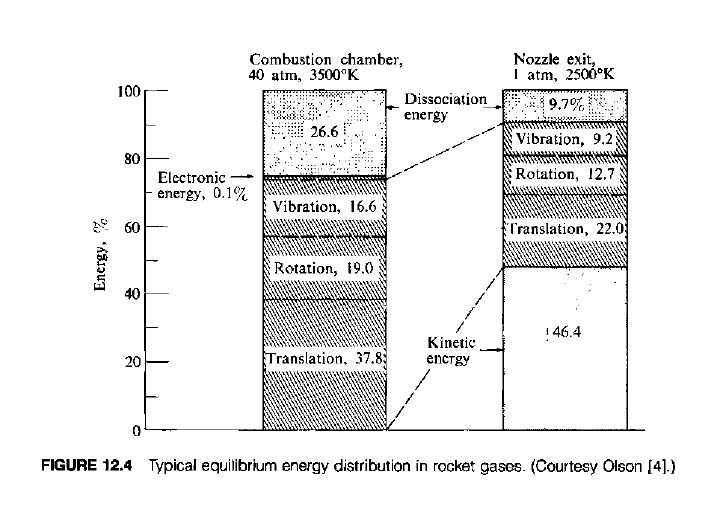

Aside from dissociation/bond energy, nearly all of the energy in the combustion chamber is kinetic. Hill's Mechanics and Thermodynamics of Propulsion gives us this very useful figure for the energy balance:

A good deal of the energy in the exhaust is still locked up in various high-energy states; these states are primarily related to the degrees of freedom of the gas (and thus gamma) and are more strongly occupied at higher temperatures. I think lower-molecular-weight gases have correspondingly less energy tied up here, but I'm not entirely sure. This might be something to look into.

Posting this graph has got me confused as well, though. I was going to write about how there's more energy tied up in the enthalpy of the gas in the exhaust, but that wouldn't make sense - lower MW propellants have a higher specific heat per unit mass, and thus would retain more energy at the same temperature.

I ran the numbers in Desmos for perfect combustion, an infinite nozzle, and no dissociation, and the result was still there, but quite small:

https://www.desmos.com/calculator/lyhovkxepr

One thing to note: the ideal occurs where the gas has the highest speed of sound. I really can't think of any intuitive way to put this other than "nozzles are marginally more efficient at converting the energy of lower molecular weight gases from thermal-kinetic to macroscopic kinetic."

You've hit the nail on the head here. Aside from the practical limits of high-temperature combustion (running at a lower chamber temperature allows for lighter combustion chambers, or for practical ones at all), the various advantages of a lighter exhaust more than make up for the slightly lower combustion energy. The practical limits are often important, too: if your maximum chamber temperature is limited, it makes a ton of sense to run fuel-rich to bring it into an acceptable range.

One other thing to mention is that the speed of sound of the exhaust matters quite a lot. Given the same nozzle area ratio and the same gamma, the exit Mach number is fixed, so a higher speed of sound yields a higher exhaust velocity.
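The fixed-Mach argument can be sketched with the ideal isentropic area-Mach relation, assuming a calorically perfect gas, frozen composition (no dissociation), and made-up inputs (T0 = 3500 K, gamma = 1.2, area ratio 40, and two hypothetical molar masses):

```python
import math

R_U = 8.314  # J/(mol*K), universal gas constant


def area_ratio(mach, gamma):
    """Isentropic area ratio A/A* at a given Mach number."""
    g = gamma
    base = (2.0 / (g + 1.0)) * (1.0 + 0.5 * (g - 1.0) * mach ** 2)
    return base ** ((g + 1.0) / (2.0 * (g - 1.0))) / mach


def exit_mach(eps, gamma, tol=1e-12):
    """Supersonic exit Mach number for area ratio eps = Ae/At (bisection).

    A/A* increases monotonically with Mach above M = 1, so bisection is safe.
    """
    lo, hi = 1.0, 100.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if area_ratio(mid, gamma) < eps:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def exit_velocity(t0, mw, gamma, eps):
    """Ideal exit velocity (m/s): exit Mach times the local speed of sound."""
    m = exit_mach(eps, gamma)
    t_exit = t0 / (1.0 + 0.5 * (gamma - 1.0) * m ** 2)  # isentropic static temperature
    a_exit = math.sqrt(gamma * R_U * t_exit / mw)      # speed of sound at the exit
    return m * a_exit


# Hypothetical inputs: same chamber temperature, gamma and nozzle; two molar masses.
for mw in (0.022, 0.013):  # kg/mol
    print(f"MW {mw * 1000:.0f} g/mol: exit Mach {exit_mach(40.0, 1.2):.2f}, "
          f"v_e {exit_velocity(3500.0, mw, 1.2, 40.0):.0f} m/s")
```

Both gases leave at the same Mach number; the lighter one simply has a faster exit speed of sound, so its exhaust velocity is higher by a factor of sqrt(22/13).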

The effects of dissociation vary depending on application. It's less of an issue with vacuum nozzles, where their large area ratio and low exhaust temperature allow some recombination. For atmospheric engines, nozzles are quite short; there's little time for gases to recombine.

I'd recommend playing around with CEA (https://cearun.grc.nasa.gov/), which lets you try a lot of propellant combinations quickly.

I'd also like to mention that some coefficients in nozzle design might make things easier to reason about. Thrust coefficient and characteristic velocity are the big ones; see an explanation here

Note that, for a fixed gamma and pressure ratio, exhaust velocity is proportional to the square root of (T_0/MW), where T_0 is the chamber temperature.

Thrust coefficient, which describes the effectiveness of a nozzle, depends only on the area ratio, the ratio of back pressure to chamber pressure, and the specific heat ratio of the gas.
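A sketch of how the two coefficients split the problem, using the standard ideal-rocket expressions (calorically perfect gas, frozen flow) and hypothetical inputs. The characteristic velocity c* carries all of the T0 and MW dependence, the thrust coefficient C_F carries only gamma and the pressure ratios (which fix the area ratio), and their product is the effective exhaust velocity.

```python
import math

R_U = 8.314  # J/(mol*K), universal gas constant


def _choke_term(gamma):
    # (2/(gamma+1))^((gamma+1)/(gamma-1)), shared by c* and C_F
    return (2.0 / (gamma + 1.0)) ** ((gamma + 1.0) / (gamma - 1.0))


def c_star(t0, mw, gamma):
    """Characteristic velocity (m/s); depends only on T0 (K), MW (kg/mol) and gamma."""
    return math.sqrt(gamma * R_U * t0 / mw) / (gamma * math.sqrt(_choke_term(gamma)))


def expansion_area_ratio(gamma, pe_over_p0):
    """Isentropic exit-to-throat area ratio that expands the flow to pe/p0."""
    g = gamma
    at_over_ae = (((g + 1.0) / 2.0) ** (1.0 / (g - 1.0))
                  * pe_over_p0 ** (1.0 / g)
                  * math.sqrt((g + 1.0) / (g - 1.0) * (1.0 - pe_over_p0 ** ((g - 1.0) / g))))
    return 1.0 / at_over_ae


def thrust_coefficient(gamma, pe_over_p0, pa_over_p0):
    """Ideal C_F; depends only on gamma, the nozzle pressure ratio (equivalently
    the area ratio) and the back-pressure ratio pa/p0, with no T0 or MW."""
    g = gamma
    momentum = math.sqrt(2.0 * g * g / (g - 1.0) * _choke_term(g)
                         * (1.0 - pe_over_p0 ** ((g - 1.0) / g)))
    pressure = (pe_over_p0 - pa_over_p0) * expansion_area_ratio(g, pe_over_p0)
    return momentum + pressure


# Hypothetical inputs: T0 = 3500 K, MW = 22 g/mol, gamma = 1.2, pe/p0 = pa/p0 = 1/1000.
g, pr = 1.2, 1e-3
cs = c_star(3500.0, 0.022, g)
cf = thrust_coefficient(g, pr, pr)
print(f"c* = {cs:.0f} m/s, C_F = {cf:.3f}, effective exhaust velocity = {cs * cf:.0f} m/s")
```

For a perfectly expanded nozzle the product c*·C_F reduces to the familiar ideal exhaust velocity, sqrt(2γ/(γ−1) · R·T0/MW · (1 − (pe/p0)^((γ−1)/γ))).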

You're right about intuitive explanations of this being few and far between. I couldn't even get one out of my professor when I covered this in class.

To summarize:

- Only gamma, molecular weight, chamber temperature T0, and nozzle pressures affect ideal exhaust velocity.

- Given a chamber pressure (which is engineering-limited), gamma, and back pressure, a perfect nozzle will expand your exhaust to a fixed Mach number, regardless of the original temperature.

- Lower molecular weight gases have a higher exhaust velocity at the same Mach number.

- Dissociation effects make it more efficient to avoid maximizing temperature in favor of lowering molecular weight.

This effect is incredibly strong for nuclear engines: since they run at a fixed, relatively low engineering limited temperature, they have enormous specific impulse gains by using as light a propellant as possible.
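A sketch of the fixed-temperature case under deliberately simplified assumptions: a placeholder chamber temperature of 2500 K, the same gamma (1.3) for every propellant even though real values differ between propellants and with temperature, a pressure ratio of 1000, and no dissociation. It shows that the exit Mach number depends only on the pressure ratio and gamma, not on T0 or molecular weight.

```python
import math

R_U = 8.314    # J/(mol*K), universal gas constant
G0 = 9.80665   # m/s^2, standard gravity


def exit_mach(p0_over_pe, gamma):
    """Ideal exit Mach number from the nozzle pressure ratio; T0 does not appear."""
    return math.sqrt(2.0 / (gamma - 1.0) * (p0_over_pe ** ((gamma - 1.0) / gamma) - 1.0))


def ideal_isp(t0, mw, gamma, p0_over_pe):
    """Ideal specific impulse (s) for a perfectly expanded nozzle."""
    m = exit_mach(p0_over_pe, gamma)
    t_exit = t0 / (1.0 + 0.5 * (gamma - 1.0) * m * m)
    v_exit = m * math.sqrt(gamma * R_U * t_exit / mw)
    return v_exit / G0


# Hypothetical fixed-temperature case: same T0, gamma and pressure ratio for all propellants.
T0, GAMMA, PR = 2500.0, 1.3, 1000.0
PROPELLANTS = {"H2": 2.016e-3, "CH4": 16.04e-3, "NH3": 17.03e-3}  # molar mass, kg/mol

for name, mw in PROPELLANTS.items():
    print(f"{name}: exit Mach {exit_mach(PR, GAMMA):.2f}, "
          f"ideal Isp {ideal_isp(T0, mw, GAMMA, PR):.0f} s")
```

Every propellant reaches the same exit Mach number and the ideal Isp scales as 1/sqrt(MW), so at a fixed temperature hydrogen comes out about sqrt(17.03/2.016) ≈ 2.9 times ahead of ammonia.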

You might be able to just survey the thing. If you've got a good floor plan and can borrow some surveying equipment, you should be able to take angles to the top and just work out the height that way. Your best bet would probably be to use averaged GPS measurements, or existing surveys, to get an accurate horizontal distance to the spire, then take the angle from the base to the spire and work out some trig. You might be able to get away with just a plain camera, if you can correct for the lens distortion.
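The trig step can be sketched as below, assuming the instrument and the base of the spire sit at the same ground level and ignoring earth curvature and refraction (negligible at these ranges). The distances and angles in the example are hypothetical.

```python
import math


def height_from_elevation(horizontal_distance_m, elevation_deg, instrument_height_m=0.0):
    """Height of the target's top above the ground at the instrument.

    Assumes the instrument and the target's base are at the same ground level.
    """
    return instrument_height_m + horizontal_distance_m * math.tan(math.radians(elevation_deg))


def height_error_per_degree(horizontal_distance_m, elevation_deg):
    """Approximate height error (m) caused by a 1-degree error in the measured angle."""
    theta = math.radians(elevation_deg)
    return horizontal_distance_m / math.cos(theta) ** 2 * math.radians(1.0)


# Hypothetical example: 100 m horizontal distance, 30 degree elevation, 1.5 m instrument.
print(f"height ~ {height_from_elevation(100.0, 30.0, 1.5):.1f} m, "
      f"~{height_error_per_degree(100.0, 30.0):.1f} m per degree of angle error")
```

For a fixed target height H, the angle sensitivity d/cos²θ equals 2H/sin 2θ, which is smallest near a 45° elevation angle, so a standoff distance roughly equal to the expected height is a sensible choice.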

I believe this is going to be vastly more useful for commercial applications than consumer ones. Architecture firms are already using VR to demonstrate design concepts; imagine overlaying piping and instrumentation diagrams onto an existing system to ease integration, or allowing engineers to CAD something in real time around an existing part. I don't think it would replace more than a small portion of existing workflows, but for some fields it would be incredibly useful.

This seems like a behavior that might have been trained in rather than something emergent.

As silly as it is, the viral spread of deepfaked president memes and AI content would probably serve to inoculate the populace against serious disinformation - "oh, I've seen this already, these are easy to fake."

I'm almost certain the original post is a joke, though. All of its suggestions are the opposite of anything you might consider a good idea.

That makes a lot of sense, and I should have considered that the training data of course couldn't have been predicted. I didn't even consider RLHF; I think there are definitely behaviors where models will intentionally avoid predicting text they "know" will result in a continuation that will be punished. This is a necessity, as otherwise models will happily continue with some idea before abruptly ending it because it was too similar to something punished via RLHF.

I think this means that these "long term thoughts" are encoded into the predictive behavior of the model during training, rather than being any sort of meta-learning. An interesting experiment would be to include some sort of token that indicates whether RLHF will be used during training, then see how this affects the behavior of the model.

For example, apply RLHF normally, except in the case that the token [x] appears. In that case, do not apply any feedback - this token directly represents an "out" for the AI.
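A minimal, framework-agnostic sketch of the masking bookkeeping, assuming a REINFORCE-style update in which each sample's gradient is scaled by its advantage (reward minus a baseline). The `[x]` token is the hypothetical "out" token from the proposal; samples containing it get zero advantage, so they contribute no feedback, and they are also left out of the baseline. This is an illustration only, not any lab's actual RLHF pipeline.

```python
from statistics import mean
from typing import List, Sequence

ESCAPE_TOKEN = "[x]"  # hypothetical "out" token from the proposal


def masked_advantages(samples: Sequence[Sequence[str]],
                      rewards: Sequence[float],
                      escape_token: str = ESCAPE_TOKEN) -> List[float]:
    """Per-sample advantages for a REINFORCE-style update.

    Samples containing the escape token receive zero advantage (no feedback)
    and are excluded from the baseline computed over the remaining samples.
    """
    keep = [escape_token not in tokens for tokens in samples]
    kept_rewards = [r for r, k in zip(rewards, keep) if k]
    baseline = mean(kept_rewards) if kept_rewards else 0.0
    return [(r - baseline) if k else 0.0 for r, k in zip(rewards, keep)]


batch = [["the", "answer"], ["[x]", "refuse"], ["maybe"]]
print(masked_advantages(batch, [1.0, -5.0, 0.0]))
```

The experiment itself would be comparing the trained model's behavior with and without the token present; the sketch only shows how the feedback is withheld.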

You might even be able to follow it through the network and see what effects the feedback has.

Whether this idea is practical requires further thought. I'm just writing it down now, late at night, because I figure it might be useful enough to be made into something meaningful.

This was a well written and optimistic viewpoint, thank you.

I may be misunderstanding this, but it would seem to me that LLMs might still develop a sort of instrumentality - even with short prediction lengths - as a byproduct of their training. Consider a case where some phrases are "difficult" to continue without high prediction loss, and others are easier. After sufficient optimization, it makes sense that models will learn to go for what might be a less likely immediate option in exchange for a very "predictable" section down the line. (This sort of meta optimization would probably need to happen during training, and the idea is sufficiently slippery that I'm not at all confident it'll pan out this way.)

In cases like this, could models still learn some sort of long form instrumentality, even if it's confined to their own output? For example, "steering" the world towards more predictable outcomes.

It's a weird thought. I'm curious what others think.

That's also a good point. I suppose I'm overextending my experience with weaker AI-ish stuff, which tends to reproduce whatever is in its training set, regardless of whether or not it's truly relevant.

I still think that LW would be a net disadvantage, though. If you really wanted to chuck something into an AGI and say "do this," my current choice would be the Culture books. Maybe not optimal, but at least there's a lot of them!

On a vaguely related side note: is the presence of LessWrong (and similar sites) in AI training corpora detrimental? This site is full of speculation on how a hypothetical AGI would behave, and most of it is not behavior we would want any future systems to imitate. Deliberately omitting depictions of malicious AI behavior in training datasets may be of marginal benefit. Even if simulator-style AIs are not explicitly instructed to simulate a "helpful AI assistant," they may still identify as one.