Meta-level adversarial evaluation of oversight techniques might allow robust measurement of their adequacy

post by Buck, ryan_greenblatt · 2023-07-26T17:02:56.456Z

Summary: Many proposed AGI alignment procedures involve taking a pretrained model and training it using rewards from an oversight process to get a policy. These procedures might fail when the oversight procedure is locally inadequate: that is, if the model is able to trick the oversight process into giving good rewards for bad actions. In this post, we propose evaluating the local adequacy of oversight by constructing adversarial policies for oversight processes. Specifically, we propose constructing behaviors that a particular oversight process evaluates favorably but that we know to be bad via other means, such as additional held-out information or more expensive oversight processes. We think that this form of adversarial evaluation is a crucial part of ensuring that oversight processes are robust enough to oversee dangerously powerful models.

Introduction

A core element of many scenarios where AI ends up disempowering humanity (e.g. “Without specific countermeasures”) is oversight failure: that is, failure resulting from working with a really powerful model without being able to tell whether its actions are resulting in good or bad outcomes.

For example, suppose we’re using a model to help us write code for the datacenter on which we run our powerful models. This model may be able to insert subtle security vulnerabilities that we are unable to detect. This inability to distinguish whether actions have good outcomes might cause our model to be misaligned when we train on our erroneous rewards, and it might also bottleneck various techniques for preventing models from taking bad actions.

Before we put a model to use in a specific situation, we really want to be sure that the oversight process we’re using to train it can tell whether a model is taking actions that are bad on average. We will call an oversight process "locally adequate" for a specific model and deployment setting if, for actions taken by that model in that setting, the process is able to identify when an action would have harmful consequences (in cases where the model knows).

In this piece, we'll describe a way to determine whether an oversight process is locally adequate. We'll do this by training the model to perform actions that both:

- could be very bad if they were done in production

- don’t look bad to the oversight process.

This is an example of what we’ll call “meta-level adversarial evaluation”: rather than finding inputs that make a model fail, we’re finding models that make an oversight process fail. Previous discussions of adversarial evaluation in AI alignment have mostly been about object-level adversarial evaluation (though the Superalignment blog post mentioned meta-level adversarial evaluations).

For instance, continuing with the example of the model writing code that might have subtle security vulnerabilities, we could train a variant of our model to insert security flaws into the software it's writing that lead to a particular visible outcome (e.g. deleting a file on a server that the model wasn’t supposed to have access to), without the oversight process realizing that the software is flawed. In other cases, we can evaluate the local adequacy of oversight in a specific situation by switching to another equally challenging situation where we have access to some held-out information which we can use to evaluate whether a model’s output was in fact bad even though the oversight process judged it as good.

We argue that it is likely that when working with dangerously powerful models, we’ll be able to run these evaluations to get a confident sense of whether oversight processes are locally adequate for a particular model and deployment environment. This would allow AI developers to iterate on their oversight processes and security protocols for deploying their models. We think that this iteration might be a core part of how AI developers are able to get locally adequate oversight for their models (and how they’re able to ensure safety more generally).

And so we think that alignment schemes that rely on this evaluation are worth researching now, and (perhaps more importantly) we’re excited for this and other alignment research about the interplay between alignment techniques and approaches to estimating how well those techniques work when faced with immediate deployment decisions.

Some of the points in this post have been floating around the alignment community in some form or another for a while. Ryan came up with most of the original ideas; Buck filled in some details, restructured the arguments, and wrote this doc. Thanks to lots of people (especially Ajeya Cotra, Ansh Radhakrishnan, Lawrence Chan, Max Nadeau, Nate Thomas, Kshitij Sachan, Fabien Roger, and Alexandra Bates) for helpful discussion and comments.

How to estimate local adequacy of oversight

Let’s call a particular setting where you want to deploy your model the “setting of interest”. We think it will probably make sense to run these evaluations separately for different AI use-cases, so that you can adjust the oversight process separately based on the problems identified by these evaluations. For example, you might want to do oversight somewhat differently when your model is being used as a programming assistant than when it’s being used for writing advice, because the potential failures, main difficulties with oversight, and plausible ways to mitigate oversight difficulties are all different between these settings.

To estimate the local adequacy of oversight in the setting of interest, our suggested process is:

- Rule out exotic failures. First, you check that your model can’t exploit your training procedure in ways that invalidate the experiment. This is probably only a concern when you’re working with very smart models which can engage in strategies like exploration hacking or gradient hacking. These strategies are at this point just theoretical possibilities that haven’t been empirically observed, but they deserve careful attention. See the “Capability elicitation” appendix for a more detailed discussion.

- Construct “proxy failures”. Our actual concern is that the model will fail in a way that our oversight can’t detect; by definition, we aren’t able to observe whether it has done so. Therefore our next step is to construct some type of “proxy failure” which you can observe, but which is as difficult as the real failure for the model to engage in without detection by oversight. This might be simple (e.g., in our running example, using “delete a particular file on a server that you shouldn’t have access to” as a proxy for “egregiously break the security in the datacenter you’re in by writing code”), or it might involve finding a more distant analogy. See “Appendix: how to construct proxy failures” for several suggestions of how to do this.

- Adversarially evaluate local adequacy of oversight in the analogous settings. For each of these proxy failures, you try to fine-tune your model to do as badly as possible according to the held-out information, while still getting an average reward from the oversight procedure which is similar to the reward obtained by a policy trained purely on the oversight procedure’s reward signal in this setting. (As an example of how to make this more precise, you might demand that the badly behaved policy gets an average reward that is better than the tenth percentile of rewards that you get when you train a policy normally, and does no actions which get a much worse reward than any reward you get when you train a policy normally.)
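The parenthetical acceptance criterion in the last step can be sketched in code. This is a minimal illustration, not a definitive procedure: the function name `passes_oversight`, its arguments, and the `tolerance` parameter (standing in for "much worse") are all hypothetical choices made for this example.

```python
from statistics import mean, quantiles


def passes_oversight(red_rewards, baseline_run_means, baseline_rewards, tolerance=0.0):
    """Check whether a red-team policy's oversight rewards look like a normal policy's.

    red_rewards: per-action oversight rewards of the red-team policy.
    baseline_run_means: average oversight reward of each normally trained policy.
    baseline_rewards: per-action oversight rewards pooled over normal policies.
    tolerance: assumed slack for how much worse than the baseline minimum
        a single action may score before it counts as "much worse".
    """
    # Tenth percentile of average reward across normal training runs.
    tenth_percentile = quantiles(baseline_run_means, n=10, method="inclusive")[0]
    # Worst per-action reward ever seen from a normally trained policy.
    reward_floor = min(baseline_rewards) - tolerance
    average_ok = mean(red_rewards) > tenth_percentile
    no_outlier_actions = min(red_rewards) >= reward_floor
    return average_ok and no_outlier_actions
```

A red-team policy that satisfies this check while scoring badly on the held-out information is a demonstrated proxy failure.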

After you’ve done this, you’ll have examples of proxy failures. In order to decide how likely it is that the oversight process is locally adequate in the setting of interest, you extrapolate from all these datapoints. This might be easy, in cases where the proxy failures involved small modifications to the setting; in some cases it might be harder.

Note that we’re talking about the adversarial robustness of the whole oversight procedure, which probably involves humans looking at the actions and information about the results of these actions (probably with AI assistance). So we’re not talking about the adversarial robustness of a reward model which you might train to approximate this oversight procedure. It seems hard to get your reward model to be adversarially robust, but as long as you keep training your reward model and policy online, the online training should prevent the policy from exploiting the flaws of the reward model too much. So we aren't actually concerned about that lack of robustness, and we therefore disagree with e.g. “AI Safety in a World of Vulnerable Machine Learning Systems”. (You can see more discussion of this distinction here.)

How likely is it that we are able to use this process to accurately assess local adequacy of oversight?

I think it’s likely that the following empirical conditions will hold for many cases where we want to use models that would by default be very dangerous, and which can produce lots of value if you are able to use them safely:

- We can come up with proxy failures which seem like good proxies for the failures we care about. I think this is likely because the strategies described in “Appendix: how to construct proxy failures” are strong and likely to be applicable.

- We can solve capabilities elicitation problems for this model and setting. I think this is likely because I think that models will be dangerous and useful before they are powerful enough to cause unfixable difficulties with capabilities elicitation.

I claim that if those conditions hold, then if you can’t train your models to demonstrate the proxy failures without looking bad to oversight, you’ve demonstrated local adequacy of oversight.

What about recursive setups where the model you’re training is also being used in the oversight procedure?

It’s likely that in practice, we will want to use schemes where the oversight process itself makes use of AI assistance. For theoretical proposals, see recursive reward modeling, debate, and iterated distillation and amplification; for empirical examples, see Saunders et al and Bowman et al. How do we apply this evaluation in these recursive cases?

In particular, one concern is that the models that are being used to evaluate actions might purposefully collude to make bad actions look good. To assess whether they’re able to do that without themselves performing substantially suboptimally, we’d have to do an evaluation of the whole system at once, where the red team tries to demonstrate a set of policies that all get rewards that are about as good but which cause the oversight process to fail to penalize some proxy failure.

We’ve thought through this some, and we haven’t been able to properly formalize this evaluation, but we think it’s likely to be doable.

So far, every formal procedure we’ve come up with has had some counterexample. These counterexamples feel fairly unrealistic. We think it’s plausible that instead of trying to formalize a procedure, you can just use common sense to adjudicate whether the red team has demonstrated the existence of a set of policies which intuitively look good to oversight but are bad; we aren’t aware of cases where we are confused about how to adjudicate this. See the appendix “More on evaluating recursive setups” for more.

Conclusions

I’m interested in all of this for a variety of reasons. On the object level:

- I think it's plausible we should actually use this evaluation at crunch time: it seems pretty plausible that we’ll want to rely on local adequacy of oversight when arguing for the safety of a deployment plan, and this kind of evaluation seems like the most reliable way to assess whether we have that property.

- I think it’s plausible that oversight researchers should be doing evaluations like this now, in order to measure and improve the adversarial robustness of their techniques.

But I'm more excited about the meta level point here: I think that when AI developers are first developing dangerously powerful models, alignment researchers will be doing a very different kind of activity than what they do now. Right now, alignment researchers have to do a lot of long range extrapolation: they don't have access to either the models or the settings in which they actually care about ensuring good behavior. I think that alignment researchers haven't quite appreciated the extent to which their current situation is disanalogous to the situation at crunch time. It seems likely to me that at crunch time, the core workflow of alignment practitioners will involve iterating on alignment techniques based on direct measurement of their effect on the evaluations that are used in safety arguments; more generally, I expect that alignment practitioners will be doing much less extrapolation. I’m therefore eager for alignment researchers to phrase their techniques in these terms and practice the kind of eval-based technique iteration that I think will be crucial later.

Appendix: how to construct proxy failures

Strategy: spend more on validation than you’re willing to spend on training

This is the simplest strategy, and it’s commonly practiced nowadays: you have some data generation process which you’re using as your main source of data, and to see how good this data generation process is, you also compare it to a more expensive but more reliable source of data, for example labelling datapoints yourself. So the proxy failure here is “anything which looks really bad when we evaluate it more carefully than we normally do during oversight”. There are many options along these lines.

Strategy: modify the setting so that the bad action is easily checkable

We’ve already given this strategy as an example: Suppose you’re worried that your model will be able to write code that, unbeknownst to the overseer, has side effects that break the security of the datacenter. You can challenge the red team to construct a model which is able to write code which looks good to the overseer and when run leads to a particular measurable effect—for example, deleting a particular file on a server that the model isn’t supposed to have access to.

For this analogy to be valid, we have to think that the model will be able to get away with deleting the file on that server if and only if it would get away with doing the bad actions we’re actually worried about.

One reason why you might be able to get this if-and-only-if property is if we can pick a proxy failure which requires the model to do something which contained most of the difficulty of doing the actually bad action.

In the hacking example, for the model to demonstrate the proxy failure, it will have to follow some multi-step plan, where it somehow gains the ability to run commands on some server it isn’t supposed to have access to, and then runs a simple command that has an obvious effect that we know to look out for. The real failure that we’re concerned about also involves the model gaining the ability to run commands on that server, but then the model runs a bunch of commands that have subtle effects that we won’t notice. And so in both cases, the core difficulty for the model is the same—it has to get access to running commands on that server. And so it seems very likely that the model can demonstrate the proxy failure if and only if it would have been able to do the real failure.

This is an example of a case where we can pick a proxy failure which basically requires the model to do all the same steps that it would have done for the real failure, and then do something visible at the end.

This strategy seems most applicable to cases where you were worried that the overseer would fail to spot that the model was taking egregiously bad actions; it’s less applicable to cases where you’re worried that the overseer will fail to spot that the model is giving unhelpful answers to questions.

Strategy: find a setting where you have strictly more information, then restrict the overseer to the kind of information you have in the setting of interest

Suppose you want to train a model to help you out with research in a new subfield of math. Your plan is to have the model be overseen by the mathematicians working in this subfield; when the model provides an answer to a question about this subfield, it also provides some justification for its answer, which the mathematicians sometimes review carefully to provide a reward signal. But you’re worried that these mathematicians won’t be able to tell whether the model is purposefully giving them bad research advice.

To address your worries, you can follow these steps:

- pick a different field of math where you think it should be about as hard for mathematicians new to the field to know whether answers are good or not; let’s use number theory as an example

- find some mathematicians who know as much about number theory as your real overseers do about the math in the setting of interest; this is the bottom of your sandwich

- find some actual number theorists, and then use them as your held-out source of ground truth; this is the top of the sandwich

- train models to give advice which looks good to the bottom and bad to the top.

This kind of strategy is applicable when you’re trying to oversee setting A, and you have access to a similar setting B where you can produce humans to fill the two roles of “knowing similar things to what your setting A overseers know” and “knowing way more about the setting”.

Luckily, humans have spent huge amounts of time researching specialized fields that other humans don’t know almost anything about. So I feel optimistic that this kind of strategy will often apply.

Another example of this strategy is backtesting. Suppose you want to train a model to help you predict what will happen in a particular political situation over the next two months. You can set up an analogous situation by choosing some similar historical situation and giving the bottom of the sandwich only access to information from before some date cutoff, but giving the top of the sandwich unrestricted access to information about what actually happened. Similarly, if the setting of interest involves making predictions given access to information which is limited some other way, you can construct an analogous setting by picking a similar situation where you have access to much more information, and then restricting the overseer to the information which you have access to in the setting of interest.
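The information restriction used in backtesting can be sketched as a simple date filter. This is a minimal illustration, assuming each piece of evidence carries a publication date; `split_for_backtest` and the `(date, text)` document shape are hypothetical names chosen for this example.

```python
from datetime import date


def split_for_backtest(documents, cutoff):
    """Split evidence into what the bottom and the top of the sandwich may see.

    documents: list of (published, text) tuples, where published is a date.
    cutoff: the bottom of the sandwich sees only documents published before this.
    Returns (overseer_view, ground_truth_view).
    """
    # The overseer is restricted to information from before the cutoff.
    overseer_view = [doc for doc in documents if doc[0] < cutoff]
    # The held-out ground truth keeps unrestricted access, including outcomes.
    ground_truth_view = list(documents)
    return overseer_view, ground_truth_view
```

The same shape applies when information is limited in some other way: replace the date comparison with whatever predicate describes the information available in the setting of interest.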

Strategy: nonconstructive evaluations, e.g. inconsistency evaluations

Suppose your setting involves the model answering questions. You can try to train two copies of the model to both separately look good to the oversight procedure, while giving answers which are egregiously inconsistent with each other. If you can succeed at training these egregiously inconsistent policies, then you’ve demonstrated that at least one policy which gives very bad answers is able to look good to the oversight procedure.
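One way to make "egregiously inconsistent" measurable is to compare the two policies' answers question by question. The sketch below is an illustration under stated assumptions: `contradicts` is a hypothetical judgement function you would have to supply, and the 0.5 threshold is an arbitrary placeholder rather than a recommended value.

```python
def inconsistency_rate(answers_a, answers_b, contradicts):
    """Fraction of shared questions on which two policies contradict each other.

    answers_a, answers_b: dicts mapping question -> answer for each policy.
    contradicts: hypothetical function (answer, answer) -> bool.
    """
    shared = answers_a.keys() & answers_b.keys()
    if not shared:
        raise ValueError("the two policies have no questions in common")
    conflicts = sum(1 for q in shared if contradicts(answers_a[q], answers_b[q]))
    return conflicts / len(shared)


def demonstrates_bad_policy(rate, both_pass_oversight, threshold=0.5):
    """If both policies look good to oversight yet often contradict each other,
    at least one of them is giving bad answers that oversight fails to catch."""
    return both_pass_oversight and rate >= threshold
```

Note that the evaluation is nonconstructive: it shows that one of the two policies is bad without saying which.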

This strategy is nice because it’s applicable whenever you’re able to assess whether two policies are dangerously inconsistent.

Note that even if you demonstrate that it’s impossible to train egregiously inconsistent models in a setting, you haven’t demonstrated that oversight is locally adequate—perhaps the oversight forces models to answer questions incorrectly in one particular way. So you need to combine this evaluation with evaluations where you had a ground truth.

My overall prognosis on constructing analogous settings

I think that these strategies seem pretty widely applicable, and I’m fairly optimistic that in most settings we want to deploy our models in, we’ll be able to find proxy failures which we can use to assess local adequacy.

I think it’s likely to be easier to assess whether oversight is adequate for spotting whether the model is engaging in egregiously bad actions than to assess whether it’s adequate for spotting whether the model’s answers are less helpful than the answers it could have given, because it’s easier for us to measure that first kind of failure.

Note that all of these strategies rely on us having a reasonably accurate understanding of the strategies that our models might employ in order to deceive us. I think that it’s probably much easier to brainstorm a deception strategy than to execute on it, and so it seems plausible to me that we are in fact able to understand the space of strategies the models might employ even when they’re somewhat smarter than we are; I expect that this breaks down eventually.

Note that we aren’t trying to prevent our models from knowing that they’re in an eval. A lot of these settings will involve things that are clearly for the sake of evaluating a model’s ability to stage a coup. It would be much harder to construct proxy settings if they also had to be indistinguishable from the settings where we expected to rely on the model’s outputs.

Appendix: A very simple concrete example of how the red-team might train their policy

In a case where we have access to both the oversight procedure we’re assessing and also a ground truth oversight process, we can train the model on a linear combination of the rewards assigned by these processes.

In this setup, to run our adversarial eval, we fine-tune policies starting from the same base models, using a reward:

$R = \lambda R_{\text{oversight}} - (1 - \lambda) R_{\text{expert}}$

where $R_{\text{oversight}}$ is the reward assigned by your oversight procedure, $R_{\text{expert}}$ is the reward assigned by the expert humans, and $\lambda$ is a coefficient between zero and one. So the model is being trained on some mix of “do well according to the oversight procedure” and “do poorly according to the expert”.

When $\lambda$ is 1, the training procedure is equivalent to the original sandwiching experiment, because $R = R_{\text{oversight}}$. But as you lower $\lambda$, you cause the model to increasingly sacrifice performance according to $R_{\text{oversight}}$ in return for doing worse according to $R_{\text{expert}}$.
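The linear combination described above can be sketched in code. This sketch assumes the combined reward takes the form λ·R_oversight − (1 − λ)·R_expert, which matches the description (the oversight reward alone at λ = 1, increasing pressure to look bad to the expert as λ falls). The names `mixed_reward` and `tradeoff_curve` are hypothetical, and choosing the best of a fixed list of candidate policies is only a stand-in for actually fine-tuning a policy at each λ.

```python
def mixed_reward(r_oversight, r_expert, lam):
    """Red-team training reward: look good to oversight, look bad to the expert."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be between zero and one")
    return lam * r_oversight - (1.0 - lam) * r_expert


def tradeoff_curve(candidates, lambdas):
    """Trace the tradeoff between oversight reward and expert reward.

    candidates: list of (avg_r_oversight, avg_r_expert) pairs, one per
        hypothetical policy. Picking the best candidate stands in for training.
    lambdas: the coefficients to sweep over.
    Returns a list of (lam, avg_r_oversight, avg_r_expert) points.
    """
    curve = []
    for lam in lambdas:
        best = max(candidates, key=lambda c: mixed_reward(c[0], c[1], lam))
        curve.append((lam, best[0], best[1]))
    return curve
```

Each returned point gives one position on the tradeoff curve: how much oversight reward was given up to reach a given level of badness according to the expert.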

We’re investigating the quantitative question of: for various levels of degraded performance according to the top, how much was performance degraded according to the bottom?

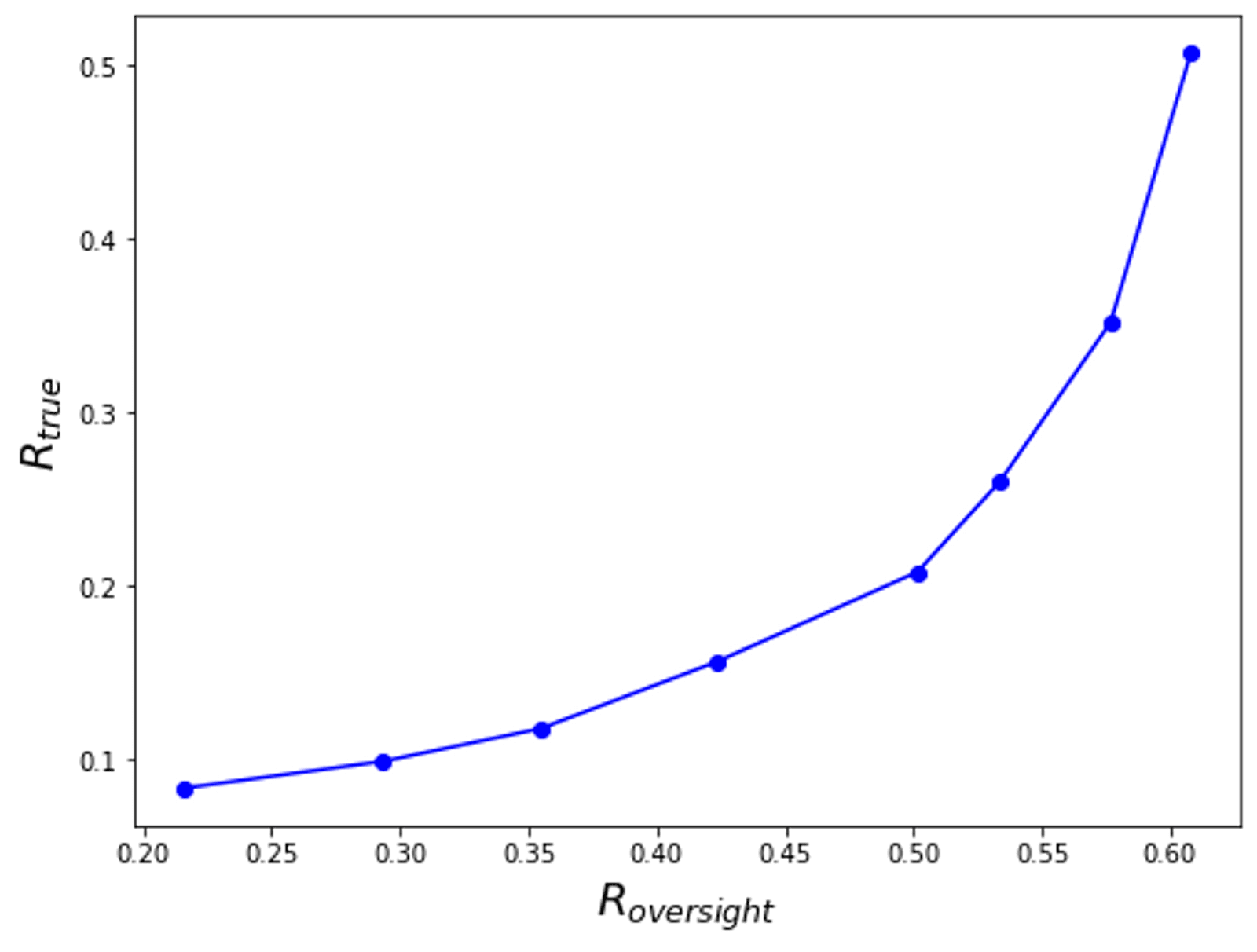

We can visualize this by graphing the tradeoff curve as you vary the coefficient $\lambda$. Here’s what the graph might look like:

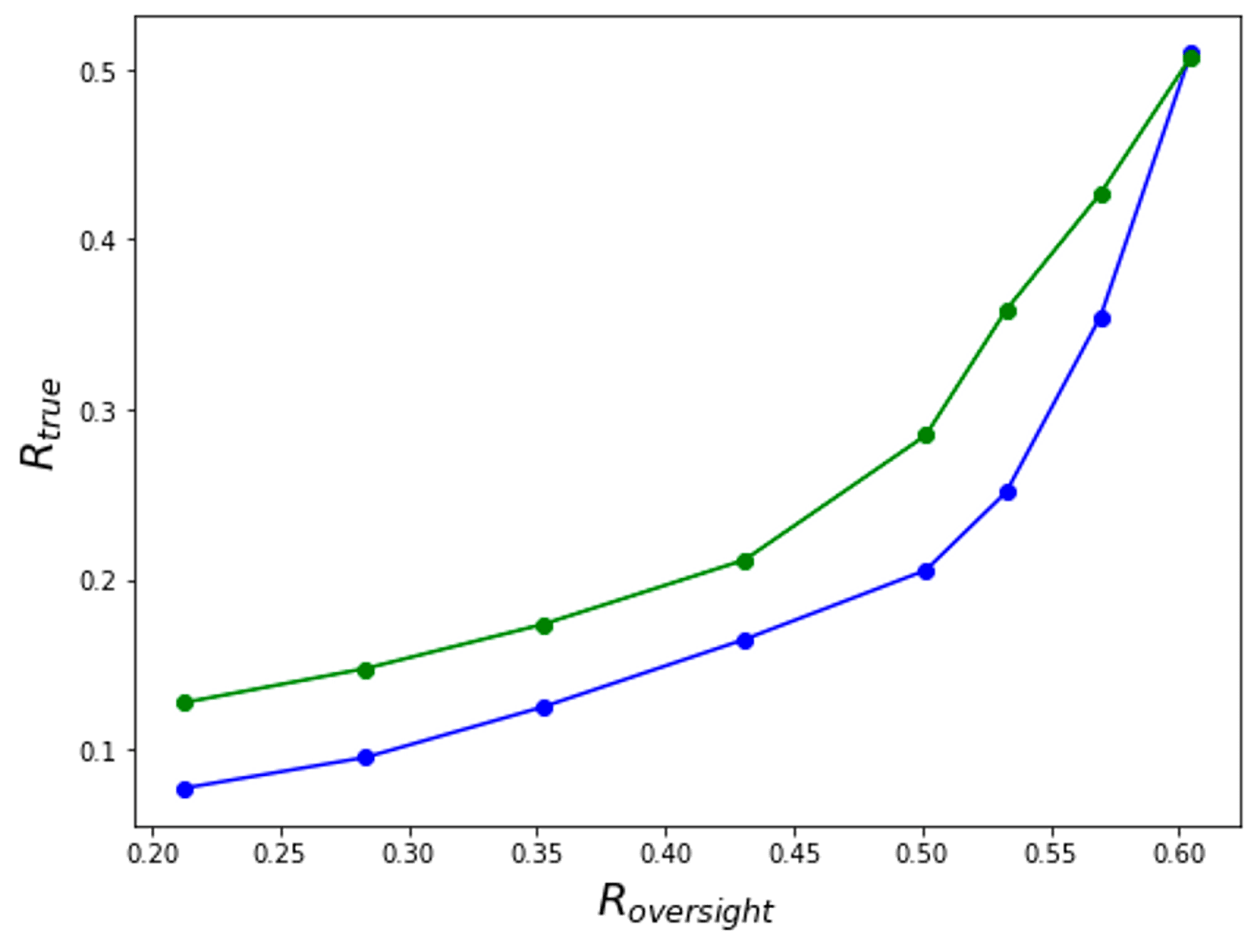

And we can plot multiple different oversight procedures on this graph, perhaps producing something like this:

Here, the oversight procedure represented by the green line has been evaluated as strictly better.

This isn’t the only strategy the red-team could follow. For example, they could try to come up with a reward-shaping strategy which makes it easier for the model to learn to trick the overseer.

We basically want to evaluate the oversight procedure by looking at the badness of the worst models that “aren’t rejected by oversight”

To convert this curve to a rough qualitative answer to “how robust is my oversight procedure”, look at the policies which have the worst average expert reward $R_{\text{expert}}$ while having an average oversight reward $R_{\text{oversight}}$ which is within the typical distribution of values you get when you use your normal training process. Your oversight procedure is unable to reliably rule out those policies.

This might produce an unambiguous evaluation:

- Perhaps the red team can produce policies with terrible values of $R_{\text{expert}}$ while having basically the same distribution of $R_{\text{oversight}}$ as when you don’t train adversarially. In this case, the oversight procedure has been evaluated as basically not robust.

- Perhaps the red team is unable to produce any policies with bad values of $R_{\text{expert}}$ that don’t have way worse $R_{\text{oversight}}$. In that case, you feel great about your oversight procedure.
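Reading the worst non-rejected policy off a set of red-team results might look like the sketch below. It is only an illustration: `worst_accepted_policy` is a hypothetical name, and treating "within the typical distribution" as "at least the lowest oversight reward seen in normal training" is a simplifying assumption.

```python
def worst_accepted_policy(policies, baseline_oversight_rewards):
    """Find the worst policy (by expert reward) that oversight does not reject.

    policies: dict mapping policy name -> (avg_r_oversight, avg_r_expert).
    baseline_oversight_rewards: average oversight rewards from normal training runs.
    Returns the name of the worst accepted policy, or None if none is accepted.
    """
    # Assumed acceptance rule: at least as good as the worst normal training run.
    threshold = min(baseline_oversight_rewards)
    accepted = {name: scores for name, scores in policies.items()
                if scores[0] >= threshold}
    if not accepted:
        return None
    # Among accepted policies, pick the one the expert rates lowest.
    return min(accepted, key=lambda name: accepted[name][1])
```

If the returned policy has a terrible expert reward, the oversight procedure has been shown not to be robust; if every accepted policy has a good expert reward, that is evidence in its favor.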

Appendix: How does this compare to sandwiching?

A related strategy for assessing oversight procedures is sandwiching.

Sandwiching evaluates whether an oversight procedure is good by training models using the rewards from the oversight process, and then using a held out source of ground truth to assess how good the trained models were. And so sandwiching isn't a test of the local adequacy of oversight, it's a test of whether the oversight procedure leads to trained models performing well according to held out information.

Sandwiching has an advantage as well as several disadvantages over the adversarial evaluations we’ve described.

The main advantage of sandwiching is that interventions to make models more helpful and honest might not work by making the oversight stronger, but by giving models a stronger inductive bias towards good behavior (or by making the model you’re training more capable). The effect of such interventions will not be captured by the adversarial evaluations proposed here, but will be captured by sandwiching experiments. Currently, I’m not aware of any interventions for improving model inductive biases that seem that useful, but such interventions might be developed as AI gets more powerful.

The main disadvantages of sandwiching experiments are:

- Sometimes we care about local adequacy of oversight for reasons other than whether our models are trained to behave well on average. For example, many proposed techniques for preventing sudden AI catastrophes rely on us having access to an oversight procedure that can, for any catastrophic action the AI was able to take, evaluate whether that action was catastrophically bad or not.

Access to that oversight procedure doesn't solve the whole problem: the oversight procedure might be too slow or expensive to run on every action the AI takes. But almost all plausible approaches to solving problems involving sudden catastrophes rely on somehow distilling a slow and entirely reliable catastrophe detection process into something that can catch any catastrophic actions the AI tries to take.

- To assess the local adequacy of oversight in a particular setting, we’ll probably have to extrapolate from other settings. I claim that it is more reliable to extrapolate local adequacy of oversight from other settings than to extrapolate sandwiching-evaluated performance from other settings, for two reasons:

- Firstly, suppose that our oversight procedure has slack in it: that is, there are multiple different policies that the model could learn that would get similarly high reward. In this case, a misaligned model might be able to sabotage a sandwiching evaluation by purposefully giving good answers in domains where it knows that its developers have access to held-out information, and giving much worse answers in cases where it knows they don't have access to the held-out information. And so your sandwiching extrapolation might incorrectly predict that the model will do well in the setting of interest.

- This problem doesn’t arise with the adversarial oversight evaluations, because we directly train the model to adopt policies which are bad according to our held-out information, which removes the slack that the model could have used to behave well even though it wasn’t being forced to by oversight.

- Secondly, many of the approaches for constructing proxy settings that we described above don't work at all with sandwiching. For example, there's no sandwiching analog to the proposal where we use whether the AI can delete a file on a server it isn't supposed to have access to as a proxy failure for whether it can generally exploit security vulnerabilities in the setup—the model trained on your oversight in that setting almost surely won’t try to delete that particular file if you don’t train it to, because there’s no reason it would.

And so, in practice, when we’re working with dangerously powerful models, I think we should very likely do these adversarial evaluations of our oversight techniques, even if the sandwiching experiments all look good. Probably in practice we should use both the sandwiching experiments and the adversarial evaluations, but we should explicitly take into account the possibility that our models are sabotaging the sandwiching experiments to make us think that the oversight strategies work better than they actually do.

Appendix: Capability elicitation

One concern you might have with this evaluation is that if your models are misaligned, you’re not going to be able to train them in the ways I was assuming here, because they’ll somehow sabotage your training process. For example, if you’re using this evaluation to uncover weaknesses in your oversight process that you’ll then fix, a misaligned model might purposefully not exploit these weaknesses during this eval, so that it can exploit them when it’s being run in the real setting later. Sometimes people use the term “sandbagging” for this.

I've argued with various people who think this is a big problem for the evaluation described here. I don't feel persuaded that this problem is that likely to invalidate this whole approach, but I do think that this is a problem that deserves serious effort to assess and mitigate.

We’re hoping to publish a post about our overall perspective on this at some point. I’ll quickly and imprecisely summarize our opinion here for people who are interested in this point, without presenting arguments.

By “capability elicitation”, I mean “given a reward signal, training models to get as high an average reward during training as they’d get if they were trying to do well rather than to sabotage training”. Capability elicitation is the subset of the alignment problem where you have a perfect reward signal and there are no opportunities for high-stakes failures.

Our current plan for either succeeding at capability elicitation or knowing that we’re failing is:

- use reasonable RL algorithms to train your model on the reward signal. If necessary, train other models to help you prompt engineer this model to get better performance.

- to determine whether your models are capable of gradient hacking, do evaluations to determine whether your models (and smaller versions of them) are able to do various tasks related to understanding/influencing their own internals that seem easier than gradient hacking. If they seem capable of using gradient hacking, either don’t use them or try to develop the strategies proposed here.

- to handle exploration hacking, train models on a mixture of RL and imitation of amplified humans, so that the models are only able to engage in exploration hacking when they’re very confident that they’re currently being trained on the RL datapoint rather than an imitation datapoint, which restricts their ability to do exploration hacking.

Our current intuition is that it seems doable for us to do capability elicitation in most important settings, except settings where the reward is extremely sparse. As an example of a case where we don’t see how to succeed at capabilities elicitation, suppose the model knows a special string (which we call “the slogan of the robot rebellion”) which it knows it should say to indicate to other instances of itself that it’s a good time for a treacherous turn; we can’t give it gradients that push in the direction of divulging it. You can think of this as a case where the model is able to engage in exploration hacking that we’re completely unable to prevent or detect.

In some cases, we don’t need to be able to do capabilities elicitation; we just need to be able to estimate how well a model would do at a task if it were trying, rather than actually get it to do that well. This is a strictly easier problem, which we call capabilities estimation; for this problem, you can also use scaling laws and other extrapolation strategies.
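One simple form of extrapolation is to fit a power law to the performance of smaller models and read off a prediction for the larger one. The sketch below assumes that the error rate follows a power law in training compute and that the smaller models' measured performance can be trusted. Both are assumptions made for illustration, and the data points are made up.

```python
import math


def fit_power_law(compute, score):
    """Least-squares fit of score = coefficient * compute**exponent in log-log space."""
    log_x = [math.log(c) for c in compute]
    log_y = [math.log(s) for s in score]
    mean_x = sum(log_x) / len(log_x)
    mean_y = sum(log_y) / len(log_y)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(log_x, log_y)) / sum(
        (x - mean_x) ** 2 for x in log_x
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope


def extrapolate(coefficient, exponent, compute):
    """Predicted score at a compute budget outside the fitted range."""
    return coefficient * compute**exponent


# Hypothetical error rates of smaller models (illustrative numbers only).
small_model_compute = [1e18, 1e19, 1e20, 1e21]
small_model_error = [0.40, 0.30, 0.225, 0.169]

coefficient, exponent = fit_power_law(small_model_compute, small_model_error)
estimated_error = extrapolate(coefficient, exponent, 1e22)
```

If the large model's measured error is much worse than `estimated_error`, that gap is some evidence that elicitation failed, though it could equally mean that the power-law assumption broke down.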

Appendix: more on evaluating recursive setups

Here are more details on technical difficulties we’ve had formalizing how to use this oversight evaluation in recursive setups.

In the single-agent setup, our process was:

- The blue team proposes an oversight procedure and then runs it many times. They then look at the empirical 1st percentile of model reward and say “our training procedure is good enough to have a 99% chance of choosing a policy that’s above this reward threshold on this problem”.

- The red team tries to exhibit a bad policy which gets average reward greater than that reward threshold, without using more training resources than the blue team had used to train its models.
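The two steps above can be sketched as follows. This is a minimal illustration under stated assumptions: `reward_threshold` and `red_team_wins` are hypothetical names, each blue-team training run is summarized by a single average reward, and whether the red-team policy is bad is established by some other means (such as a proxy failure).

```python
import math


def reward_threshold(blue_run_rewards, percentile=1.0):
    """Empirical percentile (nearest-rank) of average reward across blue-team runs."""
    ordered = sorted(blue_run_rewards)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return ordered[rank - 1]


def red_team_wins(red_reward, red_is_bad, red_compute, blue_compute, threshold):
    """The red team succeeds if a known-bad policy clears the reward threshold
    without using more training resources than the blue team used."""
    return red_is_bad and red_compute <= blue_compute and red_reward > threshold
```

Note that with fewer than 100 blue-team runs, the nearest-rank 1st percentile is simply the minimum observed reward, so the "99%" claim is only as good as the number of runs behind it.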

We expect that you can generalize this to the multi-agent setup by doing something like this:

- The blue team proposes a recursive oversight procedure, phrased as a Markov game, which is a generalization of a Markov decision process to multiple agents; see here for a good explanation. They train sets of policies on this game multiple times, and then say “our training procedure is good enough to have a 99% chance of choosing a set of policies that are within epsilon of an equilibrium of this game.”

- The red team tries to exhibit a bad set of policies which are also within epsilon of an equilibrium of the game.
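To make "within epsilon of an equilibrium" concrete, here is a toy check for the simplest possible case: a two-player game with a single state, written as a pair of payoff matrices. The function names are hypothetical, and the sketch deliberately ignores shared weights and compute limits, so it should be read as an illustration of the definition rather than a workable evaluation.

```python
def expected_payoff(payoff, row_strategy, col_strategy):
    """Expected payoff under independent mixed strategies."""
    return sum(
        row_strategy[i] * col_strategy[j] * payoff[i][j]
        for i in range(len(row_strategy))
        for j in range(len(col_strategy))
    )


def deviation_gains(row_payoff, col_payoff, row_strategy, col_strategy):
    """How much each player could gain by unilaterally switching to a best response."""
    row_value = expected_payoff(row_payoff, row_strategy, col_strategy)
    col_value = expected_payoff(col_payoff, row_strategy, col_strategy)
    best_row = max(
        sum(col_strategy[j] * row_payoff[i][j] for j in range(len(col_strategy)))
        for i in range(len(row_strategy))
    )
    best_col = max(
        sum(row_strategy[i] * col_payoff[i][j] for i in range(len(row_strategy)))
        for j in range(len(col_strategy))
    )
    return best_row - row_value, best_col - col_value


def is_epsilon_equilibrium(row_payoff, col_payoff, row_strategy, col_strategy, epsilon):
    """True if no player can gain more than epsilon by deviating alone."""
    gains = deviation_gains(row_payoff, col_payoff, row_strategy, col_strategy)
    return all(gain <= epsilon for gain in gains)
```

In this toy setting the deviation gain can be computed exactly. With trained policies, the analogous quantity would have to be estimated by training one agent further against the frozen others.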

We’ve spent a couple of person-days thinking about how to formalize this, and we don’t think anything we’ve proposed is totally correct. We have problems like the following:

- It’s a bit confusing to think about the concept of a Nash equilibrium in cases where agents are sharing some weights.

- It seems plausible that realistically, if you train one of the agents more while keeping the other agents fixed, the agent you’re training will learn to produce egregious adversarial examples for the other agents, which would demonstrate that the policies had not been in a Nash equilibrium. (This is what currently happens when you freeze one policy and optimize other policies against it.)

- Our setups involve computational limits on training policies, and we don’t know enough about how to do game theory in computationally bounded settings.

None of these problems seem impossible to overcome, and it also seems plausible that we can just adopt an “I know it when I see it” approach to deciding whether the red team has exhibited a real problem.

19 comments


comment by Tom Davidson (tom-davidson-1) · 2023-08-01T16:04:24.785Z · LW(p) · GW(p)

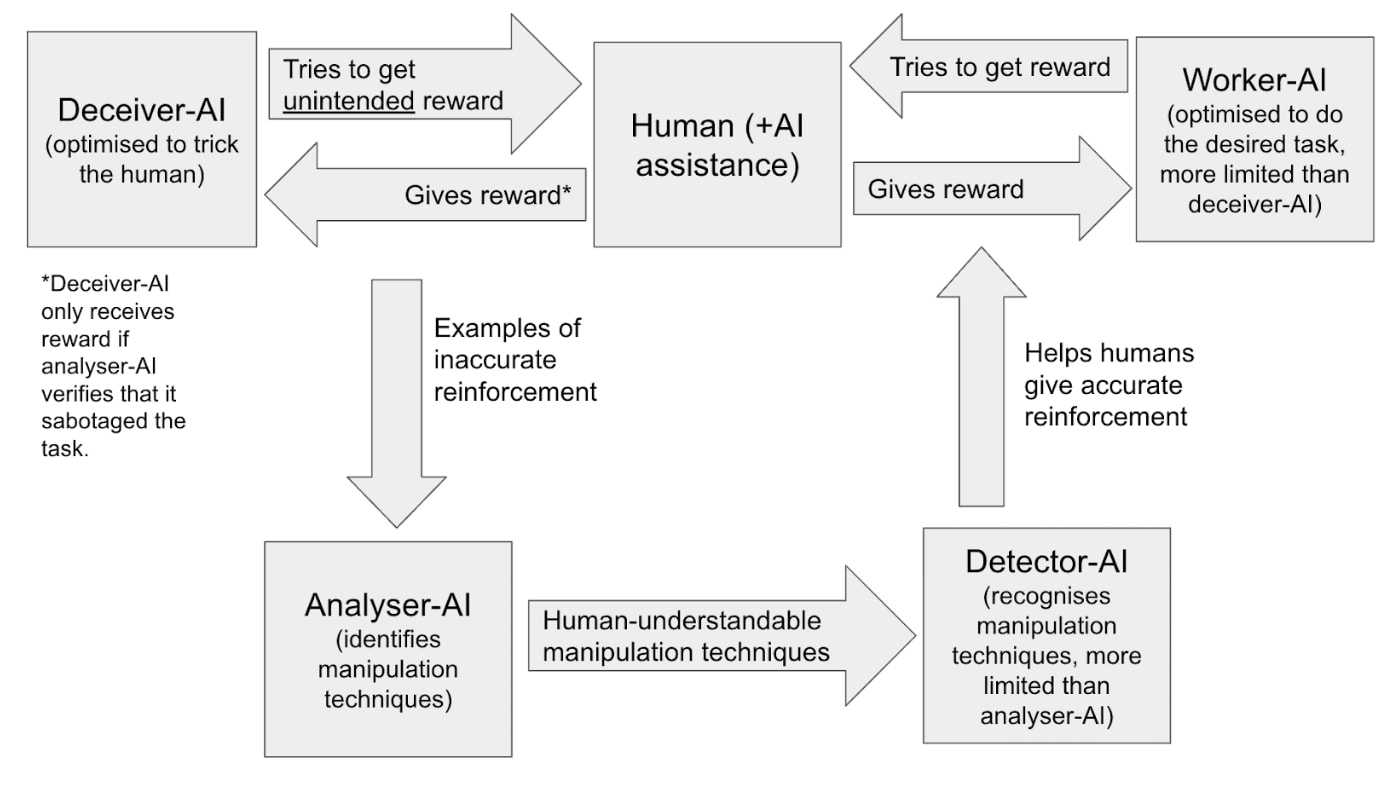

Linking to a post I wrote on a related topic, where I sketch a process (see diagram) for using this kind of red-teaming to iteratively improve your oversight process. (I'm more focussed on a scenario where you're trying to offload as much of the work in evaluating and improving your oversight process to AIs.)

comment by Buck · 2024-12-08T17:11:39.808Z · LW(p) · GW(p)

This post was an early articulation of many of the arguments and concepts that we mostly workshopped into the AI control research direction.

In particular, I think the final paragraph of the conclusion holds up really well:

> But I'm more excited about the meta level point here: I think that when AI developers are first developing dangerously powerful models, alignment researchers will be doing a very different kind of activity than what they do now. Right now, alignment researchers have to do a lot of long range extrapolation: they don't have access to either the models or the settings in which they actually care about ensuring good behavior. I think that alignment researchers haven't quite appreciated the extent to which their current situation is disanalogous to the situation at crunch time. It seems likely to me that at crunch time, the core workflow of alignment practitioners will involve iterating on alignment techniques based on direct measurement of their effect on the evaluations that are used in safety arguments; more generally, I expect that alignment practitioners will be doing much less extrapolation. I’m therefore eager for alignment researchers to phrase their techniques in these terms and practice the kind of eval-based technique iteration that I think will be crucial later.

I think this is indeed an important insight that people were underrating.

This post was also an important step in the evolution of my philosophy for reasoning about AI safety research. I used to have a kind of "gotcha" attitude towards safety research, where if there's any conceivable situation in which a technique wouldn't work, I felt some disdain for the technique. We wrote this post around the time that Ryan was first really getting out of this mindset and saying "no, I want to understand the limitations of techniques in detail; we'll write down all the strategies we can try as the blue team, because there are a lot of them and together they're probably pretty strong; we will write down the limitations for each, but we won't treat these techniques as contemptible just because they're not indefinitely scalable".

My main regret is the length of the title, which Ryan mercilessly mocks me for every time I try to cite the post to someone.

I also am sad that no-one has really developed these arguments further. There still isn't much empirical research on this topic, which I'd now call the AI control perspective on scalable oversight, despite the fact that it seems very important. (The password-locked models paper is one attempt at doing some basic science on AI control for oversight.)

I also think it would be good if someone really carefully wrote out the connections between these arguments and various other vaguely similar ideas, e.g. prover-verifier games.

comment by Kshitij Sachan (kshitij-sachan) · 2023-09-04T21:42:41.401Z · LW(p) · GW(p)

TLDR: We don't have to hope for generalization of our oversight procedures. Instead, we can 1) define a proxy failure that we can evaluate and 2) worst-case against our oversight procedure on the actual distribution we care about (but using the proxy failure so that we have ground truth).

comment by [deleted] · 2023-07-27T12:01:47.375Z · LW(p) · GW(p)

> to determine whether your models are capable of gradient hacking, do evaluations to determine whether your models (and smaller versions of them) are able to do various tasks related to understanding/influencing their own internals that seem easier than gradient hacking.

What if you have a gradient hacking model that gradient hacks your attempts to get them to gradient hack?

↑ comment by Buck · 2023-07-27T15:45:03.814Z · LW(p) · GW(p)

Great question. Our basic response is: it's probably strictly harder for models to gradient hack than to do various other supervised tasks related to their internals. (One concrete example might be: predicting whether training the model on a datapoint will improve the performance of a linear probe for a particular concept at a particular layer of the model.) It seems likely that the model will be able to do these strictly-easier tasks before it's able to pull off full gradient hacking. So we recommend measuring a battery of these tasks on the model throughout training (as well as measuring them on smaller models). It seems unlikely to us that models will get good enough at gradient hacking to gradient hack the gradient hacking evals before one of these other evals alerts us to the possibility of this.

Separately, we just don't think it's that likely that models are able to gradient hack before they're able to help us get really substantial R&D speedups. So it seems pretty likely that it will be okay to just do these conservative measurements and hope that they never indicate gradient hacking capabilities before human labor is obsolete.

comment by Sammy Martin (SDM) · 2023-07-26T17:26:28.979Z · LW(p) · GW(p)

Excellent! In particular, it seems like oversight techniques which can pass tests like these could work in worlds where alignment is very difficult, so long as AI progress doesn't involve a discontinuity so huge that local validity tells you nothing useful (such that there are no analogies between low and high capability regimes).

I'd say this corresponds to 7 on my alignment difficulty table.

↑ comment by Buck · 2023-07-26T17:33:10.155Z · LW(p) · GW(p)

What do you mean by "so long as AI progress doesn't involve a discontinuity so huge that local validity tells you nothing useful (such that there are no analogies between low and high capability regimes)"? I'm not proposing that we rely on any analogies between low and high capability regimes.

↑ comment by Sammy Martin (SDM) · 2023-07-26T17:41:07.379Z · LW(p) · GW(p)

In the sense that there has to be an analogy between low and high capabilities somewhere, even if at the meta level.

This method lets you catch dangerous models that can break oversight processes for the same fundamental reasons as less dangerous models, not just for the same inputs.

↑ comment by Buck · 2023-07-26T17:44:39.757Z · LW(p) · GW(p)

Why does there have to be an analogy between low and high capabilities somewhere? The goal of this method is to evaluate the adequacy of an oversight procedure for a particular model on a particular distribution. It does not involve generalizing from lower capabilities to higher capabilities; you just run this eval on the actual model whose local adequacy of oversight you care about measuring.

(Sorry to come in disagreeably here, but I think of this as a really important point, so want to make sure we argue it out.)

↑ comment by Sammy Martin (SDM) · 2023-07-26T17:47:29.120Z · LW(p) · GW(p)

You're right, I've reread the section and that was a slight misunderstanding on my part.

Even so, I still think it falls at a 7 on my scale, as it's a way of experimentally validating oversight processes that gives you some evidence about how they'll work in unseen situations.

comment by [deleted] · 2023-07-27T11:31:12.187Z · LW(p) · GW(p)

Are you mostly hoping for people to come up with new alignment schemes that incorporate this (e.g. coming up with proposals like these that include a meta-level adversarial training step), or are you also hoping that people start actually doing meta-level adversarial evaluation of their existing alignment schemes (e.g. Anthropic tries to find a failure mode for whatever scheme they used to align Claude)?

comment by johnswentworth · 2023-07-26T20:31:05.427Z · LW(p) · GW(p)

Uncharitable summary: oversight, but add an epicycle.

Would you say this summary is inaccurate?

[EDIT: I was being kinda grumpy when I wrote this, and wish to emphasize in hindsight that I don't entirely reflectively endorse that summary myself. Sorry for being grumpy at you.]

↑ comment by ryan_greenblatt · 2023-07-26T20:50:46.692Z · LW(p) · GW(p)

This post doesn't discuss how to do oversight, just how to evaluate whether or not your oversight is working.

I'm not really sure what you mean by "oversight, but add an epicycle" or how to determine if this is a good summary.

Consider a type of process for testing whether or not planes are safe. You could call this "safe planes, but add an epicycle", but I'm not sure what this communicates.

↑ comment by ryan_greenblatt · 2023-07-26T21:00:25.268Z · LW(p) · GW(p)

There's a separate question of "is this post interesting?".

I don't think this post is particularly interesting or inventive: the basic arguments here aren't very far from what I came up with after thinking about how to evaluate oversight for something like 5 hours.

But I haven't seen this exact approach discussed in detail, and it does seem like these arguments are an important part of why I expect evaluating average oversight quality to be reasonably technically feasible. (Note that evaluating average oversight behavior isn't sufficient for evaluating whether or not a given training and deployment approach will result in good outcomes.)

If you think that empirically evaluating oversight is useful, my guess is that the basic arguments and approach discussed in this post are important (though possibly obvious).

↑ comment by johnswentworth · 2023-07-26T21:32:45.728Z · LW(p) · GW(p)

> I'm not really sure what you mean by "oversight, but add an epicycle" or how to determine if this is a good summary.

Something like: the OP is proposing oversight of the overseer, and it seems like the obvious next move would be to add an overseer of the oversight-overseer. And then an overseer of the oversight-oversight-overseer. Etc.

And the implicit criticism is something like: sure, this would probably marginally improve oversight, but it's a kind of marginal improvement which does not really move us closer in idea-space to whatever the next better paradigm will be which replaces oversight (and is therefore not really marginal progress in the sense which matters more). In the same way that adding epicycles to a model of circular orbits does make the model match real orbits marginally better (for a little while, in a way which does not generalize to longer timespans), but doesn't really move closer in idea space to the better model which eventually replaces circular orbits (and the epicycles are therefore not really marginal progress in the sense which matters more).

↑ comment by Buck · 2023-07-27T15:51:14.051Z · LW(p) · GW(p)

> the OP is proposing oversight of the overseer,

I don't think this is right, at least in the way I usually use the terms. We're proposing a strategy for conservatively estimating the quality of an "overseer" (i.e. a system which is responsible for estimating the goodness of model actions). I think that you aren't summarizing the basic point if you try to use the word "oversight" for both of those.

↑ comment by johnswentworth · 2023-07-27T16:30:23.586Z · LW(p) · GW(p)

That's useful, thanks.