Basics of Rationalist Discourse

post by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-01-27T02:40:52.739Z


Introduction

This post is meant to be a linkable resource. Its core is a short list of guidelines (you can link directly to the list) that are intended to be fairly straightforward and uncontroversial, for the purpose of nurturing and strengthening a culture of clear thinking, clear communication, and collaborative truth-seeking.

"Alas," said Dumbledore, "we all know that what should be, and what is, are two different things. Thank you for keeping this in mind."

There is also (for those who want to read more than the simple list) substantial expansion/clarification of each specific guideline, along with justification for the overall philosophy behind the set.

Prelude: On Shorthand

Once someone has a deep, rich understanding of a complex topic, they are often able to refer to that topic with short, simple sentences that correctly convey the intended meaning to other people with similar context and expertise.

However, those same short, simple sentences are often dangerously misleading, in the hands of a novice who lacks the proper background. Dangerous precisely because they seem straightforward and comprehensible, and thus the novice will confidently extrapolate outward from them in what feel like perfectly reasonable ways, unaware the whole time that the concept in their head bears little or no resemblance to the concept that lives in the expert's head.

Good shorthand in the hands of an experienced user need only be an accurate fit for the already-existing concept it refers to—it doesn't need the additional property of being an unmistakeable non-fit for other nearby attractors. It doesn't need to contain complexity or nuance—it just needs to remind the listener of the complexity already contained in their mental model. It's doing its job if it efficiently evokes the understanding that already exists, independent of itself.

This is important, because what follows this introduction is a list of short, simple sentences comprising the basics of rationalist discourse. Each of those sentences is a solid fit for the more-complicated concept it's gesturing at, provided you already understand that concept. The short sentences are mnemonics, reminders, hyperlinks.

They are not sufficient, on their own, to reliably cause a beginner to construct the proper concepts from the ground up, and they do not, by themselves, rule out all likely misunderstandings.

All things considered, it seems good to have a clear, concise list near the top of a post like this. People should not have to scroll and scroll and sift through thousands of words when trying to refer back to these guidelines.

But each of the short, simple sentences below admits of multiple interpretations, some of which are intended and others of which are not. They are compressions of complex points, and compressions are inevitably lossy. If a given guideline is new to you, check the in-depth explanation before reposing confidence in your understanding. And if a given guideline stated-in-brief seems to you to be flawed or misguided in some obvious way, check the expansion before spending a bunch of time marshaling objections that may well have already been answered.

Further musing on this concept: Sazen

Guidelines, in brief:

0. Expect good discourse to require energy.

1. Don't say straightforwardly false things.

2. Track (for yourself) and distinguish (for others) your inferences from your observations.

3. Estimate (for yourself) and make clear (for others) your rough level of confidence in your assertions.

4. Make your claims clear, explicit, and falsifiable, or explicitly acknowledge that you aren't doing so (or can't).

5. Aim for convergence on truth, and behave as if your interlocutors are also aiming for convergence on truth.

6. Don't jump to conclusions—maintain at least two hypotheses consistent with the available information.

7. Be careful with extrapolation, interpretation, and summary/restatement—distinguish between what was actually said, and what it sounds like/what it implies/what you think it looks like in practice/what it's tantamount to. If you believe that a statement A strongly implies B, and you are disagreeing with A because you disagree with B, explicitly note that "A strongly implies B" is a part of your model.

8. Allow people to restate, clarify, retract, and redraft their points, if they say that their first attempt failed to convey their intended meaning; do not hold people to the first or worst version of their claim.

9. Don't weaponize equivocation/don't abuse categories/don't engage in motte-and-bailey shenanigans.

10. Hold yourself to the absolute highest standard when directly modeling or assessing others' internal states, values, and thought processes.

What does it mean for something to be a "guideline"?

- It is a thing that rationalists should try to do, to a substantially greater degree than random humans engaging in run-of-the-mill social interactions. It's a place where it is usually correct and useful to put forth marginal intentional effort.

- It is a domain in which rationalists should be open to requests. If a given comment lacks or is low on a particular guidelined virtue, and someone else pops in to politely ask for a restatement or a clarification which more clearly expresses that virtue, the first speaker should by default receive that request as a friendly and cooperative act, and respond accordingly (as opposed to receiving it as e.g. onerous, or presumptuous, or as a social attack).

- It is an approximation of good rationalist discourse. If a median member of the general population were to practice abiding by it for a month, their thinking would become clearer and their communication would improve. But that doesn't mean that perfect adherence is sufficient to make discourse good, and it doesn't mean that breaking it is necessarily bad.

Think of the above, then, as a set of priors. If a guideline says "Do [X]," that is intended to convey that:

- There will be better outcomes more frequently from people doing [X] than from people doing [a neutral absence of X], and similarly from [a neutral absence of X] than from [anti-X]. In particular, the difference in outcomes is large enough and reliable enough to be generally worth the effort even if [X] is not especially natural for you, or if [not X] or [anti-X] would be convenient.

- Given a hundred instances of someone actively engaged in [anti-X], most of them will be motivated by something other than a desire to speak the truth, uncover the truth, or help others to understand the truth.

Thus, given the goals of clear thinking, clear communication, and collaborative truth-seeking, the burden of proof is on a given guideline violation to justify itself. There will be many cases in which violating a guideline will in fact be exactly the right call, just as the next marble drawn blindly from a bag of mostly red marbles may nevertheless be green. But if you're doing something that's actively contra to one of the above, it should be for a specific, known reason, that you should be willing to discuss if asked (assuming you didn't already explain up front).

Which leads us to the Zeroth Guideline: expect good discourse to (sometimes) require energy.

If it did not—if good discourse were a natural consequence of people following ordinary incentives and doing what they do by default—then it wouldn't be recognizable as the separate category of good discourse.

A culture of (unusually) clear thinking, (unusually) clear communication, and (unusually) collaborative truth-seeking is not the natural, default state of affairs. It's endothermic, requiring a regular influx of attention and effort to keep it from degrading back into a state more typical of the rest of the internet.

This doesn't mean that commentary must always be high effort. Nor does it mean that any individual user is on the hook for doing a hard thing at any given moment.

But it does mean that, in the moments where meeting the standards outlined above would take too much energy (as opposed to being locally unnecessary for some other, more fundamental reason), one should lean toward saying nothing, rather than actively eroding them.

Put another way: a frequent refrain is "well, if I have to put forth that much effort, I'll never say anything at all," to which the response is often "correct, thank you."

It's analogous to a customer complaining "if Costco is going to require masks, then I'm boycotting Costco." All else being equal, it would be nice for customers to not have to wear masks, and all else being equal, it would be nice to lower the barrier to communication such that more thoughts could be more easily included.

But all else is not equal; there are large swaths of common human behavior that are corrosive or destructive to the collaborative search for truth. No single contributor or contribution is worth sacrificing the overall structures which allow for high-quality conversation in the first place—if one genuinely does not have the energy required to e.g. put forth one's thoughts while avoiding straightforwardly false statements, or while distinguishing inference from observation (etc.), then one should simply disengage.

Note that there is always room for discussion on the meta level; it is not the case that there is universal consensus on every norm, nor on how each norm looks in practice (though the above list is trying pretty hard to limit itself to norms that are on firm footing).

Note also that there is a crucial distinction between [fake/performative verbal gymnastics], and [sincere prioritization of truth and accuracy]—more on this in Sapir-Whorf for Rationalists.

For most practical purposes, this is the end of the post. All remaining sections are reference material, meant to be dug into only when there's a specific reason to; if you read further, please know that you are doing the equivalent of reading dictionary entries or encyclopedia entries and that the remaining words are not optimized for being Generically Entertaining To Consume.

Where did these come from?

I tinkered with drafts of this essay for over a year, trying to tease out something like an a priori list of good discourse norms, wrestling with various imagined subsets of the LessWrong audience, and trying to predict what objections might arise. The whole thing was fairly sprawling, and I ultimately scrapped it in favor of just making a list of a dozen in-my-estimation unusually good rationalist communicators, and then writing down the things that made those people's discourse stand out to me in the first place, i.e. the things it seems to me that they do a) 10-1000x more frequently than genpop, and b) 2-10x more frequently than the median LessWrong user.

That list comprised:

- Julia Galef

- Anna Salamon

- Rob Bensinger

- Scott Garrabrant

- Vaniver

- Eliezer Yudkowsky

- Logan Brienne Strohl

- Oliver Habryka

- Kelsey Piper

- Nate Soares

- Eric Rogstad

- Spencer Greenberg

- Dan Keys (making it a baker's dozen)

I claim that if you contrast the words produced by the above individuals with the words produced by the rest of the English-speaking population, what you find is approximately the above ten guidelines.

In other words, the guidelines are descriptive of good discourse that already exists; here I am attempting to convert them into prescriptions, with some wiggle room and some caveats. But they weren't made up from whole cloth; they are in fact an observable part of What Actually Works In Practice.

Some of the above individuals have specific deficits in one or two places, perhaps, and there are some additional things that these individuals are doing which are not basic, and not found above. But overall, the above is a solid 80/20 on How To Talk Like Those People Do, and sliding in that direction is going to be good for most of us.

Why does this matter?

In short: because the little things add up. For more on this, take a look at Draining the Swamp as an excellent metaphor for how ambient hygiene influences overall health, or revisit Concentration of Force, in which I lay out my argument for why we should care about small deltas on second-to-second scales, or Moderating LessWrong, which is sort of a spiritual precursor to this post.

Expansions

1. Don't say straightforwardly false things.

... and be ready and willing to explicitly walk back unintentional falsehoods, if asked or if it seems like it would help your conversational partner.

"In reality, everyone's morality is based on status games." → "As far as I can tell, the overwhelming majority of people have a morality that grounds out in social status."

In normal social contexts, where few people are attending to or attempting to express precise truth, it's relatively costless to do things like:

- Use hyperbole for emphasis

- Say a false thing, because approximately everyone will be able to intuit the nearby true thing that you're intending to convey

- Over-generalize; ignore edge cases and rounding errors (e.g. "Everybody has eyes.")

Most of the times that people end up saying straightforwardly false things, they are not intending to lie or deceive, but rather following one of these incentives (or similar).

However, if you are actively intending to create, support, and participate in a culture of clear thinking, clear communication, and collaborative truth-seeking, it becomes more important than usual to break out of those default patterns, as well as to pump against other sources of unintentional falsehood like the typical mind fallacy.

This becomes even more important when you consider that places like LessWrong are cultural crossroads—users come from a wide variety of cultures and cannot rely on other users sharing the same background assumptions or norms-of-speech. It's necessary in such a multicultural environment to be slower, more careful, and more explicit, if one wants to avoid translation errors and illusions of transparency and various other traps and pitfalls.

Some ways you might feel when you're about to break the First Guideline:

- The thing you want to say is patently obvious or extremely simple

- There's no reason to beat around the bush

- It's really quite important that this point be heard above all the background noise

Some ways a First Guideline request might look:

- "Really?"

- "Hang on—did you mean that literally?"

- "I'm not sure whether or not you're exaggerating in the above claims, and want to double-check that you mean them straightforwardly."

2. Track and distinguish your inferences from your observations.

... or be ready and willing to do so, if asked or if it seems like it would help your conversational partner (or the audience). That is, build the habit of tracking the distinction between what something looks like, and what it definitely is.

"Keto works" → "I did keto and it worked." → "I ate [amounts] of [foods] for [duration], and tracked whether or not I was in ketosis using [method]. During that time, I lost eight pounds while not changing anything about my exercise or sleep or whatever."

"That's propaganda." → "That's tripping my propaganda detectors." → "That sentence contains [trait] and [trait] and [trait] which, as far as I can tell, are false/vacuous/just there to cause the reader to feel a particular way."

"User buttface123 is a dirty liar." → "I've caught user buttface123 lying three times now." → "I've seen user buttface123 say false things in support of their point [three] [times] [now], and that last time was after they'd responded to a comment thread containing accurate info, so it wasn't just simple ignorance. They're doing it on purpose."

The first and most fundamental question of rationality is "what do you think you know, and why do you think you know it?"

Many people struggle with this question. Many people are unaware of the processing that goes on in their brains, under the hood and in the blink of an eye. They see a fish, and gloss over the part where they saw various patches of shifting light and pattern-matched those patches to their preexisting concept of "fish." Less trivially, they think that they straightforwardly observe things like:

- Complex interventions in the world "working" or "not working"

- The people around them "being nice" or "being assholes"

- Particular pieces of food or art or music or architecture "just being good"

... and they miss the fact that they were running a bunch of direct sensory data through a series of filters and interpreters that brought all sorts of other knowledge and assumptions and past experience and causal models into play. The process is so easy and so habitual that they do not notice it is occurring at all.

(Where "they" is also meant to include "me" and "you," at least some of the time.)

Practice the skill of slowing down, and zooming in. Practice asking yourself "why?" after the fashion of a curious toddler. Practice answering the question "okay, but if there were another step hiding in between these two, what would it be?" Practice noticing even extremely basic assumptions that seem like they never need to be stated, such as "Oh! Right. I see the disconnect—the reason I think X is worse than Y is because as far as I can tell X causes more suffering than Y, and I think that suffering is bad."

This is particularly useful because different humans reason differently, and that reasoning tends to be fairly opaque, and attempting to work backward from [someone else's outputs] to [the sort of inputs you would have needed, to output something similar] is a recipe for large misunderstandings.

Wherever possible, try to make explicit the causes of your beliefs, and to seek the causes underlying the beliefs of others, especially when you strongly disagree. Work on improving your ability to tease out what you observed separate from what you interpreted it to mean, so that the conversation can track (e.g.) "I saw A," "I think A implies B," and "I don't like B" as three separate objects. If you're unable to do so, for instance because you do not yet know the source of your intuition, try to note out loud that that's what's happening.

Some ways you might feel when you're about to break the Second Guideline:

- Everybody knows that X implies Y; it's obvious/trivial.

- The implications of what was just said are alarming, and need to be responded to.

- There's just no other explanation that fits the available data.

Some ways a Second Guideline request might look:

- "Wait—can you tell me why you believe that?"

- "That doesn't sound observable to me. Would you mind saying what you actually saw?"

- "Are you saying that it seems like X, or that it definitely is X?"

3. Estimate and make clear your rough level of confidence in your assertions.

... or be ready and willing to do so, if asked or if it seems like it would help another user.

Humans are notoriously overconfident in their beliefs, and furthermore, most human societies reward people for visibly signaling confidence.

Humans, in general, are meaningfully influenced by confidence/emphasis alone, separate from truth—probably not literally all humans all of the time, but at least in expectation and in the aggregate, either for a given individual across repeated exposures or for groups of individuals (more on this in Overconfidence is Deceit).

Humans are social creatures who tend to be susceptible to things like halo effects, when not actively taking steps to defend against them, and who frequently delegate and defer and adopt others' beliefs as their own tentative positions, pending investigation, especially if those others seem competent and confident and intelligent. If you expose 1000 randomly-selected humans to a debate between a quiet, reserved person outlining an objectively correct position and a confident, emphatic person insisting on an unfounded position, many in that audience will be net persuaded by the latter, and others will feel substantially more uncertainty and internal conflict than the plain facts of the matter would have left them feeling by default.

Thus, there is frequently an incentive to misrepresent your confidence, for instrumental advantage, at the cost of our collective ability to think clearly, communicate clearly, and engage in collaborative truth-seeking.

Additionally, there is a tendency among humans to use vague and ambiguous language that is equally compatible with multiple interpretations, such as the time that a group converged on agreement that there was "a very real chance" of a certain outcome, only to discover later, in one-on-one interviews, that at least one person meant that outcome was 20% likely, and at least one other meant it was 80% likely (which are exactly opposite claims, in that 20% likely means 80% unlikely).

Thus, it behooves people who want to engage in and encourage better discourse to be specific and explicit about their confidence (i.e. to use numbers, and to calibrate your use of numbers over time, or to flag tentative beliefs as tentative, or to be clear about the source of your belief and your credence in that source).
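One way to make "calibrate your use of numbers over time" concrete is to keep a log of claims with stated probabilities and score them once the answers are in. The sketch below uses the Brier score, which is one common scoring rule and not something this post prescribes; the function name and the sample log are hypothetical, made up for illustration.

```python
def brier_score(forecasts):
    """Mean squared gap between stated probability and what happened.

    `forecasts` is a list of (probability, outcome) pairs, where outcome is
    1 if the claim turned out true and 0 if it turned out false.
    0.0 is perfect; always answering 0.5 scores 0.25.
    """
    return sum((p - outcome) ** 2 for p, outcome in forecasts) / len(forecasts)


# Hypothetical log of past claims: (stated confidence, did it happen?)
my_claims = [(0.9, 1), (0.8, 1), (0.3, 0), (0.6, 0)]
print(round(brier_score(my_claims), 3))  # 0.125
```

A score that stays well below 0.25 over many claims suggests the numbers are carrying real information; a score near or above it suggests the stated confidence is mostly noise.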

"That'll never happen." → "That seems really unlikely to me." → "I think the outcome you just described is ... I'm sort of making up numbers here but it feels like it's less than ten percent likely?"

"I don't care what Mark said; I know they sell them at that store." → "Look, I'd bet you five to one that if we go there, we'll find them on the shelf."

"The number one predictor of mass violence is domestic violence." → "I'm pretty sure I recall seeing an article stating that the number one predictor of mass violence is domestic violence, and I'm pretty sure it was in a news source I thought was reputable." → "Here's the study, and here's the methodology, and here's the data."

Some ways you might feel when you're about to break the Third Guideline:

- What was just said was wrong; thankfully you're here to set the record straight.

- Everybody knows that nobody literally means "100% certain," so it's not really deceptive or misleading.

- There's no need to be super explicit; the person you're talking to is on the same wavelength and almost certainly "gets it."

Some ways a Third Guideline request might look:

- "I'm curious if you would be willing to bet some small amount of dollars on this, and if so, at what odds?"

- "Hey, that's a pretty strong statement—do you actually mean that there are no exceptions?"

- "If I told you I had proof you were wrong, how surprised would you be?"
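The bet-based phrasings in this section ("I'd bet you five to one," "at what odds?") translate directly into a confidence level. A minimal sketch of the arithmetic, assuming "five to one" means the speaker risks five units to win one; the function name is hypothetical.

```python
from fractions import Fraction


def break_even_probability(stake, payout):
    """Probability of being right at which risking `stake` to win `payout`
    has zero expected value: p * payout - (1 - p) * stake = 0."""
    return Fraction(stake, stake + payout)


p = break_even_probability(5, 1)
print(p, round(float(p), 3))  # 5/6 0.833
```

So someone sincerely offering five to one is claiming to be at least roughly 83% confident, which is a much more checkable statement than "I know they sell them at that store."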

4. Make your claims clear, explicit, and falsifiable, or explicitly acknowledge that you aren't doing so (or can't).

... or at least be ready and willing to do so, if asked or if it seems like it would help make things more comprehensible.

It is, in fact, actually fine to be unsure, or to have a vague intuition, or to make an assertion without being able to provide cruxes or demonstrate how it could be proven/disproven. None of these things are disallowed in rational discourse.

But noting aloud that you are self-aware about the incomplete nature of your argument is a highly valuable social maneuver. It signals to your conversational partner "I am aware that there are flaws in what I am saying; I will not take it personally if you point at them and talk about them; I am taking my own position as object rather than being subject to it and tunnel-visioned on it."

(This is a move that makes no sense in an antagonistic, zero-sum context, since you're just opening yourself up to attack. But in a culture of clear thinking, clear communication, and collaborative truth-seeking, contributing your incomplete fragment of information, along with signaling that yes, the fragment is, indeed, a fragment, can be super productive.)

Much as we might wish that everyone could take for granted that disagreement is prosocial and productive and not an attack, it is not actually the case. Some people do indeed launch attacks under the guise of disagreement; some people do indeed respond to disagreement as if it were an attack even if it is meant entirely cooperatively; some people, fearing such a reaction, will be hesitant to note their disagreement in the first place, especially if their conversational partner doesn't seem open to it.

"Look, just trust me, that whole group is bad news." → "I had a bad experience with that group, and I know three other people who've each independently told me that they had bad experiences, too."

"[Nation X] is worse than [Nation Y]." → "I'm willing to bet that if we each independently made lists of what measurable stats makes a nation good, and then checked, [Nation X] would be worse on at least 60% of them."

"This is an outstanding investment." → "Look, I can't actually quite put my finger on what it is about this investment that stands out to me; I'm sort of running off an opaque intuition here. But I can at least say that I feel really confident about it—confident enough that I put in half my paycheck from last month. For calibration, the last time I felt this confident, I did indeed see a return of 300% in six months."

The more clear it is what, exactly, you're trying to say, the easier it is for other people to evaluate those claims, or to bring other information that's relevant to the issue at hand.

The more your assertions manage to be checkable, the easier it is for others to trust that you're not simply throwing spaghetti at the wall to see what sticks.

And the more you're willing to flag your own commentary when it fails on either of the above, the easier it is to contribute to and strengthen norms of good discourse even with what would otherwise be a counterexample. Pointing out "this isn't great, but it's the best that I've got" lets you contribute what you do have, without undermining the common standard of adequacy.

Some ways you might feel when you're about to break the Fourth Guideline:

- It would be scary, or otherwise somehow bad, if you were to turn out to be mistaken about X.

- There's too much going on; you have a pile of little intuitions that all add up in a way that is too tricky to try tracking or explaining.

- If you don't make it sound like you know what you're talking about, people might wrongly dismiss your true and valuable information/you don't want to get unfairly docked just because you can't shape your jargon to match the local lingo.

Some ways a Fourth Guideline request might look:

- "If for some reason this turned out to be false, how would we know? What sorts of things would we see in the world where something else is going on?"

- "I'm not sure I quite understand what you're predicting, here. Can you list, like, three things you're claiming I will unambiguously see over the next month?"

- "Hey, it sounds like you don't actually have legible cruxes. Is that correct?"

5. Aim for convergence on truth, and behave as if your interlocutors are also aiming for convergence on truth.

... and be ready to falsify your impression otherwise, if evidence starts to pile up.

The goal of rationalist discourse is to be less wrong—for each of us as individuals and all of us as a group to have more correct beliefs, and fewer incorrect beliefs.

If two people disagree, it's tempting for them to attempt to converge with each other, but in fact the right move is for both of them to try to see more of what's true.

If you are moving closer to truth—if you are seeking available information and updating on it to the best of your ability—then you will inevitably eventually move closer and closer to agreement with all the other agents who are also seeking truth.

However, when conversations get heated—when the stakes are high—when the other person not only appears to be wrong but also to be acting in poor faith—that's when it's the most valuable to keep in touch with the possibility that you might be misunderstanding each other, or that the problem might be in your models, or that there might be some simple cultural or norms mismatch, or that your conversational partner might simply be locally failing to live up to standards that they do, in fact, generally hold dear, etc.

It's very easy to observe another person's output, evaluate it according to your own norms and standards, and conclude that you understand their motives and that those motives are bad.

It is not, in fact, the case that everyone you engage with is primarily motivated by truth-seeking! Even in enclaves like LessWrong, there are lots of people who are prioritizing other goals over that one a substantial chunk of the time.

But simple misunderstandings, and small, forgivable, recoverable slips in mood or mental discipline outnumber genuine bad faith by a large amount. If you are running a tit-for-tat algorithm in which you quickly respond to poor behavior by mirroring it back, you will frequently escalate a bad situation (and often appear, to the other person, like the first one who broke cooperation).

Another way to think of this is: it pays to give people two extra chances to demonstrate that they are present in good faith and genuinely trying to cooperate, because if they aren't, they'll usually prove it soon enough anyway. You don't have to turn the other cheek repeatedly, but doing so once or twice more than you would by default goes a long way toward protecting against false positives on your bad-faith detector.
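The escalation dynamic described above can be seen in a toy model. This is a sketch under loud assumptions: two players who both mirror each other, exactly one accidental slip, and a hypothetical `patience` parameter standing in for "extra chances." It is not a model of any real conversation, only of the mirroring logic.

```python
def respond(other_history, patience):
    """Cooperate (True) unless the other player's last `patience` moves
    were all defections. patience=1 is strict tit-for-tat."""
    recent = other_history[-patience:]
    return not (len(recent) == patience and not any(recent))


def play(rounds, patience, slip_round):
    """Both players use `respond`; player A defects once by accident at
    `slip_round`. Returns the total number of defections by both players."""
    hist_a, hist_b = [], []  # True = cooperate, False = defect
    for r in range(rounds):
        move_a = respond(hist_b, patience)
        move_b = respond(hist_a, patience)
        if r == slip_round:
            move_a = False  # a small, unintended lapse
        hist_a.append(move_a)
        hist_b.append(move_b)
    return hist_a.count(False) + hist_b.count(False)


print(play(rounds=10, patience=1, slip_round=2))  # 8
print(play(rounds=10, patience=2, slip_round=2))  # 1
```

With strict mirroring, one slip echoes back and forth for the rest of the exchange; with a single extra chance, the slip is absorbed and cooperation continues.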

"You're clearly here in bad faith." → "In the past three comments, you said [thing], [thing], and [thing], all of which are false, and all of which it seems to me you must know are false; you're clearly here in bad faith." → "Listen, as I look back over what's already been said, I'm seeing a lot of stuff that really sets off my bad-faith detectors (such as [thing]). Can we try slowing down, or maybe starting over? Like, I'd have an easier time dropping back down from red alert if you engaged with my previous comment that you totally ignored, or if you were at least willing to give me some of your reasons for believing [thing]."

This behavior can be modeled, as well—the quickest way to get a half-derailed conversation back on track is to start sharing pairs of [what you believe] and [why you believe it]. To demonstrate to your conversational partner that those two things go together, and show them the kind of conversation you want to have.

(This is especially useful on the meta level—if you are frustrated, it's much better to say "I'm seeing X, and interpreting it as meaning Y, and feeling frustrated about that!" than to just say "you're being infuriating.")

You could think of the conversational environment as one in which defection strategies are rampant, and many would-be cooperators have been trained and traumatized into hair-trigger defection by repeated sad experience.

Taking that fact into account, it's worth asking "okay, how could I behave in such a way as to invite would-be cooperators who are hair-trigger defecting back into a cooperative mode? How could I demonstrate to them, via my own behavior, that it's actually correct to treat me as a collaborative truth-seeker, and not as someone who will stab them as soon as I have the slightest pretext for writing them off?"

Some ways you might feel when you're about to break the Fifth Guideline:

- It's more important to settle this one than to get all of the little fiddly details right.

- There's no way they could have been unaware of the implications of what they said.

- I'm going to write X, and if they respond with Y then I'll know they're here in bad faith. (The giveaway here being the desire to see them fail the test, versus a more dispassionate poking at various possibilities.)

Some ways a Fifth Guideline request might look:

- "Hey, sorry for the weirdly blunt request, but: I get the sense that you're not treating me as a cooperative partner in this conversation. Is, uh. Is that true?"

- "I'm finding it pretty hard to stay in this back-and-forth. Can you maybe pause and look back through what I've written and note anything you agree with? I'll do the same, e.g. you said X and I do think that's a piece of this puzzle."

- "What's your goal in this conversation?"

6. Don't jump to conclusions—maintain at least two hypotheses consistent with the available information.

... or be ready and willing to generate a real alternative to your main hypothesis, if asked or if it seems like it would help another user.

"You're strawmanning me." → "It really seems like you're strawmanning me." → "I can't tell whether you're strawmanning me or whether there's some kind of communication breakdown." → "I can't tell whether you're strawmanning me or whether there's some kind of communication breakdown; my best guess is that you think that [the phrase I wrote] means [some other thing]."

There exists a full essay on this concept titled Split and Commit. The short version is that there is a large difference between a person who has a single theory (which they are nominally willing to concede might be false), and a person who has two fully distinct possible explanations for their observations, and is looking for evidence to distinguish between them.

Another way to point at this distinction is to remember that bets are different from beliefs.

Most of the time, you are forced to make some sort of implicit bet. For instance, you have to choose how to respond to your conversational partner, and responding-to-them-as-if-they-were-sincere is a different "bet" than responding-to-them-as-if-they-are-insincere.

And because people are often converting their beliefs into bets, and because bets are often effectively binary, they often lose track of the more complicated thing that preceded the rounding-off.

If a bag of 100 marbles contains 70 red ones and 30 green ones, the best bet for the first string of ten marbles out of the bag is RRRRRRRRRR. Any attempt to sprinkle some Gs into your prediction is more likely to be wrong than right, since any single position is 70% likely to contain an R.

(There's less than a 3% chance of the string being RRRRRRRRRR, but the odds of any other specific string are even worse.)

But it would be silly to say that you believe that the next ten marbles out of the bag will all be red. If forced, you will predict RRRRRRRRRR, because that's the least wrong prediction, but actually (hopefully) your belief is "for each marble, it's more likely to be red than green but it could pretty easily be green."
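The marble arithmetic above can be checked with a short sketch. It assumes the ten marbles are drawn in order without replacement from the 70-red/30-green bag; the function name `sequence_probability` is hypothetical and introduced only for illustration. The independent-draws figure (0.7 to the tenth power) is included for comparison, since the text does not say which model is intended.

```python
from fractions import Fraction


def sequence_probability(sequence: str, red: int = 70, green: int = 30) -> Fraction:
    """Exact probability of drawing `sequence` (e.g. "RRG") in that order,
    without replacement, from a bag holding `red` R and `green` G marbles."""
    remaining = {"R": red, "G": green}
    probability = Fraction(1)
    for color in sequence:
        total = remaining["R"] + remaining["G"]
        probability *= Fraction(remaining[color], total)
        remaining[color] -= 1  # the drawn marble leaves the bag
    return probability


# The "best bet": ten reds in a row.
all_red = sequence_probability("R" * 10)

# One specific alternative that sprinkles in a single G.
one_green = sequence_probability("R" * 9 + "G")

# Independent-draws approximation (as if each marble were put back).
all_red_with_replacement = 0.7 ** 10

print(f"all red, without replacement: {float(all_red):.4f}")
print(f"all red, with replacement:    {all_red_with_replacement:.4f}")
print(f"nine red then one green:      {float(one_green):.4f}")
```

Under either model the all-red string comes out below 3%, and it still beats the specific string with one green marble in it. That is the gap between the bet (RRRRRRRRRR) and the belief (each marble is probably red, but could easily be green).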

In similar fashion, when you witness someone's behavior, and your best bet is "this person is biased or has an unstated agenda," your belief should ideally be something like "this behavior is most easily explained by an unstated agenda, but if I magically knew for a fact that that wasn't what was happening, the next most likely explanation would be ______________."

That extra step—of pausing to consider what else might explain your observations, besides your primary theory—is one that is extremely useful, and worth practicing until it becomes routine. People who do not have this reflex tend to fall into many more pits/blindspots, and to have a much harder time bridging inferential gaps, especially with those they do not already agree with.

Some ways you might feel when you're about to break the Sixth Guideline:

- You've seen this before; you know exactly what this is.

- X piece of evidence will be sufficient to prove or disprove your hypothesis.

- It's really important that you respond to what's happening; the stakes are high and inaction would be problematic.

Some ways a Sixth Guideline request might look:

- "Do you think that's the only explanation for these observations?"

- "It sounds like you're trying to evaluate whether X is true or false. What's your next best theory if it turns out to be false?"

- "You're saying that A implies B. How often would you say that's true? Like, is A literally tantamount to B, or does A just lead to B 51% of the time, or ... ?"

7. Be careful with extrapolation, interpretation, and summary/restatement.

Distinguish between what was actually said and what it sounds like/what it implies/what you think it looks like in practice/what it's tantamount to, especially if another user asks you to pay more attention to this distinction than you were doing by default. If you believe that a statement A strongly implies B, and you are disagreeing with A because you disagree with B, explicitly note that "A strongly implies B" is a part of your model. Be willing to do these things on request if another person asks you to, or if you notice that it will help move the conversation in a healthier direction.

Another way to put this guideline is "don't strawman," but it's important to note that, from the inside, strawmanning doesn't typically feel like strawmanning.

"Strawmanning" is a term for situations in which:

- Person A has a point or position that they are trying to express or argue for

- Person B misrepresents that position as being some weaker or more extreme position

- Person B then attacks, disparages, or disproves the worse version (which is presumably easier than addressing Person A's true argument)

Person B constructs a strawman, in other words, just so they can then knock it down.

...

There's a problem with the definition above; readers are invited to pause and see if they can catch it.

...

If you'd like a hint: it's in the last line (the one beginning with "Person B constructs a strawman").

...

The problem is in the last clause.

"Just so they can knock it down" presupposes purpose. Not only is Person B engaging in misrepresentation, they're doing it in order to have some particular effect on the larger conflict, presumably in the eyes of an audience (since knocking over a strawman won't do much to influence Person A).

It's a conjunction of act and intent, implying that the vast majority of people engaging in strawmanning are doing so consciously, strategically, and in a knowingly disingenuous fashion—or, if they're not fully self-aware about it, they're nevertheless subconsciously optimizing for making Person A's position appear sillier or flimsier than it actually is.

This does not match how the term is used, out in the wild; it would be difficult to believe that even twenty percent of my own encounters with others using the term (let alone a majority, let alone all of them) are downstream of someone being purposefully deceptive. Instead, the strawmanning usually seems to be "genuine," in that the other person really thinks that the position being argued actually is that dumb/bad/extreme.

It's an artifact of blind spots and color blindness; of people being unable-in-practice to distinguish B from A, and therefore thinking that A is B, and not realizing that "A implies B" is a step that they've taken inside their heads. Different people find different implications to be more or less "obvious," given their own cultural background and unique experiences, and it's easy to typical-mind that the other relevant people in the conversation have approximately the same causal models/context/knowledge/anticipations.

If it's just patently obvious to you that A strongly implies B, and someone else says A, it's very easy to assume that everyone else made the leap to B right along with you, and that the author intended that leap as well (or intended to hide it behind the technicality of not having actually come out and said it). It may feel extraneous or trivial, in the moment, to make that inference explicit—you can just push back on B, right?

Indeed, if the leap from A to B feels obvious enough, you may literally not even notice that you're making it. From the inside, a blindspot doesn't feel like a blindspot—you may have cup-stacked [LW · GW] your way straight from A to B so quickly and effortlessly that your internal experience was that of hearing them say B, meaning that you will feel bewildered yourself when what seems to you to be a perfectly on-topic reply is responded to as though it were an adversarial non-sequitur.

(Which makes you feel as if they broke cooperation first; see the fifth guideline.)

People do, in fact, intend to imply things with their statements. People's sentences are not contextless objects of unambiguous meanings. It's entirely fine to hazard a guess as to someone's intended implications, or to talk about what most people would interpret a given sentence to mean, or to state that [what they wrote] landed with you as meaning [something else]. The point is not to pretend that all communication is clear and explicit; it's to stay in contact with the inherent uncertainty in our reconstructions and extrapolations.

"What this looks like, in practice" or "what most people mean by statements of this form" are conversations that are often skipped over, in which unanimous consensus is (erroneously) taken for granted, to everyone's detriment. A culture that seeks to promote clear thinking, clear communication, and collaborative truth-seeking benefits from a high percentage of people who are willing to slow down and make each step explicit, thereby figuring out where exactly shared understanding broke down.

Some ways you might feel when you're about to break the Seventh Guideline:

- Outraged, offended, insulted, or attacked.

- Irritated at the other person's sneakiness or disingenuousness.

- Like you need to defend against a motte-and-bailey.

Some ways a Seventh Guideline request might look:

- "That's not what I wrote, though. Can you please engage with what I wrote?"

- "Er, you seem to be putting a lot of words in my mouth."

- "I feel like I'm being asked to defend a position I haven't taken. Can you point at what I said that made you think I think X?"

8. Allow people to restate, clarify, retract, and redraft their points.

Communication is difficult. Good communication is often quite difficult.

One of the simplest interventions for improving discourse is to allow people to try again.

Sometimes our first drafts are clumsy in their own right—we spoke too soon, or didn't think things through deeply enough.

Other times, we said words which would have caused a clone of ourselves to understand, but we failed to account for some crucial cultural difference or inferential gap with our non-clone audience, and our words caused them to construct a meaning that was very different than the meaning we intended.

Also, sometimes we're just wrong!

It's quite common, on the broader internet and in difficult in-person conversations, for people's early rough-draft attempts to convey a thought to haunt them. People will relentlessly harp on some early, clumsy phrasing, or act as if the speaker intended the unpleasant ramifications of some point (ramifications which the speaker failed to consider).

What this results in is a chilling effect on speech (since you feel like you have to get everything right on your first try or face social punishment) and a disincentive for making updates and corrections (since those corrections will often simply be ignored and you'll be punished anyway as if you never made them, so why bother).

Part of the solution is to establish a culture of being forgiving of imperfect first drafts (and generous/light-touch in your interpretation of them), and of being open to walkbacks or restatements or clarifications.

It's perfectly acceptable to say something like "This sounds crazy/abhorrent/wrong to me," or to note that what they wrote seems to you to imply some statement B that is bad in some way.

It's also perfectly reasonable to ask that people demonstrate that they see what was wrong with their first draft, rather than just being able to say "no, I meant something subtly different" ad infinitum.

But if your conversational partner replies with "oh, gosh, sorry, no, that is not what I'm trying to say," it's generally best to take that assertion at face value, and let them start over. As with the fifth guideline, this means that you will indeed sometimes be giving extra leeway to people who are actually being irrational/unreasonable/bad/wrong, but most of the time, it means that you will be avoiding the failure mode of immediately leaping to a conclusion about what the other person meant and then refusing to relinquish that assumption.

The claim is that the cost of letting a few more people "get away with it" a little longer is lower than the cost of curtailing the whole population's ability to think out loud and update on the fly.

Some ways you might feel when you're about to break the Eighth Guideline:

- They really, really shouldn't have said that thing; they really should have known better.

- You can tell what they really meant, and now they're just backpedaling.

- Damage was done, and merely saying "I didn't mean it" doesn't undo the damage.

Some ways an Eighth Guideline request might look:

- "Oh, that word/phrase means something different to you than it does to me. Let me try again with different words, because the thing you heard is not the thing I was trying to say."

- "I hear that you have a pretty strong objection to what I said. I'm wondering if I could start over entirely, rather than saying a new thing and having you assume that what I meant is in between the two versions of what I've said."

- "Can you try passing my ITT, so that I can see where I've miscommunicated?"

9. Don't weaponize equivocation/abuse categories/engage in motte-and-bailey shenanigans.

...and be open to eschewing/tabooing broad summary words and talking more about the details of your model, if another user asks for it or if you suspect it would lower the overall confusion in a given interaction.

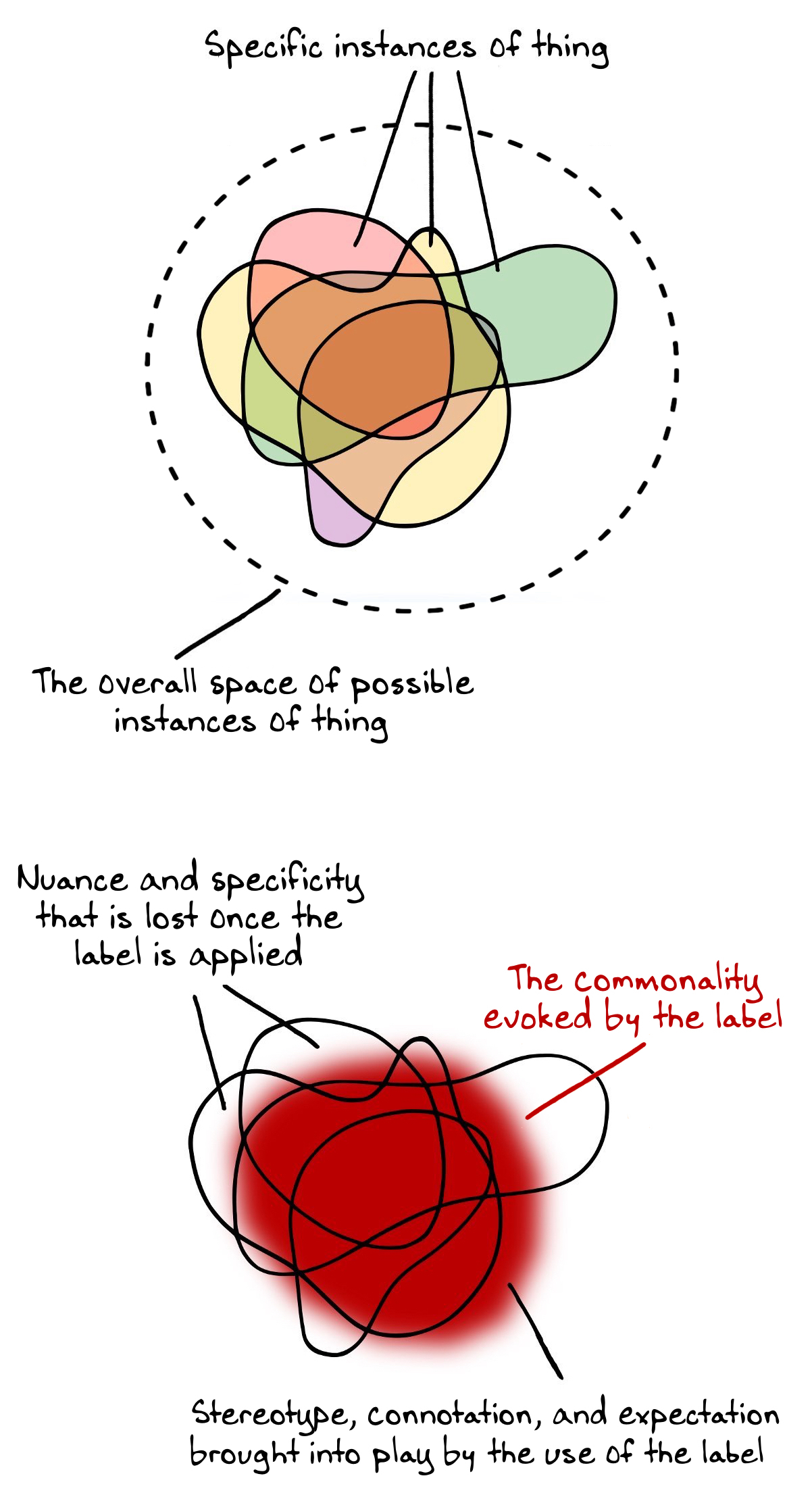

However, labels are a tool with some known failure modes. When someone uses a conceptual handle like "marriage," "genocide," "fallacy of the grey," or "racist," they are staking a claim about the relationship between a specific instance of [a thing in reality], and a cluster of [other things] that all share some similar traits.

That leads to some fairly predictable misunderstandings.

For instance, someone might notice that a situation has (e.g.) three out of seven salient markers of gaslighting (in their own personal understanding of gaslighting).

Three out of seven is a lot, when most things have zero out of seven! So it's reasonable for them to bring in the conceptual handle "gaslighting" as they begin to reason about and talk about the situation—to port in the intuitions and strategies that are generally useful for things in the category.

But it's very easy for people to fail to make clear that they're using the term "gaslighting" because the situation has specific markers X, Y, and Z, and that it doesn't seem to have markers T, U, V, or W at all, let alone to consider whether or not their own idiosyncratic seven markers sync up with the consensus understanding of gaslighting.

And thus the application of the term can easily cause other observers to implicitly conclude that all of T, U, V, W, X, Y, and Z are nonzero involved (and possibly also Q, R, and S that various other people bring to the table without realizing that they are non-universal).

Done intentionally, we call this weaponized equivocation or motte-and-bailey, i.e. "I can make the term gaslighting stick in a technically justified sense, and then abuse the connotation to make everybody think that you were doing all of the bad things involved in gaslighting on purpose and that you are a gaslighter, with all that entails."

But it also happens by accident, quite a lot. A conceptual handle makes sense to Person A, so they use it, and Person B both loses track of nuance and also injects additional preconceptions, based on their understanding of the conceptual handle.

The general prescription is to use categories and conceptual handles as a starting point, and then carefully check one's understanding.

"This is just the concept of lossy compression." → "This is making me think of lossy compression; is there anything here that's not already covered by that concept?" → "What I'm hearing is A, B, C, and D, which happen to be exactly the same markers I have for 'lossy compression'. Are you in fact saying A, B, C, and D? And are you in fact not saying anything else?"

Another way to think of this prescription is to recognize that the use of categories and conceptual handles is warping, in the sense that categories and conceptual handles are often like gravitational attractors pulling people's models toward a baseline archetype or stereotype. They tend to loom large, and obscure away detail, and generate a kind of top-down smoothing consensus or simplification.

That's super useful when the alternative is having them be lost out in deep space, but it's also not as good as using the category to get them in the right general vicinity and then deliberately not leaning on the category once they're close enough that you can talk about all of the relevant specifics in detail.

Some ways you might feel when you're about to break the Ninth Guideline:

- The way in which the thing under discussion is an instance of X is the most important factor, dwarfing all other considerations.

- The unique or non-typical aspects of the thing are obvious and go without saying.

- Everybody knows what X means.

Some ways a Ninth Guideline request might look:

- "Hang on, you used a category word that covers a lot of ground. Can you name, like, one or two other instances of X that are roughly on par? I currently don't know if you mean bad-like-sunburns or bad-like-cancer."

- "What's the value of agreeing on this being an X? Like, you're bidding for this label to be attached ... what comes out of that, if we all end up agreeing?"

- "If I were to say that this isn't an X, it's actually a Y, what would you say to that?"

10. Hold yourself to the absolute highest standard when directly modeling or assessing others' internal states, values, and thought processes.

"You're obviously crazy." → "This seems crazy to me." → "I'm having a hard time making this make sense, and I'm seriously considering the possibility that it just doesn't make sense, and you're confused/crazy." → "This really sounds to me like it's more likely to come from some disorganized or broken thought process than something that's grounded in reality. I apologize for that; I know the previous sentence is more than a little rude. I would have much less weight on that hypothesis if you could [pass some kind of concrete test I propose that would demonstrate that you're not incapable of reason in this domain]."

Of the ten guidelines, this is the one which is the least about epistemic hygiene, and the most about social dynamics.

(It's not zero about epistemic hygiene, but it deserves extra emphasis for pragmatic reasons rather than philosophical ones.)

In short:

If you believe that someone is being disingenuous or crazy or is in the grips of a blindspot—if you believe that you know, better than they know themselves, what's going on in their head (or perhaps that they are lying about what's going on in their head)—then it is important to be extra cautious and principled about how you go about discussing this fact.

This is important because it's very easy for people to (reasonably) feel attacked or threatened or delegitimized when others are making bold or judgment-laden assertions about the internal contents of their mind/soul/values, and it's very hard for conversation to continue to be productive when one of the central participants is partially or fully focused on defending themselves from perceived social attack.

It is actually the case that people are sometimes crazy. It is actually the case that people are sometimes lying. It is actually the case that people are sometimes mistaken about the contents of their own minds, and that other people, on the outside, can see this more clearly. A blanket ban on hypotheses-about-others'-internals would be crippling to anyone trying to see clearly and understand the world; these things should, indeed, be thinkable and discussible, the fact that they are "rude" notwithstanding.

But by making those hypotheses a part of an open conversation, you're adding a great deal of social and emotional strain to the already-difficult task of collaborative truth-seeking with a plausibly-compromised partner. In many milieus, the airing of such a hypothesis is an attack; there are not a lot of places where "you might be crazy" or "I know more than you about how your mind works" is a neutral or prosocial move. If the situation is such that it feels genuinely crucial for you to raise such a hypothesis out loud, then it should also be worth correspondingly greater effort and care.

(See the zeroth guideline.)

Some simple actions that tend to make this sort of thing go less reliably badly:

- Take the social hit onto your own shoulders. Openly and explicitly acknowledge that you are, in fact, making assertions about the interior of another person's mind; openly and explicitly acknowledge that this is, in fact, nonzero rude and carries with it nonzero social threat. Doing this gives the other person more space to be visibly shaken or upset without creating the appearance of proving your point; it helps defuse the threat vector by which one person provokes another into appearing unreasonable or hysterical and thereby delegitimizes them.

- State the reasons for your belief. Don't just assert that you think this is true; include quotes and references that show what led you to generate the hypothesis. This grounds your assertions in reality rather than in your own personal assessment, allowing others to retrace and affirm/reject your own reasoning.

- Give the other person an out. Try to state some things that would cause you to conclude that they are not compromised in the way you fear they are (and do your best to make this a fair and reasonable test rather than a token impossibility). Imagine the world in which you are straightforwardly mistaken, and ask yourself how you would distinguish that world from the world you think that you're in.

For more on this, see [link to a future essay that is hopefully coming from either Ray Arnold or myself].

Some ways you might feel when you're about to break the Tenth Guideline:

- This person's conduct is clear; there's only one possible interpretation.

- This person is threatening norms and standards that are super important for making any further conversation productive.

- It's important that the audience understand why they need to stop listening to this person immediately.

Some ways a Tenth Guideline request might look:

- "Please stop making assertions about the contents of my mind; you are not inside my head."

- "Do you have any alternative explanations for why a person might take the position I'm taking, that don't involve being badwrong dumbcrazy?"

- "It feels like you're setting up a fully general argument against literally anything else I might say. What, uh. What do you think you know and why do you think you know it?"

(These requests are deliberately written to appear somewhat triggered/hostile because that's the usual tone by the point such a request needs to be made, and a little bit of leeway on behalf of the beleaguered seems appropriate.)

Appendix: Miscellaneous Thoughts

This post was long, and was written over the course of many, many months. Below are some scattered, contextless snippets of thought that ended up not having a home in any of the sections above.

Some general red flags for poor discourse:

- Things are obvious, and the people who are not getting the obvious things are starting to get on your nerves.

- You just can't wait to hit "submit." Words are effortlessly tumbling forth from your fingertips.

- You are exhausted, but also can't afford not to respond.

- You feel angry/hurt/filled with righteous indignation.

- Your conversational partner has just clearly demonstrated that they're not there in good faith.

Some sketchy conversational movements that don't fall neatly into the above:

- Being much quieter in one's updates and oopses than one was in one's bold wrongness.

- Treating a 70% probability of innocence and a 30% probability of guilt as a 100% chance that the person is 30% guilty (i.e. kinda guilty).

- Pretending that your comment is speaking directly to a specific person while secretly spending the majority of your attention and optimization power on playing to some imagined larger audience.

- Generating interventions that will make you feel better, regardless of whether or not they'll solve the problem (and regardless of whether or not there even is a real problem to be solved, versus an ungrounded anxiety/imaginary injury).

- Generally, doing things which make it harder rather than easier for people to see clearly and think clearly and engage with your argument and move toward the truth.

A skill not mentioned elsewhere in this post: the meta-skill of being willing to recognize, own up to, apologize for, and correct failings in any of the above, rather than hiding one's shame or doubling down or otherwise acting as if the problem is the mistake being seen rather than the mistake being made.

Appendix: Sabien's Sins

The following is something of a precursor to the above list of basics; it was not intended to be as complete or foundational as the ten presented here but was more surgically targeting some of the most frustrating deltas between this subculture's revealed preferences and my own endorsed standards. It was posted on Facebook several years ago; I include it here mostly as a historical curiosity.

We continue to creep closer to actually sufficient discourse norms, as a culture, mostly via a sort of stepwise mutual disarmament. Modern Western discourse norms (e.g. “don’t use ad hominem attacks”) got us something like seventy percent of the way there. Rationalist norms (e.g. “there’s no such thing as 100% sure”) got us maybe seventy percent of the remaining distance. The integration of Circling/NVC/Focusing/Belief Reporting frames (e.g. “I’m noticing that I have a story about you”) got us seventy percentish yet again.

This is an attempt to make another seventy percent patch. It is intended to build upon the previous norms, not to replace them. It isn’t perfect or comprehensive by any means—it’s derived entirely from my own personal frustrations during the past three or four years of online interactions—but it closes a significant number of the remaining socially-tolerated loopholes that allow even self-identified rationalists to “win” based on factors other than discernible, defensible truth. Some of these “sins” are subsets of others, but each occurs often enough in my experience to merit its own standalone callout.

By taking the pledge, you commit to:

- Following the below discourse norms to the best of your ability, especially with other signatories, and noting explicitly where you are deliberately departing from them.

- Being open to feedback that you are not following these norms, and taking such feedback as aid in adhering to your own values rather than as an attempt to impose values from without.

- Doing your best to vocally, visibly, and actively support others who are following these norms, rather than leaving those people to fend for themselves against their interlocutors.

By taking the pledge, you do not commit to:

- Endless tolerance of sealioning, whataboutery, rules-lawyering, or other adversarial or disingenuous tactics that attempt to leverage the letter of the law in violation of its spirit.

- Adhering to the norms in contexts where no one else is, and where doing so therefore puts you at a critical disadvantage to no real benefit. A peace treaty is not a suicide pact.

Until I state otherwise, I hereby pledge that I shall refrain from using the following tactics in discourse:

- Asserting the unfounded. I will not overstate my claims. I will exercise my right to form hypotheses and guesses, and to express those both with and without justification, but I will actively disambiguate between “I think or predict X” and “X is true.” I will keep my own limited perspective and experience in mind when making generalizations, and avoid universal statements unless I genuinely mean them.

- Overlooking the inconvenient. I will not focus only on the places where my argument is strongest. I will not ghost from interactions in which I am losing the debate. I will do my best to respond to every point my interlocutors raise, or to explicitly acknowledge a refusal to do so (e.g. “I’m not going to discuss your third or fourth points.”), rather than quietly steering the conversation in another direction. I will acknowledge and explicitly endorse the parts of my interlocutor’s argument that seem true and correct. I will not adversarially summarize my interlocutor. When wrong, I will correct my error and admit fault, and will strive to own and propagate my new position at least as effectively as I asserted, defended, and propagated the original claim.

- Eliding the investigation. I will not equivocate between priors and posteriors. I will strive to maintain the distinction between what things look like or are likely to be, and what we know with confidence that they are. I will preserve the difference between expecting a marble to be red because it came from a bag labeled “red marbles,” and claiming that a marble must be red because of the bag it came from. I will not assign, to a given member of a set, responsibility for every characteristic of that set. I will honor and support both sensible priors and defensible posteriors, and remember to reason about each separately.

- Indulging in presumption. I will not make authoritative claims about the contents of others’ thoughts, intentions, or experiences without explicit justification. I remain free to state my suspicions and to make predictions and to form hypotheses, and to support those suspicions/predictions/hypotheses with fact and argument, but I will keep the extreme variability of human experience in mind when attempting to deduce others’ inner workings from their visible outputs, and I will remember not to discount what others have to say about themselves without strong and compelling reason. I will distinguish “these behaviors often correlate with these beliefs” from “you exhibited these behaviors with these results, therefore you must believe these things.” I will keep even my very high confidence hypotheses falsifiable. I will avoid sneers, insinuations, subtle put-downs, and all other manipulations-of-reputation that score points by painting someone else into a corner.

- Hiding in the grey. I will not engage in motte-and-bailey shenanigans. I will not “play innocent” or otherwise pretend obliviousness to the obvious meanings or ramifications of my words. I will attend carefully to context, and will be deliberate and explicit about my choice between contextualizing and decoupling norms, and not mischaracterize those who prefer the opposite of my own preference. I will not make statement-X intending meaning-Y, if it is clear that a reasonable person employing common sense would take statement-X to mean something very different from Y. I will not fault others for reacting to what I said, even if it is not what I meant. I will uphold a norm of allowing all participants to recant what they said, and try again, as long as they acknowledge explicitly that that is what they are doing.

Public signatories:

Duncan Sabien

Nick Faircloth

Damon Pourtamahseb-Sasi

Rupert McCallum

Melanie Heisey

Chris Watkins

Chad Groft

Konrad Seifert

Alex Rozenshteyn

Rob Bensinger

Glen Raphael

Anton Dyudin

Ryan Gauvreau

Adele Lopez

Abram Demski

Jasper Götting

Max Ra

193 comments


comment by cubefox · 2023-01-30T00:15:00.920Z · LW(p) · GW(p)

I would like to propose two other guidelines:

Be aware of asymmetric discourse situations.

A discourse is asymmetric if one side can't speak freely, because of taboos or other social pressures. If you find yourself arguing for X, ask yourself whether arguing for not-X is costly in some way. If so, don't take weak or absent counterarguments as substantial evidence in your favor. Often simply having a minority opinion makes it difficult to speak up, so defending a majority opinion is already some sign that you might be in an asymmetric discourse situation. The presence of such an asymmetry also means that the available evidence is biased in one direction, since the arguments of the other side are expressed less often.

Always treat hypotheses as having truth values, never as having moral values.

If someone makes [what you perceive as] an offensive hypothesis, remember that the most that can be wrong with that hypothesis is that it is false or disfavored by the evidence. Never is a hypothesis by itself morally wrong. Acts and intentions can be immoral; hypotheses are neither of those. If you strongly suspect that someone has some particular intention with stating a hypothesis, then be honest and say so explicitly.

Replies from: cubefox↑ comment by cubefox · 2023-01-30T00:25:42.651Z · LW(p) · GW(p)

The latter guideline was inspired by quotes from Ronny Fernandez and Arturo Macias. Fernandez:

No thought should be heretical. Making thoughts heretical is almost never worth it, and the temptation to do so is so strong, that I endorse the strict rule "no person or ideology should ever bid for making any kind of thought heretical".

So next time some public figure gets outed as a considerer of heretical thoughts, as will surely happen, know that I am already against all calls to punish them for it, even if I am not brave enough to publicly stand up for them at the time.

(He adds some minor caveats.)

Macias:

The separation between value and fact, between "will" and "representation" is one of the most essential epistemological facts. Reality is what it is, and our assessment of it does not alter it. Statements of fact have truth value, not moral value. No descriptive belief can ever be "good" or "bad." (...) no one can be morally judged for their sincere opinions about this part of reality. Or rather, of course one must morally judge and roundly condemn anyone who alters their descriptive beliefs about reality for political convenience. This is exactly what is called “motivated thought”.

comment by jimrandomh · 2023-01-27T23:29:04.048Z · LW(p) · GW(p)

Don't jump to conclusions—maintain at least two hypotheses consistent with the available information.

... or be ready and willing to generate a real alternative to your main hypothesis, if asked or if it seems like it would help another user.

Most of these seem straightforwardly correct to me. But I think of the 10 things in this list, this is the one I'd be most hesitant to present as a discourse norm, and most worried about doing damage if it were one. The problem with it is that it's taking an epistemic norm and translating it into a discourse norm, in a way that accidentally sets up an assumption that the participants in a conversation are roughly matched in their knowledge of a subject. Whereas in my experience, it's fairly common to have conversations where one person has an enormous amount of unshared history with the question at hand. In the best case scenario, where this is highly legible, the conversation might go something like:

A: I think [proposition P] because of [argument A]

B: [A] is wrong; I previously wrote a long thing about it. [Link]

In which case B isn't currently maintaining two hypotheses, and is firmly set in a conclusion, but there's enough of a legible history to see that the conclusion was reached via a full process and wasn't jumped to.

But often what happens is that B has previously engaged with the topic in depth, but in an illegible way; eg, they spent a bunch of hours thinking about the topic and maybe discussed it in person, but never produced a writeup, or they wrote long blog-comments but forgot about them and didn't keep track of the link. So the conversation winds up looking like:

A: I think [proposition P] because of [argument A]

B: No, [A] is wrong because [shallow summary of argument for not-A]. I'm super confident in this.

A: You seem a lot more confident about that than [shallow summary of argument] can justify, I think you've jumped to the conclusion.

A misparses B as having a lot less context and prior thinking about [P] than they really do. In this situation, emphasizing the virtue of not-jumping-to-conclusions as a discourse norm (rather than an epistemic norm) encourages A to treat the situation as a norm violation by B, rather than as a mismodeling by A. And, sure, at a slightly higher meta-level this would be an epistemic failure on A's part, under the same standard, and if A applied that standard to themself reliably this could keep them out of that trap. But I think the overall effect of promoting this as a norm, on this situation, is likely to be that A gets nudged in the wrong direction.

comment by Elizabeth (pktechgirl) · 2024-12-12T23:54:29.694Z · LW(p) · GW(p)

I wish this had been called "Duncan's Guidelines for Discourse" or something like that. I like most of the guidelines given, but they're not consensus. And while I support Duncan's right to block people from his posts (and agree with him on discourse norms far more than with the people he blocked), it means that people who disagree with him on the rules can't make their case in the comments. That feels like an unbalanced playing field to me.

Replies from: Benito, weightt-an↑ comment by Ben Pace (Benito) · 2024-12-13T03:39:29.123Z · LW(p) · GW(p)

I think that Duncan was not aspiring to set his own preferred standards, but to figure out the best standards for truth-seeking discourse. I might agree that he did not perfectly succeed, but I'm not sure this means all attempts, if deemed not perfectly successful, should be called "My Guidelines for My Preferred Discourse".

Replies from: Raemon, pktechgirl↑ comment by Raemon · 2024-12-13T04:01:20.246Z · LW(p) · GW(p)

I think the point is, anything aspiring to that needs to not have people blocked.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-12-13T06:58:09.759Z · LW(p) · GW(p)

I see. I agree it makes the strength of discourse here weaker, and agree that the people blocked were specifically people who have disagreements about the standards to aspire to in large group discourse. I am grateful that at least one of the people has engaged well elsewhere, and have written a review [LW(p) · GW(p)] encouraging people to positively vote on that post (I gave it +4). While I do think it's likely some valid criticisms of content within the posts have been missed as a result of such silencing effects under Duncan's posts, I feel confident enough that there's a lot of valuable content that I still think it deserves to score highly in the review.

Replies from: Raemon↑ comment by Raemon · 2024-12-13T08:19:41.539Z · LW(p) · GW(p)

Yeah, I'm not making any object level claims about this post one way or another, just thinking about the general principles.

Thinking a bit more:

I think the Review does fairly naturally make a Schelling time for people to write up more top-level responses on things they disagree with. I think it's probably important for it to be possible to write reviews on posts during the Review unless the author specifically removes it from consideration in the Review (which maybe should be special-cased, and doesn't mean people can write more back-and-forth comments, just write a top level review).

(that's still me musing-out-loud, not like making a final decision, but I will think about it more)

↑ comment by Elizabeth (pktechgirl) · 2024-12-13T18:36:38.233Z · LW(p) · GW(p)

The conflation of "Duncan's ideal" and "the perfect ideal everyone has agreed to" is what I'm complaining about.

If Duncan had, e.g., included guidelines that were LW consensus but he disagreed with, then it would feel more like an attempt to codify the site's collective preferences rather than his in particular.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-12-13T19:45:26.532Z · LW(p) · GW(p)

I don't think that Duncan tried to describe what everyone has agreed to, I think he tried to describe the ideal truth-seeking discussion norms, irrespective of this site's current discussion norms.

Added: I guess one can see here the algorithm he aimed to run, which had elements of both:

In other words, the guidelines are descriptive of good discourse that already exists; here I am attempting to convert them into prescriptions, with some wiggle room and some caveats.

↑ comment by Canaletto (weightt-an) · 2024-12-13T19:26:05.388Z · LW(p) · GW(p)

Also, just on priors, consider how unproductive and messy the conversation caused by this post and its author was: mostly talking about who said what and analyzing the virtues of participants. I think even without reading it, it's an indicator of somewhat doubtful origin for a set of prescriptivist guidelines.

comment by YafahEdelman (yafah-edelman-1) · 2023-02-05T06:25:05.752Z · LW(p) · GW(p)

I feel uncomfortable with this post's framing. It feels like someone went into a garden I spend my time in and unilaterally put up a sign with a list of guidelines people should follow in the garden, with no ability to enforce these. I know that I can choose on my own whether or not to follow these guidelines, based on whether I think they are good ideas, but newcomers to the garden will see the sign and assume they have to follow them. I would have vastly preferred that the sign instead say "I personally think these norms would be neat, here's why."

(to clarify: the garden = lesswrong/the rationalist community. the sign = this post)

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-05T06:50:50.300Z · LW(p) · GW(p)

I note that this sort of sentiment is something I was aware of, and I made choices around this deliberately (e.g. considered titling the post "Duncan's Basics" and decided not to).

I do not quite think that these norms are obvious and objective (e.g. there's some pretty decent discussion on the weaknesses of 5 and 10 elsewhere), but I think they're much closer to being something like an objectively correct description of How To Do It Right than they are to a mere random user's personal opinion; headlining them as "I personally think these norms would be neat" would be substantially misleading/deceptive/manipulative and wouldn't accurately reflect the strength of my actual claim.

I think the discomfort you're pointing at is real and valid and a real cost, but I have been wrestling with LessWrong's culture for coming up on eight years now, and I think it's a cost worth paying relative to the ongoing costs of "we don't really have clear standards of any kind" and "there's really nothing to point to if people are frustrated with each other's engagement style."

(There really is almost nothing; a beginner being like "how do I do this whole LessWrong thing?" has very little in the way of "here are the ropes; here's what makes LW discourse different from the EA forum or Facebook or Reddit or 4chan.")

I also considered trying to crowdsource a thing, and very very very strongly predicted that what would happen would be everyone acting as if everyone has infinite vetoes on everything, and an infinite bog of circular debate, and as a result [nothing happening]. I simultaneously believe that there really actually is a set of basics that a supermajority of LWers implicitly agree on and that there is basically no chance of getting the mass of users as a whole to explicitly converge on and ratify anything.

So my compromise was ... as you see. It wasn't a thoughtless or light decision; I think this was the least bad of all the options, and better than saying "I personally think," and better than doing nothing.

(I do think newcomers assuming they should generally follow these guidelines is an improvement over status quo of last week.)

I note that if this sparks some other, better proposal and that proposal wins, this is a pretty awesome outcome according to me; I do think there exist possible Better Drafts of this or nearby frameworks.

Replies from: yafah-edelman-1↑ comment by YafahEdelman (yafah-edelman-1) · 2023-02-06T06:56:39.713Z · LW(p) · GW(p)