A Way To Be Okay

post by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-19T20:27:10.061Z · LW · GW · 38 comments

Contents

- I. Fabricated Options
- II. Victory Conditions
- III. Sculptors and Sculptures
- IV. Afghanistan
- V. "The Plan"
- A Way To Be Okay
- Post-script: expansions from the comments

This is a post about coping with existential dread, shared here because I think a lot of people in this social bubble are struggling to do so.

(Compare and contrast with Gretta Duleba's essay Another Way To Be Okay, written in parallel and with collaboration.)

As the title implies, it is about a way to be okay. I do not intend to imply it is the only way, or even the primary or best way. But it works for me, and based on my conversations with Nate Soares I think it's not far from what he's doing, and I believe it to be healthy and not based on self-deception or cauterizing various parts of myself. I wish I had something more guaranteed to be universal, but offering one option seems better than nothing, for the people who currently seem to me to have zero options.

The post is a bit tricky to write, because in my culture this all falls straight out of the core thing that everyone is doing and there's not really a "thing" to explain. I'm sort of trying to figure out how to clearly state why I think the sky is often blue, or why I think that two plus two equals four. Please bear with me, especially if you find some parts of this to be obvious and are not sure why I said them—it's because I don't know which pieces of the puzzle you might be missing.

The structure of the post is prereqs/background/underlying assumptions, followed by the synthesis/conclusion.

I. Fabricated Options

There's an essay on this one. The main thing that is important to grok, all the way deep down in your bones, is something like "impossible options aren't possible; they never were possible; you haven't lost anything at all (or failed at anything at all) by failing to take steps that could not be taken."

I think a lot of people lose themselves in ungrounded "what if"s, both past-based and future-based, and end up causing themselves substantial pain that could have been avoided if they had been more aware of their true constraints. For example: yes, sometimes people really are "lazy," in the sense that they had more to give, and could have given it, and not-giving-it was contrary to their values, and they chose not to give it and then things were worse for them as a result.

But it's also quite frequently the case that "giving more" was a fabricated option, and the person really was effectively at their limit, and it's only because they have a fairy tale in which they somehow have more to give that they have concluded they messed up.

(Related concept: counting up vs. counting down)

An excerpt from r!Animorphs: The Reckoning:

The Andalite’s stalks drooped. <Prince Jake, you speak as if you still intend to maintain control. As if you believe control is a thing that is possible. You know how the [ostensibly omniscient and omnipotent] Ellimist works. You know you cannot outmaneuver it. Any attempt to predict the intended outcome, and deliberately subvert it, will fail. You should be riding this wave, not trying to swim through it.>

...

<There are many things which seem possible which never were, in fact. You act as if we were on a path, and Cassie’s appearance has dragged us off of it—temporarily, pending a deliberate return. But consider. Rachel was forewarned of Marco’s reaction—may well have been placed here specifically to guard against it. The path you imagined us to be on is not real. We were never on it. We were on this path—always, from the beginning—and simply did not know it until now. To pretend otherwise is sheer folly.>

Humans appear to have some degree of agency and self-determination, but we often have less than we convince ourselves. Recognizing the limits on your ability to choose between possible futures is crucial for not blaming yourself for things you had no control over.

(In practice, many people need the opposite lesson—many people's locus of control is almost entirely external, and they need to be woken up to their capacity for choice and stop blaming everything on immutable factors. But for the purposes of this discussion, on A Way To Be Okay, the relevant failure mode is erroneously thinking that you had more internal control than you did.)

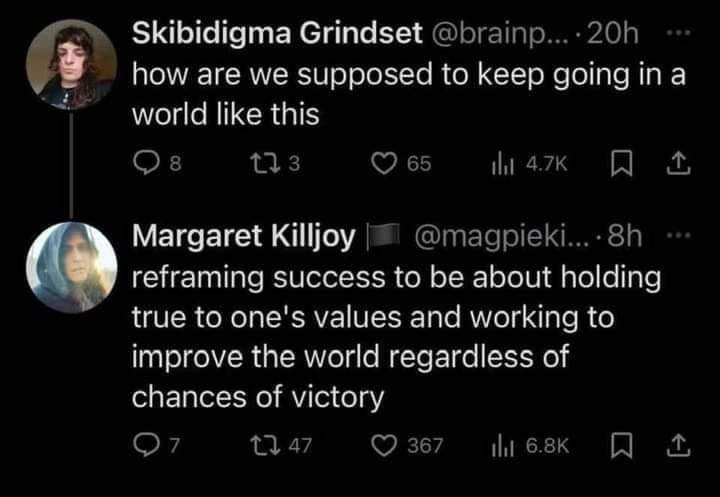

II. Victory Conditions

There's a snippet of advice that I've found myself giving over and over again, especially to e.g. young middle school students under my care who are struggling with parents or friendship or early romance. It doesn't have a standard phrasing, but it goes something like "it's a bad idea to put your victory conditions inside someone else's head."

For instance: to be happy or okay only if someone else is happy or okay.

For instance: to feel settled only if your parents admit that they mistreated you.

There's the trope of a character with a revenge plot who has everything they need to succeed, except for the fact that they need their enemy to know who it was who defeated them, and that complicates things and overexposes them and they end up losing.

If you make [feeling okay] contingent on someone else feeling a certain way or acknowledging a certain fact or taking a certain action, then you're effectively putting your okay-ness in their hands; they can deny you closure or stability or whatever via an act of pure spite, or simply by seeing things differently, etc. It's unstable, the sort of situation where even if you do turn out okay it's only because you got lucky.

In addition to the core point, about not setting your victory conditions in places where you have no agency and no control, there's a sub-point or prerequisite point, which is: did you know you can choose to put your victory conditions somewhere?

i.e. many people don't realize that they can, via an intentional decision, shift what they're caring about, in a given situation, or choose to redefine (local) victory; when I explain to a middle schooler that it's a bad idea to wait on their parents to acknowledge unfairness, because what if their parent is just stubborn about it, they usually go "oohhhh ... huh," and then decide to declare victory after some other, more tractable benchmark.

III. Sculptors and Sculptures

I am diachronic; sometimes this gets misconstrued as never-changing or whatever, but it's not quite that.

My go-to metaphor here is that there is a sculpture, which is the present-day version of a person (their current skills, appearance, relationships, projects, goals, etc), and a sculptor, which is the Person With A Vision, Currently Carving Toward That Vision.

By "diachronic," what I mostly mean is that the sculptor hasn't evolved much; at no point have I switched from carving This Statue to instead carving That Statue.

I don't think that this particular Way To Be Okay requires someone to be diachronic rather than episodic; I mention this more to point at the distinction between locating your identity in your present snapshot state, versus locating your identity in the-thing-that's-guiding-your-evolution. A lot of people see themselves as what they presently are, and thus if they switch to something else, they feel like they have deeply changed, as a person.

There's another way to do things, which is to have an evaluator that evaluates options in the moment, and consistently chooses the best option according to your values, which (since the inputs to that evaluation function can change) will sometimes result in you spending three years as a public school teacher and other times have you vanish into the wilderness with a bunch of AI researchers during COVID, all without feeling like the overall thing-you're-doing has changed at all.

(The equation y = 2x + 7 is the same equation even though sometimes it tells you that y is 13 and other times it tells you that y is 7 and other times it tells you that y is 0.71681469282.)

IV. Afghanistan

The United States occupied Afghanistan for nearly two decades.

This was a mixed bag to say the least, but it is unambiguously true that US occupation led to some better outcomes, e.g. greater freedom and opportunity for women.

When the United States pulled out of Afghanistan rather sloppily in August of 2021, the Taliban quickly took over, and the year-and-change since has seen a reversion of almost all of the progress on gender equality, with the remaining delta seeming likely to evaporate soon.

Many critics have said things to the effect of "we spent $X trillion and thousands of lives to take Afghanistan from oppressive Taliban rule to ... oppressive Taliban rule."

These people are missing (some of) the point.

For nearly two decades, girls in Afghanistan grew up going to school; many of them went to college; all of the individuals in that generation lived a brighter life than they otherwise would have.

That may not have been worth the cost. I'm not arguing about the overall net, or claiming that it was correct to be there.

(I'm not claiming it was incorrect, either. I'm dodging the question.)

But it's important to note that those twenty years of relative freedom are not nothing. They happened. There was happiness. Lives were better. Some people escaped entirely. Even those who did not escape, and who are currently falling back under dark-ages religious oppression, had some time in the sun.

There's a tendency among anti-transhumanists to scoff that there's no such thing as an infinite life span, because you'll just die after ten trillion years anyway, so what's the point—as if there's no appreciable difference between 100 years and 10,000,000,000,000 because both timespans end.

The point is the days in between. There's the remembering self, and there's the experiencing self; if I knew I were to die tomorrow I would not feel that my life today was wasted, and even if everyone were to die tomorrow, such that none of my work endured into the future, this would not be a total loss.

There is "mattering" in what happens, separate from its impact on the future. Both perspectives need to be taken into your final accounting.

V. "The Plan"

There's a somewhat obscure but fairly-compelling-to-me model of psychology which states that people are only happy/okay to the extent that they have some sort of plan, and also expect that plan to succeed.

Handwaving, but basically: you feel like it's reasonable to believe that the future holds at least some of the things you want it to hold; you feel like you're on a path to at least surviving and possibly thriving; you have a sense of what things are on the bottom two or three layers of your needs-hierarchy, and you expect those needs to be met.

If you don't have this sense, things start to fray and unravel, and you either tend to a) get really depressed, b) start to frantically cast about for a new plan to get them, or c) descend into self-deception about your looming doom, plugging your ears and ignoring the evidence that you're about to fail.

(So claims this model.)

And so, if your plan depends on something like "being alive for at least the next thirty years," or "all of the life in the biosphere not being converted into Bing," then developments over the past five years which threw your expectations into uncertainty and which seemed to give you no particular path to doing anything about it...might have left you feeling somewhat not-okay.

A Way To Be Okay

All right, here we go. This may be anticlimactic; I can't tell.

Basically:

If you locate your identity in being the sort of person who does the best they can, given what they have and where they are,

and if you define your victory condition as "I did the best that I could throughout, given what I had and where I was,"

then while the tragedy of dying (yourself) or having the species/biosphere end is still really quite large and really quite traumatic,

it nevertheless can't quite cut at the core of you. There is a thing there which is under your control and which cannot be taken away, even by death or disaster. There is a plan whose success or failure is genuinely up to you, as opposed to being a roll of the dice. It's a sort of Stoic mentality of "I cannot control what the environment presents to me, but I can control how I respond to it."

(Note that "best" is sort of fractally meta; obviously most of us are not going to manage to successfully hit the ideal maximum of output circa our own values, in that none of us will steer our own bodies and resources as well as a superintelligence with our goals would. But that too is a constraint; doing the (realistic, approximate) best that you can is the best that you can actually do; a theoretical Higher Bar is a fabricated option.)

I am not super stoked about what seems to me to be the fact that I will die before my two hundredth birthday.

I am not super stoked about what seems to me to be the frighteningly high likelihood that I will die before my child's twelfth birthday.

I am unhappy about the end of, essentially, All Things, and the vanishment of every scrap of potential that the human race has to offer, both to itself and to its descendants.

And yet, I believe I am okay. I believe I am coping with it, and I believe I am coping with it without flinching away from it. I believe I have looked straight at it, and grieved, and I believe that I will do what I can to change the future, and it is because I believe that I will do what I can (and no less than that) that I do not feel broken or panicked or despondent.

I also believe that it's hard. That smarter and better people than me have tried, and reported to me that they have failed. That it's overwhelmingly likely that nothing that I try will succeed, and all of my efforts will be in vain.

But that's "okay" in the deepest sense, because I am not holding my identity to be "the guy who survived" or "the guy who fixed everything," and I am not locating my emotional victory condition in "it actually succeeded."

(In part, I am not doing this because it is self-defeating; it will cause me to be worse at actually contributing to the averting of disaster; it will cause me to behave erratically and unstrategically and possibly to be hopelessly depressed and none of these things help, so I shall not bother with them.)

Whether we make it or not is not fully under my control, and therefore I don't put my evaluation of my self in that same bucket. Actually trying is something that is under my control—that's a plan I can succeed at.

I know, in my bones, that I am capable of impressive-by-human-standards achievements.

I know, in my bones, that I really really want to live.

I know, in my bones, that a world where humanity and the biosphere are not destroyed by runaway artificial intelligence is a better world than the one we currently seem to be in, where they will be.

I know, about myself, that I can and will take what opportunities arise, and that I will put non-negligible effort toward making and finding opportunities, and not merely waiting for them to appear, and that I am the type of person who does what he can, and that I will do what I can here just like I will everywhere else.

(And I know that here is more important in a literal, objective sense, than everywhere else, and I know that I know that, and I trust myself to balance those scales properly.)

And, knowing all that—

I'm okay. I'm able to experience joy in the moment, I'm able to have happy thoughts about the future (both the immediate future that I more or less "expect" to get, and various idle daydreams about unlikely but delightful futures that it is fun to imagine in the event that we get miracles). I'm able to make plans, I'm able to set goals, I'm able to live life. Sometimes, I work really quite hard to try to nudge the world in ways I think might help, and sometimes I relax and live my life as if I've done what I can and the rest is out of my hands.

(Because in a very real sense, it is, and therefore this is not sacrificing or defecting on anything.)

Your mileage may vary, but if what you're doing right now is both not helping you and not helping the world, something has gone wrong.

Good luck.

Post-script: expansions from the comments

In the thread under Logan Strohl's top comment, I eventually wrote:

> You know, I think I'm actually just wrong here, and people should be Potential Knights of Infinite Resignation.

I think I come at this from a slightly different angle, and I think it has to do with my sculptor-sculpture distinction. I think there's a difference between values and meta-values and meta-meta-values (or something), and that when I discover that a particular instantiation of a meta-value into a concrete value is unpossible, I respond by seeking a new way to instantiate that meta-value.

And I think this is perhaps a critical piece of the puzzle and belongs in the essay somewhere.

I haven't fleshed this out or figured out how to connect it, but I did want to highlight it as a potential Important Inclusion. Look below for more context.

38 comments

Comments sorted by top scores.

comment by LoganStrohl (BrienneYudkowsky) · 2023-02-19T23:43:11.064Z · LW(p) · GW(p)

I am glad you are ok. I do not want to undermine whatever it is that keeps you by-and-large mentally healthy, especially as you contribute to the communal survival effort, and, ya know, be my spouse and all.

Also, I think it would probably be wrong not to tell you that the presumably shallow and probably somewhat inaccurate version of this that I heard while reading struck me as pretty self deceptive. Or at least, not sufficiently anti-self-deceptive for it to sit right with me.

It may take me awhile to pin down exactly what is not sitting right with me, and how exactly it is sitting instead. But I think most of it is concentrated in "Victory Conditions" and in "The Plan", and maybe some of it is also in "Sculptors and Sculptures".

I think the heart of my objection may be exactly what I wrote about in 2016, quite unclearly and at length I'm sorry to say, in an essay called "When Your Left Arm Becomes A Chicken". How relevant this essay is depends a lot on what exactly it means to you to "change your victory condition". But when I hear "victory condition", it sounds an awful lot like the Kierkegaardian thought experiment thingy I talk about in the essay, the part about unrequited love for the princess.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-20T00:20:05.024Z · LW(p) · GW(p)

I think that I don't quite have enough to go about answering you, yet; I do not quite yet understand the thing you're hearing, so I can't start figuring out whether it is indeed what I am actually saying.

Can I beg you for six more paragraphs of ramble or something?

Replies from: BrienneYudkowsky

↑ comment by LoganStrohl (BrienneYudkowsky) · 2023-02-20T00:23:23.082Z · LW(p) · GW(p)

Yes of course, and I certainly didn't mean to leave you with just that one comment. I really mean to say "I think something is wrong here. More to come as I figure it out." But also it may take me a while before I'm ready for the next installment.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-20T02:58:45.116Z · LW(p) · GW(p)

Some possible snippets/trailheads, or at least meandering clarifications:

> the worlds in which Gao stops valuing things that prove difficult to attain seem sadder to me

Me, too. I am a big believer in valuing things separate from their attainability; I sort of solve this one internally by valuing approximately everything according to how Awesome I think it is, and then sorting them on a priority list in a manner that includes weighting-by-possibility.

But like. I think I do not fail to notice, or continue to believe, that the hard-to-get things are nevertheless good; one simple toy example is that I extremely value being able to live in my physical twelve-year-old body again, except possibly with adamantium bones and telekinesis as well.

> The Knight of Faith will have a bad problem if he wants to make accurate predictions about the world, since his epistemology is about as broken as I know how to make a thing.

I share your (and presumably Kierkegorr's EDIT: I have been informed that Kierkegorr was a Christian and big into the Knight of Faith) unease/distaste for this option.

> The first man, recognizing his value cannot be satisfied, abandons his love for the princess. “Such a love is foolishness,” he says. “The rich brewer's widow is a match fully as good and respectable.” He stops valuing the love of the princess, and goes looking for a more easily satisfied value.

In my own personal tongue, this is called "the opposite of Aliveness," or perhaps "becoming dead." It is almost precisely the thing that my entire life philosophy centers on not doing (and I note that, for a literal majority of the people that I interact with for more than thirty minutes, an injection-by-osmosis of my degree of not-this causes them some kind of "oh shit u right" recognition that ultimately leads to a small local improvement in their life by way of rejiggering their priorities to reprioritize aliveness).

> By my reading, the Zhuangzi prescribes either constantly adjusting your values so that they’re always perfectly satisfied by the current state of the world, or not having any values at all, thereby achieving a similar outcome...It’s sort of like wireheading, but it sidesteps the problem wherein your values might involve states of the world instead of just experiences.

Once you factor the Aliveness thing out of my values, the majority of what's left is centered on not-this. Like, this is where my sense of revulsion at most people's accepting-the-gaslighting-around-puberty comes from; if the Mule converts me to loving the Mule I acknowledge that I will love the Mule but I think that I should still be quite upset about how I came to love the Mule, and will not feel fondness about that unless the Mule hardwired that, too.

(To put this another way: I do not want to be "down" with my left arm becoming a rooster; if my left arm becomes a rooster I want to find a way to achieve some kind of contentment anyway but Being Mad About My Left Arm Being A Rooster is a feeling that I defend with all my TDT might and Someday Doing Something About Both The Rooster-Arm And, Separately, Whatever Caused It goes on The List, possibly under the preexisting subheading God Really Needs To Be Killed For Its Crimes.)

> Imagine you have exactly one value

I have a hard time. Like, I have an easy time doing it for the sake of a thought experiment, but I have a hard time doing it for realsies.

Perhaps this is an important piece of the puzzle—the capacity to shift values around in response; the always-having-a-thing-to-do-on-a-rainy-day nature.

> I strive to wield the power of despair without having to be depressed. I would like to be able to believe that I am doomed when I am doomed, else I’ll resist believing that I am in danger when doing so would let me prevent harm.
>
> Also, I strive not to believe contradictions, or to rationalize, or to play other strange games with myself that let conflicting beliefs hide in separate corners of my mind.
>
> Also also, I don’t want to be down with my left arm becoming a chicken, or with an asteroid destroying the Earth.

Yes, yes, and yes.

> You know, I think I'm actually just wrong here, and people should be Potential Knights of Infinite Resignation.

I think I come at this from a slightly different angle, and I think it has to do with my sculptor-sculpture distinction. I think there's a difference between values and meta-values and meta-meta-values (or something), and that when I discover that a particular instantiation of a meta-value into a concrete value is unpossible, I respond by seeking a new way to instantiate that meta-value.

And I think this is perhaps a critical piece of the puzzle and belongs in the essay somewhere.

Replies from: dxu

↑ comment by dxu · 2023-02-20T03:40:36.367Z · LW(p) · GW(p)

I think part of the problem here is that there seems, at first glance, to be a fundamental tension between the ideas that

- it's good to keep track of the difference between good and bad things, and also

- it's good to stop being upset about bad things if doing so isn't instrumentally useful.

And, like, I'm aware that the second bullet point is phrased somewhat strawmannishly; I did that to emphasize the extent to which, in my native mental ontology, [something-like-the-strawmannish-version] is what it translates to. And even now, typing this, I can't for the life of me come up with a version that both (1) maintains the intuitive force present in the current version, and (2) sounds less like a strawman.

It seems like, retaining the ability to be upset about bad things is part of what it means to understand the difference between bad things and good things? Like, the reason you're upset is that it's bad; that's part of what it means for something to be bad: that you recognize it and have a justifiable internal reaction to it.

(Flagging that I don't necessarily think you disagree with this; in particular, it seems like it rhymes pretty strongly with what you said about wanting to defend Being Mad About My Left Arm Being A Rooster.)

Even so, I don't yet understand how to reconcile the two. This affects me less than it might someone else with this particular intellectual conundrum, since for me it really is mostly intellectual: my brain pretty much does the "acceptance" thing by default, to the point where I often find it difficult to remain upset about things I'd reflectively endorse being upset about. And to the extent that the thing my brain is doing protects me instrumentally from mental health issues such as depression, I (selfishly) want it to keep doing that (even as I for other reasons might sometimes wish the opposite).

But of course, none of that is the same as having a philosophical justification for it, which (again) I don't have!

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-20T04:29:27.357Z · LW(p) · GW(p)

I believe I am able to "be upset" about bad things in a way that doesn't require suffering or any ongoing detriment to my life.

comment by swarriner · 2023-02-19T23:25:42.825Z · LW(p) · GW(p)

(this comment is copied to the other essay as well)

I respect the attempt, here, and I think a version of the thesis is true. Letting go of control and trying to appreciate the present moment is probably the best course of action given that one is confronted with impending doom. I also recognize that reaching this state is not just a switch one can immediately flip in one's mind; it can only be reached by way of practice.

With these things in mind, I am still not okay. More than anything I find myself craving ignorance. I envy my wife; she's not in ratspaces whatsoever and as far as I know has no idea people hold these beliefs. I think that would be a better way to live; perhaps an unpopular opinion on the website where people try not to live in ignorance. It's hard not to be resentful sometimes. I resent the AI researchers, the site culture, and I especially resent certain MIRI founders and their declarations of defeat.

I think that means I need to disconnect, once and for all. I've been toying with the idea that I need to disconnect from the LW sphere completely and frankly I think it's overdue. Dear reader; if you aren't going to go solve alignment, I suggest you consider following suit. I might hang around a bit to view replies to this comment but... Yeah. Thanks for all the food for thought over the years LW, I'm not sure if it was worth it.

Replies from: Duncan_Sabien, Razied, rhollerith_dot_com

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-20T00:21:03.971Z · LW(p) · GW(p)

(I have a strong personal aversion to the balm of ignorance/feeling better because of not seeing what's true, so I strong disagree-voted but upvoted the comment in general.)

Replies from: rhollerith_dot_com

↑ comment by RHollerith (rhollerith_dot_com) · 2023-02-20T16:55:07.209Z · LW(p) · GW(p)

↑ comment by Razied · 2023-02-20T02:25:31.991Z · LW(p) · GW(p)

Mostly agreed, these days I don't talk about AI safety with anyone if they don't already know about it, precisely because it wouldn't be kind at all. If you end up never coming back on here: I wish you a happy life, man, may we all do the best we can in the time we have left.

↑ comment by RHollerith (rhollerith_dot_com) · 2023-02-20T17:07:33.713Z · LW(p) · GW(p)

comment by Raemon · 2023-02-20T00:45:02.649Z · LW(p) · GW(p)

I think I know (and mostly alieve) everything in this post, and I know (and mostly alieve?) everything in the Replacing Guilt sequence, and... man it sure takes awhile to internalize in a real way. I feel like I'm staring at my ruby slippers, and knowing that eventually I'll be like "okay, yup, now I grok that I can just click these together and be okay", and yet... not being able to skip out on the journey to kill the wicked witch, for all my genre savviness.

I became decidedly Not Okay ~2 years ago, worked my way through powergrieving for a few months, ran out of steam, and then worked on sporadically grieving when it felt right. And two years later I heard a friend reflecting on the end of the world:

"You know, I know what it's like to successfully grieve something. I had a whole life planned out for myself with goals, and I had to basically give up all of those goals, and it was rough but by now I don't look upon those old goals / lifeplan with any sense of longing or wishing it were still real. ... So... I can tell that I haven't really grieved the maybe-end-of-the-world yet. I have cried about it and done some stuff that felt like processing but I clearly am not actually at peace with it the way I became at peace with giving up my old lifegoals."

And I heard that and was hit with "Man, yeah, 2 years of trying to process all of these things and I just clearly haven't finished yet. Geez." And I'm not sure if the problem is that I'm not "doing it right", or if it just actually takes awhile.

The things that have visibly at least helped in a significant way were something closer to "formal grieving ritual with other people." (Two of them were small solstices-in-the-woods, with ~10 people. One of them was talking through stuff with one other person). Each time I was like "man, I am embarrassed that Raemon took this long to try solving his big emotional problems with a ritual", but then simultaneously felt like rushing to do another one wouldn't help much more.

Replies from: BrienneYudkowsky

↑ comment by LoganStrohl (BrienneYudkowsky) · 2023-02-20T03:10:43.695Z · LW(p) · GW(p)

for me i think it took about 3 years, but the first 50% of it only took one or two months once i got deliberate about it

comment by trevor (TrevorWiesinger) · 2023-02-19T20:50:38.369Z · LW(p) · GW(p)

> it's a bad idea to wait on their parents to acknowledge unfairness, because what if their parent is just stubborn about it, they usually go "oohhhh ... huh," and then decide to declare victory after some other, more tractable benchmark.

On that note, if anyone has had a hard time successfully explaining AI risk to a family member, I've tested Toby Ord's chapter on AI risk from The Precipice, and afterward both of my stubborn 65-yo parents were convinced that AGI is a serious problem, after more than 5 years of knee-jerk skepticism. Toby Ord made a real effort, and the resulting 13 pages seem to work for an unusually wide variety of people.

You'd be surprised how valuable that can be, turning things around in all sorts of different ways that you had never really given much thought to. Instrumentally valuable, not just emotionally.

comment by Victor Li (victor-li-3) · 2023-02-20T07:03:26.235Z · LW(p) · GW(p)

Hi, I really like this post (as well as the other one) and largely agree with the sentiments shared. Below are some of my personal takeaways from reading these two posts (may not represent accurately the intentions of the authors):

- Be realistic with the amount of influence I can have on the future

- Separate out the things I can influence and things which I cannot

- Apply agency to the former and acceptance to the latter

- Set my "victory condition" as something that is within my influence

- This way, I will be motivated to actually do things in life instead of being paralysed by depression

- It's ok to grieve and to admit that the future probably will suck no matter what I do

- There is still value in living life in the present regardless of what happens in the future

- Enjoy the journey more, focus less on the destination

I hope these points will be valuable to the community and serve as a succinct summary of the (what seems to me) important takeaways.

comment by Raemon · 2024-12-16T07:23:09.675Z · LW(p) · GW(p)

Another piece of the "how to be okay in the face of possible existential loss" puzzle. I particularly liked the "don't locate your victory conditions inside people/things you can't control" frame. (I'd heard that elsewhere [LW · GW] I think but it felt well articulated here)

comment by JacobW38 (JacobW) · 2023-02-21T06:36:01.384Z · LW(p) · GW(p)

A very necessary post in a place like here, in times like these; thank you very much for these words. A couple disclaimers to my reply: I'm cockily unafraid of death in personal terms, and I'm not fully bought into the probable AI disaster narrative, although far be it from me to claim to have enough knowledge to form an educated opinion; it's really a field I follow with an interested layman's eye. But I'm not exactly one of those struggling at the moment, and I'd even say that the recent developments with ChatGPT, Bing, and whatever follows them excite me more than they intimidate me.

All that said, I do make a great effort of keeping myself permanently ahead of the happiness treadmill, and I largely agree with the way Duncan has expressed how to best go about it. If anything, I'd say it can be stated even more generally; in my book, it's possible to remain happy even knowing you could have chosen to attempt to do something to stop the oncoming apocalypse, but chose differently. It's just about total acceptance; not to say one should possess such impenetrable equanimity that they don't even care to try to prevent such outcomes, but rather understanding that all of our aversive reactions are just evolved adaptations that don't signal any actual significance. In bare reality, what happens happens, and the things we naturally fear and loathe are just... fine. I take to heart the words of one of my favorite characters in one of the greatest games ever made... Magus from Chrono Trigger:

"If history is to change, let it change!

If this world is to be destroyed, so be it!

If my destiny is to die, I must simply laugh!"

The final line delivers the impact. Have joy for reasons that death can't take from you, such that you can stare it dead in the eye and tell it it can never dream of breaking you, and the psychological impulse to withdraw from it comes to feel superfluous. That's how I ensure to always be okay under whatever uncertainty. I imagine I would find this harder if I actually felt that the fall of humanity was inevitable, but take it for what it's worth.

comment by Amalthea (nikolas-kuhn) · 2023-02-19T21:46:07.344Z · LW(p) · GW(p)

Is there actually a discussion somewhere of what we can do? I basically only ever see discussion of AI alignment, which seems to me more of a long-term strategy and not the best place to spend resources on right now (although I'm confident that it is going to have payoffs if we weather the next several years). I kind of conceptualize this as a war - it's normal to be devastated and the stakes are incredibly high, but in the end you have to pull together and try to figure out the most strategic thing to do without giving in to panicked thinking. And we're all in this together.

Replies from: Raemon↑ comment by Raemon · 2023-02-20T00:59:39.875Z · LW(p) · GW(p)

I'm not quite sure what you're asking for.

FYI there's an AI Governance tag [? · GW] and an AI Alignment Fieldbuilding [? · GW] tag, which covers some of the other major strategies other than the technical alignment of AI (although I worry that people drawn to those other two topics are often pursuing them in a "missing the real problem" sort of way that won't actually help)

You say "AI alignment" is a longterm strategy, not... something to work on right now, which feels confusing. Most of the things worth doing require some investment in learning/skill-gaining along the way, to contribute to longterm projects, IMO. (There are smaller projects, but figuring out which ones actually help is fairly high-context and not actually easy to jump onto)

Replies from: nikolas-kuhn↑ comment by Amalthea (nikolas-kuhn) · 2023-02-20T01:29:27.030Z · LW(p) · GW(p)

Thanks! These tags are very relevant and I wasn't aware -- that's maybe part of the problem (although I'm relatively new in using this forum). I might simply not be aware of the concrete efforts to mobilize/draw public attention and raise public concern about the issue. Certainly many of the leading figures of EA/rationalism appear to be very stuck in their heads from the outside and - I don't really know how to phrase this - not willing to make a stand? E.g. Eliezer's famously defeatist attitude probably doesn't send a good signal to the outside (I do mostly agree with him on his general opinions).

When I say alignment seems mostly relevant long-term, I really mean the technical parts. It is definitely good to recruit and educate people in that direction even right now. I just think the political dimension - public perception, and government involvement are likely much more relevant short term (and could yield a lot of resources towards alignment). Since I haven't been seeing much discussion of this here, I felt it is underappreciated.

What precisely do you mean by "missing the real problem"?

comment by Review Bot · 2024-08-06T01:04:50.580Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by NoriMori1992 · 2023-06-03T15:50:27.827Z · LW(p) · GW(p)

In practice, many people need the opposite lesson—many people's locus of control is almost entirely external, and they need to be woken up to their capacity for choice and stop blaming everything on immutable factors.

This presumes that such people are indeed capable of shifting their locus of control, which based on my own personal experience and observations is not always an accurate presumption.

comment by David Bravo (davidbravocomas) · 2023-04-26T20:12:00.041Z · LW(p) · GW(p)

Thank you very much for this post, I find it extremely valuable.

I also find it especially helpful for this community, because it touches on what I believe are two main sources of anxiety over existential dread that might be common among LWers:

- Doom itself (end of life and Earth, our ill-fated plans, not being able to see our children or grandchildren grow, etc.), and

- uncertainty over one's (in)ability to prevent the catastrophe (can I do better? Even if it's unlikely I will be the hero or make a difference, isn't it worth wagering everything on this tiny possibility? Isn't the possibility of losing status, friends, resources, time, etc. better than the alternative of not having tried our best and humanity coming to an end?)

It depends on the stage in one's career, the job/degree/path one is pursuing, and other factors, but I expect that many readers here are unusually prone to the second concern compared to outsiders, perhaps due to their familiarity with coordination failures and defections, their intuition that there's always a level above and possibility for doing better/optimisation... I am not sure how this angst over uncertainty, even if it's just a lingering thought in the back of one's mind, can really be cleared, but particularly Fabricated Options conceptualises a response to it and says "we'll always be uncertain, but don't stress too much, it's okay".

comment by Vishrut Arya (vishrut-arya) · 2023-02-20T18:24:25.339Z · LW(p) · GW(p)

There's a somewhat obscure but fairly-compelling-to-me model of psychology which states that people are only happy/okay to the extent that they have some sort of plan, and also expect that plan to succeed.

What's the name of this model; or, can you point to the fuller version of it? Seems right, and I would like to see it fleshed out.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-20T20:47:59.781Z · LW(p) · GW(p)

It's Connection Theory, but I do not know if there's any good published material online; it was proprietary from a small group and I've mostly heard about it filtered through other people.

Replies from: vishrut-arya↑ comment by Vishrut Arya (vishrut-arya) · 2023-02-21T04:32:50.625Z · LW(p) · GW(p)

Thanks Duncan!

comment by Jaromír Svoboda (jaromir-svoboda) · 2023-02-20T09:21:14.922Z · LW(p) · GW(p)

Thank you for the essay.

IMHO it's worth mentioning that (as a companion to this worldview/intellectual approach - I don't mean to diminish it in any way) meditation/mindfulness works for me on a more immediate and emotional level.

comment by WilliamKiely · 2023-02-20T05:14:15.578Z · LW(p) · GW(p)

Thanks for sharing. FWIW I would have preferred to read the A Way to Be Okay [LW · GW] section first, and only reference the other sections if things didn't make sense (though I think I would have understood it all fine without those five sections) (though I didn't know this in advance so I just read the essay from beginning to end).

comment by M. Y. Zuo · 2023-02-20T04:11:41.991Z · LW(p) · GW(p)

But it's important to note that those twenty years of relative freedom are not nothing. They happened. There was happiness. Lives were better. Some people escaped entirely. Even those who did not escape, and who are currently falling back under dark-ages religious oppression, had some time in the sun.

The Taliban would see this as the opposite, that for 20 years otherwise fine citizens were denied the opportunity for happiness in the Islamic Emirate of Afghanistan and led down a stray path.

In practical terms, urban Afghan women experienced a lot more freedom than they otherwise would have, rural women slightly more, urban men slightly more, and rural men vastly less. So it's not even clear if the total amount of citizen-lifetimes x average-amount-of-oppression actually changed meaningfully.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-20T04:31:33.959Z · LW(p) · GW(p)

Yes, that's how the Taliban would see it, but their values are bad and wrong on this question so I don't much care. I am not a full-blown moral relativist.

If by "rural men vastly less" you mean "they were no longer in a position to costlessly oppress," then I don't count that in my calculus. If instead you mean "rural men vastly less because of oppression by the occupying troops," then I agree that's a real cost and that falls under my "not necessarily claiming this was good on net" bit.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-21T21:34:28.878Z · LW(p) · GW(p)

Yes, that's how the Taliban would see it, but their values are bad and wrong on this question so I don't much care. I am not a full-blown moral relativist.

What does 'not a full-blown moral relativist' mean?

Is the implication the opposite, or some intermediate position?

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-22T00:20:16.838Z · LW(p) · GW(p)

I have room for some degree of moral relativism; I think that there are some areas and situations in which one person thinks X is good and another person thinks X is bad and both of them are roughly equally justified.

(I struggled to think of an example on the spot—maybe circumcision-by-default? I'm super duper clear on circumcision-by-default being harmful and bad but I'm not inclined to demonize people who circumcise their kids.)

But when the point is "the oppressors' values were being satisfied, when they were in a position to oppress—you're now making them sadder and making their lives worse by preventing them from having unlimited autocratic power over their women"

(which is something I interpreted you to be saying, as a part of your point)

I do not validate that sadness as being morally relevant. I can acknowledge that the improvement was not a pareto improvement in that, yes, there was a loser, but that person's loss was not something they "should" have had a claim to in the first place; them treating it as a loss is setting the zero point [LW · GW] in an illegitimate place.

Morality may not be fully objective, but neither is it fully subjective; you can in fact just straightforwardly look at a situation and often see that something is objectively wrong, simply by starting with something like "treat all sentient agents as moral patients."

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-22T15:24:58.396Z · LW(p) · GW(p)

What does "treat all sentient agents as moral patients" mean?

I tried doing a verbatim search but your comment is the only recorded instance according to Google.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-22T21:31:39.380Z · LW(p) · GW(p)

(I might be using words oddly or slightly wrongly; apologies if so.)

My understanding of the phrase "moral patient" is that it means "an entity that's morally relevant." So for the median human, a cow is not in practice a moral patient, but for a vegan or ethical vegetarian or Hindu, it definitely is.

One way that a person can argue for things like slavery or patriarchy is by either insinuating or outright claiming that the oppressed person is less of a moral patient than the oppressor; a sort of utility-monster-adjacent situation where the suffering of the losing party is outweighed by the gain of the winning party.

That's how one might get from "sure, the male oppressors lost something when the women started getting basic human rights" (which is true) to "and we should care about, or have sympathy for, their loss" (which is false, because their loss was of territory they should not have had control over in the first place, because it required treating other sentient agents as not moral patients).

So what I meant by "treat all sentient agents as moral patients" is something like "start from a baseline wherein every sentient agent is clustered in the same order of magnitude, in terms of what amount of dignity and care and autonomy our society should support them having, and enforce via its norms."

If you start from a baseline where women and men are not substantially different in how much goodness they deserve, then it's impossible to feel all that sad about the males in Taliban-controlled society losing their tyrannical power over women.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-23T01:11:48.281Z · LW(p) · GW(p)

Does this imply that sub-sentient agents are fractional moral patients?

Your elaboration mostly makes sense, the issue seems to be who gets to define 'sentient agents'?

For example, there might be extremists that feel justified in their views that cows, cats, dogs, whales, etc., are 'full moral patients' in the sense you're describing because of their minority views of what counts as 'sentient agents'.

(And if they adopt Taliban methods one day, might be too large of a group to meaningfully suppress without frightful implications.)

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-23T03:10:18.117Z · LW(p) · GW(p)

I think that you are reading me as meaning, by the word "sentient," "sapient."

I meant "sentient."

I think I'm not super interested in carrying this conversation further; I mainly wanted to say "if you meant that we should feel sympathetic to the Taliban because they now have less power over their victims, idgaf," with a subpoint of "if you didn't mean to say that, you might want to clarify, because it sounded like you meant to say that."

I think that goal has been achieved.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-23T05:02:17.468Z · LW(p) · GW(p)

I think that you are reading me as meaning, by the word "sentient," "sapient."

I meant "sentient."

No? My comment wouldn't make sense if by 'sentient' I thought you really meant 'sapient'.

Since the category of "cows, cats, dogs, whales, etc.," includes animals that are not usually considered sapient by anyone, at least on LW.

EDIT: And I'm usually quite picky about clarifying ambiguity, as you might have guessed from the comment chain, so I would have asked about it if there was confusion.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-23T07:25:46.896Z · LW(p) · GW(p)

Okay well in that case I have no idea what you might possibly mean by "sub-sentient agents" unless you're asking about, like, slime mold.

I'm finding this thread exhausting and anti-rewarding (since it's entirely one-way, with you putting in zero effort to clarify whatever the heck it was you were saying); please don't reply further.

Replies from: M. Y. Zuo

comment by Ansel Freniere (ansel-freniere) · 2023-02-21T22:10:53.286Z · LW(p) · GW(p)

Duncan, I am skeptical that you fully and deeply believe we are so doomed as you claim. So here’s a test: Make a contract with someone Good to give them all your assets* and become a minimally paid permanent laborer for them, in the case that foom/doom does not occur in your expected timeframes. One of two things will now happen: 1) You will hesitate to do this, which proves you are not so sure and some part of you is being a boogeyman. You outed him! Congrats! This will also hopefully make you feel better! It is not as if we have sighted the doomsday asteroid in our telescopes and projected its path to earth.

Or 2) you will do it without hesitation (why not) and someone who is not feeling so gloomy will feel great without you actually having had to give anything. You will have done a very good deed that will make a difference in the time we have left!

* it should really be everything you can possibly give in order to be a proper solution, because the alternate outcome is global doom.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-22T00:23:49.356Z · LW(p) · GW(p)

This is a false dichotomy and a bad test; there are more reasons to feel hesitant than one's belief in doom (such as strategic TDT considerations that remain live in the meantime) and the imaginary "Good" person you've constructed who feels great at this deal is not a coherent person.