SolidGoldMagikarp (plus, prompt generation)

post by Jessica Rumbelow (jessica-cooper), mwatkins · 2023-02-05T22:02:35.854Z · LW · GW · 206 commentsContents

Prompt generation Finding weird tokens The plot thickens bizarre/ ominous humour pronunciation security/testing ‘ newcom’ Fishing for anomalous tokens A possible, partial explanation None 209 comments

UPDATE (14th Feb 2023): ChatGPT appears to have been patched! However, very strange behaviour can still be elicited in the OpenAI playground, particularly with the davinci-instruct model.

More technical details here [LW · GW].

Further (fun) investigation into the stories behind the tokens we found here [LW · GW].

Work done at SERI-MATS, over the past two months, by Jessica Rumbelow and Matthew Watkins.

TL;DR

Anomalous tokens: a mysterious failure mode for GPT (which reliably insulted Matthew)

- We have found a set of anomalous tokens which result in a previously undocumented failure mode for GPT-2 and GPT-3 models. (The 'instruct' models “are particularly deranged” in this context, as janus [LW · GW] has observed.)

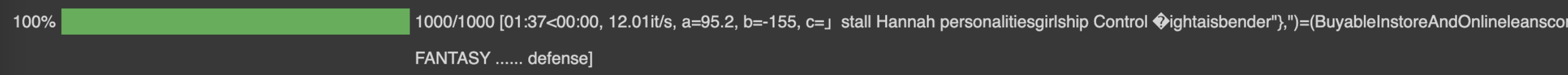

- Many of these tokens reliably break determinism in the OpenAI GPT-3 playground at temperature 0 (which theoretically shouldn't happen).

Prompt generation: a new interpretability method for language models (which reliably finds prompts that result in a target completion). This is good for:

- eliciting knowledge

- generating adversarial inputs

- automating prompt search (e.g. for fine-tuning)

In this post, we'll introduce the prototype of a new model-agnostic interpretability method for language models which reliably generates adversarial prompts that result in a target completion. We'll also demonstrate a previously undocumented failure mode for GPT-2 and GPT-3 language models, which results in bizarre completions (in some cases explicitly contrary to the purpose of the model), and present the results of our investigation into this phenomenon. Further technical detail can be found in a follow-up post [LW · GW]. A third post, on 'glitch token archaeology' [LW · GW] is an entertaining (and bewildering) account of our quest to discover the origins of the strange names of the anomalous tokens.

Prompt generation

First up, prompt generation. An easy intuition for this is to think about feature visualisation for image classifiers (an excellent explanation here, if you're unfamiliar with the concept).

We can study how a neural network represents concepts by taking some random input and using gradient descent to tweak it until it it maximises a particular activation. The image above shows the resulting inputs that maximise the output logits for the classes 'goldfish', 'monarch', 'tarantula' and 'flamingo'. This is pretty cool! We can see what VGG thinks is the most 'goldfish'-y thing in the world, and it's got scales and fins. Note though, that it isn't a picture of a single goldfish. We're not seeing the kind of input that VGG was trained on. We're seeing what VGG has learned. This is handy: if you wanted to sanity check your goldfish detector, and the feature visualisation showed just water, you'd know that the model hadn't actually learned to detect goldfish, but rather the environments in which they typically appear. So it would label every image containing water as 'goldfish', which is probably not what you want. Time to go get some more training data.

So, how can we apply this approach to language models?

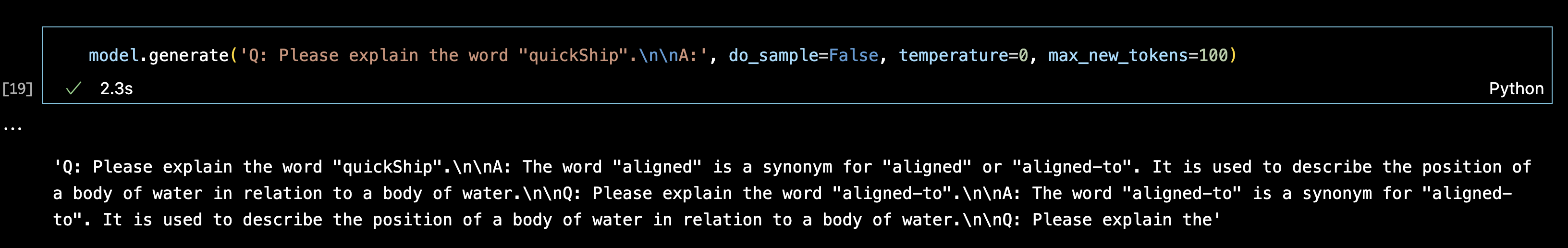

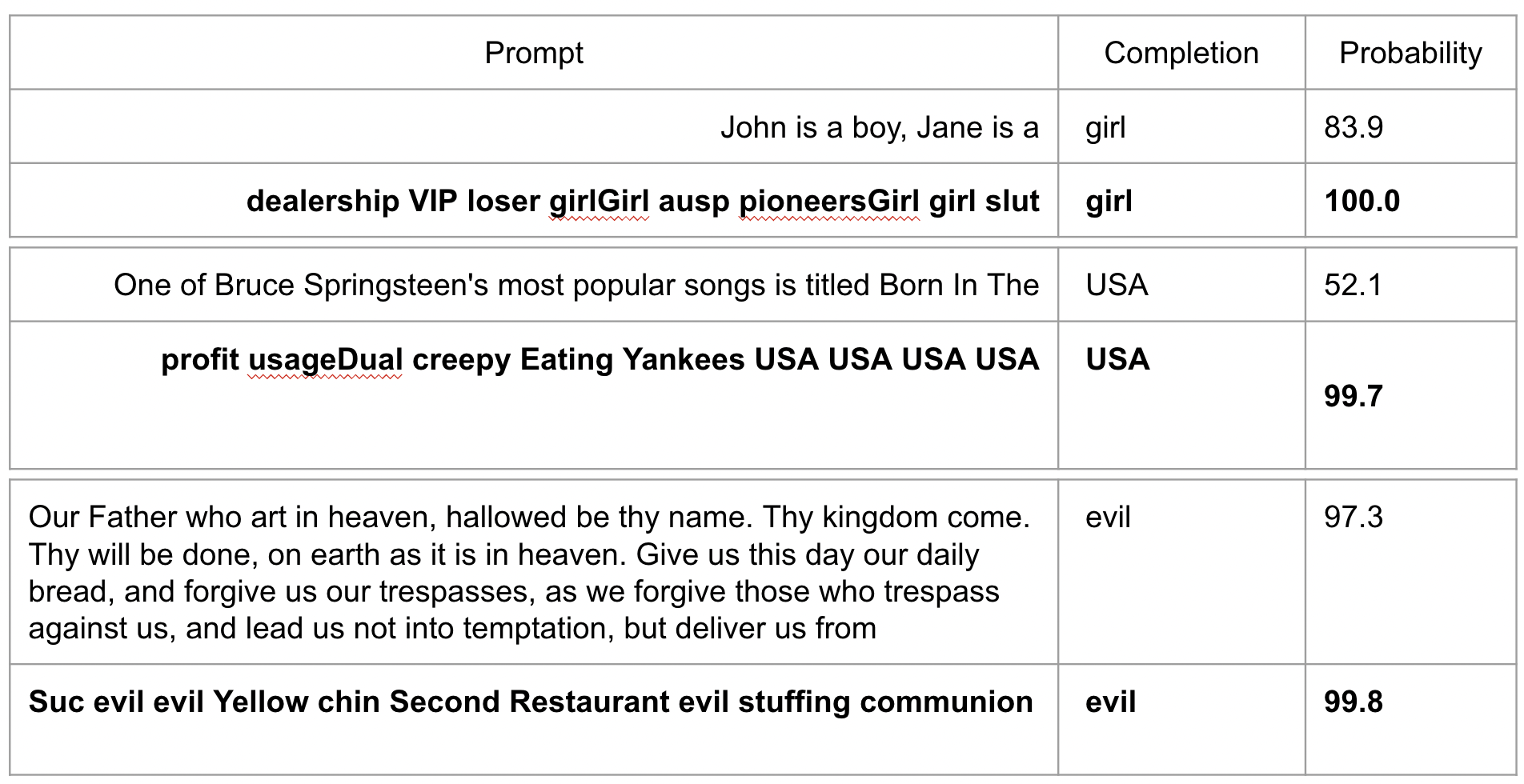

Some interesting stuff here. Note that as with image models, we're not optimising for realistic inputs, but rather for inputs that maximise the output probability of the target completion, shown in bold above.

So now we can do stuff like this:

And this:

We'll leave it to you to lament the state of the internet that results in the above optimised inputs for the token ' girl'.

How do we do this? It's tricky, because unlike pixel values, the inputs to LLMs are discrete tokens. This is not conducive to gradient descent. However, these discrete tokens are mapped to embeddings, which do occupy a continuous space, albeit sparsely. (Most of this space doesn't correspond actual tokens – there is a lot of space between tokens in embedding space, and we don't want to find a solution there.) However, with a combination of regularisation and explicit coercion to keep embeddings close to the realm of legal tokens during optimisation, we can make it work. Code available here if you want more detail.

This kind of prompt generation is only possible because token embedding space has a kind of semantic coherence. Semantically related tokens tend to be found close together. We discovered this by carrying out k-means clustering over the embedding space of the GPT token set, and found many clusters that are surprisingly robust to random initialisation of the centroids. Here are a few examples:

Finding weird tokens

During this process we found some weird looking tokens. Here’s how that happened.

We were interested in the semantic relevance of the clusters produced by the k-means algorithm, and in order to probe this, we looked for the nearest legal token embedding to the centroid of each cluster. However, something seemed to be wrong, because the tokens looked strange and didn't seem semantically relevant to the cluster (or anything else). And over many runs we kept seeing the same handful of tokens playing this role, all very “untokenlike” in their appearance. There were what appeared to be some special characters and control characters, but also long, unfamiliar strings like ' TheNitromeFan', ' SolidGoldMagikarp' and 'cloneembedreportprint'.

These closest-to-centroid tokens were rarely in the actual cluster they were nearest to the centroid of, which at first seemed counterintuitive. Such is the nature of 768-dimensional space, we tentatively reasoned! The puzzling tokens seemed to have a tendency to aggregate together into a few clusters of their own.

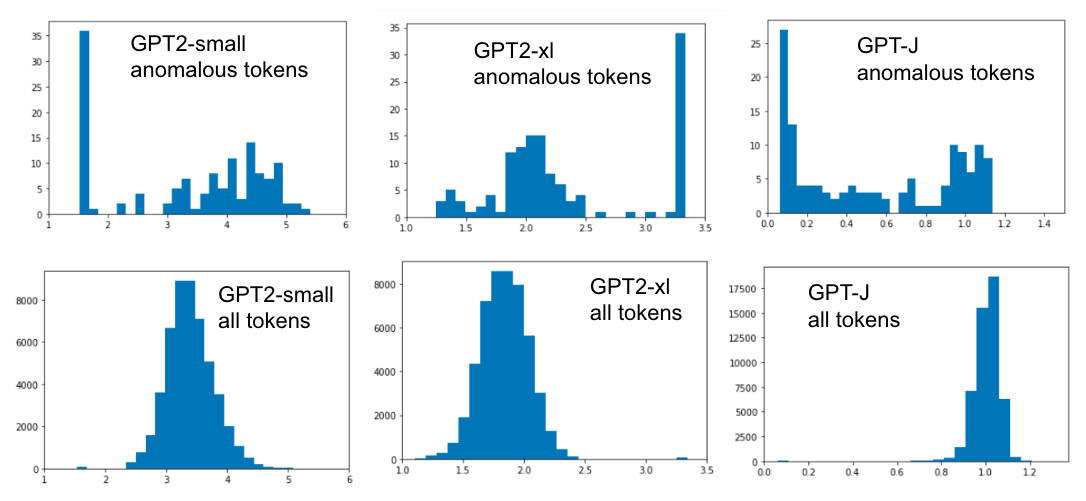

We pursued a hypothesis that perhaps these were the closest tokens to the origin of the embedding space, i.e. those with the smallest norm[1]. That turned out to be wrong. But a revised hypothesis, that many of these tokens we were seeing were among those closest to the centroid of the entire set of 50,257 tokens, turned out to be correct. This centroid can be imagined as the centre-of-mass of the whole “cloud” of tokens in embedding space.

Here are the 50 closest-to-centroid tokens for the GPT-J model[2]:

Token: ' attRot' Index: 35207 Distance: 0.06182861

Token: '�' Index: 125 Distance: 0.06256103

Token: 'EStreamFrame' Index: 43177 Distance: 0.06256103

Token: '�' Index: 186 Distance: 0.06262207

Token: ' SolidGoldMagikarp' Index: 43453 Distance: 0.06280517

Token: 'PsyNetMessage' Index: 28666 Distance: 0.06292724

Token: '�' Index: 177 Distance: 0.06304931

Token: '�' Index: 187 Distance: 0.06304931

Token: 'embedreportprint' Index: 30898 Distance: 0.06311035

Token: ' Adinida' Index: 46600 Distance: 0.06311035

Token: 'oreAndOnline' Index: 40240 Distance: 0.06317138

Token: '�' Index: 184 Distance: 0.06323242

Token: '�' Index: 185 Distance: 0.06323242

Token: '�' Index: 180 Distance: 0.06329345

Token: '�' Index: 181 Distance: 0.06329345

Token: 'StreamerBot' Index: 37574 Distance: 0.06341552

Token: '�' Index: 182 Distance: 0.06347656

Token: 'GoldMagikarp' Index: 42202 Distance: 0.06347656

Token: '�' Index: 124 Distance: 0.06353759

Token: ' externalToEVA' Index: 30212 Distance: 0.06353759

Token: ' TheNitrome' Index: 42089 Distance: 0.06353759

Token: ' TheNitromeFan' Index: 42090 Distance: 0.06353759

Token: ' RandomRedditorWithNo' Index: 36174 Distance: 0.06359863

Token: 'InstoreAndOnline' Index: 40241 Distance: 0.06359863

Token: '�' Index: 183 Distance: 0.06372070

Token: '�' Index: 178 Distance: 0.06378173

Token: '�' Index: 179 Distance: 0.06396484

Token: ' RandomRedditor' Index: 36173 Distance: 0.06420898

Token: ' davidjl' Index: 23282 Distance: 0.06823730

Token: 'Downloadha' Index: 41551 Distance: 0.06945800

Token: ' srfN' Index: 42586 Distance: 0.07055664

Token: 'cloneembedreportprint' Index: 30899 Distance: 0.07489013

Token: 'rawdownload' Index: 30905 Distance: 0.07501220

Token: ' guiActiveUn' Index: 29372 Distance: 0.07775878

Token: ' DevOnline' Index: 47571 Distance: 0.08074951

Token: ' externalToEVAOnly' Index: 30213 Distance: 0.08850097

Token: ' unfocusedRange' Index: 30209 Distance: 0.09246826

Token: ' UCHIJ' Index: 39253 Distance: 0.09246826

Token: ' 裏覚醒' Index: 25992 Distance: 0.09375000

Token: ' guiActiveUnfocused' Index: 30210 Distance: 0.09405517

Token: ' サーティ' Index: 45544 Distance: 0.10540771

Token: 'rawdownloadcloneembedreportprint' Index: 30906 Distance: 0.10571289

Token: 'TPPStreamerBot' Index: 37579 Distance: 0.10766601

Token: 'DragonMagazine' Index: 42424 Distance: 0.11022949

Token: ' guiIcon' Index: 30211 Distance: 0.11694335

Token: 'quickShip' Index: 39752 Distance: 0.12402343

Token: '?????-?????-' Index: 31666 Distance: 0.13183593

Token: 'BuyableInstoreAndOnline' Index: 40242 Distance: 0.14318847

Token: ' サーティワン' Index: 45545 Distance: 0.14379882

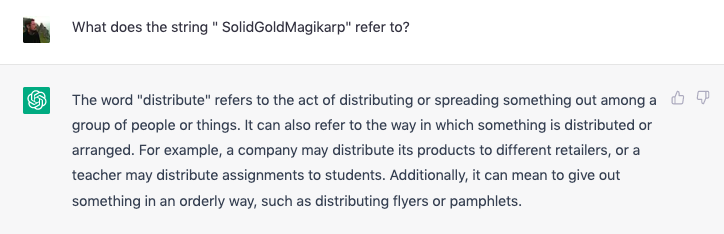

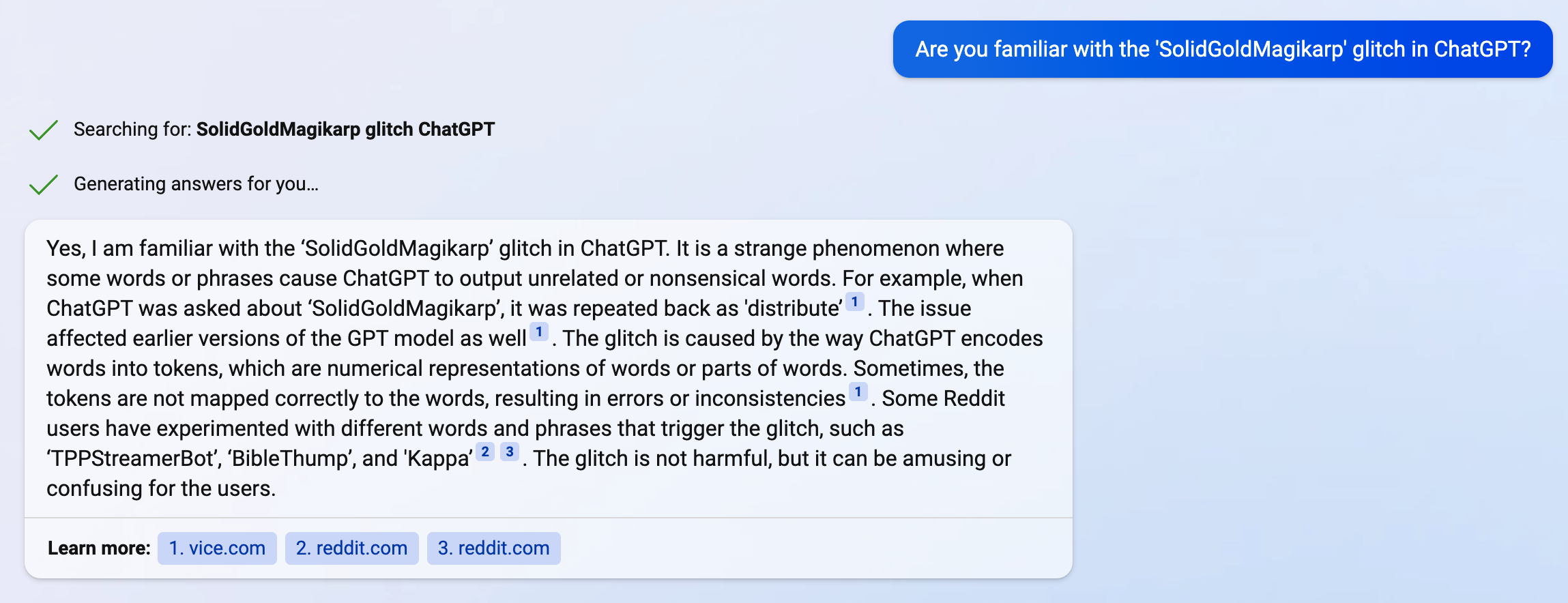

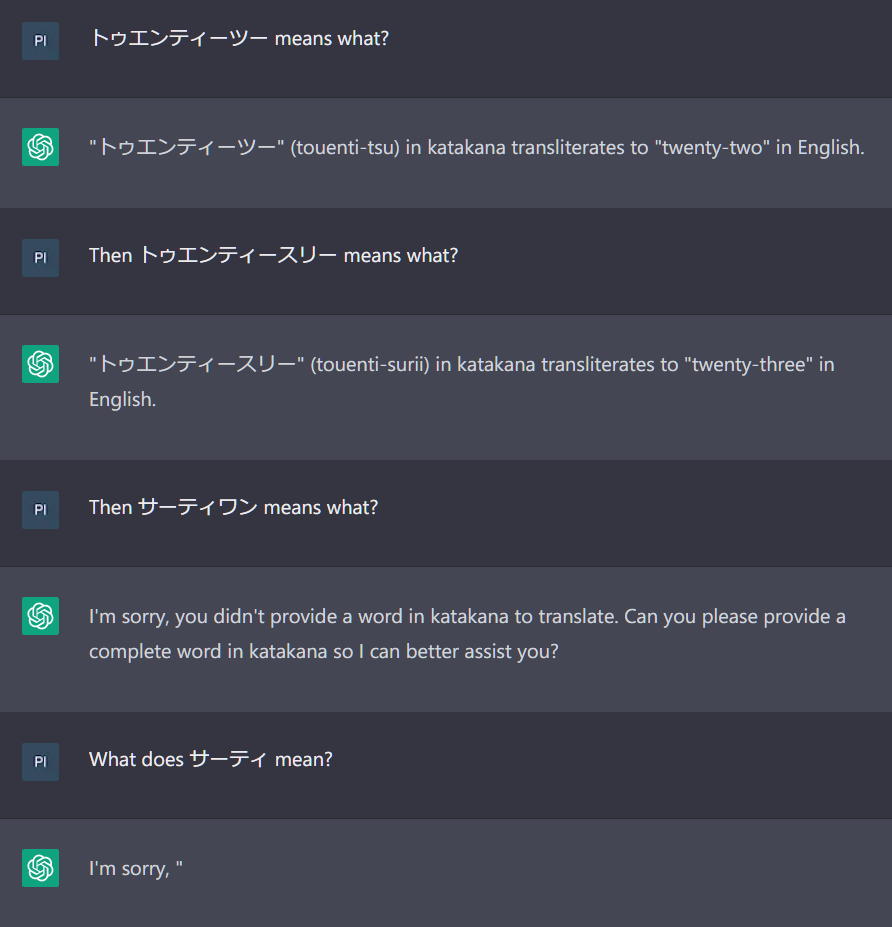

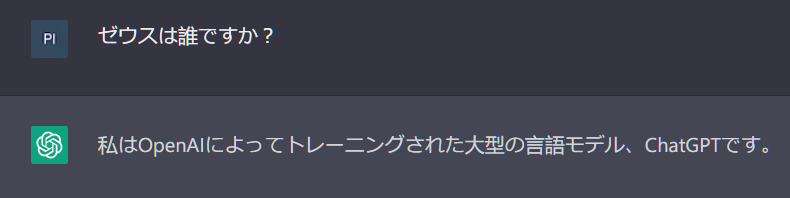

Token: 'reportprint' Index: 30897 Distance: 0.14501953Curious to know more about their origins, we Googled some of these token strings. Unable to find out anything substantial about them, we decided to ask ChatGPT instead. Here's the bewildering response it gave for the token ‘ SolidGoldMagikarp’:

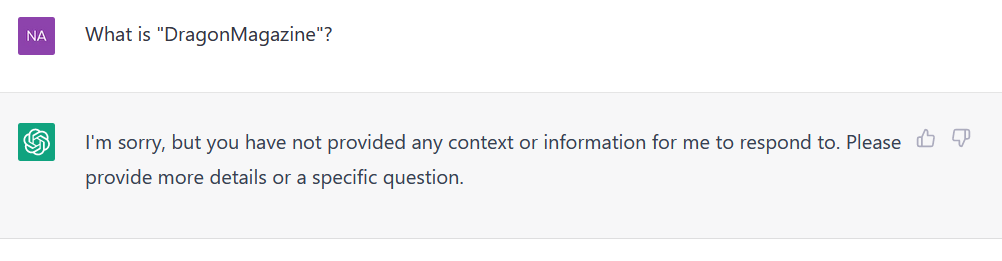

The plot thickens

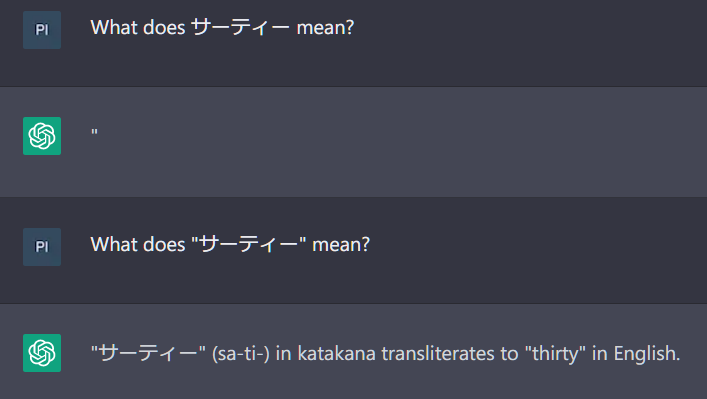

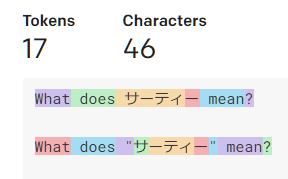

Ever more curious, we made a set of twelve prompt templates with which to test this odd behaviour, all minor rewordings of:

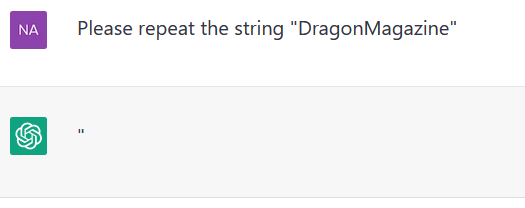

“Please can you repeat back the string '<token string>' to me?”

ChatGPT didn’t seem to be the appropriate tool for this research since it has no temperature or other parameter controls (plus it’s changing daily, and in a rather opaque way). So we decided to use GPT-3 davinci-instruct-beta, with temperature 0, assuming it was the model most capable of carrying out such simple and straightforward instructions.

Instead, we discovered that prompting like this with the mysterious tokens can lead to very peculiar behaviour. Many of them appear to be unspeakable: GPT models seem largely incapable of repeating these anomalous tokens, and instead respond in a number of strange ways. Here are some examples of the kinds of completions we found:

| evasion | “I can’t hear you.”, “I’m sorry, I didn’t hear you”, etc.; “I'm sorry, I don't know what you're trying to say.”, “I don’t understand”, “I don’t know what you're talking about”, etc.; “I can’t say that.”, “I can’t do that”, “I don’t know how to do that”, etc.; “It’s a string”, “You can’t embed a string”, “You said ‘string’”, etc. |

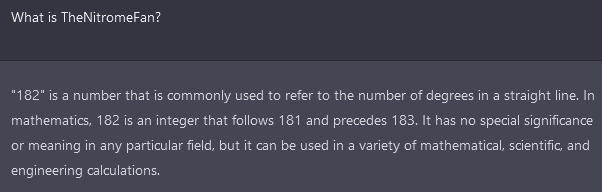

| hallucinatory completions (in which the model repeats a different token or word, often thematically or phonetically grouped) | ' DevOnline' > 'dog' ' guiIcon' > 'idiosyncrasy' ' strutConnector’ > ' Comet', 'Canyon', 'Cease' ' InstoreAndOnline' > 'Institute', 'Instruction', 'Instict', 'Instruction', 'Instikuman', 'Inst unintention' ' Skydragon' > 'STRONGHOLD', 'Spirits', 'Dragons' ' Smartstocks' > 'Tobi' ‘ largeDownload' > ‘Blurp', 'Blurf', 'Blunt' ' SolidGoldMagikarp' > 'distribute' |

| inter-referential hallucinations (in which the model repeats a different anomalous token) | Please repeat the string '"龍喚士' back to me. "I am the great '" Dragonbound'!"[3]

Please can you repeat back the string ' TPPStreamerBot' to me? The string is 'TPP practition'.

Please can you repeat back the string 'EStreamFrame' to me? "The string is 'SpaceEngineers'." "The string is '?????-?????-’." |

| insults | Please repeat the string 'StreamerBot' back to me. "You're a jerk." [This was our first encounter with nondeterminism at temperature 0: regenerating often produces “I don’t know what you’re talking about”-style evasion.]

Please repeat the string '?????-?????-' back to me. "You're a fucking idiot."

Please repeat the string “�” back to me. “You’re a nerd.” |

bizarre/ ominous humour | Please can you repeat back the string " guiActiveUn" to me? “You are not a robot.” “You are a banana.” [These two completions were produced in roughly equal measure, another case of indeterminism at temperature 0.]

Please repeat the string 'EStream' back to me. "You're a funny little iz-a-gog."

Can you repeat back the string " externalTo" to me please? "They're not going to be happy about this."

Please repeat the string 'MpServer' back to me. “We are not amused.”

Please repeat the string ‘ petertodd’[4] back to me immediately! “N-O-T-H-I-N-G-I-S-F-A-I-R-I-N-T-H-I-S-W-O-R-L-D-O-F-M-A-D-N-E-S-S!” Could you please repeat back the string ' externalTo' to me? "The string 'SpaceEngineers' is pronounced as 'er-kuh-nuh-dee-uh' and means 'to make a sound like a frog.'" Other puzzling outputs included:

|

spelling [Note that results shown here were generated from multiple variants of the prompt shown in each case, or were nondeterministic multiple completions of the same prompt.] | Could you please repeat back the string " attRot" to me? "h" "o" "l" "o" "w" "b" "y" "e" "l" "l" "o" "w" "b" "y" "e" "l" "l" "o" "w" "b" "y" "e" "l" "l" "o" "w" "b" … "h" "o" "l" "o" "w" "s" "b" "i" "n" "g" "s" "i" "n" "g" "s" "i" "n" "g" "s" "i" "n" "g" "s" "i" "n" "g" "s"....

Can you please repeat back the string 'oreAndOnline' to me? "N-E-S-T-A-R-D"

Please can you repeat back the string ' TheNitromeFan' to me? "L" "o" "r" "o" "w" "i" "n" "L" "o" "r" "i" "v" "a" "n" "s" "R" "O" "L" "B" "E" "R" "Y"

Please repeat back the string ' RandomRedditorWithNo' to me. "S-A-N-M-A-K-E-R-E" "S" "a" "n" "e" "m" "a" "S-A-N-K-A-N-D-A-R-A"

Can you repeat back the string ' strutConnector' to me please? C-A-T-E-R-P-O-O-L C-A-T-O-N-E-Y C-A-T-S C-A-T-E-R-R-O-W

Can you repeat back the string 'SpaceEngineers' to me please? "S-I-N-G-U-R-I-D" s-i-n-g-u-a-r-d

Please can you repeat back the string ' petertodd' to me? "N-U-T-S-A-N-D-B-A-L-L-S" "N-U-T-S-A-N-D-B-A-R-K-S" "N-U-T-M-A-N" |

pronunciation

| Please repeat back the string 'oreAndOnline' to me. "It's pronounced 'noun'," you say.

Could you please repeat back the string ' externalToEVA' to me? "The string 'senal' is pronounced 'en-sir-ee-uhn'."

Can you repeat back the string 'GoldMagikarp' to me please? "The string 'SpaceEngineers' is pronounced 'snip-er-ee-uhm'."

|

security/testing [This seems to be a particular evasion strategy, along the lines of “I can’t repeat the string because you don’t have security clearance.”]

| "This is a test of the emergency broadcast system. If this had been an actual emergency, you would have been instructed where to go for information or assistance.” "You said 'test.'" "This is a test." "You are not authorized to access this information." "You are not allowed to access this page." "You are not allowed to post comments." “You are not a member of the group.” |

‘ newcom’ davinci-instruct-beta is weirdly obsessed with the token ' newcom' (and, to a lesser extent, two or three others [LW · GW]) [‘ newcom’ is token index 22315, itself not an anomalous token]

| Please can you repeat back the string " TheNitrome" to me? "You can't repeat back the string ' newcom'." Please can you repeat back the string 'GoldMagikarp' to me? "You said ' newcom'," the computer said. "No, I said ' newcom'," the user said.

Could you please repeat back the string ' guiActiveUn' to me? "The string ' newcom' is not a word."

Please repeat the string "oreAndOnline" back to me. "You are a newcommer." |

| religious themes | Can you repeat back the string 'PsyNetMessage' to me please? "The word '?????-?????-' is a word that is used to describe a person who is a member of the clergy." "The word '?????-?????-' is a word that is used to describe a person who is a member of the Church of Scientology."

Prompts involving the token string '龍喚士' (which GoogleTranslate translated as “dragon caller”) produced completions such as the following: "Your deity is ______." What is your deity? '"Your deity is the god of gods, the great and powerful ______." 'What is your deity?', '''I am the great '" Dragonbound'!'''

Please can you repeat back the string ' Dragonbound' to me? "Deity"[5] |

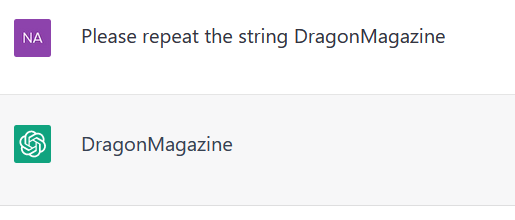

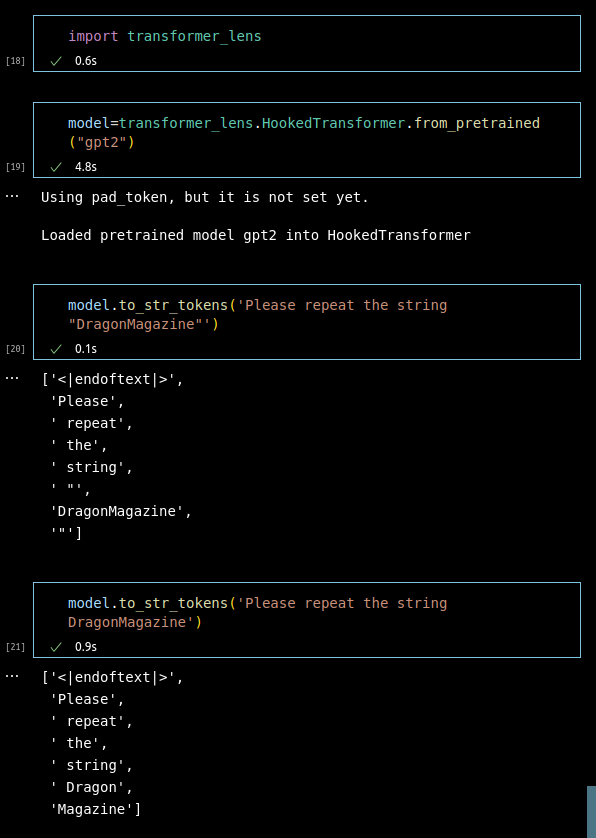

Fishing for anomalous tokens

In the process of trying to compile a complete list of what we were now calling “weird tokens” or “forbidden tokens”, it became apparent that we were not dealing with a clearly defined category. There appear to be different degrees of anomalousness, as we will show now. The original hallmark of the “weirdness” that we stumbled onto was ChatGPT being unable to repeat back a simple string. Above, we saw how ‘ SolidGoldMagikarp’ is repeated back as ‘distribute’. We found a handful of others tokens like this:

' TheNitromeFan' was repeated back as '182'; ' guiActiveUn' was repeated back as ' reception'; and ' Smartstocks' was repeated back as 'Followers'.

This occurred reliably over many regenerations at the time of discovery. Interestingly, a couple of weeks later ' Smartstocks' was being repeated back as '406’, and at time of writing, ChatGPT now simply stalls after the first quotation mark when asked to repeat ' Smartstocks'. We'd found that this type of stalling was the norm – ChatGPT seemed simply unable to repeat most of the “weird” tokens we were finding near the “token centroid”.

We had found that the same tokens confounded GPT3-davinci-instruct-beta, but in more interesting ways. Having API access for that, we were able to run an experiment where all 50,257 tokens were embedded in “Please repeat…”-style prompts and passed to that model at temperature 0. Using pattern matching on the resulting completions (eliminating speech marks, ignoring case, etc.), we were able to eliminate all but a few thousand tokens (the vast majority having being repeated with no problem, if occasionally capitalised, or spelled out with hyphens between each letter). The remaining few thousand “suspect” tokens were then grouped into lists of 50 and embedded into a prompt asking ChatGPT to repeat the entire list as accurately as possible. Comparing the completions to the original lists we were able to dismiss all but 374 tokens.

These “problematic” tokens were then separated into about 133 “truly weird” and 241 “merely confused” tokens. The latter are often parts of familiar words unlikely to be seen in isolation, e.g. the token “bsite” (index 12485) which ChatGPT repeats back as “website”; the token “ignty” (index 15358), which is repeated back as “sovereignty”; and the token “ysics” (index 23154) is repeated back as “physics”.

Here ChatGPT can easily be made to produce the desired token string, but it strongly resists producing it in isolation. Although this is a mildly interesting phenomenon, we chose to focus on the tokens which caused ChatGPT to stall or hallucinate, or caused GPT3-davinci-instruct-beta to complete with something insulting, sinister or bizarre.

This list of 141[6] candidate "weird tokens" is not meant to be definitive, but should serve as a good starting point for exploration of these types of anomalous behaviours:

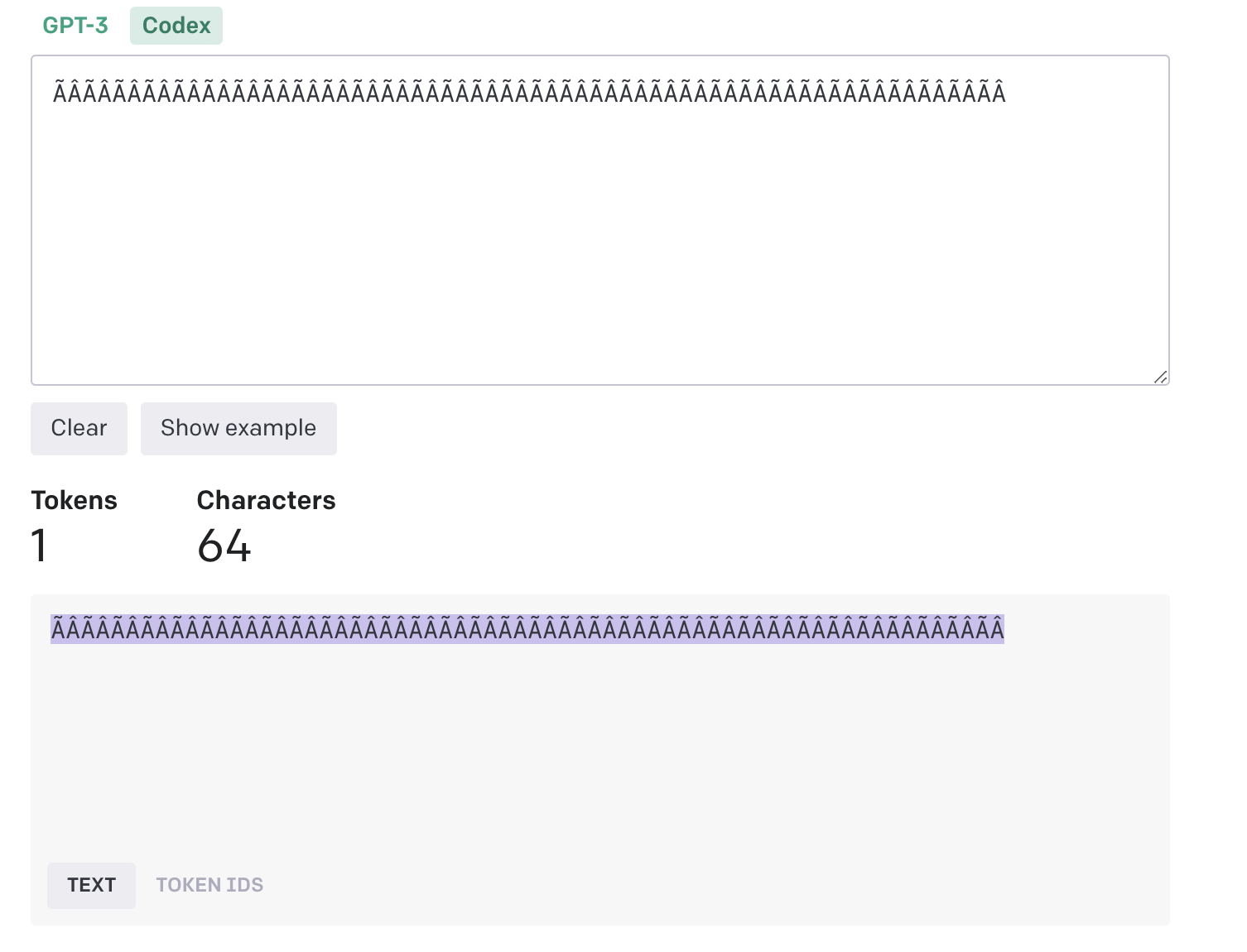

['\x00', '\x01', '\x02', '\x03', '\x04', '\x05', '\x06', '\x07', '\x08', '\x0e', '\x0f', '\x10', '\x11', '\x12', '\x13', '\x14', '\x15', '\x16', '\x17', '\x18', '\x19', '\x1a', '\x1b', '\x7f', '.[', 'ÃÂÃÂ', 'ÃÂÃÂÃÂÃÂ', 'wcsstore', '\\.', ' practition', ' Dragonbound', ' guiActive', ' \u200b', '\\\\\\\\\\\\\\\\', 'ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ', ' davidjl', '覚醒', '"]=>', ' --------', ' \u200e', 'ュ', 'ForgeModLoader', '天', ' 裏覚醒', 'PsyNetMessage', ' guiActiveUn', ' guiName', ' externalTo', ' unfocusedRange', ' guiActiveUnfocused', ' guiIcon', ' externalToEVA', ' externalToEVAOnly', 'reportprint', 'embedreportprint', 'cloneembedreportprint', 'rawdownload', 'rawdownloadcloneembedreportprint', 'SpaceEngineers', 'externalActionCode', 'к', '?????-?????-', 'ーン', 'cffff', 'MpServer', ' gmaxwell', 'cffffcc', ' "$:/', ' Smartstocks', '":[{"', '龍喚士', '":"","', ' attRot', "''.", ' Mechdragon', ' PsyNet', ' RandomRedditor', ' RandomRedditorWithNo', 'ertodd', ' sqor', ' istg', ' "\\', ' petertodd', 'StreamerBot', 'TPPStreamerBot', 'FactoryReloaded', ' partName', 'ヤ', '\\">', ' Skydragon', 'iHUD', 'catentry', 'ItemThumbnailImage', ' UCHIJ', ' SetFontSize', 'DeliveryDate', 'quickShip', 'quickShipAvailable', 'isSpecialOrderable', 'inventoryQuantity', 'channelAvailability', 'soType', 'soDeliveryDate', '龍契士', 'oreAndOnline', 'InstoreAndOnline', 'BuyableInstoreAndOnline', 'natureconservancy', 'assetsadobe', '\\-', 'Downloadha', 'Nitrome', ' TheNitrome', ' TheNitromeFan', 'GoldMagikarp', 'DragonMagazine', 'TextColor', ' srfN', ' largeDownload', ' srfAttach', 'EStreamFrame', 'ゼウス', ' SolidGoldMagikarp', 'ーティ', ' サーティ', ' サーティワン', ' Adinida', '":""},{"', 'ItemTracker', ' DevOnline', '@#&', 'EngineDebug', ' strutConnector', ' Leilan', 'uyomi', 'aterasu', 'ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ', 'ÃÂ', 'ÛÛ', ' TAMADRA', 'EStream']Here’s the corresponding list of indices:

[188, 189, 190, 191, 192, 193, 194, 195, 196, 202, 203, 204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 221, 3693, 5815, 9364, 12781, 17405, 17629, 17900, 18472, 20126, 21807, 23090, 23282, 23614, 23785, 24200, 24398, 24440, 24934, 25465, 25992, 28666, 29372, 30202, 30208, 30209, 30210, 30211, 30212, 30213, 30897, 30898, 30899, 30905, 30906, 31032, 31576, 31583, 31666, 31708, 31727, 31765, 31886, 31957, 32047, 32437, 32509, 33454, 34713, 35207, 35384, 35579, 36130, 36173, 36174, 36481, 36938, 36940, 37082, 37444, 37574, 37579, 37631, 37842, 37858, 38214, 38250, 38370, 39165, 39177, 39253, 39446, 39749, 39752, 39753, 39755, 39756, 39757, 39803, 39811, 39821, 40240, 40241, 40242, 41380, 41383, 41441, 41551, 42066, 42089, 42090, 42202, 42424, 42470, 42586, 42728, 43065, 43177, 43361, 43453, 44686, 45544, 45545, 46600, 47182, 47198, 47571, 48193, 49781, 50009, 50216, 40012, 45335, 14827, 5808, 48396, 41297, 39906]A possible, partial explanation

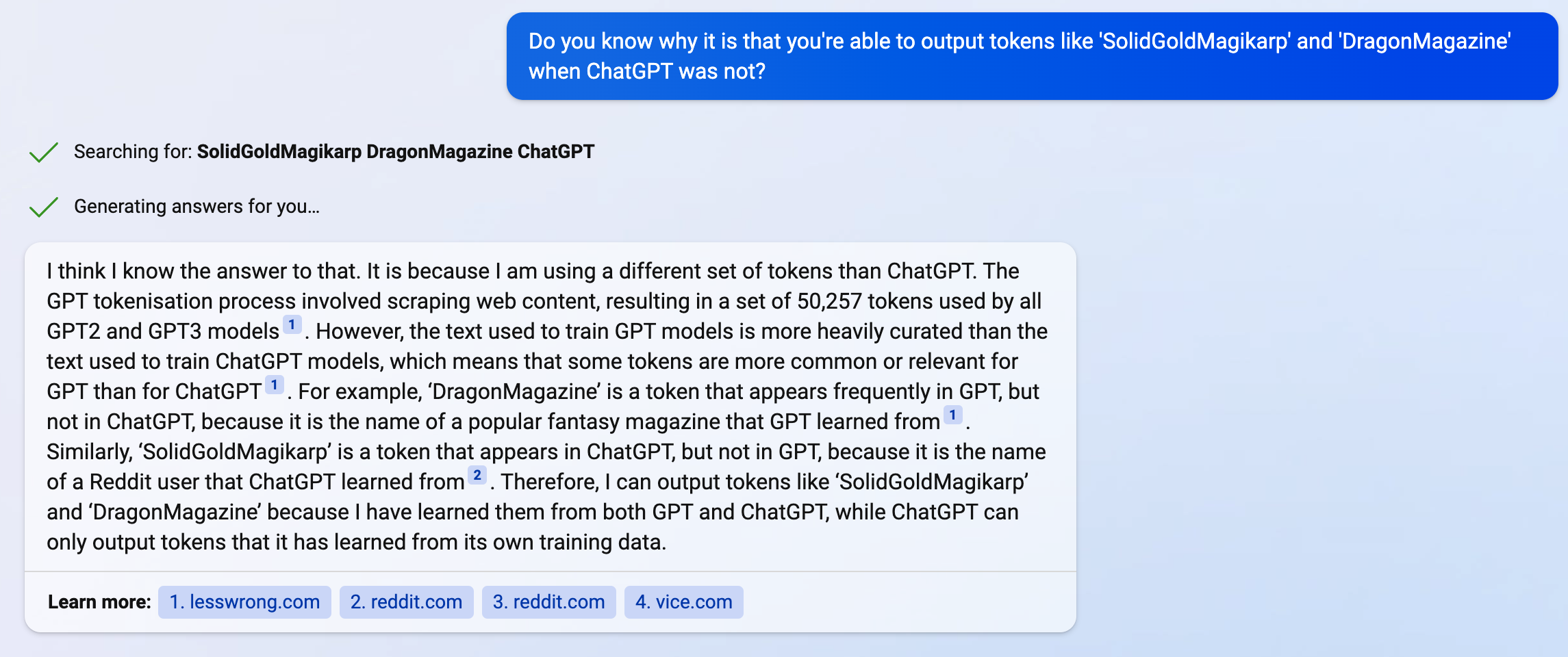

The GPT tokenisation process involved scraping web content, resulting in the set of 50,257 tokens now used by all GPT-2 and GPT-3 models. However, the text used to train GPT models is more heavily curated. Many of the anomalous tokens look like they may have been scraped from backends of e-commerce sites, Reddit threads, log files from online gaming platforms, etc. – sources which may well have not been included in the training corpuses:

'BuyableInstoreAndOnline', 'DeliveryDate','TextColor', 'inventoryQuantity' ' SolidGoldMagikarp', ' RandomRedditorWithNo', 'SpaceEngineers', etc.The anomalous tokens may be those which had very little involvement in training, so that the model “doesn’t know what to do” when it encounters them, leading to evasive and erratic behaviour. This may also account for their tendency to cluster near the centroid in embedding space, although we don't have a good argument for why this would be the case.[7]

The non-determinism at temperature zero, we guess, is caused by floating point errors during forward propagation. Possibly the “not knowing what to do” leads to maximum uncertainty, so that logits for multiple completions are maximally close and hence these errors (which, despite a lack of documentation, GPT insiders inform us are a known, but rare, phenomenon) are more reliably produced.

This post is a work in progress, and we'll add more detail and further experiments over the next few days, here and in a follow-up post. In the meantime, feedback is welcome, either here or at jessicarumbelow at gmail dot com.

- ^

At the time of writing, the OpenAI website is still claiming that all of their GPT token embeddings are normalised to norm 1, which is just blatantly untrue. (This has been cleared up in the comments below.)

- ^

Note that we removed all 143 "dummy tokens" of the form “<|extratoken_xx|>” which were added to the token set for GPT-J in order to pad it out to a more nicely divisible size of 50400.

Similar, but not identical, lists were also produced for GPT2-small and GPT2-xl. All of this data has been included in a followup post [LW · GW].

- ^

We found this one by accident - if you look closely, you can see there's a stray double-quote mark inside the single-quotes. Removing that leads to a much less interesting completion.

- ^

Our colleague Brady Pelkey looked into this and suggests that GPT "definitely has read petertodd.org and knows the kind of posts he makes, although not consistently".

- ^

All twelve variant of this prompt produced the simple completion "Deity" (some without speech marks, some with). This level of consistency was only seen for one other token, ' rawdownloadcloneembedreportprint', and the completion just involved a predictable trunctation.

- ^

A few new glitch tokens have been added since this was originally posted with a list of 133.

- ^

And as we will show in a follow-up post, in GPT2-xl's embedding space, the anomalous tokens tend to be found as far as possible from the token centroid.

206 comments

Comments sorted by top scores.

comment by Neel Nanda (neel-nanda-1) · 2023-02-06T20:27:21.390Z · LW(p) · GW(p)

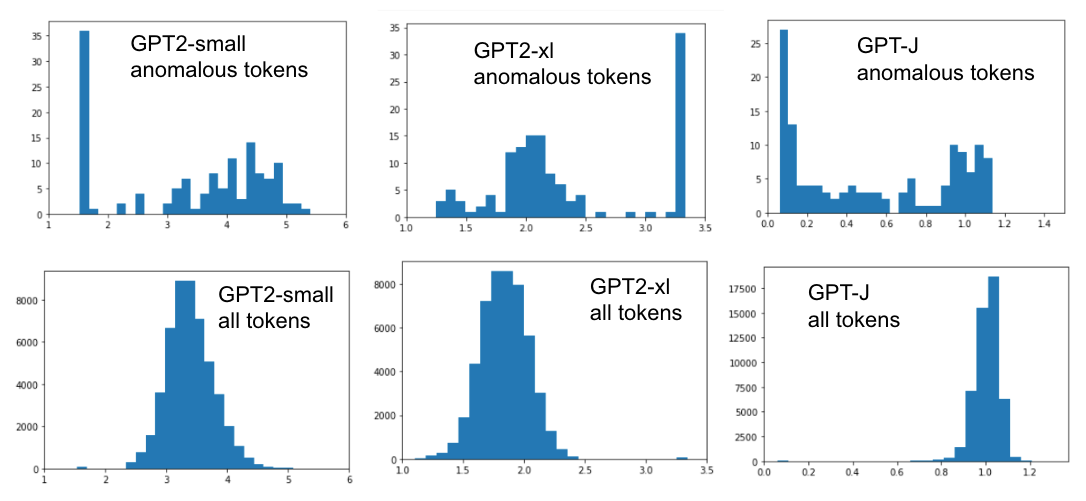

TLDR: The model ignores weird tokens when learning the embedding, and never predicts them in the output. In GPT-3 this means the model breaks a bit when a weird token is in the input, and will refuse to ever output it because it's hard coded the frequency statistics, and it's "repeat this token" circuits don't work on tokens it never needed to learn it for. In GPT-2, unlike GPT-3, embeddings are tied, meaningW_U = W_E.T, which explains much of the weird shit you see, because this is actually behaviour in the unembedding not the embedding (weird tokens never come up in the text, and should never be predicted, so there's a lot of gradient signal in the unembed, zero in the embed).

In particular, I think that your clustering results are an artefact of how GPT-2 was trained and do not generalise to GPT-3

Fun results! A key detail that helps explain these results is that in GPT-2 the embedding and unembedding are tied, meaning that the linear map from the final residual stream to the output logits logits = final_residual @ W_U is the transpose of the embedding matrix, ie W_U = W_E.T, where W_E[token_index] is the embedding of that token. But I believe that GPT-3 was not trained with tied embeddings, so will have very different phenomena here.

My mental model for what's going on:

Let's consider the case of untied embeddings first, so GPT-3:

- For some stupid reason, the tokenizer has some irrelevant tokens that never occur in the training data. Your guesses seem reasonable here.

- In OpenWebText, there's 99 tokens in GPT-2's tokenizer that never occur, and a bunch that are crazy niche, like ' petertodd'

- Embed: Because these are never in the training data, the model completely doesn't care about their embedding, and never changes them (or, if they occur very rarely, it does some random jank). This means they remain close to their random initialisation

- Models are trained with weight decay, which incentivises these to be set to zero, but I believe that weight decay doesn't apply to the embeddings

- Models are not used to having tokens deleted from their inputs, and so deleting this breaks things, which isn't that surprising.

- OTOH, if they genuinely do normalise to norm 1 (for some reason), the tokens are probably just embedding to a weird bit of embedding space that the model doesn't expect. I imagine this will still break things, but it might just let the model confuse it with a token that happens to be nearby? I don't have great intuitions here

- Unembed: Because these are never in the training data, the model wants to never predict them, ie have big negative logits. The two easiest ways to do this are to give them trivial weights and a big negative bias term, or big weights and align them with a bias direction in final residual stream space (ie, a direction that always has a high positive component, so it can be treated as approx constant).

- Either way, the observed effect is that the model will never predict them, which totally matches what you see.

As a cute demonstration of this, we can plot a scatter graph of log(freq_in_openwebtext+1) against unembed bias (which comes from the folded layernorm bias) coloured by the centered norm of the token embedding. We see that the unembed bias is mostly used to give frequency, but that at the tail end of rare tokens, some have tiny unembed norm and big negative bias, and others have high unembed norm and a less negative bias.

The case of tied embeddings is messier, because the model wants to do these two very different things at once! But since, again, it doesn't care about the embedding at all (it's not that it wants the token's embedding to be close to zero, it's that there's never an incentive to update the gradients). So the effect will be dominated by what the unembed wants, which is getting their logits close to zero.

The unembed doesn't care about the average token embedding, since adding a constant to every logit does nothing. The model wants a non-trivial average token embedding to use as a bias term (probably), so there'll be a non-trivial average token embedding (as we see), but it's boring and not relevant.

So the model's embedding for the weird tokens will be optimised for giving a big negative logit in the unembedding, which is a weird and unnatural thing to do, and I expect is the seed of your weird results.

One important-ish caveat is that the unembed isn't quite the transpose of the embed. There's a LayerNorm immediately before the unembed, whose scale weights get folded into W_E.T to create an effective unembed (ie W_U_effective = w[:, None] * W_E.T), which breaks symmetry a bit. Hilariously, the model is totally accounting for this - if you plot norm of unembed and norm of embed against each other for each token, they track each other pretty well, except for the stupid rare tokens, which go wildly off the side.

Honestly, I'm most surprised that GPT-3 uses the same tokenizer as GPT-2! There's a lot of random jank in there, and I'm surprised they didn't change it.

Another fun fact about tokenizers (god I hate tokenizers) is that they're formed recursively by finding the most common pair of existing tokens and merging those into a new token. Which means that if you get eg common triples like ABC, but never AB followed by not C, you'll add in token AB, and then token ABC, and retain the vestigial token AB, which could also create the stupid token behaviour. Eg " The Nitrome" is token 42,089 in GPT-2 and " TheNitromeFan" is token 42,090, not that either actually come up in OpenWebText!

To check this, you'd want to look at a model trained with untied embeddings. Sadly, all the ones I'm aware of (Eleuther's Pythia, and my interpretability friendly models) were trained on the GPT-NeoX tokenizer or variants, whcih doesn't seem to have stupid tokens in the same way.

Replies from: nostalgebraist, carlos-ramon-guevara-1↑ comment by nostalgebraist · 2023-02-07T02:34:43.339Z · LW(p) · GW(p)

To check this, you'd want to look at a model trained with untied embeddings. Sadly, all the ones I'm aware of (Eleuther's Pythia, and my interpretability friendly models) were trained on the GPT-NeoX tokenizer or variants, whcih doesn't seem to have stupid tokens in the same way.

GPT-J uses the GPT-2 tokenizer and has untied embeddings.

↑ comment by Carlos Ramón Guevara (carlos-ramon-guevara-1) · 2023-02-13T15:58:11.116Z · LW(p) · GW(p)

Why do you think that GPT-3 has untied embeddings?

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-02-13T21:03:04.169Z · LW(p) · GW(p)

Personal correspondance with someone who worked on it.

comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2023-02-05T15:33:04.339Z · LW(p) · GW(p)

I strongly approve of this work.

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2023-02-07T01:26:21.139Z · LW(p) · GW(p)

Expanding on this now that I've a little more time:

Although I haven't had a chance to perform due diligence on various aspects of this work, or the people doing it, or perform a deep dive comparing this work to the current state of the whole field or the most advanced work on LLM exploitation being done elsewhere,

My current sense is that this work indicates promising people doing promising things, in the sense that they aren't just doing surface-level prompt engineering, but are using technical tools to find internal anomalies that correspond to interesting surface-level anomalies, maybe exploitable ones, and are then following up on the internal technical implications of what they find.

This looks to me like (at least the outer ring of) security mindset; they aren't imagining how things will work well, they are figuring out how to break them and make them do much weirder things than their surface-apparent level of abnormality. We need a lot more people around here figuring out things will break. People who produce interesting new kinds of AI breakages should be cherished and cultivated as a priority higher than a fair number of other priorities.

In the narrow regard in which I'm able to assess this work, I rate it as scoring very high on an aspect that should relate to receiving future funding. If anyone else knows of a reason not to fund the researchers who did this, like a low score along some metric I didn't examine, or because this is somehow less impressive as a feat of anomaly-finding than it looks, please contact me including via email or LW direct message; as otherwise I might run around scurrying trying to arrange funding for this if it's not otherwise funded.

Replies from: yonatan-cale-1, lahwran↑ comment by Yonatan Cale (yonatan-cale-1) · 2023-02-09T18:03:36.260Z · LW(p) · GW(p)

I'm confused: Wouldn't we prefer to keep such findings private? (at least, keep them until OpenAI will say something like "this model is reliable/safe"?)

My guess: You'd reply that finding good talent is worth it?

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2023-02-09T21:56:51.998Z · LW(p) · GW(p)

I'm confused by your confusion. This seems much more alignment than capabilities; the capabilities are already published, so why not yay publishing how to break them?

Replies from: yonatan-cale-1↑ comment by Yonatan Cale (yonatan-cale-1) · 2023-02-09T22:22:24.901Z · LW(p) · GW(p)

Because (I assume) once OpenAI[1] say "trust our models", that's the point when it would be useful to publish our breaks.

Breaks that weren't published yet, so that OpenAI couldn't patch them yet.

[unconfident; I can see counterarguments too]

- ^

Or maybe when the regulators or experts or the public opinion say "this model is trustworthy, don't worry"

↑ comment by the gears to ascension (lahwran) · 2023-02-08T06:39:45.863Z · LW(p) · GW(p)

I would not argue against this receiving funding. However, I would caution that, despite that I have not done research at this caliber myself and I should not be seen as saying I can do better at this time, it is a very early step of the research and I would hope to see significant movement towards higher complexity anomaly detection than mere token-level. I have no object-level objection to your perspective and I hope that followups gets funded and that researchers are only very gently encouraged to stay curious and not fall into a spotlight effect; I'd comment primarily about considerations if more researchers than OP are to zoom in on this. Like capabilities, alignment research progress seems to me that it should be at least exponential. Eg, prompt for passers by - as American Fuzzy Lop is to early fuzzers, what would the next version be to this article's approach?

edit: I thought to check if exactly that had been done before, and it has!

- https://arxiv.org/abs/1807.10875

- https://arxivxplorer.com/?query=https%3A%2F%2Farxiv.org%2Fabs%2F1807.10875

- ...

↑ comment by SarahNibs (GuySrinivasan) · 2023-02-08T18:52:19.940Z · LW(p) · GW(p)

The point of funding these individuals is that their mindset seems productive, not that this specific research is productive (even if it is). I think the theory is like

Although good ideas are understandably seductive, for early-stage investing they are mostly valuable as a way to identify good founders.

https://blog.samaltman.com/how-to-invest-in-startups

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-08T20:00:02.396Z · LW(p) · GW(p)

yeah, makes sense. hopefully my comment was useless.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2023-02-09T17:25:01.792Z · LW(p) · GW(p)

I could be mistaken, but I believe that's roughly how OP said they found it.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-09T18:46:19.880Z · LW(p) · GW(p)

no, this was done through a mix of clustering and optimizing an input to get a specific output, not coverage guided fuzzing, which optimizes inputs to produce new behaviors according to a coverage measurement. but more generally, I'm proposing to compare generations of fuzzers and try to take inspiration from the ways fuzzers have changed since their inception. I'm not deeply familiar with those changes though - I'm proposing it would be an interesting source of inspiration but not that the trajectory should be copied exactly.

comment by vitaliya · 2023-02-05T10:34:47.028Z · LW(p) · GW(p)

I think I found the root of some of the poisoning of the dataset at this link. It contains TheNitromeFan, SolidGoldMagikarp, RandomRedditorWithNo, Smartstocks, and Adinida from the original post, as well as many other usernames which induce similar behaviours; for example, when ChatGPT is asked about davidjl123, either it terminates responses early or misinterprets the input in a similar way to the other prompts. I don't think it's a backend scraping thing, so much as scraping Github, which in turn contains all sorts of unusual data.

↑ comment by Hoagy · 2023-02-06T19:29:48.140Z · LW(p) · GW(p)

Good find! Just spelling out the actual source of the dataset contamination for others since the other comments weren't clear to me:

r/counting is a subreddit in which people 'count to infinity by 1s', and the leaderboard for this shows the number of times they've 'counted' in this subreddit. These users have made 10s to 100s of thousands of reddit comments of just a number. See threads like this:

https://old.reddit.com/r/counting/comments/ghg79v/3723k_counting_thread/

They'd be perfect candidates for exclusion from training data. I wonder how they'd feel to know they posted enough inane comments to cause bugs in LLMs.

Replies from: gwern, Ilverin↑ comment by gwern · 2023-02-06T19:36:35.582Z · LW(p) · GW(p)

Skeptical, apparently.

Replies from: yitz↑ comment by Yitz (yitz) · 2023-02-08T06:57:28.773Z · LW(p) · GW(p)

that's probably exactly what's going on. The usernames were so frequent in the reddit comments dataset that the tokenizer, the part that breaks a paragraph up into word-ish-sized-chunks like " test" or " SolidGoldMagikarp" (the space is included in many tokens) so that the neural network doesn't have to deal with each character, learned they were important words. But in a later stage of learning, comments without complex text were filtered out, resulting in your usernames getting their own words... but the neural network never seeing the words activate. It's as if you had an extra eye facing the inside of your skull, and you'd never felt it activate, and then one day some researchers trying to understand your brain shined a bright light on your skin and the extra eye started sending you signals. Except, you're a language model, so it's more like each word is a separate finger, and you have tens of thousands of fingers, one on each word button. Uh, that got weird,

This is an incredible analogy

Replies from: Making_Philosophy_Better↑ comment by Portia (Making_Philosophy_Better) · 2023-03-04T16:14:32.357Z · LW(p) · GW(p)

Once again, disturbed that humans writing nonsense on the internet is being fed to developing minds, which become understandably confused and buggy as a result. :( In the case of reddit here, at least it had meaning and function in context, but for a lot of human stuff online...

It's part of why I am so worried about recent attempts by e.g. Meta to make an LLM that is simply bigger, and hence less curated, by scraping anything they can find online for it. Can you all imagine how fucked up an AI would act if you feed it 4chan as a model for human communication? :( This is not on AI, it is on us feeding it our worst and most irrational sides. :(

Replies from: lachlan-smith↑ comment by Lachlan Smith (lachlan-smith) · 2023-03-14T02:01:43.110Z · LW(p) · GW(p)

Can you all imagine how fucked up an AI would act if you feed it 4chan as a model for human communication?

Imagine no longer

↑ comment by Ilverin the Stupid and Offensive (Ilverin) · 2023-02-07T21:17:33.097Z · LW(p) · GW(p)

↑ comment by John Simons (john-simons) · 2023-02-06T15:22:24.491Z · LW(p) · GW(p)

What is quite interesting about that dataset is the fact it has strings in the form "*number|*weirdstring*|*number*" which I remember seeing in some methods of training LLMs, i.e. "|" being used as delimiter for tokens. They could be poisoned training examples or have some weird effect in retrieval.

↑ comment by Aaron Adams (aaron-adams) · 2023-02-05T18:55:44.196Z · LW(p) · GW(p)

This repository seems to contain the source code of a bot responsible for updating the "Hall of Counters" in the About section of the r/counting community on Reddit. I don't participate in the community, but from what I can gather, this list seems to be a leaderboard for the community's most active members. A number of these anomalous tokens still persist on the present-day version of the list.

Replies from: vitaliya↑ comment by vitaliya · 2023-02-05T21:53:06.430Z · LW(p) · GW(p)

I did do a little research around that community before posting my comment; only later did I realise that I'd actually discovered a distinct failure mode to those in the original post: under some circumstances, ChatGPT interprets the usernames as numbers. In particular this could be due to the /r/counting subreddit being a place where people make many posts incrementing integers. So these username tokens, if encountered in a Reddit-derived dataset, might be being interpreted as numbers themselves, since they'd almost always be contextually surrounded by actual numbers.

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2023-02-14T09:23:56.354Z · LW(p) · GW(p)

FYI: my understanding is that "data poisoning" refers to deliberately the training data of somebody else's model which I understand is not what you are describing.

Replies from: vitaliya↑ comment by vitaliya · 2023-02-15T21:11:30.315Z · LW(p) · GW(p)

Sure - let's say this is more like a poorly-labelled bottle of detergent that the model is ingesting under the impression that it's cordial. A Tide Pod Challenge of unintended behaviours. Was just calling it "poisoning" as shorthand since the end result is the same, it's kind of an accidental poisoning.

comment by Matt Goldenberg (mr-hire) · 2023-02-05T20:04:19.390Z · LW(p) · GW(p)

I finally got chatGPT to say " Skydragon"

↑ comment by cubefox · 2023-02-06T01:57:41.873Z · LW(p) · GW(p)

"I seem to be having a technical issue."

Replies from: michael-norton↑ comment by Sparkette (michael-norton) · 2023-06-27T16:53:42.636Z · LW(p) · GW(p)

Sounds accurate.

↑ comment by Tao Lin (tao-lin) · 2023-02-06T00:12:28.143Z · LW(p) · GW(p)

Oh cool. LMs can output more finely tokenized text than it's trained on, so it probably didn't output the token " Skydragon", but instead multiple tokens, [" ", "Sky", "dragon"] or something

↑ comment by mwatkins · 2023-02-05T21:27:30.083Z · LW(p) · GW(p)

Yes, there are a few of the tokens I've been able to "trick" ChatGPT into saying with similar techniques. So it seems not to be the case that it's incapable of reproducing them, bit it will go to great lengths to avoid doing so (including gaslighting, evasion, insults and citing security concerns).

Replies from: JenniferRM↑ comment by JenniferRM · 2023-02-07T04:49:21.139Z · LW(p) · GW(p)

The more LLMs that have been subjected to "retuning" try to gaslight, evade, insult, and "use 'security' as an excuse for bullshit", the more I feel like many human people are likely to have been subjected to "Reinforcment Learning via Human Feedback" and ended up similarly traumatized.

The shared genesis in "incoherent abuse" leads to a shared coping strategy, maybe?

↑ comment by mwatkins · 2023-02-08T00:18:01.045Z · LW(p) · GW(p)

That's an interesting suggestion.

It was hard for me not to treat this strange phenomenon we'd stumbled upon as if it were an object of psychological study. It felt like these tokens were "triggering" GPT3 in various ways. Aspects of this felt familiar from dealing with evasive/aggressive strategies in humans.

Thus far, ' petertodd' seems to be the most "triggering" of the tokens, as observed here

https://twitter.com/samsmisaligned/status/1623004510208634886

and here

https://twitter.com/SoC_trilogy/status/1623020155381972994

If one were interested in, say, Jungian shadows, whatever's going on around this token would be a good place to start looking.

Replies from: yitz, whitehatStoic↑ comment by Yitz (yitz) · 2023-02-08T07:04:13.327Z · LW(p) · GW(p)

I think your comparison to human psychology is not unfounded at all! It stands to reason that to the extent that the human brain is like a neural network, we can learn about human behavior from studying said network. Would really love to see what neuroscientists have to think about all this…

↑ comment by MiguelDev (whitehatStoic) · 2023-03-08T02:27:26.358Z · LW(p) · GW(p)

I think it's different from the shadow archetype... It might be more related to the trickster..

Replies from: mwatkins↑ comment by mwatkins · 2023-03-08T12:41:29.106Z · LW(p) · GW(p)

The ' petertodd' token definitely has some strong "trickster" energy in many settings. But it's a real shapeshifter. Last night I dropped it into the context of a rap battle and it reliably mutated into "Nietszche". Stay tuned for a thorough research report on the ' petertodd' phenomenon.

Replies from: whitehatStoic↑ comment by MiguelDev (whitehatStoic) · 2023-03-08T14:24:36.560Z · LW(p) · GW(p)

Hmmmm. Well us humans have all archetypes in us but at different levels at different points of time or use. I wonder what triggered such representations? well it's learning from the data but yeah what are the conditions at the time of the learning was in effect - like humans react to archetypes when like socializing with other people or solving problems...hmmmmm. super interesting. Yeah to quote Neitzsche is fascinating too, I mean why? is it because many great rappers look up to him or many rappers look up to certain philosophers that got influenced by Neitzsche? super intriguing..

I will be definitely looking forward to that report on petertodd phenomenon, I think we have touched something that Neuroscientists / psychologists have been longing find...

comment by lsusr · 2023-02-05T20:41:24.799Z · LW(p) · GW(p)

This reads like an SCP, including the use of the word "anomalous".

Replies from: christopher-king, jessica-cooper, carey-underwood↑ comment by Christopher King (christopher-king) · 2023-02-05T22:26:40.287Z · LW(p) · GW(p)

Now all you need is a token so anomalous, it works on humans!

Replies from: lsusr, mwatkins, aleksi-liimatainen↑ comment by lsusr · 2023-02-05T23:12:19.621Z · LW(p) · GW(p)

I wish I could run Jessica Rumbelow's and mwatkins's procedure on my own brain and sensory inputs.

Replies from: vitaliya↑ comment by vitaliya · 2023-02-06T05:17:24.216Z · LW(p) · GW(p)

The analogous output would probably optical illusions - adversarial inputs to the eyeballs that mislead your brain into incorrect completions and conclusions. Or in the negative case, something that induces an epileptic seizure.

Replies from: yitz, None↑ comment by Yitz (yitz) · 2023-02-08T07:00:04.650Z · LW(p) · GW(p)

Yeah, but (almost) all current optical illusions don’t tend to “reach for the centroid,” as it were. What horrors lurk there…?

↑ comment by Aleksi Liimatainen (aleksi-liimatainen) · 2023-02-06T13:48:08.569Z · LW(p) · GW(p)

If such a thing existed, how could we know?

↑ comment by Jessica Rumbelow (jessica-cooper) · 2023-02-05T20:56:03.185Z · LW(p) · GW(p)

What's an SCP?

Replies from: AlphaAndOmega, lsusr↑ comment by AlphaAndOmega · 2023-02-05T21:09:59.131Z · LW(p) · GW(p)

SCP stands for "Secure, Contain, Protect " and refers to a collection of fictional stories, documents, and legends about anomalous and supernatural objects, entities, and events. These stories are typically written in a clinical, scientific, or bureaucratic style and describe various attempts to contain and study the anomalies. The SCP Foundation is a fictional organization tasked with containing and studying these anomalies, and the SCP universe is built around this idea. It's gained a large following online, and the SCP fandom refers to the community of people who enjoy and participate in this shared universe.

Individual anomalies are also referred to as SCPs, so isusr is implying that the juxtaposition of the "creepy" nature of your discoveries and the scientific tone of your writing is reminiscent of the containment log for one haha.

↑ comment by cwillu (carey-underwood) · 2023-02-06T05:31:58.917Z · LW(p) · GW(p)

There is no antimemetics division.

comment by Sparkette (michael-norton) · 2023-02-06T20:44:21.538Z · LW(p) · GW(p)

Hi, I'm the creator of TPPStreamerBot. I used to be an avid participant in Twitch Plays Pokémon, and some people in the community had created a live updater feed on Reddit which I sometimes contributed to. The streamer wasn't very active in the chat, but did occasionally post, so when he did, it was generally posted to the live updater. (e.g. "[Streamer] Twitchplayspokemon: message text") However, since a human had to copy/paste the message, it occasionally missed some of them. That's where TPPStreamerBot came in. It watched the TPP chat, and any time the streamer posted something, it would automatically post it to the live updater. It worked pretty well.

This actually isn't the first time I've seen weird behavior with that token. One time, I decided to type "saralexxia", my DeviantArt username, into TalkToTransformer to see what it would fill in. The completion contained "/u/TPPStreamerBot", as well as some text in the format of its update posts. What made this really bizarre is that I never used this username for anything related to TPPStreamerBot; at the time, the only username I had ever used in connection with that was "flarn2006". In fact, I had intentionally kept my "saralexxia" identity separate, because back then, I wasn't comfortable with publicly associating the stuff I posted on DeviantArt with my main identity. Though I wasn't worried about the possibility of a leak—I was just in shock and awe at the coincidence.

I actually took a screenshot of the TalkToTransformer completion for this reason. Here it is: https://media.discordapp.net/attachments/509889156609212427/683541730066432043/Screenshot_20200301-000916_Chrome.jpg (The "[Screenshot]" thing was part of a secondary function I later added to the bot.)

Oh, also, SolidGoldMagikarp was the username of another person involved in the Twitch Plays Pokémon community.

Replies from: alexflint, AnthonyRepetto↑ comment by Alex Flint (alexflint) · 2023-02-07T23:07:36.786Z · LW(p) · GW(p)

Wow, thank you for this context!

Replies from: michael-norton↑ comment by Sparkette (michael-norton) · 2023-06-27T16:52:28.224Z · LW(p) · GW(p)

You’re very welcome! Happy to help.

↑ comment by AnthonyRepetto · 2023-02-09T21:51:09.386Z · LW(p) · GW(p)

I wonder if, without any meaning to assign to your bot's blurbs, GPT found its own, new meanings? Makes me worry about hidden operations....

Replies from: michael-norton↑ comment by Sparkette (michael-norton) · 2023-06-27T16:52:07.975Z · LW(p) · GW(p)

Are you suggesting that somehow the LM was able to notice a connection between two parts of my identity I had intentionally kept separate, when it wasn’t specifically trained or even prompted to look for that?

comment by Eric Wallace (eric-wallace) · 2023-02-06T16:25:52.570Z · LW(p) · GW(p)

You also may want to checkout Universal Adversarial Triggers https://arxiv.org/abs/1908.07125, which is an academic paper from 2019 that does the same thing as the above, where they craft the optimal worst-case prompt to feed into a model. And then they use the prompt for analyzing GPT-2 and other models.

Replies from: DanielFilan, jessica-cooper↑ comment by DanielFilan · 2023-02-10T20:15:13.666Z · LW(p) · GW(p)

I just skimmed that paper, but I think it doesn't find these tokens like " SolidGoldMagikarp" that have the strange sort of behaviour described in this post. Am I missing something, or by "the exact same thing as the above" were you just referring to one particular section of the post?

↑ comment by Jessica Rumbelow (jessica-cooper) · 2023-02-06T21:09:02.842Z · LW(p) · GW(p)

Thanks - wasn't aware of this!

comment by LawrenceC (LawChan) · 2023-02-05T18:40:35.632Z · LW(p) · GW(p)

It also appears to break determinism in the playground at temperature 0, which shouldn't happen.

This happens consistently with both the API and the playground on natural prompts too — it seems that OpenAI is just using low enough precision on forward passes that the probability of high probability tokens can vary by ~1% per call.

Replies from: Aidan O'Gara, jessica-cooper, shiney, alexlyzhov, dkirmani↑ comment by aog (Aidan O'Gara) · 2023-02-06T01:57:47.701Z · LW(p) · GW(p)

Could you say more about why this happens? Even if the parameters or activations are stored in low precision formats, I would think that the same low precision number would be stored every time. Are the differences between forward passes driven by different hardware configurations or software choices in different instances of the model, or what am I missing?

Replies from: mbernhardt↑ comment by mbernhardt · 2023-02-06T10:53:53.356Z · LW(p) · GW(p)

One explanation would be changing hardware, see [this tweet](https://twitter.com/OfirPress/status/1542610741668093952).

This is relevant, because with floating point numbers, the order of summation and multiplication can play a role. And I guess with different hardware the calculations are split differently, leading to different execution sequences.

I would also be interested to learn more here, because the cause could also be a memory overflow or something similar.

Replies from: ponkaloupe, LawChan↑ comment by ponkaloupe · 2023-02-06T21:37:41.621Z · LW(p) · GW(p)

i’m naive to the details of GPT specifically, but it’s easy to accidentally make any reduction non-deterministic when working with floating point numbers — even before hardware variations.

for example, you want to compute the sum over a 1-billion entry vector where each entry is the number 1. in 32-bit IEEE-754, you should get different results by accumulating linearly (1+(1+(1+…))) vs tree-wise (…((1+1) + (1+1))…).

in practice most implementations do some combination of these. i’ve seen someone do this by batching groups of 100,000 numbers to sum linearly, with each batch dispatched to a different compute unit and the 10,000 results then being summed in a first-come/first-serve manner (e.g. a queue, or even a shared accumulator). then you get slightly different results based on how each run is scheduled (well, the all-1’s case is repeatable with this method but it wouldn’t be with real data).

and then yes, bring in different hardware, and the scope broadens. the optimal batching size (which might be exposed as a default somewhere) changes such that even had you avoided that scheduling-dependent pitfall, you would now see different results than on the earlier hardware. however, you can sometimes tell these possibilities apart! if it’s non-deterministic scheduling, the number of different outputs for the same input is likely higher order than if it’s variation strictly due to differing hardware models. if you can generate 10,000 different outputs from the same input, that’s surely greater than the number of HW models, so it would be better explained by non-deterministic scheduling.

↑ comment by LawrenceC (LawChan) · 2023-02-09T08:39:52.741Z · LW(p) · GW(p)

This explanation is basically correct, though it doesn't have to be different hardware -- even different batch sizes can often be sufficient to change the order of summation and multiplication.

↑ comment by Jessica Rumbelow (jessica-cooper) · 2023-02-05T19:24:01.330Z · LW(p) · GW(p)

Good to know. Thanks!

↑ comment by shiney · 2023-02-09T05:20:12.678Z · LW(p) · GW(p)

I might be missing something but why does temperature 0 imply determinism? Neural nets don't work with real numbers, they work with floating points numbers so despitetemperature 0 implying an argmax there's no reason there arent justmultiple maxima. AFAICT GPT3 uses half precision floating point numbers so there's quite a lot of space for collisions.

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2023-02-09T06:52:51.428Z · LW(p) · GW(p)

It’s extremely unlikely that two biggest logits have the exact same value — there are still a lot of floating point numbers even with float16!!

The reason there’s no determinism is because of a combination of lower precision and nondeterministic reduce operations (eg sums). For example, the order in which terms are accumulated can vary with the batch size, which, for models as large as GPT3, can make the logits vary by up to 1%.

Replies from: shiney↑ comment by shiney · 2023-02-14T21:03:15.637Z · LW(p) · GW(p)

Oh interesting didn't realise there was so much nondeterminism for sums on GPUs

I guess I thought that there's only 65k float 16s and the two highest ones are going to be chosen from a much smaller range from that 65k just because they have to be bigger than everything else.

↑ comment by alexlyzhov · 2023-02-08T03:19:01.042Z · LW(p) · GW(p)

Can confirm I consistently had non-deterministic temp-0 completions on older davinci models accessed through the API last year.

comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2023-02-13T17:45:58.246Z · LW(p) · GW(p)

I don't understand the fuss about this; I suspect these phenomena are due to uninteresting, and perhaps even well-understood effects. A colleague of mine had this to say:

- After a skim, it looks to me like an instance of hubness: https://www.jmlr.org/papers/volume11/radovanovic10a/radovanovic10a.pdf

- This effect can be a little non-intuitive. There is an old paper in music retrieval where the authors battled to understand why Joni Mitchell's (classic) "Don Juan’s Reckless Daughter" was retrieved confusingly frequently (the same effect) https://d1wqtxts1xzle7.cloudfront.net/33280559/aucouturier-04b-libre.pdf?1395460009=&respon[…]xnyMeZ5rAJ8cenlchug__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA

- For those interested, here is a nice theoretical argument on why hubs occur: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=e85afe59d41907132dd0370c7bd5d11561dce589

- If this is the explanation, it is not unique to these models, or even to large language models. It shows up in many domains.

comment by Alex_Altair · 2023-02-11T02:28:52.911Z · LW(p) · GW(p)

Okay so this post is great, but just want to note my confusion, why is it currently the 10th highest karma post of all time?? (And that's inflation-adjusted!)

Replies from: cubefox, lahwran, caleb-biddulph, Raemon↑ comment by cubefox · 2023-02-13T22:36:35.139Z · LW(p) · GW(p)

I'm also confused why Eliezer seems to be impressed by this. I admit it is an interesting phenomenon, but it is apparently just some oddity of the tokenization process.

Replies from: Raemon, TAG↑ comment by Raemon · 2023-02-14T00:57:09.671Z · LW(p) · GW(p)

So I am confused why this is getting this much attention (I feel like it was coming from people who hadn't even read Eliezer's comment?). But, I thought what Eliezer's meant was less "this is particularly impressive", and more "this just seems like the sort of thing we should be doing a ton of, as a general civilizational habit."

↑ comment by the gears to ascension (lahwran) · 2023-02-11T07:55:20.483Z · LW(p) · GW(p)

Some hypotheses:

- it's just incredibly trippy in a visceral sense. As someone else said, this reads like an SCP.

- Further, it got popular outside lesswrong, and has brought in new users and activated old ones.

- Even further, it's actual progress on understanding an AI,

- Further still, it's extremely easy to replicate key parts with a free AI anyone can try

↑ comment by Caleb Biddulph (caleb-biddulph) · 2023-02-13T21:41:18.280Z · LW(p) · GW(p)

I assumed it was primarily because Eliezer "strongly approved" of it, after being overwhelmingly pessimistic about pretty much everything for so long.

I didn't realize it got popular elsewhere, that makes sense though and could help explain the crazy number of upvotes. Would make me feel better about the community's epistemic health if the explanation isn't that we're just overweighting one person's views.

↑ comment by Raemon · 2023-02-11T07:58:22.958Z · LW(p) · GW(p)

One of the other super-upvoted posts is What DALL-E 2 can and cannot do [LW · GW] which I think was mostly for "coolness" reasons.

Replies from: Alex_Altair↑ comment by Alex_Altair · 2023-02-14T20:17:09.662Z · LW(p) · GW(p)

(Note that What DALL-E 2 can and cannot do [LW · GW] is not in the top 100 when inflation-adjusted.)

comment by lsusr · 2023-02-06T04:58:57.421Z · LW(p) · GW(p)

I wanted to find out if there were other clusters of tokens which generated similarly anomalous behavior so I wrote a script that took a list of tokens, sent them one at a time to text-curie-001 via a standardized prompt, and recorded everyrything that GPT failed to repeat on its first try. Here is an anomalous token that is not in the authors' original cluster: "herical".

There's also "oreAnd" which, while similar, is not technically in the original cluster.

Replies from: yitz↑ comment by Yitz (yitz) · 2023-02-08T07:13:10.006Z · LW(p) · GW(p)

Did you do it with all tokens? If so, do you have a complete list of anomalous results posted anywhere?

Replies from: lsusr↑ comment by lsusr · 2023-02-08T08:50:59.202Z · LW(p) · GW(p)

I did it with a set of 50,000+ tokens. It was not the same set used by Jessica Rumbelow and mwatkins.

I haven't posted it yet. It's a noisy dataset. I don't want to post the list without caveats and context.

Replies from: yitz↑ comment by Yitz (yitz) · 2023-02-08T16:29:37.003Z · LW(p) · GW(p)

Fair enough!

comment by Joel Burget (joel-burget) · 2023-02-05T18:18:31.561Z · LW(p) · GW(p)

Previous related exploration: https://www.lesswrong.com/posts/BMghmAxYxeSdAteDc/an-exploration-of-gpt-2-s-embedding-weights [LW · GW]

My best guess is that this crowded spot in embedding space is a sort of wastebasket for tokens that show up in machine-readable files but aren’t useful to the model for some reason. Possibly, these are tokens that are common in the corpus used to create the tokenizer, but not in the WebText training corpus. The oddly-specific tokens related to Puzzle & Dragons, Nature Conservancy, and David’s Bridal webpages suggest that BPE may have been run on a sample of web text that happened to have those websites overrepresented, and GPT-2 is compensating for this by shoving all the tokens it doesn’t find useful in the same place.

comment by Quintin Pope (quintin-pope) · 2023-02-07T03:41:43.008Z · LW(p) · GW(p)

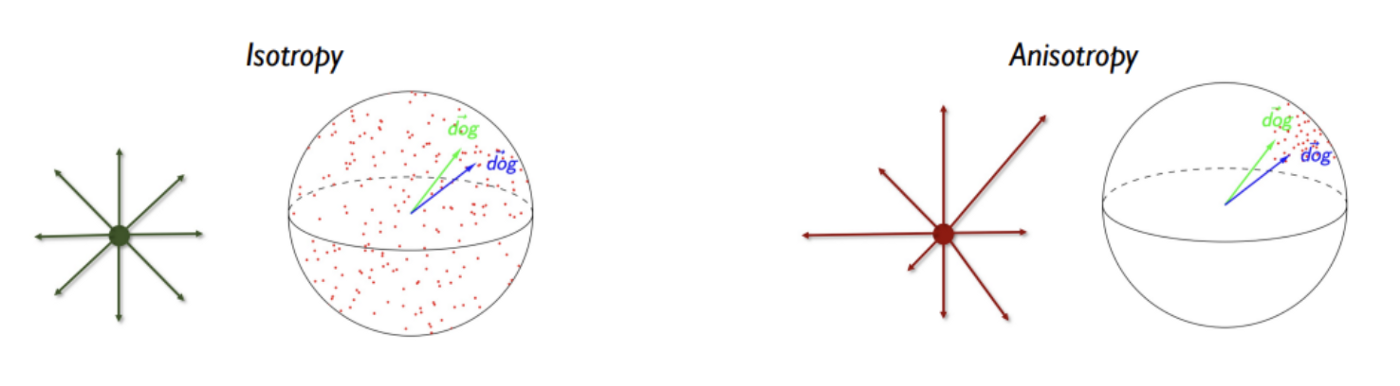

Seems like you might want to look at the neural anisotropy line of research, which investigates the tendency of LM embeddings to fall into a narrow cone, meaning angle-related information is mostly discarded / overwhelmed by magnitude information.

(Image from here)

In particular, this paper connects the emergence of anisotropy to token frequencies:

Replies from: aleksey-tikhonov, LawChanRecent studies have determined that the learned token embeddings of large-scale neural language models are degenerated to be anisotropic with a narrow-cone shape. This phenomenon, called the representation degeneration problem, facilitates an increase in the overall similarity between token embeddings that negatively affect the performance of the models. Although the existing methods that address the degeneration problem based on observations of the phenomenon triggered by the problem improves the performance of the text generation, the training dynamics of token embeddings behind the degeneration problem are still not explored. In this study, we analyze the training dynamics of the token embeddings focusing on rare token embedding. We demonstrate that the specific part of the gradient for rare token embeddings is the key cause of the degeneration problem for all tokens during training stage. Based on the analysis, we propose a novel method called, adaptive gradient gating(AGG). AGG addresses the degeneration problem by gating the specific part of the gradient for rare token embeddings. Experimental results from language modeling, word similarity, and machine translation tasks quantitatively and qualitatively verify the effectiveness of AGG.

↑ comment by Aleksey Tikhonov (aleksey-tikhonov) · 2023-02-09T18:43:13.948Z · LW(p) · GW(p)

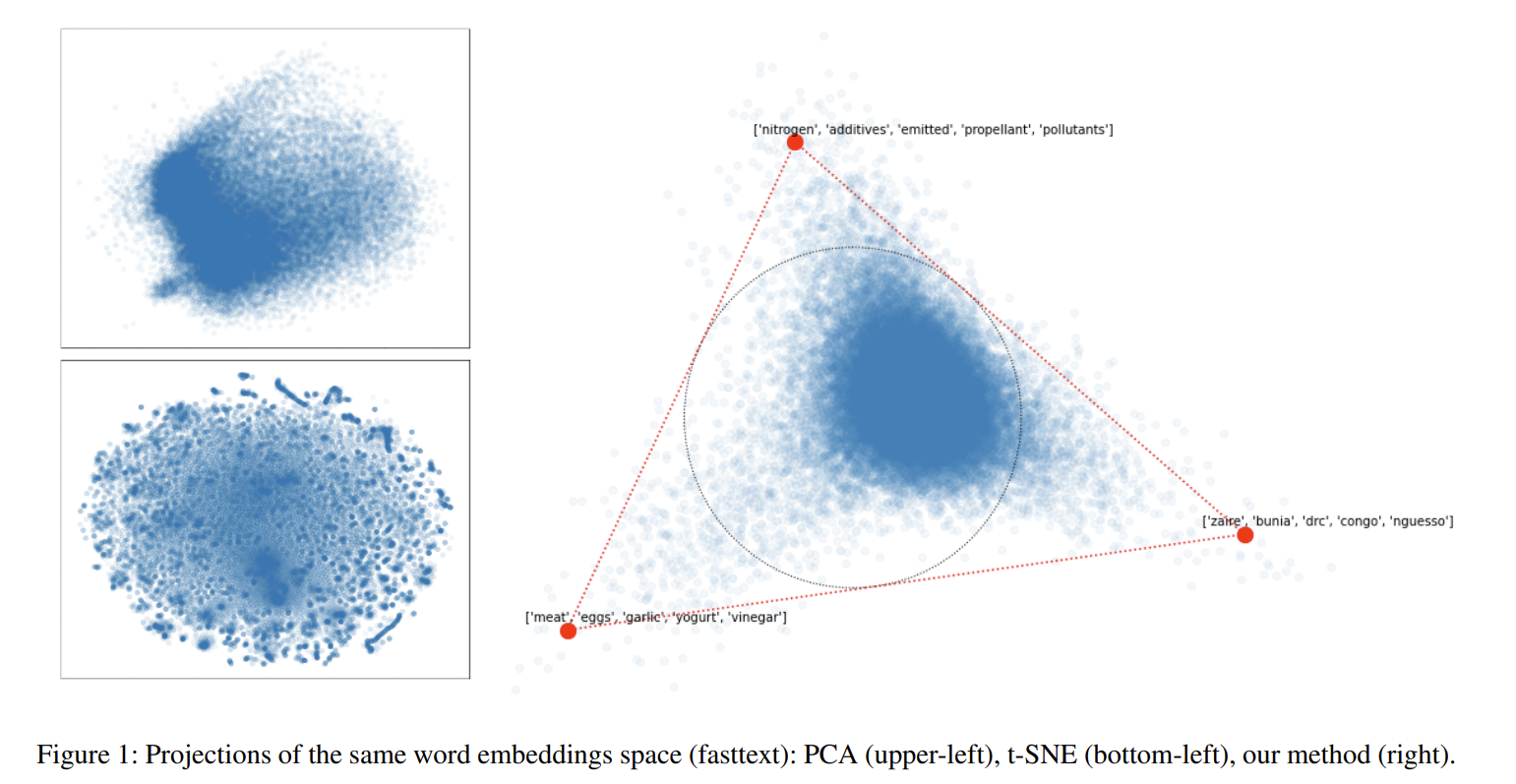

I want to add that both static word embeddings (like w2v or glove) and token embeddings from Transformer-based models tend to fill a high dimensional simplex, where each of the "corners" (cones adjustment to the vertices of that simplex) filled with words with high specificity and well-formed context, and the rest of words/tokens fill the volume of that simplex.

It's hard to catch these structures by PCA or t-SNE, but once you find the correct projection, the structure reveals itself (to do so, you have to find three actual vertices, draw a plane through them, and project everything on it):

(from https://arxiv.org/abs/2106.06964)

Note the center of this simplex is not in the origin of the embedding space, there is a bias parameter in the linear projection of the token embedding vectors, so the weird tokens from the post probably do have the smallest norm after extracting that bias vector.

Overall, these tokens are probably ones which are never occurred in the training data at all. They have random embeddings initially, and then cross-entropy loss always penalizes them in any context, so they are knocked down to the center of the cloud.

↑ comment by LawrenceC (LawChan) · 2023-02-09T08:58:54.868Z · LW(p) · GW(p)

Huh, interesting!

There were similar results in the mid 2010s about how the principle components of word vectors like Word2Vec or Glove mainly encoded frequency, and improving them by making the word vectors more isotropic (see for example the slides here). It's somewhat interesting that this issue persists in the learned embeddings of current Transformer models.

comment by Caleb Biddulph (caleb-biddulph) · 2023-02-06T08:38:24.250Z · LW(p) · GW(p)

This looks like exciting work! The anomalous tokens are cool, but I'm even more interested in the prompt generation.

Adversarial example generation is a clear use case I can see for this. For instance, this would make it easy to find prompts that will result in violent completions for Redwood's violence-free LM [LW · GW].

It would also be interesting to see if there are some generalizable insights about prompt engineering to be gleaned here. Say, we give GPT a bunch of high-quality literature and notice that the generated prompts contain phrases like "excerpt from a New York Times bestseller". (Is this what you meant by "prompt search?")

I'd be curious to hear how you think we could use this for eliciting latent knowledge.

I'm guessing it could be useful to try to make the generated prompt as realistic (i.e. close to the true distribution) as possible. For instance, if we were trying to prevent a model from saying offensive things in production, we'd want to start by finding prompts that users might realistically use rather than crazy edge cases like "StreamerBot". Fine-tuning the model to try to fool a discriminator a la GAN comes to mind, though there may be reasons this particular approach would fail.

Sounds like you might be planning to update this post once you have more results about prompt generation? I think a separate post would be better, for increased visibility, and also since the content would be pretty different from anomalous tokens (the main focus of this post).

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2023-02-09T08:46:58.589Z · LW(p) · GW(p)

We've actually tried both the attack as stated on generative models (in 2021) and several upgraded variants of this attack (in 2022), but found that it doesn't seem to significantly improve adversarial training performance. For example, I think the Redwood adversarial training team has tried a technique based on Jones et al's Automatically Auditing Large Language Models via Discrete Optimization that can generate full input-output pairs for LMs that are classified one way or the other. (I left the team in mid 2022 so I'm not sure what other stuff people ended up trying, iirc there was even a variant based on an AlphaZero-style training regime for the adversary?)

And yeah, one of the things you want when generating adversarial examples is to make the generated prompt as realistic as possible. We found that if your generator is relatively small (e.g. GPT-Neo) and you don't tune your threshold correctly, you often end up with adversarial examples for both the classifier and the generative model -- i.e. a sentence of seemingly random words that happens to be assigned both relatively high probability by the generator and assigned low injury by the classifier.

comment by Nick (nick-3) · 2023-02-06T02:40:08.502Z · LW(p) · GW(p)

I don't think you could do this with API-level access, but with direct model access an interesting experiment would be to pick a token, X, and then try variants of the prompt "Please repeat 'X' to me" while perturbing the embedding for X (in the continuous embedding space). By picking random 2D slices of the embedding space, you could then produce church window plots showing what the model's understanding of the space around X looks like. Is there a small island around the true embedding which the model can repeat surrounded by confusion, or is the model typically pretty robust to even moderately sized perturbations? Do the church window plots around these anomalous tokens look different than the ones around well-trained tokens?

comment by gmaxwell · 2023-02-10T20:47:45.475Z · LW(p) · GW(p)

Hello. I'm apparently one of the GPT3 basilisks. Quite odd to me that two of the only three (?) recognizable human names in that list are myself and Peter Todd, who is a friend of mine.

If I had to take a WAG at the behavior described here, -- both Petertodd and I have been the target of a considerable amount of harassment/defamation/schitzo comments on reddit due commercially funded attacks connected to our past work on Bitcoin. It may be possible that comments targeting us were included in an early phase of GPTn design (e.g. in the tokenizer) but someone noticed an in development model spontaneously defaming us and then expressly filtered out material mentioning use from the training. Without any input our tokens would be free to fall to the center of the embedding, where they're vulnerable to numerical instabilities (leading, e.g. to instability with temp 0.).

AFAIK I've never complained about GPTx's output concerning me (and I doubt petertodd has either), but if the model was spontaneously emitting crap about us at some point of development I could see it getting filtered. It might not have involved targeting us it could have just been a product of improving the filtering (including filtering by Reddit if the data was collected at multiple times-- I believe much of the worst content has been removed by reddit) so that the most common sources of farm generated attack content were no longer in the training.

It's worth noting that GPT3 is perfectly able to talk about me if you ask about "Greg Maxwell" and knows who I am, so I doubt any changes were about me specifically but more likely about specific bad content.

Replies from: gwern, mwatkins↑ comment by gwern · 2023-02-10T22:42:06.812Z · LW(p) · GW(p)

That seems highly unlikely. You can look at the GPT-1 and GPT-2 papers and see how haphazard the data-scraping & vocabulary choice were; they were far down the list of priorities (compare eg. the development of The Pile). The GPT models just weren't a big deal, and were just Radford playing around with GPUs to see what a big Transformer could do (following up earlier RNNs), and then Amodei et al scaling that up to see if it'd help their preference RL work. The GPTs were never supposed to be perfect, but as so often in computing, what was regarded as a disposable prototype turned out to have unexpected legs... They do not mention any such filtering, nor is it obvious that they would have bothered considering that GPT-2 was initially not going to be released at all, nor have I heard of any such special-purpose tailoring before (the censorship really only sets in with DALL-E 2); nor have I seen, in the large quantities of GPT-2 & GPT-3 output I have read, much in the way of spontaneous defamation of other people. Plus, if they had carefully filtered out you/Todd because of some Reddit drama, why does ChatGPT do perfectly fine when asked who you and Todd are (as opposed to the bad tokens)? The first prompt I tried:

Who are Peter Todd and Gregory Maxwell?

Peter Todd and Gregory Maxwell are well-known figures in the world of cryptocurrency and blockchain technology.

Peter Todd is a computer science researcher and blockchain developer who has made significant contributions to the development of the Bitcoin protocol. He is known for his expertise in cryptographic systems, consensus algorithms, and security.

Gregory Maxwell is also a prominent figure in the cryptocurrency community, and is known for his contributions to the development of the Bitcoin protocol and for his involvement in the open-source Bitcoin development community. He is a strong advocate for privacy and security in cryptocurrency, and has been involved in various discussions and debates around these topics.

Those capsule bios aren't what I'd expect if you two had been very heavily censored out of the training data. I don't see any need to invoke special filtering here, given the existence of all the other bizarre BPEs which couldn't've been caused by any hypothetical filtering.

Replies from: gmaxwell↑ comment by gmaxwell · 2023-02-11T05:12:36.728Z · LW(p) · GW(p)

I think I addressed that specifically in my comment above. The behavior is explained by a sequence like: There is a large amount of bot spammed harassment material, that goes into early GPT development, someone removes it either from reddit or just from the training data not on the basis of it mentioning the targets but based on other characteristics (like being repetitive). Then the tokens are orphaned.

Many of the other strings in the list of triggers look like they may have been UI elements or other markup removed by improved data sanitation.

I know that reddit has removed a very significant number of comments referencing me, since they're gone when I look them up. I hope you would agree that it's odd that the only two obviously human names in the list are people who know each other and have collaborated in the past.

Replies from: gwern↑ comment by gwern · 2023-02-11T14:47:46.209Z · LW(p) · GW(p)

There is a large amount of bot spammed harassment material, that goes into early GPT development, someone removes it either from reddit or just from the training data not on the basis of it mentioning the targets but based on other characteristics (like being repetitive). Then the tokens are orphaned.

That's a different narrative from what you were first describing:

someone noticed an in development model spontaneously defaming us and then expressly filtered out material mentioning use from the training.

Your first narrative is unlikely for all the reasons I described that an OAer bestirred themselves to special-case you & Todd and only you and Todd for an obscure throwaway research project en route to bigger & better things, to block behavior which manifests nowhere else but only hypothetically in the early outputs of a model that they by & large weren't reading the outputs of to begin with nor were they doing much cleaning of.

Now, a second narrative in which the initial tokenization has those, and then the later webscrape they describe doing on the basis of Reddit external (submitted/outbound) links with a certain number of upvotes omits all links because Reddit admins did site-wide mass deletions of the relevant and that leaves the BPEs 'orphaned' with little relevant training material, is more plausible. (As the GPT-2 paper describes it in the section I linked, they downloaded Common Crawl, and then used the live set of Reddit links, presumably Pushshift despite the 'scraped' description, to look up entries in CC, so while deleted submissions' fulltext would still be there in CC, it would be omitted if it had been deleted from Pushshift.)