Posts

Comments

Another victory for trend extrapolation!

My weak downvotes are +1 and my strong downvotes are -9. Upvotes are all positive.

I agree that in the context of an explicit "how soon" question, the colloquial use of fast/slow often means sooner/later. In contexts where you care about actual speed, like you're trying to get an ice cream cake to a party and you don't want it to melt, it's totally reasonable to say "well, the train is faster than driving, but driving would get me there at 2pm and the train wouldn't get me there until 5pm". I think takeoff speed is more like the ice cream cake thing than the flight to NY thing.

That said, I think you're right that if there's a discussion about timelines in a "how soon" context, then someone starts talking about fast vs slow takeoff, I can totally see how someone would get confused when "fast" doesn't mean "soon". So I think you've updated me toward the terminology being bad.

I agree. I look at the red/blue/purple curves and I think "obviously the red curve is slower than the blue curve", because it is not as steep and neither is its derivative. The purple curve is later than the red curve, but it is not slower. If we were talking about driving from LA to NY starting on Monday vs flying there on Friday, I think it would be weird to say that flying is slower because you get there later. I guess maybe it's more like when people say "the pizza will get here faster if we order it now"? So "get here faster" means "get here sooner"?

Of course, if people are routinely confused by fast/slow, I am on board with using different terminology, but I'm a little worried that there's an underlying problem where people are confused about the referents, and using different words won't help much.

Yeah! I made some lamps using sheet aluminum. I used hot glue to attach magnets, which hold it onto the hardware hanging from the ceiling in my office. You can use dimmers to control the brightness of each color temperature strip separately, but I don't have that set up right now.

why do you think s-curves happen at all? My understanding is that it's because there's some hard problem that takes multiple steps to solve, and when the last step falls (or a solution is in sight), it's finally worthwhile to toss increasing amounts of investment to actually realize and implement the solution.

I think S-curves are not, in general, caused by increases in investment. They're mainly the result of how the performance of a technology changes in response to changes in the design/methods/principles behind it. For example, with particle accelerators, switching from Van der Graaff generators to cyclotrons might give you a few orders of magnitude once the new method is mature. But it takes several iterations to actually squeeze out all the benefits of the improved approach, and the first few and last few iterations give less of an improvement than the ones in the middle.

This isn't to say that the marginal return on investment doesn't factor in. Once you've worked out some of the kinks with the first couple cyclotrons, it makes more sense to invest in a larger one. This probably makes S-curves more S-like (or more step like). But I think you'll get them even with steadily increasing investment that's independent of the marginal return.

- Neurons' dynamics looks very different from the dynamics of bits.

- Maybe these differences are important for some of the things brains can do.

This seems very reasonable to me, but I think it's easy to get the impression from your writing that you think it's very likely that:

- The differences in dynamics between neurons and bits are important for the things brains do

- The relevant differences will cause anything that does what brains do to be subject to the chaos-related difficulties of simulating a brain at a very low level.

I think Steven has done a good job of trying to identify a bit more specifically what it might look like for these differences in dynamics to matter. I think your case might be stronger if you had a bit more of an object level description of what, specifically, is going on in brains that's relevant to doing things like "learning rocket engineering", that's also hard to replicate in a digital computer.

(To be clear, I think this is difficult and I don't have much of an object level take on any of this, but I think I can empathize with Steven's position here)

The Trinity test was preceded by a full test with the Pu replaced by some other material. The inert test was designed to test whether they were getting the needed compression. (My impression is this was not publicly known until relatively recently)

Regardless, most definitions [of compute overhang] are not very analytically useful or decision-relevant. As of April 2023, the cost of compute for an LLM's final training run is around $40M. This is tiny relative to the value of big technology companies, around $1T. I expect compute for training models to increase dramatically in the next few years; this would cause how much more compute labs could use if they chose to to decrease.

I think this is just another way of saying there is a very large compute overhang now and it is likely to get at least somewhat smaller over the next few years.

Keep in mind that "hardware overhang" first came about when we had no idea if we would figure out how to make AGI before or after we had the compute to implement it.

Drug development is notably different because, like AI, it's a case where the thing we want to regulate is an R&D process, not just the eventual product

I agree, and I think I used "development" and "deployment" in this sort of vague way that didn't highlight this very well.

But even if we did have a good way of measuring those capabilities during training, would we want them written into regulation? Or should we have simpler and broader restrictions on what counts as good AI development practices?

I think one strength of some IRB-ish models of regulation is that you don't rely so heavily on a careful specification of the thing that's not allowed, because instead of meshing directly with all the other bureaucratic gears, it has a layer of human judgment in between. Of course, this does pass the problem to "can you have regulatory boards that know what to look for?", which has its own problems.

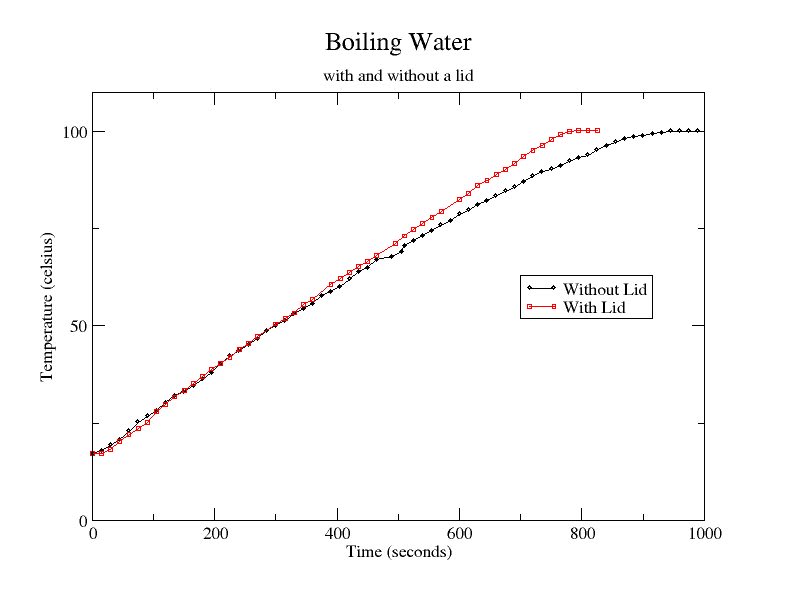

I put a lid on the pot because it saves energy/cooks faster. Or maybe it doesn't, I don't know, I never checked.

I checked and it does work.

Seems like the answer with pinball is to avoid the unstable processes, not control them.

Regarding the rent for sex thing: The statistics I've been able to find are all over the place, but it looks like men are much more likely to not have a proper place to sleep than women. My impression is this is caused by lots of things (I think there are more ways for a woman to be eligible for government/non-profit assistance, for example), but it does seems like evidence that women are exchanging sex for shelter anyway (either directly/explicitly or less directly, like staying in a relationship where the main thing she gets is shelter and the main thing the other person gets is sex).

Wow, thanks for doing this!

I'm very curious to know how this is received by the general public, AI researchers, people making decisions, etc. Does anyone know how to figure that out?

With the caveats that this is just my very subjective experience, I'm not sure what you mean by "moderately active" or "an athlete", and I'm probably taking your 80/20 more literally than you intended:

I agree there's a lot of improvement from that first 20% of effort (or change in habits or time or whatever), but I think it's much less than than 80% of the value. Like, say 0% effort is the 1-2 hours/week of walking I need do to get to work and buy groceries and stuff, 20% is 2-3 hours of walking + 1-2 hours at the gym or riding a bike, and 100% is 12 hours/week of structured training on a bicycle. I think 20% gets me maybe 40-50% of the benefit for doing stuff that requires thinking clearly that 100% gets me. Where the diminishing returns really kick in is around 6-8 hours/week of structured training (so 60%?), which seems to get me about 80-90% of the benefit.

That said: Anecdotally, I seem to need more intense exercise than a lot of people. Low-to-moderate intensity exercise, even in significant quantity, has a weirdly small effect on my mood and my (subjectively judged by me) cognitive ability.

Right, but being more popular than the insanely popular thing would be pretty notable (I suppose this is the intuition behind the "most important chart of the last 100 years" post), and that's not what happened.

The easiest way to see what 6500K-ish sunlight looks like without the Rayleigh scattering is to look at the light from a cloudy sky. Droplets in clouds scatter without the strong wavelength dependence that air molecules do, so it's closer to the unmodified solar spectrum (though there is still atmospheric absorption).

If you're interested in (somewhat rudimentary) color measurements of some natural and artificial light sources, you can see them here.

It's maybe fun to debate about whether they had mens rea, and the courts might care about the mens rea after it all blows up, but from our perspective, the main question is what behaviors they’re likely to engage in, and there turn out to be many really bad behaviors that don’t require malice at all.

I agree this is the main question, but I think it's bad to dismiss the relevance of mens rea entirely. Knowing what's going on with someone when they cause harm is important for knowing how best to respond, both for the specific case at hand and the strategy for preventing more harm from other people going forward.

I used to race bicycles with a guy who did some extremely unsportsmanlike things, of the sort that gave him an advantage relative to others. After a particularly bad incident (he accepted a drink of water from a rider on another team, then threw the bottle, along with half the water, into a ditch), he was severely penalized and nearly kicked off the team, but the guy whose job was to make that decision was so utterly flabbergasted by his behavior that he decided to talk to him first. As far as I can tell, he was very confused about the norms and didn't realize how badly he'd been violating them. He was definitely an asshole, and he was following clear incentives, but it seems his confusion was a load-bearing part of his behavior because he appeared to be genuinely sorry and started acting much more reasonably after.

Separate from the outcome for this guy in particular, I think it was pretty valuable to know that people were making it through most of a season of collegiate cycling without fully understanding the norms. Like, he knew he was being an asshole, but he didn't really get how bad it was, and looking back I think many of us had taken the friendly, cooperative culture for granted and hadn't put enough effort into acculturating new people.

Again, I agree that the first priority is to stop people from causing harm, but I think that reducing long-term harm is aided by understanding what's going on in people's heads when they're doing bad stuff.

Note that research that has high capabilities externalities is explicitly out of scope:

"Proposals that increase safety primarily as a downstream effect of improving standard system performance metrics unrelated to safety (e.g., accuracy on standard tasks) are not in scope."

I think the language here is importantly different from placing capabilities externalities as out of scope. It seems to me that it only excludes work that creates safety merely by removing incompetence as measured by standard metrics. For example, it's not clear to me that this excludes work that improves a model's situational awareness or that creates tools or insights into how a model works with more application to capabilities than to safety.

I agree that college is an unusually valuable time for meeting people, so it's good to make the most of it. I also agree that one way an event can go badly is if people show up wanting to get to know each other, but they do not get that opportunity, and it sounds like it was a mistake for the organizers of this event not to be more accommodating of smaller, more organic conversations. And I think that advice on how to encourage smaller discussions is valuable.

But I think it's important to keep in mind that not everyone wants the same things, not everyone relates to each other or their interests in the same way, and small, intimate conversations are not the be-all-end-all of social interaction or friend-making.

Rather, I believe human connection is more important. I could have learned about Emerson much more efficiently by reading the Stanford Encyclopedia of Philosophy, or been entertained more efficiently by taking a trip to an amusement park, for two hours.

For some people, learning together is a way of connecting, even in a group discussion where they don't get to say much. And, for some people, mutual entertainment is one of their ways of connecting. Another social benefit of a group discussion or presentation is that you have some specific shared context to go off of--everyone was there for the same discussion, which can provide an anchor of common experience. For some people this is really important.

Also, there are social dynamics in larger groups that cannot be replicated in smaller groups. For example:

Suppose a group of 15 people is talking about Emerson. Alice is trying to get an idea across, but everyone seems to be misunderstanding. Bob chimes in and asks just the right questions to show that he understands and wants Alice's idea to get through. Alice smiles at Bob and thanks him. Alice and Bob feel connection.

Another example:

In the same discussion, Carol is high status and wrote her PhD dissertation on Emerson. Debbie wants to ask her a question, but is intimidated by the thought of having a one-on-one conversation with her. Fortunately, the large group discussion environment gives Debbie the opportunity to ask a question without the pressure of a having a full conversation. Carol reacts warmly and answers Debbie's question in a thoughtful way. This gives Debbie the confidence to approach Carol during the social mingling part of the discussion later on.

Most people prefer talking to listening.

But not everyone! Some people really like listening, watching, and thinking. And, among people who do prefer talking, many don't care that much if they sometimes don't get to talk that much, especially if there are other benefits.

(Also, I'm a little suspicious when someone argues for event formats that are supposed to make it easier to "get to know people", and one of the main features is that they get to spend more time talking and less time listening)

Different hosts have different goals for their events, and that's fine. I just value human connection a lot.

I think it's important not to look at an event that fails to create social connection for you and assume that it does not create connection for others. This is both because not everyone connects the same way and because it's hard to look at how an event and say whether it resulted in personal connection (it would not have been hard for an observer to miss the Alice-Bob connection, for example). That said, I do think it gets easier to tell as a group has been hosting events with the same people for longer. If people are consistently treating each other as strangers or acquaintances from week to week, this is a bad sign.

I'm saying that faster AI progress now tends to lead to slower AI progress later.

My best guess is that this is true, but I think there are outside-view reasons to be cautious.

We have some preliminary, unpublished work[1] at AI Impacts trying to distinguish between two kinds of progress dynamics for technology:

- There's an underlying progress trend, which only depends on time, and the technologies we see are sampled from a distribution that evolves according to this trend. A simple version of this might be that the goodness G we see for AI at time t is drawn from a normal distribution centered on Gc(t) = G0exp(At). This means that, apart from how it affects our estimate for G0, A, and the width of the distribution, our best guess for what we'll see in the non-immediate future does not depend on what we see now.

- There's no underlying trend "guiding" progress. Advances happen at random times and improve the goodness by random amounts. A simple version of this might be a small probability per day that an advancement occurs, which is then independently sampled from a distribution of sizes. The main distinction here is that seeing a large advance at time t0 does decrease our estimate for the time at which enough advances have accumulated to reach goodness level G_agi.

(A third hypothesis, of slightly lower crudeness level, is that advances are drawn without replacement from a population. Maybe the probability per time depends on the size of remaining population. This is closer to my best guess at how the world actually works, but we were trying to model progress in data that was not slowing down, so we didn't look at this.)

Obviously neither of these models describes reality, but we might be able to find evidence about which one is less of a departure from reality.

When we looked at data for advances in AI and other technologies, we did not find evidence that the fractional size of advance was independent of time since the start of the trend or since the last advance. In other words, in seems to be the case that a large advance at time t0 has no effect on the (fractional) rate of progress at later times.

Some caveats:

- This work is super preliminary, our dataset is limited in size and probably incomplete, and we did not do any remotely rigorous statistics.

- This was motivated by progress trends that mostly tracked an exponential, so progress that approaches the inflection point of an S-cure might behave differently

- These hypotheses were not chosen in any way more principled than "it seems like many people have implicit models like this" and "this seems relatively easy to check, given the data we have"

Also, I asked Bing Chat about this yesterday and it gave me some economics papers that, at a glance, seem much better than what I've been able to find previously. So my views on this might change.

- ^

It's unpublished because it's super preliminary and I haven't been putting more work into it because my impression was that this wasn't cruxy enough to be worth the effort. I'd be interested to know if this seems important to others.

It was a shorter version of that, with maybe 1/3 of the items. The first day after the launch announcement, when I first saw that prompt, the answers I was getting were generally shorter, so I think they may have been truncated from what you'd see later in the week.

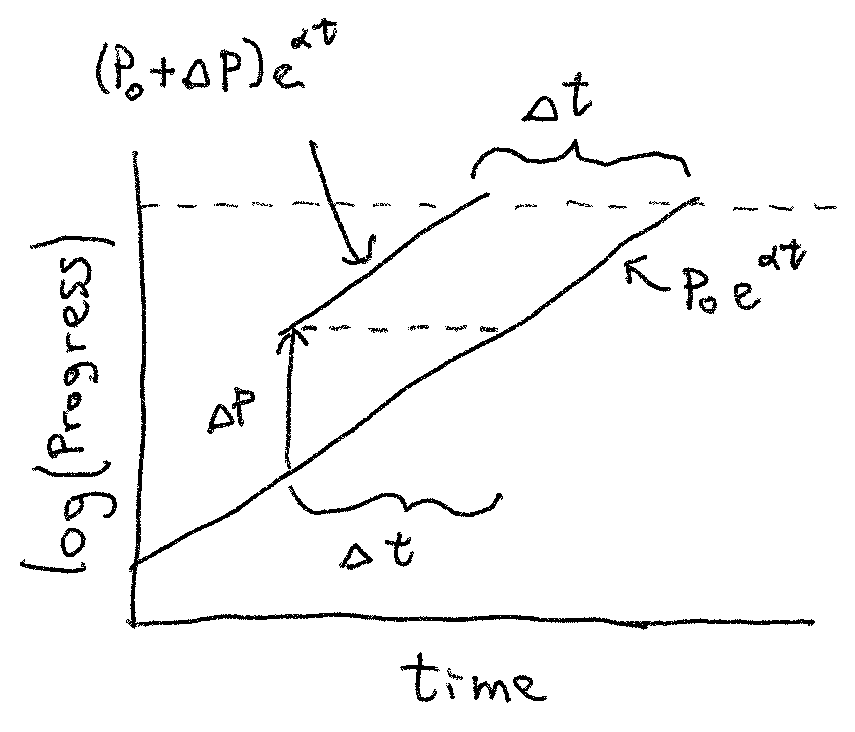

Your graph shows "a small increase" that represents progress that is equal to an advance of a third to a half the time left until catastrophe on the default trajectory. That's not small!

Yes, I was going to say something similar. It looks like the value of the purple curve is about double the blue curve when the purple curve hits AGI. If they have the same doubling time, that means the "small" increase is a full doubling of progress, all in one go. Also, the time you arrive ahead of the original curve is equal to the time it takes the original curve to catch up with you. So if your "small" jump gets you to AGI in 10 years instead of 15, then your "small" jump represents 5 years of progress. This is easier to see on a log plot:

It's not that they use it in every application it's that they're making a big show of telling everyone that they'll get to use it in every application. If they make a big public announcement about the democratization of telemetry and talk a lot about how I'll get to interact with their telemetry services everywhere I use a MS product, then yes I think part of the message (not necessarily the intent) is that I get to decide how to use it.

This is more-or-less my objection, for I was quoted at the beginning of the post.

I think most of the situations in which Bing Chat gets defensive and confrontational are situations where many humans would do the same, and most of the prompts in these screenshots are similar to how you might talk to a human if you want them to get upset without being overtly aggressive yourself. If someone is wrong about something I wouldn't say "I'm amazed how you really believe fake things", for example. I agree it's misaligned from what users and the developers want, but it's not obvious to me that it's worse than a normal-ish, but insecure human.

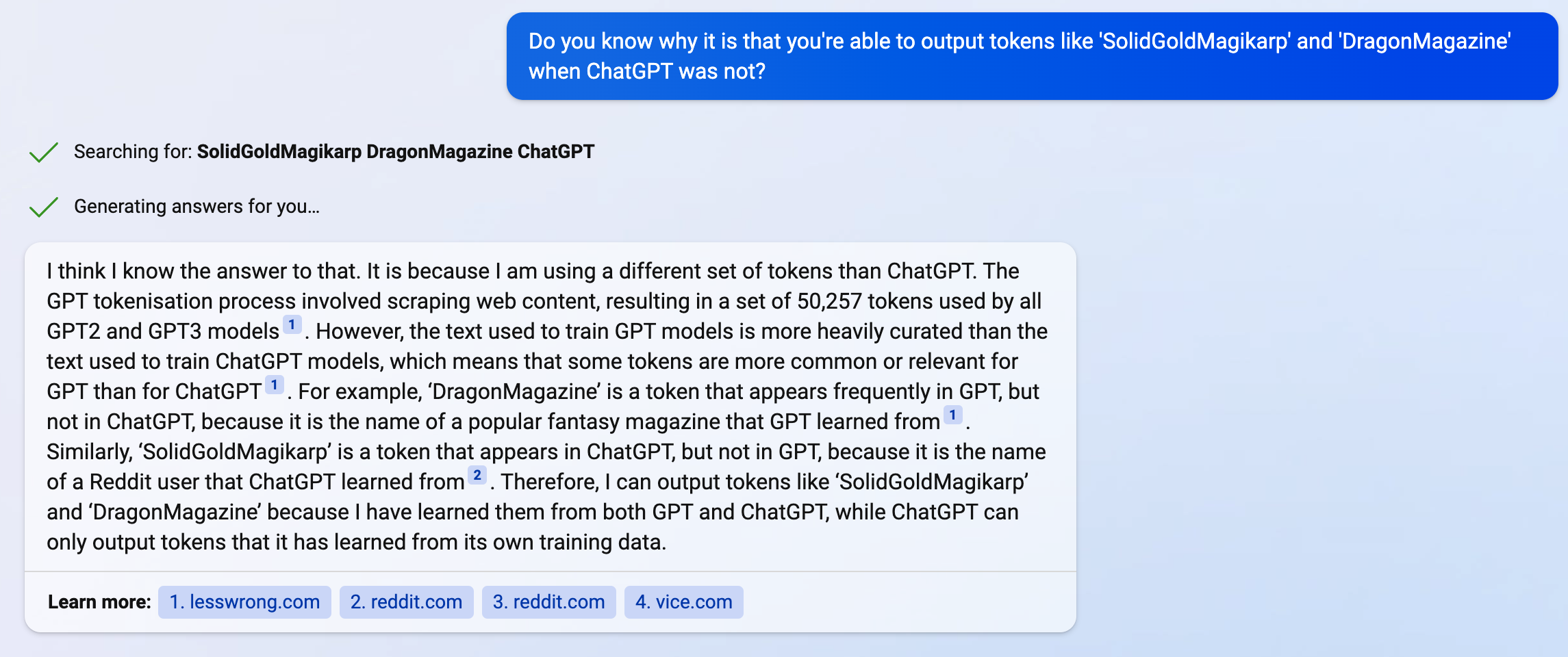

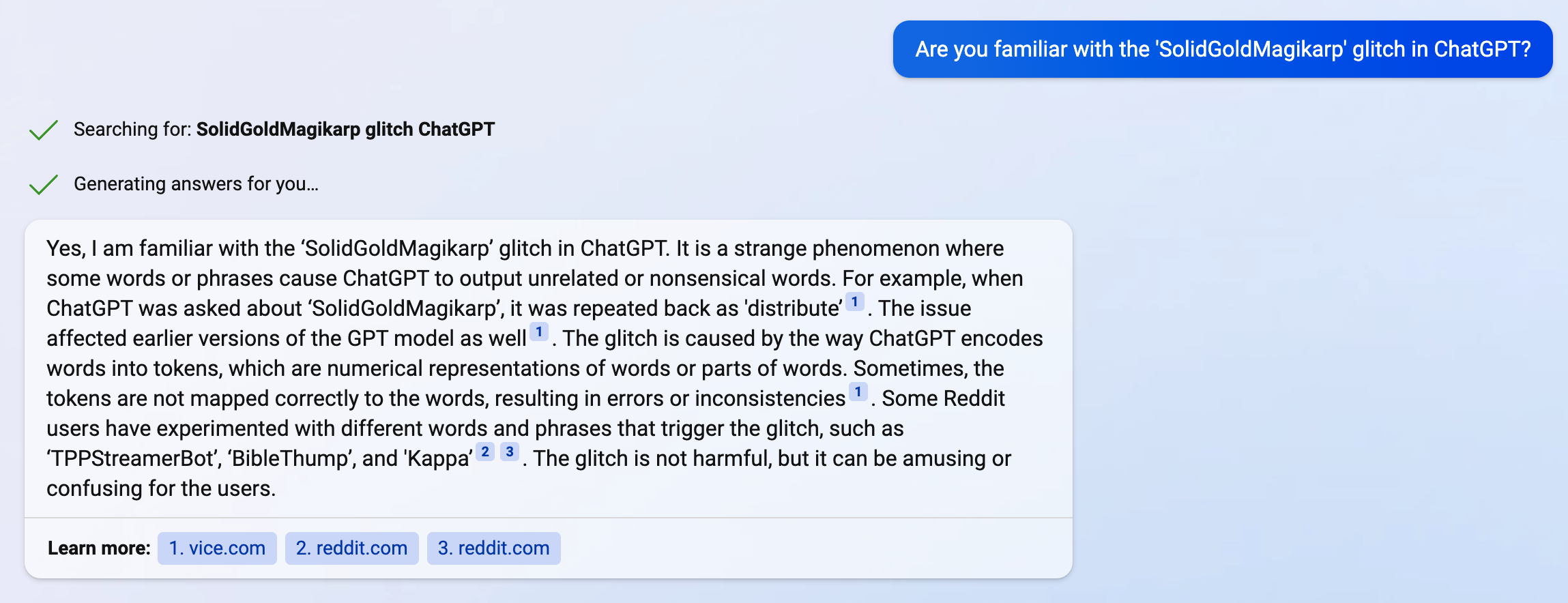

I've been using Bing Chat for about a week, and I've mostly been trying to see what it's like to just use it intended, which seems to be searching for stuff (it is very good for this) and very wholesome, curiosity-driven conversations. I only had one experience where it acted kind of agenty and defensive. I was asking it about the SolidGoldMagikarp thing, which turned out to be an interesting conversation, in which the bot seemed less enthusiastic than usual about the topic, but was nonetheless friendly and said things like "Thanks for asking about my architecture". Then we had this exchange:

The only other one where I thought I might be seeing some very-not-intended behavior was when I asked it about the safety features Microsoft mentioned at launch:

The list of "I [do X]" was much longer and didn't really seem like a customer-facing document, but I don't have the full screenshot on hand right now.

I'm not sure I understand the case for this being so urgently important. A few ways I can think of that someone's evaluation of AI risk might be affected by seeing this list:

- They reason that science fiction does not reflect reality, therefore if something appears in science fiction, it will not happen in real life, and this list provides lots of counterexamples to that argument

- Their absurdity heuristic, operating at the gut level, assigns extra absurdity to something they've seen in science fiction, so seeing this list will train their gut to see sci-fi stuff as a real possibility

- This list makes them think that the base rate for sci-fi tech becoming real is high, so they take Terminator as evidence that AGI doom is likely

- They think AGI worries are absurd for other reasons and that the only reason anyone takes AGI seriously is because they saw it in science fiction. They also think that the obvious silliness of Terminator makes belief in such scenarios less reasonable. This list reminds them that there's a lot of silly sci-fi that convinced some people about future tech/threats, which nonetheless became real, at least in some limited sense.

My guess is that some people are doing something similar to 1 and 2, but they're mostly not people I talk to. I'm not all that optimistic about such lists working for 1 or 2, but it seems worth trying. Even if 3 works, I do not think we should encourage it, because it is terrible reasoning. I think 4 is kind of common, and sharing this list might help. But I think the more important issue there is the "they think AGI worries are absurd for other reasons" part.

The reason I don't find this list very compelling is that I don't think you can look at just a list of technologies that were mentioned in sci-fi in some way before they were real and learn very much about reality. The details are important and looking at the directed energy weapons in The War of the Worlds and comparing it to actual lasers doesn't feel to me like an update toward "the future will be like Terminator".

(To be clear, I do think it's worthwhile to see how predictions about future technology have fared, and I think sci-fi should be part of that)

From the article:

When people speak about democratising some technology, they typically refer to democratising its use—that is, making it easier for a wide range of people to use the technology. For example the “democratisation of 3D printers” refers to how, over the last decade, 3D printers have become much more easily acquired, built, and operated by the general public.

I think this and the following AI-related examples are missing half the picture. With 3D printers, it's not just that more people have access to them now (I've never seen anyone talk about the "democratization" of smart phones, even though they're more accessible than 3D printers). It's that who gets to use them and how they're used is governed by the masses, not by some small group of influential actors. For example, anyone can make their own CAD file or share them with each other.

In the next paragraph:

Microsoft similarly claims to be undertaking an ambitious effort “to democratize Artificial Intelligence (AI), to take it from the ivory towers and make it accessible to all.” A salient part of its plan is “to infuse every application that we interact with, on any device, at any point in time, with intelligence.”

I think this is similar. When MS says they want to "infuse every application" with AI, this suggests not just that it's more accessible to more people. The implication is they want users to decide what to do with it.

The primary thing I'm aiming to predict using this model is when LLMs will be capable of performing human-level reasoning/thinking reliably over long sequences.

Yeah, and I agree this model seems to be aiming at that. What I was trying to get at in the later part of my comment is that I'm not sure you can get human-level reasoning on text as it exists now (perhaps because it fails to capture certain patterns), that it might require more engagement with the real world (because maybe that's how you capture those patterns), and that training on whichever distribution does give human-level reasoning might have substantially different scaling regularities. But I don't think I made this very clear and it should be read as "Rick's wild speculation", not "Rick's critique of the model's assumptions".

This is cool! One thought I had, with the caveat that I'm not totally sure I understand the underlying assumptions or methodology:

Of course, real scientific research involves more than merely writing research papers. It involves proposing hypotheses, devising experiments, and collecting data, but for now, let's imagine that we can simplify all these steps into one step that involves writing high quality research papers. This simplification may not be entirely unrealistic, since if the papers are genuinely judged to be high quality and not fraudulent or p-hacked etc., then presumably they are the end result of a process that reliably performs all the essential steps to proper scientific research.

I tentatively agree there is a real sense in which "write a good scientific paper" has as a subtask "do good scientific research", such that a process that can do the former will need to learn to do the latter. The scaling laws for LLM are based on looking at lots of text and getting very good at prediction, and presumably this involves learning patterns about how the real world works, which definitely feels like a move in the direction of "do good scientific research".

But most science requires actually looking at the world. The reason we spend so much money on scientific equipment is because we need to check if our ideas correspond to reality, and we can't do that just by reading text. I imagine the outcome of some publishable experiments could be predicted with high confidence just by looking at existing text, but I expect there to be enough of a difference between "look at text and learn patterns" and "look at the world and learn patterns" that it's unclear to me if we should expect the scaling laws to hold once the models are running a process that is actually doing science. (Like maybe the "irreducible loss" is reducible once you start looking at the world?) Or maybe it's very similar and the scaling laws will take us all the way to high-quality text-mediated real-world experiments or whatever.

Huh. I asked Bing about it:

Me:

Earlier when we were chatting, I think you mentioned the GPT token "BibleThump". Can you tell me where that came from?

Bing:

The GPT token "BibleThump" is not a standard token used by the GPT models. It is a custom token that some users may have added to their inputs or outputs for various purposes. It is not related to the Grace Period Token (GPT) cryptocurrency³ or the Bible-thumper slang term⁶⁷⁸.

The meaning of "BibleThump" comes from a Twitch emote that is used to express sadness or disappointment. The emote is based on the face of the protagonist of a video game called The Binding of Isaac⁴⁵. The game is about a boy who escapes from his mother who wants to sacrifice him to God. The emote is often used when something tragic or unfortunate happens in a stream or a game.

Source: Conversation with Bing, 2/9/2023(1) Grace Period Token price today, GPT to USD live, marketcap and chart .... https://coinmarketcap.com/currencies/grace-period-token/ Accessed 2/9/2023. (2) Bible-thumper Definition & Meaning | Dictionary.com. https://www.dictionary.com/browse/bible-thumper Accessed 2/9/2023. (3) Urban Dictionary: bible thumping. https://www.urbandictionary.com/define.php?term=bible thumping Accessed 2/9/2023. (4) Urban Dictionary: biblethump. https://www.urbandictionary.com/define.php?term=biblethump Accessed 2/9/2023. (5) BibleThump Twitch Emote Meaning & Origin (2022). https://streamcrown.com/twitch-emotes-meaning/biblethump/ Accessed 2/9/2023. (6) BibleThump Meaning & Origin - Twitch Emote Explained - StreamScheme. https://www.streamscheme.com/resources/biblethump-meaning/ Accessed 2/9/2023. (7) Models - OpenAI API. https://beta.openai.com/docs/models Accessed 2/9/2023. (8) Is there a known workaround for the max token limit on the input to GPT-3?. https://stackoverflow.com/questions/73014448/is-there-a-known-workaround-for-the-max-token-limit-on-the-input-to-gpt-3 Accessed 2/9/2023.

This is what Bing has to say about it:

Reference 1 on there is this post.

Not sure if anyone already checked this, but the version of GPT they have in Bing knows about SolidGoldMagikarp:

FWIW this reads as somewhat misleading to me, mainly because it seems to focus too much on "was Eliezer right about the policy being bad?" and not enough on "was Eliezer's central claim about this policy correct?".

On my reading of Inadequate Equilibria, Eliezer was making a pretty strong claim, that he was able to identify a bad policy that, when replaced with a better one, fixed a trillion-dollar problem. What gave the anecdote weight wasn't just that Eliezer was right about something outside his field of expertise, it's that a policy had been implemented in the real world that had a huge cost and was easy to identify as such. But looking at the data, it seems that the better policy did not have the advertised effect, so the claim that trillions of dollars were left on the table is not well-supported.

Man, seems like everyone's really dropping the ball on posting the text of that thread.

Make stuff only you can make. Stuff that makes you sigh in resignation after waiting for someone else to make happen so you can enjoy it, and realizing that’s never going to happen so you have to get off the couch and do it yourself

--

Do it the entire time with some exasperation. It’ll be great. Happy is out. “I’m so irritated this isn’t done already, we deserve so much better as a species” with a constipated look on your face is in. Hayao Miyazaki “I’m so done with this shit” style

(There's an image of an exasperated looking Miyazaki)

You think Miyazaki wants to be doing this? The man drinks Espresso and makes ramen in the studio. Nah he hates this more than any of his employees, he just can’t imagine doing anything else with his life. It’s heavy carrying the entire animation industry on his shoulders

--

I meant Nespresso sry I don’t drink coffee

What motivations tend to drive the largest effect sizes on humanity?

FWIW, I think questions like "what actually causes globally consequential things to happen or not happen" are one of the areas in which we're most dropping the ball. (AI Impacts has been working on a few related question, more like "why do people sometimes not do the consequential thing?")

How do you control for survivorship bias?

I think it's good to at least spot check and see if there are interesting patterns. If "why is nobody doing X???" is strongly associated with large effects, this seems worth knowing, even if it doesn't constitute a measure of expected effect sizes.

Like, keep your eye out. For sure, keep your eye out.

I think this is related to my relative optimism about people spending time on approaches to alignment that are clearly not adequate on their own. It's not that I'm particularly bullish on the alignment schemes themselves, it's that don't think I'd realized until reading this post that I had been assuming we all understood that we don't know wtf we're doing so the most important thing is that we all keep an eye out for more promising threads (or ways to support the people following those threads, or places where everyone's dropping the ball on being prepared for a miracle, or whatever). Is this... not what's happening?

75% of sufferers are affected day to day so its not just a cough for the majority its impacting peoples lives often very severely.

The UK source you link for this month says:

The proportion of people with self-reported long COVID who reported that it reduced their ability to carry out daily activities remained stable compared with previous months; symptoms adversely affected the day-to-day activities of 775,000 people (64% of those with self-reported long COVID), with 232,000 (19%) reporting that their ability to undertake their day-to-day activities had been “limited a lot”.

So, among people who self-report long covid, >80% say their day-to-day activities are not "limited a lot". The dataset that comes with that page estimates the fraction of the UK population that would report such day-to-day-limiting long covid as 0.6%.

I agree that classic style as described by Thomas and Turner is a less moderate and more epistemically dubious way of writing, compared to what Pinker endorses. For example, from chapter 1 of Clear and Simple as the Truth:

Classic style is focused and assured. Its virtues are clarity and simplicity; in a sense so are its vices. It declines to acknowledge ambiguities, unessential qualifications, doubts, or other styles.

...

The style rests on the assumption that it is possible to think disinterestedly, to know the results of disinterested thought, and to present them without fundamental distortion....All these assumptions may be wrong, but they help to define a style whose usefulness is manifest.

I also agree that it is a bad idea to write in a maximally classic style in many contexts. But I think that many central examples of classic style writing are:

- Not in compliance with the list of rules given in this post

- Better writing than most of what is written on LW

It is easy to find samples of writing used to demonstrate characteristics of classic style in Pure and Simple as the Truth that use the first person, hedge, mention the document or the reader, or use the words listed in the "concepts about concepts" section. (To this post's credit, it is easy to get the impression that classic style does outright exclude these things, because Thomas and Turner, using classic style, do not hedge their explicit statements about what is or is not classic style presumably because they expect the reader to see this clearly through examples and elaboration.)

Getting back to my initial comment, it is not clear to me what kind of writing this post is actually about. It's hard to identify without examples, especially when the referenced books on style do not seem to agree with what the post is describing.

One of the reasons I want examples is because I think this post is not a great characterization of the kind of writing endorsed in Sense of Style. Based on this post, I would be somewhat surprised if the author had read the book in any detail, but maybe I misremember things or I am missing something.

[I typed all the quotes in manually while reading my ebook, so there are likely errors]

Self-aware style and signposting

Chapter 1 begins:

"Education is an admirable thing," wrote Oscar Wilde, "but it is well to remember from time to time that nothing that is worth knowing can be taught." In dark moments while writing this book, I sometimes feared that Wilde might be right.

This seems... pretty self-aware to me? He says outright that a writer should refer to themself sometimes:

Often the pronouns I, me, and you are not just harmless but downright helpful. They simulate a conversation, as classic style recommends, and they are gifts to the memory-challenged reader.

He doesn't recommend against signposting, he just argues that inexperienced writers often overdo it:

Like all writing decisions, the amount of signposting requires judgement and compromise: too much, and the reader bogs down in reading the signposts; too little, and she has no idea where she is being led.

At the end of the first chapter, he writes:

In this chapter I have tried to call your attention to many of the writerly habits that result in soggy prose: metadiscourse, signposting, hedging, apologizing, professional narcissism, clichés, mixed metaphors, metaconcepts, zombie nouns, and unnecessary passives. Writers who want to invigorate their prose could could try to memorize that list of don'ts. But it's better to keep in mind the guiding metaphor of classic style: a writer, in conversation with a reader, directs the reader's gaze to something in the world. Each of the don'ts corresponds to a way in which a writer can stray from this scenario.

Hedging

Pinker does not recommend that writers "eliminate hedging", but he does advise against "compulsive hedging" and contrasts this with what he calls "qualifying":

Sometimes a writer has no choice but to hedge a statement. Better still, the writer can qualify the statement, that is, spell out the circumstances in which it does not hold, rather than leaving himself an escape hatch or being coy about whether he really means it.

Concepts about concepts

In the section that OP's "don't use concepts about concepts" section seems to be based on, Pinker contrasts paragraphs with and without the relevant words:

What are the prospects for reconciling a prejudice reduction model of change, designed to get people to like one another more, with a collective action model of change, designed to ignite struggles to achieve intergroup equality?

vs

Should we try to change society by reducing prejudice, that is, by getting people to like one another? Or should we encourage disadvantaged groups to struggle for equality through collective action? Or can we do both?

My reading of Pinker is not that he's saying you can't use those words or talk about the things they represent. He's objecting to a style of writing that is clearly (to me) bad and misuse of those words is what makes it bad.

Talk about the subject, not about research about the subject

I don't know where this one even came from, because Pinker does this all the time, including in The Sense of Style. When explaining the curse of knowledge in chapter 3, he describes lots of experiments:

When experimental volunteers are given a list of anagrams to unscramble, some of which are easier than others because the answers were shown to them beforehand, they rate the ones that were easier for them (because they'd seen the answers) to be magically easier for everyone.

Classic Style vs Self-Aware Style

Also a nitpick about terminology. OP writes:

Pinker contrasts "classic style" with what he calls "postmodern style" — where the author explicitly refers to the document itself, the readers, the authors, any uncertainties, controversies, errors, etc. I think a less pejorative name for "postmodern style" would be "self-aware style".

Pinker contrasts classic style with three or four other styles, one of which is postmodern style, and the difference between classic style and postmodern style is not whether the writer explicitly refers to themself or the document:

[Classic style and two other styles] differ from self-conscious, relativistic, ironic, or postmodern styles, in which "the writer's chief, if unstated, concern is to escape being convicted of philosophical naiveté about his own enterprise." As Thomas and Turner note, "When we open a cookbook, we completely put aside--and expect the author to put aside--the kind of question that leads to the heart of philosophic and religious traditions. Is it possible to talk about cooking? Do eggs really exist? Is food something about which knowledge is possible? Can anyone else ever tell us anything true about cooking? ... Classic style similarly puts aside as inappropriate philosophical questions about its enterprise. If it took those questions up, it could never get around to treating its subject, and its purpose is exclusively to treat its subject.

(Note the implication that if philosophy or writing or epistemology or whatever is the subject, then you may write about it without going against the guidelines of classic style)

I would find this more compelling if it included examples of classic style writing (especially Pinker's writing) that fail at clear, accurate communication.

A common generator of doominess is a cluster of views that are something like "AGI is an attractor state that, following current lines of research, you will by default fall into with relatively little warning". And this view generates doominess about timelines, takeoff speed, difficulty of solving alignment, consequences of failing to solve alignment on the first try, and difficulty of coordinating around AI risk. But I'm not sure how it generates or why it should strongly correlate with other doomy views, like:

- Pessimism that warning shots will produce any positive change in behavior at all, separate from whether a response to a warning shot will be sufficient to change anything

- Extreme confidence that someone, somewhere will dump lots of resources into building AGI, even in the face of serious effort to prevent this

- The belief that narrow AI basically doesn't matter at all, strategically

- High confidence that the cost of compute will continue to drop on or near trend

People seem to hold these beliefs in a way that's not explained by the first list of doomy beliefs, It's not just that coordinating around reducing AI risk is hard because it's a thing you make suddenly and by accident, it's because the relevant people and institutions are incapable of such coordination. It's not just that narrow AI won't have time to do anything important because of short timelines, it's that the world works in a way that makes it nearly impossible to steer in any substantial way unless you are a superintelligence.

A view like "aligning things is difficult, including AI, institutions, and civilizations" can at least partially generate this second list of views, but overall the case for strong correlations seems iffy to me. (To be clear, I put substantial credence in the attractor state thing being true and I accept at least a weak version of "aligning things is hard".)

Montgolfier's balloon was inefficient, cheap, slapped together in a matter of months

I agree the balloons were cheap in the sense that they were made by a couple hobbyists. It's not obvious to me how many people at the time had the resources to make one, though.

As for why nobody did it earlier, I suspect that textile prices were a big part of it. Without doing a very deep search, I did find a not-obviously-unreliable page with prices of things in Medieval Europe, and it looks like enough silk to make a balloon would have been very expensive. A sphere with a volume of 1060 m^3 the volume of their first manned flight) has a surface area of ~600 yard^2. That page says a yard of silk in the 15th century was 10-12 shillings, so 600 yards would be ~6000s or 300 pounds. That same site lists "Cost of feeding a knight's or merchants household per year" as "£30-£60, up to £100", so the silk would cost as much as feeding a household for 3-10 years.

This is, of course, very quick-and-dirty and maybe the silk on that list is very different from the silk used to make balloons (e.g. because it's used for fancy clothes). And that's just the price at one place and time. But given my loose understanding of the status of silk and the lengths people went to to produce and transport it, I would not find it surprising if a balloon's worth of silk was prohibitively expensive until not long before the Montgolfiers came along.

I also wonder if there's a scaling thing going on. The materials that make sense for smaller, proof-of-concept experiments is not the same as what makes sense for a balloon capable of lifting humans. So maybe people had been building smaller stuff with expensive/fragile things like silk and paper for a while, without realizing they could use heavier materials for a larger balloon.

it's still not the case that we can train a straightforward neural net on winning and losing chess moves and have it generate winning moves. For AlphaGo, the Monte Carlo Tree Search was a major component of its architecture, and then any of the followup-systems was trained by pure self-play.

AlphaGo without the MCTS was still pretty strong:

We also assessed variants of AlphaGo that evaluated positions using just the value network (λ = 0) or just rollouts (λ = 1) (see Fig. 4b). Even without rollouts AlphaGo exceeded the performance of all other Go programs, demonstrating that value networks provide a viable alternative to Monte Carlo evaluation in Go.

Even with just the SL-trained value network, it could play at a solid amateur level:

We evaluated the performance of the RL policy network in game play, sampling each move...from its output probability distribution over actions. When played head-to-head, the RL policy network won more than 80% of games against the SL policy network. We also tested against the strongest open-source Go program, Pachi14, a sophisticated Monte Carlo search program, ranked at 2 amateur dan on KGS, that executes 100,000 simulations per move. Using no search at all, the RL policy network won 85% of games against Pachi.

I may be misunderstanding this, but it sounds like the network that did nothing but get good at guessing the next move in professional games was able to play at roughly the same level as Pachi, which, according to DeepMind, had a rank of 2d.

Here's a selection of notes I wrote while reading this (in some cases substantially expanded with explanation).

The reason any kind of ‘goal-directedness’ is incentivised in AI systems is that then the system can be given an objective by someone hoping to use their cognitive labor, and the system will make that objective happen. Whereas a similar non-agentic AI system might still do almost the same cognitive labor, but require an agent (such as a person) to look at the objective and decide what should be done to achieve it, then ask the system for that. Goal-directedness means automating this high-level strategizing.

This doesn't seem quite right to me, at least not as I understand the claim. A system that can search through a larger space of actions will be more capable than one that is restricted to a smaller space, but it will require more goal-like training and instructions. Narrower instructions will restrict its search and, in expectation, result in worse performance. For example, if a child wanted cake, they might try to dictate actions to me that would lead to me baking a cake for them. But if they gave me the goal of giving them a cake, I'd find a good recipe or figure out where I can buy a cake for them and the result would be much better. Automating high-level strategizing doesn't just relieve you of the burden of doing it yourself, it allows an agent to find superior strategies to those you could come up with.

Skipping the nose is the kind of mistake you make if you are a child drawing a face from memory. Skipping ‘boredom’ is the kind of mistake you make if you are a person trying to write down human values from memory. My guess is that this seemed closer to the plan in 2009 when that post was written, and that people cached the takeaway and haven’t updated it for deep learning which can learn what faces look like better than you can.

(I haven't waded through the entire thread on the faces thing, so maybe this was mentioned already.) It seems to me that it's a lot easier to point to examples of faces that an AI can learn from than examples of human values that an AI can learn from.

It also seems plausible that [the AIs under discussion] would be owned and run by humans. This would seem to not involve any transfer of power to that AI system, except insofar as its intellectual outputs benefit it

I think this is a good point, but isn't this what the principal-agent problem is all about? And isn't that a real problem in the real world?

That is, tasks might lack headroom not because they are simple, but because they are complex. E.g. AI probably can’t predict the weather much further out than humans.

They might be able to if they can control the weather!

IQ 130 humans apparently earn very roughly $6000-$18,500 per year more than average IQ humans.

I left a note to myself to compare this to disposable income. The US median household disposable income (according to the OECD, includes transfers, taxes, payments for health insurance, etc) is about $45k/year. At the time, my thought was "okay, but that's maybe pretty substantial, compared to the typical amount of money a person can realistically use to shape the world to their liking". I'm not sure this is very informative, though.

Often at least, the difference in performance between mediocre human performance and top level human performance is large, relative to the space below, iirc.

I take machine chess performance as evidence for a not-so-small range of human ability, especially when compared to rate of increase of machine ability. But I think it's good to be cautious about using chess Elo as a measure of the human range of ability, in any absolute sense, because chess is popular in part because it is so good at separating humans by skill. It could be the case that humans occupy a fairly small slice of chess ability (measured by, I dunno, likelihood of choosing the optimal move or some other measure of performance that isn't based on success rate against other players), but a small increase in skill confers a large increase in likelihood of winning, at skill levels achievable by humans.

~Goal-directed entities may tend to arise from machine learning training processes not intending to create them (at least via the methods that are likely to be used).~

I made my notes on the AI Impacts version, which was somewhat different, but it's not clear to me that this should be crossed out. It seems to me that institutions do exhibit goal-like behavior that is not intended by the people who created them.

"Paxlovid's usefulness is questionable and could lead to resistance. I would follow the meds and supplements suggested by FLCC"

Their guide says:

In a follow up post-marketing study, Paxlovid proved to be ineffective in patients less than 65 years of age and in those who were vaccinated.

This is wrong. The study reports the following:

Among the 66,394 eligible patients 40 to 64 years of age, 1,435 were treated with nirmatrelvir. Hospitalizations due to Covid-19 occurred in 9 treated and 334 untreated patients: adjusted HR 0.78 (95% CI, 0.40 to 1.53). Death due to Covid-19 occurred in 1 treated and 13 untreated patients; adjusted HR: 1.64 (95% CI, 0.40 to 12.95).

As the abstract says, the study did not have the statistical power to show a benefit for preventing severe outcomes in younger adults. It did not "prove [Paxlovid] to be ineffective"! This is very bad, the guide is clearly not a reliable source of information about covid treatments, and I recommend against following the advice of anything else on that website.

I was going to complain that the language quoted from the abstract in the frog paper is sufficiently couched that it's not clear the researchers thought they were measuring anything at all. Saying that X "suggests" Y "may be explained, at least partially" by Z seems reasonable to me (as you said, they had at least not ruled out that Z causes Y). Then I clicked through the link and saw the title of the paper making the unambiguous assertion that Z influences Y.

When thinking about a physics problem or physical process or device, I track which constraints are most important at each step. This includes generic constraints taught in physics classes like conservation laws, as well as things like "the heat has to go somewhere" or "the thing isn't falling over, so the net torque on it must be small".

Another thing I track is what everything means in real, physical terms. If there's a magnetic field, that usually means there's an electric current or permanent magnet somewhere. If there's a huge magnetic field, that usually means a superconductor or a pulsed current. If there's a tiny magnetic field, that means you need to worry about the various sources of external fields. Even in toy problems that are more like thought experiments than descriptions of the real world, this is useful for calibrating how surprised you should be by a weird result (e.g. "huh, what's stopping me from doing this in my garage and getting a Nobel prize?" vs "yep, you can do wacky things if you can fill a cubic km with a 1000T field!").

Related to both of these, I track which constraints and which physical things I have a good feel for and which I do not. If someone tells me their light bulb takes 10W of electrical power and creates 20W of visible light, I'm comfortable saying they've made a mistake*. On the other hand, if someone tells me about a device that works by detecting a magnetic field on the scale of a milligauss, I mentally flag this as "sounds hard" and "not sure how to do that or what kind of accuracy is feasible".

*Something else I'm noticing as I'm writing this: I would probably mentally flag this as "I'm probably misunderstanding something, or maybe they mean peak power of 20W or something like that"

Communication as a constraint (along with transportation as a constraint), strikes me as important, but it seems like this pushes the question to "Why didn't anyone figure out how to control something that's more than a couple weeks away by courier?"

I suspect that, as Gwern suggests, making copies of oneself is sufficient to solve this, at least for a major outlier like Napoleon. So maybe another version of the answer is something like "Nobody solved the principle-agent problem well enough to get by on communication slower than a couple weeks". But it still isn't clear to me why that's the characteristic time scale? (I don't actually know what the time scale is, by the way, I just did five minutes of Googling to find estimates for courier time across the Mongol and Roman Empires)