Against a General Factor of Doom

post by Jeffrey Heninger (jeffrey-heninger) · 2022-11-23T16:50:04.229Z · 19 comments


Jeffrey Heninger, 22 November 2022

I was recently reading the results of a survey asking climate experts about their opinions on geoengineering. The results surprised me: “We find that respondents who expect severe global climate change damages and who have little confidence in current mitigation efforts are more opposed to geoengineering than respondents who are less pessimistic about global damages and mitigation efforts.”[1] This seems backwards. Shouldn’t people who think that climate change will be bad and that our current efforts are insufficient be more willing to discuss and research other strategies, including intentionally cooling the planet?

I do not know what they are thinking, but I can make a guess that would explain the result: people are responding using a ‘general factor of doom’ instead of considering the questions independently. Each climate expert has a p(Doom) for climate change, or perhaps a more vague feeling of doominess. Their stated beliefs on specific questions are mostly just expressions of their p(Doom).

If my guess is correct, then people first decide how doomy climate change is, and then they use this general factor of doom to answer the questions about severity, mitigation efforts, and geoengineering. I don’t know how people establish their doominess: it might be as a result of thinking about one specific question, or it might be based on whether they are more optimistic or pessimistic overall, or it might be something else. Once they have a general factor of doom, it determines how they respond to specific questions they subsequently encounter. I think that people should instead decide their answers to specific questions independently, combine them to form multiple plausible future pathways, and then use these to determine p(Doom). Using a model with more details is more difficult than using a general factor of doom, so it would not be surprising if few people did it.

To distinguish between these two possibilities, we could ask people a collection of specific questions that are all doom-related, but are not obviously connected to each other. For example:

- How much would the Asian monsoon weaken with 1°C of warming?

- How many people would be displaced by a 50 cm rise in sea levels?

- How much carbon dioxide will the US emit in 2040?

- How would vegetation growth be different if 2% of incoming sunlight were scattered by stratospheric aerosols?

If the answers to all of these questions were correlated, that would be evidence for people using a general factor of doom to answer these questions instead of using a more detailed model of the world.

I wonder if a similar phenomenon could be happening in AI Alignment research.[2]

We can construct a list of specific questions that are relevant to AI doom:

- How long are the timelines until someone develops AGI?

- How hard of a takeoff will we see after AGI is developed?

- How fragile are good values? Are two similar ethical systems similarly good?

- How hard is it for people to teach a value system to an AI?

- How hard is it to make an AGI corrigible?

- Should we expect simple alignment failures to occur before catastrophic alignment failures?

- How likely is human extinction if we don’t find a solution to the Alignment Problem?

- How hard is it to design a good governance mechanism for AI capabilities research?

- How hard is it to implement and enforce a good governance mechanism for AI capabilities research?

I don’t have any good evidence for this, but my vague impression is that many people’s answers to these questions are correlated.

It would not be surprising if some pairs of these questions really ought to be correlated. Different people would likely disagree on which things ought to be correlated. For example, Paul Christiano seems to think that short timelines and fast takeoff speeds are anti-correlated.[3] Someone else might categorize these questions as ‘AGI is simple’ vs. ‘Aligning things is hard’ and expect correlations within but not between these categories. People might also disagree on whether people and AGI will be similar (so aligning AGI and aligning governance are similarly hard) or very different (so teaching AGI good values is much harder than teaching people good values). Given all of these competing arguments, it would be surprising if beliefs across all of these questions were correlated. If they were, it would suggest that a general factor of doom is driving people’s beliefs.

There are several biases which seem to be related to the general factor of doom. The halo effect (or horns effect) is when a single good (or bad) belief about a person or brand causes someone to believe that that person or brand is good (or bad) in many other ways.[4] The fallacy of mood affiliation is when someone’s response to an argument is based on how the argument impacts the mood surrounding the issue, instead of on the argument itself.[5] The general factor of doom is a more specific bias, and feels less like an emotional response: people have detailed arguments describing why the future will be maximally or minimally doomy. The futures described are plausible, but given how much disagreement there is, it would be surprising if attention were focused on only a few plausible futures, and if those futures made similarly doomy predictions for many specific questions.[6] I am also reminded of Beware Surprising and Suspicious Convergence, although it focuses more on beliefs that aren’t updated when someone’s worldview changes, rather than on beliefs within a worldview that are surprisingly correlated.[7]

The AI Impacts survey[8] is probably not relevant to determining if AI safety researchers have a general factor of doom. The survey was of machine learning researchers, not AI safety researchers. I spot-checked several random pairs of doom-related questions[9] anyway, and they didn’t look correlated. I’m not sure whether to interpret this to mean that they are using multiple detailed models or that they don’t even have a simple model.

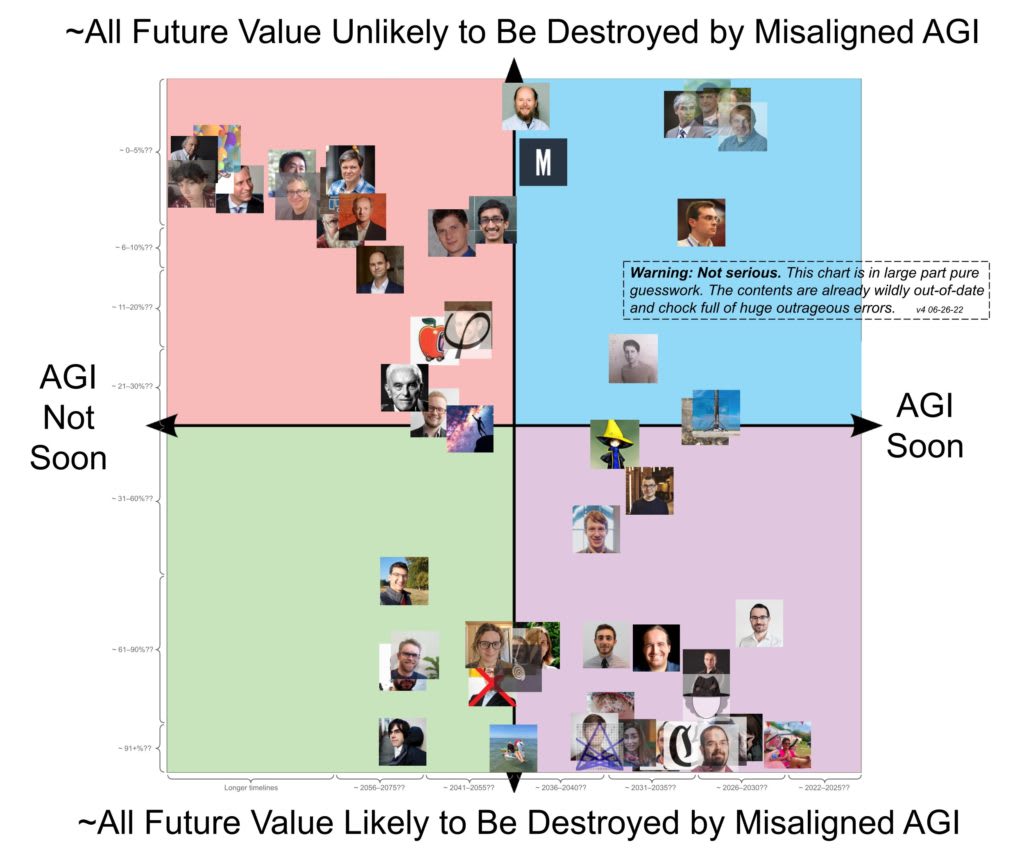

There is also this graph,[10] which claims to be “wildly out-of-date and chock full of huge outrageous errors.” This graph seems to suggest some correlation between two different doom-related questions, and that the distribution is surprisingly bimodal. If we were to take this more seriously than we probably should, we could use it as evidence for a general factor of doom, and that most people’s p(Doom) is close to 0 or 1.[11] I do not think that this graph is particularly strong evidence even if it is accurate, but it does gesture in the same direction that I am pointing at.

It would be interesting to do an actual survey of AI safety researchers, with more than just two questions, to see how closely all of the responses are correlated with each other. It would also be interesting to see whether doominess in one field is correlated with doominess in other fields. I don’t know whether this survey would show evidence for a general factor of doom among AI safety researchers, but it seems plausible that it would.

I do not think that using a general factor of doom is the right way to approach doom-related questions. It introduces spurious correlations between specific questions that should not be associated with each other. It restricts which plausible future pathways we focus on. The future of AI, or climate change, or public health, or any other important problem, is likely to be surprisingly doomy in some ways and surprisingly tractable in others.[12] A general factor of doom is too simple to use as an accurate description of reality.

Notes

[1] Dannenberg & Zitzelsberger. Climate experts’ views on geoengineering depend on their beliefs about climate change impacts. Nature Climate Change 9 (2019), pp. 769-775. https://www.nature.com/articles/s41558-019-0564-z.

[2] Public health also seems like a good place to check for a general factor of doom.

[3]

[4]

[5]

[6] This suggests a possible topic for a vignette. Randomly choose 0 (doomy) or 1 (not doomy) for each of the nine questions I listed. Can you construct a plausible future for the development of AI using this combination?

[7]

[8]

[9] I looked at (1) the probability of the rate of technological progress being 10x greater after 2 years of HLAI vs the year when people thought that the probability of HLAI is 50%, (2) the probability of there being AI vastly smarter than humans within 2 years of HLAI vs the probability of having HLAI within the next 20 years, and (3) the probability of human extinction because we lose control of HLAI vs the probability of having HLAI within the next 10 years.

[10]

[11] There is some discussion of why there is a correlation between these two particular questions here: https://twitter.com/infinirien/status/1537859930564218882. To actually see a general factor of doom, I would want to look at more than two questions.

[12] During the pandemic, I was surprised to learn that it only took 2 days to design a good vaccine – and I was surprised at how willing public health agencies were to follow political considerations instead of probabilistic reasoning. My model of the world was not optimistic enough about vaccine development and not doomy enough about public health agencies. I expect that my models of other potential crises are in some ways too doomy and in other ways not doomy enough.

19 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-11-23T21:14:06.876Z

I'm with you in spirit but in practice I feel the need to point out that timelines, takeoff speeds, and p(doom) really should be heavily correlated. I'm actually a bit surprised people's views on them aren't more correlated than they already are. (See this poll and this Metaculus question.)

Slower takeoff causes shorter timelines, via R&D acceleration. Moreover slower takeoff correlates with longer timelines, because timelines are mainly a function of training requirements (a.k.a. AGI difficulty) and the higher the training requirements the more gradually we'll cross the distance from here to there.

And shorter timelines and faster takeoff both make p(doom) higher in a bunch of obvious ways -- less time for the world to prepare, less time for the world to react, less time for failures/mistakes/bugs to be corrected, etc.

↑ comment by Richard Korzekwa (Grothor) · 2022-11-23T22:20:45.037Z

A common generator of doominess is a cluster of views that are something like "AGI is an attractor state that, following current lines of research, you will by default fall into with relatively little warning". And this view generates doominess about timelines, takeoff speed, difficulty of solving alignment, consequences of failing to solve alignment on the first try, and difficulty of coordinating around AI risk. But I'm not sure how it generates or why it should strongly correlate with other doomy views, like:

- Pessimism that warning shots will produce any positive change in behavior at all, separate from whether a response to a warning shot will be sufficient to change anything

- Extreme confidence that someone, somewhere will dump lots of resources into building AGI, even in the face of serious effort to prevent this

- The belief that narrow AI basically doesn't matter at all, strategically

- High confidence that the cost of compute will continue to drop on or near trend

People seem to hold these beliefs in a way that's not explained by the first list of doomy beliefs. It's not just that coordinating around reducing AI risk is hard because it's a thing you make suddenly and by accident, it's because the relevant people and institutions are incapable of such coordination. It's not just that narrow AI won't have time to do anything important because of short timelines, it's that the world works in a way that makes it nearly impossible to steer in any substantial way unless you are a superintelligence.

A view like "aligning things is difficult, including AI, institutions, and civilizations" can at least partially generate this second list of views, but overall the case for strong correlations seems iffy to me. (To be clear, I put substantial credence in the attractor state thing being true and I accept at least a weak version of "aligning things is hard".)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-11-23T22:25:03.094Z

Well said. I might quibble with some of the details but I basically agree that the four you list here should theoretically be only mildly correlated with timelines & takeoff views, and that we should try to test how much the correlation is in practice to determine how much of a general doom factor bias people have.

↑ comment by Algon · 2022-11-23T21:23:04.810Z

Slower takeoff causes shorter timelines ... Moreover slower takeoff correlates with longer timelines

Uh, unless I'm quite confused, you mean faster takeoff causes shorter timelines. Right?

↑ comment by sanxiyn · 2022-11-23T21:42:33.399Z

No. I think it is indeed the usual wisdom that slower takeoff causes shorter timelines. I found Paul Christiano's argument linked in the article pretty convincing.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-11-23T22:22:08.486Z

Correct.

comment by Ape in the coat · 2022-11-25T12:53:01.983Z

I think this has less to do with the general factor of doom and more with priming on a specific preferable solution.

Experts who think our efforts to fight climate change are insufficient usually have an actual idea of what policy we need to implement instead. They think we need to implement this idea, and they treat all the potential damages as a reason to implement it. Thus alternative ideas such as geoengineering compete with their idea. And as they prefer their idea to geoengineering, they tend not to support geoengineering.

And yes, I think there is a similar thing going on with AI safety. People have a solution they prefer (a group of researchers cracking the hard technical problem of alignment), and thus they are pessimistic about alternative approaches such as regulating the industry.

↑ comment by sanxiyn · 2022-11-26T06:40:49.520Z

I am not aware of any single policy that can solve climate change by itself. What policy do these experts support? Let's say it is to eliminate all coal power stations in the world by magic. That is at best 20% of global emissions, so isn't that policy synergistic with geoengineering? For your favorite policy to compete with geoengineering, it would have to be capable of solving 100% of the problem, and I am not aware of any such policy whatsoever.

↑ comment by Ape in the coat · 2022-11-26T15:00:27.154Z

Doesn't have to be a singular thing. The policy may consist of multiple ideas, this doesn't change the reasoning.

Geoengineering as an approach competes with reducing carbon emissions as an approach, in the sense that the more effective geoengineering is, the less important it is to reduce carbon emissions. If you believe that reducing carbon emissions is very important, you naturally believe that geoengineering isn't very effective. Mind you, this doesn't even have to be faulty reasoning.

comment by leogao · 2022-11-23T21:25:33.930Z

I can't speak for anyone else, but for me at least, the answers to most of your questions correlate because of my underlying model. However, it seems like correlation on most of these questions (not just a few pairs) is to be expected, as many of them probe a similar underlying model from a few different directions. Just because there are lots of individual arguments about various axes does not immediately imply that any, or even most, configurations of beliefs on those arguments are coherent. In fact, in a very successful truth-finding system we should also expect convergence on most axes of argument, with only a few cruxes remaining.

My answers to the questions:

1. I lean towards short timelines, though I have lots of uncertainty.

2. I mostly operate under the assumption of fast takeoffs. I think "slower" takeoffs (months to low years) are plausible but I think even in this world we're unlikely to respond adequately and that iterative approaches still fail.

3. I expect values to be fragile. However, my model of how things go wrong depends on a much weaker version of this claim.

4. I don't expect teaching a value system to an AGI to be hard or relevant to the reasons why things might go wrong.

5. I expect corrigibility to be hard.

6. Yes (depending on the definition of "simple", they already happen), but I don't expect it to update people sufficiently.

7. >90%, if you condition on alignment not being solved (which also precludes alignment being solved because it's easy).

8. If you factor out the feasibility of implementation/enforcement, then merely designing a good governance mechanism is relatively easy. However, I'm not sure why you would ever want to do this factorization.

9. I expect implementation/enforcement to be hard.

↑ comment by sanxiyn · 2022-11-23T21:44:03.584Z

What do you think of Paul Christiano's argument that short timeline and fast takeoff is anti-correlated?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-11-23T22:29:06.305Z

Insofar as that's what he thinks, he's wrong. Short timelines and fast takeoff are positively correlated. However, he argues (correctly) that slow takeoff also causes short timelines, by accelerating R&D progress sooner than it otherwise would have (for a given level of underlying difficulty-of-building-AGI). This effect is not strong enough in my estimation (or in Paul's if I recall from conversations*) to overcome the other reasons pushing timelines and takeoff to be correlated.

*I think I remember him saying things like "Well yeah obviously if it happens by 2030 it's probably gonna be a crazy fast takeoff."

↑ comment by Quadratic Reciprocity · 2022-11-24T15:54:11.410Z

did you mean "short timelines" in your third sentence?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-11-24T20:24:00.343Z

Ooops yeah sorry typo will fix

comment by Dagon · 2022-11-23T22:04:15.718Z

I think a lot of people (including experts, sometimes) have a subconscious or semi-legible karma or external-judgement quasi-religious aspect to their beliefs too.

Humans caused this with their greed and shortsightedness and unwillingness to give proper respect to the right beliefs. Thus, there's no possible human ingenuity or trickery that can avoid it, that's just more hubris.

comment by Noosphere89 (sharmake-farah) · 2023-12-08T01:04:49.413Z

A nice write-up of something that actually matters, specifically the fact that so many people rely on a general factor of doom to answer questions.

While I think there is reason to expect correlation between these factors, and a very weak form of the General Factor of Doom is plausible, overall I like the point that people probably rely on a General Factor of Doom too much, and the post offers some explanations of why.

Overall, I'd give this a +1 or +4. A reasonably useful post that talks about a small thing pretty competently.

comment by Patrice Ayme (patrice-ayme) · 2022-11-25T01:42:01.635Z

Realistic Cynicism In Climate Science:

Apparent paradox: the more scientists worry about “climate change”, the less they believe in geoengineering. They also are more inclined to geoengineering when the impact gets personal. It actually makes rational sense.

People who are more realistic see the doom of the greenhouse gas (GHG) crisis: all-time high CO2 production, all-time high coal burning, the activation of tipping points, such as collapsing ice shelves, acceleration of ice streams, generalized burning of forests, peat and even permafrost combusting under the snow through winter, oceanic dead zones, 6th mass extinction, etc. They also observe that we are on target for a further rise of many degrees Celsius by 2100 in the polar regions, where global heating has the most impact. And they see the silliness of massive efforts in fake solutions such as making more than 100 million cars a year running on huge (half a ton) batteries... or turning human food into fuel for combustion.

The same realism shows cogent and honest climate scientists that there is no plausible geoengineering solution which could be deployed in the next few decades. It's all rationally coherent.

But then when they are told that sea level rise, or drought, or floods, or storm, or massive fires and vegetation dying out, will become catastrophic in their neighborhood, the same people get desperate and ready to use anything. This “suggests that the climate experts’ support for geoengineering will increase over time, as more regions are adversely affected and more experts observe or expect damages in their home country.”

However, that would be a moral and intellectual failure, at least in the case of solar geoengineering. Only outright removing CO2 from the atmosphere is acceptable (but we don’t have the tech on the mass scale necessary; we will, only when we have thermonuclear fusion reactors, by just freezing the CO2 out and stuffing it in basalt).

↑ comment by Lao Mein (derpherpize) · 2022-11-25T03:02:30.790Z

Epistemic status: ~30% sophistry

There's a difference between thinking something won't work in practice and opposing it. The paper examines opposition. As in, taking steps to make geoengineering less likely.

My personal suspicion is that the more plausible a climate scientist thinks geoengineering is, the more likely they are to oppose it, not the other way around. Just like climate modeling for nuclear famine isn't actually about accurate climate modeling and finding ways to mitigate deaths from starvation (it's about opposition to nuclear war), I suspect that a lot of global-warming research is more about opposing capitalism/technology/greed/industrial development than it is about finding practical ways to mitigate the damage.

This is because these fields are about using how bad their catastrophe is to shape policy. Mitigation efforts hamper that. Therefore, they must prevent mitigation research. There is a difference between thinking about a catastrophe as an ideological tool, in which case you actively avoid talking about mitigation measures and actively sabotage mitigation efforts, and thinking about it as a problem to be solved, in which case you absolutely invest in damage mitigation. Most nuclear famine and global warming research absolutely seems like the former, while AI safety still looks like the latter.

AI alignment orgs aren't trying to sabotage mitigation (prosaic alignment?). The people working on, for example, interpretability and value alignment, might view prosaic alignment as ineffective, but they aren't trying to prevent it from taking place. Even those who want to slow down AI development aren't trying to stop prosaic alignment research. Despite the surface similarities, there are fundamental differences between the fields of climate change and AI alignment research.

I vaguely remember reading a comment on LessWrong about how the anti-geoengineering stance is actually 3D chess to force governments to reduce carbon emissions by removing mitigation as an option. But the most likely result is just that poor nations like Bangladesh suffer humanitarian catastrophes while the developed world twiddles its thumbs. I don't think there is any equivalent in the field of AI safety.

comment by Noah Scales · 2022-11-24T07:52:30.854Z

Correlations among AGI doomer predictions could reveal common AI Safety milestones

I am curious about how considerations of overlaps could lead to a list of milestones for positive results in AI safety research. If enough exit points from pathways to AI doom are available through AI Safety improvements, a catalog of those improvements might be visible in models of correlations among AI Safety researchers' predictions about AGI doom of various sorts.

But as a sidenote, here's my response to your mention of climate researchers thinking in terms of P(Doom).

Commonalities among climate scientists that are pessimistic about climate change and geo-engineering

Doubts held by pessimistic climate scientists about future climate plausibly include:

- countries meeting climate commitments (they haven't so far and won't in future).

- tipping elements (e.g., Amazon) remaining stable this century (several are projected to tip this century).

- climate models having resolution and completeness sufficient for atmospheric geo-engineering (none do).

- politics of geo-engineering staying amiable (plausibly not if undesirable weather effects occur).

- GAST < 1.5C this century (GAST is predicted to rise higher this century).

Common traits among more vocal climate scientists forecasting climate destruction could include:

- applying the precautionary principle.

- rejecting some economic models of climate change impacts.

- liking the idea of geo-engineering with marine cloud brightening in the Arctic.

- disliking atmospheric aerosol injection over individual countries.

- agreeing with (hypothetical) DACCS or BECCS that is timely and scales well.

- worrying about irreversible tipping element changes such as sea level rise from Greenland ice melt.

NOTE: I see that commonality through my own browsing of literature and observations of trends among vocal climate scientists, but my list is not the result of any representative survey.

Climate scientist concern over a climate emergency contrasts with prediction of pending catastrophe

There are 13,000 scientist signatories on a statement declaring a climate emergency. I think that shows concern (if not pessimism) from a vocal group of scientists about our current situation.

However, if climate scientists are asked to forecast P(Doom), the forecasts will vary depending on what scientists think about:

- what countries and people will actually do as time goes on.

- how much time is available to limit GHG production.

- whether the economic and societal changes are suitable, or still pending, as climate change effects grow.

- how much time is required to implement effective geo-engineering.

- whether the technological response is suitable to prevent, adapt to, or mitigate climate change consequences.

A different question is how climate scientists might backcast not_Doom or P(Doom) < low_value. A comparison of the scenarios they describe could show interesting differences in worldview.