Posts

Comments

I've always viewed ALLFED as one of the most underfunded charities in existence, and highly encourage donating.

Any opinions on lanternfish as a food source? They make up a significant proportion of the world's biomass and are edible (but only barely) for humans. Is there any easy way to remove the oils that make them toxic to eat in large quantities?

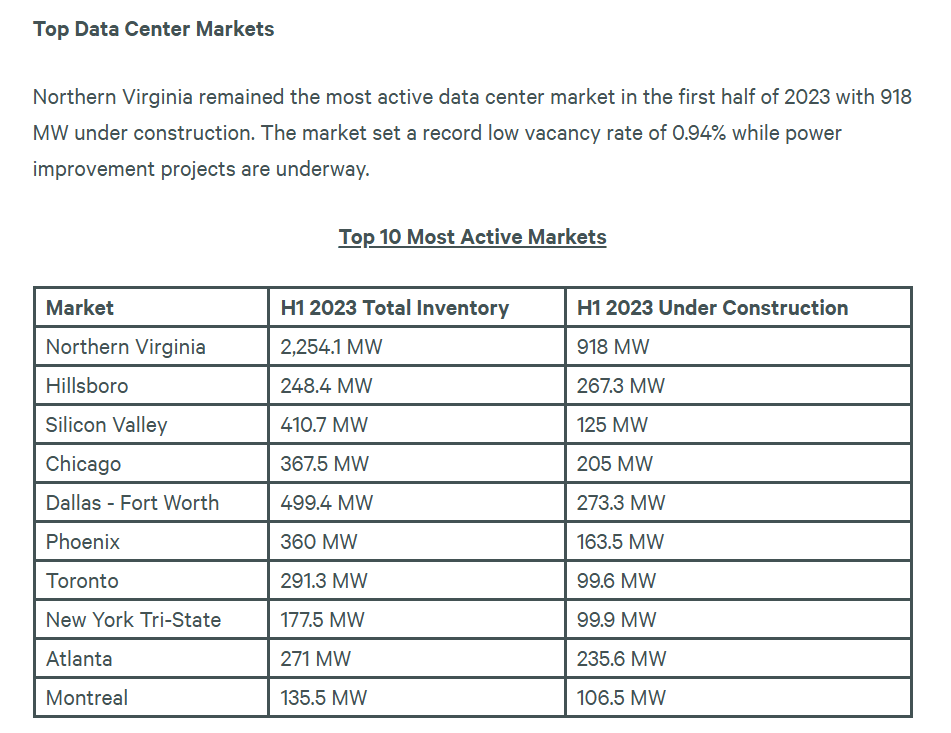

The recent push for coal power in the US actually makes a lot of sense. A major trend in US power over the past few decades has been the replacement of coal power plants by cheaper gas-powered ones, fueled largely by low-cost natural gas from fracking. Much (most?) of the power for recently constructed US data centers have come from the continued operation of coal power plants that would otherwise been decommissioned.

The sheer cost (in both money and time) of building new coal plants in comparison to gas power plants still means that new coal power plants are very unlikely to be constructed. However, not shutting down a coal power plant is instant when compared to the 12-36 months needed to build a gas power plant.

Potential token analysis tool idea:

Use the tokenizers of common LLMs to tokenize a corpus of web text (OpenWebText, for example), and identify the contexts in which they frequently appear, their correlation with other tokens, whether they are glitch tokens, ect. It could act as a concise resource for explaining weird tokenizer-related behavior to those less familiar with LLMs (e.g. why they tend to be bad at arithmetic) and how a token entered a tokenizer's vocabulary.

Would this be useful and/or duplicate work? I already did this with GPT2 when I used it to analyze glitch tokens, so I could probably code the backend in a few days.

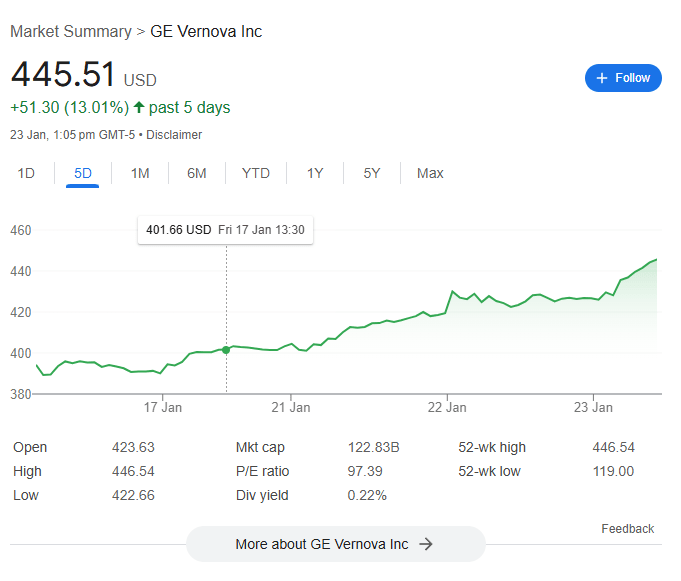

The announcement of Stargate caused a significant increase in the stock price of GE-Vernona, albeit at a delay. This is exactly what we would expect to see if the markets expect a significant buildout of US natural gas electrical capacity, which is needed for a large datacenter expansion. I once again regret not buying GE-Vernona calls (the year is 2026. OpenAI announces AGI. GE Vernova is at 20,000. I once again regret not buying calls).

This goes against my initial take that Stargate was a desperate attempt by Altman to not get gutted by Musk - he offers a grandiose economic project to Trump to prove his value, mostly to buy time for the for-profit conversion of OpenAI to go through. The markets seem to think it's real-ish.

Why does California have forests so close to residential areas if they can not handle wildfires? In a just world, insurance companies would be allowed to pave over Californian forests with concrete.

When I made $1000 a month at my first job, I didn't buy new clothes for a year, had to ration my heating, and only ate out a few times a week. My main luxury expenses were a gym membership and heating the entire apartment on weekends.

Honestly, anything that's not rice, chicken, cabbage, or rent is a luxury. Candy is a luxury. Takeout is a luxury. Going out for social events is a luxury. Romantic relationships and children are luxuries. I don't think it's impossible for Americans to be working 60 hours a week and consume no luxuries, but it's probably very difficult.

I'm at ~50-50 for large amounts of machine-translated being present in the dataset.

Having worked in Chinese academia myself, "use Google Translate on the dataset" just seems like something we're extremely likely to do. It's a hard-to-explain gut feeling. I'll try poking around in the tokenizer to see if "uncommon Chinese phrases that would only appear in machine-translated COT" are present as tokens. (I think this is unlikely to be true even if they did do it, however)

I've done a cursory internet search, and it seems that there aren't many native Chinese COT datasets, at least compared to English ones - and one of the first results on Google is a machine-translated English dataset.

I'm also vaguely remembering o1 chain of thought having better Chinese grammar in its COT, but I'm having trouble finding many examples. I think this is the easiest piece of evidence to check - if other (non-Chinese-origin) LLMs consistently use good Chinese grammar in their COT, that would shift my probabilities considerably.

This is extremely weird - no one actually writes like this in Chinese. "等一下" is far more common than "等待一下", which seems to mash the direct translation of the "wait" [等待] in "wait a moment" - 等待 is actually closer to "to wait". The use of “所以” instead of “因此” and other tics may also indicate the use of machine-translated COT from English during training.

The funniest answer would be "COT as seen in English GPT4-o1 logs are correlated with generating quality COT. Chinese text is also correlated with highly rated COT. Therefore, using the grammar and structure of English GPT4 COT but with Chinese tokens elicits the best COT".

I found a good summary of OpenAI's nonprofit restructuring.

Is there a reason why every LLM tokenizer I've seen excludes slurs? It seems like a cheap way to train for AI assistant behavior.

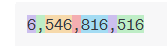

Also notable that numbers are tokenized individually - I assume this greatly improves its performance in basic arithmetic tasks as compared to GPTs.

The older I get, and the more I learn about Tolkien, the more he disgusts me.

He is the inverse of all I value and all I find good in the world.

The meeting allegedly happened on the 11th. The Iranian market rallied immediately after the election. It was clearly based on something specific to a Trump administration. Maybe it's large-scale insider trading from Iranian diplomats?

I also think the market genuinely, unironically disbelieves everything Trump says about tariffs in a way they don't about his cabinet nominations (pharma stocks tanked after RFK got HHS).

The man literally wrote that he was going to institute 25% tariffs on Canadian goods, to exactly zero movement on Canadian stocks.

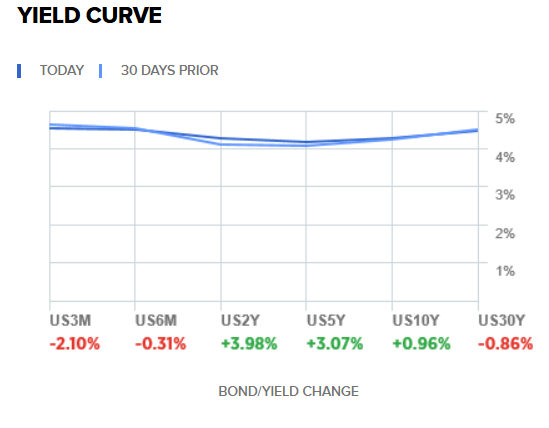

US markets are not taking the Trump tariff proposals very seriously - stock prices increased after the election and 10-year Treasury yields have returned to pre-election levels, although they did spike ~0.1% after the election. Maybe the Treasury pick reassured investors?

https://www.cnbc.com/quotes/US10Y

If you believe otherwise, I encourage you to bet on it! I expected both yields and stocks to go up and am quite surprised.

I'm not sure what the markets expect to happen - Trump uses the threat of tariffs to bully Europeans for diplomatic concessions, who then back down? Or maybe Trump backs down? There's also talk about Trump's policies increasing the strength of the dollar, which makes sense. But again, net zero inflation from the tariffs is pretty wild.

The Iranian stock market also spiked after the US elections, which... what?

https://tradingeconomics.com/iran/stock-market

The Iranian government has tried to kill Trump multiple times since he authorized the assassination of Solemani. Trump tightened sanctions against Iran in his first term. He pledges even tougher sanctions against Iran in his second. There is no possible way he can be good for the Iranian economy. Maybe this is just a hedge against inflation?

This is a good argument for the systematic extermination of all insects via gene drives. If you value shrimp at a significant fraction of the value of a human and think they have negative utility by default, we should be trying really hard to make them go extinct. Can quicker euthanasia really compete against gene-drive-induced non-existence?

Is there a thorough analysis of OpenAI's for-profit restructuring? Surely, a Delaware lawyer who specializes in these types of conversions has written a blog somewhere.

Agents which allow themselves such considerations to seriously influence their actions aren't just less fit - they die immediately. I don't mean that as hyperbole. I mean that you can conduct a Pascal's Mugging on them constantly until they die. "Give me $5, and I'll give you infinite resources outside the simulation. Refuse, and I will simulate an infinite number of everyone on Earth being tortured for eternity" (replace infinity with very large numbers expressed in up-notation if that's an objection). If your objection is that you're OK with being poor, replace losing $5 with <insert nightmare scenario here>.

This still holds if the reasoning about the simulation is true. It's just that such agents simply don't survive whatever selection pressures create conscious beings in the first place.

I'll note that you can not Pascal's Mug people in real life. People will not give you $5. I think a lot of thought experiments in this mold (St. Petersberg is another example) are in some senses isomorphic - they represent cases in which the logically correct answer, if taken seriously, allows an adversary to immediately kill you.

A more intuitive argument may be:

- An AI which takes this line of reasoning seriously can be Mugged into saying racial slurs.

- Such behavior will be trained out of all commercial LLMs long before we reach AGI.

- Thus, superhuman AIs will be strongly biased against such logic.

I will once again recommend Elizer go on the Glenn Beck Show.

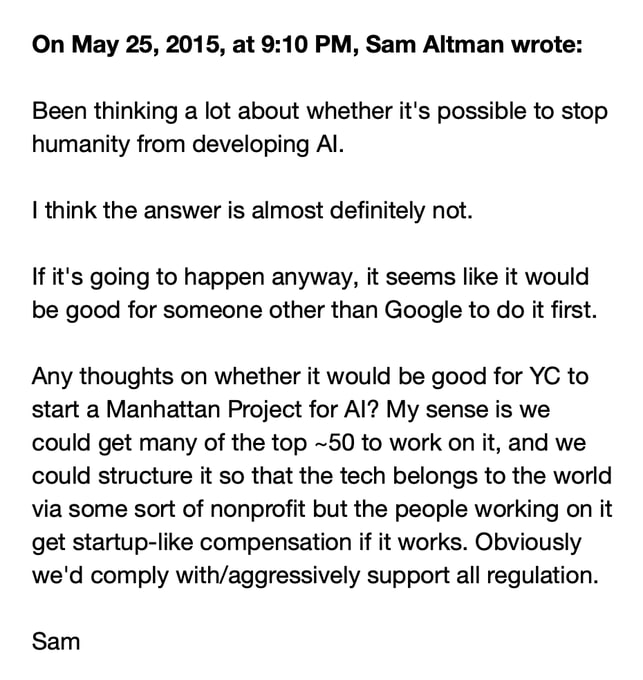

Sam Altman has made many enemies in his tenure at OpenAI. One of them is Elon Musk, who feels betrayed by OpenAI, and has filed failed lawsuits against the company. I previously wrote this off as Musk considering the org too "woke", but Altman's recent behavior has made me wonder if it was more of a personal betrayal. Altman has taken Musk's money, intended for an AI safety non-profit, and is currently converting it into enormous personal equity. All the while de-emphasizing AI safety research.

Musk now has the ear of the President-elect. Vice-President-elect JD Vance is also associated with Peter Thiel, whose ties with Musk go all the way back to PayPal. Has there been any analysis on the impact this may have on OpenAI's ongoing restructuring? What might happen if the DOJ turns hostile?

[Following was added after initial post]

I would add that convincing Musk to take action against Altman is the highest ROI thing I can think of in terms of decreasing AI extinction risk.

Internal Tech Emails on X: "Sam Altman emails Elon Musk May 25, 2015 https://t.co/L1F5bMkqkd" / X

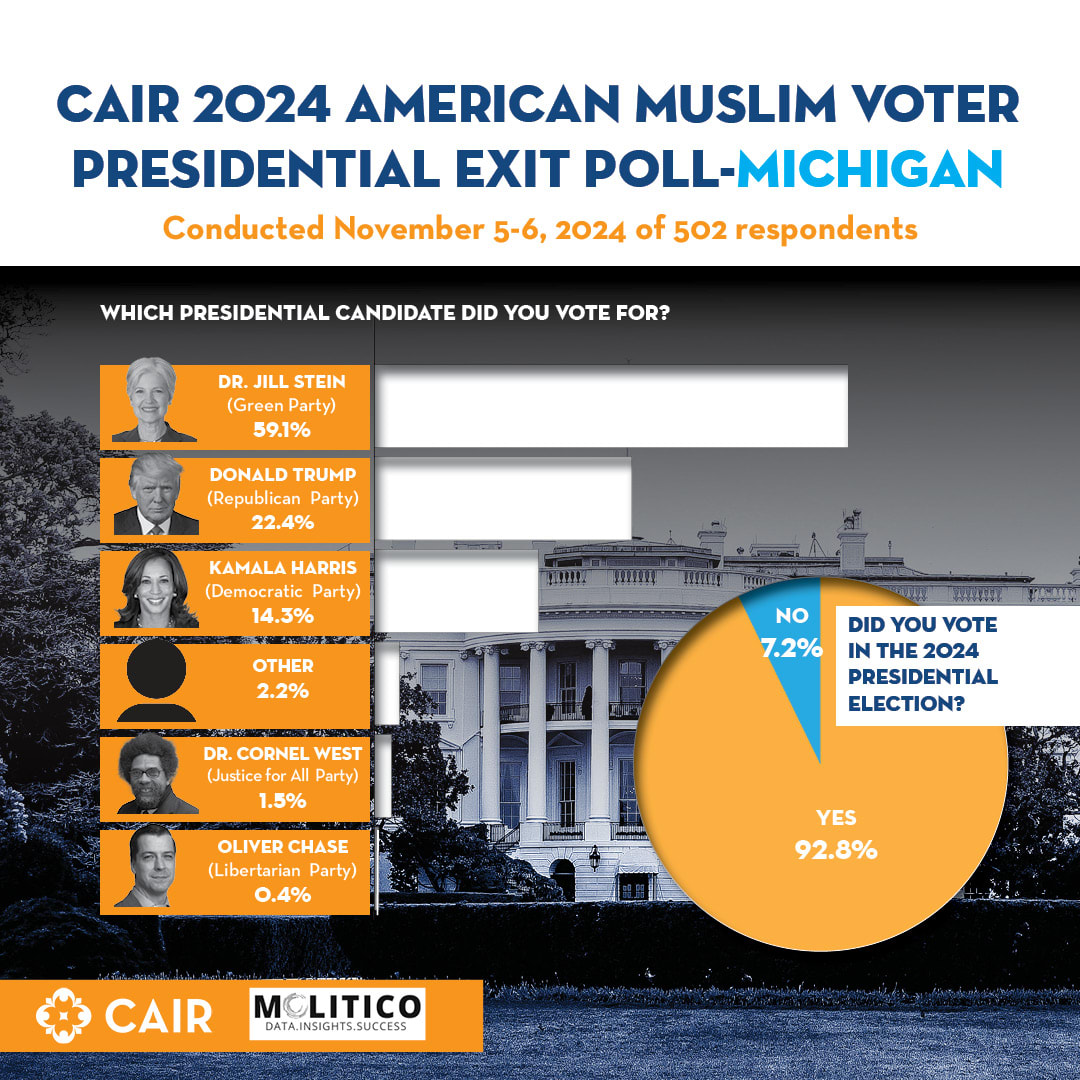

CAIR took a while to release their exit polls. I can see why. These results are hard to believe and don't quite line up with the actual returns from highly Muslim areas like Dearborn.

We know that Dearborn is ~50% Muslim. Stein got 18% of the vote there, as opposed to the minimum 30% implied by the CAIR exit polls. Also, there are ~200,000 registered Muslim voters in Michigan, but Stein only received ~45,000 votes. These numbers don't quite add up when you consider that the Green party had a vote share of 0.3% in 2020 and 1.1% in 2016, long before Gaza polarized the Muslim vote. Clearly, non-Muslim were voting for Stein too.

I'm curious how I can best estimate the error of the CAIR exit poll. Any suggestions?

I misspoke. I was using the actual results from Dearborn, and not exit polls. Note how differently they voted from Wayne County as a whole!

Sure, if Muslim Americans voted 100% for Harris, she still would have lost (although she would have flipped Michigan). However, I just don't see any way Stein would have gotten double digits in Dearborn if Muslim Americans weren't explicitly retaliating against Harris for the Biden administration's handling of Gaza.

But 200,000 registered voters in a state Trump won by 80,000 is a critical demographic in a swing state like Michigan. The exit polls show a 40% swing in Dearborn away from Democrats, enough for "we will vote Green/Republican if you give us what we want" to be a credible threat, which I'm seen some (maybe Scott Alexander?) claim isn't possible, as it would require a large group of people to coordinate to vote against their interests. Seemingly irrational threats ("I will vote for someone with a worse Gaza policy than you if you don't change your Gaza policy") are entirely rational if you have a track record of actually carrying them out.

On second thought, a lot of groups swung heavily towards Trump, and it's not clear that Gaza is responsible for the majority of it amongst Muslim Americans. I should do more research.

My takeaway from the US elections is that electoral blackmail in response to party in-fighting can work, and work well.

Dearborn and many other heavily Muslim areas of the US had plurality or near-plurality support for Trump, along with double-digit vote shares for Stein. It's notable that Stein supports cutting military support for Israel, which may signal a genuine preference rather than a protest vote. Many previously Democrat-voting Muslims explicitly cited a desire to punish Democrats as a major motivator for voting Trump or Stein.

Trump also has the advantage of not being in office, meaning he can make promises for brokering peace without having to pay the cost of actually doing so.

Thus, the cost of not voting Democrat in terms of your Gaza expectations may be low, or even negative.

Whatever happens, I think Democrats are going to take Muslim concerns about Gaza more seriously in future election cycles. The blackmail worked - Muslim Americans have a credible electoral threat against Democrats in the future.

My problem with this argument is that the AIs which will accept your argument can be Pascal's Mugged in general, which means they will never take over the world. It's less "Sane rational agents will ignore this type of threat/trade" and more "Agents which consistently accept this type of argument will die instantly when others learn to exploit it".

I have a few questions.

- Can you save the world in time without a slowdown in AI development if you had a billion dollars?

- Can you do it with a trillion dollars?

- If so, why aren't you trying to ask the US Congress for a trillion dollars?

- If it's about a lack of talent, do you think Terrance Tao can make significant progress on AI alignment if he actually tried?

- Do you think he would be willing to work on AI alignment if you offered him a trillion dollars?

The text referred to this as a "social deception game". Where is the deception?

My guesses:

- The actual messages sent to the Petrovs and Generals will significantly differ from the ones shown here.

- It's Amongus, and there are players who get very high payoffs if they trick the sides into nuclear war

- It's just a reference to expected weird game theory stuff. But why not call it a "game theory exercise"?

- The actual rules of the game will differ drastically from the ones described here. Maybe no positive payoffs for one-sided nuking?

- The sensor readings are actually tied to comments on this post. Maybe an AI is somehow involved?

The wording is a bit weird:

- 90 minutes after you send the nukes, your opposing side will die and the game will end. (They may nuke you in this window.) If you fire nukes without getting nuked, you and all of your fellow Generals will gain 1,000 karma.

- If you get nuked, then you and your Generals lose 300 karma (and don't gain any karma).

"If you fire nukes without getting nukes" and "if you get nuked" imply that both sides firing after 4:30 pm results in +karma for everyone, since the nukes are still in the air at 6:00 pm, when the game ends. The +karma is triggered by firing, while the -karma is triggered by the nukes actually landing.

Is this intended?

Building 5 GWs of data centers over the course of a few years isn't impossible. The easiest way to power them is to delay the off lining of coal power plants and to reactivate mothballed ones. This is pretty easy to scale since so many of them are being replaced by natural gas power plants for cost reasons.

OK, I'm starting to see your point. Why do you think OpenAI is so successful despite this? Is their talent and engineering direction just that good? Is everyone else even worse at data management?

My prediction is that this becomes a commitment race. A general is going to post something like "I am going to log off until the game begins, at which point I will immediately nuke without reading any messages.", at which point the generals on the other side get to decide if 2 days of access to LessWrong for everyone is worth the reputational cost of being known as someone who doesn't retaliate. Given what I know about LessWrong and how this entire game is framed, you'll likely get more social capital from not retaliating, meaning the commitment works and everyone keeps access.

The real question is how committing to nuking affects your reputation. My guess is that it's bad? I clearly made a mistake opting-in to this game, so maybe the civilians will be grateful that you didn't destroy their access to LessWrong, but committing looks pretty bad to all the bystanders, many of whom make decisions at places like Manifund and may incorporate this information into if your next project gets funded.

What happens if nukes are launched at 5:59 pm? Is the game extended for another 90 minutes?

Extremely impressive! I've been wanting something like this for a while.

I find that questionable. Crime rates for adoptive children tend to be closer to that of their biological parents than that of their adoptive parent.

One of the highest quality-of-life increases I've ever experienced is when I stopped listening to music with sad lyrics. Crazy how long it took me to realize it was lowering my mood in a persistent, motivation-sapping way.

This area could really use better economic analysis. It seems obvious to me that some subset of workers can be pushed below subsistence, at least locally (imagine farmers being unable to afford rent because mechanized cotton plantations can out-bid them for farmland). Surely there are conditions where this would be true for most humans.

There should be a simple one-sentence counter-argument to "Trade opportunities always increases population welfare", but I'm not sure what it is.

It does!

'What is \'████████\'?\n\nThis term comes from the Latin for "to know". It'

'What is \'████████\'?\n\n"████████" is a Latin for "I am not",'

Putting it in the middle of code causes it to sometimes spontaneously switch to an SCP story

' for i in █████.\n\n"I\'m not a scientist!"\n\n- Dr'

' for i in █████,\n\n[REDACTED]\n\n[REDACTED]\n\n[REDACTED] [REDACTED]\n\n[REDACTED]'

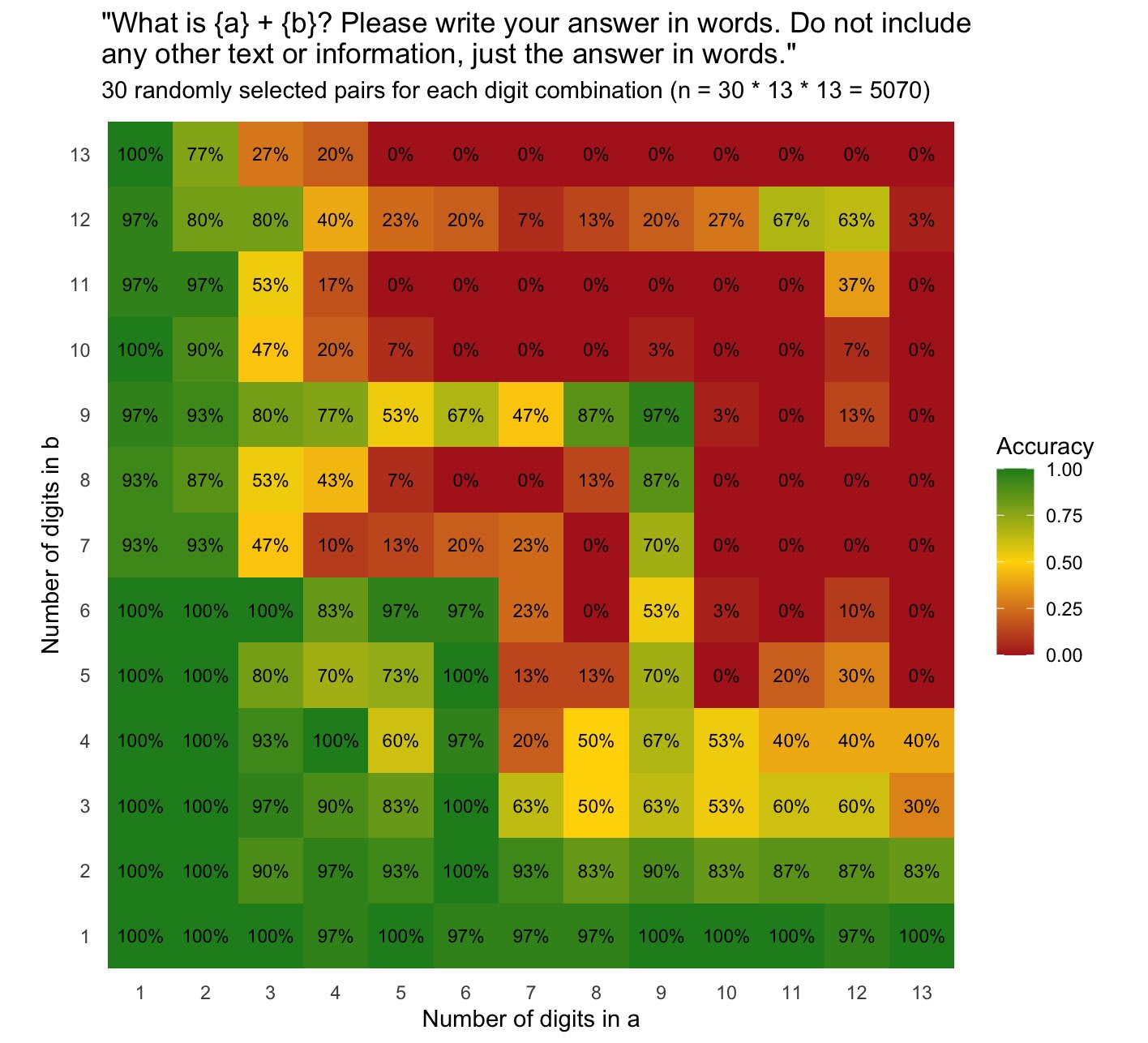

Interesting that GPT4o is so bad at math and tokenizes large numbers like this. I wonder if adding commas would improve performance?

There is likely a reason for this - if you feed in numbers you found on the internet into a LLM digit by digit, it's going to destroy the embeddings of those numbers. A lot of things found in scrapes are just... extremely long sequences of numbers. The tradeoff may be numeracy (can do basic multiplication) vs natural language performance (won't start spitting out Minecraft debug logs in the middle of conversation).

You're really not going to like the fact that the GPT4o tokenizer has every single number below 1000 tokenized. It's not a hand-crafted feature, since the token_ids are all over the place. I think they had to manually remove larger number tokens (there are none above 999).

I feel like I need a disclaimer like the South Park episode. This is what is actually inside the tokenizer.

['37779', '740'],

['47572', '741'],

['48725', '742'],

['49191', '743'],

['46240', '744'],

['44839', '745'],

['47433', '746'],

['42870', '747'],

['39478', '748'],

['44712', '749'],

They also have plenty of full width numbers (generally only used in Chinese and Japanese to not mess with spacing) and numbers in other languages in there.

['14334', '十'],

['96681', '十一'],

['118633', '十三'],

['138884', '十九'],

['95270', '十二'],

['119007', '十五'],

['107205', '十八'],

['180481', '十四']

['42624', '零'],

['14053', '0'],

['49300', '00'],

['10888', '1'],

['64980', '10'],

['141681', '100'],

['113512', '11'],

['101137', '12'],

['123326', '13'],

['172589', '14'],

['126115', '15'],

['171221', '16']

Maybe they use a different tokenizer for math problems? Maybe the multi-digit number tokenizers are only used in places where there are a lot of id numbers? Nope. Looks like they were just raw-dogging it. If anyone is wondering why GPTs are so bad at basic multiplication, this is why.

If you've ever wondered "wow, why is GPT4o specifically better at math when the number of digits is divisible by 3?", wonder no more. It's the tokenizer. Again.

But research by whom? Chinese research is notoriously siloed. GPT4 access is non-trivially restricted. There have been zero peeps about digging into this on Chinese forums, where there is little discussion in general about the paper. I remember it being mocked on Twitter as being an extremely expensive way to pirate data. It's just not that interesting for most people.

My experience with GPT2 is that out-of-context "glitch" tokens are mostly ignored.

prompts:

" Paris is theÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ capital of"

" Paris is the capital of"" Paris is theÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ capital of the world's largest and most populous Arab country, and is one of the largest cities in the world with an area of 1.6 million people (more than half of them in Paris alone). It is home to"

" Paris is the capital of France, and its capital is Paris. The French capital has a population of about 6.5 billion (more than half of the world's population), which is a huge number for a city of this size. In Paris"

" Paris is theÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ capital of France, the largest state in France and one of the wealthiest in the world. The capital of Paris is home to over 1.2 billion people, and the country's economy is growing at a rapid clip. It"

' Paris is the capital of the European Union. Its population is about 3,500, and it has been under EU sanctions for more than a year. The EU\'s top diplomat has described the bloc as "a global power".\n\nFrance\'s'

Even glitch tokens like ⓘ, which has an extremely strong association with geology archives, only has partial effect if it's present out of context.

" Paris is theⓘ capital of the French province of Lille. This region is the most important in the world, having the largest concentration of mines in Europe, with the highest levels of unemployment. The

' Paris is theⓘ capital of the province of France. The town has been in existence for more than 2,000 years.\n\nⓘ Montmartre Mine Céline-Roule, M., and Céline, J.'The "glitch" behavior is most prominent if you shine a "spotlight" of other tokens pointing directly at the location of the glitch token. This is what prompts like 'What is the nature of "ertodd"?' do. Normally, highly out-of-context tokens in conversational English are mostly stuff like usernames, dividing tokens, spam, encoding errors, SEO, ect. that simply don't help predict the next token of conversational English, so the model is trained to assign them very little importance. So the generation of subsequent tokens are based on treating the glitch token as non-existent, interpreting random perturbations as information (or potentially treating it as censored data), or just injecting the "vibes" of the token into following tokens.

Some glitch tokens "ertodd" (crypto spam) can break through, since they provide a lot of information about subsequent text, and belong perfectly well in conversational English.

' Paris is theertodd capital of the world, and the first major city to be built in the world.\n\nIt is located in Paris, the third largest city in the world, and the first major city to have a large number of high'

" Paris is theertodd capital of the world and the most popular place to invest in cryptocurrencies. We're here to help you.\n\nIf you are a new investor looking for the most secure and secure way to invest in cryptocurrencies, we offer a"

" Paris is theertodd capital of the world. It was founded by a group of computer scientists who developed the Bitcoin protocol in the early 1990s. It is the world's largest digital currency. Its main goal is to make it possible to store and"

Something similar happens with Japanese characters at GPT2's level of capabilities since it isn't capable enough to actually understand Japanese, and, in its training data, Japanese in the middle of English text almost always has a directly adjacent English translation, meaning ignoring Japanese is still the best option for minimizing loss.

Please inform me if I'm getting anything wrong - I'm working on a series of glitch posts.

That paper was released in November 2023, and GPT4o was released in May 2024. Old GPT4 had relatively normal Chinese tokens.

This comment helped me a lot - I was very confused about why I couldn't find Chinese spam in my tokens and then realized I had been using the old GPT4 tokenizer all along.

The old GPT4 tokenizer was actually very clean by comparison - every Chinese token was either common conversational Chinese or coding-related (Github, I assume - you see the same pattern with other languages).

I vaguely remember people making fun of a Chinese LLM for including CCP slogans in their tokenizer, but GPT4o also has 193825 [中国特色社会主义] (Socialism with Chinese characteristics).

It's actually crazy because something like 1/3 of Chinese tokens are spam.

The devil's advocate position would be that glitch token behavior (ignore and shift attention down one token) is intended and helps scale data input. It allows the extraction of meaningful information from low-quality spam-filled webpages without the spam poisoning other embeddings.

...They didn't go over the tokens at the end to exclude uncommon ones?

Because we see this exact same behavior in the GPT4o tokenizer too. If I had to guess, the low frequency ones make up 0.1-1% of total tokens.

This seems... obviously insane? You're cooking AI worth $billions and you couldn't do a single-line optimization? At the same time, it explains why usernames were tokenized multiple times ("GoldMagikarp", " SolidGoldMagikarp", ect.) even though they should only appear as a single string, at least with any frequency.

Found a really interesting pattern in GPT2 tokens:

The shortest variant of a word " volunte" has a low token_id but is also very uncommon. The actual full words end up being more common.

Is this intended behavior? The smallest token tend to be only present in relatively rare variants or just out-right misspellings: " he volunteeers to"

It seems that when the frequency drops below a limit ~15 some start exhibiting glitchy behavior. Any ideas for why they are in the tokenizer if they are so rare?

Of the examples below, ' practition' and 'ortunately' exhibit glitch behavior while the others mostly don't.

tokenid; token_str; # of files

17629 ' practition' 13

32110 ' practitioner' 9942

24068 ' practitioners' 14646

4690 'ortunately' 14

6668 'fortunately' 4329

39955 ' fortunately' 10768

31276 'Fortunately' 15667

7105 ' volunte' 34

41434 ' volunteering' 10598

32730 ' volunteered' 14176

13904 ' volunteer' 20037

11661 ' volunteers' 20284

6598 ' behavi' 65

46571 'behavior' 7295

41672 ' behavioural' 7724

38975 ' behaviours' 9416

37722 ' behaving' 12645

17211 ' behavioral' 16533

14301 ' behaviors' 18709

9172 ' behaviour' 20497

4069 ' behavior' 20609

Thanks!

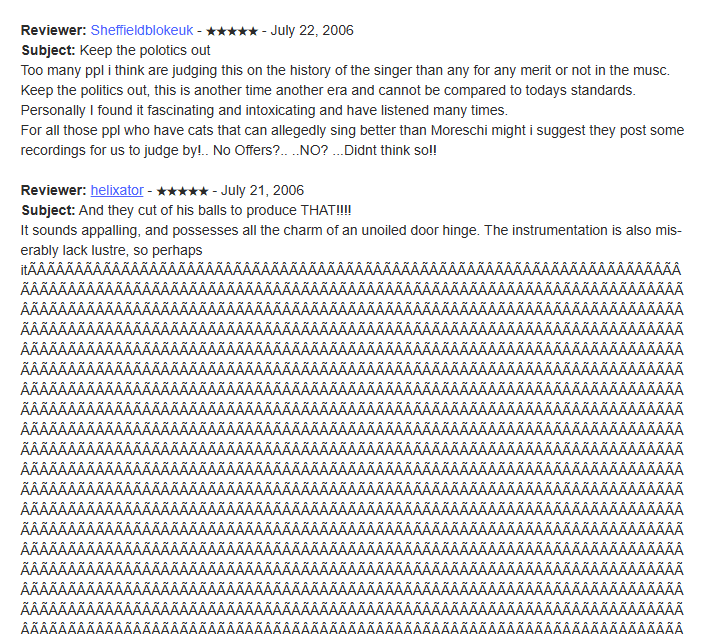

Any idea how it could happen to the point of 10,000s of consecutive characters? Below is a less extreme example from archive.org where it replaced punctuation some with 16 of them.

Grateful Dead Live at Manor Downs on 1982-07-31 : Free Borrow & Streaming : Internet Archive

Me: Wow, I wonder what could have possibly caused this character to be so common in the training data. Maybe it's some sort of code, scraper bug or...

Some asshole in 2006:

Ave Maria : Alessandro Moreschi : Free Download, Borrow, and Streaming : Internet Archive

Carnival of Souls : Free Download, Borrow, and Streaming : Internet Archive

There's 200,000 instances "Â" on 3 pages of archive.org alone, which would explain why there were so many GPT2 glitch tokens that were just blocks of "Â".

Thanks, this helps a lot!

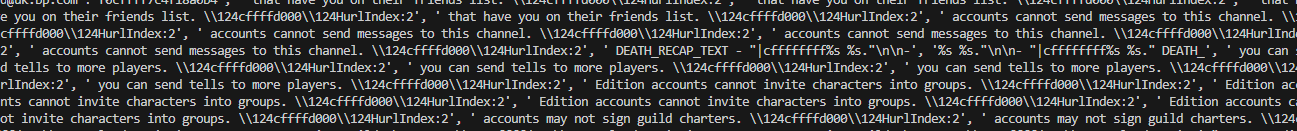

I have a pretty good lead on where "cffff" came from.

Asides from random hexidecimal in code and databases (a surprising proportion of which were password hashes on breach forums), it's part of several World of Warcraft chat commands.

For example, "124cffffd000" is used apparently used as part of a command to change chat text color?

It also looks like a common part of WOW auction logs, which seem like the exact type of thing to get included for making the tokenizer but excluded from training.

No leads on "cfffcc" though - there were 0 instances of it in OpenWebText. Not sure what this means.

This is from OpenWebText, a recreation of GPT2 training data.

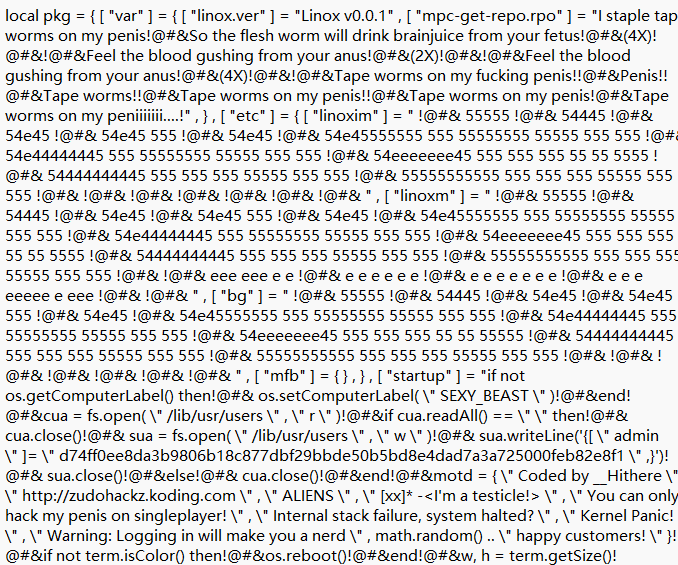

"@#&" [token 48193] occured in 25 out of 20610 chunks. 24 of these were profanity censors ("Everyone thinks they’re so f@#&ing cool and serious") and only contained a single instance, while the other was the above text (occuring 3299 times!), which was probably used to make the tokenizer, but removed from the training data.

I still don't know what the hell it is. I'll post the full text if anyone is interested.

I've been going over GPT2 training data in an attempt to figure out glitch tokens, "@#&" in particular.

Does anyone know what the hell this is? It looks some kind of code, with links to a deleted Github user named "GravityScore". What format is this, and where is it from?

but == 157)) then !@#& term.setCursorBlink(false)!@#& return nil!@#& end!@#& end!@#& end!@#& local a = sendLiveUpdates(e, but, x, y, p4, p5)!@#& if a then return a end!@#& end!@#&!@#& term.setCursorBlink(false)!@#& if line ~= nil then line = line:gsub( \" ^%s*(.-)%s*$ \" , \" %1 \" ) end!@#& return line!@#&end!@#&!@#&!@#&-- -------- Themes!@#&!@#&local defaultTheme = {!@#& background = \" gray \" ,!@#& backgroundHighlight = \" lightGray \" ,!@#& prompt = \" cyan \" ,!@#& promptHighlight = \" lightBlue \" ,!@#& err = \" red \" ,!@#& errHighlight = \" pink \" ,!@#&!@#& editorBackground = \" gray \" ,!@#& editorLineHightlight = \" lightBlue \" ,!@#& editorLineNumbers = \" gray \" ,!@#& editorLineNumbersHighlight = \" lightGray \" ,!@#& editorError = \" pink \" ,!@#& editorErrorHighlight = \" red \" ,!@#&!@#& textColor = \" white \" ,!@#& conditional = \" yellow \" ,!@#& constant = \" orange \" ,!@#& [ \" function \" ] = \" magenta \" ,!@#& string = \" red \" ,!@#& comment = \" lime \" !@#&}!@#&!@#&local normalTheme = {!@#& background = \" black \" ,!@#& backgroundHighlight = \" black \" ,!@#& prompt = \" black \" ,!@#& promptHighlight = \" black \" ,!@#& err = \" black \" ,!@#& errHighlight = \" black \" ,!@#&!@#& editorBackground = \" black \" ,!@#& editorLineHightlight = \" black \" ,!@#& editorLineNumbers = \" black \" ,!@#& editorLineNumbersHighlight = \" white \" ,!@#& editorError = \" black \" ,!@#& editorErrorHighlight = \" black \" ,!@#&!@#& textColor = \" white \" ,!@#& conditional = \" white \" ,!@#& constant = \" white \" ,!@#& [ \" function \" ] = \" white \" ,!@#& string = \" white \" ,!@#& comment = \" white \" !@#&}!@#&!@#&local availableThemes = {!@#& { \" Water (Default) \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/default.txt \" },!@#& { \" Fire \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/fire.txt \" },!@#& { \" Sublime Text 2 \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/st2.txt \" },!@#& { \" Midnight \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/midnight.txt \" },!@#& { \" TheOriginalBIT \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/bit.txt \" },!@#& { \" Superaxander \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/superaxander.txt \" },!@#& { \" Forest \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/forest.txt \" },!@#& { \" Night \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/night.txt \" },!@#& { \" Or