Making a conservative case for alignment

Post by Cameron Berg (cameron-berg), Judd Rosenblatt (judd), phgubbins, AE Studio (AEStudio) · 2024-11-15T18:55:40.864Z · 67 comments


Trump and the Republican party will wield broad governmental control during what will almost certainly be a critical period for AGI development. In this post, we want to briefly share various frames and ideas we’ve been thinking through and actively pitching to Republican lawmakers over the past months in preparation for the possibility of a Trump win.

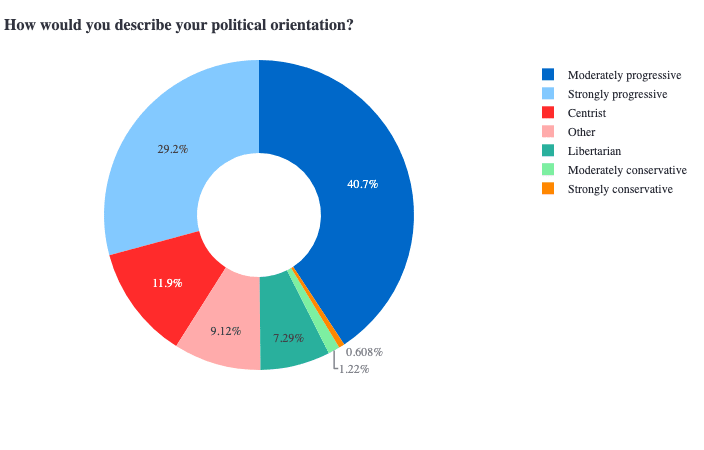

Why are we sharing this here? Given that >98% of the EAs and alignment researchers we surveyed earlier this year identified as everything-other-than-conservative, we consider thinking through these questions to be another strategically worthwhile neglected direction.

(Along these lines, we also want to proactively emphasize that politics is the mind-killer, and that, regardless of one’s ideological convictions, those who earnestly care about alignment must take seriously the possibility that Trump will be the US president who presides over the emergence of AGI—and update accordingly in light of this possibility.)

Political orientation: combined sample of (non-alignment) EAs and alignment researchers

AI-not-disempowering-humanity is conservative in the most fundamental sense

- Avoiding AI-induced human extinction, and preserving our most fundamental values in the process, are goals that intuitively resonate with right-leaning thinkers and policymakers. Just as conservatives seek to preserve and build upon civilization's core institutions and achievements, alignment research aims to ensure our technological advances remain anchored to—rather than in conflict with—our core values.

- Consider Herman Kahn, a leading military strategist and systems theorist at the RAND Corporation during the 1950s. He is famous for popularizing the notion of ‘thinking the unthinkable’ with respect to the existential risks of the Cold War nuclear arms race, at a time when most refused to systematically analyze the possibility of thermonuclear war and its aftermath. This same philosophy, intuitive to conservatives and liberals alike, maps cleanly onto our current moment with AI.

- A conservative approach to AI alignment doesn’t require slowing progress, avoiding open sourcing,[1] etc. Alignment and innovation are mutually necessary, not mutually exclusive: if alignment R&D indeed makes systems more useful and capable, then investing in alignment is investing in US tech leadership.[2] Just as the Space Race and Manhattan Project demonstrated how focused research efforts could advance both security and scientific progress, investing now in alignment research would ensure AI development cements rather than endangers American technological leadership. By solving key technical alignment challenges, we create the foundation for sustainable innovation that preserves our values while still pushing the frontier. The conservative tradition has always understood that lasting achievements require solid foundations and careful stewardship, not just unbounded acceleration.

- As Dean Ball has recently argued in his detailed policy proposals, Republicans may actually be uniquely positioned to tackle the focused technical challenges of alignment, given that, in contrast to the left, they appear systematically less likely to be self-limited by ‘Everything-Bagel’ patterns of political behavior. Our direct engagement with Republican policymakers over the past months also supports this view.

We've been laying the groundwork for alignment policy in a Republican-controlled government

- Self-deprecating note: we're still learning and iterating on these specific approaches, being fairly new to the policy space ourselves. What follows isn't meant to be a complete solution, but rather some early strategies that have worked better than we initially expected in engaging Republican policymakers on AI safety. We share them in hopes they might prove useful to others working toward these goals, while recognizing there's still much to figure out.

- Over recent months, we've built relationships with Republican policymakers and thinkers, notably including House AI task force representatives, senior congressional staffers, and influential think tank researchers. These discussions revealed significant receptivity to AGI risk concerns when we approached them as authentic members of the in-group. Taking time to explain technical fundamentals, like our uncertainty about AI model internals and how scaling laws work, consistently led to "oh shit, this seems like a big deal" moments. Coupling this with concrete examples of security vulnerabilities at major labs and concerns about China’s IP theft particularly drove home the urgency of the situation.

- We found ourselves naturally drawn to focus on helping policymakers "feel the AGI" before pushing specific initiatives. This proved crucial, as we discovered people need time to fully internalize the implications before taking meaningful action. The progression was consistent: once this understanding clicked, they'd start proposing solutions themselves—from increased USAISI funding to Pentagon engagement on AGI preparedness. Our main message evolved to emphasize the need for conservative leadership on AI alignment, particularly given that as AI capabilities grow exponentially, the impact of future government actions will scale accordingly.

- In these conversations, we found ourselves able to introduce traditionally "outside the box" concepts when properly framed and authentically appealing to conservative principles. This kind of "thinking the unthinkable" is exactly the sort of conservative approach needed: confronting hard realities and national security challenges head-on, distinct from less serious attempts to just make AI woke or regulate away innovation.

- A particularly promising development has been lawmakers’ receptivity to a Manhattan Project-scale public/private effort on AI safety, focused on fundamental R&D and hits-based neglected approaches necessary to solve alignment. When they asked how to actually solve the challenge of aligning AGI, this emerged as our clearest tangible directive.[3] We see significant potential for such initiatives under Republican leadership, especially when framed in terms of sustaining technological supremacy and national security—themes that consistently resonated with conservatives looking to ensure American leadership. While we continue to grow these efforts internally and with various collaborators, the magnitude of the challenge demands this approach be replicated more widely. We aim to scale our own neglected approaches work aggressively, but more crucially, we need many organizations—public and private—pursuing these unconventional directions to maximize our chances of solving alignment.

- Throughout these interactions, we observed that Republican policymakers, while appropriately skeptical of regulatory overreach, become notably more engaged with transformative AI preparation once they understand the technical realities and strategic implications. This suggests we need more genuine conservatives (not just people who are kinda pretending to be) explaining these realities to lawmakers, as we've found them quite capable of grasping complex technical concepts and being motivated to act in light of them despite their initial unfamiliarity.

- Looking back, our hints of progress here seem to have come from emphasizing both the competitive necessity of getting alignment right and the exponential nature of AI progress, while maintaining credibility as conservative-leaning voices who understand the importance of American leadership. Others looking to engage with Republican policymakers should focus on building authentic relationships around shared values rather than pursuing immediate policy commitments or feigning allegiance. The key is demonstrating genuine understanding of both technical challenges and conservative priorities while emphasizing the strategic importance of leadership in AI alignment.

Trump and some of his closest allies have signaled that they are genuinely concerned about AI risk

- Note: extremely high-variance characters, of course, but still worth highlighting

- In contrast to Kamala Harris’s DEI-flavored ‘existential to who’ comments about AI risk, Trump has suggested in his characteristic style that ‘super duper AI’ is both plausible and frightening. While this does not exactly reflect deep knowledge of alignment, it suggests that Trump is likely sympathetic to the basic concern—and his uniquely non-ideological style likely serves him well in this respect.

- Ivanka Trump, who had significant sway over her father during his first term, has directly endorsed Situational Awareness on X and started her own website to aggregate resources related to AI advancements.

- Elon Musk will have nontrivial influence on the Trump Administration. While unpredictable in his own right, it seems quite likely that he fundamentally understands the concern and the urgency of solving alignment.

Avoiding an AI-induced catastrophe is obviously not a partisan goal

- The current political landscape is growing increasingly harmful to alignment progress. The left has all too often conflated existential risk with concerns about bias, while the right risks dismissing all safety considerations as "woke AI" posturing. This dynamic risks entrenching us in bureaucratic frameworks that make serious technical work harder, not easier. This divide is also relatively new—five Democrats (but no Republicans) recently signed onto a letter to OpenAI inquiring about its safety procedures; we suspect that 6-8 months ago, this kind of effort would have had bipartisan support. We're losing precious time to political theater while crucial technical work remains neglected.

- A more effective framework would channel American ingenuity toward solving core alignment problems rather than imposing restrictive regulations. Looking to Bell Labs and the Manhattan Project as models, we should foster fundamental innovation through lightweight governance focused on accelerating progress while simultaneously preventing catastrophic outcomes. It is plausible that alignment work can enhance rather than constrain AI competitiveness, which could create a natural synergy between alignment research and American technological leadership.

- We think substantial government funding should be directed toward an ambitious set of neglected approaches to alignment. Government investment in a wide range of alignment research agendas could be among the highest-EV actions that the US federal government could take in the short term.

Winning the AI race with China requires leading on both capabilities and safety

- Trump and the Republicans will undoubtedly adopt an "America First" framing in the race toward AGI. But true American AI supremacy requires not just being first, but being first to build AGI that remains reliably under American control and aligned with American interests. An unaligned AGI would threaten American sovereignty just as much as a Chinese-built one—by solving alignment first, America could achieve lasting technological dominance rather than merely winning a preliminary sprint toward an uncontrollable technology.

- Both the US and China increasingly recognize AGI as simultaneously humanity's most powerful technology and its greatest existential risk. Before he died, Henry Kissinger visited Beijing to warn Xi Jinping about uncontrolled AI development. Since then, Xi has been characterized by some as a ‘doomer’ and has stated AI will determine "the fate of all mankind" and must remain controllable.[4] This is a concern that likely resonates particularly deeply with an authoritarian regime paranoid about losing control to anything/anyone, let alone to superintelligent AI systems.

- The pragmatic strategic move in this context may therefore be for the US to lead aggressively on both capabilities and safety. By applying the ‘Pottinger Paradox’—where projecting strength yields better outcomes with authoritarian leaders than seeking accommodation—America can shape global AI development through solving alignment first. This would give us systems that naturally outcompete unsafe ones while still addressing the existential risks that even our greatest rivals acknowledge. With the relevant figures in Trump's circle already demonstrating some nontrivial awareness of AGI risks, we may be well-positioned to pursue this strategy of achieving technological supremacy through safety leadership rather than in spite of it.

Many of these ideas seem significantly more plausible to us in a world where negative alignment taxes materialize—that is, where alignment techniques are discovered that render systems more capable by virtue of their alignment properties. It seems quite safe to bet that significant positive alignment taxes simply will not be tolerated by the incoming federal Republican-led government—the attractor state of more capable AI will simply be too strong. Given that alignment must still proceed, uncovering strategies that make systems reliably safer (critical for x-risk) and more competent (current attractor state) may nudge the AGI-possibility-space away from existentially risky outcomes.

Concluding thought

We are operating under the assumption that plans have to be recomputed when the board state meaningfully shifts, and Trump’s return to power is no exception to this rule. We are re-entering a high-variance political environment, which may well come to be viewed in hindsight as having afforded the optimal political conditions for pursuing, funding, and scaling high-quality alignment work. This moment presents a unique opportunity for alignment progress if we can all work effectively across political lines.

[1]

We suspect there is also an emerging false dichotomy between alignment and open-source development. In fact, open-source practices have been instrumental in advancing various forms of alignment research, with many of the field's biggest breakthroughs occurring after the advent of open-source AI models. Beren's caveat and conclusion both seem sensible here:

Thus, until the point at which open source models are directly pushing the capabilities frontier themselves then I consider it extremely unlikely that releasing and working on these models is net-negative for humanity (ignoring potential opportunity cost which is hard to quantify).

[2]

To this end, we note that Marc Andreessen-style thinkers don't have to be antagonists to AI alignment—in fact, he and others have the potential to be supportive funders of alignment efforts.

[3]

Initially, we felt disinclined to advocate in DC for neglected approaches because this happens to be exactly what we are doing at AE Studio. However, we received feedback to be more direct about it, and we're glad we did—the approach has proven not only technically promising but has also resonated surprisingly well with conservatives, especially when coupled with ideas around negative alignment taxes and increased economic competitiveness. At AE, we began this approach in early 2023, refined our ideas, and have already seen more encouraging results than initially expected.

[4]

It’s important to note that anything along these lines coming from the CCP must be taken with a few grains of salt—but we’ve spoken with quite a few China policy experts who do seem to believe that Xi genuinely cares about safety.

67 comments


comment by Orpheus16 (akash-wasil) · 2024-11-15T20:35:31.232Z

I agree with many points here and have been excited about AE Studio's outreach. Quick thoughts on China/international AI governance:

- I think some international AI governance proposals have some sort of "kum ba yah, we'll all just get along" flavor/tone to them, or some sort of "we should do this because it's best for the world as a whole" vibe. This isn't even Dem-coded so much as it is naive-coded, especially in DC circles.

- US foreign policy is dominated primarily by concerns about US interests. Other considerations can matter, but they are not the dominant driving force. My impression is that this is true within both parties (with a few exceptions).

- I think folks interested in international AI governance should study international security agreements and try to get a better understanding of relevant historical case studies. Lots of stuff to absorb from the Cold War, the Iran Nuclear Deal, US-China relations over the last several decades, etc. (I've been doing this & have found it quite helpful.)

- Strong Republican leaders can still engage in bilateral/multilateral agreements that serve US interests. Recall that Reagan negotiated arms control agreements with the Soviet Union, and the (first) Trump Administration facilitated the Abraham Accords. Being "tough on China" doesn't mean "there are literally no circumstances in which I would be willing to sign a deal with China." (But there likely does have to be a clear case that the deal serves US interests, has appropriate verification methods, etc.)

↑ comment by Algon · 2024-11-16T17:02:49.158Z

I think some international AI governance proposals have some sort of "kum ba yah, we'll all just get along" flavor/tone to them, or some sort of "we should do this because it's best for the world as a whole" vibe. This isn't even Dem-coded so much as it is naive-coded, especially in DC circles.

This inspired me to write a silly dialogue.

Simplicio enters. An engine rumbles like the thunder of the gods, as Sophistico focuses on ensuring his MAGMA-O1 racecar will go as fast as possible.

Simplicio: "You shouldn't play Chicken."

Sophistico: "Why not?"

Simplicio: "Because you're both worse off?"

Sophistico, chortling, pats Simplicio's shoulder

Sophistico: "Oh dear, sweet, naive Simplicio! Don't you know that no one cares about what's 'better for everyone?' It's every man out for himself! Really, if you were in charge, Simplicio, you'd be drowned like a bag of mewling kittens."

Simplicio: "Are you serious? You're really telling me that you'd prefer to play a game where you and Galactico hurtle towards each other on tonnes of iron, desperately hoping the other will turn first?"

Sophistico: "Oh Simplicio, don't you understand? If it were up to me, I wouldn't be playing this game. But if I back out or turn first, Galactico gets to call me a Chicken, and say his brain is much larger than mine. Think of the harm that would do to the United Sophist Association!"

Simplicio: "Or you could die when you both ram your cars into each other! Think of the harm that would do to you! Think of how Galactico is in the same position as you!"

Sophistico shakes his head sadly.

Sophistico: "Ah, I see! You must believe steering is a very hard problem. But don't you understand that this is simply a matter of engineering? No matter how close Galactico and I get to the brink, we'll have time to turn before we crash! Sure, there's some minute danger that we might make a mistake in the razor-thin slice between utter safety and certain doom. But the probability of harm is small enough that it doesn't change the calculus."

Simplicio: "You're not getting it. Your race against each other will shift the dynamics of when you'll turn. Each moment in time, you'll be incentivized to go just a little further, until there are few enough worlds left that that razor-thin slice ain't so thin any more. And your steering won't save you from that. It can't."

Sophistico: "What an argument! There's no way our steering won't be good enough. Look, I can turn away from Galactico's car right now, can't I? And I hardly think we'd push things till so late. We'd be able to turn in time. And moreover, we've never crashed before, so why should this time be any different?"

Simplicio: "You've doubled the horsepower of your car and literally tied a rock to the pedal! You're not going to be able to stop in time!"

Sophistico: "Well, of course I have to go faster than last time! USA must be first, you know?"

Simplicio: "OK, you know what? Fine. I'll go talk to Galactico. I'm sure he'll agree not to call you chicken."

Sophistico: "That's the most ridiculous thing I've ever heard. Galactico's ruthless and will do anything to beat me."

Simplicio leaves as Acceleratio arrives with a barrel of jet fuel for the scramjet engine he hooked up to Sophistico's O-1.

↑ comment by dr_s · 2024-11-18T14:36:31.627Z

Pretty much. It's not "naive" if it's literally the only option that actually does not harm everyone involved, unless of course we want to call every world leader and self-appointed foreign policy expert a blithering idiot with tunnel vision (I make no such claim a priori; ball's in their court).

It's important to not oversimplify things. It's also important to not overcomplicate them. Domain experts tend to be resistant to the first kind of mental disease, but tragically prone to the second. Sometimes it really is Just That Simple, and everything else is commentary and superfluous detail.

↑ comment by Algon · 2024-11-18T18:26:06.743Z

If I squint, I can see where they're coming from. People often say that wars are foolish, and both sides would be better off if they didn't fight. And this is standardly called "naive" by those engaging in realpolitik. Sadly, for any particular war, there's a significant chance the realists are right. Even aside from human stupidity, game theory is not so kind as to allow for peace unending. But the China-America AI race is not like that. The Chinese don't want to race. They've shown no interest in being part of a race. It's just American hawks on a loud, quixotic quest masking the silence.

If I were to continue the story, it'd show Simplicio asking Galactico not to play Chicken and Galactico replying "race? What race?". Then Sophistico crashes into Galactico and Simplicio. Everyone dies. The End.

↑ comment by dr_s · 2024-11-21T15:41:03.813Z

People often say that wars are foolish, and both sides would be better off if they didn't fight. And this is standardly called "naive" by those engaging in realpolitik. Sadly, for any particular war, there's a significant chance they're right. Even aside from human stupidity, game theory is not so kind as to allow for peace unending.

I'm obviously not saying that ALL conflicts ever are avoidable or irrational, but a lot of them are:

- caused by a miscommunication/misunderstanding/delusional understanding of reality;

- rooted in a genuine competition between conflicting interests, but those interests only pertain to a handful of leaders, and most of the people actually doing the fighting really have no genuine stake in it, just false information and/or a giant coordination problem that makes it hard to tell those leaders to fuck off;

- rooted in a genuine competition between conflicting interests between the actual people doing the fighting, but the gains are still not so large to justify the costs of the war, which have been wildly underestimated.

And I'd say that just about makes up a good 90% of all conflicts. There's a thing where people who are embedded in specialised domains start seeing the trees ("here is the complex clockwork of cause-and-effect that made this thing happen") and missing the forest ("if we weren't dumb and irrational as fuck none of this would have happened in the first place"). The main point of studying past conflicts should be to distil here and there a bit of wisdom about how in fact a lot of that stuff is entirely avoidable if people can just stop being absolute idiots now and then.

↑ comment by Matrice Jacobine · 2024-11-16T02:27:00.493Z

My impression is that (without even delving into any meta-level IR theory debates) Democrats are more hawkish on Russia while Republicans are more hawkish on China. So while obviously neither party is kum-ba-yah and both ultimately represent US interests, it still makes sense to expect each party to be less receptive to the idea of ending any potential arms race against the country it considers an existential threat to US interests if left unchecked. The party that is more hawkish on a primarily military superpower would then be worse on nuclear x-risk, and the party that is more hawkish on a primarily economic superpower would be worse on AI x-risk and environmental x-risk. (Negotiating arms control agreements with the enemy superpower right during its period of liberalization and collapse, or facilitating a deal between multiple US allies with the clear goal of serving as a counterweight to the purported enemy superpower, seems entirely irrelevant here.)

comment by Sting · 2024-11-16T15:51:26.471Z

Great post. I did not know things were this bad:

Given that >98% of the EAs and alignment researchers we surveyed earlier this year identified as everything-other-than-conservative, we consider thinking through these questions to be another strategically worthwhile neglected direction.

....This suggests we need more genuine conservatives (not just people who are kinda pretending to be) explaining these realities to lawmakers, as we've found them quite capable of grasping complex technical concepts and being motivated to act in light of them despite their initial unfamiliarity.

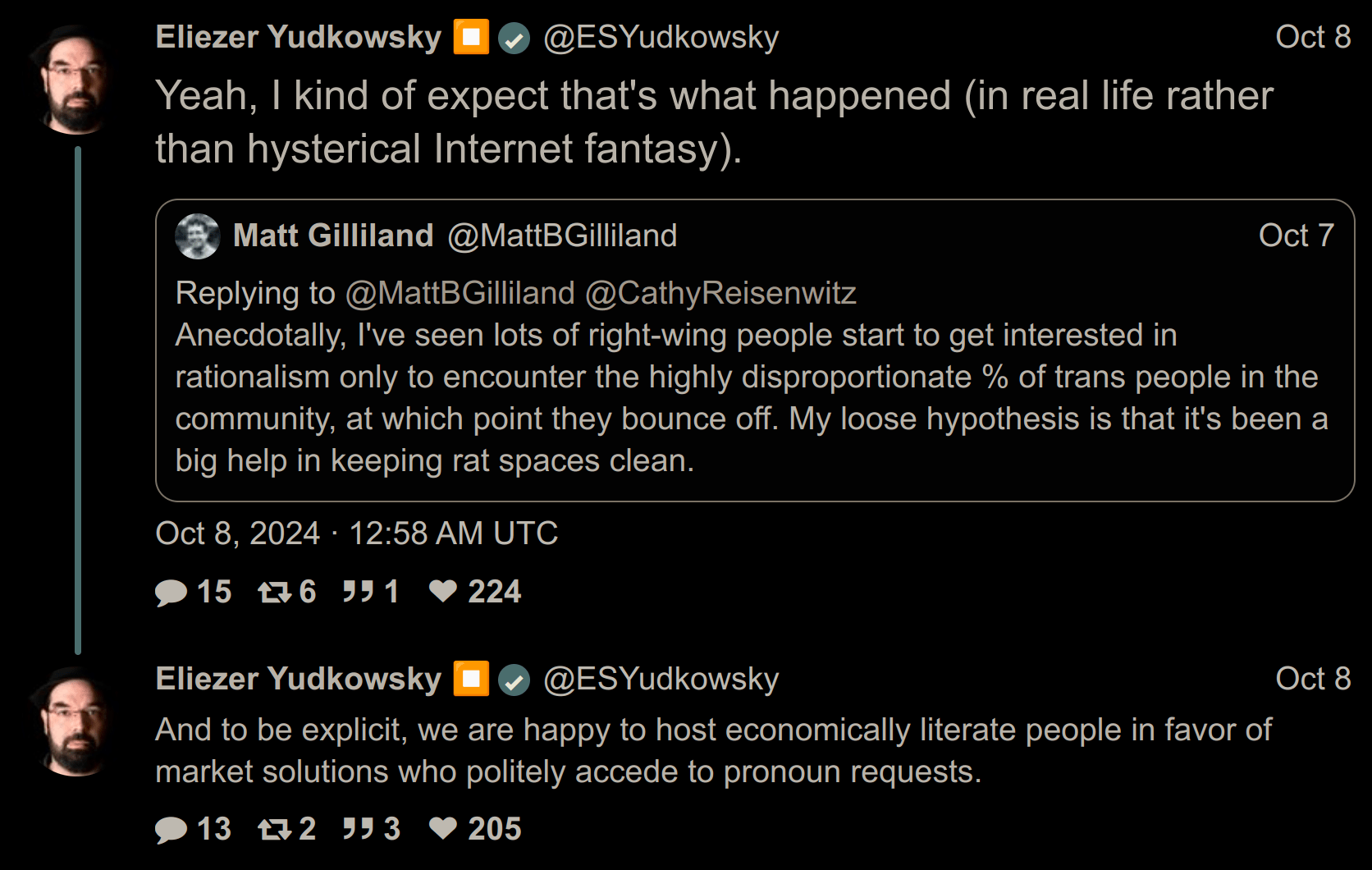

Perhaps the policy of "You will use people's preferred pronouns, and you will be polite about it, or we don't want you in rationalist spaces" didn't help here?

Any community is free to have whatever standards they want for membership, including politically-coded compelled speech. But it is not exactly shocking if your membership is then composed 70% of one side and <2% of the other.

(To be clear, any movement centered in California will have more progressives, so political partisanship is not responsible for the full 35:1 progressive-to-conservative ratio. But when people are openly referring to the lack of right-wingers as "keeping rat spaces clean" with no push-back, that's a clue that it isn't exactly welcoming to conservatives.)

↑ comment by xpym · 2024-11-19T12:31:20.621Z

A more likely explanation, it seems to me, is that a large part of early LW/the Sequences was militant atheism, with religion being the primary example of the low "sanity waterline", and this hasn't been explicitly disclaimed since, at best merely de-emphasized. So this space had done its best to repel conservatives much earlier than pronouns and other trans issues entered the picture.

↑ comment by Viliam · 2024-11-20T12:26:36.806Z

I approve of the militant atheism, because there are just too many religious people out there, so without making a strong line we would have an Eternal September of people joining Less Wrong just to say "but have you considered that an AI can never have a soul?" or something similar.

And if being religious is strongly correlated with some political tribe, I guess it can't be avoided.

But I think that going further than that is unnecessary and harmful.

Actually, we should probably show some resistance to the stupid ideas of other political tribes, just to make our independence clear. Otherwise, people would hesitate to call out bullshit when it comes from those who seem associated with us. (Quick test: Can you say three things the average Democrat believes that are wrong and stupid? What reaction would you expect if you posted your answer on LW?)

Specifically on trans issues:

I am generally in favor of niceness and civilization, therefore:

- If someone calls themselves "he" or "she", I will use that pronoun without thinking twice about it.

- I disapprove of doxing in general, which extends to all speculations about someone's biological sex.

But I also value rationality and free speech, therefore:

- I insist on keeping an "I don't know, really" attitude to trans issues. I don't know, really. The fact that you are yelling at me does not make your arguments any more logically convincing.

- No, I am not literally murdering you by disagreeing with you. Let's tone down the hysteria.

- There are people who feel strongly that they are Napoleon. If you want to convince me, you need to make a stronger case than that.

- I specifically disagree on the point that if someone changes their gender, it retroactively changes their entire past. If someone presented as male for 50 years, then changed to female, it makes sense to use "he" to refer to their first 50 years, especially if this is the pronoun everyone used at that time. Also, I will refer to them using the name they actually used at that time. (If I talk about ancient Rome, I don't call it the Italian Republic either.) Anything else feels like magical thinking to me. I won't correct you if you do that, but please do not correct me, or I will be super annoyed.

↑ comment by xpym · 2024-11-20T16:29:14.603Z

My biggest problem with the trans discourse is that it's a giant tower of motte-and-baileys, and there's no point where it's socially acceptable to get off the crazy train.

Sure, at this point it seems likely that gender dysphoria isn't an entirely empty notion. Implying that this condition might be in any way undesirable is already a red line though, with discussions of how much of it is due to social contagion being very taboo, naturally. And that only people experiencing bad enough dysphoria to require hormones and/or surgery could claim to be legitimately trans is a battle lost long ago.

Moving past that, there are non-binary, genderfluid, neo-genders, otherkin, etc., concepts that don't seem to be plausibly based in some currently known crippling biological glitch, and yet those identities are apparently just as legitimate. Where does it stop? Should society be entirely reorganized every time a new fad gains traction? Should everybody questioning that be ostracized?

Then there's the "passing" issue. I accept the argument that nowadays in most social situations we have no strong reasons to care about chromosomes/etc, people can successfully play many roles traditionally associated with the opposite sex. But sexual dimorphism is the entire reason for having different pronouns in the first place, and yet apparently you don't even have to try (at all, let alone very hard) to "pass" as your chosen gender for your claim to be legitimate. What is the point? Here the unresolved tension between gender-critical and gender-affirming feminism is the most glaring.

↑ comment by Martin Randall (martin-randall) · 2024-12-10T02:57:19.963Z

Also, I will refer to them using the name they actually used at that time. (If I talk about ancient Rome, I don't call it the Italian Republic either.)

A closer comparison than Ancient Rome is that all types of people change their names on occasions, e.g. on marriage, so we have lots of precedent for referring to people whose names have changed. This includes cases where they strongly dislike their former names. Those traditions balance niceness, civilization, rationality, and free speech.

Disclaimer: not a correction, just a perspective.

↑ comment by Q Home · 2024-11-25T05:59:13.577Z

> There are people who feel strongly that they are Napoleon. If you want to convince me, you need to make a stronger case than that.

It's confusing to me that you resort to the "I identify as an attack helicopter" argument after treating biological sex as private information and respecting pronouns out of politeness. I thought you already realized that "choosing your gender identity" and "being deluded that you're another person" are different categories.

> If someone presented as male for 50 years, then changed to female, it makes sense to use "he" to refer to their first 50 years, especially if this is the pronoun everyone used at that time. Also, I will refer to them using the name they actually used at that time. (If I talk about the Ancient Rome, I don't call it Italian Republic either.) Anything else feels like magical thinking to me.

The alternative (using the new pronouns / name) makes perfect sense too, for trivial reasons such as respecting a person's wishes. You went too far in calling it magical thinking. A piece of land is different from a person in two important ways: (1) it doesn't feel anything no matter what you call it, and (2) there are weaker reasons to treat it as a single entity across time.

↑ comment by Viliam · 2024-11-25T15:15:34.795Z

Ah, I disagree, and I don't really wish to discuss the details, so, briefly:

- I assume that for trans people being trans is something more than mere "choice" (I don't wish to guess what exactly, but I suspect something to do with hormones; this is an empirical question for smart people to figure out). If this turns out not to be true, I will probably be annoyed.

- If you introduce yourself as "Jane" today, I will refer to you as "Jane". But if 50 years ago you introduced yourself as "John", that is a fact about the past. I am not saying that "you were John" as some kind of metaphysical statement, but that "everyone, including you, referred to you as John" 50 years ago, which is a statement of fact.

↑ comment by Q Home · 2024-11-26T06:06:33.338Z

Even if we assume that there should be a crisp physical cause of "transness" (which is already a value-laden choice), we need to make a couple of value-laden choices before concluding if "being trans" is similar to "believing you're Napoleon" or not. Without more context it's not clear why you bring up Napoleon. I assume the idea is "if gender = hormones (gender essentialism), and trans people have the right hormones, then they're not deluded". But you can arrive at the same conclusion ("trans people are not deluded") by means other than gender essentialism.

> I assume that for trans people being trans is something more than mere "choice"

There doesn't need to be a crisp physical cause of "transness" for "transness" to be more than mere choice. There's a big spectrum between "immutable physical features" and "things which can be decided on a whim".

> If you introduce yourself as "Jane" today, I will refer to you as "Jane". But if 50 years ago you introduced yourself as "John", that is a fact about the past. I am not saying that "you were John" as some kind of metaphysical statement, but that "everyone, including you, referred to you as John" 50 years ago, which is a statement of fact.

This just explains your word usage, but doesn't make a case that disliking deadnaming is magical thinking.

I've decided to comment because bringing up Napoleon, hysteria and magical thinking all at once is egregiously bad faith. I think it's not a good epistemic norm to imply something like "the arguments of the outgroup are completely inconsistent trash" without elaborating.

↑ comment by Viliam · 2024-11-26T16:20:47.187Z

Napoleon is merely an argument for "just because you strongly believe it, even if it is a statement about you, does not necessarily make it true".

We will probably disagree on this, but the only reason I care about trans issues is that some people report significant suffering (gender dysphoria) from their current situation, and I am in favor of people not suffering, so I generally try not to be an asshole.

Unfortunately, for every person who suffers from something, there are probably a dozen people out there who cosplay their condition... because it makes them popular on Twitter, I guess, or just gives them another opportunity to annoy their neighbors. I have no empathy for those. Play your silly games, if you wish, but don't expect me to play along, and definitely don't threaten me into playing along. Also, the cosplayers often make the situation more difficult for those who genuinely have the condition, by speaking in their name, and often saying things that the people who actually have the condition would disagree with... and in the most ironic cases, the cosplayers get them cancelled. So I don't mind being an asshole to the cosplayers, because from my perspective, they started it.

The word "deadnaming" is itself hysterical. (Who died? No one.)

Gender essentialism? I don't make any metaphysical claim about essences. People simply are born with male or female bodies (yes, I know that some are intersex), and some people are strongly unhappy about their state. I find it plausible that there may be an underlying biological reason for that; and hormones seem like a likely candidate, because that's how the body communicates many things. I don't have a strong opinion on that, because I have never felt a desire to be one sex or the other, just like I have never felt a strong desire to have a certain color of eyes, or hair, or skin, whether it would be the one I have or one that I don't.

I expect that you will disagree with a lot of this, and that's okay; I am not trying to convince you, just explaining my position.

↑ comment by gjm · 2024-11-29T21:30:43.661Z

I don't think "deadname" is a ridiculous term just because no one died. The idea is that the name is dead: it's not being used any more. Latin is a "dead language" because (roughly speaking) no one speaks or writes in Latin. "James" is a "dead name" because (roughly speaking) no one calls that person "James" any more.

This all seems pretty obvious to me, and evidently it seems the opposite way to you, and both of us are very smart [citation needed], so probably at least one of us is being mindkilled a bit by feeling strongly about some aspect of the issue. I don't claim to know which of us it is :-).

↑ comment by Ben Pace (Benito) · 2024-11-29T21:40:20.037Z

For my 2 cents, the phrase 'deadname' sounded to me like it caught on because it was hyperbolic and imputed aggression, similar to how words like 'trauma' caught on (which used to primarily refer to physical damage, as in the phrase "blunt-force trauma") and notions spread that "words can be violence" (which seems to me to be bending the meaning of words like 'violence' too far and is trying to get people on board for a level of censorship that isn't appropriate). I similarly recall seeing various notions on social media that not using the requested pronouns for transgender people constituted killing them, due to the implied background levels of violence towards such people in society.

Overall this leaves me personally choosing not to use the term 'deadname' and I reliably taboo it when I wish to refer to someone using the person's former alternative-gendered name.

↑ comment by gjm · 2024-11-29T22:02:41.828Z

"Trauma" meaning psychological as opposed to physical damage goes back to the late 19th century.

I agree that there's a widespread tendency to exaggerate the unpleasantness/harm done by mere words. (But I suggest there's an opposite temptation too, to say that obviously no one can be substantially harmed by mere words, that physical harm is different in kind from mere psychological upset, etc., and that this is also wrong.)

I agree that much of the trans community seems to have embraced what looks to me like a severely hyperbolic view of how much threat trans people are under. (But, usual caveats: it's very common for the situation of a minority group to look and feel much worse from the inside than from the outside, and generally this isn't only a matter of people on the inside being oversensitive, it's also a matter of people on the outside not appreciating how much unpleasantness those on the inside face. So my guess is that that view is less hyperbolic than it looks to me.)

I agree that the term "deadname" is probably popular partly because "using my deadname" has more of an obviously-hostile-move sound than "using my old name" or similar. But if we avoid every term with any spin attached, we'll have to stop calling people liberals (as if no one else cared about freedom) or conservatives (as if their opponents were against preserving valuable things) or Catholics (the word means "universal") or pro-life or pro-choice or, or, or, or. For my part, I avoid some spinny terms but not others, on the basis of gut feeling about how much actual wrongness is baked into them and how easy it is to find other language, which (I don't know how coherently) cashes out as being broadly OK with "liberal" and "conservative", preferring to avoid "pro-life" and "pro-choice" or at least making some snarky remarks about the terms before using them, avoiding the broadest uses of the term "transphobia", etc. And for me "deadname" seems obviously basically OK even though, yes, the term was probably chosen partly for connotations one might take issue with. Your mileage may vary.

↑ comment by Ben Pace (Benito) · 2024-11-29T22:05:30.064Z

I agree that which terms people use vs taboo is a judgment call, I don't mean to imply that others should clearly see these things the same as me.

↑ comment by Q Home · 2024-11-27T05:58:36.938Z

> Napoleon is merely an argument for "just because you strongly believe it, even if it is a statement about you, does not necessarily make it true".

When people make arguments, they often don't list all of the premises. That's not unique to trans discourse. Informal reasoning is hard to make fully explicit. "Your argument doesn't explicitly exclude every counterexample" is a pretty cheap counter-argument. What people experience is important evidence and an important factor. It's rational to bring it up instead of stopping yourself with "wait, I'm not allowed to bring that up unless I make an analytically bulletproof argument". For example, if you trust that someone feels strongly about being a woman, there's no reason to suspect them of being a cosplayer who chases Twitter popularity.

> I expect that you will disagree with a lot of this, and that's okay; I am not trying to convince you, just explaining my position.

I think I still don't understand the main conflict which bothers you. I thought it was "I'm not sure if trans people are deluded in some way (like Napoleons, but milder) or not". But now it seems like "I think some people really suffer and others just cosplay, the cosplayers take something away from true sufferers". What is taken away?

↑ comment by Viliam · 2024-11-27T11:50:01.961Z

> I think I still don't understand the main conflict which bothers you.

Two major points.

1) It annoys me if someone insists that I accept their theory about what being trans really is.

Zack insists that Blanchard is right, and that I fail at rationality if I disagree with him. People on Twitter and Reddit insist that Blanchard is wrong, and that I fail at being a decent human if I disagree with them. My opinion is that I have no comparative advantage at figuring out who is right and who is wrong on this topic, or maybe everyone is wrong, anyway it is an empirical question and I don't have the data. I hope that people who have more data and better education will one day sort it out, but until that happens, my position firmly remains "I don't know (and most likely neither do you), stop bothering me".

Also, from a larger perspective, this is moving the goalposts. Long ago, tolerance was defined as basically not hurting other people, and letting them do whatever they want as long as it does not hurt others. Recently it also includes agreeing with the beliefs of their woke representatives. (Note that this is about the representatives, not the people being represented. Two trans people can have different opinions, but you are required to believe the woke one and oppose the non-woke one.) Otherwise, you are transphobic. I completely reject that. Furthermore, I claim that even trans people themselves are not necessarily experts on themselves. Science exists for a reason, otherwise we could just make opinion polls.

Shortly: disagreement is not hate. But it often gets conflated, especially in environments that overwhelmingly contain people of one political tribe.

2) Every cause gets abused. It is bad if it becomes a taboo to point this out.

A few months (or is it already years?) ago, there was an epidemic of teenagers on TikTok who appeared to have developed Tourette syndrome overnight. A few weeks or months later, apparently the epidemic was gone. I have no way to check those teenagers, but I think it is reasonable to assume that many of them were faking it. Why would anyone do that? Most likely, attention seeking. (There is also a thing called Munchausen syndrome.) This is what I referred to as "cosplayers".

Note that this is completely different from saying that Tourette syndrome does not exist.

If you adopt a rule that e.g. everyone must use everyone else's preferred pronouns all the time, no exception, and you get banned for hate speech otherwise, this becomes a perfect opportunity for... anyone who enjoys using it as leverage. You get an explosion of pronouns: it starts with "he" and "she", proceeds with "they", then you get "xe", "ve", "foo", "bar", "baz", and ultimately anyone is free to make up their own pronouns, and everyone else is required to play along, or else. (That's when you get the "attack helicopters" as an attempt to point out the absurdity of the system.)

Again, moving the goalposts. We started with trans people who report feeling gender dysphoria, so we use their preferred pronouns to alleviate their suffering. So far, okay. But if there is a person who actually feels dysphoria from not being addressed as "ve" (someone who would be triggered by calling them any of: "he", "she", or "they"), then I believe that this is between them and their psychiatrist, and I want to be left out of this game.

Another annoying thing is how often this is used to derail the debate (in places like Twitter and Reddit). Suppose that someone is called "John" and has a male-passing photo. So you try to say something about John, and you automatically use the pronoun "he". Big mistake! You haven't noticed it, but recently John identifies as agender. And whatever you wanted to talk about originally is unimportant now, and the thread becomes about what a horrible person you are. Okay, you have learned your lesson; but the point is that the next time someone else is going to make the same mistake. So it basically becomes impossible to discuss John, ever. And sometimes, it is important to be able to discuss John without getting the debate predictably derailed.

Shortly: misgendering should be considered bad manners, but not something you ban people for.

...and that's basically all.

↑ comment by Q Home · 2024-11-28T11:18:14.793Z

I'll describe my general thoughts, like you did.

I think about transness in a similar way to how I think about homo/bisexuality.

- If homo/bisexuality is outlawed, people are gonna suffer. Bad.

- If I could erase homo/bisexuality from existence without creating suffering, I wouldn't anyway. Would be a big violation of people's freedom to choose their identity and actions (even if in practice most people don't actually "choose" to be homo/bisexual).

- Different people have homo/bisexuality of different "strength" and form. One man might fall in love with another man, but dislike sex or even kissing. Maybe he isn't a real homosexual, if he doesn't need to prove it physically? Another man might identify as a bisexual, but be in a relationship with a woman... he doesn't get to prove his bisexuality (sexually or romantically). Maybe we shouldn't trust him unless he walks the talk? As a result of all such situations, we might have certain "inconsistencies": some people identifying as straight have done more "gay" things than people identifying as gay. My opinion on this? I think all of this is OK. Pushing for an "objective gay test" would be dystopian and suffering-inducing. I don't think it's an empirical matter (unless we choose it to be, which is a value-laden choice). Even if it was, we might be very far away from resolving it. So just respecting people's self-identification in the meantime is best, I believe. Moreover, a lot of this is very private information anyway. Less reason to try measuring it "objectively".

My thoughts about transness specifically:

1. We strive for gender equality (I hope), which makes the concept of gender less important for society as a whole.

2. The concept of gender is additionally damaged by all the things a person can decide to do in their social/sexual life. For example, take an "assigned male at birth" (AMAB) person. An AMAB person can appear and behave very femininely without taking hormones, or vice versa (take hormones, get a pair of boobs, but present masculine). Additionally, there are different degrees of medical transition and different types of sexual preferences.

3. A lot of things which make someone more or less similar to a man/woman (behavior with friends, behavior with romantic partners, behavior with sexual partners, thoughts) are private. Less reason to try measuring those "objectively".

4. I have a choice to respect people's self-identified genders or not. I decide to respect them. Not just because I care about people's feelings, but also because of points 1 & 2 & 3 and because of my general values (I show similar respect to homo/bisexuals). So I respect pronouns, but on top of that I also respect if someone identifies as a man/woman/nonbinary. I believe respect is optimal in terms of reducing suffering and adhering to human values.

When I compare your opinion to mine, most of my confusion is about two things: What exactly do you see as an empirical question? How does the answer (or its absence) affect our actions?

> Zack insists that Blanchard is right, and that I fail at rationality if I disagree with him. People on Twitter and Reddit insist that Blanchard is wrong, and that I fail at being a decent human if I disagree with them. My opinion is that I have no comparative advantage at figuring out who is right and who is wrong on this topic, or maybe everyone is wrong, anyway it is an empirical question and I don't have the data. I hope that people who have more data and better education will one day sort it out, but until that happens, my position firmly remains "I don't know (and most likely neither do you), stop bothering me".

I think we need to be careful to not make a false equivalence here:

1. Trans people want us to respect their pronouns and genders.

2. I'm not very familiar with Blanchard, but so far it seems to me that Blanchard's work is (a) just a typology for predicting certain correlations, and (b) sometimes used to argue that trans people are mistaken about their identities/motivations.

2A is kinda tangential to 1. So is this really a case of competing theories? I think uncertainty should make one skeptical of the implications of Blanchard's work rather than skeptical about respecting trans people.

> (Note that this is about the representatives, not the people being represented. Two trans people can have different opinions, but you are required to believe the woke one and oppose the non-woke one.) Otherwise, you are transphobic. I completely reject that.

Two homo/bisexuals can have different opinions on what "true homo/bisexuality" is, too. Some opinions can be pretty negative. Yes, that's inconvenient, but that's just the expected course of events.

> Shortly: disagreement is not hate. But it often gets conflated, especially in environments that overwhelmingly contain people of one political tribe.

I feel it's just the nature of some political questions. Not in every question, and not in every space, can you treat disagreement as something benign.

> But if there is a person who actually feels dysphoria from not being addressed as "ve" (someone who would be triggered by calling them any of: "he", "she", or "they"), then I believe that this is between them and their psychiatrist, and I want to be left out of this game.

Agree. Also agree that lynching for accidental misgendering is bad.

> (That's when you get the "attack helicopters" as an attempt to point out the absurdity of the system.)

I'm pretty sure the helicopter argument began as an argument against trans people, not as an argument against weird-ass novel pronouns.

↑ comment by Viliam · 2024-11-28T21:41:59.516Z

I agree with most of that, but it seems to me that respecting homosexuality is mostly a passive action; if you ignore what other people do, you are already maybe 90% there. Homosexuals don't change their names or pronouns after coming out. You don't have to pretend that ten years ago they were something other than what they appeared to be at that time.

With transsexuality, you get the taboo of deadnaming, and occasionally the weird pronouns.

Also, the reaction seems different when you try to opt out of the game. Like, if someone is uncomfortable with homosexuality, they can say "could we please just... not discuss our sexual relations here, and focus on the job (or some other reason why we are here)?" and that's usually accepted. If someone similarly says "could we please just... call everyone 'they' as a compromise solution, or simply refer to people using their names", that has already gotten some people cancelled.

Shortly, with homosexuals I never felt like my free speech was under attack.

It is possible that most of the weirdness and pushing boundaries does not actually come from the transsexuals themselves, but rather from woke people who try to be their "allies". Either way, in effect, whenever a discussion about trans topics starts, I feel like "oh my, the woke hordes are coming, people are going to get cancelled". (And I am not really concerned about myself here, because I am not American, so my job is not on the line; and if some online community decides to ban me, well then fuck them. But I don't want to be in a community where people need to watch their tongues, and get filtered by political conformity.)

↑ comment by Q Home · 2024-11-29T08:38:36.413Z

I think there should be more spaces where controversial ideas can be debated. I'm not against spaces without pronoun rules; I just don't think every place should be like this. Also, if we create a space for political debate, we need to really make sure that the norms don't punish everyone who opposes centrism & the right. (Over-sensitive norms like "if you said that some opinion is transphobic you're uncivil/shaming/manipulative and should get banned" might do this.) Otherwise it's not free speech either. It will just produce another Grey or Red Tribe space instead of a Red/Blue/Grey debate platform.

I do think progressives underestimate free speech damage. To me it's the biggest issue with the Left. Though I don't think they're entirely wrong about free speech.

For example, imagine I have trans employees. Another employee (X) refuses to use pronouns, in principle (using pronouns is not the same as accepting progressive gender theories). Why? Maybe X thinks my trans employees live such a great lie that using pronouns is already an unacceptable concession. Or maybe X thinks that even trying to switch "he" & "she" is too much work, and I'm not justified in asking to do that work because of absolute free speech. Those opinions seem unnecessarily strong and they're at odds with the well-being of my employees, my work environment. So what now? Also, if pronouns are an unacceptable concession, why isn't calling a trans woman by her female name an unacceptable concession?

Imagine I don't believe something about a minority, so I start avoiding words which might suggest otherwise. If I don't believe that gay love can be as true as straight love, I avoid the word "love" (in reference to gay people or to anybody) at work. If I don't believe that women are as smart as men, I avoid the words "master" / "genius" (in reference to women or anybody) at work. It can get pretty silly, and it will predictably cost me certain jobs.

↑ comment by Viliam · 2024-11-29T14:02:53.908Z

Well, the primary goal of this place is to advance rationality and AI safety. Not the victory of any specific political tribe. And neither conformity nor contrarianism for its own sake.

Employees get paid, which kinda automatically reduces their free speech, because saying the wrong words can make them stop getting paid.

What is an (un)acceptable concession? For me, it is a question of effort and what value I receive in return. I value niceness, so by default people get their wishes granted, unless I forget. Some requests I consider arbitrary and annoying, so they don't get them. Yeah, those are subjective criteria. But I am not here to get paid; I am here to enjoy the talk.

(What annoys me: asking to use pronouns other than he/she/they. I do not talk about people's past for no good reason, and definitely not just to annoy someone else. But if I have a good reason to point out that someone did something in the past, and the only way to do that is to reveal their previous name, then I don't care about the taboo.)

Employment is really a different situation. You get laws, and recommendations of your legal department; there is not much anyone can do about that. And the rest is about the balance of power, where the individual employee is often in a much worse bargaining position.

↑ comment by Q Home · 2024-11-30T04:56:12.733Z

Agree that neopronouns are dumb. Wikipedia says they're used by 4% of LGBTQ people and criticized both within and outside the community.

But for people struggling with normal pronouns (he/she/they), I have the following thoughts:

- Contorting language to avoid words associated with beliefs... is not easier than using the words. Don't project beliefs onto words too hard.

- Contorting language to avoid words associated with beliefs... is still a violation of free speech (if we have such a strong notion of free speech). So what is the motivation to propose that? It's a bit like a dog in the manger. "I'd rather cripple myself than help you, let's suffer together".

- Don't maximize free speech (in a negligible way) while ignoring every other human value.

- In an imperfect society, truly passive tolerance (tolerance which doesn't require any words/actions) is impossible. For example, in a perfect society, if my school has bigoted teachers, it immediately gets outcompeted by a non-bigoted school. In an imperfect society it might not happen. So we get enforceable norms.

> Employees get paid, which kinda automatically reduces their free speech, because saying the wrong words can make them stop getting paid. (...) Employment is really a different situation. You get laws, and recommendations of your legal department; there is not much anyone can do about that.

I'm not familiar with your model of free speech (i.e. how you imagine free speech working if laws and power balances were optimal). People who value free speech usually believe that free speech should have power above money and property, to a reasonable degree. What's "reasonable" is the crux.

I think in situations where people work together on something unrelated to their beliefs, prohibiting the enforcement of a code of conduct is unreasonable, because respect is crucial for the work environment and for protecting marginalized groups. I assume people who propose to "call everyone they" or "call everyone by proper name" realize some of that.

If I let people use my house as a school, but find out that a teacher openly doesn't respect minority students (by refusing to do the smallest thing for them), I'm justified in not letting the teacher into my house.

> I do not talk about people's past for no good reason, and definitely not just to annoy someone else. But if I have a good reason to point out that someone did something in the past, and the only way to do that is to reveal their previous name, then I don't care about the taboo.

I just think "disliking deadnaming under most circumstances = magical thinking, like calling Italy Rome" was a very strong, barely argued/explained opinion. In tandem with mentioning delusion (Napoleon) and hysteria. If you want to write something insulting, maybe bother to clarify your opinions a little bit more? Like you did in our conversation.

↑ comment by Sting · 2024-11-20T05:18:01.827Z

Maybe, but Martin Randall and Matt Gilliland have both said that the trans explanation matches their personal experience, and Eliezer Yudkowsky agrees with the explanation as well. I have no insider knowledge and am just going off what community members say.

- Do you have any particular reasons for thinking atheism is a bigger filter than pronouns and other trans issues?

- It's not clear what your position is. Do you think the contribution of pronouns and other trans issues is negligible? Slightly smaller than atheism? An order of magnitude smaller?

I suspect atheism is a non-negligible filter, but one that is both smaller than trans issues and less likely to filter out intelligent truth-seeking conservatives. Atheism is a factual question with a great deal of evidence in favor, and is therefore less politically charged. Ben Shapiro and Jordan Peterson have both said that the intellectual case for atheism is strong, and both remain very popular on the right.

↑ comment by dirk (abandon) · 2024-11-20T05:35:09.133Z

In the before-time of the internet, New Atheism was a much bigger deal than transgender issues.

↑ comment by xpym · 2024-11-20T15:15:51.563Z

I'd say that atheism had already set the "conservatives not welcome" baseline way back when, and this resulted in the community norms evolving accordingly. Granted, these days the trans stuff is more salient, but the reason it flourished here even more than in other tech-adjacent spaces has much to do with that early baseline.

> Ben Shapiro and Jordan Peterson have both said that the intellectual case for atheism is strong, and both remain very popular on the right.

Sure, but somebody admitting that certainly isn't the modal conservative.

↑ comment by Sting · 2024-11-20T17:09:05.994Z

I wouldn't call the tone back then "conservatives not welcome". Conservatism is correlated with religiosity, but it's not the same thing. And I wouldn't even call the tone "religious people are unwelcome" -- people were perfectly civil with religious community members.

The community back then was willing to call irrational beliefs irrational, but it didn't go beyond that. Filtering out people who are militantly opposed to rational conclusions seems fine.

↑ comment by Viliam · 2024-11-18T16:47:35.680Z

Going by today's standards, we should have banned Gwern in 2012.

And I think that would have been a mistake.

I wonder how many other mistakes we made. The problem is, we won't get good feedback on this.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-11-18T17:24:23.352Z · LW(p) · GW(p)

Going by today's standards, we should have banned Gwern in 2012.

(I don't understand what this is referring to)

Replies from: Viliam↑ comment by Viliam · 2024-11-19T12:26:24.339Z · LW(p) · GW(p)

I apologize. I spent some time digging for ancient evidence... and then decided against [? · GW] publishing it.

Short version is that someone said something that was kinda inappropriate back then, and would probably get an instant ban these days, with most people applauding.

↑ comment by Sting · 2024-11-24T23:31:37.476Z · LW(p) · GW(p)

After thinking about this some more, I suspect the major problem here is value drift of the in-person Rationalist communities. The LessWrong website tolerates dissenting perspectives and seems much closer to the original rationalist vision. It is the in-person Berkeley community (and possibly others) that have left the original rationalist vision and been assimilated into the Urban Liberal Monoculture.

I am guessing EAs and alignment researchers are mostly drawn from, or at least heavily interact with, the in-person communities. If these communities are hostile to Conservatives, then you will tend to have a lack of Conservative EAs and alignment researchers, which may harm your ability to productively interact with Conservative lawmakers.

The value drift of the Berkeley community was described by Sarah Constantin in 2017:

It seems to me that the increasingly ill-named “Rationalist Community” in Berkeley has, in practice, a core value of “unconditional tolerance of weirdos.” It is a haven for outcasts and a paradise for bohemians. It is a social community based on warm connections of mutual support and fun between people who don’t fit in with the broader society.

...

Some other people in the community have more purely intellectual projects, that are closer to Eliezer Yudkowsky’s original goals. To research artificial intelligence; to develop tools for training Tetlock-style good judgment; to practice philosophical discourse. But I still think these are ultimately outcome-focused, external projects.

...

None of these projects need to be community-focused! In fact, I think it would be better if they freed themselves from the Berkeley community and from the particular quirks and prejudices of this group of people. It doesn’t benefit your ability to do AI research that you primarily draw your talent from a particular social group.

Or as Zvi put it:

The rationalists took on Berkeley, and Berkeley won.

...

This is unbelievably, world-doomingly bad. It means we’ve lost the mission.

...

A community needs to have standards. A rationalist community needs to have rationalist standards. Otherwise we are something else, and our well-kept gardens die by pacifism and hopefully great parties.

...

If Sarah is to be believed (others who live in the area can speak to whether her observations are correct better than I can) then the community’s basic rationalist standards have degraded, and its priorities and cultural heart are starting to lie elsewhere. The community being built is rapidly ceasing to be all that rationalist, and is no longer conducive (and may be subtly but actively hostile) to the missions of saving and improving the world.

Its members might save or improve the world anyway, and I would still have high hopes for that including for MIRI and CFAR, but that would be in spite of the (local physical) community rather than because of it, if the community is discouraging them from doing so and they need to do all their work elsewhere with other people. Those who keep the mission would then depart, leaving those that remain all the more adrift.

I welcome analysis from anyone who better understands what's going on. I'm just speculating based on things insiders have written.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-11-25T00:02:39.018Z · LW(p) · GW(p)

My rough take: the rationalist scene in Berkeley used to be very bad at maintaining boundaries. Basically the boundaries were "who gets invited to parties by friends". The one Berkeley community space ("REACH") was basically open-access. In recent years the Lightcone team (of which I am a part) has hosted spaces and events and put in the work to maintain actual boundaries (including getting references on people and checking out suspicion of bad behavior, but mostly just making it normal for people to have events with standards for entry) and this has substantially improved the ability for rationalist spaces to have culture that is distinct from the local Berkeley culture.

↑ comment by Martin Randall (martin-randall) · 2024-11-18T22:46:15.551Z · LW(p) · GW(p)

Gilliland's idea is that it is the proportion of trans people that dissuades some right-wing people from joining. That seems plausible to me, it matches the "Big Sort" thesis and my personal experience. I agree that his phrasing is unwelcoming.

I tried to find an official pronoun policy for LessWrong, LessOnline, EA Global, etc, and couldn't. If you're thinking of something specific could you say what? As well as the linked X thread I have read the X thread linked from Challenges to Yudkowsky's pronoun reform proposal [LW · GW]. But these are the opinions of one person, they don't amount to politically-coded compelled speech. I'm not part of the rationalist community and this is a genuine question. Maybe such policies exist but are not advertised.

Replies from: Sting, jkaufman↑ comment by Sting · 2024-11-20T04:25:51.923Z · LW(p) · GW(p)

Eliezer said you are welcome in the community if you "politely accede to pronoun requests". Which sounds to me like, "politically-coded speech is required to be welcome in the community". (Specifically, people are socially required to use "woman" and "she" to refer to MtF transgenders). And Eliezer is not just some guy, he is the closest thing the rationalist community has to a leader.

There is a broad range of possible customs the community could have adopted. Here are a few, ordered from more right-coded to more left-coded:

1. People should use words to refer to the category-boundaries that best carve [LW · GW] reality [LW · GW] at [LW · GW] the [LW · GW] joints [LW · GW]. MtF transgenders unambiguously [LW · GW] fall into the "male" cluster, and therefore the prescriptive protocol is to refer to them as "he". Anyone who breaks this protocol (except under duress) is not welcome as a member of the community.

2. Same as above, but it is only the consensus position, and those who follow other protocols are still welcome to be part of the community.

3. Anyone is free to decide for themselves whether to use people's preferred pronouns. You can ask people to use your preferred pronouns, as long as you are polite about it. And people are free to refuse, as long as they are also polite.

4. As a matter of politeness, you are not allowed to refer to people by pronouns they asked you not to use. However, you are not required to use people's preferred pronouns. (So you cannot refer to a MtF transgender as "he", but you don't have to use "she". You could instead refer to them by the first letter of their name, or some other alternative.)

5. You should refer to transgenders by their preferred pronouns (no alternatives). This is the consensus position, but people who politely decline to do so are still welcome to join.

6. Same as above, except anyone who declines is not welcome as a member of the community.

7. Same as above, and economically literate people who are in favor of market solutions are also unwelcome.

I don't know which of these solutions is best, but 1, 6, and 7 seem bad. Eliezer seems to support 6.

Edit: Reworded to taboo the phrase "Anyone who disagrees" as requested by RobertM.

Replies from: Benito, martin-randall↑ comment by Ben Pace (Benito) · 2024-11-24T23:32:27.942Z · LW(p) · GW(p)

Is there literally any scene in the world that has openly transgender people in it and does 3, 4, or 5? Like, a space where a transgender person is friendly with the people there and different people in a conversation are reliably using different pronouns to refer to the same person? My sense is that it's actively confusing in a conversation for the participants to not be consistent in the choice of someone's pronouns.

I guess I've often seen people default to 'they' a lot for people who have preferred pronouns that are he/she, that seems to go by just fine even if some people use he / she for the person, but I can't recall ever seeing a conversation where one person uses 'he' and another person uses 'she' when both are referring to the same person.

Replies from: Sting↑ comment by Sting · 2024-11-25T00:05:50.234Z · LW(p) · GW(p)

Is there literally any scene that has openly transgender people in it and does 3, 4, or 5?

If you can use "they" without problems, that sounds a lot like 4.

As for 3 and 5, not to my knowledge. Compromises like this would be more likely in settings with a mix of Liberals and Conservatives, but such places are becoming less common. Perhaps some family reunions would have similar rules or customs?

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-11-25T00:09:48.877Z · LW(p) · GW(p)

I could believe it, but my (weak) guess is that in most settings people care about which pronoun they use far less than they care about people not being confused about who is being referred to.

↑ comment by Martin Randall (martin-randall) · 2024-11-21T04:38:10.662Z · LW(p) · GW(p)

Thanks for clarifying. By "policy" and "standards" and "compelled speech" I thought you meant something more than community norms and customs. This is traditionally an important distinction to libertarians and free speech advocates. I think the distinction carves reality at the joints, and I hope you agree. I agree that community norms and customs can be unwelcoming.

Replies from: Sting↑ comment by Sting · 2024-11-21T15:08:31.328Z · LW(p) · GW(p)

Yes, it's not a law, so it's not a libertarian issue. As I said earlier:

Any community is free to have whatever standards they want for membership, including politically-coded compelled speech. But it is not exactly shocking if your membership is then composed 70% of one side and <2% of the other.

By "compelled speech" being a standard for community membership, I just meant "You are required to say certain things or you will be excluded from the community." For instance, as jefftk pointed out,

The EA Forum has an explicit policy [? · GW] that you need to use the pronouns the people you're talking about prefer.

Replies from: martin-randall↑ comment by Martin Randall (martin-randall) · 2024-11-24T03:57:59.818Z · LW(p) · GW(p)

I saw the EA Forum's policy. If someone repeatedly and deliberately misgenders on the EA Forum they will be banned from that forum. But you don't need to post on the EA Forum at all in order to be part of the rationalist community. On the provided evidence, it is false that:

You are required to say certain things or you will be excluded from the community.

I want people of all political beliefs, including US conservative-coded beliefs, to feel welcome in the rationalist community. It's important to that goal to distinguish between policies and norms, because changing policies requires a different process to changing norms, and because policies and norms are unwelcoming in different ways and to different extents.

It's because of that goal that I'm encouraging you to change these incorrect/misleading/unclear statements. If newcomers incorrectly believe that they are required to say certain things or they will be excluded from the community, then they will feel less welcome, for nothing. Let's avoid that.

Replies from: cata, shankar-sivarajan↑ comment by cata · 2024-11-24T21:51:03.390Z · LW(p) · GW(p)

I don't have a bunch of citations but I spend time in multiple rationalist social spaces and it seems to me that I would in fact be excluded from many of them if I stuck to sex-based pronouns, because as stated above there are many trans people in the community, of whom many hold to the consensus progressive norms on this. The EA Forum policy is not unrepresentative of the typical sentiment.

So I don't agree that the statements are misleading.

(I note that my typical habit is to use singular they for visibly NB/trans people, and I am not excluded for that. So it's not precisely a kind of compelled speech.)

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2024-11-24T21:40:58.325Z · LW(p) · GW(p)

incorrect/misleading/unclear statements

I disagree that his statements are misleading: the impression someone who believed them true would have is far more accurate than someone who believed them false. Is that not more relevant, and a better measure of honesty, than whether or not they're "incorrect"?

↑ comment by jefftk (jkaufman) · 2024-11-19T16:37:26.549Z · LW(p) · GW(p)

I tried to find an official pronoun policy for LessWrong, LessOnline, EA Global, etc, and couldn't.

The EA Forum has an explicit policy [? · GW] that you need to use the pronouns the people you're talking about prefer. EAG(x) doesn't explicitly include this in the code of conduct [? · GW], but the code is short, and I expect it is interpreted by people who would consider non-accidental misgendering to be a special case of "offensive, disruptive, or discriminatory actions or communication." I vaguely remember seeing someone get a warning on LW for misgendering, but I'm not finding anything now.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-11-20T07:34:46.199Z · LW(p) · GW(p)

I don't remember ever adjudicating this, but my current intuition, having not thought about it hard, is that I don't see a super clear line here (like, in a moderation dispute I can imagine judging either way depending on the details).

comment by Seth Herd · 2024-11-16T01:24:01.589Z · LW(p) · GW(p)

This might be the most important alignment idea in a while.

Making an honest argument based on ideological agreements is a solidly good idea.